Python Playground: Geeky Projects for the Curious Programmer

- 1. Python Playground: Geeky Projects for the Curious Programmer. Mahesh Venkitachalam. Shelve in: Programming Languages/Python. $29.95 ($34.95 CDN)

  Python is a powerful programming language that’s easy to learn and fun to play with. But once you’ve gotten a handle on the basics, what do you do next?

  Python Playground is a collection of imaginative programming projects that will inspire you to use Python to make art and music, build simulations of real-world phenomena, and interact with hardware like the Arduino and Raspberry Pi. You’ll learn to use common Python tools and libraries like numpy, matplotlib, and pygame to do things like:

  - Generate Spirograph-like patterns using parametric equations and the turtle module
  - Create music on your computer by simulating frequency overtones
  - Translate graphical images into ASCII art
  - Write an autostereogram program that produces 3D images hidden beneath random patterns
  - Make realistic animations with OpenGL shaders by exploring particle systems, transparency, and billboarding techniques
  - Construct 3D visualizations using data from CT and MRI scans
  - Build a laser show that responds to music by hooking up your computer to an Arduino

  Programming shouldn’t be a chore. Have some solid, geeky fun with Python Playground.

  About the Author: Mahesh Venkitachalam is a software engineer with two decades of programming experience. He has nurtured a passion for technology since the eighth grade, which he channels into his popular electronics and programming blog, electronut.in.

  Put the fun back in programming. The projects in this book are compatible with Python 2 and 3.

  www.nostarch.com. The Finest in Geek Entertainment™. “I Lie Flat.” This book uses a durable binding that won’t snap shut.
www.it-ebooks.info

- 4. Python Playground: Geeky Projects for the Curious Programmer, by Mahesh Venkitachalam. San Francisco

- 5. Python Playground. Copyright © 2016 by Mahesh Venkitachalam.

  All rights reserved. No part of this work may be reproduced or transmitted in any form or by any means, electronic or mechanical, including photocopying, recording, or by any information storage or retrieval system, without the prior written permission of the copyright owner and the publisher.

  First printing 19 18 17 16 15 1 2 3 4 5 6 7 8 9

  ISBN-10: 1-59327-604-4. ISBN-13: 978-1-59327-604-1

  - Publisher: William Pollock
  - Production Editor: Serena Yang
  - Cover Illustration: Josh Ellingson
  - Interior Design: Octopod Studios
  - Developmental Editor: William Pollock
  - Technical Reviewers: Nicholas Kramer and Raviprakash Jayaraman
  - Copyeditor: Kim Wimpsett
  - Compositor: Kathleen Miller
  - Proofreader: Paula L. Fleming

  Figure 8-4 was created by Fred Hsu (March 2005) and has been reproduced under the Creative Commons Attribution-Share Alike 3.0 Unported license.

  For information on distribution, translations, or bulk sales, please contact No Starch Press, Inc. directly: No Starch Press, Inc., 245 8th Street, San Francisco, CA 94103. Phone: 415.863.9900; info@nostarch.com; www.nostarch.com

  Library of Congress Cataloging-in-Publication Data: Venkitachalam, Mahesh. Python playground : geeky projects for the curious programmer / by Mahesh Venkitachalam. pages cm. Includes index. ISBN 978-1-59327-604-1 -- ISBN 1-59327-604-4. 1. Python (Computer program language) 2. Electronic apparatus and appliances--Automatic control. 3. Arduino (Programmable controller)--Programming. 4. Raspberry Pi (Computer)--Programming. I. Title. QA76.73.P98.V46 2015. 005.13'3--dc23. 2014046103

  No Starch Press and the No Starch Press logo are registered trademarks of No Starch Press, Inc. Other product and company names mentioned herein may be the trademarks of their respective owners. Rather than use a trademark symbol with every occurrence of a trademarked name, we are using the names only in an editorial fashion and to the benefit of the trademark owner, with no intention of infringement of the trademark.

  The information in this book is distributed on an “As Is” basis, without warranty. While every precaution has been taken in the preparation of this work, neither the author nor No Starch Press, Inc. shall have any liability to any person or entity with respect to any loss or damage caused or alleged to be caused directly or indirectly by the information contained in it.

- 6. For my parents, A.V. Venkitachalam and N. Saraswathy, for giving me the greatest gift of all: an education & For Hema H = M 2 A

- 8. Brief Contents
  - Acknowledgments
  - Introduction
  - Part I: Warming Up
  - Chapter 1: Parsing iTunes Playlists
  - Chapter 2: Spirographs
  - Part II: Simulating Life
  - Chapter 3: Conway’s Game of Life
  - Chapter 4: Generating Musical Overtones with the Karplus-Strong Algorithm
  - Chapter 5: Boids: Simulating a Flock
  - Part III: Fun with Images
  - Chapter 6: ASCII Art
  - Chapter 7: Photomosaics
  - Chapter 8: Autostereograms
  - Part IV: Enter 3D
  - Chapter 9: Understanding OpenGL
  - Chapter 10: Particle Systems
  - Chapter 11: Volume Rendering
  - Part V: Hardware Hacking
  - Chapter 12: Introduction to the Arduino
  - Chapter 13: Laser Audio Display
  - Chapter 14: A Raspberry Pi–Based Weather Monitor
  - Appendix A: Software Installation
  - Appendix B: Basic Practical Electronics
  - Appendix C: Raspberry Pi Tips and Tricks
  - Index

- 10. Contents in Detail
  - Acknowledgments
  - Introduction
    - Who Is This Book For?
    - What’s in This Book?
      - Part I: Warming Up
      - Part II: Simulating Life
      - Part III: Fun with Images
      - Part IV: Enter 3D
      - Part V: Hardware Hacking
    - Why Python?
      - Python Versions
      - The Code in This Book
  - Part I: Warming Up
  - Chapter 1: Parsing iTunes Playlists
    - Anatomy of the iTunes Playlist File
    - Requirements
    - The Code
      - Finding Duplicates
      - Extracting Duplicates
      - Finding Tracks Common Across Multiple Playlists
      - Collecting Statistics
      - Plotting Your Data
      - Command Line Options
    - The Complete Code
    - Running the Program
    - Summary
    - Experiments!
  - Chapter 2: Spirographs
    - Parametric Equations
      - Spirograph Equations
      - Turtle Graphics
    - Requirements
    - The Code
      - The Spiro Constructor
      - The Setup Functions
      - The restart() Method

- 11. Contents in Detail (continued)
  - Chapter 2: Spirographs (continued)
    - The Code (continued)
      - The draw() Method
      - Creating the Animation
      - The SpiroAnimator Class
      - The genRandomParams() Method
      - Restarting the Program
      - The update() Method
      - Showing or Hiding the Cursor
      - Saving the Curves
      - Parsing Command Line Arguments and Initialization
    - The Complete Code
    - Running the Spirograph Animation
    - Summary
    - Experiments!
  - Part II: Simulating Life
  - Chapter 3: Conway’s Game of Life
    - How It Works
    - Requirements
    - The Code
      - Representing the Grid
      - Initial Conditions
      - Boundary Conditions
      - Implementing the Rules
      - Sending Command Line Arguments to the Program
      - Initializing the Simulation
    - The Complete Code
    - Running the Game of Life Simulation
    - Summary
    - Experiments!
  - Chapter 4: Generating Musical Overtones with the Karplus-Strong Algorithm
    - How It Works
      - The Simulation
      - Creating WAV Files
      - The Minor Pentatonic Scale
    - Requirements
    - The Code
      - Implementing the Ring Buffer with deque
      - Implementing the Karplus-Strong Algorithm
      - Writing a WAV File
      - Playing WAV Files with pygame
      - The main() Method

- 12. Contents in Detail (continued)
  - Chapter 4: Generating Musical Overtones with the Karplus-Strong Algorithm (continued)
    - The Complete Code
    - Running the Plucked String Simulation
    - Summary
    - Experiments!
  - Chapter 5: Boids: Simulating a Flock
    - How It Works
    - Requirements
    - The Code
      - Computing the Position and Velocities of the Boids
      - Setting Boundary Conditions
      - Drawing a Boid
      - Applying the Rules of the Boids
      - Adding a Boid
      - Scattering the Boids
      - Command Line Arguments
      - The Boids Class
    - The Complete Code
    - Running the Boids Simulation
    - Summary
    - Experiments!
  - Part III: Fun with Images
  - Chapter 6: ASCII Art
    - How It Works
    - Requirements
    - The Code
      - Defining the Grayscale Levels and Grid
      - Computing the Average Brightness
      - Generating the ASCII Content from the Image
      - Command Line Options
      - Writing the ASCII Art Strings to a Text File
    - The Complete Code
    - Running the ASCII Art Generator
    - Summary
    - Experiments!
  - Chapter 7: Photomosaics
    - How It Works
      - Splitting the Target Image
      - Averaging Color Values
      - Matching Images

- 13. Contents in Detail (continued)
  - Chapter 7: Photomosaics (continued)
    - Requirements
    - The Code
      - Reading in the Tile Images
      - Calculating the Average Color Value of the Input Images
      - Splitting the Target Image into a Grid
      - Finding the Best Match for a Tile
      - Creating an Image Grid
      - Creating the Photomosaic
      - Adding the Command Line Options
      - Controlling the Size of the Photomosaic
    - The Complete Code
    - Running the Photomosaic Generator
    - Summary
    - Experiments!
  - Chapter 8: Autostereograms
    - How It Works
      - Perceiving Depth in an Autostereogram
      - Depth Maps
    - Requirements
    - The Code
      - Repeating a Given Tile
      - Creating a Tile from Random Circles
      - Creating Autostereograms
      - Command Line Options
    - The Complete Code
    - Running the Autostereogram Generator
    - Summary
    - Experiments!
  - Part IV: Enter 3D
  - Chapter 9: Understanding OpenGL
    - Old-School OpenGL
    - Modern OpenGL: The 3D Graphics Pipeline
      - Geometric Primitives
      - 3D Transformations
      - Shaders
      - Vertex Buffers
      - Texture Mapping
      - Displaying OpenGL
    - Requirements

- 14. Contents in Detail (continued)
  - Chapter 9: Understanding OpenGL (continued)
    - The Code
      - Creating an OpenGL Window
      - Setting Callbacks
      - The Scene Class
    - The Complete Code
    - Running the OpenGL Application
    - Summary
    - Experiments!
  - Chapter 10: Particle Systems
    - How It Works
      - Modeling the Motion of a Particle
      - Setting a Maximum Spread
      - Rendering the Particles
      - Using OpenGL Blending to Create More Realistic Sparks
      - Using Billboarding
      - Animating the Sparks
    - Requirements
    - The Code for the Particle System
      - Defining the Particle Geometry
      - Defining the Time-Lag Array for the Particles
      - Setting the Initial Particle Velocities
      - Creating the Vertex Shader
      - Creating the Fragment Shader
      - Rendering
      - The Camera Class
    - The Complete Particle System Code
    - The Box Code
    - The Code for the Main Program
      - Updating the Particles at Each Step
      - The Keyboard Handler
      - Managing the Main Program Loop
    - The Complete Main Program Code
    - Running the Program
    - Summary
    - Experiments!
  - Chapter 11: Volume Rendering
    - How It Works
      - Data Format
      - Generating Rays
      - Displaying the OpenGL Window
    - Requirements
    - An Overview of the Project Code
    - Generating a 3D Texture

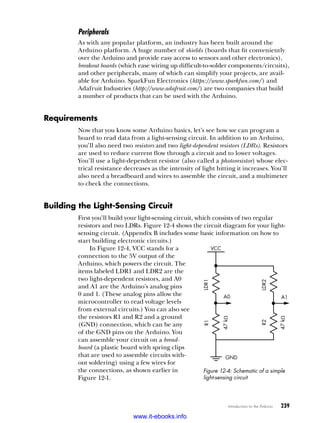

- 15. Contents in Detail (continued)
  - Chapter 11: Volume Rendering (continued)
    - The Complete 3D Texture Code
    - Generating Rays
      - Defining the Color Cube Geometry
      - Creating the Frame Buffer Object
      - Rendering the Back-Faces of the Cube
      - Rendering the Front-Faces of the Cube
      - Rendering the Whole Cube
      - The Resize Handler
    - The Complete Ray Generation Code
    - Volume Ray Casting
      - The Vertex Shader
      - The Fragment Shader
    - The Complete Volume Ray Casting Code
    - 2D Slicing
      - The Vertex Shader
      - The Fragment Shader
      - A User Interface for 2D Slicing
    - The Complete 2D Slicing Code
    - Putting the Code Together
    - The Complete Main File Code
    - Running the Program
    - Summary
    - Experiments!
  - Part V: Hardware Hacking
  - Chapter 12: Introduction to the Arduino
    - The Arduino
    - The Arduino Ecosystem
      - Language
      - IDE
      - Community
      - Peripherals
    - Requirements
    - Building the Light-Sensing Circuit
      - How the Circuit Works
      - The Arduino Sketch
      - Creating the Real-Time Graph
    - The Python Code
    - The Complete Python Code
    - Running the Program
    - Summary
    - Experiments!

- 16. Contents in Detail

13 Laser Audio Display
  Generating Patterns with a Laser
    Motor Control
    The Fast Fourier Transform
  Requirements
    Constructing the Laser Display
    Wiring the Motor Driver
  The Arduino Sketch
    Configuring the Arduino’s Digital Output Pins
    The Main Loop
    Stopping the Motors
  The Python Code
    Selecting the Audio Device
    Reading Data from the Input Device
    Computing the FFT of the Data Stream
    Extracting Frequency Information from the FFT Values
    Converting Frequency to Motor Speed and Direction
    Testing the Motor Setup
    Command Line Options
    Manual Testing
  The Complete Python Code
  Running the Program
  Summary
  Experiments!

14 A Raspberry Pi–Based Weather Monitor
  The Hardware
    The DHT11 Temperature and Humidity Sensor
    The Raspberry Pi
    Setting Up Your Pi
  Installing and Configuring Software
    The Operating System
    Initial Configuration
    Wi-Fi Setup
    Setting Up the Programming Environment
    Connecting via SSH
    The Bottle Web Framework
    Plotting with flot
    Shutting Down the Pi
  Building the Hardware
  The Code
    Handling Sensor Data Requests
    Plotting the Data
    The update() Method

- 17. Contents in Detail

    The JavaScript Handler for the LED
    Adding Interactivity
  The Complete Code
  Running the Program
  Summary
  Experiments!

A Software Installation
  Installing Source Code for the Book’s Projects
  Installing on Windows
    Installing GLFW
    Installing Prebuilt Binaries for Each Module
    Other Options
  Installing on OS X
    Installing Xcode and MacPorts
    Installing Modules
  Installing on Linux

B Basic Practical Electronics
  Common Components
  Essential Tools
  Building Circuits
  Going Further

C Raspberry Pi Tips and Tricks
  Setting Up Wi-Fi
  Checking Whether Your Pi Is Connected
  Preventing the Wi-Fi Adapter from Going to Sleep
  Backing Up Your Code and Data from the Pi
  Backing Up Your Entire Pi OS
  Logging In to Your Pi with SSH
  Using the Raspberry Pi Camera
  Enabling Sound on Your Pi
  Making Your Pi Talk
  Making HDMI Work
  Making Your Pi Mobile
  Checking Your Raspberry Pi Hardware Version

Index

- 18. Acknowledgments

Writing a book is like running a marathon. Or so I’ve been told. But what I do know is that writing this book tested the limits of my endurance, and I couldn’t have done it without my personal cheerleading squad of close friends and family.

First of all, I’d like to thank my wife Hema for her constant love, encouragement, and patience throughout the two years it took to complete this work. I thank my friend Raviprakash Jayaraman for being a co-conspirator in all my dubious projects, for being a technical reviewer for this book, and for interesting lunches, movies, and trips to the S.P. Road Zoo. I thank my friend Seby Kallarakkal for pushing me to pursue this book and for all the interesting discussions we had together. I am grateful to my pal Dr. Santosh Hemachandra for helpful discussions on fast Fourier transforms. I’d like to thank Karthikeyan Chellappa for helping me test out the installation of Python modules and for our running sessions around Kaikondrahalli Lake.

- 19. I would also like to thank Matthew Denham (with whom I conversed on reddit) for his help on the mathematics of Spirographs.

I’d like to thank Tyler Ortman and Bill Pollock of No Starch Press, for accepting my book proposal, and Serena Yang, for her professional work in editing the book. I’d like to thank Nicholas Kramer for his technical review of the book.

I thank my parents A.V. Venkitachalam and N. Saraswathy for providing me with an education far beyond their financial means. Finally, I thank all my teachers who inspired me. I hope to remain a student all my life.

- 20. Introduction

Welcome to Python Playground! Within these pages, you’ll find 14 exciting projects designed to encourage you to explore the world of programming with Python. The projects cover a wide range of topics, such as drawing Spirograph-like patterns, creating ASCII art, 3D rendering, and projecting laser patterns in sync with music. In addition to being fun in and of themselves, these projects are designed to be jumping-off points for you to explore your own ideas by expanding on each of the projects.

Who Is This Book For?

Python Playground is written for anyone curious about how to use programming to understand and explore ideas. The projects in this book assume that you know basic Python syntax and basic programming concepts and that you’re familiar with high-school level mathematics. I’ve done my best to explain in detail the math you need for all projects.

- 21. This book is not intended to be your first book on Python. I won’t walk you through the basics. I will, however, show you how to use Python to solve a variety of real-world problems in a series of nontrivial projects. As you work through the projects, you’ll explore the nuances of the Python programming language and learn how to work with some popular Python libraries. But perhaps even more importantly, you’ll learn how to break down a problem into parts, develop an algorithm to solve that problem, and then implement a solution from the ground up using Python. It can be difficult to solve real-world problems because they are often open-ended and require expertise in various domains. But Python offers the tools to facilitate problem-solving. Overcoming difficulties and finding solutions to real problems is the most important part of your journey on the way to becoming an expert programmer.

What’s in This Book?

Let’s take a quick tour through the chapters in this book.

Part I: Warming Up

Chapter 1 will show you how to parse iTunes playlist files and gather useful information from them, such as track lengths and common tracks. In Chapter 2, we use parametric equations and Turtle graphics to draw curves like the ones generated by a Spirograph.

Part II: Simulating Life

This part is about using mathematical models to simulate phenomena. In Chapter 3, you’ll learn how to implement Conway’s Game of Life algorithm to generate dynamic patterns that create other patterns as a sort of simulation of artificial life. Chapter 4 will show you how to create realistic plucked string sounds using the Karplus-Strong algorithm. Then, in Chapter 5, you’ll learn how to implement the Boids algorithm to simulate the flocking behavior of birds.

Part III: Fun with Images

This part will introduce you to reading and manipulating 2D images with Python. Chapter 6 shows you how to create ASCII art from an image. In Chapter 7, you’ll make a photomosaic, and in Chapter 8, you’ll learn how to generate autostereograms, which create the illusion of a 3D image.

Part IV: Enter 3D

The projects in this part use the OpenGL 3D graphics library. Chapter 9 introduces the basics of using OpenGL to create simple 3D graphics. In Chapter 10, you’ll create a particle simulation, a fountain of fireworks that uses math and OpenGL shaders for computation and rendering. Then in Chapter 11, you’ll use OpenGL shaders to implement a volume ray casting algorithm to render volumetric data, a technique commonly used for medical imaging such as MRI and CT scans.

- 22. Part V: Hardware Hacking

In the final part, you’ll use Python to explore the Arduino microcontroller and the Raspberry Pi. In Chapter 12, you’ll use the Arduino to read and plot sensor data from a simple circuit. In Chapter 13, you’ll combine Python with an Arduino to control two rotating mirrors and a laser to produce a laser show that responds to sound. In Chapter 14, you’ll use the Raspberry Pi to build a web-based weather monitoring system.

Why Python?

Python is an ideal language for exploring programming. As a multiparadigm language, it provides you with a lot of flexibility in how you structure your programs. You can use Python as a scripting language to simply execute code, as a procedural language to organize your program into a collection of functions that call each other, or as an object-oriented language that uses classes, inheritance, and modules to build up a hierarchy. This flexibility allows you to choose the programming style most suited to a particular project.

When you develop using a more traditional language like C or C++, you have to compile and link your code before you can run it. With Python, you can run it directly after editing. (Under the hood, Python compiles your code into an intermediate bytecode that is then run by the Python interpreter, but these processes are transparent to you, the user.) In practice, the process of modifying and running your code over and over is much less cumbersome with Python.

Furthermore, the Python interpreter is a very handy tool for checking code syntax, getting help with modules, doing quick computations, and even testing code under development. For example, when I write Python code, I keep three windows open: a text editor, a shell, and a Python interpreter. As I develop code in the editor, I import my functions or classes into the interpreter and test them as I go.

Python has a very small set of simple and powerful data structures. If you already understand strings, lists, tuples, dictionaries, list comprehensions, and basic control structures such as for and while loops, you’re off to a great start. Python’s succinct and expressive syntax makes it easy to do complex operations with just a few lines of code. And once you’re familiar with Python’s built-in and third-party modules, you’ll have an arsenal of tools to tackle real problems like the ones covered in this book. There are standard ways to call C/C++ code from Python and vice versa, and because you can find libraries to do almost anything in Python, it’s easy to combine Python with other language modules in bigger projects. This is why Python is considered a great glue language: it makes it easy to combine diverse software components. The hardware projects at the end of this book demonstrate how Python can work side by side with Arduino code and JavaScript. Real software projects often use a mix of software technologies, and Python fits very well into such layered architectures.

- 23. The following example demonstrates the ease of working with Python. While developing code for the Raspberry Pi weather monitor in Chapter 14, I wrote this string looking at the oscilloscope output from the temperature/humidity sensor:

    0011011100000000000110100000000001010001

Since I don’t speak binary (especially at 7 am on a Sunday morning), I fired up the Python interpreter and entered:

    >>> str = '0011011100000000000110100000000001010001'
    >>> len(str)
    40
    >>> [int(str[i:i+8], 2) for i in range(0, 40, 8)]
    [55, 0, 26, 0, 81]

This code splits up the 40-bit string into five 8-bit integers, which I can actually interpret. The data above is decoded as a 55.0 percent humidity reading at a temperature of 26.0 degrees centigrade, and the checksum is 55 + 26 = 81.

This example demonstrates the practical use of the Python interpreter as a very powerful calculator. You don’t need to write a complete program to do quick computations; just open up the interpreter and get going. This is just one of the many reasons why I love Python, and why I think you will too.

Python Versions

This book was built with Python 3.3.3, but all code is compatible with Python 2.7.x and 3.x.

The Code in This Book

I’ve done my best throughout this book to walk you through the code for each project in detail, piece by piece. You can either enter the code yourself or download the complete source code (using the Download Zip option) for all programs in the book at https://github.com/electronut/pp/.

You’ll find several exciting projects in the following pages. I hope you have as much fun playing with them as I had creating them. And don’t forget to explore further with the exercises presented at the end of each project. I wish you many happy hours of programming with Python Playground!
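The sensor string decoded in the interpreter session earlier in this introduction can also be checked by a small function. What follows is only a sketch under stated assumptions: the name decode_dht11 is made up for illustration, and the checksum rule (the low 8 bits of the sum of the first four bytes) is an assumption that happens to agree with the 55 + 26 = 81 arithmetic above.

```python
def decode_dht11(bits):
    """Split a 40-bit string into five bytes and check the last one.

    Hypothetical helper for illustration; not part of the book's code.
    """
    if len(bits) != 40 or set(bits) - {"0", "1"}:
        raise ValueError("expected a string of exactly 40 binary digits")
    # Convert each 8-character slice from base 2 to an integer.
    data = [int(bits[i:i + 8], 2) for i in range(0, 40, 8)]
    hum_int, hum_frac, temp_int, temp_frac, checksum = data
    # Assumption: the checksum is the low 8 bits of the sum of the
    # first four bytes.
    if (hum_int + hum_frac + temp_int + temp_frac) & 0xFF != checksum:
        raise ValueError("checksum mismatch")
    # Return only the integral parts of humidity and temperature.
    return hum_int, temp_int


humidity, temperature = decode_dht11(
    "0011011100000000000110100000000001010001")
print(humidity, temperature)
```

The interpreter one-liner is still the quicker tool for a single reading; a function like this only starts to pay off once you want bad readings rejected automatically.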

- 24. Part I: Warming Up

“In the beginner’s mind there are many possibilities; in the expert’s mind there are few.” —Shunryu Suzuki

- 26. 1 Parsing iTunes Playlists

Our Python expedition begins with a simple project that finds duplicate music tracks in iTunes playlist files and plots various statistics such as track lengths and ratings. You’ll start by taking a look at the iTunes playlist format and then learn how to extract information from these files using Python. To plot this data, you’ll use the matplotlib library.

In this project, you will learn about the following topics:

• XML and property list (p-list) files
• Python lists and dictionaries
• Using Python set objects
• Using numpy arrays
• Histograms and scatter plots
• Making simple plots with the matplotlib library
• Creating and saving data files

- 27. Anatomy of the iTunes Playlist File

The information in an iTunes library can be exported into playlist files. (Choose File ▸ Library ▸ Export Playlist in iTunes.) The playlist files are written in Extensible Markup Language (XML), a text-based language designed to represent text-based information hierarchically. It consists of a tree-like collection of user-defined tags in the form <myTag>, each of which can have attributes and child tags with additional information.

When you open a playlist file in a text editor, you’ll see something like this abbreviated version:

    <?xml version="1.0" encoding="UTF-8"?>
    <!DOCTYPE plist PUBLIC "-//Apple Computer//DTD PLIST 1.0//EN"
        "http://www.apple.com/DTDs/PropertyList-1.0.dtd">
    <plist version="1.0">
    <dict>
        <key>Major Version</key><integer>1</integer>
        <key>Minor Version</key><integer>1</integer>
        --snip--
        <key>Tracks</key>
        <dict>
            <key>2438</key>
            <dict>
                <key>Track ID</key><integer>2438</integer>
                <key>Name</key><string>Yesterday</string>
                <key>Artist</key><string>The Beatles</string>
                <key>Composer</key><string>Lennon [John], McCartney [Paul]</string>
                <key>Album</key><string>Help!</string>
            </dict>
            --snip--
        </dict>
        <key>Playlists</key>
        <array>
            <dict>
                <key>Name</key><string>Now</string>
                <key>Playlist ID</key><integer>21348</integer>
                --snip--
                <array>
                    <dict>
                        <key>Track ID</key><integer>6382</integer>
                    </dict>
                    --snip--
                </array>
            </dict>
        </array>
    </dict>
    </plist>

- 28. The <dict> and <key> tags relate to the way a property list (p-list) file represents objects as dictionaries, which are data structures that link a key with a value to make it easy to find a corresponding value. P-list files use dictionaries of dictionaries, where values associated with a key in one dictionary are often themselves yet another dictionary (or even a list of dictionaries).

The <?xml?> declaration on the first line identifies the file as XML. Following it, a document type declaration (DOCTYPE) names the document type definition (DTD) that defines the structure of the XML document. As you can see, Apple publishes this definition at a uniform resource locator (URL) visible in the declaration.

Next, the file declares the top-level <plist> tag, whose only child element is the dictionary <dict>. This dictionary contains various keys, including Major Version, Minor Version, and so on, but you’re interested in the Tracks key. Notice that the value corresponding to this key is also a dictionary, which maps an integer track ID to another dictionary containing elements such as Name, Artist, and so on. Each track in a music collection has a unique track ID key. The playlist order is defined by Playlists, a child of the top-level dictionary.

Requirements

In this project, we’ll use the built-in module plistlib to read the playlist files. We’ll also use the matplotlib library for plotting and numpy arrays to store data.

The Code

The goals in this project are to find duplicates in your music collection, identify tracks shared between playlists, plot the distribution of track durations, and graph the relationship between song ratings and length.

As your music collection grows, you’ll invariably end up with duplicate songs. To identify duplicates, search the names in the dictionary associated with the Tracks key (discussed earlier) for duplicates and use track length as an additional criterion to detect duplicates, since a track with the same name but a different length is likely unique.

To find tracks shared between two or more playlists, you’ll export the collections as playlist files, gather the track names for each playlist, and compare them as sets to discover common tracks by finding the intersection between sets.

While gathering data from your music collection, you’ll create a couple of plots with the powerful matplotlib (http://matplotlib.org/) plotting package developed by the late John Hunter. You’ll draw a histogram to show the distribution of track durations and a scatter plot to compare song ratings with song length.

To see the full project code, skip ahead to “The Complete Code” on page 11.
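Before walking through the project code, it may help to see the nested-dictionary layout and the set comparison in one self-contained sketch. Everything here is illustrative rather than the book's implementation: the tiny playlist template and the helper names make_playlist, track_names, and common_tracks are assumptions. The sketch also uses plistlib.loads(), which is available in Python 3.4 and later; the readPlist() function used in this chapter was removed in Python 3.9, so on a recent interpreter a call like this (or plistlib.load() on an open file) is the likely substitute.

```python
import plistlib

# Hypothetical, minimal stand-in for an exported playlist file.
PLAYLIST_TEMPLATE = """<?xml version="1.0" encoding="UTF-8"?>
<!DOCTYPE plist PUBLIC "-//Apple Computer//DTD PLIST 1.0//EN" "http://www.apple.com/DTDs/PropertyList-1.0.dtd">
<plist version="1.0">
<dict>
  <key>Tracks</key>
  <dict>
{tracks}
  </dict>
</dict>
</plist>
"""


def make_playlist(names):
    """Build p-list XML bytes holding one track per name (test data only)."""
    entries = []
    for track_id, name in enumerate(names, start=1):
        entries.append(
            "<key>{0}</key><dict>"
            "<key>Track ID</key><integer>{0}</integer>"
            "<key>Name</key><string>{1}</string>"
            "</dict>".format(track_id, name))
    return PLAYLIST_TEMPLATE.format(tracks="\n".join(entries)).encode("utf-8")


def track_names(plist_bytes):
    """Return the set of track names found under the Tracks key."""
    plist = plistlib.loads(plist_bytes)  # top-level dictionary
    tracks = plist["Tracks"]             # maps track ID -> track dictionary
    return {track["Name"] for track in tracks.values() if "Name" in track}


def common_tracks(playlists):
    """Intersect the name sets of all playlists."""
    name_sets = [track_names(p) for p in playlists]
    # set.intersection needs at least one set, so guard the empty case.
    return set.intersection(*name_sets) if name_sets else set()


rock = make_playlist(["Yesterday", "Help!", "Girl"])
mix = make_playlist(["Help!", "Something", "Yesterday"])
print(sorted(common_tracks([rock, mix])))  # ['Help!', 'Yesterday']
```

Building the sets with a comprehension that tests for the Name key is a design choice made for this sketch; the project code below uses try/except for the same purpose.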

- 29. Finding Duplicates

You’ll start by finding duplicate tracks with the findDuplicates() method, as shown here:

    def findDuplicates(fileName):
        print('Finding duplicate tracks in %s...' % fileName)
        # read in a playlist
        plist = plistlib.readPlist(fileName)  # ➊
        # get the tracks from the Tracks dictionary
        tracks = plist['Tracks']  # ➋
        # create a track name dictionary
        trackNames = {}  # ➌
        # iterate through the tracks
        for trackId, track in tracks.items():  # ➍
            try:
                name = track['Name']  # ➎
                duration = track['Total Time']
                # look for existing entries
                if name in trackNames:  # ➏
                    # if a name and duration match, increment the count
                    # compare the track lengths in whole seconds
                    if duration//1000 == trackNames[name][0]//1000:  # ➐
                        count = trackNames[name][1]
                        trackNames[name] = (duration, count+1)  # ➑
                else:
                    # add dictionary entry as tuple (duration, count)
                    trackNames[name] = (duration, 1)  # ➒
            except:
                # ignore
                pass

At ➊, the readPlist() method takes a p-list file as input and returns the top-level dictionary. You access the Tracks dictionary at ➋, and at ➌, you create an empty dictionary to keep track of duplicates. At ➍, you begin iterating through the Tracks dictionary using the items() method, which is commonly used in Python to retrieve both the key and the value of a dictionary as you iterate through it.

At ➎, you retrieve the name and duration of each track in the dictionary. You check to see whether the current track name already exists in the dictionary being built by using the in keyword ➏. If so, the program checks whether the track lengths of the existing and newly found tracks are identical ➐ by dividing each track length by 1,000 with the // (floor division) operator, which converts milliseconds to whole seconds and discards the fractional part. (Of course, this means that two tracks whose lengths fall within the same second are considered to be the same length.) If you determine that the two track lengths are equal, you get the value associated with name, which is the tuple (duration, count), and increment count at ➑. If this is the first time the program has come across the track name, it creates a new entry for it, with a count of 1 ➒.
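To watch the counting logic run without an iTunes export at hand, here is a small sketch that applies the same name-plus-duration rule to a hand-written dictionary. The function name count_duplicates and the sample data are hypothetical, and the sketch checks for missing keys with get() rather than the try block used in findDuplicates().

```python
def count_duplicates(tracks):
    """Return {name: (duration_ms, count)} for a Tracks-style dictionary.

    Illustrative helper only; it mirrors the rule used in findDuplicates().
    """
    track_names = {}
    for track in tracks.values():
        name = track.get("Name")
        duration = track.get("Total Time")
        if name is None or duration is None:
            continue  # skip tracks that lack a name or a duration
        if name in track_names:
            seen_duration, count = track_names[name]
            # // is floor division: 125600 ms and 125950 ms both give 125 s.
            if duration // 1000 == seen_duration // 1000:
                track_names[name] = (duration, count + 1)
        else:
            track_names[name] = (duration, 1)
    return track_names


# Made-up sample data in the shape of plist['Tracks'].
sample = {
    "1": {"Name": "Yesterday", "Total Time": 125600},
    "2": {"Name": "Yesterday", "Total Time": 125950},  # same whole second
    "3": {"Name": "Yesterday", "Total Time": 190000},  # different length
    "4": {"Name": "Help!", "Total Time": 138000},
    "5": {"Artist": "Unknown"},                        # no name: skipped
}

for name, (duration, count) in count_duplicates(sample).items():
    print(name, duration, count)
```

One consequence worth noticing: only the first duration seen for a name (or a later one within the same second) is remembered, so the result can depend on the order in which tracks are visited.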

- 30. You enclose the body of the code’s main for loop in a try block because some music tracks may not have the track name defined. In that case, you skip the track and include only pass (which does nothing) in the except section.

Extracting Duplicates

To extract duplicates, you use this code:

        # store duplicates as (count, name) tuples
        dups = []  # ➊
        for k, v in trackNames.items():
            if v[1] > 1:  # ➋
                dups.append((v[1], k))
        # save duplicates to a file
        if len(dups) > 0:  # ➌
            print("Found %d duplicates. Track names saved to dups.txt" % len(dups))
        else:
            print("No duplicate tracks found!")
        f = open("dups.txt", "w")  # ➍
        for val in dups:
            f.write("[%d] %s\n" % (val[0], val[1]))  # ➎
        f.close()

At ➊, you create an empty list to hold the duplicates. Next, you iterate through the trackNames dictionary, and if count (accessed with v[1] because it’s the second element in the tuple) is greater than 1 ➋, you append a tuple with (count, name) to the list. At ➌, the program prints information about what it has found and then saves that information to a file using the open() method ➍. At ➎, you iterate through the dups list, writing out the duplicate entries.

Finding Tracks Common Across Multiple Playlists

Now let’s look at how you find music tracks that are common across multiple playlists:

    def findCommonTracks(fileNames):
        # a list of sets of track names
        trackNameSets = []  # ➊
        for fileName in fileNames:
            # create a new set
            trackNames = set()  # ➋
            # read in playlist
            plist = plistlib.readPlist(fileName)  # ➌
            # get the tracks
            tracks = plist['Tracks']
            # iterate through the tracks
            for trackId, track in tracks.items():
                try:
                    # add the track name to a set
                    trackNames.add(track['Name'])  # ➍
                except:
                    # ignore
                    pass
            # add to list
            trackNameSets.append(trackNames)  # ➎
        # get the set of common tracks
        commonTracks = set.intersection(*trackNameSets)  # ➏
        # write to file
        if len(commonTracks) > 0:
            # binary mode, because encode() returns bytes
            f = open("common.txt", "wb")  # ➐
            for val in commonTracks:
                s = "%s\n" % val
                f.write(s.encode("UTF-8"))  # ➑
            f.close()
            print("%d common tracks found. "
                  "Track names written to common.txt." % len(commonTracks))
        else:
            print("No common tracks!")

- 31. First, you pass a list of playlist filenames to findCommonTracks(), which creates an empty list ➊ to store the sets of track names created from each playlist. The program then iterates through each file in the list. For each file, you create a Python set object called trackNames ➋; then as in findDuplicates(), you use plistlib to read in the file ➌ and get the Tracks dictionary. Next, you iterate through each track in this dictionary and add its name to the trackNames set ➍. Once the program has finished with all tracks in a file, it adds this set to trackNameSets ➎.

At ➏, you use the set.intersection() method to get the set of tracks that are common among the sets. (You use the Python * operator to unpack the list of sets into separate arguments.) If the program finds any tracks that are common among sets, it writes the track names to a file. At ➐, you open the file in binary mode, and the two lines that follow do the actual writing. Use encode() to convert each line to UTF-8 bytes so that any Unicode characters are handled correctly ➑.

Collecting Statistics

Next, use the plotStats() method to collect statistics for the tracks:

    def plotStats(fileName):
        # read in a playlist
        plist = plistlib.readPlist(fileName)  # ➊
        # get the tracks from the playlist
        tracks = plist['Tracks']
        # create lists of song ratings and track durations
        ratings = []  # ➋
        durations = []
        # iterate through the tracks
        for trackId, track in tracks.items():
            try:
                ratings.append(track['Album Rating'])  # ➌
                durations.append(track['Total Time'])
            except:
                # ignore
                pass
        # ensure that valid data was collected
        if ratings == [] or durations == []:  # ➍
            print("No valid Album Rating/Total Time data in %s." % fileName)
            return

- 32. The goal here is to gather ratings and track durations and then do some plotting. At ➊ and in the lines that follow, you read the playlist file and get access to the Tracks dictionary. Next, you create two empty lists to store ratings and durations ➋. (Ratings in iTunes playlists are stored as integers in the range [0, 100].) Iterating through the tracks, you get and append the ratings and durations to the appropriate lists at ➌. Finally, the sanity check at ➍ makes sure you collected valid data from the playlist file.

Plotting Your Data

You’re now ready to plot some data.

        # scatter plot
        x = np.array(durations, np.int32)
        # convert to minutes
        x = x/60000.0
        y = np.array(ratings, np.int32)
        pyplot.subplot(2, 1, 1)
        pyplot.plot(x, y, 'o')
        pyplot.axis([0, 1.05*np.max(x), -1, 110])
        pyplot.xlabel('Track duration')
        pyplot.ylabel('Track rating')
        # plot histogram
        pyplot.subplot(2, 1, 2)
        pyplot.hist(x, bins=20)
        pyplot.xlabel('Track duration')
        pyplot.ylabel('Count')
        # show plot
        pyplot.show()

First, you put the data for the track durations into a 32-bit integer array using numpy.array() (imported as np in the code); then you use numpy to apply an operation to every element in the array. In this case, you’re converting the duration in milliseconds to minutes by dividing each value by 60 × 1000. You store the track ratings in another numpy array, y.

Use matplotlib to draw two plots in the same figure. The arguments to the first subplot() call, namely (2, 1, 1), tell matplotlib that the figure should have two rows (2) and one column (1) and that the next plot should go in the first row (1). You create the plot by calling plot(), and the 'o' tells matplotlib to use circles to represent the data.
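The element-wise conversion in the plotting code can be tried without opening a plot window. The following sketch uses made-up durations and ratings, and it calls np.histogram() with an explicit range as a stand-in for the counting that pyplot.hist() does internally; treat it as an illustration of the idea rather than a piece of the project.

```python
import numpy as np

# Made-up sample data: durations in milliseconds, ratings from 0 to 100.
durations_ms = [60000, 150000, 210000, 450000, 90000]
ratings = [80, 100, 60, 40, 20]

# Dividing an integer array by a float converts every element at once
# and yields a float array; 60000 ms per minute gives minutes.
x = np.array(durations_ms, np.int32) / 60000.0
y = np.array(ratings, np.int32)
print(x)  # durations in minutes

# Count how many durations fall into each of 4 equal-width bins
# covering 0 to 8 minutes (bin edges 0, 2, 4, 6, 8).
counts, edges = np.histogram(x, bins=4, range=(0, 8))
print(counts)
print(edges)

# The x-axis limit used for the scatter plot: 5 percent beyond the maximum.
print(1.05 * np.max(x))
```

Passing the two arrays x and y to pyplot.plot() and x to pyplot.hist() would then produce the scatter plot and histogram described in this section.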

- 33. 10 Chapter 1 At z, you set slightly inflated ranges for both the x-axis and y-axis to produce some padding between the plot and the axes. At { and |, you set the text for the x-axis and y-axis labels. Now you plot the duration histogram in the second row of the same fig- ure using the matplotlib method hist() }. The bins argument sets the num- ber of data partitions, each of which is used for adding counts in that range. Finally, you call show() ~, and matplotlib displays your beautiful graph in a new window. Command Line Options Now let’s look at the main() method of the program to see how it handles command line arguments: def main(): # create parser descStr = """ This program analyzes playlist files (.xml) exported from iTunes. """ u parser = argparse.ArgumentParser(description=descStr) # add a mutually exclusive group of arguments v group = parser.add_mutually_exclusive_group() # add expected arguments w group.add_argument('--common', nargs='*', dest='plFiles', required=False) x group.add_argument('--stats', dest='plFile', required=False) y group.add_argument('--dup', dest='plFileD', required=False) # parse args z args = parser.parse_args() if args.plFiles: # find common tracks findCommonTracks(args.plFiles) elif args.plFile: # plot stats plotStats(args.plFile) elif args.plFileD: # find duplicate tracks findDuplicates(args.plFileD) else: { print("These are not the tracks you are looking for.") Most projects in this book have command line arguments. Rather than trying to parse them by hand and creating a mess, delegate this mundane task to Python’s argparse module. At u, you create an ArgumentParser object for this purpose. The program can be used to do three different things such as find common tracks among playlists, plot statistics, or find duplicate tracks in a playlist. However, it can do only one of them at a time, and you don’t want it to crash if the user decides to specify two or more of these www.it-ebooks.info

- 34. options at the same time. The argparse module provides a solution to this challenge in the form of mutually exclusive argument groups. At ➋, you use the parser.add_mutually_exclusive_group() method to create such a group.

At ➌, ➍, and ➎, you specify the command line options mentioned earlier and the variable names (args.plFiles, args.plFile, and args.plFileD) in which the parsed values should be stored. The actual parsing is done at ➏. Once the arguments are parsed, you pass them to the appropriate functions, findCommonTracks(), plotStats(), and findDuplicates(), as discussed earlier in this chapter.

To see whether an argument was parsed, test the appropriate variable name in args. For example, if the user did not use the --common option (which finds common tracks among playlists), args.plFiles should be set to None after parsing. You handle the case in which the user didn’t enter any arguments at ➐.

The Complete Code

Here is the complete program. You can also find the code and some test data for this project at https://github.com/electronut/pp/tree/master/playlist/.

    import re, argparse
    import sys
    from matplotlib import pyplot
    import plistlib
    import numpy as np

    def findCommonTracks(fileNames):
        """
        Find common tracks in given playlist files,
        and save them to common.txt.
        """
        # a list of sets of track names
        trackNameSets = []
        for fileName in fileNames:
            # create a new set
            trackNames = set()
            # read in playlist
            # (readPlist() was removed in Python 3.9; newer versions use plistlib.load())
            plist = plistlib.readPlist(fileName)
            # get the tracks
            tracks = plist['Tracks']
            # iterate through the tracks
            for trackId, track in tracks.items():
                try:
                    # add the track name to a set
                    trackNames.add(track['Name'])
                except:
                    # ignore
                    pass
            # add to list
            trackNameSets.append(trackNames)

- 35.
        # get the set of common tracks
        commonTracks = set.intersection(*trackNameSets)
        # write to file
        if len(commonTracks) > 0:
            # open in binary mode because UTF-8 encoded bytes are written
            f = open("common.txt", 'wb')
            for val in commonTracks:
                s = "%s\n" % val
                f.write(s.encode("UTF-8"))
            f.close()
            print("%d common tracks found. "
                  "Track names written to common.txt." % len(commonTracks))
        else:
            print("No common tracks!")

    def plotStats(fileName):
        """
        Plot some statistics by reading track information from playlist.
        """
        # read in a playlist
        plist = plistlib.readPlist(fileName)
        # get the tracks from the playlist
        tracks = plist['Tracks']
        # create lists of song ratings and track durations
        ratings = []
        durations = []
        # iterate through the tracks
        for trackId, track in tracks.items():
            try:
                ratings.append(track['Album Rating'])
                durations.append(track['Total Time'])
            except:
                # ignore
                pass
        # ensure that valid data was collected
        if ratings == [] or durations == []:
            print("No valid Album Rating/Total Time data in %s." % fileName)
            return

        # scatter plot
        x = np.array(durations, np.int32)
        # convert to minutes
        x = x/60000.0
        y = np.array(ratings, np.int32)
        pyplot.subplot(2, 1, 1)
        pyplot.plot(x, y, 'o')
        pyplot.axis([0, 1.05*np.max(x), -1, 110])
        pyplot.xlabel('Track duration')
        pyplot.ylabel('Track rating')

        # plot histogram
        pyplot.subplot(2, 1, 2)
        pyplot.hist(x, bins=20)
        pyplot.xlabel('Track duration')
        pyplot.ylabel('Count')

- 36.
        # show plot
        pyplot.show()

    def findDuplicates(fileName):
        """
        Find duplicate tracks in given playlist.
        """
        print('Finding duplicate tracks in %s...' % fileName)
        # read in playlist
        plist = plistlib.readPlist(fileName)
        # get the tracks from the Tracks dictionary
        tracks = plist['Tracks']
        # create a track name dictionary
        trackNames = {}
        # iterate through tracks
        for trackId, track in tracks.items():
            try:
                name = track['Name']
                duration = track['Total Time']
                # look for existing entries
                if name in trackNames:
                    # if a name and duration match, increment the count
                    # compare track lengths in whole seconds
                    if duration//1000 == trackNames[name][0]//1000:
                        count = trackNames[name][1]
                        trackNames[name] = (duration, count+1)
                else:
                    # add dictionary entry as tuple (duration, count)
                    trackNames[name] = (duration, 1)
            except:
                # ignore
                pass
        # store duplicates as (count, name) tuples
        dups = []
        for k, v in trackNames.items():
            if v[1] > 1:
                dups.append((v[1], k))
        # save duplicates to a file
        if len(dups) > 0:
            print("Found %d duplicates. Track names saved to dups.txt" % len(dups))
        else:
            print("No duplicate tracks found!")
        f = open("dups.txt", 'w')
        for val in dups:
            f.write("[%d] %s\n" % (val[0], val[1]))
        f.close()

    # gather our code in a main() function
    def main():
        # create parser
        descStr = """
        This program analyzes playlist files (.xml) exported from iTunes.
        """
        parser = argparse.ArgumentParser(description=descStr)

- 37.
        # add a mutually exclusive group of arguments
        group = parser.add_mutually_exclusive_group()
        # add expected arguments
        group.add_argument('--common', nargs='*', dest='plFiles', required=False)
        group.add_argument('--stats', dest='plFile', required=False)
        group.add_argument('--dup', dest='plFileD', required=False)
        # parse args
        args = parser.parse_args()
        if args.plFiles:
            # find common tracks
            findCommonTracks(args.plFiles)
        elif args.plFile:
            # plot stats
            plotStats(args.plFile)
        elif args.plFileD:
            # find duplicate tracks
            findDuplicates(args.plFileD)
        else:
            print("These are not the tracks you are looking for.")

    # main method
    if __name__ == '__main__':
        main()

Running the Program

Here is a sample run of the program:

    $ python playlist.py --common test-data/maya.xml test-data/rating.xml

Here is the output:

    5 common tracks found. Track names written to common.txt.
    $ cat common.txt
    God Shuffled His Feet
    Rubric
    Floe
    Stairway To Heaven
    Pi's Lullaby
    moksha:playlist mahesh$

Now let’s plot some statistics for the tracks.

    $ python playlist.py --stats test-data/rating.xml

Figure 1-1 shows the output from this sample run.
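As a rough illustration of how the mutually exclusive group in main() behaves, the sketch below rebuilds the same parser and hands it argument lists directly instead of reading the real command line. The helper name build_parser() and the file names are hypothetical; the option names and dest values are the ones used in the listing.

```python
import argparse

def build_parser():
    # hypothetical helper: same options as main() in playlist.py
    parser = argparse.ArgumentParser(description="playlist option demo")
    group = parser.add_mutually_exclusive_group()
    group.add_argument('--common', nargs='*', dest='plFiles', required=False)
    group.add_argument('--stats', dest='plFile', required=False)
    group.add_argument('--dup', dest='plFileD', required=False)
    return parser

parser = build_parser()

# one option at a time parses normally; the unused options stay None
args = parser.parse_args(['--stats', 'rating.xml'])
print(args.plFile, args.plFiles, args.plFileD)

# two options from the same group make argparse print a usage error and exit
try:
    parser.parse_args(['--stats', 'rating.xml', '--dup', 'maya.xml'])
    conflict_rejected = False
except SystemExit:
    conflict_rejected = True
print(conflict_rejected)
```

Passing a list to parse_args() is a convenient way to try out option combinations without running the full program.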

- 38. Figure 1-1: Sample run of playlist.py

Summary

In this project, we developed a program that analyzes iTunes playlists and, in the process, learned some useful Python constructs. In upcoming projects, you’ll build on some of the basics covered here to explore a wide range of interesting topics and delve deeper into Python.

Experiments!

Here are a few ways you could build on this program:

1. When finding duplicate tracks, you considered track duration as an additional criterion to determine whether two tracks were identical. But when finding common tracks, you used only track names to make comparisons. Incorporate track duration as an additional check in findCommonTracks().
2. In the plotStats() function, you used the matplotlib hist() function to compute and display the histogram. Write code to compute the histogram manually and display it without using hist(). To display a plot as a bar chart, read up on bar charts in the matplotlib documentation.
3. Several mathematical formulas exist for calculating a correlation coefficient, which measures the strength of a relationship between two variables. Read up on correlation and calculate a correlation value for a rating/duration scatter plot using your own music data. Consider other scatter plots you can make with data gleaned from your playlists.
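As a possible starting point for the third experiment, one commonly used formula is the Pearson correlation coefficient, which divides the sum of the products of the deviations from the two means by the square root of the product of the two sums of squared deviations. The sketch below is only an assumption about how you might approach it; the function name pearson() and the sample values are made up for illustration and do not come from a real playlist.

```python
import math

def pearson(xs, ys):
    """Pearson correlation coefficient of two equal-length sequences."""
    n = len(xs)
    if n != len(ys) or n < 2:
        raise ValueError("need two sequences of equal length, at least 2 items")
    mean_x = sum(xs) / float(n)
    mean_y = sum(ys) / float(n)
    # sum of products of deviations, and sums of squared deviations
    cov = sum((x - mean_x) * (y - mean_y) for x, y in zip(xs, ys))
    var_x = sum((x - mean_x) ** 2 for x in xs)
    var_y = sum((y - mean_y) ** 2 for y in ys)
    if var_x == 0 or var_y == 0:
        raise ValueError("correlation is undefined for constant data")
    return cov / math.sqrt(var_x * var_y)

# made-up sample data: durations in minutes, ratings in the range [0, 100]
durations = [2.5, 3.0, 3.5, 4.0, 5.0]
ratings = [40, 60, 60, 80, 100]
print("r = %.3f" % pearson(durations, ratings))
```

A value near 1 or -1 suggests a strong linear relationship, and a value near 0 suggests little or none.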

- 40. 2 Spirographs

You can use a Spirograph toy (shown in Figure 2-1) to draw mathematical curves. The toy consists of two differently sized rings with plastic teeth, one large and one small. The small one has several holes. You put a pen or pencil through one of the holes and then rotate the smaller wheel inside the larger one (which has gears on its inside), keeping the pen in contact with the outer wheel, to draw an endless number of complex and wonderfully symmetric patterns.

In this project, you’ll use Python to create an animation of Spirograph-like drawing curves. Our spiro.py program will use Python and parametric equations to describe the motion of the Spirograph’s rings and draw the curves (which I call spiros). You’ll save the completed drawings as PNG image files and use command line options to specify parameters or to generate random spiros.

- 41. Figure 2-1: A Spirograph toy

In this project, you’ll learn how to draw spiros on your computer. You’ll also learn how to do the following:

• Create graphics with the turtle module.
• Use parametric equations.
• Use mathematical equations to generate curves.
• Draw a curve using lines.
• Use a timer to animate graphics.
• Save graphics to image files.

A word of caution about this project: I’ve chosen to use the turtle module for this project mainly for illustrative purposes and because it’s fun, but turtle is slow and not ideal for creating graphics when performance is critical. (What do you expect from turtles?) If you want to draw something fast, there are better ways to do so, and you’ll explore some of these options in upcoming projects.

Parametric Equations

In this section, you will look at a simple example of using parametric equations to draw a circle. Parametric equations express the coordinates of the points of a curve as functions of a variable, called a parameter. They make it easy to draw curves because you can just plug parameter values into the equations to produce points on the curve.

NOTE If you’d rather not get into this math right now, you can skip ahead to the next section, which talks about the equations specific to the Spirograph project.

- 42. Let’s begin by considering that the equation used to describe a circle with radius r, centered at the origin of a two-dimensional plane, is $x^2 + y^2 = r^2$. A circle consists of all the points whose x- and y-coordinates satisfy this equation.

Now, consider the following equations:

$x = r\cos(\theta)$
$y = r\sin(\theta)$

These equations are a parametric representation of a circle, where the angle $\theta$ is the parameter. Any value of (x, y) in these equations will satisfy the equation for a circle described earlier, $x^2 + y^2 = r^2$. If you vary $\theta$ from 0 to $2\pi$, you can use these equations to compute the corresponding (x, y) coordinates along the circle. Figure 2-2 shows this scheme.

Figure 2-2: Describing a circle with a parametric equation

Remember, these two equations apply to a circle centered at the origin of the coordinate system. You can put a circle at any point in the xy plane by translating the center of the circle to the point (a, b). So the more general parametric equations then become $x = a + r\cos(\theta)$ and $y = b + r\sin(\theta)$. Now let’s look at the equations that describe your spiros.

Spirograph Equations

Figure 2-3 shows a mathematical model of Spirograph-like motion. The model has no gears because they’re used in the toy only to prevent slippage, and here you don’t have to worry about anything slipping.
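To make the parametric form of the circle concrete, here is a minimal sketch that steps the angle from 0 to 2π and collects the resulting points for a circle centered at (a, b). The function name circle_points() and the steps parameter are assumptions chosen for illustration.

```python
import math

def circle_points(r, a=0.0, b=0.0, steps=36):
    """Return steps + 1 points on the circle of radius r centered at (a, b)."""
    points = []
    for i in range(steps + 1):
        # the parameter theta runs from 0 to 2*pi
        theta = 2 * math.pi * i / steps
        points.append((a + r * math.cos(theta), b + r * math.sin(theta)))
    return points

pts = circle_points(100, a=10, b=-20)

# every point satisfies (x - a)^2 + (y - b)^2 = r^2, up to rounding error
for x, y in pts:
    assert abs((x - 10) ** 2 + (y + 20) ** 2 - 100 ** 2) < 1e-6
print(len(pts))
```

Connecting consecutive points with straight lines gives a polygon that looks more and more like a circle as steps grows.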

- 43. Figure 2-3: Spirograph mathematical model

In Figure 2-3, C is the center of the smaller circle, and P is the pen’s tip. The radius of the bigger circle is R, and that of the smaller circle is r. You express the ratio of the radii as follows:

$k = \frac{r}{R}$

You express the ratio of segment PC to the smaller circle’s radius r as the variable l (l = PC / r), which determines how far the pen tip is from the center of the small circle. You then combine these variables to represent the motion of P to produce these parametric equations:

$x = R\left((1-k)\cos\theta + lk\cos\left(\frac{1-k}{k}\theta\right)\right)$

$y = R\left((1-k)\sin\theta - lk\sin\left(\frac{1-k}{k}\theta\right)\right)$

NOTE These curves are called hypotrochoids and epitrochoids. Although the equations may look a bit scary, the derivation is pretty straightforward. See the Wikipedia page if you’d like to explore the math.¹

1. http://en.wikipedia.org/wiki/Spirograph/
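As a small sketch of how these equations turn into code, the function below computes the pen position for one value of the angle, using the standard hypotrochoid form in which the y term is subtracted because the small circle turns in the opposite direction as it rolls inside the large one. The name spiro_point() is hypothetical, and the sample values R = 220, r = 65, and l = 0.8 are chosen only for illustration.

```python
import math

def spiro_point(R, r, l, theta):
    """Pen position (x, y) for outer radius R, inner radius r, and pen ratio l."""
    k = r / float(R)
    x = R * ((1 - k) * math.cos(theta) + l * k * math.cos((1 - k) * theta / k))
    y = R * ((1 - k) * math.sin(theta) - l * k * math.sin((1 - k) * theta / k))
    return x, y

# pen position at the start of the drawing (theta = 0)
x0, y0 = spiro_point(220, 65, 0.8, 0.0)
print(x0, y0)

# the pen always stays at distance l*r from the small circle's center C
theta = 1.234
px, py = spiro_point(220, 65, 0.8, theta)
cx, cy = (220 - 65) * math.cos(theta), (220 - 65) * math.sin(theta)
print(math.hypot(px - cx, py - cy))
```

Evaluating spiro_point() at many closely spaced angles and joining the results with lines is one way to trace the whole curve.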

- 44. Figure 2-4 shows how you use these equations to produce a curve that varies based on the parameters used. By varying the parameters R, r, and l, you can produce an endless variety of fascinating curves.

Figure 2-4: A sample curve (R = 220, r = 65, l = 0.8)

You draw the curve as a series of lines between points. If the points are close enough, the drawing looks like a smooth curve.

If you’ve played with a real Spirograph, you know that depending on the parameters used, Spirographs can require many revolutions to complete. To determine when to stop drawing, you use the periodicity of the Spirograph (how long before the Spirograph starts repeating itself) by looking at the ratio of the radii of the inner and outer circles:

$\frac{r}{R}$

You reduce this fraction by dividing the numerator and denominator by the greatest common divisor (GCD), and the numerator tells you how many periods the curve needs to complete itself. For example, in Figure 2-4, the GCD of (r, R) is 5.

$\frac{r}{R} = \frac{65}{220}$

Here is the reduced form of this fraction:

$\frac{65/5}{220/5} = \frac{13}{44}$

This tells you that in 13 revolutions, the curve will start repeating itself. The number 44 tells you the number of times the smaller circle revolves