Towards Automated A/B Testing

- 1. Towards Automated A/B Testing Alessandro Margara alessandro.margara@usi.ch Università della Svizzera Italiana, Lugano (CH) Giordano Tamburrelli giordano.tamburrelli@usi.ch Università della Svizzera Italiana, Lugano (CH)

- 2. User-intensive Applications • Large and evolving populations of users • Meeting user preferences is a crucial factor • Almost impossible to design applications that accurately capture all possible and meaningful user preferences upfront.

- 3. A/B Testing: what? • Initial (inaccurate) implementation based on the available knowledge A/B Testing: two distinct variants of the same application are compared using live experiments. • Run-time continuous monitoring, refinement and improvement to meet newly discovered user preferences

- 5. A/B Testing: what? • Widely adopted in industry: “The A/B Test: Inside the Technology That’s Changing the Rules of Business”.

- 6. A/B Testing: pitfalls 1. Development and deployment of multiple variants. 2. What is a variant 3. How many variants. 4. How to select variants. 5. How to evaluate variants. 6. When to stop • Still a difficult, tedious, and costly manual activity.

- 7. About this work • Adopt SBSE methods to improve/automate the A/B Testing process • Investigate the feasibility and draft a possible solution (GA+AOP) • A polished & ready-to-use solution

- 8. A/B Testing: an optimization problem • Features: From an abstract viewpoint a program p can be viewed as a finite set of features: F = {f1 . . . fn}. • Each feature f has an associated domain D that specifies which values are valid/allowed for f.

- 9. A/B Testing: an optimization problem • Instantiation functions: An instantiation function associates a feature with a specific value from its domain. • To obtain a concrete implementation for a program it is necessary to specify the instantiations for all the features. • The specification of different instantiations yields different concrete implementations of the same abstract program.

- 10. A/B Testing: an optimization problem • Variants: We call a concrete implementation of a program p a variant of p. • Constraints: A constraint is a function Ci,j : Di → P(Dj) that, given a domain value for a feature, returns a subset of values not allowed for other features • A variant is valid if it satisfies all the defined constraints.

- 11. A/B Testing: an optimization problem • Assessment Function: An assessment function is a function defined as o(v) : Vp → R, where Vp = {v1, . . . , vm} is the set of all possible variants for the program. • This function associates to each and every variant of a program a numeric value, which indicates the goodness of the variant with respect to the goal of the program.

- 12. A/B Testing: an optimization problem • Thus A/B testing can be formulated as: $\hat{v} = \arg\max_{v \in V_p} o(v)$ • Goal: exploit search algorithms to enable automated A/B testing.

- 13. Towards automated A/B Testing • Two ingredients: • A design-time declarative facility to specify program features • A run-time framework in charge of automatically and iteratively exploring the solution space

- 14. Towards automated A/B Testing

- 15. Specifying Features • Ad-hoc annotations specify the set of relevant features • They allow developers to write a parametric program that represents all possible variants • We rely on Aspect Oriented Programming to dynamically create variants from the parametric program

- 16. Primitive Type Features

      // Primitive feature specifications
      @StringFeature(name="checkOutButtonText", values={"CheckOut", "Buy", "Buy Now!"})
      String buttonText;

      @IntegerFeature(name="fontSize", range="12:1:18")
      int textSize;

      // Primitive feature uses
      Button checkOutButton = new Button();
      checkOutButton.setText(buttonText);
      checkOutButton.setFontSize(textSize);
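The slides do not show how the annotations are declared or how values reach the annotated fields. The following is a minimal sketch under stated assumptions: the `@IntegerFeature` declaration, the reading of `range` as `min:step:max`, and the `FeatureInjector` and `CheckoutPage` classes are all hypothetical, and plain reflection stands in for the aspect-oriented weaving that the deck relies on.

```java
import java.lang.annotation.ElementType;
import java.lang.annotation.Retention;
import java.lang.annotation.RetentionPolicy;
import java.lang.annotation.Target;
import java.lang.reflect.Field;
import java.util.ArrayList;
import java.util.List;

// Hypothetical helper: reflection is used here as a stand-in for AOP weaving.
final class FeatureInjector {
    // Expands an assumed "min:step:max" range string into the feature's domain.
    static List<Integer> domain(String range) {
        String[] parts = range.split(":");
        int min = Integer.parseInt(parts[0]);
        int step = Integer.parseInt(parts[1]);
        int max = Integer.parseInt(parts[2]);
        if (step <= 0) throw new IllegalArgumentException("step must be positive");
        List<Integer> values = new ArrayList<>();
        for (int v = min; v <= max; v += step) values.add(v);
        return values;
    }

    // Sets every @IntegerFeature field of target to the domain value at the given index.
    static void inject(Object target, int index) {
        for (Field field : target.getClass().getDeclaredFields()) {
            IntegerFeature feature = field.getAnnotation(IntegerFeature.class);
            if (feature == null) continue;
            try {
                field.setAccessible(true);
                field.setInt(target, domain(feature.range()).get(index));
            } catch (IllegalAccessException e) {
                throw new IllegalStateException(e);
            }
        }
    }

    public static void main(String[] args) {
        CheckoutPage page = new CheckoutPage();
        inject(page, 3); // pick the fourth value of the fontSize domain
        System.out.println("textSize = " + page.textSize);
    }
}

// Assumed declaration of the annotation used on the slide.
@Retention(RetentionPolicy.RUNTIME)
@Target(ElementType.FIELD)
@interface IntegerFeature {
    String name();
    String range(); // assumed format: "min:step:max"
}

// Hypothetical parametric class with one integer feature.
final class CheckoutPage {
    @IntegerFeature(name = "fontSize", range = "12:1:18")
    int textSize;
}
```

Keeping the domain in the annotation means the parametric program stays the single source of truth for which variants exist; the injector only chooses among declared values.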

- 17. Generic Data Type Feature

      @GenericFeatureInterface(name="sort", values={"com.example.SortByPrice", "com.example.SortByName"})
      public interface AbstractSortingInterface {
          public List<Item> sort();
      }

      @GenericFeature
      AbstractSortingInterface sortingFeature; // ADT feature specification

      sortingFeature.sort(..); // ADT feature use

      public class SortByPrice implements AbstractSortingInterface {
          public List<Item> sort() {
              // Sort by price
          }
      }

      public class SortByName implements AbstractSortingInterface {
          public List<Item> sort() {
              // Sort by name
          }
      }

- 18. Towards automated A/B Testing

- 19. Encoding • Each feature declared by developers directly maps into a gene, while each variant maps into a chromosome. • The assessment function, which evaluates variants on live users, corresponds directly to the fitness function, which evaluates chromosomes.

- 20. Selection • Identifying, at each iteration, a finite number of chromosomes in the population that survive • Several possible strategies • Selection strategies relieve the developer from manually selecting variants during A/B testing.

- 21. Crossover & Mutation • Crossover and mutation contribute to the generation of new variants. • In traditional A/B testing, the process of generating new variants of a program is performed manually by the developers.

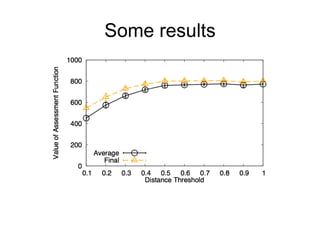

- 22. Some results: setup • We consider a program with n features. • We assume that each feature has a finite, countable domain • We split the users into groups • We adopt an assessment function that ranges from 0 (worst case) to 1000 (best case). • We set a distance threshold t. If the distance between the value of a feature and the user’s favourite value is higher than t, then the value of the variant is 0.

- 23. Some results

- 24. Some results

- 25. Some results

- 26. Some results

- 27. Key messages • An automated solution is indeed possible and worth investigating. • Heterogeneous groups imply complex A/B testing • Intermediate variants of the programs already provided good assessment function values • Future work: • Real testbed • Customised mutation operators • Full support for constraints.

- 28. Thank you 1: A/B Testing as a complex and manual activity 2: A/B Testing can be seen as an optimisation problem 3: We can write parametric programs 4: GA can carry on A/B Testing campaigns for us

Editor's Notes

- #3: Modern software systems increasingly deal with large and evolving populations of users that may issue up to millions of requests per day. These systems are commonly referred to as user-intensive software systems (e.g., Web and mobile applications). A key distinguishing feature of these systems is the heavy dependence on the interactions with many users, who approach the applications with different needs, attitudes, navigation profiles, and preferences. Designing applications that meet user preferences is a crucial factor that may directly affect the success of user-intensive systems. Underestimating its importance can lead to substantial economic losses. For example, an inadequate or distorted knowledge of user preferences in a Web application can lead to an unsatisfactory user experience with consequent loss of customers and revenues. Domain experts typically provide valuable insights concerning user preferences that engineers can exploit to obtain an effective design of user-intensive applications. Unfortunately, this information could be inaccurate, generic, or obsolete. In practice, it is almost impossible to design applications that accurately capture all possible and meaningful user preferences upfront.

- #4: Engineers typically design a user-intensive application relying on the initial available knowledge while, at run-time, they continuously monitor, refine, and improve the system to meet newly discovered user preferences. In this context, engineers increasingly rely on A/B testing [11] to evaluate and improve their applications. In A/B testing, two distinct variants (i.e., variant A and B) of the same application are compared using live experiments.

- #5: In A/B testing, two distinct variants (i.e., variant A and B) of the same application are compared using live experiments. Live users are randomly assigned to one of the two variants and some metrics of interest (e.g., the likelihood for a user to buy in an e-commerce Web application) are collected. The two variants are compared based on these metrics, and the best one is selected, while the other is discarded. The iterative development of variants and their comparative evaluation through live experiments allow designers to gradually evolve their applications maximizing a given metric of interest. For example, an e-commerce application may be refactored adopting variants that maximize sales, while a mobile application may be refactored adopting variants that maximize the advertisements’ views.

- #6: Even if widely and successfully adopted in industry [3,10], A/B testing is still considered by the majority of developers as a complex and crafted activity rather than a well-established software engineering practice. Indeed, conceiving, running, and summarizing the results of A/B tests is a difficult, tedious, and costly manual activity. More precisely, accurate and consistent A/B testing demands several complex engineering decisions and tasks. The most relevant ones are illustrated hereafter.
  1. Development and deployment of multiple variants. A/B testing requires a continuous modification and deployment of the application codebase to implement and evaluate variants. These variants are deployed and monitored concurrently, each serving a certain percentage of users at the same time.
  2. What is a variant. Programs may be customized along different lines. Because of this, a critical choice for developers is the selection of how many and which aspects of the program to change when generating a new variant.
  3. How many variants. We defined A/B testing as the process of concurrently deploying and evaluating two variants of the system. In the general case, developers may concurrently deploy more than two variants. However, they do not typically have evidence to select this number effectively and to tune it over time.
  4. How to select variants. As previously mentioned, A/B testing works iteratively. At the beginning of each iteration, developers have to decide which variants to deploy and test. Prioritizing certain variants is critical for quickly finding better program configurations. However, selecting the most promising variants is also difficult, especially for large and complex programs.
  5. How to evaluate variants. A sound and accurate comparison of variants in live experiments with users requires mathematical skills (e.g., statistics) that developers do not necessarily have. For example, sizing the duration of tests and the number of users involved is a crucial factor that affects the quality of results.
  6. When to stop. Usually, A/B testing enacts a continuous adaptation process that focuses on certain specific aspects of a program. Understanding when a nearly optimal solution has been reached for those aspects is critical to avoid investing time and effort on changes that provide only a minimal impact on the quality of the program.

- #8: First it lays the foundations and explores the potential of automated A/B testing as an optimization problem. Specifically, it proposes an initial approach based on aspect-oriented programming [8] and genetic algorithms [13], which can be considered as a primer to demonstrate the feasibility of the concepts introduced in the paper and a first concrete step towards their practical application. Second, it provides the SBSE community with a novel and crucial domain where its expertise can be applied.

- #9: The concept of feature is very broad and may include entities of different nature and at different levels of abstraction. For example, a feature could be a primitive integer value that specifies how many results are displayed per page in an e-commerce Web application. Similarly, a feature could be a string that specifies the text applied to a certain button in a mobile application. However, a feature can also represent more abstract software entities such as a component in charge of sorting some items displayed to the user. The features above are associated with the domains of integers, strings, and sorting algorithms, respectively.

- #10: Two key concepts follow: (i) to obtain a concrete implementation for a program p it is necessary to specify the instantiations for all the features in p; (ii) the specification of different instantiations yields different concrete implementations of the same abstract program p. We call a concrete implementation of a program p a variant of p. As a practical example, recalling the features exemplified above, three possible instantiations may assign: (i) 10 to the feature that specifies the number of items displayed per page, (ii) the label “Buy Now” to the button, (iii) an algorithm that sorts items by their name to the sorting component. These instantiations define a possible variant of the system.
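To make the vocabulary concrete, here is a minimal sketch of features, domains, and instantiations. The `Feature` and `VariantSketch` classes and the example domains are assumptions made for illustration; they are not taken from the prototype tool.

```java
import java.util.LinkedHashMap;
import java.util.List;
import java.util.Map;

final class VariantSketch {
    // An instantiation picks one domain value per feature; the resulting map is a variant.
    static Map<String, Object> instantiate(List<Feature> features, int[] choice) {
        if (choice.length != features.size()) {
            throw new IllegalArgumentException("one choice per feature is required");
        }
        Map<String, Object> variant = new LinkedHashMap<>();
        for (int i = 0; i < features.size(); i++) {
            Feature f = features.get(i);
            variant.put(f.name, f.domain.get(choice[i]));
        }
        return variant;
    }

    // Size of the variant space when constraints are ignored: product of domain sizes.
    static long countVariants(List<Feature> features) {
        long count = 1;
        for (Feature f : features) count *= f.domain.size();
        return count;
    }

    public static void main(String[] args) {
        List<Feature> features = List.of(
            new Feature("itemsPerPage", List.of(10, 20, 50)),
            new Feature("checkOutButtonText", List.of("CheckOut", "Buy", "Buy Now")),
            new Feature("sort", List.of("SortByPrice", "SortByName")));
        // The variant from the note: 10 items per page, "Buy Now", sort by name.
        System.out.println(instantiate(features, new int[] {0, 2, 1}));
        System.out.println(countVariants(features) + " variants in total");
    }
}

// Hypothetical model: a feature is a name plus a finite domain of allowed values.
final class Feature {
    final String name;
    final List<?> domain;

    Feature(String name, List<?> domain) {
        this.name = name;
        this.domain = domain;
    }
}
```

Even three small features already multiply into many variants, which is why manual enumeration does not scale.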

- #11: Intuitively, constraints can be used to inhibit combinations of features that are not valid in the application domain. For example, consider a Web application including two features: font color and background color. A developer can use constraints to express undesired combinations of colors. We say that a variant of a program p satisfies a constraint $C_{i,j}$ if $I_{p,f_j} \notin C_{i,j}(I_{p,f_i})$. A variant is valid for p if it satisfies all the constraints defined for p.
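The validity check can be sketched as follows. This is an illustrative model, not the prototype's API: the `Constraint` and `ConstraintSketch` classes are hypothetical, and a variant is represented as a plain map from feature name to value. A constraint maps the value chosen for one feature to the set of values forbidden for another.

```java
import java.util.List;
import java.util.Map;
import java.util.Set;
import java.util.function.Function;

final class ConstraintSketch {
    // A variant is valid if it satisfies every constraint.
    static boolean isValid(Map<String, ?> variant, List<Constraint> constraints) {
        for (Constraint c : constraints) {
            if (!c.isSatisfiedBy(variant)) return false;
        }
        return true;
    }

    public static void main(String[] args) {
        // Example constraint: the background must not have the same color as the font.
        Constraint readable = new Constraint("fontColor", "backgroundColor", color -> Set.of(color));
        List<Constraint> constraints = List.of(readable);
        System.out.println(isValid(Map.of("fontColor", "black", "backgroundColor", "white"), constraints));
        System.out.println(isValid(Map.of("fontColor", "black", "backgroundColor", "black"), constraints));
    }
}

// Hypothetical encoding of a constraint between feature i and feature j.
final class Constraint {
    final String featureI;
    final String featureJ;
    // Maps the value of feature i to the values NOT allowed for feature j.
    final Function<Object, Set<Object>> forbidden;

    Constraint(String featureI, String featureJ, Function<Object, Set<Object>> forbidden) {
        this.featureI = featureI;
        this.featureJ = featureJ;
        this.forbidden = forbidden;
    }

    // Satisfied when the value of feature j is not in the forbidden set.
    boolean isSatisfiedBy(Map<String, ?> variant) {
        Set<Object> notAllowed = forbidden.apply(variant.get(featureI));
        return !notAllowed.contains(variant.get(featureJ));
    }
}
```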

- #12: This function associates to each and every variant of a program a numeric value, which indicates the goodness of the variant with respect to the goal of the program. The assessment function depends on the preferences of users and can only be evaluated by monitoring variants at run-time. As previously mentioned, the likelihood for a user to buy is a valid assessment function for an e-commerce Web application. Indeed, this metric is evaluated at run-time for a specific variant of the application and concretely measures its goodness with respect to the ultimate goal of the program (i.e., selling goods): higher values indicate better variants.

- #13: Given a program p characterised by a set of features Fp, a set of constraints Cp, and an assessment function o(v), find the variant v ∈ Vp such that v is valid and maximizes o(v): $\hat{v} = \arg\max_{v \in V_p,\; v \text{ valid}} o(v)$
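Read literally, the formulation is an argmax over the valid variants. The sketch below spells that out as an exhaustive scan; the `ArgMaxSketch` class and the scores in `main` are made up for illustration. In practice each call to the assessment function requires a live experiment with users, so a full scan is rarely affordable, which is what motivates a search algorithm.

```java
import java.util.List;
import java.util.Map;
import java.util.Optional;
import java.util.function.Predicate;
import java.util.function.ToDoubleFunction;

final class ArgMaxSketch {
    // Returns the valid variant with the highest assessment value, if any variant is valid.
    static <V> Optional<V> best(List<V> variants, Predicate<V> isValid, ToDoubleFunction<V> assessment) {
        V best = null;
        double bestScore = Double.NEGATIVE_INFINITY;
        for (V v : variants) {
            if (!isValid.test(v)) continue;          // skip variants that violate a constraint
            double score = assessment.applyAsDouble(v);
            if (best == null || score > bestScore) { // keep the first variant on ties
                best = v;
                bestScore = score;
            }
        }
        return Optional.ofNullable(best);
    }

    public static void main(String[] args) {
        // Made-up scores standing in for measurements collected from live users.
        Map<String, Double> observed = Map.of("A", 410.0, "B", 655.0, "C", 910.0);
        // Variant "C" is treated as invalid here, so it is never selected.
        Optional<String> winner = best(List.of("A", "B", "C"), v -> !v.equals("C"), observed::get);
        System.out.println(winner.orElse("no valid variant"));
    }
}
```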

- #14: Automated A/B testing can be achieved by combining two ingredients: (i) an appropriate design-time declarative facility to specify program features, and (ii) a run-time framework in charge of automatically and iteratively exploring the solution space of possible concrete programs by generating, executing, and evaluating variants. We captured these ideas in a reference architecture (see Fig. 2) and we implemented it in a prototype tool. The architecture consists of two main steps, summarized below and detailed in the following paragraphs.

- #21: Each feature declared by developers directly maps into a gene, while each variant maps into a chromosome. Analogously, the assessment function, which evaluates variants on live users, corresponds directly to the fitness function, which evaluates chromosomes. Two additional aspects are required to fully specify a valid encoding: the number of chromosomes used in each iteration and the termination condition. Our framework enables the developers to specify application-specific fitness functions. In addition, it accepts a preferred population size, but adaptively changes it at run-time based on the measured fitness values. Furthermore, the framework is responsible for terminating the experiment when the newly generated variants do not provide improvements over a certain threshold.

- #22: Selection is the process of identifying, at each iteration, a finite number of chromosomes in the population that survive, i.e., that are considered in the next iteration. Several possible strategies have been discussed in the literature. For example, the threshold selection strategy selects all and only the chromosomes whose fitness value is higher than a given threshold. Traditional A/B testing, as described in Section 2, corresponds to the tournament strategy when the population size is limited to two chromosomes. Our framework supports several different strategies. This enables more complex decision schemes than in traditional A/B testing (e.g., by comparing several variants concurrently). At the same time, selection strategies relieve the developer from manually selecting variants during A/B testing.
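Two selection strategies can be sketched in a few lines. The threshold strategy follows the description above; the "best fraction" strategy is an assumed reading of keeping the top share of the population. The `SelectionSketch` class and the fitness values in `main` are hypothetical, and chromosomes are identified by their index in the fitness array.

```java
import java.util.ArrayList;
import java.util.Comparator;
import java.util.List;

final class SelectionSketch {
    // Threshold selection: keep all and only the chromosomes whose fitness exceeds the threshold.
    static List<Integer> threshold(double[] fitness, double threshold) {
        List<Integer> survivors = new ArrayList<>();
        for (int i = 0; i < fitness.length; i++) {
            if (fitness[i] > threshold) survivors.add(i);
        }
        return survivors;
    }

    // Keeps the given fraction (0.0 to 1.0) of the population, best fitness first.
    static List<Integer> bestFraction(double[] fitness, double fraction) {
        List<Integer> order = new ArrayList<>();
        for (int i = 0; i < fitness.length; i++) order.add(i);
        order.sort(Comparator.comparingDouble((Integer i) -> fitness[i]).reversed());
        int keep = (int) Math.round(fraction * fitness.length);
        keep = Math.max(0, Math.min(fitness.length, keep));
        return new ArrayList<>(order.subList(0, keep));
    }

    public static void main(String[] args) {
        double[] fitness = {100, 900, 500, 700}; // made-up fitness values for four variants
        System.out.println("threshold 600: " + threshold(fitness, 600));
        System.out.println("best 50%: " + bestFraction(fitness, 0.5));
    }
}
```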

- #23: Crossover and mutation contribute to the generation of new variants. Crossover randomly selects two chromosomes from the population and generates new ones by combining them. Mutation produces instead a new chromosome (i.e., a new variant) starting from an existing one by randomly mutating some of its genes. One of the key roles of mutation is to widen the scope of exploration, thus trying to avoid converging to local optima. In traditional A/B testing, the process of generating new variants of a program is performed manually by the developers. Thanks to the crossover and mutation steps of genetic algorithms, this critical activity is also completely automated.
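A minimal sketch of the two operators, assuming a chromosome is an `int[]` in which gene g holds an index into the domain of feature g. The notes do not say which crossover operator the prototype uses; single-point crossover is chosen here only because it is the simplest, and the `GeneticOperators` class is hypothetical.

```java
import java.util.Arrays;
import java.util.Random;

final class GeneticOperators {
    // Single-point crossover: genes before the cut come from a, the rest from b.
    // Both parents must have the same length, which must be at least 2.
    static int[] crossover(int[] a, int[] b, Random rnd) {
        int cut = 1 + rnd.nextInt(a.length - 1); // cut point in [1, length - 1]
        int[] child = new int[a.length];
        for (int g = 0; g < a.length; g++) {
            child[g] = g < cut ? a[g] : b[g];
        }
        return child;
    }

    // Mutation: each gene is replaced, with probability rate, by a random value of its domain.
    static int[] mutate(int[] parent, int[] domainSizes, double rate, Random rnd) {
        int[] child = parent.clone();
        for (int g = 0; g < child.length; g++) {
            if (rnd.nextDouble() < rate) {
                child[g] = rnd.nextInt(domainSizes[g]);
            }
        }
        return child;
    }

    public static void main(String[] args) {
        Random rnd = new Random(42);
        int[] a = {0, 0, 0, 0, 0};
        int[] b = {1, 1, 1, 1, 1};
        int[] child = crossover(a, b, rnd);
        System.out.println("child:   " + Arrays.toString(child));
        System.out.println("mutated: " + Arrays.toString(mutate(child, new int[] {3, 3, 3, 3, 3}, 0.2, rnd)));
    }
}
```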

- #24: Intuitively, the presence of multiple groups models the differences in the users’ profiles (e.g., differences in age, location, culture, etc.). The more the values of the features differ from a user’s favourite ones, the worse she will evaluate the variant. The threshold mimics the user tolerance: beyond a maximum distance, the program does not have any value for her.
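The exact scoring formula used in the experiments is not given in these notes. The sketch below is one plausible reading, stated as an assumption: a user scores a variant 0 as soon as any feature is farther from her favourite value than the tolerance, and otherwise the score decays linearly from 1000 with the mean normalized distance. The `SimulatedAssessment` class and the numbers in `main` are hypothetical.

```java
final class SimulatedAssessment {
    static final double BEST = 1000.0; // best case of the assessment function

    // Score given by one user. Values are indices in [0, domainSize - 1], with domainSize >= 2;
    // tolerance is a fraction of the maximum distance (for example 0.8).
    static double score(int[] variant, int[] favourite, int domainSize, double tolerance) {
        double maxDistance = domainSize - 1;
        double total = 0.0;
        for (int g = 0; g < variant.length; g++) {
            double distance = Math.abs(variant[g] - favourite[g]) / maxDistance;
            if (distance > tolerance) return 0.0; // beyond tolerance: no value for this user
            total += distance;
        }
        return BEST * (1.0 - total / variant.length);
    }

    // Average score over a set of users, one favourite vector per user.
    static double average(int[] variant, int[][] users, int domainSize, double tolerance) {
        double sum = 0.0;
        for (int[] favourite : users) {
            sum += score(variant, favourite, domainSize, tolerance);
        }
        return sum / users.length;
    }

    public static void main(String[] args) {
        int[] variant = {0, 0};
        int[][] users = {{0, 0}, {50, 0}, {100, 0}}; // three made-up users
        System.out.println(average(variant, users, 101, 0.8));
    }
}
```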

- #25: Our default scenario considers a program with 10 different features, each one selecting values from a finite domain of 100 elements. At each iteration, we concurrently evaluate 100 program variants, submitting each variant to 1000 random users. Users are clustered into 4 different groups. They do not tolerate features whose distance from their favourite value is higher than 80% of the maximum distance. At each iteration, the genetic algorithm keeps the number of chromosomes (i.e., program variants) fixed. We adopt a natural selection strategy that selects the best 90% of the chromosomes to survive for the next generation. Selected chromosomes are combined with a crossover rate of 35% and modified using a mutation rate of 0.08%. The process stops when the improvement in fitness value is lower than 0.1% for 10 subsequent iterations.
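The stopping rule can be sketched as a small stateful check. The interpretation is an assumption: "improvement" is taken as the relative change of the best fitness with respect to the previous iteration, and the `StopCondition` class is hypothetical.

```java
final class StopCondition {
    private final double minRelativeImprovement; // e.g. 0.001 for 0.1%
    private final int patience;                  // e.g. 10 subsequent iterations
    private double previous = Double.NaN;
    private int stalled = 0;

    StopCondition(double minRelativeImprovement, int patience) {
        this.minRelativeImprovement = minRelativeImprovement;
        this.patience = patience;
    }

    // Call once per iteration with the best fitness observed in that iteration.
    // Returns true once the improvement stayed below the minimum for `patience` iterations in a row.
    boolean shouldStop(double bestFitness) {
        if (!Double.isNaN(previous)) {
            double improvement = (bestFitness - previous) / Math.max(Math.abs(previous), 1e-12);
            stalled = improvement < minRelativeImprovement ? stalled + 1 : 0;
        }
        previous = bestFitness;
        return stalled >= patience;
    }

    public static void main(String[] args) {
        StopCondition stop = new StopCondition(0.001, 10);
        double fitness = 500.0;
        int iteration = 0;
        // Made-up fitness curve: each gain is half the previous one, so improvements fade out.
        double gain = 100.0;
        while (!stop.shouldStop(fitness)) {
            fitness += gain;
            gain /= 2;
            iteration++;
        }
        System.out.println("stopped after " + iteration + " iterations");
    }
}
```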

- #27: The plot shows that the value of the assessment function decreases with the number of user groups. Indeed, a higher number of groups introduces heterogeneous preferences and constraints. Finding a suitable variant that maximizes the user satisfaction becomes challenging. With one group, the solution proposed by the genetic algorithm is optimal, i.e., it selects all the preferred features of the users in the group. This is not possible in the presence of more than one group, due to differences in requirements.

- #28: When reducing the maximum tolerated difference, it becomes more and more difficult to find a solution that satisfies a high number of users. Because of this, when considering a threshold of only 10% of the maximum distance, the final solution can satisfy only a fraction of the users. Thus, the quality of the solution drops below 600.

- #29: An automated solution is indeed possible and worth investigating. Indeed, in all the experiments we performed, the genetic algorithm was able to converge towards a good solution. Moreover, it always converged within a small number of steps. Our experiments confirmed and emphasized some key problems in performing manual A/B testing: in the presence of heterogeneous user groups, with different preferences and constraints, devising a good strategy for evolving and improving the program is extremely challenging.