150807 Fast R-CNN

- 1. Perception and Intelligence Laboratory, Seoul National University • Fast R-CNN (Ross Girshick, MSRA) • Junho Cho • 15/08/07

- 2. Introduction • FRCN (Fast R-CNN): Fast Region-based Convolutional Networks (R-CNNs) for Object Detection • With VGG16: trains 9x faster than R-CNN and 3x faster than SPPnet; runs 200x faster than R-CNN and 10x faster than SPPnet at test time • Implemented in Python and C++/Caffe: https://github.com/rbgirshick/fast-rcnn • Figure: VGG16 Perception and Intelligence Lab., Copyright © 2015

- 3. Chapter 01. Previous methods: R-CNN & SPPnet

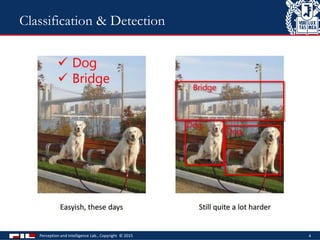

- 4. Classification & Detection

- 5. Previous lab meeting • R-CNN: Rich Feature Hierarchies for Accurate Object Detection and Semantic Segmentation [CVPR 2014] • SPPnet: Spatial Pyramid Pooling in Deep Convolutional Networks for Visual Recognition [ECCV 2014] • DeepMultiBox: Scalable Object Detection using Deep Neural Networks [CVPR 2014]

- 6. R-CNN: Regions with CNN features • Input image → extract region proposals (~2k per image) → compute CNN features → classify regions (linear SVM) • Example per-region decisions: aeroplane? no. ... person? yes. ... tvmonitor? no.

- 7. Traditionally: a traditional CNN (as in R-CNN) needs a fixed-size input • Fixed-size image region → conv layers → fc layers (4096, 4096, 1000)

- 8. SPP-net • Input image of any size → conv layers → conv feature maps → spatial pyramid pooling on each region → fc layers (4096, 4096, 1000) • Spatial pyramid pooling: fix the number of bins, DO NOT fix the bin size
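The "fix the number of bins, not the bin size" rule can be illustrated with a minimal single-channel sketch in plain Python. It assumes a pyramid of 1×1, 2×2 and 4×4 bins; the levels and the name `spp_single_channel` are illustrative choices, not taken from the slides or the SPPnet code. Because the bin boundaries scale with the input, maps of different sizes produce vectors of the same length.

```python
import math
from typing import List, Sequence


def spp_single_channel(fmap: List[List[float]],
                       levels: Sequence[int] = (1, 2, 4)) -> List[float]:
    """Spatial pyramid pooling over one H x W channel of a conv feature map.

    Level n splits the map into an n x n grid; the bin size follows H and W,
    so only the number of bins (sum of n*n over levels) is fixed.
    """
    h, w = len(fmap), len(fmap[0])
    out: List[float] = []
    for n in levels:
        for i in range(n):
            # Row range of bin i: floor(i*h/n) .. ceil((i+1)*h/n), never empty.
            r0, r1 = math.floor(i * h / n), math.ceil((i + 1) * h / n)
            for j in range(n):
                c0, c1 = math.floor(j * w / n), math.ceil((j + 1) * w / n)
                out.append(max(fmap[r][c]
                               for r in range(r0, r1) for c in range(c0, c1)))
    return out


# Two feature maps of different sizes give vectors of the same length (1 + 4 + 16).
small = [[float(r * 5 + c) for c in range(5)] for r in range(5)]
large = [[float(r * 9 + c) for c in range(9)] for r in range(7)]
print(len(spp_single_channel(small)), len(spp_single_channel(large)))  # 21 21
```

A real SPP layer applies this to every channel and concatenates the results, so the fc layers always see the same input width.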

- 9. SPP-net vs. traditional CNN • SPP-net: input of any size; spatial pyramid pooling with a fixed number of bins (bin size NOT fixed) feeds the fc layers (4096, 4096, 1000) • Traditional CNN (R-CNN): fixed-size input through conv and fc layers

- 10. R-CNN vs. SPP-net: image regions vs. feature map regions • R-CNN: 2000 nets on image regions (one CNN pass per region, one feature per pass) • SPP-net: 1 net on the full image; region features are taken from the shared feature map • Figure from "Spatial Pyramid Pooling in Deep Convolutional Networks for Visual Recognition", K. He, X. Zhang, S. Ren, J. Sun. ECCV 2014

- 11. SPP-net • Forward: spatial pyramid pooling accepts inputs of any size, followed by fc layers (4096, 4096, 1000) • Backward: back-propagation is run R times, once per RoI (R: # of RoIs) • Slow and heavy computation

- 12. Contribution of FRCN • Higher mAP on PASCAL VOC than RCNN & SPPnet • Training is single-stage, using a multi-task loss • No linear SVM (unlike RCNN and SPPnet) • Softmax classifier & BB regressor trained together • Simpler training & higher mAP • All network layers can be updated during training • SPPnet can only update the FC layers • Higher mAP • No disk storage is required for feature caching • Unlike RCNN & SPPnet • Very fast training & test time • Novel method to train (back-propagate through) the ConvNet faster than SPPnet

- 13. Chapter 02. FRCN

- 14. FRCN (test-time detection) • Architecture as implemented in Caffe

- 15. FRCN (test-time detection)

- 16. FRCN architecture (RoI pooling layer) • Each RoI is pooled into a fixed-size feature map, which fully-connected layers (FCs) then map to an RoI feature vector • N: # of feature maps, K: # of object classes, R: # of RoIs

- 17. FRCN architecture (RoI pooling layer) • N: # of feature maps, K: # of object classes, R: # of RoIs • RoI pooling layer • A special case of the SPP layer (a single pyramid level) • Two inputs • Conv feature map: 512 × H × W (512, H, W: blob size after the conv layers) • RoIs: R × 5, where the 5 values are (r, x, y, h, w) • r ∈ [0, N − 1]: image batch index • Adaptive max pooling • Each RoI is pooled to a fixed-size feature vector
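The adaptive max pooling of the RoI pooling layer can be sketched in plain Python as follows. This is a minimal, unoptimized sketch assuming nested lists shaped N × C × H × W and RoIs given as (r, x, y, h, w) already projected onto the feature-map grid; the function name `roi_max_pool` is hypothetical, and the actual Caffe layer may differ in details such as coordinate rounding. Each RoI of height h and width w is split into an out_h × out_w grid of sub-windows of roughly h/out_h × w/out_w cells, and each sub-window is max-pooled. The winning locations are returned too, since back-propagation needs them.

```python
import math
from typing import List, Sequence, Tuple

# fmaps[b][c][y][x]: batch of conv feature maps (N x C x H x W) as nested lists.
FeatureMaps = List[List[List[List[float]]]]
# RoI as on the slide: (batch index r, x, y, h, w), assumed in feature-map cells.
RoI = Tuple[int, int, int, int, int]


def roi_max_pool(fmaps: FeatureMaps, rois: Sequence[RoI], out_h: int, out_w: int):
    """Max-pool every RoI to a fixed out_h x out_w grid for each channel.

    Returns (pooled, argmax): pooled[r][c][i][j] is the max of sub-window (i, j)
    and argmax[r][c][i][j] is the (y, x) cell that produced it (None if empty).
    """
    pooled, argmax = [], []
    for b, x0, y0, h, w in rois:
        fmap = fmaps[b]                        # channels of image b
        H, W = len(fmap[0]), len(fmap[0][0])
        roi_vals, roi_args = [], []
        for chan in fmap:
            vals = [[0.0] * out_w for _ in range(out_h)]
            args = [[None] * out_w for _ in range(out_h)]
            for i in range(out_h):
                # Rows of sub-window i, clipped to the feature map.
                ys = range(max(y0 + math.floor(i * h / out_h), 0),
                           min(y0 + math.ceil((i + 1) * h / out_h), H))
                for j in range(out_w):
                    xs = range(max(x0 + math.floor(j * w / out_w), 0),
                               min(x0 + math.ceil((j + 1) * w / out_w), W))
                    cells = [(chan[y][x], (y, x)) for y in ys for x in xs]
                    if cells:                  # empty sub-windows stay 0.0 / None
                        vals[i][j], args[i][j] = max(cells, key=lambda t: t[0])
            roi_vals.append(vals)
            roi_args.append(args)
        pooled.append(roi_vals)
        argmax.append(roi_args)
    return pooled, argmax
```

RoIs of any size come out as the same fixed H′ × W′ grid, which is what lets them feed the pre-trained FC layers.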

- 18. FRCN architecture (RoI pooling layer)

- 19. FRCN architecture (Two sibling layers) • Two sibling output layers for each RoI 1. Softmax probability estimates over the K object classes + 1 background class 2. Four real-valued numbers (x, y, h, w) for each of the K object classes • These 4K values encode the refined bounding box for each class

- 20. FRCN architecture (Two sibling layers) • N: # of object box proposals, K: # of object classes • Two output types: 1. Softmax: $P(\mathrm{cls} = k \mid \mathrm{box} = n, \mathrm{image})$ for each of the NK boxes 2. BB regressors: NK regressed object boxes
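The two sibling outputs of one RoI can be paired up per class roughly as sketched below. This assumes the regression blob holds 4 values for every one of the K + 1 categories (matching the 84 = 21 × 4 blob on slide 25), with class k stored at positions 4k to 4k + 3, and simply skips the background slot; the function names are hypothetical.

```python
import math
from typing import List, Sequence, Tuple


def softmax(logits: Sequence[float]) -> List[float]:
    m = max(logits)                        # subtract the max for numerical stability
    exps = [math.exp(z - m) for z in logits]
    s = sum(exps)
    return [e / s for e in exps]


def per_class_outputs(cls_logits: Sequence[float], bbox_pred: Sequence[float]
                      ) -> List[Tuple[int, float, Tuple[float, ...]]]:
    """Pair P(cls = k | box, image) with the class-specific offsets t^k.

    cls_logits: K + 1 raw scores (index 0 = background).
    bbox_pred: 4 * (K + 1) values stored class by class.
    Returns [(k, p_k, (t_x, t_y, t_w, t_h)), ...] for k = 1..K.
    """
    num = len(cls_logits)
    if len(bbox_pred) != 4 * num:
        raise ValueError("expected 4 regression values per category")
    p = softmax(cls_logits)
    return [(k, p[k], tuple(bbox_pred[4 * k: 4 * k + 4])) for k in range(1, num)]
```

Repeating this for each of the N proposals yields the NK scored, class-specific boxes.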

- 21. Training • Three ImageNet pre-trained networks are used • CaffeNet (AlexNet) as S (5 convs, 3 FCs) • VGG_CNN_M_1024 as M (as deep as S, but wider) • VGG16 as L (13 convs, 3 FCs) • Figures: AlexNet, VGG16

- 22. Training • Modifications to the pre-trained network • The last max pooling layer is replaced by an RoI pooling layer • Pooled to a fixed size H′ × W′ compatible with the FCs • The final FC layer & softmax are replaced by two sibling layers • An FC layer and softmax over K + 1 categories • BB regressors • Two data inputs • A batch of N images • A list of R RoIs

- 23. Fine-tuning • SPPnet • SPP is applied to pre-computed conv feature maps of the whole image • Conv features are computed offline, so fine-tuning cannot back-propagate errors below the SPP layer • VGG16: the first 13 conv layers remain fixed; only the 3 FC layers are updated • RoI-centric sampling • RoIs are sampled from all images (like RCNN) • SGD back-propagation for each RoI separately • Too much memory, too slow • FRCN • Image-centric sampling: more efficient • Mini-batches are sampled hierarchically • First sample images, then sample RoIs within those images • RoIs from the same image share CNN computation and memory, which is more efficient • Thus a single fine-tuning stage jointly optimizes the softmax classifier & BB regressors • Components: loss, mini-batch sampling strategy, back-propagation through RoI pooling layers, and SGD hyperparameters

- 24. Fine-tuning (mini-batch sampling) • Each SGD mini-batch is built from N = 2 images • R = 128 RoIs per mini-batch, i.e. 64 RoIs from each of the 2 images • 25% of the RoIs come from object proposals with IoU ≥ 0.5 with a ground-truth box (foreground) • Proposals whose maximum IoU with ground truth lies in [0.1, 0.5) are used as background (BG) • Each sampled image is horizontally flipped with probability 0.5
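The sampling rules above can be sketched as follows, assuming each image is a dict with hypothetical keys `id`, `proposals` and `gt_boxes`, and boxes in (x1, y1, x2, y2) form; the function names are illustrative and not the fast-rcnn code. Foreground proposals fill up to 25% of each image's 64 slots, and the remaining slots are filled from background proposals.

```python
import random
from typing import List, Sequence, Tuple

Box = Tuple[float, float, float, float]  # (x1, y1, x2, y2), assumed corner format


def iou(a: Box, b: Box) -> float:
    """Intersection over union of two axis-aligned boxes."""
    iw = max(0.0, min(a[2], b[2]) - max(a[0], b[0]))
    ih = max(0.0, min(a[3], b[3]) - max(a[1], b[1]))
    inter = iw * ih
    union = (a[2] - a[0]) * (a[3] - a[1]) + (b[2] - b[0]) * (b[3] - b[1]) - inter
    return inter / union if union > 0 else 0.0


def sample_rois(proposals: Sequence[Box], gt_boxes: Sequence[Box],
                rois_per_image: int = 64, fg_fraction: float = 0.25,
                rng=random) -> Tuple[List[int], List[int]]:
    """Return indices of foreground and background proposals for one image."""
    max_iou = [max(iou(p, g) for g in gt_boxes) for p in proposals]
    fg = [i for i, o in enumerate(max_iou) if o >= 0.5]        # foreground: IoU >= 0.5
    bg = [i for i, o in enumerate(max_iou) if 0.1 <= o < 0.5]  # background: IoU in [0.1, 0.5)
    n_fg = min(round(fg_fraction * rois_per_image), len(fg))
    n_bg = min(rois_per_image - n_fg, len(bg))  # remaining slots go to background
    return rng.sample(fg, n_fg), rng.sample(bg, n_bg)


def sample_minibatch(dataset, num_images: int = 2, batch_rois: int = 128, rng=random):
    """Hierarchical sampling: first N images, then R / N RoIs from each image."""
    batch = []
    for img in rng.sample(dataset, num_images):
        flipped = rng.random() < 0.5  # flip flag only; flipping pixels/boxes is omitted
        fg, bg = sample_rois(img["proposals"], img["gt_boxes"],
                             batch_rois // num_images, rng=rng)
        batch.append((img["id"], flipped, fg, bg))
    return batch
```

Sampling images first is what lets the 64 RoIs of each image share one forward and backward pass through the conv layers.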

- 25. Fine-tuning (mini-batch sampling) • 84 = 21 classes × 4 coordinate values (BB regression targets) • 5 = batch index + 4 coordinates (RoIs)

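- 26. Fine-tuning (Back-propagation through RoI pooling layer) • R = 128 • The multi-task loss L is averaged over the R outputs • For an input variable $x_i$, sum over all RoIs that max-pooled $x_i$ in the forward pass: for every RoI r and every pooled output $y_{rj}$, the gradient reaches $x_i$ only if $y_{rj}$ took its value from $x_i$ • $\frac{\partial L}{\partial x_i} = \sum_r \sum_j [i = i^*(r, j)] \frac{\partial L}{\partial y_{rj}}$, where $i^*(r, j) = \arg\max_{i' \in \mathcal{R}(r, j)} x_{i'}$ and $\mathcal{R}(r, j)$ is the sub-window pooled into $y_{rj}$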
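A minimal sketch of this backward rule for a single channel, assuming the forward pass recorded for every RoI r and pooled output j the input cell $i^*(r, j)$ that won the max (None for an empty sub-window). The name `roi_pool_backward` and this argmax layout are illustrative assumptions. Gradients from overlapping RoIs that selected the same input cell are summed, and the 1/R factor from averaging the loss is assumed to be already included in `grad_out`.

```python
from typing import List, Optional, Sequence, Tuple

Cell = Tuple[int, int]  # (y, x) location in the input feature map


def roi_pool_backward(argmax: Sequence[Sequence[Optional[Cell]]],
                      grad_out: Sequence[Sequence[float]],
                      height: int, width: int) -> List[List[float]]:
    """dL/dx_i = sum_r sum_j [i = i*(r, j)] dL/dy_rj for one H x W input channel.

    argmax[r][j]: winning input cell of pooled output j of RoI r (flattened grid).
    grad_out[r][j]: upstream gradient dL/dy_rj.
    """
    grad_in = [[0.0] * width for _ in range(height)]
    for roi_arg, roi_grad in zip(argmax, grad_out):  # loop over RoIs r
        for cell, g in zip(roi_arg, roi_grad):       # loop over pooled outputs j
            if cell is not None:                     # empty sub-windows pass nothing
                y, x = cell
                grad_in[y][x] += g                   # accumulate over RoIs that share x_i
    return grad_in
```

All RoIs of an image write into the same gradient map, so one backward pass through the conv layers serves every RoI.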

- 27. SPP-net vs. FRCN (backward pass) • SPP-net: spatial pyramid pooling on inputs of any size, fc layers (4096, 4096, 1000); back-propagation is run R times (R: # of RoIs), slow and heavy computation • FRCN: a single back-propagation instead of R, fast & efficient

- 28. Fine-tuning (Multi-task loss) • Multi-task loss L to train the network jointly for CLS and BB regressors • Two sibling layers 1. A discrete probability distribution per RoI • $p = (p_0, \ldots, p_K)$ over K + 1 categories • p is computed by a softmax over the K + 1 categories 2. BB regressor offsets • $t^k = (t^k_x, t^k_y, t^k_w, t^k_h)$ for each of the K object classes, indexed by $k \in \{1, \ldots, K\}$; index 0 is background (BG)
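The offsets $t^k$ use the box parameterization from R-CNN, which Fast R-CNN reuses: a scale-invariant shift of the box center and a log-space change of width and height, both relative to the proposal. A minimal sketch, assuming boxes given as (center x, center y, width, height); the helper names `encode_box` and `decode_box` are hypothetical.

```python
import math
from typing import Tuple

Box = Tuple[float, float, float, float]      # (center x, center y, width, height)
Offsets = Tuple[float, float, float, float]  # (t_x, t_y, t_w, t_h)


def encode_box(proposal: Box, gt: Box) -> Offsets:
    """Regression targets t* that move proposal P onto ground-truth box G."""
    px, py, pw, ph = proposal
    gx, gy, gw, gh = gt
    return ((gx - px) / pw, (gy - py) / ph, math.log(gw / pw), math.log(gh / ph))


def decode_box(proposal: Box, t: Offsets) -> Box:
    """Inverse of encode_box: apply predicted offsets t^k to proposal P."""
    px, py, pw, ph = proposal
    tx, ty, tw, th = t
    return (px + tx * pw, py + ty * ph, pw * math.exp(tw), ph * math.exp(th))
```

Dividing by the proposal size keeps the targets comparable across small and large boxes.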

- 29. Fine-tuning (Multi-task loss) • Multi-task loss L trains the network jointly for CLS and BB regressors, with $p = (p_0, \ldots, p_K)$ over K + 1 categories and $t^k = (t^k_x, t^k_y, t^k_w, t^k_h)$ for each of the K object classes (0 is BG) • $k^*$: true class label • $L_{cls}(p, k^*) = -\log p_{k^*}$: standard cross-entropy/log loss • $L_{loc}$: compares the true BB regression targets for class $k^*$, $t^* = (t^*_x, t^*_y, t^*_w, t^*_h)$, with the predicted offsets for that class, $t = (t_x, t_y, t_w, t_h)$ • $L(p, k^*, t, t^*) = L_{cls}(p, k^*) + \lambda [k^* \ge 1] L_{loc}(t, t^*)$ • Iverson bracket $[k^* \ge 1]$: 0 if $k^* = 0$ (BG), 1 otherwise

- 30. Fine-tuning (Multi-task loss) • $L_{loc}$ uses a smooth L1 loss • Less sensitive to outliers than the L2 loss used in R-CNN and SPPnet • With an L2 loss, training can require careful tuning of the learning rate • λ balances the two losses • Generally set to 1
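The per-RoI loss can be sketched directly from the definitions on these slides, with $\mathrm{smooth}_{L_1}(x) = 0.5x^2$ if $|x| < 1$ and $|x| - 0.5$ otherwise, and $L_{loc}(t, t^*) = \sum_{i \in \{x, y, w, h\}} \mathrm{smooth}_{L_1}(t_i - t^*_i)$. This is a minimal sketch; the function names are illustrative, and averaging over the R RoIs of a mini-batch is left to the caller.

```python
import math
from typing import Sequence


def smooth_l1(x: float) -> float:
    """0.5 * x^2 if |x| < 1, else |x| - 0.5 (quadratic near 0, linear for outliers)."""
    ax = abs(x)
    return 0.5 * x * x if ax < 1.0 else ax - 0.5


def multi_task_loss(p: Sequence[float], k_star: int, t: Sequence[float],
                    t_star: Sequence[float], lam: float = 1.0) -> float:
    """L = L_cls(p, k*) + lam * [k* >= 1] * L_loc(t, t*) for a single RoI.

    p: softmax output over K + 1 classes (index 0 = background);
    t: predicted offsets for class k*; t_star: regression targets.
    """
    l_cls = -math.log(p[k_star])                              # log loss
    l_loc = sum(smooth_l1(a - b) for a, b in zip(t, t_star))  # over x, y, w, h
    iverson = 1.0 if k_star >= 1 else 0.0                     # no box loss for BG RoIs
    return l_cls + lam * iverson * l_loc
```

For a background RoI the box prediction is ignored entirely, so arbitrary offsets add nothing to the loss.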

- 31. Fine-tuning (Multi-task loss)

- 32. RCNN, SPPnet, FRCN comparison

| | RCNN | SPPnet | FRCN |
|---|---|---|---|
| Training pipeline | Multi-stage pipeline with separate learning stages: extract features, fine-tune the network with cross-entropy loss, train SVMs, fit bounding-box regressors | Multi-stage pipeline (as in RCNN) | Single-stage training algorithm: a simplified learning process using a multi-task loss (CLS + BB regressors) |
| Training space | Expensive: features cached for SVMs & regressors; huge storage for VGG16 | Expensive (as in RCNN) | No disk storage is required for feature caching |
| Test-time detection | Slow: CNN computed for every object proposal; VGG16 detection takes 47 s/image | Fast: proposal warping after the ConvNet & SPP; only one CNN computation | Fast (as in SPPnet) |
| Updatable layers | - | Only the fully-connected layers (after SPP) | Whole network |

- 33. Demo

- 34. Demo

- 36. Results (all networks are based on VGG16) 1. State-of-the-art mAP on VOC07, 2010, and 2012 (at the time) 2. Fast training & testing time compared to RCNN & SPPnet 3. Fine-tuning the conv layers in VGG16 is important, NOT only the FC layers

- 37. Results (mAP)

- 38. Results (Time) • Training & test time

- 39. Results (Fine-tuning how many layers?) • Fine-tuning only the FCs vs. the whole network? • Fine-tuning only the FC layers seems fine for shallower networks • But this does not hold for VGG16 (very deep networks) • Freezing the 13 conv layers so that only the 3 FC layers learn emulates SPPnet • mAP drops from 66.9% to 61.4% • Training through the RoI pooling layer is very important for very deep nets (VGG16)

- 40. Results (Fine-tuning how many layers?) • But fine-tuning all conv layers is inefficient • Updating from conv2_1 slows training 1.3x compared to learning from conv3_1 (12.5 h vs. 9.5 h) • Updating from conv1_1 over-runs GPU memory • conv1 layers are generic and task-independent

- 41. Results (Multi-task training) • Benefits of multi-task training • Convenient training • Improves results: the tasks influence each other through the shared ConvNet • Baseline λ = 0: no BB regression, CLS only • λ = 1, but BB regression disabled at test time • Isolates the network's CLS accuracy for comparison • Multi-task training improves pure CLS accuracy (+0.8 to +1.1 mAP) • Stage-wise alternative: train with the CLS loss only, then train the BB regression layer with $L_{loc}$ while freezing all other layers • Good, but still underperforms multi-task training

- 42. Results (Additional data) • More training data helps • R-CNN-style detectors built on deep ConvNets learn better with larger datasets

- 43. Results (Object proposals) • Increasing the # of object proposals does not help (although Average Recall increases) • Sparse object proposal methods (e.g. Selective Search) are a bottleneck • Replacing them with a dense set of sliding windows (essentially free to compute) • Sparse proposals still give better detection quality

- 44. Conclusion • State-of-the-art detection results • Detailed experiments that provide insights • Sparse object proposals improve detector quality • But they are a bottleneck • Decreasing object proposal time will be critical in the future • Furthermore • Faster R-CNN: Towards Real-Time Object Detection with Region Proposal Networks [arXiv] • Object proposal: the detection network also proposes objects • Cost of proposals: 10 ms; VGG16 runtime ~200 ms including all steps • Higher mAP, faster • R-CNN minus R [BMVC 2015] • Fast detector without Selective Search • No algorithm other than the CNN itself • Attempts to remove object proposal algorithms and rely exclusively on the CNN • More integrated, simpler and faster detector • Shares full-image convolutional features with the detection net

- 45. Thank you

- 46. Introduction • DeepMultiBox • Scalable object detection using DNNs • Class-agnostic, scalable object detection • Only bounding boxes: not aware of what object is in the box • Predicts a set of bounding boxes where potential objects are • Localize, then recognize • Boxes generated by a single DNN • Outputs • A fixed number of bounding boxes • A score for each box: the confidence that the box contains an object

- 47. Results (Time) • Training & test time • Truncated SVD for network compression (on the FC layers) • Large speed-ups with only small drops in mAP (detection time reduced by 30% with a 0.3 mAP drop)
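Truncated SVD approximates an FC layer's u × v weight matrix as $W \approx U_t \Sigma_t V_t^T$ (top t singular values) and replaces the layer with two FC layers and no nonlinearity between them: the first uses $\Sigma_t V_t^T$ without biases, the second uses $U_t$ with the original biases, reducing the parameter count from uv to t(u + v). Below is a minimal plain-Python sketch of that arithmetic and of the factored forward pass. The helper names are hypothetical, in practice the factors would come from an SVD routine such as `numpy.linalg.svd`, and the 4096 × 4096 layer with t = 256 is only an illustration.

```python
from typing import List, Sequence

Matrix = List[List[float]]


def fc_params(u: int, v: int) -> int:
    """Weights (= multiply-adds per input) of one FC layer y = W x with W of size u x v."""
    return u * v


def truncated_svd_params(u: int, v: int, t: int) -> int:
    """Weights after factoring W into a t x v layer followed by a u x t layer."""
    return t * (u + v)


def matvec(m: Sequence[Sequence[float]], x: Sequence[float]) -> List[float]:
    return [sum(a * b for a, b in zip(row, x)) for row in m]


def factored_fc(x: Sequence[float], u_t: Matrix, s_t: Sequence[float],
                vt_t: Matrix, bias: Sequence[float]) -> List[float]:
    """y = U_t (S_t V_t^T x) + b, computed as two FC layers with no nonlinearity."""
    hidden = [s * h for s, h in zip(s_t, matvec(vt_t, x))]      # first layer, no bias
    return [y + b for y, b in zip(matvec(u_t, hidden), bias)]  # second layer, original bias


print(fc_params(4096, 4096), truncated_svd_params(4096, 4096, 256))  # 16777216 2097152
```

In this illustration the factored layer needs 8x fewer multiply-adds; the saving matters at test time because the FC layers run once per RoI.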

- 48. RCNN & SPPnet • Multi-stage pipeline • Separate learning stages • FRCN: simplifies the learning process with a single-stage training algorithm and achieves state-of-the-art results

