Agents1

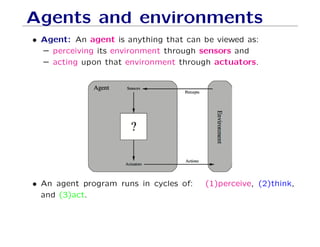

- 4. Agents and environments
  - Agent: an agent is anything that can be viewed as:
    - perceiving its environment through sensors, and
    - acting upon that environment through actuators.
  - An agent program runs in cycles of (1) perceive, (2) think, and (3) act.
  - Agent = Architecture + Program
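
A minimal Python sketch of the perceive-think-act cycle and the Architecture + Program split. It uses a thermostat, one of the "agents everywhere" examples in this deck. The architecture supplies the sensor and the actuator, and the program only maps percepts to actions. The class `ThermostatArchitecture`, the function names, the 20-degree setpoint, and the toy room physics are all hypothetical and exist only for illustration.

```python
from typing import Callable


class ThermostatArchitecture:
    """Hypothetical architecture: a simulated temperature sensor and heater switch."""

    def __init__(self, temperature: float) -> None:
        self.temperature = temperature
        self.heater_on = False

    def sense(self) -> float:
        """Sensor: read the current room temperature."""
        return self.temperature

    def actuate(self, action: str) -> None:
        """Actuator: switch the heater, then advance the toy room model one step."""
        self.heater_on = action == "heat_on"
        # Assumed toy physics: +1 degree per cycle when heating, -0.5 otherwise.
        self.temperature += 1.0 if self.heater_on else -0.5


def thermostat_program(percept: float, setpoint: float = 20.0) -> str:
    """Agent program: choose an action from the current percept."""
    return "heat_on" if percept < setpoint else "heat_off"


def run_agent(arch: ThermostatArchitecture,
              program: Callable[[float], str], cycles: int) -> list[str]:
    """Run the agent for a number of perceive-think-act cycles."""
    actions = []
    for _ in range(cycles):
        percept = arch.sense()        # (1) perceive
        action = program(percept)     # (2) think
        arch.actuate(action)          # (3) act
        actions.append(action)
    return actions


if __name__ == "__main__":
    room = ThermostatArchitecture(temperature=18.0)
    print(run_agent(room, thermostat_program, cycles=4))
```

Swapping `thermostat_program` for a different function changes the agent's behavior without touching the architecture. That separation is what the Agent = Architecture + Program equation expresses.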

- 6. Agents and environments
  - Human agent:
    - Sensors: eyes, ears, and other organs.
    - Actuators: hands, legs, mouth, and other body parts.
  - Robotic agent:
    - Sensors: cameras and infrared range finders.
    - Actuators: various motors.
  - Agents everywhere!
    - Thermostat
    - Cell phone
    - Vacuum cleaner
    - Robot
    - Amazon Echo (Alexa)
    - Self-driving car
    - Human
    - etc.

- 8. Vacuum cleaner
  - World: two squares, A and B.
  - Percepts: location and contents, e.g., [A, Dirty].
  - Actions: Left, Right, Suck, NoOp.
  - Agent function: a mapping from percepts to actions.

  | Percept    | Action |
  |------------|--------|
  | [A, Clean] | Right  |
  | [A, Dirty] | Suck   |
  | [B, Clean] | Left   |
  | [B, Dirty] | Suck   |

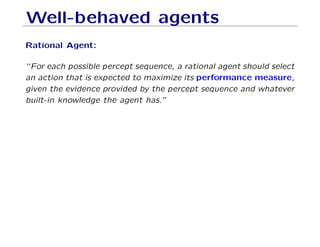
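
The vacuum cleaner's percept/action table can be written directly as a Python dictionary. This is a sketch under a few assumptions made for illustration: percepts are encoded as `(location, status)` tuples, the function is named `table_agent`, and percepts missing from the table fall back to `NoOp`. The table looks only at the current percept. That is enough here because the two sensors reveal the whole state of this tiny world.

```python
# Agent function for the two-square vacuum world as an explicit lookup table.
# Percepts are (location, status) tuples, mirroring the table on the slide.
AGENT_TABLE: dict[tuple[str, str], str] = {
    ("A", "Clean"): "Right",
    ("A", "Dirty"): "Suck",
    ("B", "Clean"): "Left",
    ("B", "Dirty"): "Suck",
}


def table_agent(percept: tuple[str, str]) -> str:
    """Look up the action for the current percept; NoOp for unknown percepts."""
    return AGENT_TABLE.get(percept, "NoOp")


if __name__ == "__main__":
    for percept in AGENT_TABLE:
        print(percept, "->", table_agent(percept))
```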

- 9. Well-behaved agents
  - Rational agent: “For each possible percept sequence, a rational agent should select an action that is expected to maximize its performance measure, given the evidence provided by the percept sequence and whatever built-in knowledge the agent has.”

- 10. Rationality
  - Rationality is relative to a performance measure.
  - Judge rationality based on:
    - the performance measure that defines the criterion of success;
    - the agent's prior knowledge of the environment;
    - the actions that the agent can perform;
    - the agent's percept sequence to date.
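
Rationality is judged against a performance measure, so it helps to make one concrete. The sketch below assumes a measure similar to the one Russell and Norvig use for the vacuum world: one point for each clean square at each time step. It then compares two agents under that measure. The simulator, the agent names, and the 4-step horizon are illustrative assumptions, not part of the slides.

```python
from typing import Callable

Percept = tuple[str, str]          # (location, status), e.g. ("A", "Dirty")
Agent = Callable[[Percept], str]   # an agent maps a percept to an action


def simulate(agent: Agent, dirty: dict[str, bool], start: str, steps: int) -> int:
    """Run `agent` in the two-square world and return its performance score.

    Assumed performance measure: +1 for every clean square after each time step.
    """
    dirty = dict(dirty)            # copy so the caller's dict is not mutated
    location, score = start, 0
    for _ in range(steps):
        status = "Dirty" if dirty[location] else "Clean"
        action = agent((location, status))
        if action == "Suck":
            dirty[location] = False
        elif action == "Right":
            location = "B"
        elif action == "Left":
            location = "A"
        # "NoOp" leaves the world unchanged.
        score += sum(1 for is_dirty in dirty.values() if not is_dirty)
    return score


def reflex_agent(percept: Percept) -> str:
    """Suck if dirty, otherwise move to the other square."""
    location, status = percept
    if status == "Dirty":
        return "Suck"
    return "Right" if location == "A" else "Left"


def lazy_agent(percept: Percept) -> str:
    """Hypothetical baseline that never does anything."""
    return "NoOp"


if __name__ == "__main__":
    both_dirty = {"A": True, "B": True}
    print(simulate(reflex_agent, both_dirty, "A", 4))  # 6
    print(simulate(lazy_agent, both_dirty, "A", 4))    # 0
```

The measure could be changed, for example by also charging a point for every move. The same reflex agent, which keeps shuttling between squares after both are clean, would then be penalized. This is why rationality is always relative to the chosen measure.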

- 11. PEAS
  - When we define a rational agent, we group these properties under PEAS, the problem specification for the task environment.
  - The rational agent we want to design for this task environment is the solution.
  - PEAS stands for:
    - Performance
    - Environment
    - Actuators
    - Sensors

- 16. PEAS: what is PEAS for a self-driving car?
  - Performance: safety, time, legal driving, comfort.
  - Environment: roads, other cars, pedestrians, road signs.
  - Actuators: steering, accelerator, brake, signal, horn.
  - Sensors: camera, sonar, GPS, speedometer, odometer, accelerometer, engine sensors, keyboard.

- 21. PEAS: how about a vacuum cleaner (iRobot Roomba series)?
  - Performance: cleanliness; efficiency (distance traveled to clean); battery life; security.
  - Environment: room, table, wood floor, carpet, various obstacles.
  - Actuators: wheels, various brushes, vacuum extractor.
  - Sensors: camera, dirt detection sensor, cliff sensor, bump sensors, infrared wall sensors.
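
A PEAS description is a structured record, so it maps naturally onto a small immutable data type. The `PEAS` dataclass and the two constants below are an illustrative sketch. Their contents are copied from the self-driving car and robot vacuum examples on these slides.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class PEAS:
    """Task-environment specification: Performance, Environment, Actuators, Sensors."""
    performance: tuple[str, ...]
    environment: tuple[str, ...]
    actuators: tuple[str, ...]
    sensors: tuple[str, ...]


SELF_DRIVING_CAR = PEAS(
    performance=("safety", "time", "legal driving", "comfort"),
    environment=("roads", "other cars", "pedestrians", "road signs"),
    actuators=("steering", "accelerator", "brake", "signal", "horn"),
    sensors=("camera", "sonar", "GPS", "speedometer", "odometer",
             "accelerometer", "engine sensors", "keyboard"),
)

ROBOT_VACUUM = PEAS(
    performance=("cleanliness", "efficiency (distance traveled)",
                 "battery life", "security"),
    environment=("room", "table", "wood floor", "carpet", "obstacles"),
    actuators=("wheels", "brushes", "vacuum extractor"),
    sensors=("camera", "dirt detection sensor", "cliff sensor",
             "bump sensors", "infrared wall sensors"),
)

if __name__ == "__main__":
    for name, spec in [("Self-driving car", SELF_DRIVING_CAR),
                       ("Robot vacuum", ROBOT_VACUUM)]:
        print(name, "sensors:", ", ".join(spec.sensors))
```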

- 22. Environment types
  - Fully observable (vs. partially observable): an agent's sensors give it access to the complete state of the environment at each point in time.
  - Deterministic (vs. stochastic): the next state of the environment is completely determined by the current state and the action executed by the agent. (If the environment is deterministic except for the actions of other agents, then the environment is strategic.)
  - Episodic (vs. sequential): the agent's experience is divided into atomic "episodes" (each episode consists of the agent perceiving and then performing a single action), and the choice of action in each episode depends only on the episode itself.

- 23. Environment types
  - Static (vs. dynamic): the environment is unchanged while an agent is deliberating. (The environment is semi-dynamic if the environment itself does not change with the passage of time but the agent's performance score does.)
  - Discrete (vs. continuous): a limited number of distinct, clearly defined percepts and actions. E.g., checkers is an example of a discrete environment, while a self-driving car operates in a continuous one.
  - Single agent (vs. multi-agent): an agent operating by itself in an environment.
  - Known (vs. unknown): the designer of the agent may or may not have knowledge of how the environment works. If the environment is unknown, the agent will have to learn how it works in order to make good decisions.
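
The six binary dimensions above can be recorded as a small data type, one flag per dimension. This is a hypothetical sketch. The slides only state that checkers is discrete and that driving is continuous, so the remaining flags are our own illustrative reading. The three-way cases (strategic, semi-dynamic) and the known/unknown distinction, which concerns the agent's knowledge rather than the environment itself, are deliberately left out.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class EnvironmentType:
    """One flag per dimension; True means the first option of each pair."""
    fully_observable: bool   # vs. partially observable
    deterministic: bool      # vs. stochastic
    episodic: bool           # vs. sequential
    static: bool             # vs. dynamic
    discrete: bool           # vs. continuous
    single_agent: bool       # vs. multi-agent

    def describe(self) -> str:
        """Spell out the chosen option for every dimension."""
        labels = [
            (self.fully_observable, "fully observable", "partially observable"),
            (self.deterministic, "deterministic", "stochastic"),
            (self.episodic, "episodic", "sequential"),
            (self.static, "static", "dynamic"),
            (self.discrete, "discrete", "continuous"),
            (self.single_agent, "single agent", "multi-agent"),
        ]
        return ", ".join(yes if flag else no for flag, yes, no in labels)


# Illustrative classifications (assumptions, not taken from the slides).
SELF_DRIVING_CAR = EnvironmentType(False, False, False, False, False, False)
# Checkers is deterministic apart from the opponent's moves ("strategic");
# a single boolean cannot express that, so it is recorded as deterministic.
CHECKERS = EnvironmentType(True, True, False, True, True, False)

if __name__ == "__main__":
    print("Self-driving car:", SELF_DRIVING_CAR.describe())
    print("Checkers:", CHECKERS.describe())
```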

- 25. Agent types
  - Four basic types, in order of increasing generality:
    - Simple reflex agents
    - Model-based reflex agents
    - Goal-based agents
    - Utility-based agents
  - All of these can be generalized into learning agents that can improve their performance and generate better actions.

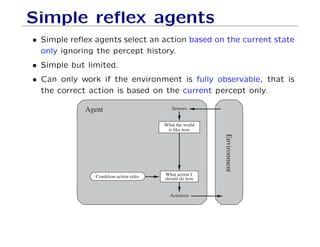

- 26. Simple reflex agents
  - Simple reflex agents select an action based only on the current percept, ignoring the rest of the percept history.
  - Simple but limited.
  - They work correctly only if the environment is fully observable, that is, if the correct action can be decided from the current percept alone.

  *Figure: simple reflex agent (Agent: Sensors, What the world is like now, Condition-action rules, What action I should do now, Actuators; Environment).*
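
A simple reflex agent is essentially an ordered list of condition-action rules checked against the current percept. Here is a minimal Python sketch, with the rules for the two-square vacuum world as the example. The helper `make_simple_reflex_agent`, the `(condition, action)` rule encoding, and the `NoOp` default are assumptions made for illustration.

```python
from typing import Callable, Sequence

Percept = tuple[str, str]                        # (location, status)
Rule = tuple[Callable[[Percept], bool], str]     # (condition, action)


def make_simple_reflex_agent(rules: Sequence[Rule],
                             default: str = "NoOp") -> Callable[[Percept], str]:
    """Build an agent that fires the first rule whose condition matches
    the current percept. No percept history is stored."""
    def agent(percept: Percept) -> str:
        for condition, action in rules:
            if condition(percept):
                return action
        return default
    return agent


# Condition-action rules for the two-square vacuum world. Order matters:
# the dirt rule is checked before the movement rules.
VACUUM_RULES: list[Rule] = [
    (lambda p: p[1] == "Dirty", "Suck"),
    (lambda p: p[0] == "A", "Right"),
    (lambda p: p[0] == "B", "Left"),
]

vacuum_agent = make_simple_reflex_agent(VACUUM_RULES)

if __name__ == "__main__":
    print(vacuum_agent(("A", "Dirty")))   # Suck
    print(vacuum_agent(("B", "Clean")))   # Left
```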

- 28. Vacuum (reflex) agent
  - Let's write the algorithm for the vacuum cleaner in the two-square world (A, B).
  - Percepts: location and contents (location sensor, dirt sensor).
  - Actions: Left, Right, Suck, NoOp.

  | Percept    | Action |
  |------------|--------|
  | [A, Clean] | Right  |
  | [A, Dirty] | Suck   |
  | [B, Clean] | Left   |
  | [B, Dirty] | Suck   |

  What if the vacuum agent is deprived of its location sensor?
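
One way to explore the question above is to drop the location sensor so the percept is only `"Clean"` or `"Dirty"`. In this hypothetical sketch, a deterministic reflex agent that always moves `Right` on a clean square gets stuck in B forever when A is dirty. A randomized variant that picks `Left` or `Right` at random eventually reaches A. The simulator, the agent names, and the fixed random seed are assumptions for illustration. Randomization is only one possible remedy; keeping internal state, as model-based agents do, is another.

```python
import random
from typing import Callable


def step(world: dict[str, bool], location: str, action: str) -> str:
    """Apply an action in the two-square world and return the new location.
    Moving into a wall (Right at B, Left at A) leaves the agent in place."""
    if action == "Suck":
        world[location] = False
    elif action == "Right":
        location = "B"
    elif action == "Left":
        location = "A"
    return location


def blind_reflex_agent(status: str) -> str:
    """Reflex agent with only a dirt sensor: it cannot tell A from B."""
    return "Suck" if status == "Dirty" else "Right"


def make_randomized_blind_agent(rng: random.Random) -> Callable[[str], str]:
    """Same sensor, but picks a random direction when the square is clean."""
    def agent(status: str) -> str:
        return "Suck" if status == "Dirty" else rng.choice(["Left", "Right"])
    return agent


def run(agent: Callable[[str], str], world: dict[str, bool],
        location: str, steps: int) -> dict[str, bool]:
    """Simulate `steps` cycles and return which squares are still dirty."""
    world = dict(world)              # copy so the caller's dict is not mutated
    for _ in range(steps):
        status = "Dirty" if world[location] else "Clean"
        location = step(world, location, agent(status))
    return world


if __name__ == "__main__":
    start = {"A": True, "B": False}  # A is dirty; the agent starts in B
    print(run(blind_reflex_agent, start, "B", 50))   # A stays dirty
    print(run(make_randomized_blind_agent(random.Random(0)), start, "B", 50))
```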

- 29. Model-based reflex agents
  - Handle partial observability by keeping track of the part of the world the agent cannot see now.
  - Maintain an internal state that depends on the percept history (a best guess).
  - Use a model of the world based on (1) how the world evolves independently of the agent, and (2) how the agent's actions affect the world.

  *Figure: model-based reflex agent (Agent: Sensors, State, How the world evolves, What my actions do, Condition-action rules, What the world is like now, What action I should do now, Actuators; Environment).*
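
Continuing the vacuum example, here is a minimal sketch of a model-based reflex agent that has only a dirt sensor. Its internal state is its believed location and the set of squares it knows to be clean. It updates that state using a model of what its own actions do. The class name and the simulator are hypothetical. The sketch also assumes the agent knows it starts in A and that dirt never reappears, so the world does not evolve on its own.

```python
class ModelBasedVacuumAgent:
    """Vacuum agent with only a dirt sensor (no location sensor).

    Internal state: the believed location and which squares are known clean.
    Assumptions (hypothetical): the agent knows its start square, and dirt
    never reappears once removed.
    """

    def __init__(self, start: str = "A") -> None:
        self.location = start
        self.known_clean = {"A": False, "B": False}

    def __call__(self, status: str) -> str:
        # Update the internal state from the new percept.
        if status == "Clean":
            self.known_clean[self.location] = True
        # Apply condition-action rules to the internal state.
        if status == "Dirty":
            action = "Suck"
            self.known_clean[self.location] = True   # model: Suck cleans this square
        elif all(self.known_clean.values()):
            action = "NoOp"                          # nothing left to do
        else:
            action = "Right" if self.location == "A" else "Left"
            self.location = "B" if action == "Right" else "A"  # model: effect of moving
        return action


def run(agent: ModelBasedVacuumAgent, dirty: dict[str, bool],
        location: str, steps: int) -> tuple[list[str], dict[str, bool]]:
    """Simulate the real world; the agent only ever sees "Clean" or "Dirty"."""
    dirty = dict(dirty)
    actions = []
    for _ in range(steps):
        action = agent("Dirty" if dirty[location] else "Clean")
        if action == "Suck":
            dirty[location] = False
        elif action in ("Left", "Right"):
            location = "A" if action == "Left" else "B"
        actions.append(action)
    return actions, dirty


if __name__ == "__main__":
    print(run(ModelBasedVacuumAgent("A"), {"A": True, "B": True}, "A", 5))
```

Unlike the blind reflex agent, this agent stops with `NoOp` once its internal state says both squares are clean.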

- 30. Goal-based agents
  - Knowing the current state of the environment is not always enough; the agent also needs some goal information.
  - The agent program combines the goal information with the environment model to choose actions that achieve that goal.
  - It considers the future by asking "What will happen if I do action A?"
  - Flexible, because the knowledge supporting its decisions is represented explicitly and can be modified.

  *Figure: goal-based agent (Agent: Sensors, State, How the world evolves, What my actions do, What the world is like now, What it will be like if I do action A, Goals, What action I should do now, Actuators; Environment).*
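
A goal-based agent can answer "What will happen if I do A?" by running its transition model forward and searching for actions that reach the goal. The slides do not name a search method. This sketch uses breadth-first search over the vacuum world, an illustrative choice. The state encoding and the names `result`, `goal_test`, and `plan` are assumptions.

```python
from collections import deque
from typing import Optional

State = tuple[str, bool, bool]        # (location, A is dirty, B is dirty)
ACTIONS = ("Left", "Right", "Suck")


def result(state: State, action: str) -> State:
    """Transition model: what the world will be like if I do `action`."""
    location, a_dirty, b_dirty = state
    if action == "Suck":
        return (location,
                a_dirty and location != "A",
                b_dirty and location != "B")
    if action == "Right":
        return ("B", a_dirty, b_dirty)
    if action == "Left":
        return ("A", a_dirty, b_dirty)
    return state


def goal_test(state: State) -> bool:
    """Goal: both squares are clean."""
    return not state[1] and not state[2]


def plan(start: State) -> Optional[list[str]]:
    """Breadth-first search for a shortest action sequence that reaches the goal."""
    frontier = deque([(start, [])])
    visited = {start}
    while frontier:
        state, actions = frontier.popleft()
        if goal_test(state):
            return actions
        for action in ACTIONS:
            successor = result(state, action)
            if successor not in visited:
                visited.add(successor)
                frontier.append((successor, actions + [action]))
    return None


if __name__ == "__main__":
    print(plan(("A", True, True)))    # ['Suck', 'Right', 'Suck']
```

The agent would then execute the returned plan one action at a time. Changing the goal only requires changing `goal_test`, which illustrates the flexibility of an explicitly represented goal.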

- 31. Utility-based agents
  - Sometimes achieving the desired goal is not enough: we may prefer a quicker, safer, or cheaper trip to the destination.
  - The agent's "happiness" should be taken into consideration. We call it utility.
  - A utility function is an internalization of the agent's performance measure.
  - Because the world is uncertain, a utility-based agent chooses the action that maximizes the expected utility.

  *Figure: utility-based agent (Agent: Sensors, State, How the world evolves, What my actions do, What the world is like now, What it will be like if I do action A, Utility, How happy I will be in such a state, What action I should do now, Actuators; Environment).*
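
Expected utility is the probability-weighted average of the utilities of an action's possible outcomes, and the agent picks the action with the largest value. A minimal sketch follows, using a made-up trip example in the spirit of the "quicker, safer" remark. All probabilities, utilities, and route names are hypothetical.

```python
from typing import Callable

Outcome = tuple[float, str]   # (probability, resulting state)


def expected_utility(outcomes: list[Outcome],
                     utility: Callable[[str], float]) -> float:
    """Probability-weighted average utility of an action's possible outcomes."""
    return sum(p * utility(state) for p, state in outcomes)


def choose_action(model: dict[str, list[Outcome]],
                  utility: Callable[[str], float]) -> str:
    """Pick the action whose outcomes have the highest expected utility."""
    return max(model, key=lambda action: expected_utility(model[action], utility))


# Hypothetical trip example: every number here is invented for illustration.
UTILITY = {"arrive_fast": 10.0, "arrive_slow": 6.0, "accident": -50.0}

risky_model = {
    "highway": [(0.9, "arrive_fast"), (0.1, "accident")],    # EU = 9 - 5 = 4
    "back_roads": [(1.0, "arrive_slow")],                    # EU = 6
}
safer_model = {
    "highway": [(0.98, "arrive_fast"), (0.02, "accident")],  # EU = 9.8 - 1 = 8.8
    "back_roads": [(1.0, "arrive_slow")],                    # EU = 6
}

if __name__ == "__main__":
    print(choose_action(risky_model, UTILITY.__getitem__))   # back_roads
    print(choose_action(safer_model, UTILITY.__getitem__))   # highway
```

The faster route wins only when its risk is low enough. A goal-based agent that merely checks "do I arrive?" cannot express that trade-off.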

- 32. Learning agents
  - Programming agents by hand can be very tedious. "Some more expeditious method seems desirable" (Alan Turing, 1950).
  - Four conceptual components:
    - Learning element: responsible for making improvements.
    - Performance element: responsible for selecting external actions. It is what we have considered to be the agent so far.
    - Critic: evaluates how well the agent is doing with respect to a fixed performance standard.
    - Problem generator: allows the agent to explore.

  *Figure: learning agent (Agent: Performance standard, Critic, feedback, Learning element, changes, knowledge, learning goals, Performance element, Problem generator, Sensors, Actuators; Environment).*
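
The four components can be wired together in a few lines. In this hypothetical sketch the critic scores each action against a fixed standard (+1 for sucking up dirt, 0 otherwise). The learning element keeps running totals of that feedback, and the performance element picks the action with the best average. The problem generator explores by suggesting actions not yet tried. The reward scheme, the try-each-action-once exploration strategy, and all names are assumptions, not taken from the slides.

```python
from collections import defaultdict
from typing import Optional

ACTIONS = ("Left", "Right", "Suck", "NoOp")
Percept = tuple[str, str]   # (location, status)


def critic(percept: Percept, action: str) -> float:
    """Critic: score an action against a fixed performance standard.
    Hypothetical standard: +1 for sucking up dirt, 0 for anything else."""
    return 1.0 if percept[1] == "Dirty" and action == "Suck" else 0.0


class LearningAgent:
    def __init__(self) -> None:
        self.total = defaultdict(float)   # (percept, action) -> summed feedback
        self.count = defaultdict(int)     # (percept, action) -> times tried
        self.last: Optional[tuple[Percept, str]] = None

    def performance_element(self, percept: Percept) -> str:
        """Select the external action with the best average feedback so far."""
        def average(action: str) -> float:
            n = self.count[(percept, action)]
            return self.total[(percept, action)] / n if n else 0.0
        return max(ACTIONS, key=average)

    def problem_generator(self, percept: Percept) -> Optional[str]:
        """Suggest an exploratory action: one never tried for this percept."""
        for action in ACTIONS:
            if self.count[(percept, action)] == 0:
                return action
        return None

    def learning_element(self, feedback: float) -> None:
        """Improve the performance element by recording the critic's feedback."""
        self.total[self.last] += feedback
        self.count[self.last] += 1

    def act(self, percept: Percept) -> str:
        action = self.problem_generator(percept) or self.performance_element(percept)
        self.last = (percept, action)
        return action


def train(agent: LearningAgent, rounds: int) -> None:
    """Show the agent every percept `rounds` times, passing feedback back."""
    percepts = [(loc, status) for loc in "AB" for status in ("Clean", "Dirty")]
    for _ in range(rounds):
        for percept in percepts:
            action = agent.act(percept)
            agent.learning_element(critic(percept, action))


if __name__ == "__main__":
    agent = LearningAgent()
    print(agent.performance_element(("A", "Dirty")))   # Left (untrained tie-break)
    train(agent, rounds=5)
    print(agent.performance_element(("A", "Dirty")))   # Suck
```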

- 33. Agent's organization
  - a) Atomic representation: each state of the world is a black box with no internal structure. E.g., when finding a driving route, each state is a city.
  - AI algorithms: search, games, Markov decision processes, hidden Markov models, etc.

- 34. Agent's organization
  - b) Factored representation: each state is described by a set of attribute-value pairs. E.g., GPS location, amount of gas in the tank.
  - AI algorithms: constraint satisfaction and Bayesian networks.

- 35. Agent's organization
  - c) Structured representation: relationships between the objects of a state can be expressed explicitly.
  - AI algorithms: first-order logic, knowledge-based learning, natural language understanding.
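
To make the three levels concrete, here is one hypothetical logistics state encoded each way. All names and values (`CityB`, `Truck1`, `Box7`, the coordinates, the fuel amount, and the `related` helper) are invented for illustration. The point is the amount of internal structure that an algorithm can inspect at each level.

```python
from dataclasses import dataclass

# (a) Atomic: the state is an opaque label; an algorithm can only test
#     whether two states are the same (e.g. which city we are in).
atomic_state = "CityB"


# (b) Factored: the state is a fixed set of attribute-value pairs.
@dataclass(frozen=True)
class FactoredState:
    latitude: float
    longitude: float
    fuel_liters: float


factored_state = FactoredState(latitude=40.0, longitude=-74.0, fuel_liters=35.5)

# (c) Structured: the state contains objects and explicit relations between
#     them, stored here as (relation, subject, object) triples.
structured_state = {
    ("In", "Truck1", "CityB"),
    ("Carries", "Truck1", "Box7"),
    ("On", "Box7", "Box3"),
}


def related(state: set, relation: str, subject: str) -> set:
    """Return every object linked to `subject` by `relation`."""
    return {obj for rel, subj, obj in state if rel == relation and subj == subject}


if __name__ == "__main__":
    print(atomic_state == "CityB")                         # True
    print(factored_state.fuel_liters < 40.0)               # True
    print(related(structured_state, "Carries", "Truck1"))  # {'Box7'}
```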

- 36. Intelligent agents
  - The concept of an intelligent agent is central in AI.
  - AI aims to design intelligent agents that are useful, reactive, autonomous, and even social and proactive.
  - An agent perceives its environment through sensors and acts through actuators.
  - A performance measure evaluates the behavior of the agent.
  - An agent that acts to maximize its expected performance measure is called a rational agent.
  - PEAS: a task environment specification that includes the Performance measure, Environment, Actuators, and Sensors.
  - Agent = Architecture + Program

- 38. Intelligent agents
  - Four types of agents: reflex agents, model-based agents, goal-based agents, and utility-based agents.
  - Agents can improve their performance through learning.
  - This is a high-level presentation of agent programs.
  - State representations: atomic, factored, structured, in order of increasing expressive power.
  - Credit: courtesy of Percy Liang.

- 39. Credit
  - *Artificial Intelligence: A Modern Approach*. Stuart Russell and Peter Norvig. Third Edition. Pearson Education. http://aima.cs.berkeley.edu/
