Airflow and Supervisor

- 1. Intro to Airflow or All the stuff you are now able to do, but you did not know about

- 2. Airflow—what is it? It’s a workflow management platform

- 3. Airflow—what is it? It’s a workflow management platform. Diagram: Task a, Task b, Task c, Task d, Task e.

- 4. Airflow—what is it? It’s a workflow management platform. Diagram: Task a, Task b, Task c, Task d, Task e.

- 5. Airflow—what is it? It’s a workflow management platform. Diagram: Task a, Task b, Task c, Task d, Task e, labeled "Directed Acyclic Graph (DAG)".

- 6. Airflow—what is it? It’s a workflow management platform. Diagram: Task a, Task b, Task c, Task d, Task e. Does not support data streaming.

- 7. Airflow—what is it? It’s a workflow management platform. Diagram: Task a, Task b, Task c, Task d, Task e. Meant for batch processing. Does not support data streaming.

- 8. Airflow—what is it? It’s a workflow management platform. Diagram: Task a, Task b, Task c, Task d, Task e, with .pickle files between tasks. Meant for batch processing. Does not support data streaming.

- 9. Airflow—what is it? It’s a workflow management platform. Diagram: Task a, Task b, Task c, Task d, Task e, with a DB between tasks. Meant for batch processing. Does not support data streaming.

- 10. Airflow—what is it? It’s a workflow management platform. Diagram: Task a, Task b, Task c, Task d, Task e. Meant for batch processing. Does not support data streaming.

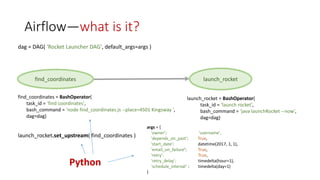
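The slides above describe a workflow as tasks whose dependencies form a Directed Acyclic Graph. The sketch below is not Airflow code; it only illustrates the idea with Python's standard `graphlib` module (Python 3.9+). The edges between the five tasks are an assumption made for illustration, since the slides do not spell them out.

```python
from graphlib import CycleError, TopologicalSorter

# Hypothetical dependencies: each task maps to the set of tasks it waits on.
dependencies = {
    "task_b": {"task_a"},
    "task_c": {"task_a"},
    "task_d": {"task_b", "task_c"},
    "task_e": {"task_d"},
}


def execution_order(deps):
    """Return one valid order in which the tasks could run."""
    return list(TopologicalSorter(deps).static_order())


def has_cycle(deps):
    """Return True if the dependencies are cyclic, i.e. not a DAG."""
    try:
        execution_order(deps)
    except CycleError:
        return True
    return False


order = execution_order(dependencies)
print(order)
```

Any order produced this way runs a task only after everything it depends on. A cyclic dependency (for example, two tasks that wait on each other) has no such order, which is why the graph has to be acyclic.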

- 11. Airflow—what is it? Diagram: find_coordinates → launch_rocket

          dag = DAG('rocket_launcher_dag', default_args=args)

          find_coordinates = BashOperator(
              task_id='find_coordinates',
              bash_command='node find_coordinates.js --place="4501 Kingsway"',
              dag=dag)

          launch_rocket = BashOperator(
              task_id='launch_rocket',
              bash_command='java launchRocket --now',
              dag=dag)

- 12. Airflow—what is it? Diagram: find_coordinates → launch_rocket

          dag = DAG('rocket_launcher_dag', default_args=args)

          find_coordinates = BashOperator(
              task_id='find_coordinates',
              bash_command='node find_coordinates.js --place="4501 Kingsway"',
              dag=dag)

          launch_rocket = BashOperator(
              task_id='launch_rocket',
              bash_command='java launchRocket --now',
              dag=dag)

          # launch_rocket runs only after find_coordinates has finished
          launch_rocket.set_upstream(find_coordinates)

- 13. Airflow—what is it? Diagram: find_coordinates → launch_rocket

          args = {
              'owner': 'username',
              'depends_on_past': True,
              'start_date': datetime(2017, 1, 1),
              'email_on_failure': True,
              'retries': 1,  # number of retries after a failure
              'retry_delay': timedelta(hours=1),
          }

          # schedule_interval is set on the DAG itself, not in default_args
          dag = DAG('rocket_launcher_dag', default_args=args,
                    schedule_interval=timedelta(days=1))

          find_coordinates = BashOperator(
              task_id='find_coordinates',
              bash_command='node find_coordinates.js --place="4501 Kingsway"',
              dag=dag)

          launch_rocket = BashOperator(
              task_id='launch_rocket',
              bash_command='java launchRocket --now',
              dag=dag)

          launch_rocket.set_upstream(find_coordinates)

- 14. Airflow—what is it? Diagram: find_coordinates → launch_rocket. Language: Python

          from datetime import datetime, timedelta

          from airflow import DAG
          from airflow.operators.bash_operator import BashOperator  # Airflow 1.x import path

          args = {
              'owner': 'username',
              'depends_on_past': True,
              'start_date': datetime(2017, 1, 1),
              'email_on_failure': True,
              'retries': 1,  # number of retries after a failure
              'retry_delay': timedelta(hours=1),
          }

          # schedule_interval is set on the DAG itself, not in default_args
          dag = DAG('rocket_launcher_dag', default_args=args,
                    schedule_interval=timedelta(days=1))

          find_coordinates = BashOperator(
              task_id='find_coordinates',
              bash_command='node find_coordinates.js --place="4501 Kingsway"',
              dag=dag)

          launch_rocket = BashOperator(
              task_id='launch_rocket',
              bash_command='java launchRocket --now',
              dag=dag)

          # launch_rocket runs only after find_coordinates has finished
          launch_rocket.set_upstream(find_coordinates)

- 15. Airflow—Internals. Diagram labels: Python 3 Runtime, Airflow, Local Executor, Web Server, Scheduler, SQL Engine, Airflow Metadata DB, DAGs.

- 16. Airflow—Internals. Diagram labels: Python 3 Runtime, Airflow, Local Executor, Web Server, Scheduler, SQL Engine, Airflow Metadata DB, DAGs. Per task: • Status (success/running/failed) • Runtime • etc.

- 17. Airflow—Internals. Diagram labels: Python 3 Runtime, Airflow, Local Executor, Web Server, Scheduler, SQL Engine, Airflow Metadata DB, DAGs. Per task: • Status (success/running/failed) • Runtime • etc.

- 18. Airflow—Internals. Diagram labels: Python 3 Runtime, Airflow, Sequential Executor, Web Server, Scheduler, SQL Engine, Airflow Metadata DB, DAGs. Per task: • Status (success/running/failed) • Runtime • etc. Single node.

- 19. Airflow—Internals. Diagram labels: Python 3 Runtime, Airflow, Local Executor, Web Server, Scheduler, SQL Engine, Airflow Metadata DB, DAGs. Per task: • Status (success/running/failed) • Runtime • etc. Single node.

- 20. Airflow—Internals. Diagram labels: Python 3 Runtime, Airflow, Celery Executor, Web Server, Scheduler, SQL Engine, Airflow Metadata DB, DAGs. Per task: • Status (success/running/failed) • Runtime • etc.

- 21. Airflow—what is it? Operators • BashOperator • HttpSensor • SSHExecuteOperator • … Documentation here: https://airflow.incubator.apache.org/code.html

- 22. Airflow—Demo What we have so far….

- 23. Airflow—Benefits • Scalable workflows (with Celery) • Easy parallelizing of workflows • With Celery: Dedicated low-priority n-thread queue • Beautiful monitoring from the UI

- 24. Airflow—Benefits • Some automation • Scheduling and triggering of DAGs from the UI • Automatic retries • Automatic notifications (emails, callbacks) • Some interesting Operators • Room for contribution • Automatic way of splitting a workload into n tasks (Dask style)

- 26. Airflow—The case against cron But I could “just” use Cron

- 27. Airflow—The case against cron But I could “just” use Cron Cron Airflow

- 28. Supervisor—what is it? It’s a process daemonization and monitoring tool

- 29. Supervisor—what is it? It’s a process daemonization and monitoring tool. Long story short: we use it to run and monitor Celery, Flower, and Airflow

- 30. Supervisor—Demo What we have so far….

- 31. Supervisor—Benefits • Centralized control • The server was restarted? What was running in the background?! Panic!! Panic!! (Chill… start Supervisor and everything that needs to be started will be started)

- 32. Supervisor—Benefits • Centralized control • The server was restarted? What was running in the background?! Panic!! Panic!! (Chill… start Supervisor and everything that needs to be started will be started) • Web UI (for monitoring and control) • Status and logs are visible directly in the UI • Can stop/start/restart

- 33. Supervisor—Benefits • Centralized control • The server was restarted? What was running in the background?! Panic!! Panic!! (Chill… start Supervisor and everything that needs to be started will be started) • Web UI (for monitoring and control) • Status and logs are visible directly in the UI • Can stop/start/restart • Basic automation • If an app fails, it can automatically restart it, up to n retries

- 34. Supervisor—The case against nohup But I could “just” use nohup

- 35. Supervisor—The case against nohup But I could “just” use nohup
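The "basic automation" benefit listed above (restart a failed app, up to n retries) can be illustrated with a few lines of standard-library Python. This is only a sketch of the idea, not how Supervisor is implemented or configured; the function name `run_with_restarts` and its parameters are hypothetical.

```python
import subprocess
import sys
import time


def run_with_restarts(command, max_retries=3, delay_seconds=0.0):
    """Run a command and restart it while it keeps failing.

    The command is started once and then restarted at most
    max_retries times. Returns (last_exit_code, number_of_starts).
    """
    starts = 0
    while True:
        starts += 1
        exit_code = subprocess.call(command)
        # Stop on success, or once the retry budget is used up.
        if exit_code == 0 or starts > max_retries:
            return exit_code, starts
        time.sleep(delay_seconds)


if __name__ == "__main__":
    # A stand-in "app" that always fails with exit code 1.
    failing_app = [sys.executable, "-c", "import sys; sys.exit(1)"]
    print(run_with_restarts(failing_app, max_retries=2))
```

A process started with nohup gets none of this: if it dies, nothing notices and nothing restarts it, which is the gap a supervising process fills.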

Editor's Notes

- #3: Airflow is a … In Airflow, workflows consist of tasks.

- #4: In Airflow, workflows consist of tasks.

- #5: And tasks have dependencies. In this example… In this manner, tasks can be seen as the vertices of a graph and dependencies as the edges.

- #6: The only condition is that there cannot be cyclic dependencies. In other words, the dependency graph is a DAG.

- #7: One thing to keep in mind is that Airflow…. Meaning, tasks do not exchange data. So, for instance, you cannot create a data pipeline where data is passed from task to task.

- #8: And this is because Airflow is meant for batch processing.

- #9: If you need to… because you want to create a data pipeline that does some sort of batch processing… then you can always pass data in files. The only drawback with Airflow is that this step is manual… there’s no way to do it from Airflow automatically… you have to create and read your own pickle files… so that’s perhaps a place where we could improve.

- #10: Or even in the DB… this would be handy if you wanted to persist intermediate results.

- #11: In some contexts, a DAG is a pipeline. So you can think of DAGs as either workflows or pipelines. Spark is for data streaming?? (Ask Leandro)

- #12: So this is a sample workflow… on the left you have the task definition for….

- #13: Then to specify a dependency between two tasks you can say…

- #14: You can also configure the DAG.

- #15: And if you haven’t noticed… the language for specifying a DAG is Python… The ability to define a DAG programmatically can be useful when workflows start to grow in complexity. You can, for instance, define dependencies in a loop.

- #16: As I already mentioned, Airflow is a batch processing platform. To support batch processing, the first thing Airflow has to have is a SCHEDULER. The scheduler is in charge of scheduling DAGs for execution.

- #17: Airflow also keeps track of task states via its metadata DB, whether…

- #18: This metadata can then be accessed by the webserver to display information about a task in the UI.

- #19: As for the executor… Airflow supports three options. The sequential executor, which runs all DAGs in a single process on a single machine.

- #20: As for the executor… Airflow supports three options. I’m showing here the local executor, which lets you do multiprocessing on a single machine.

- #21: And there’s the option of a Celery Executor, which enables Airflow to distribute and parallelize DAGs.

- #22: Airflow operators provide you with options so that you can create DAGs…. I have never used any of them (so I can’t tell you about them)… But I can show you a couple of them… to give you an idea….

- #23: Consider the previous example… the rocket launcher one. In Java, I would have to create a POST request that looks as follows: SHOW LAUNCH ROCKET TASK IN CHROME ARC

- #24: It’s a… it sits right between Celery applications and everybody else.

- #25: It’s a… it sits right between Celery applications and everybody else.

- #26: And others….

- #27: Now….If after this explanation you’re still thinking cron is a good idea to run your batch processes. That means you haven't listened at all. So this is my closing argument…

- #28: Cron is like a 1970s Volkswagen. It was good, everybody used it… but we’ve moved on.

- #29: Supervisor is… Meaning you can create daemon processes and monitor them

- #30: Long story short…

- #31: Consider the previous example… the rocket launcher one. In Java, I would have to create a POST request that looks as follows: SHOW LAUNCH ROCKET TASK IN CHROME ARC

- #32: Why Supervisor… what do we gain?

- #33: Why Supervisor… what do we gain?

- #34: Why Supervisor… what do we gain?

- #35: Now.. If you think… well I could just use

- #36: Please don’t
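The note for slide 9 above says that data can be passed between tasks through pickle files that you write and read yourself. A minimal sketch of that manual handoff follows, using only the standard library. The two functions stand in for the rocket launcher tasks, and the file name and coordinate values are made up for illustration.

```python
import pickle
import tempfile
from pathlib import Path


def find_coordinates(output_path):
    """Upstream task: compute a result and persist it for the next task."""
    # Placeholder values, not real output of any geocoding step.
    coordinates = {"place": "4501 Kingsway", "lat": 49.23, "lon": -123.0}
    with open(output_path, "wb") as handle:
        pickle.dump(coordinates, handle)


def launch_rocket(input_path):
    """Downstream task: load what the upstream task left behind."""
    with open(input_path, "rb") as handle:
        coordinates = pickle.load(handle)
    return "launching towards {lat}, {lon}".format(**coordinates)


with tempfile.TemporaryDirectory() as workdir:
    handoff_file = Path(workdir) / "coordinates.pickle"
    find_coordinates(handoff_file)          # runs first
    message = launch_rocket(handoff_file)   # runs after its upstream task

print(message)
```

Both tasks have to agree on the file location and format, which is the manual step the note describes. Only unpickle files that your own tasks produced, since loading an untrusted pickle can execute arbitrary code.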