Appearance-based gaze estimation using deep features and random forest regression

- 1. Appearance-based gaze estimation using deep features and random forest regression
  - Knowledge-Based Systems, 2016
  - Jeong JaeYeop
  - Interaction Lab., Kumoh National Institute of Technology

- 2. Agenda
  - Intro
  - Method
  - Experimental result
  - Conclusion

- 3. Intro (next: Method, Experimental result)

- 4. Intro (1/4): Gaze
  - Gaze plays a significant role in understanding human attention, feeling, and mind
    - Cognitive process analysis
    - Human-computer interaction
  - Non-intrusive gaze tracking systems
    - A single web camera under natural light with free head movement
    - High accuracy is hard to achieve

- 5. Intro (2/4): Gaze estimation methods
  - Feature-based method
    - Depends on pupil detection using the light sources' reflections on the cornea
    - Maps the pupil center of a geometric model to the gaze calibration points
    - High accuracy, but results fluctuate strongly under free head movement
  - Appearance-based method
    - Represents each eye image as a point in a high-dimensional space
    - Learns the mapping from that point in the given feature space to screen coordinates

- 6. Intro (3/4)

- 7. Intro (4/4): Focus
  - Deep feature extraction and random forest regression
    - Using one web camera under natural light
    - Free head movement

- 8. Method (next: Experimental result, Conclusion)

- 9. Method (1/7)

- 10. Method (2/7): Eye image data
  - Crop the eye region based on the localization of facial landmarks
    - First, detect the subject's face, which always appears fully in the FOV
      - Using the Viola-Jones method
    - Get the eye region
      - SDM (Supervised Descent Method)
      - Facial landmark detector
        - Eye corners and other fiducial points
        - Eye region landmarks are shown as red dots
    - "Face in the wild" datasets
      - Robust under natural light with different illumination conditions
      - Significantly fast and accurate for face alignment in the wild with large head rotation
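
The cropping step alone can be sketched as follows. This is a minimal illustration, not the authors' pipeline: it assumes eye landmarks are already available as `(x, y)` pixel tuples from some detector, and it does not implement Viola-Jones or SDM. The names `eye_bounding_box`, `crop_eye_region`, and the `margin` parameter are hypothetical, and the landmark coordinates are made up.

```python
from typing import List, Sequence, Tuple

Point = Tuple[int, int]


def eye_bounding_box(landmarks: Sequence[Point], margin: int,
                     width: int, height: int) -> Tuple[int, int, int, int]:
    """Return (left, top, right, bottom) around the landmarks, clamped to the image.

    right and bottom are exclusive bounds.
    """
    xs = [x for x, _ in landmarks]
    ys = [y for _, y in landmarks]
    left = max(min(xs) - margin, 0)
    top = max(min(ys) - margin, 0)
    right = min(max(xs) + margin + 1, width)
    bottom = min(max(ys) + margin + 1, height)
    return left, top, right, bottom


def crop_eye_region(image: List[List[int]], landmarks: Sequence[Point],
                    margin: int = 2) -> List[List[int]]:
    """Crop a grayscale image (a list of pixel rows) to the padded landmark box."""
    height, width = len(image), len(image[0])
    left, top, right, bottom = eye_bounding_box(landmarks, margin, width, height)
    return [row[left:right] for row in image[top:bottom]]


# Toy 640x480 grayscale frame with made-up eye-corner and eyelid landmarks.
frame = [[(x + y) % 256 for x in range(640)] for y in range(480)]
eye_landmarks = [(300, 200), (330, 198), (315, 192), (315, 206)]
eye = crop_eye_region(frame, eye_landmarks, margin=4)
print(len(eye[0]), len(eye))  # crop width and height in pixels
```

Clamping the box to the frame keeps the crop valid when landmarks sit near the image border, which can happen under free head movement.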

- 11. Method (3/7): Eye image data
  - Cropped eye images without background
    - About 4% of all cases are discarded
    - The image dataset provides eye images without background noise

- 12. Method (4/7): Deep feature learning
  - Task of the CNN
    - Learn deep features from the input eye images
    - Last hidden layer
      - Fully connected
      - A 160-dimensional deep feature is extracted
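
To make the "last hidden layer" idea concrete, here is a rough sketch of a fully connected layer that maps a flattened activation vector to a 160-dimensional feature. It is only an illustration: the convolutional layers are omitted, the weights are random rather than learned, and the input size of 512 and the ReLU activation are assumptions that do not come from the slides. `init_dense` and `dense_relu` are hypothetical helper names.

```python
import random
from typing import List, Tuple

FEATURE_DIM = 160  # dimension of the deep feature reported in the slides


def init_dense(in_dim: int, out_dim: int,
               seed: int = 0) -> Tuple[List[List[float]], List[float]]:
    """Random weights and zero biases; a trained CNN would learn these."""
    rng = random.Random(seed)
    weights = [[rng.gauss(0.0, 0.05) for _ in range(in_dim)] for _ in range(out_dim)]
    biases = [0.0] * out_dim
    return weights, biases


def dense_relu(x: List[float], weights: List[List[float]],
               biases: List[float]) -> List[float]:
    """Fully connected layer followed by ReLU (the activation is an assumption)."""
    return [max(0.0, sum(w * v for w, v in zip(row, x)) + b)
            for row, b in zip(weights, biases)]


# Stand-in for the flattened output of the earlier CNN layers (hypothetical size).
input_rng = random.Random(1)
flattened = [input_rng.random() for _ in range(512)]

weights, biases = init_dense(len(flattened), FEATURE_DIM)
feature = dense_relu(flattened, weights, biases)
print(len(feature))  # length of the extracted feature vector
```

In the approach described on the slides, a vector like `feature` is what gets passed on to the regression stage instead of the raw eye image.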

- 13. Method (5/7)

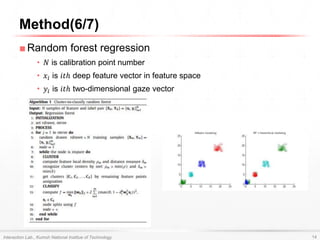

- 14. Method (6/7): Random forest regression
  - $N$ is the number of calibration points
  - $x_i$ is the $i$-th deep feature vector in the feature space
  - $y_i$ is the $i$-th two-dimensional gaze vector
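
The slide's own formula is not preserved in this transcript. In standard notation, the training set built from these symbols and the generic random forest prediction can be written as below. The number of trees $T$ and the per-tree predictors $f_t$ are symbols introduced here for illustration, and the paper's exact formulation may differ.

$$
D = \{(x_i, y_i)\}_{i=1}^{N}, \qquad x_i \in \mathbb{R}^{160}, \quad y_i \in \mathbb{R}^{2}
$$

$$
\hat{y}(x) = \frac{1}{T} \sum_{t=1}^{T} f_t(x)
$$

Each $f_t$ is a regression tree trained on a bootstrap sample of $D$, and the forest output is the average of the tree outputs.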

- 15. Method (7/7): Random forest regression
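
A toy version of random forest regression for two-dimensional targets might look like the sketch below. It is a simplified stand-in written in plain Python, not the authors' implementation or any library's API: the hyperparameters (`n_trees`, `max_depth`, `min_leaf`), the one-third feature subsampling, and the synthetic 6-dimensional features are all assumptions chosen to keep the example small.

```python
import random
from typing import List, Sequence, Tuple

Vec = Sequence[float]


def mean_target(ys: List[Vec]) -> Tuple[float, ...]:
    """Component-wise mean of the target vectors."""
    return tuple(sum(col) / len(ys) for col in zip(*ys))


def sse(ys: List[Vec]) -> float:
    """Sum of squared distances of the targets to their mean."""
    m = mean_target(ys)
    return sum((v - mv) ** 2 for y in ys for v, mv in zip(y, m))


def build_tree(xs, ys, rng, depth=0, max_depth=4, min_leaf=2):
    """Grow one regression tree; each split considers a random feature subset."""
    if depth >= max_depth or len(xs) < 2 * min_leaf:
        return mean_target(ys)  # leaf: mean gaze vector
    n_feat = len(xs[0])
    best = None
    for f in rng.sample(range(n_feat), max(1, n_feat // 3)):
        for thr in sorted({x[f] for x in xs}):
            left = [i for i, x in enumerate(xs) if x[f] <= thr]
            right = [i for i, x in enumerate(xs) if x[f] > thr]
            if len(left) < min_leaf or len(right) < min_leaf:
                continue
            cost = sse([ys[i] for i in left]) + sse([ys[i] for i in right])
            if best is None or cost < best[0]:
                best = (cost, f, thr, left, right)
    if best is None:
        return mean_target(ys)
    _, f, thr, left, right = best

    def grow(idx):
        return build_tree([xs[i] for i in idx], [ys[i] for i in idx],
                          rng, depth + 1, max_depth, min_leaf)

    return {"feature": f, "threshold": thr, "left": grow(left), "right": grow(right)}


def predict_tree(node, x: Vec):
    """Walk from the root to a leaf and return the leaf's mean target."""
    while isinstance(node, dict):
        branch = "left" if x[node["feature"]] <= node["threshold"] else "right"
        node = node[branch]
    return node


def fit_forest(xs, ys, n_trees=20, seed=0):
    """Train each tree on a bootstrap sample drawn with replacement."""
    rng = random.Random(seed)
    n = len(xs)
    forest = []
    for _ in range(n_trees):
        idx = [rng.randrange(n) for _ in range(n)]
        forest.append(build_tree([xs[i] for i in idx], [ys[i] for i in idx], rng))
    return forest


def predict_forest(forest, x: Vec) -> Tuple[float, ...]:
    """Average the per-tree predictions."""
    return mean_target([predict_tree(tree, x) for tree in forest])


# Synthetic data: 25 calibration samples, 6-D stand-in features, 2-D gaze targets.
data_rng = random.Random(42)
xs = [[data_rng.random() for _ in range(6)] for _ in range(25)]
ys = [(1920.0 * x[0], 1080.0 * x[1]) for x in xs]  # made-up screen coordinates
forest = fit_forest(xs, ys)
pred = predict_forest(forest, xs[0])
print(pred)
```

Because every leaf stores a mean of training targets and the forest averages leaves, each predicted coordinate stays within the range of the training targets. That is one reason the calibration points need to cover the screen area of interest.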

- 16. Experimental result (next: Conclusion)

- 17. Experimental result (1/10): Dataset and experimental setup
  - Desktop computer using a USB camera
    - The image resolution is 640 × 480
  - Data
    - Collected at different times
      - Under normal natural light, strong sunlight, and weak screen light at night
    - 22 groups of video from 6 subjects
      - Different illumination conditions
      - 16 groups wearing glasses
      - Calibration step
      - Without head movement
      - Free head movement
    - Calibration points
      - 25 points for training
      - 16 points for testing

- 18. Experimental result (2/10): Dataset and experimental setup
  - Distribution of head pose angles under free head movement
    - Using POSIT (Pose from Orthography and Scaling with Iterations)
    - Pitch: [0°, 15°], Yaw: [-10°, 10°]
  - Mean grayscale intensity of images
    - Darker lighting conditions have a lower mean grayscale intensity
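
The mean grayscale intensity used to characterize lighting can be computed along these lines. The slides do not say how color frames were converted to grayscale, so the ITU-R BT.601 luma weights used here are an assumption, and the two tiny images are made-up stand-ins for a bright and a dark frame.

```python
from typing import List, Tuple

Pixel = Tuple[int, int, int]  # (R, G, B), each in 0..255


def rgb_to_gray(pixel: Pixel) -> float:
    """Convert one RGB pixel to a gray level using ITU-R BT.601 luma weights."""
    r, g, b = pixel
    return 0.299 * r + 0.587 * g + 0.114 * b


def mean_gray_intensity(image: List[List[Pixel]]) -> float:
    """Average gray level over all pixels of an RGB image (a list of rows)."""
    total = 0.0
    count = 0
    for row in image:
        for pixel in row:
            total += rgb_to_gray(pixel)
            count += 1
    return total / count


# Made-up 4x4 frames standing in for daylight and night-time captures.
bright_frame = [[(200, 200, 200)] * 4 for _ in range(4)]
dark_frame = [[(40, 40, 40)] * 4 for _ in range(4)]

print(mean_gray_intensity(bright_frame) > mean_gray_intensity(dark_frame))
```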

- 19. Experimental result (3/10): Deep gaze feature
  - The deep CNN was implemented with Theano

- 20. Experimental result (4/10): Comparison with other methods
  - MAE (Mean Absolute Error)
    - Gaze angle error for different regression approaches and different features
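
One plausible way to turn on-screen gaze predictions into a mean absolute angular error is sketched below. The geometry is an assumption: the eye is placed at a known distance on the perpendicular through the screen-coordinate origin, and screen coordinates use the same unit as that distance. The slides do not state how the paper computes the angle, and the sample points are made up.

```python
import math
from typing import Sequence, Tuple

Point2D = Tuple[float, float]


def angular_error_deg(pred: Point2D, true: Point2D, distance: float) -> float:
    """Angle in degrees between the rays from the eye to two screen points."""
    p = (pred[0], pred[1], distance)
    t = (true[0], true[1], distance)
    dot = sum(a * b for a, b in zip(p, t))
    norm = math.sqrt(sum(a * a for a in p)) * math.sqrt(sum(b * b for b in t))
    cos_angle = max(-1.0, min(1.0, dot / norm))  # guard against rounding
    return math.degrees(math.acos(cos_angle))


def mean_absolute_error_deg(preds: Sequence[Point2D], trues: Sequence[Point2D],
                            distance: float) -> float:
    """Mean of the per-sample angular errors."""
    errors = [angular_error_deg(p, t, distance) for p, t in zip(preds, trues)]
    return sum(errors) / len(errors)


# Made-up predictions and ground truth in millimetres, eye 600 mm from the screen.
predicted = [(10.0, 5.0), (-20.0, 15.0), (0.0, 0.0)]
actual = [(0.0, 0.0), (-25.0, 10.0), (5.0, -5.0)]
print(mean_absolute_error_deg(predicted, actual, distance=600.0))
```

Reporting the error as an angle rather than in pixels makes results comparable across screen sizes and viewing distances.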

- 21. Experimental result (5/10): Comparison with other methods
  - Time cost (ms)
    - Single-image testing
      - Feature extraction and feature regression
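
A per-image time cost split into feature extraction and regression could be measured roughly as follows. The two stage functions are dummy placeholders, not the CNN or the random forest, and `time_per_call_ms` is a hypothetical helper; only the measurement pattern is the point here.

```python
import time
from typing import Callable, List, Tuple


def time_per_call_ms(fn: Callable, arg, repeats: int = 100) -> float:
    """Average wall-clock time of fn(arg) in milliseconds over several runs."""
    start = time.perf_counter()
    for _ in range(repeats):
        fn(arg)
    elapsed = time.perf_counter() - start
    return elapsed * 1000.0 / repeats


def dummy_extract(image: List[List[int]]) -> List[float]:
    """Placeholder for feature extraction: one row mean per image row."""
    return [sum(row) / len(row) for row in image]


def dummy_regress(feature: List[float]) -> Tuple[float, float]:
    """Placeholder for regression: maps a feature vector to a 2-D output."""
    return (sum(feature) / len(feature), max(feature))


# Made-up 36x60 eye image; the size is an arbitrary choice for the demo.
image = [[(x * y) % 256 for x in range(60)] for y in range(36)]
feature = dummy_extract(image)

extract_ms = time_per_call_ms(dummy_extract, image)
regress_ms = time_per_call_ms(dummy_regress, feature)
total_ms = extract_ms + regress_ms
print(f"extraction {extract_ms:.4f} ms, regression {regress_ms:.4f} ms, total {total_ms:.4f} ms")
```

Averaging over repeated calls reduces the effect of timer resolution and scheduling noise on a single measurement.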

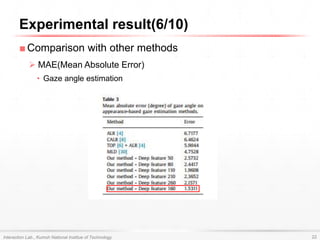

- 22. Experimental result (6/10): Comparison with other methods
  - MAE (Mean Absolute Error)
    - Gaze angle estimation

- 23. Experimental result (7/10): Comparison on illumination condition
  - Gaze estimation under natural light
    - Red points and curves
      - Error on training data
    - Blue points and curves
      - Error on test data

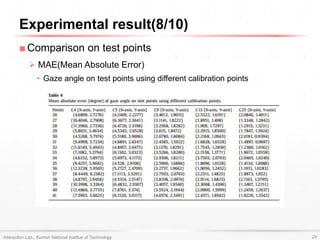

- 24. Experimental result (8/10): Comparison on test points
  - MAE (Mean Absolute Error)
    - Gaze angle error on test points using different calibration points

- 25. Experimental result (9/10): Person-independent comparison
  - MAE (Mean Absolute Error)
    - Gaze angle error for cross-subject gaze estimation
    - Low resolution, free head movement, and natural light

- 26. Experimental result (10/10): Comparison on occlusion

- 27. Conclusion

- 28. Conclusion: In this work
  - CNN-based regression method for gaze estimation
    - Under natural light
    - The CNN builds a sparse active feature space from the training eye images
    - The gaze estimation model learns the mapping between deep features and gaze coordinates by random forest regression
  - Deep features significantly improve the performance
  - Hope to apply to
    - Real-time driver gaze tracking
    - Gaze estimation in real driving environments with background noise
