Basic Software Testing Concepts

- 2. Content
  - Introduction to Software Testing
  - Software testing techniques
  - Software testing strategies
  - Software testing tools
  - Classic testing mistakes
  - Good software testing
  - Test documentation

- 3. INTRODUCTION TO SOFTWARE TESTING: SOFTWARE BUG (error, flaw, mistake, failure, or fault)
  - The software doesn't do something that the product specification says it should do.
  - The software does something that the product specification says it shouldn't do.
  - The software does something that the product specification doesn't mention.
  - The software doesn't do something that the product specification doesn't mention but should.
  - The software is difficult to understand, hard to use, slow, or in the software tester's eyes will be viewed by the end user as just plain not right.

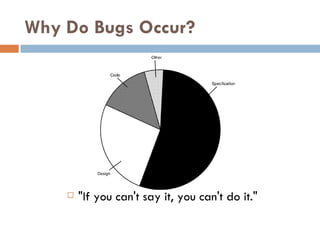

- 4. Why Do Bugs Occur? "If you can't say it, you can't do it."

- 5. The Cost of Bugs

- 6. Cost of Software Engineering

- 8. What Does a Software Tester Do? The goal of a software tester is to find bugs. The goal of a software tester is to find bugs and find them as early as possible. The goal of a software tester is to find bugs, find them as early as possible, and make sure they get fixed.

- 9. Ontologies for Software Engineering and Software Technology Software Engineering (SE) Generic (all-domain) Specific (sub-domain) Software Requirements Software Design Software Construction Software Testing Software Maintenance Software Configuration Management Software Quality Software Engineering Tools & Methods Software Engineering Process Software Engineering Management

- 10. Ontologies for Software Engineering and Software Technology Software Technology (ST) Software Programming Techniques Programming Languages Operating Systems Data Data Structures Data Storage Representations Data Encryption Coding and Information Theory Files Information Technology and Systems Models and Principles Database Management Information Storage and Retrieval Information Technology and Systems Applications Information Interfaces and Representation (HCI)

- 11. SOFTWARE TESTING TECHNIQUES
  - Software Testing Fundamentals
  - Test Case Design
  - White-Box Testing
  - Black-Box Testing
  - Control Structure Testing

- 12. Software Testing Principles
  - All tests should be traceable to customer requirements.
  - Tests should be planned long before testing begins.
  - The Pareto principle applies to software testing: roughly 80 percent of the errors uncovered are likely to be traceable to 20 percent of the program components.
  - Testing should begin "in the small" and progress towards testing "in the large".
  - Exhaustive testing is not possible.
  - To be most effective, testing should be conducted by an independent third party.

- 13. Testability
  - Operability: "The better it works, the more efficiently it can be tested."
  - Observability: "What you see is what you test."
  - Controllability: "The better we can control the software, the more the testing can be automated and optimized."
  - Decomposability: "By controlling the scope of testing, we can more quickly isolate problems and perform smarter retesting."

- 14. Testability (Cont.)
  - Simplicity: "The less there is to test, the more quickly we can test it."
  - Stability: "The fewer the changes, the fewer the disruptions to testing."
  - Understandability: "The more information we have, the smarter we will test."

- 15. Test Case Design
  - "There is only one rule in designing test cases: cover all features, but do not make too many test cases."
  - It is not possible to exhaustively test every program path because the number of paths is simply too large.
  - White-Box Testing
  - Black-Box Testing

- 16. Control Structure Testing
  - Condition Testing
    - Boolean operator error (incorrect/missing/extra Boolean operators)
    - Boolean variable error
    - Boolean parenthesis error
    - Relational operator error
    - Arithmetic expression error
  - Data Flow Testing
    - DEF(S) = {X | statement S contains a definition of X}
    - USE(S) = {X | statement S contains a use of X}
  - Loop Testing
    - Simple loops
    - Nested loops
    - Concatenated loops
    - Unstructured loops
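Simple-loop testing is commonly described as exercising a loop with zero, one, and two passes, a typical number of passes, and n-1, n, and n+1 passes, where n is the maximum allowed. The following is a minimal Python sketch of that idea. The function `sum_first` and the cap `MAX_ITEMS` are hypothetical and exist only to give the loop something to do.

```python
MAX_ITEMS = 5  # hypothetical maximum number of loop passes


def sum_first(values, limit=MAX_ITEMS):
    """Sum at most `limit` leading items; this is the loop under test."""
    total = 0
    for index, value in enumerate(values):
        if index >= limit:
            break
        total += value
    return total


def simple_loop_pass_counts(n):
    """Pass counts to try for a simple loop that allows at most n passes."""
    typical = n // 2
    return sorted({0, 1, 2, typical, n - 1, n, n + 1})


def run_loop_tests():
    """Run the loop once per pass count and record whether it behaved."""
    results = {}
    for count in simple_loop_pass_counts(MAX_ITEMS):
        data = [1] * count
        # Every item counts until the cap is reached.
        expected = min(count, MAX_ITEMS)
        results[count] = sum_first(data) == expected
    return results
```

The n+1 case is the interesting one: it checks that the loop stops at the cap instead of running past it.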

- 17. SOFTWARE TESTING STRATEGIES
  - A Strategic Approach to Software Testing
  - Strategic Issues
  - Unit Testing
  - Integration Testing
  - Validation Testing
  - System Testing
  - The Art of Debugging

- 18. SOFTWARE TESTING STRATEGY
  - Testing begins at the component level and works "outward" toward the integration of the entire computer-based system.
  - Different testing techniques are appropriate at different points in time.
  - Testing is conducted by the developer of the software and (for large projects) an independent test group.
  - Testing and debugging are different activities, but debugging must be accommodated in any testing strategy.

- 19. SOFTWARE TESTING STRATEGY A strategy for software testing must accommodate low-level tests that are necessary to verify that a small source code segment has been correctly implemented as well as high-level tests that validate major system functions against customer requirements. A strategy must provide guidance for the practitioner and a set of milestones for the manager. Because the steps of the test strategy occur at a time when deadline pressure begins to rise, progress must be measurable and problems must surface as early as possible.

- 20. VERIFICATION AND VALIDATION
  - Verification: "Are we building the product right?"
  - Validation: "Are we building the right product?"

- 21. ORGANIZING FOR SOFTWARE TESTING ???

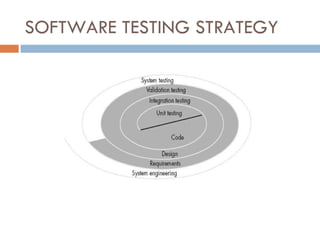

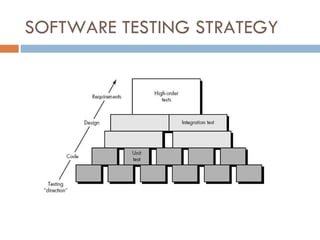

- 24. SOFTWARE TESTING STRATEGY
  - Unit testing begins at the vortex of the spiral and concentrates on each unit (i.e., component) of the software as implemented in source code.
  - Testing progresses outward along the spiral to integration testing, where the focus is on design and the construction of the software architecture.
  - Validation testing follows, where requirements established as part of software requirements analysis are validated against the software that has been constructed.
  - System testing comes last, where the software and other system elements are tested as a whole.

- 25. CRITERIA FOR COMPLETION OF TESTING

  $$f(t) = \frac{1}{p} \ln\left[\, l_0\, p\, t + 1 \,\right] \tag{18-1}$$

  where
  - $f(t)$ = cumulative number of failures that are expected to occur once the software has been tested for a certain amount of execution time, $t$,
  - $l_0$ = the initial software failure intensity (failures per time unit) at the beginning of testing,
  - $p$ = the exponential reduction in failure intensity as errors are uncovered and repairs are made.

  The instantaneous failure intensity, $l(t)$, can be derived by taking the derivative of $f(t)$:

  $$l(t) = \frac{l_0}{l_0\, p\, t + 1}$$
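The two formulas on the completion-criteria slide can be checked numerically. The sketch below evaluates f(t) and l(t) and compares l(t) with a finite-difference estimate of the derivative of f(t). The parameter values `L0` and `P` are made up for illustration and do not come from any measured project.

```python
import math


def cumulative_failures(t, l0, p):
    """f(t) = (1/p) * ln(l0 * p * t + 1)."""
    return math.log(l0 * p * t + 1.0) / p


def failure_intensity(t, l0, p):
    """l(t) = l0 / (l0 * p * t + 1), the derivative of f(t)."""
    return l0 / (l0 * p * t + 1.0)


def numeric_derivative(func, t, step=1e-6):
    """Central-difference estimate of d(func)/dt at t."""
    return (func(t + step) - func(t - step)) / (2.0 * step)


# Hypothetical parameter values, chosen only for illustration.
L0 = 10.0  # initial failures per unit of execution time
P = 0.05   # reduction in failure intensity


def derivative_gap(t):
    """Difference between the numeric derivative of f and the closed form l."""
    estimate = numeric_derivative(lambda x: cumulative_failures(x, L0, P), t)
    return abs(estimate - failure_intensity(t, L0, P))
```

At t = 0 no failures have accumulated and the intensity equals its initial value; as t grows the intensity falls, which is what makes the model usable as a stopping criterion.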

- 26. STRATEGIC ISSUES
  - Specify product requirements in a quantifiable manner long before testing commences.
  - State testing objectives explicitly.
  - Understand the users of the software and develop a profile for each user category.
  - Develop a testing plan that emphasizes "rapid cycle testing."
  - Build "robust" software that is designed to test itself.
  - Use effective formal technical reviews as a filter prior to testing.
  - Conduct formal technical reviews to assess the test strategy and test cases themselves.
  - Develop a continuous improvement approach for the testing process.

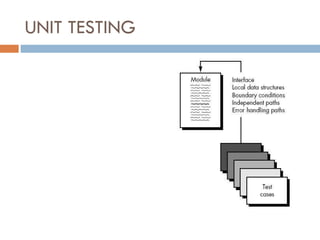

- 27. UNIT TESTING

- 28. UNIT TESTING The module interface is tested to ensure that information properly flows into and out of the program unit under test. The local data structure is examined to ensure that data stored temporarily maintains its integrity during all steps in an algorithm's execution. Boundary conditions are tested to ensure that the module operates properly at boundaries established to limit or restrict processing. All independent paths (basis paths) through the control structure are exercised to ensure that all statements in a module have been executed at least once. And finally, all error handling paths are tested.

- 29. What errors are commonly found during unit testing?
  - Among the more common errors in computation are misunderstood or incorrect arithmetic precedence, mixed-mode operations, incorrect initialization, precision inaccuracy, and incorrect symbolic representation of an expression.
  - Comparison and control flow are closely coupled to one another (i.e., change of flow frequently occurs after a comparison). Test cases should uncover errors such as:
    - comparison of different data types
    - incorrect logical operators or precedence
    - expectation of equality when precision error makes equality unlikely
    - incorrect comparison of variables
    - improper or nonexistent loop termination
    - failure to exit when divergent iteration is encountered
    - improperly modified loop variables
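One of the comparison errors listed for unit testing, expecting equality when precision error makes equality unlikely, is easy to reproduce. The sketch below is illustrative only; the tolerance passed to `math.isclose` is an assumption and should be chosen to suit the computation being tested.

```python
import math

total = 0.1 + 0.2

# Exact comparison fails: 0.1 and 0.2 have no exact binary representation,
# so their sum is not bit-for-bit equal to 0.3.
exact_match = (total == 0.3)

# Comparing within a tolerance expresses the intended check.
close_match = math.isclose(total, 0.3, rel_tol=1e-9)
```

A unit test that asserts `total == 0.3` would report a failure even though the computation is correct to within rounding.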

- 30. Potential errors while error handling is evaluated
  - Error description is unintelligible.
  - Error noted does not correspond to error encountered.
  - Error condition causes system intervention prior to error handling.
  - Exception-condition processing is incorrect.
  - Error description does not provide enough information to assist in the location of the cause of the error.

- 32. Unit Test Procedures Because a component is not a stand-alone program, driver and/or stub software must be developed for each unit test. In most applications a driver is nothing more than a "main program" that accepts test case data, passes such data to the component to be tested, and prints relevant results. Stubs serve to replace modules that are subordinate to (called by) the component to be tested. A stub or "dummy subprogram" uses the subordinate module's interface, may do minimal data manipulation, prints verification of entry, and returns control to the module undergoing testing.
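The driver and stub roles can be sketched in a few lines of Python. Everything here is hypothetical: `compute_invoice_total` stands in for the component under test, and `tax_lookup` for a subordinate module that it calls. The stub records its entry in a list instead of printing, which keeps the example easy to check.

```python
def compute_invoice_total(order_lines, tax_lookup):
    """Component under test: sums line amounts and adds tax.

    `tax_lookup` is the subordinate module the component calls.
    """
    subtotal = sum(quantity * unit_price for quantity, unit_price in order_lines)
    return subtotal + subtotal * tax_lookup("default")


def make_tax_stub(call_log):
    """Stub: same interface as the real module, minimal behavior."""
    def tax_lookup_stub(region):
        call_log.append(region)  # verification of entry
        return 0.25              # canned rate, exactly representable in binary
    return tax_lookup_stub


def driver(test_cases):
    """Driver: passes test case data to the component and collects results."""
    report = []
    for order_lines, expected in test_cases:
        call_log = []
        actual = compute_invoice_total(order_lines, make_tax_stub(call_log))
        report.append((expected, actual, actual == expected, call_log))
    return report


CASES = [
    ([(2, 10.0)], 25.0),  # 20.0 plus 25% tax
    ([], 0.0),            # boundary: empty order
]
```

Calling `driver(CASES)` returns one row per test case with the expected value, the actual value, a pass flag, and the stub's call log.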

- 33. INTEGRATION TESTING
  - Top-down Integration
  - Bottom-up Integration
  - Regression Testing
  - Smoke Testing

- 34. Top-down Integration Depth-first integration Breadth-first integration

- 35. Top-down Integration The integration process is performed in a series of five steps:
  1. The main control module is used as a test driver and stubs are substituted for all components directly subordinate to the main control module.
  2. Depending on the integration approach selected (i.e., depth or breadth first), subordinate stubs are replaced one at a time with actual components.
  3. Tests are conducted as each component is integrated.
  4. On completion of each set of tests, another stub is replaced with the real component.
  5. Regression testing (Section 18.4.3) may be conducted to ensure that new errors have not been introduced.

  The process continues from step 2 until the entire program structure is built.

- 37. Bottom-up Integration A bottom-up integration strategy may be implemented with the following steps:
  1. Low-level components are combined into clusters (sometimes called builds) that perform a specific software subfunction.
  2. A driver (a control program for testing) is written to coordinate test case input and output.
  3. The cluster is tested.
  4. Drivers are removed and clusters are combined moving upward in the program structure.

- 38. Regression Testing Each time a new module is added as part of integration testing, the software changes. New data flow paths are established, new I/O may occur, and new control logic is invoked. These changes may cause problems with functions that previously worked flawlessly. In the context of an integration test strategy, regression testing is the reexecution of some subset of tests that have already been conducted to ensure that changes have not propagated unintended side effects.

- 39. Regression Testing The regression test suite (the subset of tests to be executed) contains three different classes of test cases:
  - A representative sample of tests that will exercise all software functions.
  - Additional tests that focus on software functions that are likely to be affected by the change.
  - Tests that focus on the software components that have been changed.
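The three classes of regression test cases can be read as a selection rule over an existing test inventory. The sketch below assumes each test carries hypothetical metadata (`component`, `function`, and a `representative` flag); real test tools record this differently, so treat the field names as placeholders.

```python
def select_regression_suite(tests, changed_components, affected_functions):
    """Return the names of tests that fall into any of the three classes."""
    selected = []
    for test in tests:
        is_sample = test["representative"]                         # class 1
        touches_affected = test["function"] in affected_functions  # class 2
        touches_changed = test["component"] in changed_components  # class 3
        if is_sample or touches_affected or touches_changed:
            selected.append(test["name"])
    return selected


# Hypothetical test inventory.
TESTS = [
    {"name": "t_login_smoke", "component": "auth", "function": "login", "representative": True},
    {"name": "t_login_lockout", "component": "auth", "function": "lockout", "representative": False},
    {"name": "t_report_totals", "component": "reports", "function": "totals", "representative": False},
    {"name": "t_export_csv", "component": "export", "function": "csv", "representative": False},
]
```

With `changed_components={"reports"}` and `affected_functions={"csv"}`, the suite keeps the representative login test, the test on the changed component, and the test on the affected function, and skips `t_login_lockout`.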

- 40. Smoke Testing Software components that have been translated into code are integrated into a “build.” A build includes all data files, libraries, reusable modules, and engineered components that are required to implement one or more product functions. A series of tests is designed to expose errors that will keep the build from properly performing its function. The intent should be to uncover “show stopper” errors that have the highest likelihood of throwing the software project behind schedule. The build is integrated with other builds and the entire product (in its current form) is smoke tested daily. The integration approach may be top down or bottom up.

- 41. Smoke Testing
  - Integration risk is minimized. Because smoke tests are conducted daily, incompatibilities and other show-stopper errors are uncovered early, thereby reducing the likelihood of serious schedule impact when errors are uncovered.
  - The quality of the end-product is improved. Because the approach is construction (integration) oriented, smoke testing is likely to uncover both functional errors and architectural and component-level design defects. If these defects are corrected early, better product quality will result.
  - Error diagnosis and correction are simplified. Like all integration testing approaches, errors uncovered during smoke testing are likely to be associated with "new software increments"; that is, the software that has just been added to the build(s) is a probable cause of a newly discovered error.
  - Progress is easier to assess. With each passing day, more of the software has been integrated and more has been demonstrated to work. This improves team morale and gives managers a good indication that progress is being made.

- 42. Comments on Integration Testing As integration testing is conducted, the tester should identify critical modules. A critical module has one or more of the following characteristics: (1) addresses several software requirements, (2) has a high level of control (resides relatively high in the program structure), (3) is complex or error prone (cyclomatic complexity may be used as an indicator), or (4) has definite performance requirements. Critical modules should be tested as early as is possible. In addition, regression tests should focus on critical module function.

- 43. Integration Testing Document Test specification Test plan Test procedure

- 44. Validation Testing Validation succeeds when software functions in a manner that can be reasonably expected by the customer. "Who or what is the arbiter of reasonable expectations?" The specification contains a section called Validation Criteria. Information contained in that section forms the basis for a validation testing approach.

- 45. Validation Testing Criteria After each validation test case has been conducted, one of two possible conditions exists: (1) the function or performance characteristics conform to specification and are accepted, or (2) a deviation from specification is uncovered and a deficiency list is created. A deviation or error discovered at this stage in a project can rarely be corrected prior to scheduled delivery. It is often necessary to negotiate with the customer to establish a method for resolving deficiencies.

- 46. Configuration Review The intent of the review is to ensure that all elements of the software configuration have been properly developed, are cataloged, and have the necessary detail to bolster the support phase of the software life cycle.

- 47. Alpha and Beta Testing Alpha and beta testing are used to uncover errors that only the end-user seems able to find.
  - Alpha Test
  - Beta Test

- 48. System Testing
  - Recovery Testing
  - Security Testing
  - Stress Testing
  - Performance Testing

- 49. Recovery Testing Recovery testing is a system test that forces the software to fail in a variety of ways and verifies that recovery is properly performed. If recovery is automatic (performed by the system itself), reinitialization, checkpointing mechanisms, data recovery, and restart are evaluated for correctness. If recovery requires human intervention, the mean-time-to-repair (MTTR) is evaluated to determine whether it is within acceptable limits.

- 50. Security Testing Security testing attempts to verify that the protection mechanisms built into a system will in fact protect it from improper penetration. During security testing, the tester plays the role of the individual who wants to penetrate the system. Given enough time and resources, good security testing will ultimately penetrate a system, so the designer's task is to make the cost of penetration greater than the value of the information that would be obtained.

- 51. Stress Testing Stress testing executes a system in a manner that demands resources in abnormal quantity, frequency, or volume. For example, (1) special tests may be designed that generate ten interrupts per second, when one or two is the average rate, (2) input data rates may be increased by an order of magnitude to determine how input functions will respond, (3) test cases that require maximum memory or other resources are executed, (4) test cases that may cause thrashing in a virtual operating system are designed, (5) test cases that may cause excessive hunting for disk-resident data are created. Essentially, the tester attempts to break the program.

- 52. Performance Testing Performance tests are often coupled with stress testing and usually require both hardware and software instrumentation. That is, it is often necessary to measure resource utilization (e.g., processor cycles) in an exacting fashion. External instrumentation can monitor execution intervals, log events (e.g., interrupts) as they occur, and sample machine states on a regular basis. By instrumenting a system, the tester can uncover situations that lead to degradation and possible system failure.

- 53. Debugging Debugging occurs as a consequence of successful testing. That is, when a test case uncovers an error, debugging is the process that results in the removal of the error.

- 54. Why is debugging so difficult?
  1. The symptom and the cause may be geographically remote. That is, the symptom may appear in one part of a program, while the cause may actually be located at a site that is far removed.
  2. The symptom may disappear (temporarily) when another error is corrected.
  3. The symptom may actually be caused by nonerrors (e.g., round-off inaccuracies).
  4. The symptom may be caused by human error that is not easily traced.
  5. The symptom may be a result of timing problems, rather than processing problems.

- 55. Why is debugging so difficult? (Cont.)
  6. It may be difficult to accurately reproduce input conditions (e.g., a real-time application in which input ordering is indeterminate).
  7. The symptom may be intermittent. This is particularly common in embedded systems that couple hardware and software inextricably.
  8. The symptom may be due to causes that are distributed across a number of tasks running on different processors [CHE90].

- 56. When I correct an error, what questions should I ask myself? Is the cause of the bug reproduced in another part of the program? In many situations, a program defect is caused by an erroneous pattern of logic that may be reproduced elsewhere. Explicit consideration of the logical pattern may result in the discovery of other errors.

- 57. When I correct an error, what questions should I ask myself? What "next bug" might be introduced by the fix I'm about to make? Before the correction is made, the source code (or, better, the design) should be evaluated to assess coupling of logic and data structures. If the correction is to be made in a highly coupled section of the program, special care must be taken when any change is made.

- 58. When I correct an error, what questions should I ask myself? What could we have done to prevent this bug in the first place? This question is the first step toward establishing a statistical software quality assurance approach. If we correct the process as well as the product, the bug will be removed from the current program and may be eliminated from all future programs.

- 59. SOFTWARE TESTING TOOLS
  - Functional testing
  - Performance testing
  - Test management
  - Bug databases
  - Link checkers
  - Security
  - Unit Testing Tools (Ada | C/C++ | HTML | Java | Javascript | .NET | Perl | PHP | Python | Ruby | SQL | Tcl | XML)

- 60. Borland SILKTEST
  - SilkCentral Test Manager: a powerful software test management tool
  - SilkTest: automated functional and regression testing
  - SilkPerformer: automated load and performance testing
  - SilkMonitor: 24x7 monitoring and reporting of Web, application and database servers

- 61. SILKTEST

- 62. SAMPLE SCRIPT FOR SILKTEST

```
Browser.LoadPage ("mail.yoo.com")
SignInYahooMail.SetActive ()
SignInYahooMail.objSingInYahooMail.SignUpNow.Click ()
Sleep (3)
WelcomeToYahoo.SetActive ()
WelcomeToYahoo.objWelcomeToYahoo.LastName.SetText ("lastname")
WelcomeToYahoo.objWelcomeToYahoo.LanguageContent.Select (5)
WelcomeToYahoo.objWelcomeToYahoo.ContactMeOccassionallyAbout.Click ()
WelcomeToYahoo.objWelcomeToYahoo.SubmitThisForm.Click ()
if RegistrationSuccess.Exists ()
    Print ("Test Pass")
else
    LogError ("Test Fail")
```

- 63. HP
  - QuickTest Professional™: e-business functional testing
  - LoadRunner®: enterprise load testing
  - TestDirector™: integrated test management
  - WinRunner®: test automation for the enterprise

- 64. HP QUICKTEST

- 65. HP LOADRUNNER

- 66. Unit Testing – C# – NUnit

```csharp
namespace bank
{
    public class Account
    {
        private float balance;

        public void Deposit(float amount)
        {
            balance += amount;
        }

        public void Withdraw(float amount)
        {
            balance -= amount;
        }

        // The slide leaves this body empty, so the test below would fail.
        // Moving the amount from this account to the destination makes it pass.
        public void TransferFunds(Account destination, float amount)
        {
            destination.Deposit(amount);
            Withdraw(amount);
        }

        public float Balance
        {
            get { return balance; }
        }
    }
}

namespace bank
{
    using NUnit.Framework;

    [TestFixture]
    public class AccountTest
    {
        [Test]
        public void TransferFunds()
        {
            Account source = new Account();
            source.Deposit(200.00F);
            Account destination = new Account();
            destination.Deposit(150.00F);

            source.TransferFunds(destination, 100.00F);

            // 150 + 100 on the destination, 200 - 100 on the source.
            Assert.AreEqual(250.00F, destination.Balance);
            Assert.AreEqual(100.00F, source.Balance);
        }
    }
}
```

- 67. Software Testing Environments Software testing labs.

- 68. CLASSICAL TESTING MISTAKES
  - De-humanize the test process (AKA Management by Spreadsheet, Management by Email, Management by MS Project …)
  - Testers Responsible for Quality
  - Task-based status reporting
  - Evaluating testers by bugs found
  - Lack of test training for developers
  - Separate developers and testers

- 69. CLASSICAL TESTING MISTAKES
  - Over-reliance on scripted testing
  - Untrained exploratory testing
  - Trying to fix things beyond your reach
  - Vacuous documentation

- 70. GOOD SOFTWARE TESTING
  - Start as soon as possible.
  - Share test results at every stage.
  - Test in-limit values first, then the limit points, and then out-of-limit values.
  - Test the latest changes first, then older ones.
  - Test basic functions first, then details.
  - Test the biggest risks first, then the others.
  - Make sure developers fix the problems found in tests.
  - The best software test is a needed software test.

- 71. Test Documentation: reasons for test documentation
  - Clarifying quality targets
  - Logging test activities
  - Evaluating test activities
  - Coordinating test resources and environments
  - Controlling testers' work
  - Identifying the next test cases (risk-based testing)
  - Finding unnecessary tests
  - Examining user needs

- 72. References
  - Pressman, "Software Engineering: A Practitioner's Approach", 5th ed., McGraw-Hill, 2001
  - "How To Design Programs", MIT Press
  - Ron Patton, "Software Testing", 2005
  - "Automated and Manual Software Testing Tools and Services", http://www.aptest.com/
  - "Open source software testing tools", http://www.opensourcetesting.org/
  - Brian Marick, "Classic Mistakes in Software Testing", STQE, 1997

- 73. More Resources

- 74. Basis Path Testing Flow Graph Notation

- 75. Basis Path Testing Cyclomatic Complexity

- 76. Basis Path Testing
  - path 1: 1-11
  - path 2: 1-2-3-4-5-10-1-11
  - path 3: 1-2-3-6-8-9-10-1-11
  - path 4: 1-2-3-6-7-9-10-1-11
  - V(G) = E - N + 2 = 11 edges - 9 nodes + 2 = 4
  - V(G) = P + 1 = 3 predicate nodes + 1 = 4
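Both cyclomatic complexity formulas can be checked against a concrete edge list. The flow graph itself is not reproduced in the text, so the edges below are reconstructed from the four listed paths under the assumption that statements 2,3 and 4,5 are each merged into a single node, which is what gives 9 nodes and 11 edges. Treat the edge list as an illustration, not as the original figure.

```python
# Edge list reconstructed from the four basis paths (an assumption):
# statements 2,3 and 4,5 are each treated as one flow-graph node.
EDGES = [
    ("1", "2,3"), ("1", "11"),
    ("2,3", "4,5"), ("2,3", "6"),
    ("4,5", "10"),
    ("6", "7"), ("6", "8"),
    ("7", "9"), ("8", "9"),
    ("9", "10"),
    ("10", "1"),
]


def cyclomatic_by_edges(edges):
    """V(G) = E - N + 2."""
    nodes = {node for edge in edges for node in edge}
    return len(edges) - len(nodes) + 2


def cyclomatic_by_predicates(edges):
    """V(G) = P + 1, where a predicate node has two outgoing edges."""
    out_degree = {}
    for source, _target in edges:
        out_degree[source] = out_degree.get(source, 0) + 1
    predicates = [node for node, degree in out_degree.items() if degree >= 2]
    return len(predicates) + 1
```

Both functions give 4 for this graph, matching the size of the basis set of four paths. The P + 1 form assumes every decision is a two-way branch, which holds here.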

- 77. Basis Path Testing: Deriving Test Cases
  1. Using the design or code as a foundation, draw a corresponding flow graph.
  2. Determine the cyclomatic complexity of the resultant flow graph.
  3. Determine a basis set of linearly independent paths.
  4. Prepare test cases that will force execution of each path in the basis set.

- 79. Basis Path Testing
  - V(G) = 6 regions
  - V(G) = 17 edges - 13 nodes + 2 = 6
  - V(G) = 5 predicate nodes + 1 = 6
  - path 1: 1-2-10-11-13
  - path 2: 1-2-10-12-13
  - path 3: 1-2-3-10-11-13
  - path 4: 1-2-3-4-5-8-9-2-. . .
  - path 5: 1-2-3-4-5-6-8-9-2-. . .
  - path 6: 1-2-3-4-5-6-7-8-9-2-. . .

- 80. Basis Path Testing
  - Path 1 test case: value(k) = valid input, where k < i for 2 ≤ i ≤ 100; value(i) = 999 where 2 ≤ i ≤ 100. Expected results: correct average based on k values and proper totals. Note: path 1 cannot be tested stand-alone but must be tested as part of path 4, 5, and 6 tests.
  - Path 2 test case: value(1) = 999. Expected results: average = 999; other totals at initial values.
  - Path 3 test case: attempt to process 101 or more values. First 100 values should be valid. Expected results: same as test case 1.
  - Path 4 test case: value(i) = valid input where i < 100; value(k) < minimum where k < i. Expected results: correct average based on k values and proper totals.
  - Path 5 test case: value(i) = valid input where i < 100; value(k) > maximum where k <= i. Expected results: correct average based on n values and proper totals.
  - Path 6 test case: value(i) = valid input where i < 100. Expected results: correct average based on n values and proper totals.

- 81. Basis Path Testing Graph Matrices

- 83. Black-Box Testing Error Categories
  1. incorrect or missing functions
  2. interface errors
  3. errors in data structures or external database access
  4. behavior or performance errors
  5. initialization and termination errors

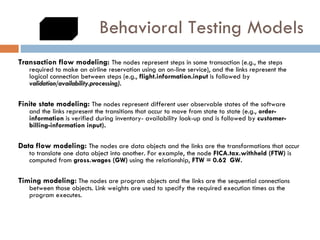

- 85. Behavioral Testing Models
  - Transaction flow modeling: The nodes represent steps in some transaction (e.g., the steps required to make an airline reservation using an on-line service), and the links represent the logical connection between steps (e.g., flight.information.input is followed by validation/availability.processing).
  - Finite state modeling: The nodes represent different user observable states of the software and the links represent the transitions that occur to move from state to state (e.g., order-information is verified during inventory-availability look-up and is followed by customer-billing-information input).
  - Data flow modeling: The nodes are data objects and the links are the transformations that occur to translate one data object into another. For example, the node FICA.tax.withheld (FTW) is computed from gross.wages (GW) using the relationship FTW = 0.62 GW.
  - Timing modeling: The nodes are program objects and the links are the sequential connections between those objects. Link weights are used to specify the required execution times as the program executes.

- 86. Equivalence Partitioning
  - Data items:
    - area code: blank or three-digit number
    - prefix: three-digit number not beginning with 0 or 1
    - suffix: four-digit number
    - password: six-digit alphanumeric string
    - commands: check, deposit, bill pay, and the like
  - Input conditions:
    - area code: Input condition, Boolean: the area code may or may not be present. Input condition, range: values defined between 200 and 999, with specific exceptions.
    - prefix: Input condition, range: specified value >200. Input condition, value: four-digit length.
    - password: Input condition, Boolean: a password may or may not be present. Input condition, value: six-character string.
    - command: Input condition, set: containing commands noted previously.
  - Number line: values up to 0 (..., -2, -1, 0) form invalid partition 1, values 1 through 12 form the valid partition, and values from 13 upward (13, 14, 15, ...) form invalid partition 2.
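The number line on the equivalence partitioning slide splits the integers into three classes, with 1 through 12 valid. A minimal sketch of how that turns into test inputs follows; the function name and the chosen representatives are illustrative assumptions.

```python
VALID_LOW, VALID_HIGH = 1, 12  # valid partition taken from the number line


def partition_of(value):
    """Name the equivalence class that an integer input falls into."""
    if value < VALID_LOW:
        return "invalid partition 1"
    if value > VALID_HIGH:
        return "invalid partition 2"
    return "valid partition"


# One representative per class; any other member of the class is assumed
# to exercise the software in the same way.
REPRESENTATIVES = {
    "invalid partition 1": -1,
    "valid partition": 6,
    "invalid partition 2": 14,
}
```

Three test cases, one per representative, cover the input domain under the equivalence assumption. The edges of each class are handled separately by boundary value analysis.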

- 87. Boundary Value Analysis: Guidelines for BVA
  1. For input ranges bounded by a and b, test cases should include values a and b and just above and just below a and b respectively.
  2. If an input condition specifies a number of values, test cases should be developed to exercise the minimum and maximum numbers and values just above and below these limits.
  3. Apply guidelines 1 and 2 to output conditions.
  4. If internal program data structures have prescribed boundaries (e.g., an array has a defined limit of 100 entries), be certain to design a test case to exercise the data structure at its boundary.
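Guideline 1 for boundary value analysis maps directly onto a small helper. The sketch below assumes integer inputs with a step of 1; for other domains the "just above" and "just below" step would need to be chosen to suit the data type.

```python
def boundary_values(a, b, step=1):
    """Values at, just below, and just above each bound of the range [a, b]."""
    if a > b:
        raise ValueError("a must not exceed b")
    candidates = [a - step, a, a + step, b - step, b, b + step]
    return sorted(set(candidates))


def in_range(value, a, b):
    """Hypothetical check under test: accepts values inside [a, b]."""
    return a <= value <= b
```

For the 100-entry array mentioned in guideline 4, `boundary_values(1, 100)` yields 0, 1, 2, 99, 100, and 101, of which only 0 and 101 should be rejected.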

- 88. Cause-Effect Graphing Guidelines
  1. Causes (input conditions) and effects (actions) are listed for a module and an identifier is assigned to each.
  2. A cause-effect graph is developed.
  3. The graph is converted to a decision table.
  4. Decision table rules are converted to test cases.
