Basics of coding theory

- 1. Coding: a procedure for mapping a set of messages x1, x2, x3, … xm into a new set of encoded messages C1, C2, C3, … Cm. Coding improves efficiency. Special codes can also provide facilities such as error detection, error correction and security.

- 2. Source Encoding: the m-ary source alphabet x1, x2, x3, … xm should be coded in binary or D-ary digits to improve the efficiency of the communication link. The rate of transmission of information in a noisy channel is maximum if the source probabilities p(xi) are all equal. Hence the codes {C1, C2, C3, … Cm} should have equal probabilities of occurrence. This technique is called ENTROPY CODING.

- 3. Properties of codes. Codes should avoid ambiguity in deciphering and should minimize the transmission time of a message. Hence: 1. Codes should be uniquely decodable. 2. No synchronizing signals should be required to recognize a word. 3. No shorter code should be a prefix of a longer code. Codes with these properties are also called instantaneous codes.

- 4. Average length of the code. The 1st code C1 has length L1 and occurs N1 times (probability p1), so its total length is N1 L1. The 2nd code C2 has length L2 and occurs N2 times (probability p2), so its total length is N2 L2, and so on. With N = N1 + N2 + … + Nm: Ĺ = (1/N) [N1 L1 + N2 L2 + … + Nm Lm] = p1 L1 + p2 L2 + … + pm Lm = Σi pi Li. For a smaller Ĺ, messages with higher probabilities should have shorter codes.

- 5. Code Efficiency. M messages are to be coded. Information transferred per message = H(X). The messages are coded with an average length of Ĺ digits, and each position of a code can take any of D digits. Ideally the D digits are equally probable, so the maximum information per coded digit = log D. The average information per message before coding should equal the average information per message after coding. If the average length of the ideal code is Ĺmin digits, then H(X) = Ĺmin log D.

- 6. Code Efficiency. In practice the D digits are not equally probable, so the average information per coded digit is less than log D. To match H(X) we need more than Ĺmin digits, say Ĺ. Efficiency: η = Ĺmin / Ĺ = H(X) / (Ĺ log D). Redundancy = 1 − η.

- 7. Necessary and sufficient condition for noiseless coding: KRAFT INEQUALITY. The necessary and sufficient condition for the existence of an irreducible noiseless encoding procedure with specified word lengths {L1, L2, …, Lm} is that the positive integers {L1, L2, …, Lm} satisfy $\sum_{i=1}^{m} D^{-L_i} \le 1$, where Li is the length of the i-th code.

- 8. PROOF. Let D = 2, with digits 0 and 1. The single-bit codes are 0 and 1. If there are only 2 messages to be coded, both codes can be used, say “0” for A and “1” for B. If there are more than two messages, then to satisfy the prefix property only one single-bit code can be used, say “0” for A; the other digit starts the two-bit codes 10 and 11 for B and C. There is no ambiguity if there are only 3 symbols to be coded. If there are more than three messages, only one two-bit code can be used, say “10” for B; the other starts the three-bit codes 110 and 111 for C and D. There is no ambiguity if there are only 4 symbols to be coded. And so on.

- 9. PROOF contd. Let D = 4, with digits a, b, c, d. The single-digit codes are a, b, c, d. If there are only 4 messages to be coded, all 4 codes can be used. If there are more than four messages, fewer than 4 single-digit codes can be used. To satisfy the prefix property, the number of codes of length 1, W1, must satisfy W1 ≤ D, i.e. W1 ≤ 4. This holds for any D.

- 10. If there are more than four messages: to satisfy the prefix property, say W1 = 2, using “a” and “b”. The other digits start the two-digit codes ca, cb, cc, cd, da, db, dc, dd. There is no ambiguity if there are only 10 symbols to be coded. If there are more than ten messages, fewer than 8 two-digit codes can be used. To satisfy the prefix property, the number of codes of length 2, W2, must satisfy W2 ≤ (D − W1) D = D² − W1 D, i.e. W2 ≤ 8 here. This holds for any D.

- 11. If there are more than ten messages: to satisfy the prefix property, say W2 = 4, using ca, cb, da, db. The others start the three-digit codes cca, ccb, ccc, ccd, cda, cdb, cdc, cdd, dca, dcb, dcc, dcd, dda, ddb, ddc, ddd, and so on. To satisfy the prefix property, the number of codes of length 3, W3, must satisfy W3 ≤ [(D − W1) D − W2] D, i.e. W3 ≤ 16 here, or in general W3 ≤ D³ − W1 D² − W2 D.

- 12. Generalizing: if n is the maximum length of an encoded word, then the number of words of length n satisfies $W_n \le D^n - W_1 D^{n-1} - W_2 D^{n-2} - \dots - W_{n-1} D$, i.e. $0 \le D^n - W_1 D^{n-1} - W_2 D^{n-2} - \dots - W_{n-1} D - W_n$. Dividing by $D^n$: $0 \le 1 - W_1 D^{-1} - W_2 D^{-2} - \dots - W_{n-1} D^{-(n-1)} - W_n D^{-n}$, so $W_1 D^{-1} + W_2 D^{-2} + \dots + W_{n-1} D^{-(n-1)} + W_n D^{-n} \le 1$, i.e. $\sum_{i=1}^{n} W_i D^{-i} \le 1$.

- 13. The necessary and sufficient condition for noiseless coding is $\sum_{i=1}^{n} W_i D^{-i} \le 1$, which is the same as $\sum_{i=1}^{m} D^{-L_i} \le 1$.

- 14. Example: [X] = {x1, x2, x3, x4, x5, x6, x7} (7 codes) with code lengths Li = {2, 2, 3, 3, 3, 4, 5}. Hence Wi = {0, 2, 3, 1, 1}, and $\sum_{i=1}^{n} W_i D^{-i} = 2/D^2 + 3/D^3 + 1/D^4 + 1/D^5 = 1/D^2 + 1/D^2 + 1/D^3 + 1/D^3 + 1/D^3 + 1/D^4 + 1/D^5 = \sum_{i=1}^{7} D^{-L_i}$.

- 15. PROBLEM. Find the smallest number of letters D in the alphabet for devising a code with the prefix property such that [W] = {0, 3, 0, 5}. Devise such a code. SOLUTION: $\sum_{i=1}^{m} D^{-L_i} \le 1$, or equivalently $\sum_{i=1}^{n} W_i D^{-i} \le 1$, gives $3D^{-2} + 5D^{-4} \le 1$, i.e. $D^4 - 3D^2 - 5 \ge 0$. The smallest value is D = 3, say with digits {0, 1, 2}.

- 16. [W] = {0, 3, 0, 5}. W1 + W2 + W3 + … + Wn = m, so m = 8. One possible code: 00, 01, 02, 1000, 1001, 1002, 2000, 2022.
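
The Kraft check in the problem above can be reproduced with a few lines of Python. This is only a sketch: the helper names `kraft_sum`, `smallest_alphabet` and `is_prefix_free` are made up for illustration, and exact fractions are used to avoid rounding.

```python
from fractions import Fraction

def kraft_sum(word_counts, D):
    """Return sum over i of W_i * D**(-i); word_counts[i-1] holds W_i."""
    return sum(Fraction(w, D ** i) for i, w in enumerate(word_counts, start=1))

def smallest_alphabet(word_counts):
    """Smallest D >= 2 for which the Kraft inequality holds."""
    D = 2
    while kraft_sum(word_counts, D) > 1:
        D += 1
    return D

def is_prefix_free(codes):
    """True if no code word is a prefix of a different code word."""
    return not any(a != b and b.startswith(a) for a in codes for b in codes)

W = [0, 3, 0, 5]
codes = ["00", "01", "02", "1000", "1001", "1002", "2000", "2022"]
print(kraft_sum(W, 2))        # 17/16, so D = 2 is too small
print(smallest_alphabet(W))   # 3
print(is_prefix_free(codes))  # True
```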

- 17. PROBLEMS Verify if following sets of words lengths may correspond to uniquely decipherable binary codes. a) [W] = { 0, 2, 3, 2} b) [W] = { 0, 2, 2, 2, 5}

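- 18. Shannon-Fano coding theorems. Theorem 1: Given a source with alphabet x1, x2, x3, … xm, probabilities p1, p2, p3, … pm, average code length L̅ and code alphabet size D, then L̅ ≥ H(x) / log D. This looks obvious, but it can be proved as follows.

- 19. $H(x) - \bar{L}\log D = -\sum_i p_i \log p_i - \sum_i p_i L_i \log D = \sum_i p_i \log\frac{D^{-L_i}}{p_i} \le \sum_i p_i\left(\frac{D^{-L_i}}{p_i} - 1\right) = \sum_i D^{-L_i} - \sum_i p_i \le 1 - 1 = 0$. The first inequality uses $\ln z \le z - 1$ (for a logarithm to another base the bound is multiplied by a positive constant, which does not change its sign). The second uses the KRAFT INEQUALITY $\sum_i D^{-L_i} \le 1$ together with $\sum_i p_i = 1$. Hence $H(x) - \bar{L}\log D \le 0$, i.e. $\bar{L} \ge H(x)/\log D$.

- 20. Shannon-Fano coding theorems. Theorem 2: Given a source with alphabet x1, x2, x3, … xm, probabilities p1, p2, p3, … pm, average code length L̅ and code alphabet size D, it is possible to construct a prefix-free code such that L̅ ≤ {H(x) / log D} + 1.

- 21. Proof: we already have $\bar{L} \ge H(x)/\log D$. Choose $L_i = \lceil -\log_D p_i \rceil$, where $\lceil a \rceil$ is the smallest integer ≥ a. Then $L_i < -\log_D p_i + 1$, so $\sum_i p_i L_i < -\sum_i p_i \log_D p_i + \sum_i p_i$, which gives $\bar{L} \le H(x)/\log D + 1$.

- 22. Shannon’s encoding algorithm. Choose Li as the integer with $\log_2(1/p_i) \le L_i < 1 + \log_2(1/p_i)$ (when log(1/pi) is not an integer this rounds it up), and take the code Ci as the binary expansion of Fi truncated to Li bits, where $F_i = \sum_{k=1}^{i-1} p_k$. Method of encoding: (1) List each message and its probability in tabular form. (2) Find the corresponding Fi. (3) Convert Fi to binary. (4) Find and list Li. (5) Select the first Li bits of the binary Fi to form the code.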

- 23. Example – Encode 27/128, 27/128, 9/128, 9/128, 9/128, 9/128, 9/128, 9/128, 3/128, 3/128, 3/128, 3/128, 3/128, 3/128, 2/128. Solve and note that no smaller code is a prefix to a larger code.

- 24. Solution table:

  | Message | Prob | Fi | Binary Fi | Li | Code |
  |---|---|---|---|---|---|
  | x1 | 27/128 | 0 | .0000000 | 3 | 000 |
  | x2 | 27/128 | 27/128 | .0011011 | 3 | 001 |
  | x3 | 9/128 | 54/128 | .0110110 | 4 | 0110 |
  | x4 | 9/128 | 63/128 | .0111111 | 4 | 0111 |
  | x5 | 9/128 | 72/128 | .1001000 | 4 | 1001 |
  | x6 | 9/128 | 81/128 | .1010001 | 4 | 1010 |
  | x7 | 9/128 | 90/128 | .1011010 | 4 | 1011 |
  | x8 | 9/128 | 99/128 | .1100011 | 4 | 1100 |
  | x9 | 3/128 | 108/128 | .1101100 | 6 | 110110 |
  | x10 | 3/128 | 111/128 | .1101111 | 6 | 110111 |
  | x11 | 3/128 | 114/128 | .1110010 | 6 | 111001 |
  | x12 | 3/128 | 117/128 | .1110101 | 6 | 111010 |
  | x13 | 3/128 | 120/128 | .1111000 | 6 | 111100 |
  | x14 | 3/128 | 123/128 | .1111011 | 6 | 111101 |
  | x15 | 2/128 | 126/128 | .1111110 | 6 | 111111 |
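
The encoding steps can be sketched in Python as below. The function name `shannon_code` is hypothetical; the sketch assumes the probabilities are already listed in decreasing order and uses exact fractions so that the binary expansion of Fi is not affected by rounding.

```python
from fractions import Fraction

def shannon_code(probs):
    """Shannon's encoding: code i is the first L_i bits of the binary F_i."""
    codes = []
    F = Fraction(0)                      # cumulative probability F_i
    for p in probs:
        L = 0                            # smallest L with 2**-L <= p
        while Fraction(1, 2 ** L) > p:
            L += 1
        frac, bits = F, []
        for _ in range(L):               # binary expansion of F_i, L bits
            frac *= 2
            bit = int(frac >= 1)
            bits.append(str(bit))
            frac -= bit
        codes.append("".join(bits))
        F += p
    return codes

probs = [Fraction(n, 128) for n in [27] * 2 + [9] * 6 + [3] * 6 + [2]]
for i, code in enumerate(shannon_code(probs), start=1):
    print(f"x{i}: {code}")
```

Running it on the 15 probabilities of the example should reproduce the CODE column of the table.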

- 25. SHANNON-FANO CODING. Arrange the probabilities in decreasing order. Split the list into two groups whose probability sums are as nearly equal as possible. Assign “1” to all members of the upper group and “0” to all members of the lower group. Subdivide each group into two sub-groups whose probability sums are again as nearly equal as possible. Assign “1” to all members of the upper sub-group and “0” to all members of the lower sub-group of each group. Continue until every message is in a sub-group of its own.

- 26. A source is generating alphabets A, B, C, …., H having following probabilities. P(xi) = { 1/2, 1/4, 1/16, 1/16, 1/32, 1/32, 1/32, 1/32} Find average code length, efficiency, p(0), p(1), and redundancy, Find efficiency if they are coded directly by 3 bit binary code.

- 27. Solution table (columns I, II, III show the digit assigned at the first three divisions):

  | Symbol | P(xi) | I | II | III | Code |
  |---|---|---|---|---|---|
  | A | 1/2 | 1 | | | 1 |
  | B | 1/4 | 0 | 1 | | 01 |
  | C | 1/16 | 0 | 0 | 1 | 0011 |
  | D | 1/16 | 0 | 0 | 1 | 0010 |
  | E | 1/32 | 0 | 0 | 0 | 00011 |
  | F | 1/32 | 0 | 0 | 0 | 00010 |
  | G | 1/32 | 0 | 0 | 0 | 00001 |
  | H | 1/32 | 0 | 0 | 0 | 00000 |

- 28. No shorter code is a prefix of a longer code. Ĺ = Σi pi Li = 2.125 bits/symbol. H(X) = 2.125 bits/symbol. η = H(X) / (Ĺ log D) = 2.125 / (2.125 log 2) = 1. Redundancy = 0. What does this mean? P(0) = average number of ‘0’s per code / average length of code = {Σi pi N(0)i} / {Σi pi Li}. P(1) = average number of ‘1’s per code / average length of code = {Σi pi N(1)i} / {Σi pi Li}.
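
As a quick numerical check of these quantities, the following sketch computes Ĺ, H(X), η, P(0) and P(1) for the code table above. The dictionary `table` and the variable names are illustrative only.

```python
from math import log2

# Symbol -> (probability, Shannon-Fano code) from the solution table
table = {
    "A": (1 / 2, "1"),      "B": (1 / 4, "01"),
    "C": (1 / 16, "0011"),  "D": (1 / 16, "0010"),
    "E": (1 / 32, "00011"), "F": (1 / 32, "00010"),
    "G": (1 / 32, "00001"), "H": (1 / 32, "00000"),
}

avg_len = sum(p * len(c) for p, c in table.values())         # sum p_i L_i
entropy = -sum(p * log2(p) for p, _ in table.values())       # H(X) in bits
efficiency = entropy / (avg_len * log2(2))                   # D = 2
p0 = sum(p * c.count("0") for p, c in table.values()) / avg_len
p1 = sum(p * c.count("1") for p, c in table.values()) / avg_len

print(avg_len, entropy, efficiency, p0, p1)
```

For this source the zeros and ones come out equally likely, which is what a fully efficient binary code requires.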

- 29. If coded directly into binary as 000, 001, 010, 011, 100, 101, 110, 111 Ĺ = 3 bits per symbol H(X) = 2.125 η = 2.125/(3 log2) = 0.7083 or 70.83% Redundancy = 29.2% ASSIGNMENT-- Find H(X) , Ĺ, η, p(0), p(1) for following probabilities. 0.3, 0.2, 0.15, 0.12, 0.1, 0.07, 0.04, 0.02. ( Sum should be 1.)

- 30. HUFFMAN CODING. Arrange the symbols in descending order of probability. For minimum Ĺ, L(i) ≥ L(i-1). If L(m) = L(m-1), the two codes should differ only in the last digit. Select the last two symbols and assign 0/1 to them. Form a composite symbol with probability {p(m) + p(m-1)}. Rearrange the reduced source of (m-1) symbols in the next column in descending order, keeping track of where the sum is placed. Assign 0/1 to the last two symbols in the second column and form a reduced source of (m-2) symbols in the third column. Continue until all messages are assigned 0/1. To read a code, track p(xi) through the columns from left to right, noting each assigned 0/1, and write the code from right to left.

- 31. Find Huffman code for a source with 8 alphabets with probabilities – 0.22, 0.2, 0.18, 0.15, 0.1, 0.08, 0.05, 0.02. Find H(X) , Ĺ, η, p(0), p(1) EXAMPLE (D = 2)

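- 32. First two reductions (in each column the last two entries are assigned 0 and 1 and then combined):

  | Message | 1st reduction | 2nd reduction |
  |---|---|---|
  | 0.22 | 0.22 | 0.22 |
  | 0.2 | 0.2 | 0.2 |
  | 0.18 | 0.18 | 0.18 |
  | 0.15 | 0.15 | 0.15 |
  | 0.1 | 0.1 | 0.15 |
  | 0.08 | 0.08 | 0.1 |
  | 0.05 | 0.07 | |
  | 0.02 | | |

  If the sum is equal to an already existing probability, the sum should be placed below it, as the sum comes from lower probabilities. Continue in the same way.

- 33. Complete reduction (columns I to VI are the successive reductions; in each column the last two entries are assigned 0 and 1):

  | Message | I | II | III | IV | V | VI | Code |
  |---|---|---|---|---|---|---|---|
  | 0.22 | 0.22 | 0.22 | 0.25 | 0.33 | 0.42 | 0.58 | 10 |
  | 0.2 | 0.2 | 0.2 | 0.22 | 0.25 | 0.33 | 0.42 | 11 |
  | 0.18 | 0.18 | 0.18 | 0.2 | 0.22 | 0.25 | | 000 |
  | 0.15 | 0.15 | 0.15 | 0.18 | 0.2 | | | 001 |
  | 0.1 | 0.1 | 0.15 | 0.15 | | | | 011 |
  | 0.08 | 0.08 | 0.1 | | | | | 0100 |
  | 0.05 | 0.07 | | | | | | 01010 |
  | 0.02 | | | | | | | 01011 |

  The last 2 codes have the same length and differ only in the last bit.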

- 34. SOLUTION H(X) = 2.75 bits per symbol Ĺ = 2.8 bits per symbol η = 98.2%
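
The binary Huffman procedure can be sketched with a priority queue, as below. This is an illustrative implementation, not the tabular method of the slides: ties may be broken differently, so individual codes can differ from the table, but the average length of any Huffman code for this source is the same. The names `huffman_lengths`, `avg_len` and `entropy` are assumptions.

```python
import heapq
from itertools import count
from math import log2

def huffman_lengths(probs):
    """Return the binary Huffman code length of each symbol."""
    tie = count()                         # tie-breaker so lists are never compared
    heap = [(p, next(tie), [i]) for i, p in enumerate(probs)]
    heapq.heapify(heap)
    lengths = [0] * len(probs)
    while len(heap) > 1:
        p1, _, s1 = heapq.heappop(heap)   # two least probable groups
        p2, _, s2 = heapq.heappop(heap)
        for i in s1 + s2:                 # every merge adds one digit
            lengths[i] += 1
        heapq.heappush(heap, (p1 + p2, next(tie), s1 + s2))
    return lengths

probs = [0.22, 0.2, 0.18, 0.15, 0.1, 0.08, 0.05, 0.02]
lengths = huffman_lengths(probs)
avg_len = sum(p * L for p, L in zip(probs, lengths))
entropy = -sum(p * log2(p) for p in probs)
print(lengths, round(avg_len, 2), round(entropy, 2), round(entropy / avg_len, 3))
```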

- 35. Find Huffman code with D = 4 for a source with 8 alphabets with probabilities – 0.22, 0.2, 0.18, 0.15, 0.1, 0.08, 0.05, 0.02. Find H(X) , Ĺ, η, p(0), p(1) EXAMPLE (D = 4)

- 36. D = 4. Each column group will have D messages, and 4 code digits will be assigned, say 0, 1, 2, 3 (for example 4 voltage levels). However, the first column group should have r messages, where r = m − k(D − 1) and k is the highest possible integer that keeps r positive. Here r = 8 − k(4 − 1); the largest possible k is 2, so r = 8 − 2(4 − 1) = 2. The first column group will have 2 symbols.

- 37. Solution table (the first group has 2 symbols, assigned 0 and 1; later groups have 4 symbols, assigned 0, 1, 2, 3):

  | Symbol | Probability | I | II | Code |
  |---|---|---|---|---|
  | A | 0.22 | 0.22 | 0.4 | 1 |
  | B | 0.2 | 0.2 | 0.22 | 2 |
  | C | 0.18 | 0.18 | 0.2 | 3 |
  | D | 0.15 | 0.15 | 0.18 | 00 |
  | E | 0.1 | 0.1 | | 01 |
  | F | 0.08 | 0.08 | | 02 |
  | G | 0.05 | 0.07 | | 030 |
  | H | 0.02 | | | 031 |

- 38. SOLUTION H(X) = 2.75 bits per symbol, Ĺ = 1.47 quaternary digits per symbol, η = H(X) / (Ĺ log2 4) = 93.5%

- 39. ASSIGNMENTS 1. We have one 2-alphabet source with p(0) = 0.25 and p(1) = 0.75. Find H(X), η . Now if we extend the source to S3 with alphabets {000, 001, 010, 011, 100, 101, 110, 111}, find probabilities for these symbols and find Huffman code for them. Find H(X), η . 2. Design Shannon-fano code and Huffman code for set of 10 messages with probabilities- { 0.2, 0.18, 0.12, 0.1, 0.1, 0.08, 0.06, 0.06, 0.06, 0.04} Find H(X), η . 3. Design Huffman code using D = 4 for 27/128, 27/128, 9/128, 9/128, 9/128, 9/128, 9/128, 9/128, 3/128, 3/128, 3/128, 3/128, 3/128, 3/128, 2/128.

- 40. ERROR CONTROL CODING. The probability of error for a particular signaling scheme is a function of the S/N at the receiver input and the data rate. In practical systems, the maximum signal power and the bandwidth of the channel are restricted. The noise power spectral density is also fixed for a particular operating environment. Parameters of the signaling scheme, such as the number and type of signals used, are chosen mainly to minimize the complexity and cost of the equipment.

- 41. Result of the constraints: it may not be possible to design a signaling scheme that yields an acceptable probability of error. The only practical alternative for reducing the probability of error is the use of error control coding. It is called Channel Coding.

- 42. CHANNEL ENCODER Channel encoder systematically adds digits to the transmitted message digits. Added digits convey no new information. Added digits help channel decoder to detect and correct errors in information digits. Error detection and correction lowers the overall probability of error.

- 43. CHANNEL ENCODER [Figure: block diagram. Input message (k bits, bit rate rb) → channel encoder → coded output (n bits, bit rate rc) → modulator → noisy channel → demodulator → coded output (n bits, bit rate rc) → channel decoder → output message (k bits, bit rate rb). Each coded block consists of k message bits and r check bits, n = k + r.]

- 44. ERROR CONTROL METHODS. Forward error correcting method: the channel decoder corrects the possible errors in the received sequence. Error detecting method: the decoder compares the received code with the fixed set of predetermined codes and accepts it only if it matches one of them. It requests retransmission if the received code does not match any of the set codes.

- 45. TYPES OF CODES BLOCK CODES: A block of k information bits is followed by a group of r check bits that are derived from block of information bits. At the receiver, the check bits are used to verify the information bits in information block preceding the check bits. CONVOLUTION CODES: Check bits are continuously interleaved with information bits The check bits verify the information bits not only in the block immediately preceding them but also in other blocks .

- 46. Definitions. Code dictionary: the total set of codes. Code vector: an individual code. Hamming distance d(X,Y) between two code vectors X and Y: the number of positions in which they differ, e.g. X = 101, Y = 110 gives d(X,Y) = 2. Minimum distance dmin: the smallest Hamming distance over all possible pairs of codes in the code dictionary.

- 47. CONDITION FOR ERROR DETECTION AND CORRECTION. Error detection is always possible if the number of transmission errors in a code word is less than dmin; otherwise the code word may change into another code vector. Example: X = 000, Y = 111, dmin = 3. Single or double errors may give 001, 011, 101, etc., so the occurrence of errors can be detected. If the number of errors = dmin = 3, the code word turns into the other code word and the error cannot be detected.

- 48. CONDITION FOR ERROR DETECTION AND CORRECTION. dmin = 2: can detect a single error. dmin = 3: can correct a single error. dmin = 4: can correct a single error and detect a double error. In general: 1. dmin ≥ l + 1 for l-error detection. 2. dmin ≥ 2t + 1 for t-error correction. 3. dmin ≥ t + l + 1 with l > t for l-error detection together with t-error correction.
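
The definitions of Hamming distance and minimum distance, and the general conditions above, translate directly into code. The sketch below uses hypothetical helper names and treats code vectors as strings of 0s and 1s.

```python
from itertools import combinations

def hamming_distance(x, y):
    """Number of positions in which two equal-length code vectors differ."""
    return sum(a != b for a, b in zip(x, y))

def minimum_distance(dictionary):
    """Smallest Hamming distance over all pairs of codes."""
    return min(hamming_distance(x, y) for x, y in combinations(dictionary, 2))

def capability(dmin):
    """Errors detectable (dmin >= l + 1) and correctable (dmin >= 2t + 1)."""
    return {"detect": dmin - 1, "correct": (dmin - 1) // 2}

print(hamming_distance("101", "110"))   # 2
dmin = minimum_distance(["000", "111"])
print(dmin, capability(dmin))           # 3 {'detect': 2, 'correct': 1}
```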

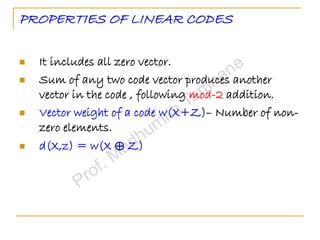

- 49. PROPERTIES OF LINEAR CODES. The code includes the all-zero vector. The mod-2 sum of any two code vectors is another vector in the code. The weight w(X) of a code vector is its number of non-zero elements, and d(X,Z) = w(X ⊕ Z).

- 50. Single error correcting Hamming code using generator polynomial. k data bits are converted to n code bits (n > k). The n − k check bits are generated as linear combinations of the message bits and appended to the data. In the code, the first k bits are the same as the data bits. D = {d1, d2, d3, …, dk} is a k-tuple vector of 0s and 1s. $C_i = d_i$ for i = 1 to k, and $C_{k+1} = p_{11}d_1 + p_{21}d_2 + \dots + p_{k1}d_k$, $C_{k+2} = p_{12}d_1 + p_{22}d_2 + \dots + p_{k2}d_k$, …, $C_n = p_{1,n-k}d_1 + p_{2,n-k}d_2 + \dots + p_{k,n-k}d_k$. The $p_{ij}$ are 0 or 1 and all additions are mod-2.

- 51. General formula: $$[C_1, C_2, C_3, \dots, C_n] = [d_1, d_2, d_3, \dots, d_k] \begin{bmatrix} 1 & 0 & 0 & \cdots & 0 & p_{11} & p_{12} & \cdots & p_{1,n-k} \\ 0 & 1 & 0 & \cdots & 0 & p_{21} & p_{22} & \cdots & p_{2,n-k} \\ 0 & 0 & 1 & \cdots & 0 & p_{31} & p_{32} & \cdots & p_{3,n-k} \\ \vdots & & & & \vdots & \vdots & & & \vdots \\ 0 & 0 & 0 & \cdots & 1 & p_{k1} & p_{k2} & \cdots & p_{k,n-k} \end{bmatrix}$$

- 52. General Formula C = D G G = [ Ik P] Ik is identity matrix of order k P is an arbitrary matrix ( k by n-k). Important step is choice of P.

- 53. The generator matrix for a (6,3) block code is given below. Find all code vectors. $$G = \begin{bmatrix} 1 & 0 & 0 & 0 & 1 & 1 \\ 0 & 1 & 0 & 1 & 0 & 1 \\ 0 & 0 & 1 & 1 & 1 & 0 \end{bmatrix}$$

- 54. SOLUTION. k = 3, n = 6. The possible data messages are 000, 001, 010, 011, 100, 101, 110, 111, so we have to find all 8 code vectors. Let d = 101: $$C = DG = (1\ 0\ 1) \begin{bmatrix} 1 & 0 & 0 & 0 & 1 & 1 \\ 0 & 1 & 0 & 1 & 0 & 1 \\ 0 & 0 & 1 & 1 & 1 & 0 \end{bmatrix} = (1\ 0\ 1\ 1\ 0\ 1)$$

- 55. SOLUTION

  | d | C |
  |---|---|
  | 0 0 0 | 0 0 0 0 0 0 |
  | 0 0 1 | 0 0 1 1 1 0 |
  | 0 1 0 | 0 1 0 1 0 1 |
  | 0 1 1 | 0 1 1 0 1 1 |
  | 1 0 0 | 1 0 0 0 1 1 |
  | 1 0 1 | 1 0 1 1 0 1 |
  | 1 1 0 | 1 1 0 1 1 0 |
  | 1 1 1 | 1 1 1 0 0 0 |
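
The product C = DG with mod-2 arithmetic is easy to sketch in plain Python. The function name `encode` is an assumption; G is the (6,3) generator matrix of the example.

```python
from itertools import product

G = [
    [1, 0, 0, 0, 1, 1],
    [0, 1, 0, 1, 0, 1],
    [0, 0, 1, 1, 1, 0],
]

def encode(d, G):
    """Return the code vector C = d G, with all additions mod 2."""
    n = len(G[0])
    return [sum(d[i] * G[i][j] for i in range(len(d))) % 2 for j in range(n)]

# Code dictionary: one code vector for each of the 2**k data messages
dictionary = {d: encode(d, G) for d in product([0, 1], repeat=len(G))}
for d, c in dictionary.items():
    print(d, c)
```

The smallest weight among the non-zero code vectors equals dmin for a linear code; for this dictionary it is 3, so single errors can be corrected.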

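- 56. Checking for errors. Assume a parity check matrix H as $$H = \begin{bmatrix} p_{11} & p_{21} & p_{31} & \cdots & p_{k1} & 1 & 0 & 0 & \cdots & 0 \\ p_{12} & p_{22} & p_{32} & \cdots & p_{k2} & 0 & 1 & 0 & \cdots & 0 \\ p_{13} & p_{23} & p_{33} & \cdots & p_{k3} & 0 & 0 & 1 & \cdots & 0 \\ \vdots & & & & \vdots & \vdots & & & & \vdots \\ p_{1,n-k} & p_{2,n-k} & p_{3,n-k} & \cdots & p_{k,n-k} & 0 & 0 & 0 & \cdots & 1 \end{bmatrix} = [P^T \; I_{n-k}]$$ which is an (n−k) × n matrix.

- 57. Checking for errors. RULE: C is a code word in the (n,k) block code generated by $G = [I_k \; P]$ if and only if $C H^T = 0$.

- 58. Checking for errors. Find the product of the received code with $H^T$. If the product is 0, there is no error. If not, let the received word be R = C + E. The error syndrome is $S = R H^T = (C + E) H^T = C H^T + E H^T = 0 + E H^T$, so $S = E H^T$.

- 59. Taking the same example, form the matrices H and $H^T$. For the (6,3) block code with $$G = \begin{bmatrix} 1 & 0 & 0 & 0 & 1 & 1 \\ 0 & 1 & 0 & 1 & 0 & 1 \\ 0 & 0 & 1 & 1 & 1 & 0 \end{bmatrix}$$ we get $$H = \begin{bmatrix} 0 & 1 & 1 & 1 & 0 & 0 \\ 1 & 0 & 1 & 0 & 1 & 0 \\ 1 & 1 & 0 & 0 & 0 & 1 \end{bmatrix}$$ (n−k rows, n columns) and $$H^T = \begin{bmatrix} 0 & 1 & 1 \\ 1 & 0 & 1 \\ 1 & 1 & 0 \\ 1 & 0 & 0 \\ 0 & 1 & 0 \\ 0 & 0 & 1 \end{bmatrix}$$ (n rows, n−k columns).

- 60. Finding $C H^T$ for a correct code, using mod-2 addition. C = 1 0 1 1 0 1 (no error): $$C H^T = [1\ 0\ 1\ 1\ 0\ 1] \begin{bmatrix} 0 & 1 & 1 \\ 1 & 0 & 1 \\ 1 & 1 & 0 \\ 1 & 0 & 0 \\ 0 & 1 & 0 \\ 0 & 0 & 1 \end{bmatrix} = [0\ 0\ 0]$$

- 61. Finding $C H^T$ for a CORRUPTED code, using mod-2 addition. C = 1 0 1 0 0 1 (error in the 4th bit): $$C H^T = [1\ 0\ 1\ 0\ 0\ 1] \begin{bmatrix} 0 & 1 & 1 \\ 1 & 0 & 1 \\ 1 & 1 & 0 \\ 1 & 0 & 0 \\ 0 & 1 & 0 \\ 0 & 0 & 1 \end{bmatrix} = [1\ 0\ 0]$$ The syndrome equals the 4th row of $H^T$. Try introducing errors in other bits and in other codes as well.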
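
Syndrome checking and single-error correction for the same (6,3) code can be sketched as follows. The helper names are made up, and the correction step assumes at most one bit is in error, so that the syndrome matches exactly one row of the transposed parity check matrix.

```python
G = [
    [1, 0, 0, 0, 1, 1],
    [0, 1, 0, 1, 0, 1],
    [0, 0, 1, 1, 1, 0],
]
k, n = len(G), len(G[0])

# H^T = [P ; I_(n-k)]: the k rows of P followed by an identity matrix
P = [row[k:] for row in G]
identity = [[int(i == j) for j in range(n - k)] for i in range(n - k)]
HT = P + identity

def syndrome(r):
    """S = r H^T with mod-2 addition."""
    return [sum(r[i] * HT[i][j] for i in range(n)) % 2 for j in range(n - k)]

def correct_single_error(r):
    """Flip the bit whose H^T row equals the syndrome (single-error assumption)."""
    s = syndrome(r)
    fixed = list(r)
    if any(s) and s in HT:
        fixed[HT.index(s)] ^= 1
    return fixed

print(syndrome([1, 0, 1, 1, 0, 1]))              # [0, 0, 0]
print(syndrome([1, 0, 1, 0, 0, 1]))              # [1, 0, 0]
print(correct_single_error([1, 0, 1, 0, 0, 1]))  # [1, 0, 1, 1, 0, 1]
```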

- 62. ASSIGNMENT: The generator matrix for a (7,4) block code is given below. Find all code vectors. $$G = \begin{bmatrix} 1 & 0 & 0 & 0 & 1 & 1 & 1 \\ 0 & 1 & 0 & 0 & 1 & 1 & 0 \\ 0 & 0 & 1 & 0 & 1 & 0 & 1 \\ 0 & 0 & 0 & 1 & 0 & 1 & 1 \end{bmatrix}$$ Find the 16 code words. Show that for a correct code the error syndrome is zero. Show how this code corrects single errors at any position. NOTE: All codes are self checked.

- 63. Generation of H or HT matrix RULE: No row should have all zero in HT . Each row should be distinct. Last (n-k) rows should have identity matrix of order (n-k). First k rows should have two or more than two number of 1’s.

- 64. Generation of H or $H^T$ matrix. RULE contd.: The number of bits in each row of $H^T$ is (n−k), so $2^{n-k}$ combinations are possible for filling the rows of $H^T$. The all-zeros combination is to be avoided, so $2^{n-k} - 1$ combinations are available from which to choose n rows. The combinations with a single one occupy the lower (n−k) rows. Out of the remaining combinations, we can choose any k and fill the top k rows.

- 65. Generation of G matrix if only k is given. $2^{n-k} - 1$ combinations are available from which to choose n rows, so $2^{n-k} - 1 \ge n$, i.e. $2^{n-k} \ge n + 1$, $n - k \ge \log_2(n+1)$, $n \ge k + \log_2(n+1)$. Find the minimum value of n for which this is true.

- 66. EXAMPLE: Design a linear block code for a minimum distance of 3 and a message block size of 3. Solution: $n \ge k + \log_2(n+1)$. The smallest n that satisfies this is n = 6, so n − k = 3. The $2^{n-k}$ possible combinations are 000, 001, 010, 011, 100, 101, 110, 111. All zeros is to be avoided. Combinations with a single one are to be avoided for the upper k rows. The remaining possible combinations are 011, 101, 110, 111. Contd.

- 67. Choose any 3 of the above 4 combinations in any order and fill the top k rows of $H^T$. The bottom n−k rows of $H^T$ form an identity matrix. Generate the G matrix from $H^T$. Sample: $$G = \begin{bmatrix} 1 & 0 & 0 & 0 & 1 & 1 \\ 0 & 1 & 0 & 1 & 0 & 1 \\ 0 & 0 & 1 & 1 & 1 & 1 \end{bmatrix}, \quad H^T = \begin{bmatrix} 0 & 1 & 1 \\ 1 & 0 & 1 \\ 1 & 1 & 1 \\ 1 & 0 & 0 \\ 0 & 1 & 0 \\ 0 & 0 & 1 \end{bmatrix}$$ Find the code words.

- 68. ASSIGNMENT: Design a linear block codes for minimum distance of 3 and message block size of 4. Check for correctness of codes. Introduce single errors at various position and show that this code can correct single errors.

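- 69. Coder for Linear Block Code (Single error correcting Hamming code), for $$G = \begin{bmatrix} 1 & 0 & 0 & 0 & 1 & 1 \\ 0 & 1 & 0 & 1 & 0 & 1 \\ 0 & 0 & 1 & 1 & 1 & 0 \end{bmatrix}$$ [Figure: encoder circuit. The input X1 X2 X3 feeds three mod-2 adders that form P1, P2, P3; a MUX delivers the output P3 P2 P1 X3 X2 X1.]

- 70. Decoder for Linear Block Code (Single error correcting Hamming code), for $$G = \begin{bmatrix} 1 & 0 & 0 & 0 & 1 & 1 \\ 0 & 1 & 0 & 1 & 0 & 1 \\ 0 & 0 & 1 & 1 & 1 & 0 \end{bmatrix}, \quad H^T = \begin{bmatrix} 0 & 1 & 1 \\ 1 & 0 & 1 \\ 1 & 1 & 0 \\ 1 & 0 & 0 \\ 0 & 1 & 0 \\ 0 & 0 & 1 \end{bmatrix}$$ The received word is C = X1 X2 X3 P1 P2 P3. Each row of $H^T$ is the syndrome (e1 e2 e3) produced by a single error in the corresponding bit; for example e1 = X2 ⊕ X3 ⊕ P1.

  | Bit in error | e1 | e2 | e3 |
  |---|---|---|---|
  | X1 | 0 | 1 | 1 |
  | X2 | 1 | 0 | 1 |
  | X3 | 1 | 1 | 0 |
  | P1 | 1 | 0 | 0 |
  | P2 | 0 | 1 | 0 |
  | P3 | 0 | 0 | 1 |
  | No error | 0 | 0 | 0 |

- 71. Decoder for Linear Block Code (Single error correcting Hamming code), using the syndrome table of the previous slide. [Figure: decoder circuit. A DEMUX separates X1, X2, X3, P1, P2, P3; mod-2 adders form e1, e2, e3; AND gates and output mod-2 adders deliver the corrected X1, X2, X3.]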

- 72. CYCLIC REDUNDANCY CODES CRC Sub class of linear block codes. Encoding and syndrome calculation can be easily implemented by using simple shift registers. Has fair amount of mathematical structure to achieve error correcting properties.

- 73. CYCLIC REDUNDANCY CODES (CRC). An (n,k) linear block code C is called a cyclic code if it has the following property: if $C^{(0)} = (C_0, C_1, C_2, \dots, C_{n-1})$ is a code vector of C, then $C^{(1)} = (C_1, C_2, C_3, \dots, C_{n-1}, C_0)$, obtained by shifting $C^{(0)}$ cyclically, is also a valid code vector of C. Equivalently, $(C_{n-1}, C_0, C_1, \dots, C_{n-2})$ is also a valid code vector. This property allows us to write a code vector as a polynomial $C(x) = C_0 + C_1 x + C_2 x^2 + \dots + C_{n-1} x^{n-1}$, where $C_0, C_1, C_2, \dots, C_{n-1}$ can be “0” or “1”.

- 74. CRC Generation, NON SYSTEMATIC CODES. RULE: If g(x) is a polynomial of degree (n−k) and is a factor of $x^n + 1$, then g(x) generates an (n,k) cyclic code in which the code polynomial C(x) for a data vector $D = (d_0, d_1, d_2, \dots, d_{k-1})$ is generated by $C(x) = D(x)\,g(x)$, where $D(x) = d_0 + d_1 x + d_2 x^2 + \dots + d_{k-1} x^{k-1}$.

- 75. The generator polynomial for a (7,4) cyclic code is $g(x) = 1 + x + x^3$. Find the 16 non-systematic code words. Example: D = 1010, $D(x) = 1 + x^2$, $C(x) = D(x)\,g(x) = (1 + x^2)(1 + x + x^3)$. Multiplication and addition are binary, mod-2, so $C(x) = 1 + 1\cdot x + 1\cdot x^2 + 0\cdot x^3 + 0\cdot x^4 + 1\cdot x^5 + 0\cdot x^6$ and C = 1 1 1 0 0 1 0. Find all 16 codes and check cyclicity.

- 76. CODES

  | No. | Code | No. | Code |
  |---|---|---|---|
  | 0 | 0 0 0 0 0 0 0 | 8 | 1 1 0 1 0 0 0 |
  | 1 | 0 0 0 1 1 0 1 | 9 | 1 1 0 0 1 0 1 |
  | 2 | 0 0 1 1 0 1 0 | 10 | 1 1 1 0 0 1 0 |
  | 3 | 0 0 1 0 1 1 1 | 11 | 1 1 1 1 1 1 1 |
  | 4 | 0 1 1 0 1 0 0 | 12 | 1 0 1 1 1 0 0 |
  | 5 | 0 1 1 1 0 0 1 | 13 | 1 0 1 0 0 0 1 |
  | 6 | 0 1 0 1 1 1 0 | 14 | 1 0 0 0 1 1 0 |
  | 7 | 0 1 0 0 0 1 1 | 15 | 1 0 0 1 0 1 1 |
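
Non-systematic encoding is a polynomial multiplication with mod-2 coefficients. In the sketch below polynomials are lists of coefficients with the lowest degree first, matching the way the slides write D and C; the function names are assumptions.

```python
from itertools import product

def poly_mul_gf2(a, b):
    """Multiply two polynomials with mod-2 coefficients (lowest degree first)."""
    out = [0] * (len(a) + len(b) - 1)
    for i, ai in enumerate(a):
        if ai:
            for j, bj in enumerate(b):
                out[i + j] ^= bj
    return out

g = [1, 1, 0, 1]                       # g(x) = 1 + x + x^3

def encode_nonsystematic(d):
    """C(x) = D(x) g(x) for a 4-bit data vector d = (d0, d1, d2, d3)."""
    return poly_mul_gf2(list(d), g)

print(encode_nonsystematic([1, 0, 1, 0]))   # [1, 1, 1, 0, 0, 1, 0]

# Cyclicity check: every cyclic shift of a code word is again a code word
codewords = {tuple(encode_nonsystematic(d)) for d in product([0, 1], repeat=4)}
print(all(c[-1:] + c[:-1] in codewords for c in codewords))   # True
```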

- 77. Error Syndrome. If R(x) is the received code word, the syndrome S(x) is the remainder of R(x) / g(x). If R(x) has no error, S(x) will be zero. If not, it will point to the position of the error: an error in a particular position i always gives the same remainder, whatever the code word.

- 78. Example: let the code be 1110010, i.e. $1 + x + x^2 + x^5$. Divide by $g(x) = x^3 + x + 1$: subtracting $x^2 g(x) = x^5 + x^3 + x^2$ from $x^5 + x^2 + x + 1$ leaves $x^3 + x + 1$, and subtracting $g(x)$ leaves 0. The quotient is $x^2 + 1$ and the remainder is zero, so there is no error. The quotient is the desired data: $1 + x^2$, or 1010. Introduce an error in every position of every code and observe the remainder.

- 79. Let the code have an error in the 3rd bit from the left, giving 1100010, i.e. $1 + x + x^5$. Divide by $g(x) = x^3 + x + 1$: subtracting $x^2 g(x) = x^5 + x^3 + x^2$ from $x^5 + x + 1$ leaves $x^3 + x^2 + x + 1$, and subtracting $g(x)$ leaves $x^2$. The quotient is $x^2 + 1$ and the remainder is $x^2$. In the dictionary generated by $x^3 + x + 1$, an error in the 3rd bit will always give the remainder $x^2$, so a data bank of remainders can be created. Divide the corrected code by g(x) again to get the data (quotient).
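
The two long divisions above can be checked with a small mod-2 polynomial division routine. This is a sketch with an assumed function name; coefficients are again listed lowest degree first.

```python
def poly_divmod_gf2(num, den):
    """Divide num by den with mod-2 coefficients; return (quotient, remainder)."""
    rem = list(num)
    deg_den = len(den) - 1
    quot = [0] * max(len(num) - deg_den, 1)
    for shift in range(len(num) - len(den), -1, -1):
        if rem[shift + deg_den]:          # leading term present: subtract den
            quot[shift] = 1
            for j, dj in enumerate(den):
                rem[shift + j] ^= dj
    return quot, rem[:deg_den]

g = [1, 1, 0, 1]                                     # g(x) = 1 + x + x^3
print(poly_divmod_gf2([1, 1, 1, 0, 0, 1, 0], g))     # quotient 1010, remainder 000
print(poly_divmod_gf2([1, 1, 0, 0, 0, 1, 0], g))     # remainder 001, i.e. x^2
```

Collecting the remainder for an error in each of the 7 positions gives the lookup table ("data bank") mentioned above.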

- 80. ASSIGNMENT: The generator polynomial for a (7,3) cyclic code is $g(x) = 1 + x^2 + x^3 + x^4$. Find the 8 non-systematic code words. Check cyclicity and show that the code can correct a single error.

- 81. CRC Generation, SYSTEMATIC CODES. RULE: If g(x) is a polynomial of degree (n−k) and is a factor of $x^n + 1$, then g(x) generates an (n,k) cyclic code in which the code polynomial C(x) for a data vector $D = (d_0, d_1, d_2, \dots, d_{k-1})$ is generated as $C(x) = r(x) + x^{n-k} D(x)$, where $x^{n-k} D(x) = g(x)\,q(x) + r(x)$; q(x) is the quotient and r(x) is the remainder, of degree less than n−k.

- 82. The generator polynomial for a (7,4) cyclic code is $g(x) = 1 + x + x^3$. Find the 16 systematic code words. Example: D = 1110, $D(x) = 1 + x + x^2$, $x^{n-k} D(x) = x^3 D(x) = x^3 + x^4 + x^5$. Divide by $g(x) = x^3 + x + 1$: subtracting $x^2 g(x) = x^5 + x^3 + x^2$ from $x^5 + x^4 + x^3$ leaves $x^4 + x^2$, and subtracting $x\,g(x) = x^4 + x^2 + x$ leaves $x$. So $q(x) = x^2 + x$ and $r(x) = x$.

- 83. CODE: $C(x) = r(x) + x^{n-k} D(x) = 0 + 1\cdot x + 0\cdot x^2 + 1\cdot x^3 + 1\cdot x^4 + 1\cdot x^5 + 0\cdot x^6$, so C = 0 1 0 1 1 1 0. The same thing can be achieved if approached from the right. Find all code words and check cyclicity.

- 84. CODES (the first 3 bits are the remainder, the last 4 bits are the data)

  | No. | Code | No. | Code |
  |---|---|---|---|
  | 0 | 0 0 0 0 0 0 0 | 8 | 1 1 0 1 0 0 0 |
  | 1 | 1 0 1 0 0 0 1 | 9 | 0 1 1 1 0 0 1 |
  | 2 | 1 1 1 0 0 1 0 | 10 | 0 0 1 1 0 1 0 |
  | 3 | 0 1 0 0 0 1 1 | 11 | 1 0 0 1 0 1 1 |
  | 4 | 0 1 1 0 1 0 0 | 12 | 1 0 1 1 1 0 0 |
  | 5 | 1 1 0 0 1 0 1 | 13 | 0 0 0 1 1 0 1 |
  | 6 | 1 0 0 0 1 1 0 | 14 | 0 1 0 1 1 1 0 |
  | 7 | 0 0 1 0 1 1 1 | 15 | 1 1 1 1 1 1 1 |
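
Systematic encoding needs only the remainder of the shifted data divided by g(x). The sketch below, with assumed function names, builds the code word as remainder bits followed by data bits, lowest degree first.

```python
def remainder_gf2(num, den):
    """Remainder of num / den with mod-2 coefficients (lowest degree first)."""
    rem = list(num)
    deg_den = len(den) - 1
    for shift in range(len(num) - len(den), -1, -1):
        if rem[shift + deg_den]:
            for j, dj in enumerate(den):
                rem[shift + j] ^= dj
    return rem[:deg_den]

g = [1, 1, 0, 1]                       # g(x) = 1 + x + x^3, so n - k = 3

def encode_systematic(d):
    """C(x) = r(x) + x^(n-k) D(x), with r(x) the remainder of x^(n-k) D(x) / g(x)."""
    shifted = [0] * (len(g) - 1) + list(d)      # multiply D(x) by x^(n-k)
    return remainder_gf2(shifted, g) + list(d)

print(encode_systematic([1, 1, 1, 0]))   # [0, 1, 0, 1, 1, 1, 0]
print(encode_systematic([0, 0, 0, 1]))   # [1, 0, 1, 0, 0, 0, 1]
```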

- 85. ASSIGNMENT: The generator polynomial for a (7,3) cyclic code is $g(x) = 1 + x^2 + x^3 + x^4$. Find the 8 systematic code words. Check cyclicity and show that the code can correct a single error. NOTE: Error syndrome calculation is the same as for non-systematic codes.

- 86. MAJORITY LOGIC CODING AND DECODING Coding and decoding using shift registers.

- 87. Representation of $g(x) = 1 + x + x^3$ using shift registers. Three shift registers indicate the x, x² and x³ positions. x³ is added to the input (i.e. 1) and to x. For a product, input the multiplicand from the right with the MSB first; the product comes out from the left, MSB first. [Figure: shift registers M1, M2, M3 wired for g(x); data input on the right (MSB in first), product output on the left (MSB out first).]

- 88. Representation of $g(x) = 1 + x + x^3$ using shift registers. For division, input the dividend with the LSB first; the quotient comes out LSB first. The remainder is what remains in the shift registers after the last bit of data leaves the last register. [Figure: shift registers M1, M2, M3 wired for g(x); dividend input (LSB in first) and quotient output.]

- 89. Generation of non systematic CRC using shift registers. The CRC is generated by multiplying the input by g(x). Input the data bits from the right one by one, MSB first; the code comes out from the left, MSB first. [Figure: shift registers M1, M2, M3 wired for g(x); data input (MSB in first) and CRC code output (MSB out first).]

- 90. Let the input be 1101 (data input MSB in first, CRC code MSB out first):

  | OUT | x1 | x2 | x3 | IN |
  |---|---|---|---|---|
  | 1 | 1 | 0 | 1 | 1 |
  | 0 | 1 | 1 | 1 | 1 |
  | 1 | 1 | 1 | 0 | 0 |
  | 0 | 0 | 0 | 1 | 1 |
  | 0 | 0 | 1 | 0 | 0 |
  | 0 | 1 | 0 | 0 | 0 |
  | 1 | 0 | 0 | 0 | 0 |

  The code is 1 0 1 0 0 0 1.

- 91. Input 1011 (data input MSB in first, CRC code MSB out first):

  | OUT | x1 | x2 | x3 | IN |
  |---|---|---|---|---|
  | 1 | 1 | 0 | 1 | 1 |
  | 1 | 0 | 1 | 0 | 0 |
  | 1 | 0 | 0 | 1 | 1 |
  | 1 | 1 | 1 | 1 | 1 |
  | 1 | 1 | 1 | 0 | 0 |
  | 1 | 1 | 0 | 0 | 0 |
  | 1 | 0 | 0 | 0 | 0 |

  The code is 1 1 1 1 1 1 1. Try other codes.

- 92. Generation of systematic CRC using shift registers. The CRC is generated by dividing the input data, shifted by $x^{n-k}$, by g(x). Input the data bits from the left one by one, LSB first. Input additional zeroes so that the MSB of the data leaves the last shift register. Note the remainder. Join the remainder to the data to make the code.

- 93. Let the input be 1110 (data in, LSB in first):

  | IN | x1 | x2 | x3 | OUT |
  |---|---|---|---|---|
  | 0 | 0 | 0 | 0 | 0 |
  | 1 | 1 | 0 | 0 | 0 |
  | 1 | 1 | 1 | 0 | 0 |
  | 1 | 1 | 1 | 1 | 1 |
  | 0 | 1 | 0 | 1 | 1 |
  | 0 | 1 | 0 | 0 | 0 |
  | 0 | 0 | 1 | 0 | 0 |

  The remainder is 0 1 0, so the code is 0 1 0 1 1 1 0.

- 94. Let the input be 1010 (data in, LSB in first):

  | IN | x1 | x2 | x3 |
  |---|---|---|---|
  | 0 | 0 | 0 | 0 |
  | 1 | 1 | 0 | 0 |
  | 0 | 0 | 1 | 0 |
  | 1 | 1 | 0 | 1 |
  | 0 | 1 | 0 | 0 |
  | 0 | 0 | 1 | 0 |
  | 0 | 0 | 0 | 1 |

  The remainder is 0 0 1, so the code is 0 0 1 1 0 1 0. Try other codes.

- 95. Detection of systematic and non-systematic CRC using shift registers. CRC is decoded by dividing code by g(x) . Input the code bits from left one by one with LSB input first . Note the remainder . If remainder is all zeroes, there is no error in the code. If not, note the remainder. Compare it to syndrome calculated using polynomials.

- 96. Let the input be 0111001 (code in, LSB in first):

  | IN | x1 | x2 | x3 |
  |---|---|---|---|
  | 1 | 1 | 0 | 0 |
  | 0 | 0 | 1 | 0 |
  | 0 | 0 | 0 | 1 |
  | 1 | 0 | 1 | 0 |
  | 1 | 1 | 0 | 1 |
  | 1 | 0 | 0 | 0 |
  | 0 | 0 | 0 | 0 |

  The remainder is zero: no error. Try 1111111 with no error.

- 97. Let the code be 0111001 with an error in the 1st bit from the right, so R = 0111000 (code in, LSB in first):

  | IN | x1 | x2 | x3 |
  |---|---|---|---|
  | 0 | 0 | 0 | 0 |
  | 0 | 0 | 0 | 0 |
  | 0 | 0 | 0 | 0 |
  | 1 | 1 | 0 | 0 |
  | 1 | 1 | 1 | 0 |
  | 1 | 1 | 1 | 1 |
  | 0 | 1 | 0 | 1 |

  The remainder is 101: the 1st bit from the right is in error. Try 1111111 with an error in the 1st bit from the right.

- 98. Take the codes 0111001 and 1111111. Introduce errors in all positions and compare the remainders. This method is the same for systematic and non-systematic codes. All properties of CRC hold good for both kinds of code.

- 99. Self correction using majority logic decoding, USING ROM. [Figure: the received word R(x) enters the dividing shift register M1, M2, M3 and a 7 bit buffer shift register; the remainder is used as the address into a set of 8 ROM words of 7 bits each, which supplies E(x); the output is the corrected code C(x).]

- 100. How does it correct? Non systematic code: if there is an error, first correct the error to get C. To correct a last-position error, we must EX-OR R(x) with 0000001; the remainder for a last-bit error is used as the address of the memory location where 0000001 is stored, and so on for the other positions. Then divide C by g(x) as before. The output of M3 gives the data after two bits (LSB out first). Refer back. Check with new combinations: ASSIGNMENT.

- 101. How does it correct? Systematic code: if there is an error, first correct the error to get C. To correct a last-position error, we must EX-OR R(x) with 0000001; the remainder for a last-bit error is used as the address of the memory location where 0000001 is stored, and so on for the other positions. The last 4 bits of the corrected code are the data directly. Refer back. Check with new combinations: ASSIGNMENT.

- 102. Self correction using majority logic decoding, USING RING COUNTER. [Figure: the dividing shift register M1, M2, M3 is checked for 000 at the end of the 7th bit and for 100 from the 8th bit onward; a shift ring counter (count check) and a buffer register deliver the corrected code C.]

- 103. Let the code be 0111001 with an error in the 1st bit from the right, so R = 0111000. After the 7 code bits, input more zeroes:

  | IN | x1 | x2 | x3 |
  |---|---|---|---|
  | 0 | 0 | 0 | 0 |
  | 0 | 0 | 0 | 0 |
  | 0 | 0 | 0 | 0 |
  | 1 | 1 | 0 | 0 |
  | 1 | 1 | 1 | 0 |
  | 1 | 1 | 1 | 1 |
  | 0 | 1 | 0 | 1 |
  | 0 | 1 | 0 | 0 |

  1 zero input gives 100: the error is in the 1st bit from the right.

- 104. Let the code be 0010111 with an error in the 5th bit from the right, so R = 0000111. After the 7 code bits, input more zeroes:

  | IN | x1 | x2 | x3 |
  |---|---|---|---|
  | 1 | 1 | 0 | 0 |
  | 1 | 1 | 1 | 0 |
  | 1 | 1 | 1 | 1 |
  | 0 | 1 | 0 | 1 |
  | 0 | 1 | 0 | 0 |
  | 0 | 0 | 1 | 0 |
  | 0 | 0 | 0 | 1 |
  | 0 | 1 | 1 | 0 |
  | 0 | 0 | 1 | 1 |
  | 0 | 1 | 1 | 1 |
  | 0 | 1 | 0 | 1 |
  | 0 | 1 | 0 | 0 |

  5 zero inputs give 100: the error is in the 5th bit from the right.
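
The register tables above can be reproduced by simulating the dividing shift register for g(x) = 1 + x + x³. The update rule used here (x3 fed back into x1 and x2) is inferred from the tables, not from the original circuit drawing, and the function names are assumptions. Code words are written as on the slides and fed in starting from the rightmost bit.

```python
def step(state, bit):
    """One clock of the dividing register: x3 feeds back into x1 and x2."""
    x1, x2, x3 = state
    return (bit ^ x3, x1 ^ x3, x2)

def remainder(word):
    """Register contents (x1, x2, x3) after all bits of `word` have entered."""
    state = (0, 0, 0)
    for ch in reversed(word):            # rightmost bit enters first
        state = step(state, int(ch))
    return state

def locate_single_error(word):
    """Return the error position counted from the right, or None if no error.

    Zeroes are clocked in after the 7th bit until the register reads 100;
    the number of zeroes needed is the position (single-error assumption).
    """
    state = remainder(word)
    if state == (0, 0, 0):
        return None
    shifts = 0
    while True:
        state = step(state, 0)
        shifts += 1
        if state == (1, 0, 0):
            return shifts

print(remainder("0111001"), locate_single_error("0111001"))   # (0, 0, 0) None
print(remainder("0111000"), locate_single_error("0111000"))   # (1, 0, 1) 1
print(remainder("0000111"), locate_single_error("0000111"))   # (0, 0, 1) 5
```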

- 105. CONVOLUTION CODES. Difference between block codes and convolution codes. Block code: the block of n digits generated by the encoder in a particular time unit depends only on the block of k input message digits within that time unit. Used mainly for error detection, as data are transmitted in blocks. Convolution code: the block of n digits generated by the encoder in a particular time unit depends not only on the block of k input message digits within that time unit but also on the previous (N-1) blocks of message digits (N>1). Mainly used for error correction.

- 106. TREE CODES and TRELLIS CODES. The input stream is broken into m segments of ko symbols each. Each segment is an information frame. Encoder = memory + logic circuit. The memory stores the m most recent information frames at a time, a total of mko information symbols. At each input, the logic circuit computes a codeword. For each information frame (ko symbols) we get a codeword frame (no symbols). The same information frame may not generate the same codeword frame. Why?

- 107. TREE CODES [Figure: shift-register encoder. Information frames of ko symbols enter a memory of m frames feeding a logic block that outputs codeword frames of no symbols.] The constraint length of a shift register encoder is the number of symbols it can store in its memory: v = mko.

- 108. TREE CODES: some facts and definitions. Wordlength of a shift register encoder: k = (m+1)ko. Blocklength of a shift register encoder: n = (m+1)no. Rate of an (no,ko) tree code = ko/no = k/n. An (no,ko) tree code that is linear, time invariant and has finite wordlength k = (m+1)ko is called an (n,k) convolution code. An (no,ko) tree code that is time invariant and has finite wordlength k = (m+1)ko, but is not linear, is called an (n,k) sliding block code.

- 109. Convolutional Encoder. One input bit is converted to two code bits: ko = 1, no = 2, block length = (m+1)no = 6, constraint length = 2, code rate = ½. [Figure: shift register with mod-2 adders forming the two encoded output bits from the input.]

- 110. Convolutional Encoder: input and output bits. For each incoming bit and current state of the encoder the table lists the outgoing bits; the completed table is on the next slide.

- 111. Convolutional Encoder: input and output bits.

  | Incoming bit | Current state of encoder | Outgoing bits |
  |---|---|---|
  | 0 | 0 0 | 0 0 |
  | 1 | 0 0 | 1 1 |
  | 0 | 0 1 | 1 1 |
  | 1 | 0 1 | 0 0 |
  | 0 | 1 0 | 0 1 |
  | 1 | 1 0 | 1 0 |
  | 0 | 1 1 | 1 0 |
  | 1 | 1 1 | 0 1 |

- 112. Convolutional Encoder - State Diagram Same bit gets coded differently depending upon previous bits. Make state diagram of Encoder. 2 bits encoder – 4 states.

- 113. Convolutional Encoder - State Diagram 01 10 1100 0 0 0 0 1 1 1 1

- 114. Convolutional Encoder - Trellis codes/Diagram 4 Nodes are 4 States. Code rate is ½. In trellis diagram, follow upper or lower branch to next node if input is 0 or 1. Traverse to next state of node with this input, writing code on the branch. Continues… Complete the diagram.

- 115. Convolutional Encoder - Trellis Diagram 00 10 01 11 00 11 States

- 116. Convolutional Encoder - Trellis Diagram [Figure: trellis extended by further stages. States 00, 10, 01, 11; each branch is labelled with its two output bits.]

- 117. Trellis Diagram Input: 1 0 0 1 1 0 1. Output: 11 01 11 11 10 10 00. [Figure: the path for this input traced through the trellis with states 00, 10, 01, 11.]
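
The input/output table and this trellis example can be reproduced with a few lines of code. The sketch below is an illustration, not part of the slides: the tap assignment (first output bit = input ⊕ oldest stored bit, second output bit = input ⊕ both stored bits) is inferred from the table on the earlier slide, and the names `encode`, `s1` and `s2` are hypothetical.

```python
def encode(bits):
    """Rate-1/2 encoder with two memory cells (s1 = newest bit, s2 = oldest bit)."""
    s1 = s2 = 0                      # encoder starts in state 00
    frames = []
    for b in bits:
        out1 = b ^ s2                # taps inferred from the input/output table
        out2 = b ^ s1 ^ s2
        frames.append(f"{out1}{out2}")
        s1, s2 = b, s1               # shift the register by one position
    return frames


print(" ".join(encode([1, 0, 0, 1, 1, 0, 1])))  # 11 01 11 11 10 10 00
```

Each loop iteration corresponds to one stage of the trellis: the pair `(s1, s2)` is the current node and the two-bit string is the branch label.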

- 118. Convolutional Encoder - Polynomial description Any information sequence $i_0, i_1, i_2, i_3, \ldots$ can be expressed in terms of the delay element $D$ as $I(D) = i_0 + i_1 D + i_2 D^2 + i_3 D^3 + i_4 D^4 + \cdots$. For example, 10100011 becomes $1 + D^2 + D^6 + D^7$.
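
As a small illustration of this mapping, the sketch below converts a bit string into its delay polynomial, taking the leftmost bit as $i_0$. The function names are hypothetical and chosen only for this example.

```python
def sequence_to_exponents(bits):
    """Return the powers of D whose coefficient is 1 (leftmost bit is i0)."""
    return [i for i, b in enumerate(bits) if b == "1"]


def as_polynomial(bits):
    """Format the sequence as a polynomial in the delay element D."""
    terms = []
    for e in sequence_to_exponents(bits):
        terms.append("1" if e == 0 else "D" if e == 1 else f"D^{e}")
    return " + ".join(terms) if terms else "0"


print(as_polynomial("10100011"))  # 1 + D^2 + D^6 + D^7
```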

- 119. Convolutional Encoder - Polynomial description Similarly, the encoder can also be expressed as polynomials in $D$. Using the previous problem, $k_0 = 1$, $n_0 = 2$: first bit of code $g_{11}(D) = D^2 + D + 1$, second bit of code $g_{12}(D) = D^2 + 1$. $G(D) = [g_{ij}(D)] = [\,D^2+D+1 \quad D^2+1\,]$. $c_j(D) = \sum_{l} i_l(D)\, g_{l,j}(D)$, that is, $C(D) = I(D)\,G(D)$.
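
The product $C(D) = I(D)\,G(D)$ is ordinary polynomial multiplication with coefficients reduced modulo 2. A minimal sketch, assuming polynomials are stored as coefficient lists indexed by the power of $D$ (the helper `poly_mul_gf2` and the sample input $I(D) = 1 + D^2$ are illustrative choices, not from the slides):

```python
def poly_mul_gf2(a, b):
    """Multiply two GF(2) polynomials given as coefficient lists (index = power of D)."""
    out = [0] * (len(a) + len(b) - 1)
    for i, ai in enumerate(a):
        if ai:
            for j, bj in enumerate(b):
                out[i + j] ^= bj     # addition modulo 2
    return out


G = [[1, 1, 1],   # g11(D) = 1 + D + D^2
     [1, 0, 1]]   # g12(D) = 1 + D^2
info = [1, 0, 1]  # I(D) = 1 + D^2 (sample input, chosen for illustration)

C = [poly_mul_gf2(info, g) for g in G]
print(C)  # [[1, 1, 0, 1, 1], [1, 0, 0, 0, 1]]
```

The two result lists are $c_1(D) = 1 + D + D^3 + D^4$ and $c_2(D) = 1 + D^4$; interleaving their coefficients frame by frame gives the transmitted stream.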

- 120. Convolutional encoder – Example Find the generator polynomial matrix, the rate of the code and the trellis diagram. [Figure: encoder circuit with input i, outputs a and b, and a modulo-2 adder.]

- 121. Convolutional encoder – Example $k_0 = 1$, $n_0 = 2$, rate $= 1/2$. $G(D) = [\,1 \quad D^4+1\,]$. This is called a systematic convolutional encoder because $k_0$ bits of each codeword frame are the same as the data.

- 122. Convolutional encoder – Example Find the generator polynomial matrix, the rate of the code and the trellis diagram. [Figure: encoder circuit with inputs k1 and k2, outputs n1, n2 and n3, and two modulo-2 adders.]

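- 123. Convolutional encoder – Example $k_0 = 2$, $n_0 = 3$, rate $= 2/3$. $G(D) = \begin{bmatrix} g_{11}(D) & g_{12}(D) & g_{13}(D) \\ g_{21}(D) & g_{22}(D) & g_{23}(D) \end{bmatrix} = \begin{bmatrix} 1 & 0 & D^3+D+1 \\ 0 & 1 & 0 \end{bmatrix}$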

- 124. Convolutional encoder – Formal definitions Wordlength $k = k_0 \max_{i,j}\{\deg g_{ij}(D) + 1\}$. Blocklength $n = n_0 \max_{i,j}\{\deg g_{ij}(D) + 1\}$. Constraint length $v = \sum_{i} \max_{j}\{\deg g_{ij}(D)\}$. The parity check matrix $H(D)$ is an $(n_0-k_0)$ by $n_0$ matrix of polynomials which satisfies $G(D)\,H(D)^T = 0$. The syndrome polynomial $s(D)$ is an $(n_0-k_0)$-component row vector $s(D) = v(D)\,H(D)^T$ (here $v(D)$ is the received word, not the constraint length).

- 125. Convolutional encoder – Formal definitions A systematic encoder has $G(D) = [\,I \mid P(D)\,]$, where $I$ is the $k_0$ by $k_0$ identity matrix and $P(D)$ is a $k_0$ by $(n_0-k_0)$ matrix of polynomials. The parity check polynomial matrix is given by $H(D) = [\,-P(D)^T \mid I\,]$, where $I$ is the $(n_0-k_0)$ by $(n_0-k_0)$ identity matrix. Also $G(D)\,H(D)^T = 0$.
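
As a quick check of these definitions, the relation $G(D)\,H(D)^T = 0$ can be worked out for the systematic encoder $G(D) = [\,1 \quad D^4+1\,]$ from the earlier example. This is a worked illustration under the assumption that all arithmetic is modulo 2, so that $-P(D) = P(D)$:

```latex
\begin{aligned}
G(D) &= [\, I \mid P(D) \,] = [\, 1 \quad D^4 + 1 \,], \qquad P(D) = [\, D^4 + 1 \,] \\
H(D) &= [\, -P(D)^T \mid I \,] = [\, D^4 + 1 \quad 1 \,] \\
G(D)\,H(D)^T &= 1 \cdot (D^4 + 1) + (D^4 + 1) \cdot 1 = 2\,(D^4 + 1) \equiv 0 \pmod 2
\end{aligned}
```

Here $k_0 = 1$ and $n_0 = 2$, so $H(D)$ is a 1 by 2 matrix, as the definition requires.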

- 126. Convolutional encoder – Error correction The $l$-th minimum distance $d_l^*$ is the smallest Hamming distance between any two initial codeword segments $l$ frames long that are not identical in the initial frame. If $l = m+1$, the $(m+1)$-th minimum distance is the minimum distance of the code, $d^*$ or $d_{min}$. The code can correct $t$ errors in the first $l$ frames if $d_l^* \ge 2t+1$.

- 127. Convolutional encoder - Example $m = 2$, $l = 3$. Comparing with the all-zero sequence: $d_1^* = 2$, $d_2^* = 3$, $d_3^* = 5$. Hence $d^* = 5$ and $t = 2$.

- 128. Convolutional encoder – Free Distance The free distance is the minimum distance between arbitrarily long (possibly infinite) encoded sequences: $d_{free} = \max_l\,[d_l^*]$. It can be found as the minimum weight of a path that deviates from the all-zero path and later merges back into the all-zero path. Also $d_{m+1}^* \le d_{m+2}^* \le \cdots \le d_{free}$.

- 129. Convolutional encoder – Free Length The free length $n_{free}$ is the length of the non-zero segment of the smallest-weight convolutional codeword of nonzero weight. $d_l^* = d_{free}$ if $l = n_{free}$.

- 130. Convolutional encoder – Free Length Here free distance $= 5$ and free length $= 6$. [Figure: trellis with states 00, 10, 01, 11, showing the minimum-weight path that leaves and later rejoins the all-zero path.]
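
The free distance can also be found by a shortest-path search over the encoder states. The sketch below is an illustration only: it assumes the rate-1/2 encoder from the earlier input/output table (tap assignment inferred from that table) and uses Dijkstra's algorithm with the Hamming weight of each branch as its cost. The names `step` and `free_distance` are hypothetical.

```python
import heapq


def step(state, bit):
    """One trellis branch: return (next_state, Hamming weight of the two output bits)."""
    s1, s2 = state                                  # s1 = newest bit, s2 = oldest bit
    weight = (bit ^ s2) + (bit ^ s1 ^ s2)
    return (bit, s1), weight


def free_distance():
    """Minimum weight of a path that leaves state 00 and first returns to it."""
    start, w0 = step((0, 0), 1)                     # diverge from the all-zero path
    heap = [(w0, 1, start)]                         # (path weight, frames used, state)
    best = {}
    while heap:
        weight, frames, state = heapq.heappop(heap)
        if state == (0, 0):
            return weight, frames                   # merged back into the all-zero path
        if best.get(state, float("inf")) <= weight:
            continue
        best[state] = weight
        for bit in (0, 1):
            nxt, w = step(state, bit)
            heapq.heappush(heap, (weight + w, frames + 1, nxt))


d_free, frames = free_distance()
print(d_free, frames * 2)  # 5 6
```

The search returns weight 5 over 3 frames of 2 bits each, which agrees with the free distance of 5 and free length of 6 stated on the slide.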

- 131. Matrix description of Convolutional encoder - Example

- 132. Matrix description of Convolutional encoder - Example Generator polynomials are: $g_{11}(D) = D + D^2$, $g_{12}(D) = D^2$, $g_{13}(D) = D + D^2$, $g_{21}(D) = D^2$, $g_{22}(D) = D$, $g_{23}(D) = D$.

- 133. Matrix description of Convolutional encoder - Example Matrix $G_0$ has the coefficients of $D^0$, i.e. the constants. Matrix $G_1$ has the coefficients of $D^1$. Matrix $G_2$ has the coefficients of $D^2$. [Figure: the matrices $G_0$, $G_1$ and $G_2$.]
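
The coefficient matrices can be read off mechanically from the generator polynomials. The sketch below takes the six polynomials exactly as listed on the previous slide; if any term was lost in transcription the matrices would differ. Representing each polynomial as a set of exponents, and the helper name `coefficient_matrix`, are choices made for this illustration.

```python
# Each polynomial is stored as the set of powers of D that have coefficient 1.
g = [
    [{1, 2}, {2}, {1, 2}],   # g11 = D + D^2, g12 = D^2, g13 = D + D^2
    [{2}, {1}, {1}],         # g21 = D^2,     g22 = D,   g23 = D
]


def coefficient_matrix(polys, power):
    """Matrix of the coefficients of D**power, one entry per generator polynomial."""
    return [[1 if power in p else 0 for p in row] for row in polys]


G0, G1, G2 = (coefficient_matrix(g, d) for d in range(3))
print(G0)  # [[0, 0, 0], [0, 0, 0]]
print(G1)  # [[1, 0, 1], [0, 1, 1]]
print(G2)  # [[1, 1, 1], [1, 0, 0]]
```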

- 134. Matrix description of Convolutional encoder - Example The generator matrix is only a representation of a convolutional coder. It is not actually used to generate codes. [Figure: generator matrix.]

- 135. Matrix description of Convolutional encoder - General The generator matrix is only a representation of a convolutional coder. It is not actually used to generate codes.

- 136. VITERBI DECODING Advantages: highly satisfactory bit error performance, high speed of operation, ease of implementation, low cost.

- 137. VITERBI DECODING Given: a trellis diagram of rate 1/3. Transmitted word: 000000000000000000000… Received word: 010000100001…

- 138. VITERBI DECODING Procedure: Divide the incoming stream into groups of three: 010 000 100 001… Find the Hamming distance between the first group and each of the two branch options at the first node; this is called the branch metric. Take the second group and continue from the second node. The total Hamming distance accumulated along a path is called the path metric. At any node where two branches meet, discard the branch with the higher path metric. Continue…

- 139. VITERBI DECODING First group: 010. $d(000, 010) = 1$, so $BM_1 = 1$. $d(111, 010) = 2$, so $BM_2 = 2$.

- 140. VITERBI DECODING Second group: 000. The branch metric and path metric (circled) are found. At each node, compare the total path metrics.

- 141. VITERBI DECODING Third group: 100. At each node, compare the total path metrics. Discard the path with the larger metric and retain the survivor.
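
The procedure above (branch metric, path metric, survivor selection) can be sketched in code. The rate-1/3 trellis used on these slides is not recoverable from the transcript, so this illustration instead assumes the rate-1/2 encoder from the earlier input/output table, with two zero tail bits appended so that every codeword ends in state 00. All function names are hypothetical.

```python
def branch(state, bit):
    """Return (next_state, output_bits) for the assumed rate-1/2 encoder."""
    s1, s2 = state                       # s1 = newest bit, s2 = oldest bit
    return (bit, s1), (bit ^ s2, bit ^ s1 ^ s2)


def encode(bits, tail=2):
    state, out = (0, 0), []
    for b in list(bits) + [0] * tail:    # tail zeros return the encoder to state 00
        state, o = branch(state, b)
        out.extend(o)
    return out


def viterbi(received, tail=2):
    """Hard-decision Viterbi decoding; returns (message bits, final path metric)."""
    survivors = {(0, 0): (0, [])}        # state -> (path metric, decoded bits)
    for t in range(len(received) // 2):
        frame = received[2 * t: 2 * t + 2]
        new = {}
        for state, (metric, bits) in survivors.items():
            for b in (0, 1):
                nxt, out = branch(state, b)
                bm = sum(x != y for x, y in zip(out, frame))   # branch metric
                cand = (metric + bm, bits + [b])
                if nxt not in new or cand[0] < new[nxt][0]:
                    new[nxt] = cand      # keep the survivor, discard the other path
        survivors = new
    metric, bits = survivors[(0, 0)]     # a terminated codeword ends in state 00
    return bits[:len(bits) - tail], metric


msg = [1, 0, 0, 1, 1, 0, 1]
rx = encode(msg)
rx[3] ^= 1                               # introduce two channel errors
rx[10] ^= 1
print(viterbi(rx))                       # ([1, 0, 0, 1, 1, 0, 1], 2)
```

With the terminated code, any two codewords differ in at least 5 positions, so the two injected errors are corrected and the final path metric equals the number of errors.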

- 143. Assignments: 1. Design a rate 1/2 convolutional encoder with constraint length $v = 4$ and $d^* = 6$. Construct the state diagram for this encoder. Construct the trellis diagram. What is $d_{free}$ for this code? Give the generator matrix $G$.

- 144. 2) For each of the given encoders, construct the trellis diagram. Find $k_0$, $n_0$, $v$, $m$, $d^*$ and $d_{free}$. Find $G$.

- 145. 3) Write $k$, $n$, $v$, $m$ and rate $R$ for this coder. Give the generator polynomial matrix $G(D)$, the generator matrix $G$ and the parity check matrix $H$. Find $d^*$, $d_{free}$ and $n_{free}$. Encode 101 001 001 010 000.

- 146. CONVOLUTION CODES – using Code Tree M1, M2, M3, M4 are 1-bit storage elements feeding 3 summers as per the design of the code. v1, v2, v3 are commutator pins, to be sampled one after the other. [Figure: input data stream b1 (MSB in first) entering the shift register M1 to M4; the summer outputs v1, v2, v3 are sampled by a commutator to give the code output bo.]

- 147. $v_1 = s_1$, $v_2 = s_1 \oplus s_2 \oplus s_3 \oplus s_4$, $v_3 = s_1 \oplus s_3 \oplus s_4$. The input stream enters the registers one bit at a time, with the MSB going in first. As each bit enters, the logic summers give the 3-bit output $v_1 v_2 v_3$. Enough zeroes are appended so that the last message bit leaves M4.

- 148. The code is generated by combining the outputs of a k-stage shift register through v EX-OR summers. Number of bits in the message stream = L. Number of bits in the output code = v (L + k).

- 149. Input stream m = 1 0 1 1 0. L = 5, v = 3, k = 4. Code bits = v (L + k) = 27. Rate of code = 1/3. Code bo = 111 010 100 110 001 000 011 000 000.
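
The example can be checked by simulating the shift register directly. The sketch below follows the summer equations given on the earlier slide ($v_1 = s_1$, $v_2 = s_1 \oplus s_2 \oplus s_3 \oplus s_4$, $v_3 = s_1 \oplus s_3 \oplus s_4$); the function name and the constant `K` are illustrative.

```python
K = 4  # number of shift register stages M1..M4


def encode(message):
    """Encode a bit list with the 4-stage, 3-summer encoder; returns 3-bit frames."""
    regs = [0] * K                               # M1..M4, initially cleared
    frames = []
    for bit in list(message) + [0] * K:          # K flushing zeros push the last bit out
        regs = [bit] + regs[:-1]                 # new bit enters M1, the rest shift on
        s1, s2, s3, s4 = regs
        v1 = s1
        v2 = s1 ^ s2 ^ s3 ^ s4
        v3 = s1 ^ s3 ^ s4
        frames.append(f"{v1}{v2}{v3}")           # commutator samples v1, v2, v3 in turn
    return frames


code = encode([1, 0, 1, 1, 0])
print(" ".join(code))   # 111 010 100 110 001 000 011 000 000
print(3 * len(code))    # 27
```

The output has L + k = 9 frames of v = 3 bits, matching the 27 code bits stated on the slide.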

- 150. The code depends on: the number of shift register stages k, the number of summers v, the number of input bits L, and the manner in which they are all connected.

- 151. ASSIGNMENT : Bring codes for all possible combinations to make code tree.

- 153. Decoding the convolution code EXHAUSTIVE SEARCH METHOD: With no noise. Start at node A. Take the first v bits and compare them with the two sets of v bits on the branches leaving A. If the input matches the set on the upward branch of the tree, the first decoded data bit is “0”. If the input matches the set on the downward branch, the first decoded data bit is “1”. Go to nodes B, C, etc. and repeat the process to decode the other bits.

- 154. Decoding the convolution code EXHAUSTIVE SEARCH METHOD: With noise. Each input message bit affects k × v bits of the code = 4 × 3 = 12 bits. At node A, we therefore check the first 12 bits only. There are 16 unique 12-bit combinations from A. Compare the first 12 received bits with these 16 combinations and select the best match. If it goes up the tree at A, the first bit is decoded as “0”. If it goes down the tree at A, the first bit is decoded as “1”. Discard the first 3 bits of the input code and repeat the same at B, comparing the next 12 bits with the next 16 combinations, and so on.

- 155. Decoding the convolution code EXHAUSTIVE SEARCH METHOD: With noise. Discard the first 3 bits of the input code and come to node B. Repeat the same at B, comparing the next 12 bits with the next 16 combinations, and so on. Disadvantages: Although it offers a low probability of error, we have to search k × v code bits in $2^k$ branches, which is lengthy. Errors tend to propagate: if an incorrect direction is chosen at a node because of too many errors, there is no coming back.
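
The first step of the exhaustive search (the decision at node A) can be sketched as follows. This illustration assumes the 4-stage, 3-summer encoder described on the earlier slides, starting from cleared registers; it enumerates all 16 four-bit inputs, generates their 12-bit code prefixes and picks the closest one in Hamming distance. It covers node A only; the function names are hypothetical.

```python
from itertools import product


def encode_prefix(bits):
    """Code bits produced by the 4-stage encoder for the given input bits (zero start)."""
    regs = [0, 0, 0, 0]
    out = []
    for b in bits:
        regs = [b] + regs[:-1]
        s1, s2, s3, s4 = regs
        out += [s1, s1 ^ s2 ^ s3 ^ s4, s1 ^ s3 ^ s4]
    return out


def decode_first_bit(received12):
    """Compare 12 received bits with all 16 tree paths from node A; return the first bit."""
    def distance(candidate):
        return sum(x != y for x, y in zip(encode_prefix(candidate), received12))

    best = min(product((0, 1), repeat=4), key=distance)
    return best[0]


rx = [1, 1, 1, 0, 1, 0, 1, 0, 0, 1, 1, 0]   # first 12 code bits for input 1 0 1 1
rx[4] ^= 1                                   # one channel error
print(decode_first_bit(rx))                  # 1
```

Paths that start with different input bits differ in all three bits of the first frame, so a single error in the 12-bit window cannot change the decision at node A.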

- 156. Decoding the convolution code SEQUENTIAL DECODING METHOD: With noise. v bits at a time are considered at a node. At node A, we move in the direction of the fewest discrepancies and decode accordingly. The number of discrepancies is noted. Move to node B and repeat. At every node, compare the number of discrepancies with a previously decided maximum permissible number of errors. If the current error count exceeds that value at any node, go back one node and take the other direction. Repeat the process.

- 157. [Figure: total number of discrepancies (errors while decoding) at a node plotted against the number of message bits decoded, showing the discard level, trial paths (1) to (4) and the correct path.]

- 158. Decoding the convolution code SEQUENTIAL DECODING METHOD: With noise. Advantages: Works on smaller code blocks. Faster. Although trial and error and retracing are involved, it still gives better results in less time.

- 159. ASSIGNMENT Find the code tree. [Figure: encoder with a 3-bit input, shift register M1 to M3, and summer outputs v1, v2, v3 sampled by a commutator to give the code output bo.]

- 160. Two dimensional codes. Errors may occur in bursts, due to mechanical faults of transmitting and receiving heads, impulse noise, or fading. Single-error-correcting codes cannot correct these errors.

- 161. Two dimensional codes. RULE FOR CORRECTING A BURST OF q ERRORS: $n - k \ge 2q$. Examples: (n,k) = (7,3) can correct a burst of 2. (n,k) = (15,9) can correct a burst of 3. (n,k) = (27,17) can correct a burst of 5. (n,k) = (27,20) can correct a burst of 3. BASIC TECHNIQUE: Encoding and decoding are based on interlacing, or interleaving, bits.
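
The rule is simple arithmetic, and the four examples can be checked in a couple of lines. Note that the rule gives the largest burst length the number of parity bits allows; whether a particular (n,k) code actually reaches it depends on the code. The function name is illustrative.

```python
def max_correctable_burst(n, k):
    """Largest q that satisfies n - k >= 2q."""
    return (n - k) // 2


for n, k in [(7, 3), (15, 9), (27, 17), (27, 20)]:
    print((n, k), max_correctable_burst(n, k))
# (7, 3) 2
# (15, 9) 3
# (27, 17) 5
# (27, 20) 3
```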

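- 162. Two dimensional codes: Block codes kl shift registers are arranged in k rows and l columns. The kl input bits are loaded into the registers as shown, with $d_{kl}$ marked as the first bit: $\begin{bmatrix} d_{11} & d_{12} & d_{13} & d_{14} & \cdots & d_{1l} \\ d_{21} & d_{22} & d_{23} & d_{24} & \cdots & d_{2l} \\ \vdots & & & & & \vdots \\ d_{k1} & d_{k2} & d_{k3} & d_{k4} & \cdots & d_{kl} \end{bmatrix}$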

- 163. All bits in the 1st column are taken as one k-bit word and r parity bits are found as before. The r parity bits are entered individually into r shift registers placed below the 1st column. The 1st column now has n terms. Repeat for the remaining columns.

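- 164. Two dimensional codes: Block codes Array from which the code is sent out: $\begin{bmatrix} d_{11} & d_{12} & d_{13} & d_{14} & \cdots & d_{1l} \\ d_{21} & d_{22} & d_{23} & d_{24} & \cdots & d_{2l} \\ \vdots & & & & & \vdots \\ d_{k1} & d_{k2} & d_{k3} & d_{k4} & \cdots & d_{kl} \\ p_{11} & p_{12} & p_{13} & p_{14} & \cdots & p_{1l} \\ p_{21} & p_{22} & p_{23} & p_{24} & \cdots & p_{2l} \\ \vdots & & & & & \vdots \\ p_{r1} & p_{r2} & p_{r3} & p_{r4} & \cdots & p_{rl} \end{bmatrix}$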

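- 165. Two dimensional codes: RECEPTION Array into which the received code is loaded: $\begin{bmatrix} d_{11} & d_{12} & d_{13} & d_{14} & \cdots & d_{1l} \\ d_{21} & d_{22} & d_{23} & d_{24} & \cdots & d_{2l} \\ \vdots & & & & & \vdots \\ d_{k1} & d_{k2} & d_{k3} & d_{k4} & \cdots & d_{kl} \\ p_{11} & p_{12} & p_{13} & p_{14} & \cdots & p_{1l} \\ p_{21} & p_{22} & p_{23} & p_{24} & \cdots & p_{2l} \\ \vdots & & & & & \vdots \\ p_{r1} & p_{r2} & p_{r3} & p_{r4} & \cdots & p_{rl} \end{bmatrix}$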

- 166. If the received block has a burst of at most l errors together at any place, each column will have at most 1 error. All bits in the 1st column are taken as one n-bit codeword and decoded to find the k-bit data. A single error in each column can thus be corrected. Data bits are taken out serially from $d_{kl}$.

- 167. Two dimensional codes: using CRC We have an (n,k) coder capable of correcting a single error. It can be used as a (λn, λk) code for burst error correction. The λk message bits are divided into λ message blocks of k bits each. Each k-bit block is converted to an n-bit CRC codeword. These n-bit codewords are arranged in rows, one below the other. Transmission is done column-wise.

- 168. A (15,7) code with $g(x) = 1 + x + x^2 + x^4 + x^8$ has $d_{min} = 5$ and is capable of correcting 2 errors. It is used to construct a (75,35) interlaced code, which can correct a burst of 10 errors. The 35-bit message is broken into 5 blocks of 7 bits each. Each 7-bit block is converted to a 15-bit codeword. The codewords are arranged one below the other and taken out serially as follows.

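- 169. Two dimensional codes: using CRC [Figure: the five codewords stacked as rows. Bits are numbered in the order they are sent out: 1 to 5 down the first column, 6 onwards down the second column, and so on.]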

- 170. At the receiver, the bits are entered serially. CRC detection is performed row-wise. The data bits are then separated.
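
The effect of column-wise transmission on a burst can be illustrated with a short simulation of the (75,35) arrangement. The sketch below uses five all-zero 15-bit rows as placeholder codewords (no actual CRC encoding is performed) and shows that a burst of 10 consecutive channel errors leaves at most 2 errors in each row, which is within the correcting power stated for the (15,7) code. The function names are hypothetical.

```python
def interleave(rows):
    """Read a list of equal-length codewords column by column."""
    return [row[c] for c in range(len(rows[0])) for row in rows]


def deinterleave(stream, n_rows):
    """Rebuild the rows from a column-wise stream."""
    return [stream[r::n_rows] for r in range(n_rows)]


rows = [[0] * 15 for _ in range(5)]   # placeholder data: five all-zero codewords
stream = interleave(rows)             # 75 bits, sent column-wise

for i in range(20, 30):               # burst of 10 consecutive channel errors
    stream[i] ^= 1

errors_per_row = [sum(row) for row in deinterleave(stream, 5)]
print(errors_per_row)                 # [2, 2, 2, 2, 2]
```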

![ If there are more than ten messages…..

To satisfy prefix property,

Say W2 = 4 say ca, cb, da, db

Others to be used for three bit code

cca, ccb, ccc, ccd

cda, cdb, cdc, cdd

dca, dcb, dcc, dcd

dda, ddb, ddc, ddd

and so on…

To satisfy prefix property, number of codes with length

3 W3 will be..

W3 ≤ [ (D - W1 ) D - W2 ]D i.e. W3 ≤ 16

or W3 ≤ D3 - W1 D2 - W2 D](https://image.slidesharecdn.com/basicsofcodingtheory-160202182933/85/Basics-of-coding-theory-11-320.jpg)

![Example

[ X ] = { x1, x2, x3, x4, x5, x6, x7} 7 codes

Li = { 2, 2, 3, 3, 3, 4, 5 } code lengths.

Hence

Wi = { 0, 2, 3, 1, 1 }

$\sum_{i=1}^{n} W_i D^{-i} = 2/D^2 + 3/D^3 + 1/D^4 + 1/D^5$

$= 1/D^2 + 1/D^2 + 1/D^3 + 1/D^3 + 1/D^3 + 1/D^4 + 1/D^5$

$= \sum_{i=1}^{7} D^{-L_i}$](https://image.slidesharecdn.com/basicsofcodingtheory-160202182933/85/Basics-of-coding-theory-14-320.jpg)

![PROBLEMS

Find the smallest number of letters in the alphabets D for devising a

code with prefix property such that

[W] = { 0, 3, 0, 5 }. Devise such a code.

SOLUTION

$\sum_{i=1}^{m} D^{-L_i} \le 1$ or $\sum_{i=1}^{n} W_i D^{-i} \le 1$

$3D^{-2} + 5D^{-4} \le 1$

$D^4 - 3D^2 - 5 \ge 0$

D = 3 smallest value

Say { 0, 1, 2}](https://image.slidesharecdn.com/basicsofcodingtheory-160202182933/85/Basics-of-coding-theory-15-320.jpg)

![ [W] = { 0, 3, 0, 5 }.

W1 + W2 + W3 + …. Wn = m

M = 8

Possible options:

00

01

02

1002

1000

1001

2000

2022](https://image.slidesharecdn.com/basicsofcodingtheory-160202182933/85/Basics-of-coding-theory-16-320.jpg)

![PROBLEMS

Verify if following sets of words lengths may

correspond to uniquely decipherable binary

codes.

a) [W] = { 0, 2, 3, 2}

b) [W] = { 0, 2, 2, 2, 5}](https://image.slidesharecdn.com/basicsofcodingtheory-160202182933/85/Basics-of-coding-theory-17-320.jpg)

![D = 4

The group in each column will have D messages

and 4 codes will be assigned, say 0, 1, 2, 3 or 4

voltage levels etc.. But…

First column group should have r messages

such that..

r = [ m – k (D-1) ]

K is highest possible integer to keep r positive.

r = 8 –K(4-1)

r = 8 – 2(4-1) Largest possible k = 2

r = 2

First column group will have 2 symbols.](https://image.slidesharecdn.com/basicsofcodingtheory-160202182933/85/Basics-of-coding-theory-36-320.jpg)

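![ $$[\,C_1, C_2, C_3, \ldots, C_n\,] = [\,d_1, d_2, d_3, \ldots, d_k\,] \begin{bmatrix} 1 & 0 & 0 & \cdots & 0 & p_{11} & p_{12} & \cdots & p_{1,n-k} \\ 0 & 1 & 0 & \cdots & 0 & p_{21} & p_{22} & \cdots & p_{2,n-k} \\ 0 & 0 & 1 & \cdots & 0 & p_{31} & p_{32} & \cdots & p_{3,n-k} \\ \vdots & & & & & & & & \vdots \\ 0 & 0 & 0 & \cdots & 1 & p_{k1} & p_{k2} & \cdots & p_{k,n-k} \end{bmatrix}$$

General Formula](https://image.slidesharecdn.com/basicsofcodingtheory-160202182933/85/Basics-of-coding-theory-51-320.jpg)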

![General Formula

C = D G

G = [ Ik P]

Ik is identity matrix of order k

P is an arbitrary matrix ( k by n-k).

Important step is choice of P.](https://image.slidesharecdn.com/basicsofcodingtheory-160202182933/85/Basics-of-coding-theory-52-320.jpg)

![Checking for errors

Assume a parity check matrix H as

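$$H = \begin{bmatrix} p_{11} & p_{21} & p_{31} & \cdots & p_{k,1} & 1 & 0 & 0 & \cdots & 0 \\ p_{12} & p_{22} & p_{32} & \cdots & p_{k,2} & 0 & 1 & 0 & \cdots & 0 \\ p_{13} & p_{23} & p_{33} & \cdots & p_{k,3} & 0 & 0 & 1 & \cdots & 0 \\ \vdots & & & & & & & & & \vdots \\ p_{1,n-k} & p_{2,n-k} & p_{3,n-k} & \cdots & p_{k,n-k} & 0 & 0 & 0 & \cdots & 1 \end{bmatrix}$$

$H = [\,P^T \;\; I_{n-k}\,]$, an $(n-k) \times n$ matrix](https://image.slidesharecdn.com/basicsofcodingtheory-160202182933/85/Basics-of-coding-theory-56-320.jpg)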

![Checking for errors

RULE

C is a code word in the (n,k) block code generated by G = [ Ik P] if and only if $CH^T = 0$](https://image.slidesharecdn.com/basicsofcodingtheory-160202182933/85/Basics-of-coding-theory-57-320.jpg)

![ C = 1 0 1 1 0 1

No error

Finding $CH^T$ for a correct code, using mod-2 addition:

$$CH^T = [\,1\;0\;1\;1\;0\;1\,] \begin{bmatrix} 0 & 1 & 1 \\ 1 & 0 & 1 \\ 1 & 1 & 0 \\ 1 & 0 & 0 \\ 0 & 1 & 0 \\ 0 & 0 & 1 \end{bmatrix} = [\,0\;0\;0\,]$$](https://image.slidesharecdn.com/basicsofcodingtheory-160202182933/85/Basics-of-coding-theory-60-320.jpg)

![ C = 1 0 1 0 0 1

Error in 4th bit.

Try introducing error in other bits and in other

codes as well.

Finding $CH^T$ for a corrupted code, using mod-2 addition:

$$CH^T = [\,1\;0\;1\;0\;0\;1\,] \begin{bmatrix} 0 & 1 & 1 \\ 1 & 0 & 1 \\ 1 & 1 & 0 \\ 1 & 0 & 0 \\ 0 & 1 & 0 \\ 0 & 0 & 1 \end{bmatrix} = [\,1\;0\;0\,]$$](https://image.slidesharecdn.com/basicsofcodingtheory-160202182933/85/Basics-of-coding-theory-61-320.jpg)
