Getting Started with Hadoop

- 2. Getting Started with Hadoop

- 3. 3 Agenda • 1999 the Database Story • Intro to Hadoop • Basic Hadoop Eco-system • Why CDH is Awesome • QA

- 5. 5

- 6. 6 Indexing the Web • Web is huge • Hundreds of millions of pages in 1999 • How do you index it? • Crawl all the pages • Rank pages based on relevance metrics • Build search index of keywords to pages • Do it in real-time!

- 7. 7

- 8. 8 Databases in 1999 1. Buy a really big machine 2. Install an expensive DBMS on it 3. Point your workload at it 4. Hope it doesn’t fail 5. Ambitious: buy another really big machine as a backup

- 9. 9 Database Limitations • Didn’t scale horizontally • High marginal cost ($$$) • No real fault-tolerance story • Vendor lock in ($$$) • SQL unsuited for search ranking • Complex analysis (PageRank) • Unstructured data

- 10. 10 Google Does Something Different • Designed their own storage and processing infrastructure • Google File System and MapReduce • Goals: • Cheap • Scalable • Reliable

- 11. 11 Google Does Something Different • It worked! • Powered Google Search for many years • General framework for large-scale batch computation tasks • Still used internally at Google to this day

- 14. 14

- 15. 15 Google’s messages from the future • Google was benevolent enough to publish • 2003: Google File System (GFS) paper • 2004: MapReduce paper • Already mature technologies at this point

- 16. 16 Google’s messages from the future • Community didn’t get it immediately • DB people thought it was silly • Non-Google weren’t at the same scale yet • Google had little interest in releasing GFS and MapReduce • Business was ads, not infrastructure

- 17. 17 Birth of Hadoop • Doug Cutting and Mike Cafarella • Nutch • Open-source search platform • Ran into scaling issues • 4 nodes • Hard to program • Hard to manage • Immediate application for GFS and MR

- 18. 18 Birth of Hadoop • 2004-2006: Implemented GFS/MR and ported Nutch to it • 2006: Spun out into Apache Hadoop • Name of Doug’s son’s stuffed elephant

- 19. Birth of Hadoop

- 20. 20 Summary • The web is huge and unstructured • Databases didn’t fit the problem • Didn’t scale, expensive, SQL limitations • Google did their own thing: GFS + MR • Hadoop is based on the Google papers

- 22. 22 HDFS • Based on GFS • Distributed, fault-tolerant filesystem • Primarily designed for cost and scale • Works on commodity hardware • 20PB / 4000 node cluster at Facebook

- 23. 23 HDFS design assumptions • Failures are common • Massive scale means more failures • Disks, network, node • Files are append-only • Files are large (GBs to TBs) • Accesses are large and sequential

- 24. 24 Quick primers • Filesystems • Hard drives • Datacenter networking

- 25. 25 Quick filesystem primer • Same concepts as the FS on your laptop • Directory tree • Create, read, write, delete files • Filesystems store metadata and data • Metadata: filename, size, permissions, … • Data: contents of a file • Other concerns • Data integrity, durability, management

- 26. 26 Quick disk primer • Disk does a seek for each I/O operation • Seeks are expensive (~10ms) • Throughput / IOPS tradeoff • 100 MB/s and 10 IOPS • 10MB/s and 100 IOPS • Big I/Os mean better throughput

- 27. 27 Quick networking primer Rack Top-of-rack switch Core switch

- 28. 28 Quick networking primer 40 Gbit 1, 2, 4, 10 Gbit 10 Gbit

- 29. 29 HDFS Architecture Overview Secondary Namenode Host 2 Namenode Host 1 DataNode Host 3 DataNode Host 4 DataNode Host 5 DataNode Host n

- 30. 30 Block Size = 64MB Replication Factor = 3 HDFS Block Replication 1 2 3 4 5 2 3 4 2 4 5 1 3 5 1 2 5 1 3 4 HDFS Node 1 Node 2 Node 3 Node 4 Node 5 Blocks

- 31. 31 HDFS Write Path • Talk to NameNode • Store metadata for new file • Get topology-aware list of DataNodes • Setup the write pipeline • Stream data to pipeline • Tell NameNode when done

- 32. 32 HDFS Fault-tolerance • Many different failure modes • Disk corruption, node failure, switch failure • Primary concern • Data is safe!!! • Secondary concerns • Keep accepting reads and writes • Do it transparently to clients

- 33. 33 MapReduce – Map • Records from the data source (lines out of files, rows of a database, etc) are fed into the map function as key*value pairs: e.g., (filename, line). • map() produces one or more intermediate values along with an output key from the input. Map Task (key 1, values) (key 2, values) (key 3, values) Shuffle Phase (key 1, int. values) (key 1, int. values) (key 1, int. values) Reduce Task Final (key, values)

- 34. 34 MapReduce – Reduce • After the map phase is over, all the intermediate values for a given output key are combined together into a list • reduce() combines those intermediate values into one or more final values for that same output key Map Task (key 1, values) (key 2, values) (key 3, values) Shuffle Phase (key 1, int. values) (key 1, int. values) (key 1, int. values) Reduce Task Final (key, values)

- 35. 35 MapReduce – Shuffle and Sort

- 36. 36 Word Count Example The cat sat on the mat The aardvark sat on the sofa The, 1 cat, 1 sat, 1 on, 1 the, 1 mat, 1 The, 1 aardvark, 1 sat, 1 on, 1 the, 1 sofa, 1 Mapper Input Mapping aardvark, 1 cat, 1 mat, 1 on, 2 sat, 2 sofa, 1 the, 4 aardvark, 1 cat, 1 mat, 1 on, 2 sat, 2 sofa, 1 the, 4 aardvark, 1 cat, 1 mat, 1 on [1, 1] sat [1, 1] sofa, 1 the [1, 1, 1, 1] Shuffling Reducing Final Result

- 37. 37 Summary • GFS and MR co-design • Cheap, simple, effective at scale • Fault-tolerance baked in • Replicate data 3x • Incrementally re-execute computation • Avoid single points of failure

- 38. 38 Hadoop Ecosystem Overview What Are All These Things?

- 39. 39 Sqoop Performs bidirectional data transfers between Hadoop and almost any SQL database with a JDBC driver

- 40. 40 Flume Client Client Client Client Agent Agent Agent A streaming data collection and aggregation system for massive volumes of data, such as RPC services, Log4J, Syslog, etc.

- 41. 41 Hive SELECT s.word, s.freq, k.freq FROM shakespeare JOIN ON (s.word= k.word) WHERE s.freq >= 5; • Relational database abstraction using a SQL like dialect called HiveQL • Statements are executed as one or more MapReduce Jobs

- 42. 42 Pig • High-level scripting language for for executing one or more MapReduce jobs • Created to simplify authoring of MapReduce jobs • Can be extended with user defined functions emps = LOAD 'people.txt’ AS (id,name,salary); rich = FILTER emps BY salary > 200000; sorted_rich = ORDER rich BY salary DESC; STORE sorted_rich INTO ’rich_people.txt';

- 43. 43 HBase • Low-latency, distributed, columnar key-value store • Based on BigTable • Efficient random reads/writes on HDFS • Useful for frontend applications

- 44. 44 Oozie A workflow engine and scheduler built specifically for large- scale job orchestration on a Hadoop cluster

- 45. 45 Hue • Hue is an open source web- based application for making it easier to use Apache Hadoop. • Hue features • File Browser for HDFS • Job Designer/Browser for MapReduce • Query editors for Hive, Pig and Cloudera Impala • Oozie

- 46. 46 Zookeeper • Zookeeper is a distributed consensus engine • Provides well-defined concurrent access semantics: • Leader election • Service discovery • Distributed locking / mutual exclusion • Message board / mailboxes

- 47. 47 Hadoop Ecosystem INGEST STORE EXPLORE PROCESS ANALYZE SERVE CONNECTORS STORAGE RESOURCE MGMT & COORDINATION USER INTERFACE WORKFLOW MGMT METADATACLOUD INTEGRATION YA YARN ZO ZOOKEEPER HDFS HADOOP DFS HB HBASE HU HUE OO OOZIE WH WHIRR SQ SQOOP FL FLUME FILE FUSE-DFS REST WEBHDFS / HTTPFS SQL ODBC / JDBC MS META STORE AC ACCESS BI ETL RDBMS BATCH COMPUTE BATCH PROCESSING REAL-TIME ACCESS & COMPUTE MR MAPREDUCE MR2 MAPREDUCE2 HI HIVE PI PIG MA MAHOUT DF DATAFU IM IMPALA MANAGEMENT SOFTWARE & TECHNICAL SUPPORT SUBSCIPTION OPTIONS CLOUDERA NAVIGATOR CLOUDERA MANAGER CORE (REQUIRED) RTD RTQ BDR AUDIT (v1.0) LINEAGE ACCESS (v1.0) LIFECYCLE EXPLORE CORE

- 49. 49 Cloudera Impala Cost-effective, ad hoc query environment that offloads the data warehouse for: • Interactive BI/analytics on more data • Asking new questions • Data processing with tight SLAs • Query-able archive w/full fidelity

- 50. 50 Cloudera Impala Interactive SQL for Hadoop • Responses in seconds • Nearly ANSI-92 standard SQL with Hive SQL Native MPP Query Engine • Purpose-built for low-latency queries • Separate runtime from MapReduce • Designed as part of the Hadoop ecosystem Open Source • Apache-licensed

- 51. 51 Impala Key Features Fast Flexible Secure Easy to Implement Easy to Use Simple to Manage • In-memory data transfers • Partitioned joins • Fully distributed aggregations • Query data in HDFS & HBase • Supports multiple file formats & compression algorithms • Integrated with Hadoop security • Kerberos authentication • Authorization (Sentry) • Leverages Hive’s ODBC/JDBC connectors, metastore & SQL syntax • Open source • Interact with data via SQL • Certified with leading BI tools • Deploy, configure & monitor with Cloudera Manager • Integrated with Hadoop resource management

- 52. 52 The Impala Advantage BI Partners: Building on the Enterprise Standard

- 53. 53 Cloudera Search Offer easy access to non-technical resources Explore data prior to processing and modeling Gain immediate access and find correlations in mission-critical data Powerful, proven search capabilities that let organizations:

- 54. 54 Cloudera Search Interactive Search for All Data • Full-text and faceted navigation • Batch, near real-time, and on-demand indexing Apache Solr Integrated with CDH • Established, mature search with vibrant community • Separate runtime like MapReduce, Impala • Incorporated as part of the Hadoop ecosystem Open Source • 100% Apache, 100% Solr • Standard Solr APIs

- 55. 55 Search Key Features Scalable Flexible Timely Mature Simple to Use Easy to Manage • Index storage & retrieval on HDFS • Indexing with MapReduce and Flume • Shard management with Zookeeper • Indexing and query of any data in HDFS and HBase • Support for multiple file formats • Field mapping and matching with Morphlines • Indexing in batch, on-demand, and in near real-time • Scalable extraction and mapping with built-in Solr sink for Flume • Proven, enterprise-ready technology • Rich ecosystem and knowledge within community • Familiar full-text search and faceted navigation • Out-of-the-box Search GUI • Known, readily available standard Solr APIs • Integrated with Cloudera Manager and Apache Sentry • Integrated coordination and execution of jobs • GoLive for incremental changes

- 56. 56 The Search Advantage Search Partners: Building on the Cloudera Enterprise Data Hub

- 57. 57 Sentry Unlocks Key RBAC Requirements Secure, fine-grained, role-based authorization Multi-tenant administration Open Source Submitted to ASF Open Source authorization module for Impala & Hive

- 58. 58 Defining Security Functions Perimeter Guarding access to the cluster itself Technical Concepts: Authentication Network isolation Data Protecting data in the cluster from unauthorized visibility Technical Concepts: Encryption, Tokenization Data masking Access Defining what users and applications can do with data Technical Concepts: Permissions Authorization Visibility Reporting on where data came from and how it’s being used Technical Concepts: Auditing Lineage

- 59. 59 Enabling Enterprise Security Perimeter Guarding access to the cluster itself Technical Concepts: Authentication Network isolation Data Protecting data in the cluster from unauthorized visibility Technical Concepts: Encryption, Tokenization Data masking Access Defining what users and applications can do with data Technical Concepts: Permissions Authorization Visibility Reporting on where data came from and how it’s being used Technical Concepts: Auditing Lineage Apache SentryKerberos, AD/LDAP Cloudera NavigatorCertified Partners

- 60. 60 Authorization Requirements Secure Authorization Ability to control access to data and/or privileges on data for authenticated users Fine-Grained Authorization Ability to give users access to a subset of data (e.g. column) in a database Role-Based Authorization Ability to create/apply templatized privileges based on functional roles Multitenant Administration Ability for central admin group to empower lower-level admins to manage security for each database/schema

- 61. 61 Key Capabilities of Sentry Fine-Grained Authorization Specify security for SERVERS, DATABASES, TABLES & VIEWS Role-Based Authorization SELECT privilege on views & tables INSERT privilege on tables TRANSFORM privilege on servers ALL privilege on the server, databases, tables & views ALL privilege is needed to create/modify schema Multitenant Administration Separate policies for each database/schema Can be maintained by separate admins

- 62. 62 Challenges with Hadoop without Management Hadoop is more than a dozen services running across many machines • Hundreds of hardware components • Thousands of settings • Limitless permutations Complexity Hadoop is a system, not just a collection of parts • Everything is interrelated • Raw data about individual pieces is not enough • Must extract what’s important Context Managing Hadoop with multiple tools and manual process takes longer • Complicated, error-prone workflows • Longer issue resolution • Lack of consistent and repeatable processes Efficiency

- 63. 63 Cloudera Manager End-to-End Administration for Your Enterprise Data Hub Manage Easily deploy, configure & optimize clusters1 Monitor Maintain a central view of all activity2 Diagnose Easily identify and resolve issues3 Integrate Use Cloudera Manager with existing tools4

- 64. 64 One Tool For Everything Managing Complexity + DEPLOYMENT & CONFIGURATION MONITORING WORKFLOWS EVENTS & ALERTS LOG SEARCH DIAGNOSTICS REPORTING ACTIVITY MONITORING DO-IT-YOURSELF WITH CLOUDERA

- 65. 65 $7M $5M $3M $1.8M $852K $3.5M $1.7M $8.4M $5.4M SELF MANAGE CLOUDERA ENTERPRISE SELF MANAGE CLOUDERA ENTERPRISE SELF MANAGE CLOUDERA ENTERPRISE 25 NODES $948K SAVINGS 50 NODES $1.8M SAVINGS 100 NODES $3M SAVINGS Three-Year TCO Comparison Maximizing Efficiency

- 66. 66 Why Cloudera Manager End-to-end administration for the Enterprise Data Hub in a single tool Simple Manages Hadoop at a system level – Cloudera’s experience realized in software Intelligent Simplifies complex workflows and makes administrators more productive Efficient The only enterprise-grade Hadoop management application available Best-in-Class

- 67. 67 Why Backup and Disaster Recovery? Cloudera Enterprise is a Mission-Critical Part of the Data Management Infrastructure • Stores valuable data and runs important workloads • Business continuity is a MUST HAVE 1 Managing Business Continuity for Hadoop is Complex • Different services that store data – HDFS, HBase, Hive • Backup and disaster recovery is configured separately for each • Processes are manual 2

- 68. 68 BDR in Cloudera Enterprise Simplified Management of Backup & DR Policies Central Configuration • HDFS - Select files & directories to replicate • Hive - Select tables to replicate • Schedule replication jobs for optimal times HDFS HIVE NODES Monitoring & Alerting • Track progress of replication jobs • Get notified when data is out of sync Performance & Reliability • High performance replication using MapReduce • CDH-optimized version of DistCP SITE A SITE B HDFS HIVE NODES

- 69. 69 Benefits of BDR Reduce Complexity • Centrally manage backup and DR workflows • Simple setup via an intuitive user interface Maximize Efficiency • Simplify processes to meet or exceed SLAs and Recovery Time Objectives (RTOs) • Optimize system performance and network impact through scheduling Reduce Risk & Exposure • Eliminate error-prone manual processes • Get notified when issues occur • The only solution for metadata replication (Hive)

- 70. 70 One Tool For Everything Managing Complexity + DEPLOYMENT & CONFIGURATION MONITORING WORKFLOWS EVENTS & ALERTS LOG SEARCH DIAGNOSTICS REPORTING ACTIVITY MONITORING DO-IT-YOURSELF WITH CLOUDERA

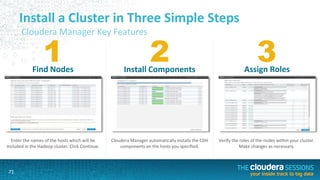

- 71. 71 1 2 3Find Nodes Install Components Assign Roles Enter the names of the hosts which will be included in the Hadoop cluster. Click Continue. Cloudera Manager automatically installs the CDH components on the hosts you specified. Verify the roles of the nodes within your cluster. Make changes as necessary. Install a Cluster in Three Simple Steps Cloudera Manager Key Features

- 72. 72 View Service Health and Performance 72 Cloudera Manager Key Features

- 73. 73 Monitor and Diagnose Cluster Workloads Cloudera Manager Key Features

- 74. 74 Rolling Upgrades Cloudera Manager Key Features

- 75. 75 Why You Need Cloudera Navigator Lots of Data Landing in Cloudera Enterprise • Huge quantities • Many different sources – structured and unstructured • Varying levels of sensitivity 1 Many Users Working with the Data • Administrators and compliance officers • Analysts and data scientists • Business users 2 Need to Effectively Control and Consume Data • Get visibility and control over the environment • Discover and explore data 3

- 76. 76 Cloudera Navigator Data Management Layer for Cloudera Enterprise Audit & Access Control Ensuring appropriate permissions and reporting on data access for compliance Discovery & Exploration Finding out what data is available and what it looks like Lineage Tracing data back to its original source Lifecycle Management Migration of data based on policies Enterprise Metadata Repository • Business metadata • Lineage metadata • Operational metadata Audit & Access Control Discovery & Exploration Lineage Lifecycle Mgmt. HDFS HBASE HIVE CLOUDERA NAVIGATOR CDH

- 77. 77 Cloudera Navigator Data Audit & Access Control Verify Permissions View which users and groups have access to files and directories Audit Configuration Configuration of audit tracking for HDFS, HBase and Hive Audit Dashboard Simple, queryable interface to view data access Information Export Export audit information for integration with SIEM tools HDFS HBASE HIVE IAM / LDAP SYSTEM 3rd PARTY SIEM / GRC SYSTEM ACCESS SERVICE AUDIT LOG SERVICE VIEW PERMISSIONS AUDIT LOG CONFIG AUDIT LOG COLLECTION CLOUDERA NAVIGATOR 1.0

- 78. 78 Benefits of Cloudera Navigator Control • Store sensitive data • Maintain full audit history • The first and only centralized audit tool for Hadoop Visibility • Verify access permissions to files and directories • Report on data access by user and type Integration • View permissions for LDAP/IAM users • Export audit data for integration with third-party SIEM tools

![36

Word Count Example

The cat sat on the mat

The aardvark sat on the sofa

The, 1

cat, 1

sat, 1

on, 1

the, 1

mat, 1

The, 1

aardvark, 1

sat, 1

on, 1

the, 1

sofa, 1

Mapper Input

Mapping

aardvark, 1

cat, 1

mat, 1

on, 2

sat, 2

sofa, 1

the, 4

aardvark, 1

cat, 1

mat, 1

on, 2

sat, 2

sofa, 1

the, 4

aardvark, 1

cat, 1

mat, 1

on [1, 1]

sat [1, 1]

sofa, 1

the [1, 1, 1, 1]

Shuffling Reducing

Final Result](https://image.slidesharecdn.com/clouderasessions-afternoon-gettingstarted1-140324163701-phpapp01/85/Getting-Started-with-Hadoop-36-320.jpg)