Computer vision handbook of computer vision and applications volume 1 - sensors and imaging

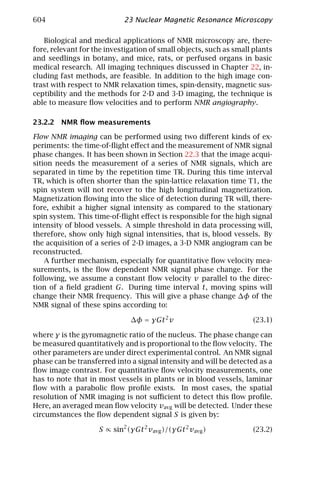

- 1. HANDBOOK OF COMPUTER VISION AND APPLICATIONS, Volume 1: Sensors and Imaging. Bernd Jähne, Horst Haußecker, Peter Geißler. ACADEMIC PRESS

- 2. Handbook of Computer Vision and Applications Volume 1 Sensors and Imaging

- 4. Handbook of Computer Vision and Applications, Volume 1: Sensors and Imaging. Editors: Bernd Jähne, Interdisciplinary Center for Scientific Computing, University of Heidelberg, Heidelberg, Germany, and Scripps Institution of Oceanography, University of California, San Diego; Horst Haußecker and Peter Geißler, Interdisciplinary Center for Scientific Computing, University of Heidelberg, Heidelberg, Germany. ACADEMIC PRESS: San Diego, London, Boston, New York, Sydney, Tokyo, Toronto

- 5. This book is printed on acid-free paper.

  Copyright © 1999 by Academic Press. All rights reserved.

  No part of this publication may be reproduced or transmitted in any form or by any means, electronic or mechanical, including photocopy, recording, or any information storage and retrieval system, without permission in writing from the publisher.

  The appearance of code at the bottom of the first page of a chapter in this book indicates the Publisher’s consent that copies of the chapter may be made for personal or internal use of specific clients. This consent is given on the condition, however, that the copier pay the stated per-copy fee through the Copyright Clearance Center, Inc. (222 Rosewood Drive, Danvers, Massachusetts 01923), for copying beyond that permitted by Sections 107 or 108 of the U.S. Copyright Law. This consent does not extend to other kinds of copying, such as copying for general distribution, for advertising or promotional purposes, for creating new collective works, or for resale. Copy fees for pre-1999 chapters are as shown on the title pages; if no fee code appears on the title page, the copy fee is the same as for current chapters. ISBN 0-12-379770-5/$30.00

  ACADEMIC PRESS, A Division of Harcourt Brace & Company, 525 B Street, Suite 1900, San Diego, CA 92101-4495, http://www.apnet.com

  ACADEMIC PRESS, 24-28 Oval Road, London NW1 7DX, UK, http://www.hbuk.co.uk/ap/

  Library of Congress Cataloging-In-Publication Data

  Handbook of computer vision and applications / edited by Bernd Jähne, Horst Haussecker, Peter Geissler. p. cm. Includes bibliographical references and indexes. Contents: v. 1. Sensors and imaging -- v. 2. Signal processing and pattern recognition -- v. 3. Systems and applications. ISBN 0–12–379770–5 (set). -- ISBN 0–12–379771-3 (v. 1) ISBN 0–12–379772–1 (v. 2). -- ISBN 0–12–379773-X (v. 3) 1. Computer vision -- Handbooks, manuals, etc. I. Jähne, Bernd 1953– . II. Haussecker, Horst, 1968– . III. Geissler, Peter, 1966– . TA1634.H36 1999 006.3'7 -- dc21 98–42541 CIP

  Printed in the United States of America 99 00 01 02 03 DS 9 8 7 6 5 4 3 2 1

- 6. Contents
  - Preface
  - Contributors
  - 1 Introduction (B. Jähne)
    - 1.1 Components of a vision system
    - 1.2 Imaging systems
  - I Illumination and Image Formation
  - 2 Radiation (H. Haußecker)
    - 2.1 Introduction
    - 2.2 Fundamentals of electromagnetic radiation
    - 2.3 Radiometric quantities
    - 2.4 Fundamental concepts of photometry
    - 2.5 Thermal emission of radiation
    - 2.6 Acoustic waves
    - 2.7 References
  - 3 Interaction of Radiation with Matter (H. Haußecker)
    - 3.1 Introduction
    - 3.2 Basic definitions and terminology
    - 3.3 Properties related to interfaces and surfaces
    - 3.4 Bulk-related properties of objects
    - 3.5 References
  - 4 Imaging Optics (P. Geißler)
    - 4.1 Introduction
    - 4.2 Basic concepts of geometric optics
    - 4.3 Lenses
    - 4.4 Optical properties of glasses and other materials
    - 4.5 Aberrations
    - 4.6 Optical image formation
    - 4.7 Wave and Fourier optics
    - 4.8 References

- 7. Contents
  - 5 Radiometry of Imaging (H. Haußecker)
    - 5.1 Introduction
    - 5.2 Observing surfaces
    - 5.3 Propagating radiance
    - 5.4 Radiance of imaging
    - 5.5 Detecting radiance
    - 5.6 Concluding summary
    - 5.7 References
  - 6 Illumination Sources and Techniques (H. Haußecker)
    - 6.1 Introduction
    - 6.2 Natural illumination
    - 6.3 Artificial illumination sources
    - 6.4 Illumination setups
    - 6.5 References
  - II Imaging Sensors
  - 7 Solid-State Image Sensing (P. Seitz)
    - 7.1 Introduction
    - 7.2 Fundamentals of solid-state photosensing
    - 7.3 Photocurrent processing
    - 7.4 Transportation of photosignals
    - 7.5 Electronic signal detection
    - 7.6 Architectures of image sensors
    - 7.7 Camera and video standards
    - 7.8 Semiconductor technology for image sensing
    - 7.9 Practical limitations of semiconductor photosensors
    - 7.10 The future of image sensing
    - 7.11 Conclusions
    - 7.12 References
  - 8 HDRC-Imagers for Natural Visual Perception (U. Seger, U. Apel, and B. Höfflinger)
    - 8.1 Introduction
    - 8.2 Log compression at the pixel site
    - 8.3 Random pixel access
    - 8.4 Optimized SNR by bandwidth control per pixel
    - 8.5 Data density in the log space
    - 8.6 Color constancy in the log space
    - 8.7 Development of functionality and spatial resolution
    - 8.8 References
  - 9 Image Sensors in TFA (Thin Film on ASIC) Technology (B. Schneider, P. Rieve, and M. Böhm)
    - 9.1 Introduction
    - 9.2 Thin-film detectors

- 8. Contents
  - 9 Image Sensors in TFA (Thin Film on ASIC) Technology (continued)
    - 9.3 TFA properties and design considerations
    - 9.4 TFA array prototypes
    - 9.5 TFA array concepts
    - 9.6 Conclusions
    - 9.7 References
  - 10 Poly SiGe Bolometers (S. Sedky and P. Fiorini)
    - 10.1 Overview
    - 10.2 Principle of operation of bolometers
    - 10.3 Microbolometer focal plane arrays
    - 10.4 Bolometer materials
    - 10.5 Poly SiGe bolometers
    - 10.6 Characterization of poly SiGe bolometers
    - 10.7 Conclusions
    - 10.8 References
  - 11 Hyperspectral and Color Imaging (B. Jähne)
    - 11.1 Spectral signatures
    - 11.2 Spectral sampling methods
    - 11.3 Human color vision
    - 11.4 References
  - III Two-Dimensional Imaging
  - 12 Dynamic Fluorescence Imaging (D. Uttenweiler and R. H. A. Fink)
    - 12.1 Introduction
    - 12.2 Fluorescence
    - 12.3 Fluorescent indicators
    - 12.4 Microscopic techniques
    - 12.5 Analysis of fluorescence images
    - 12.6 Summary
    - 12.7 References
  - 13 Electron Microscopic Image Acquisition (H. Stegmann, R. Wepf, and R. R. Schröder)
    - 13.1 Introduction
    - 13.2 Electron-specimen interactions
    - 13.3 Transmission electron microscopy (TEM)
    - 13.4 Scanning transmission electron microscopy (STEM)
    - 13.5 Analytical transmission electron microscopy
    - 13.6 Scanning electron microscopy (SEM)
    - 13.7 Preparation techniques
    - 13.8 Digital image processing of electron micrographs
    - 13.9 Imaging examples
    - 13.10 References

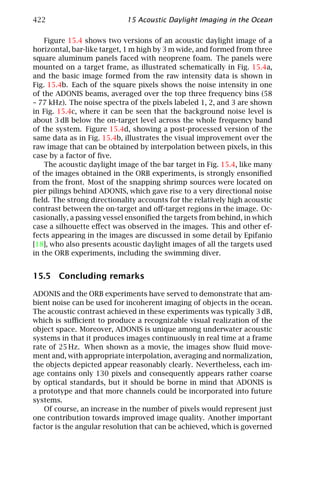

- 9. Contents
  - 14 Processing of Ultrasound Images in Medical Diagnosis (W. Albert and M. Pandit)
    - 14.1 Introduction
    - 14.2 Ultrasound imaging systems
    - 14.3 Processing the B-mode image
    - 14.4 Examples of image processing of B-mode images
    - 14.5 Conclusions and perspectives
    - 14.6 References
  - 15 Acoustic Daylight Imaging in the Ocean (M. J. Buckingham)
    - 15.1 Introduction
    - 15.2 The pilot experiment
    - 15.3 ADONIS
    - 15.4 Acoustic daylight images
    - 15.5 Concluding remarks
    - 15.6 References
  - 16 The Multisensorial Camera for Industrial Vision Applications (R. Massen)
    - 16.1 Image segmentation with little robustness
    - 16.2 Sensor fusion and multisensorial camera
    - 16.3 A feature vector with every pixel
    - 16.4 A real-time three-dimensional linescan camera
    - 16.5 A real-time linescan scatter camera
    - 16.6 The multisensorial color-height-scatter camera
    - 16.7 Compressing the multisensorial camera signals
    - 16.8 The one-chip multisensorial camera
    - 16.9 Conclusion
    - 16.10 References
  - IV Three-Dimensional Imaging
  - 17 Geometric Calibration of Digital Imaging Systems (R. Godding)
    - 17.1 Definitions
    - 17.2 Parameters influencing geometrical performance
    - 17.3 Model of image formation with the aid of optical systems
    - 17.4 Camera models
    - 17.5 Calibration and orientation techniques
    - 17.6 Photogrammetric applications
    - 17.7 References
  - 18 Principles of Three-Dimensional Imaging Techniques (R. Schwarte, H. Heinol, B. Buxbaum, T. Ringbeck, Z. Xu, and K. Hartmann)
    - 18.1 Introduction
    - 18.2 Basic principles
    - 18.3 Some criteria and specifications
    - 18.4 Triangulation
    - 18.5 Time-of-flight (TOF) of modulated light

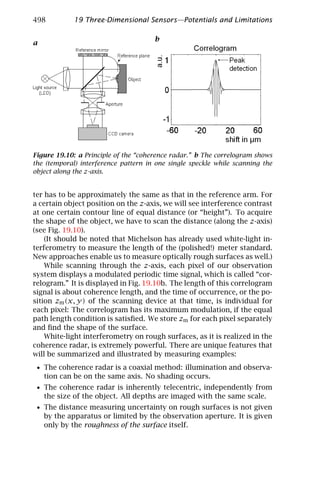

- 10. Contents
  - 18 Principles of Three-Dimensional Imaging Techniques (continued)
    - 18.6 Optical Interferometry (OF)
    - 18.7 Outlook
    - 18.8 References
  - 19 Three-Dimensional Sensors - Potentials and Limitations (G. Häusler)
    - 19.1 Introduction
    - 19.2 Why three-dimensional sensors?
    - 19.3 Some important questions about three-dimensional sensing
    - 19.4 Triangulation on optically rough surfaces
    - 19.5 White-light interferometry on rough surfaces
    - 19.6 Summary
    - 19.7 Conclusion
    - 19.8 References
  - 20 High-Performance Surface Measurement (R. W. Malz)
    - 20.1 Introduction
    - 20.2 Close-range photogrammetry
    - 20.3 Sequential light processing and information theory
    - 20.4 Advanced self-calibration of three-dimensional sensors
    - 20.5 Hybrid navigation of three-dimensional sensors
    - 20.6 Mobile measuring system “Ganymed”
    - 20.7 Conclusions
    - 20.8 References
  - 21 Three-Dimensional Light Microscopy (E. H. K. Stelzer)
    - 21.1 Three-dimensional microscopy
    - 21.2 Telecentricity
    - 21.3 Theory of three-dimensional imaging
    - 21.4 Confocal microscopy
    - 21.5 Index mismatching effects
    - 21.6 Developments in confocal microscopy
    - 21.7 Resolution versus distance
    - 21.8 Perspectives of three-dimensional light microscope
    - 21.9 References
  - 22 Magnetic Resonance Imaging in Medicine (W. G. Schreiber and G. Brix)
    - 22.1 Introduction
    - 22.2 Basic magnetic resonance physics
    - 22.3 Image acquisition and reconstruction
    - 22.4 Image contrast
    - 22.5 Fast imaging methods
    - 22.6 Overview of quantitative applications
    - 22.7 References

- 11. Contents
  - 23 Nuclear Magnetic Resonance Microscopy (A. Haase, J. Ruff, and M. Rokitta)
    - 23.1 Introduction
    - 23.2 Methodology
    - 23.3 Applications to plant studies
    - 23.4 Applications to animal studies
    - 23.5 Discussion
    - 23.6 References
  - Index

- 12. Preface

  What this handbook is about

  This handbook offers a fresh approach to computer vision. The whole vision process from image formation to measuring, recognition, or reacting is regarded as an integral process. Computer vision is understood as the host of techniques to acquire, process, analyze, and understand complex higher-dimensional data from our environment for scientific and technical exploration.

  In this sense the handbook takes into account the interdisciplinary nature of computer vision with its links to virtually all natural sciences and attempts to bridge two important gaps. The first is between modern physical sciences and the many novel techniques to acquire images. The second is between basic research and applications. When a reader with a background in one of the fields related to computer vision feels he has learned something from one of the many other facets of computer vision, the handbook will have fulfilled its purpose.

  The handbook comprises three volumes. The first volume, Sensors and Imaging, covers image formation and acquisition. The second volume, Signal Processing and Pattern Recognition, focuses on processing of the spatial and spatiotemporal signal acquired by imaging sensors. The third volume, Systems and Applications, describes how computer vision is integrated into systems and applications.

  Prerequisites

  It is assumed that the reader is familiar with elementary mathematical concepts commonly used in computer vision and in many other areas of natural sciences and technical disciplines. This includes the basics of set theory, matrix algebra, differential and integral equations, complex numbers, Fourier transform, probability, random variables, and graphing. Wherever possible, mathematical topics are described intuitively. In this respect it is very helpful that complex mathematical relations can often be visualized intuitively by images. For a more formal treatment of the corresponding subject including proofs, suitable references are given.

  How to use this handbook

  The handbook has been designed to cover the different needs of its readership. First, it is suitable for sequential reading. In this way the reader gets an up-to-date account of the state of computer vision. It is presented in a way that makes it accessible for readers with different backgrounds. Second, the reader can look up specific topics of interest. The individual chapters are written in a self-consistent way with extensive cross-referencing to other chapters of the handbook and external references. The CD that accompanies each volume of the handbook contains the complete text of the handbook in the Adobe Acrobat portable document file format (PDF). This format can be read on all major platforms. The free Acrobat Reader version 3.01 for all major computing platforms is included on the CDs. The texts are hyperlinked in multiple ways. Thus the reader can collect the information of interest with ease. Third, the reader can delve more deeply into a subject with the material on the CDs. They contain additional reference material, interactive software components, code examples, image material, and references to sources on the Internet. For more details see the readme file on the CDs.

  Acknowledgments

  Writing a handbook on computer vision with this breadth of topics is a major undertaking that can succeed only in a coordinated effort that involves many co-workers. Thus the editors would like first to thank all contributors who were willing to participate in this effort. Their cooperation with the constrained time schedule made it possible to publish the three-volume handbook in such a short period following the call for contributions in December 1997. The editors are deeply grateful for the dedicated and professional work of the staff at AEON Verlag & Studio who did most of the editorial work. We also express our sincere thanks to Academic Press for the opportunity to write this handbook and for all professional advice.

  Last but not least, we encourage the reader to send us any hints on errors, omissions, typing errors, or any other shortcomings of the handbook. Current information about the handbook can be found at the editors' homepage http://klimt.iwr.uni-heidelberg.de.

  Heidelberg, Germany and La Jolla, California, December 1998

  Bernd Jähne, Horst Haußecker, Peter Geißler

- 14. Contributors

  Werner F. Albert studied medicine at the Universities of Saarland and Cologne. He obtained the degree of Doctor of Medicine in 1970 and completed the Habilitation in 1981 at the University of Saarland. Since 1983 he has been Chief Physician of the Department of Internal Medicine of the Westpfalz-Klinikum Kaiserslautern and since 1991 its Medical Director. He has been an Adjunct Professor at the University of Saarland at Homburg since 1986. His current research interests include transplantation medicine and gastroenterology.

  Prof. Dr. Werner F. Albert, Medizinische Klinik III, Westpfalz-Klinikum, D-67653 Kaiserslautern, Germany

  Uwe Apel received his diploma degree in Physics at the University of Gießen in 1984. From 1984 to 1987 he was engaged as a process engineer at the power semiconductor facility of Robert Bosch GmbH at Reutlingen. In 1987 he moved to the Institute for Microelectronics in Stuttgart. In 1994 he joined the image sensor design team. He has made major contributions to several pending patents in circuit design and camera system related topics.

  Uwe Apel, Institute for Microelectronics, Stuttgart, Allmandring 30a, D-70569 Stuttgart, Germany, apel@www.ims-chips.de

  Markus Böhm received the Dipl.-Ing. and the Dr.-Ing. degrees in electrical engineering from the Technical University, Berlin, Germany, in 1979 and 1983, respectively. In 1984/85, he was a visiting scientist with the Department of Electrical Engineering of the University of Delaware. In 1985, he joined Chronar Corporation in Princeton, New Jersey. Since 1989 he has been a Professor at the University of Siegen, Germany, where he heads the Institute for Semiconductor Electronics. His research interests focus on thin-film technology, novel imaging devices and photovoltaics. He is a co-founder of Silicon Vision GmbH.

  Prof. Markus Böhm, Institut für Halbleiterelektronik (IHE), Universität-GH Siegen, Hölderlinstr. 3, D-57068 Siegen, Germany, boehm@teb.et-inf.uni-siegen.de, www.uni-siegen.de/~ihe/

- 15. Michael J. Buckingham is Professor of Ocean Acoustics at Scripps Institution of Oceanography. His research interests include imaging in the ocean, marine sediments, and sea-surface processes. He is a Fellow of the Acoustical Society of America, the Institute of Acoustics, the Institute of Electrical Engineers, and the Explorers Club, and a member of the New York Academy of Sciences. In 1982 he received the A. B. Wood Medal from the IOA and he is the recipient of many other awards.

  Prof. Michael J. Buckingham, Marine Physical Laboratory, Scripps Institution of Oceanography, University of California, San Diego, 9500 Gilman Drive, La Jolla, CA 92093-0213, USA, mjb@mpl.ucsd.edu

  Gunnar Brix studied physics in Karlsruhe and Heidelberg. In 1985 he received his diploma degree from the University of Karlsruhe and in 1989 a doctoral degree from the University of Heidelberg. From 1994 to 1998 he was assistant professor for medical physics at the University of Heidelberg where he headed the department of biophysics and medical radiation physics at the German Cancer Research Center in Heidelberg. His current research interests include the development of new magnetic resonance imaging (MRI) and positron emission tomography (PET) data acquisition techniques as well as the analysis of kinetic data within the framework of kinetic modeling.

  Priv.-Doz. Dr. Gunnar Brix, Abteilung Medizinische Strahlenhygiene und nichtionisierende Strahlung, Bundesamt für Strahlenschutz, Postfach 10 01 49, D-38201 Salzgitter, Germany

  Paolo Fiorini took his degree in Solid State Physics at the University of Rome in 1977; his thesis was on excitons in silicon. He has been active in the field of electrical and optical properties of semiconductors for many years, working at the University of Rome, Strasbourg (France), IBM Research Center in Yorktown Heights, NY (USA) and at the Interuniversity Microelectronic Center (IMEC) in Leuven (Belgium). At present, he is associate professor, Physics Department of the Third University of Rome.

  Prof. Paolo Fiorini, Dept. of Physics, 3rd University of Rome, Via della Vasca Navale 86, I-00156 Rome, Italy

- 16. Rainer H.A. Fink is a professor at the II. Institute of Physiology at the University of Heidelberg. His research interests comprise calcium regulation, activation of contractile force, membrane electrophysiology, and laser applications in the biophysics of muscular contraction. He held research and teaching positions at the University of Washington, Seattle, WA, U.S., La Trobe University, Melbourne, and the University of Adelaide, Australia, before taking up his professorship in Heidelberg in 1990. He received his PhD in 1979 at the University of Bochum, Germany.

  Prof. Dr. Rainer H.A. Fink, II. Physiologisches Institut, Universität Heidelberg, Im Neuenheimer Feld 326, D-69120 Heidelberg, Germany, fink@novsrv1.pio1.uni-heidelberg.de

  Peter Geißler studied physics in Heidelberg. He received his diploma and doctoral degree from Heidelberg University in 1994 and 1998, respectively. His research interests include computer vision, especially depth-from-focus, adaptive filtering, and flow visualization as well as the application of image processing in physical sciences and oceanography.

  Dr. Peter Geißler, Forschungsgruppe Bildverarbeitung, IWR, Universität Heidelberg, Im Neuenheimer Feld 368, D-69120 Heidelberg, Germany, Peter.Geissler@iwr.uni-heidelberg.de, http://klimt.iwr.uni-heidelberg.de

  Robert Godding received his diploma in geodesy from the University of Bonn in 1987. From 1987 to 1989 he worked as research scientist at the Institute for Photogrammetry at the University of Bonn and from 1989 to 1994 at the Institute for Photogrammetry and Image Processing at the University of Braunschweig in the field of close-range photogrammetry. From 1994 to 1998 he was with Rollei Fototechnic in Braunschweig, first responsible for research and development of close-range photogrammetry systems, later as head of the RolleiMetric Department. Since December 1998 he has been with AICON GmbH in Braunschweig. His main interests are close-range digital photogrammetry in industrial applications and the use of high-resolution digital cameras for optical measurement techniques.

  Robert Godding, AICON GmbH, Celler Straße 32, D-38114 Braunschweig, Germany, robert.godding@aicon.de, http://www.aicon.de

- 17. Hermann Gröning graduated in 1996 from the University of Heidelberg with a master's degree in physics and is now pursuing his PhD at the Interdisciplinary Center for Scientific Computing. He is concerned mainly with radiometric and geometric camera calibration.

  Hermann Gröning, Forschungsgruppe Bildverarbeitung, IWR, Universität Heidelberg, Im Neuenheimer Feld 368, D-69120 Heidelberg, Germany, Hermann.Groening@iwr.uni-heidelberg.de

  Axel Haase studied physics at the universities of Erlangen and Gießen. He received his diploma from the University of Gießen in 1977 and a doctoral degree in 1980. During his doctoral work and later in postdoctoral work, he worked at the Max-Planck-Institut für biophysikalische Chemie in Göttingen. In 1981 he spent one postdoctoral year at the Biochemistry Department of the University of Oxford, UK, with Prof. G. K. Radda. He worked as a scientist at the Max-Planck-Institut für biophysikalische Chemie until 1989. During this period he invented fast NMR imaging (FLASH) and other NMR techniques (CHESS imaging, STEAM imaging). He received his habilitation from the University of Frankfurt in 1987. Since 1989, he has held the chair of biophysics at the University of Würzburg.

  Prof. Dr. Axel Haase, Physikalisches Institut, Universität Würzburg, Am Hubland, D-97074 Würzburg, Germany, haase@physik.uni-wuerzburg.de

  Gerd Häusler is adjunct professor, University of Erlangen, Chair for Optics, and director of the Optical Metrology Group. He received his diploma in 1970 and a doctoral degree in 1974 from the Optical Institute, Technical University Berlin. In 1974 he moved to the Chair for Applied Optics (later Chair for Optics), University of Erlangen. There he received his habilitation in 1982. As a doctoral fellow he worked with IBM (Sindelfingen), ENST Telecom (Paris), and RCA (Zürich). At the University of Munich and the RIKEN Institute in Tokyo he worked on optical and electronic image processing and nonlinear optical feedback systems. His current research interests include the investigation of the physical limits of range sensing and the construction of sensors that work at these limits and cover the nanometer to meter range, with applications in industry and medicine.

  Prof. Dr. Gerd Häusler, Chair for Optics, Universität Erlangen-Nürnberg, Staudtstraße 7/B2, D-91056 Erlangen, Germany, haeusler@physik.uni-erlangen.de

- 18. Contributors xvii Horst Haußecker studied physics in Heidelberg. He re- ceived his diploma in physics and his doctoral degree from Heidelberg University in 1994 and 1996, respec- tively. He was visiting scientist at the Scripps Institution of Oceanography in 1994. Currently he is conducting research in the image processing research group at the Interdisciplinary Center for Scientific Computing (IWR), where he also lectures on optical flow computation. His research interests include computer vision, especially image sequence analysis, infrared thermography, and fuzzy-image processing, as well as the application of im- age processing in physical sciences and oceanography. Dr. Horst Haußecker, Forschungsgruppe Bildverarbeitung, IWR Universität Heidelberg, Im Neuenheimer Feld 368, D-69120 Heidelberg Horst.Haussecker@iwr.uni-heidelberg.de http://klimt.iwr.uni-heidelberg.de Bernd Höfflinger received his Diploma in Physics in 1964 and his PhD in 1967. He was a member of the scientific staff of the Siemens Research Laboratory in Munich from 1964-1967. From 1967-1969 he was Assistant Profes- sor, School of Electrical Engineering, Cornell University, Ithaca, NY, USA. He was manager of the MOS Integrated Circuits Division of the Siemens Components Group in Munich from 1970-1972. He then founded the Depart- ment of Electrical Engineering at the University of Dort- mund. In 1981 he became Head of the Department of Electrical Engineering and Co-Director of the Microelec- tronics and Information Sciences Center at the University of Minnesota. Since September 1985 he has been Director and Chairman of the Board of the Institute for Microelectronics, Stuttgart. Prof. Dr. Bernd Höfflinger, Institute for Microelectronics Stuttgart (IMS) Allmandring 30a, D-70569 Stuttgart, Germany E-mail: hoefflinger@www.ims-chips.de Bernd Jähne studied physics in Saarbrücken and Hei- delberg. 
He received his diploma, doctoral degree, and habilitation degree from Heidelberg University in 1977, 1980, and 1985, respectively, and a habilitation degree in applied computer science from the University of Hamburg-Harburg in 1992. Since 1988 he has been a Marine Research Physicist at Scripps Institution of Oceanography, University of California, and, since 1994, he has been professor of physics at the Interdisciplinary Center for Scientific Computing. He leads the research group on image processing. His research interests include computer vision, especially filter design and image sequence analysis, the application of image processing techniques in science and industry, and small-scale air-sea interaction processes.

- 19. Prof. Dr. Bernd Jähne, Forschungsgruppe Bildverarbeitung, IWR, Universität Heidelberg, Im Neuenheimer Feld 368, D-69120 Heidelberg, Bernd.Jaehne@iwr.uni-heidelberg.de, http://klimt.iwr.uni-heidelberg.de Reinhard Malz studied communication and computer science in Esslingen and electrical engineering in Stuttgart. He received diploma degrees in 1978 and 1984 and the doctoral degree from the University of Stuttgart in 1992. His research interests include analog and digital electronics, semiconductor physics, optics, pattern recognition, and active optical information processing for inspection and measurement. Currently he is a researcher at Daimler-Chrysler AG, where he develops 3-D measurement systems for reverse engineering and quality control. Dr. Reinhard Malz, Daimler-Chrysler AG, Wilhelm-Runge-Str. 11, D-89081 Ulm, Germany, Reinhard.Malz@t-online.de Robert Massen studied electronic communications at the University of Technology of Aachen, Germany. His PhD thesis covers stochastic computing, an early non-von Neumann computer architecture with random data coding and massively parallel organization. In 1974, he became professor in the Department of Computer Science at the Fachhochschule (University of Applied Sciences) Konstanz. He has been active in industrial image processing since 1984, first as director of the for-profit Steinbeis Transfer Center for Image Processing. In 1992, he founded the MASSEN machine vision systems GmbH, Konstanz, through a management buy-out. The company is a major German supplier of advanced dedicated color vision systems for on-line monitoring of surfaces and for real-time sortation. Prof. Dr. Ing. Robert Massen, MASSEN machine vision systems GmbH, Lohnerhofstrasse 2, D-78467 Konstanz, Germany, Robert.Massen@t-online.de Madhukar Pandit studied electrical engineering in Bangalore and Karlsruhe. He obtained the Dr.-Ing. degree in Control Systems at the Technische Hochschule Karlsruhe and the Habilitation at the Kaiserslautern University. He worked at the National Aeronautical Laboratory, Bangalore, and at Brown Boveri and Cie in Mannheim. Since 1978, he has been professor of Control Systems and Signal Theory at the Kaiserslautern University. His group is active mainly in the areas of process control and image processing applied to medical imaging and quality control. Prof. Dr.-Ing. Madhukar Pandit, Lehrstuhl für Regelungstechnik und Signaltheorie, Fachbereich Elektrotechnik, Universität Kaiserslautern, Postfach 3049, D-67653 Kaiserslautern, Germany, Pandit@e-technik.uni-kl.de, http://www.uni-kl.de/AG-Pandit/

- 20. Peter Rieve received the Dipl.-Ing. degree in electrical engineering from the University of Siegen, Germany, in 1994. From 1994 to 1997 he was a research engineer at the Institute for Semiconductor Electronics, University of Siegen. He worked in the field of sensor technologies and focused on the development and optimization of amorphous silicon based black and white and color detectors for applications in image sensor systems in TFA technology. P. Rieve is now with Silicon Vision GmbH, Siegen. Peter Rieve, Silicon Vision GmbH, Birlenbacher Str. 18, D-57078 Siegen, Germany, rieve@siliconvision.de, http://www.siliconvision.de Markus Rokitta studied physics at the University of Würzburg. He received his diploma from the University of Würzburg in 1994. Since 1996 he has been working for his doctoral degree in the area of NMR microscopy applied to plant systems. He is a member of the Graduiertenkolleg “Magnetische Kernresonanz in vivo und in vitro für die biologische und medizinische Grundlagenforschung.” Dipl. Phys. Markus Rokitta, Physikalisches Institut, Universität Würzburg, Am Hubland, D-97074 Würzburg, Germany Jan Ruff studied physics at the University of Würzburg. He received his diploma from the University of Würzburg in 1995. Since 1996 he has been working for his doctoral degree in the area of NMR microscopy applied to animal studies. He is a member of the Graduiertenkolleg “Magnetische Kernresonanz in vivo und in vitro für die biologische und medizinische Grundlagenforschung.” Dipl. Phys. Jan Ruff, Physikalisches Institut, Universität Würzburg, Am Hubland, D-97074 Würzburg, Germany

- 21. Bernd Schneider received the Dipl.-Ing. degree in electrical engineering from the University of Siegen, Germany, in 1995. In the same year, he joined the Institute for Semiconductor Electronics at the University of Siegen. He works in the field of sensor technologies and focuses on the design, fabrication and characterization of ASICs for TFA image sensors. He is currently engaged in the development of new types of TFA sensor systems. Bernd Schneider, Institut für Halbleiterelektronik (IHE), Universität-GH Siegen, Hölderlinstr. 3, D-57068 Siegen, Germany, bernd s@teb.et-inf.uni-siegen.de, http://www.uni-siegen.de/~ihe/ Wolfgang Schreiber studied physics in Munich. He received his diploma from the University of Munich in 1990 and in 1994 a doctoral degree from the University of Heidelberg. From 1994 to 1997 he was a postdoctoral fellow at the department of biophysics and medical radiation physics at the German Cancer Research Center in Heidelberg. Since 1997 he has been head of the research group MR physics at the University of Mainz. His current research interests include the development of techniques for noninvasive assessment of physiology and pathophysiology by magnetic resonance imaging, pulse sequence development for ultrafast proton- and non-proton MRI in the brain, heart, and lung, and MRI data postprocessing techniques. Dr. Wolfgang Schreiber, Department of Radiology, Johannes Gutenberg-University, Langenbeckstr. 1, D-55131 Mainz, Germany, Wolfgang.Schreiber@radiologie.klinik.uni-mainz.de Rasmus R. Schröder studied physics and biology at the Ruprecht-Karls-Universität Heidelberg, Germany, and at Trinity College Dublin, Ireland. After receiving his PhD at Heidelberg University in theoretical elementary particle physics, he took up his biological interests by continuing his work in the department of Kenneth C. Holmes at the Max-Planck-Institut for medical research in Heidelberg.
Here he started his work in electron microscopy and image processing. Today he is group leader at the Max-Planck-Institut doing research on the image formation in energy filtered electron microscopes, quantitative image processing, and investigating molecular structures of the muscle proteins actin and myosin in their force-producing interactions. Dr. Rasmus R. Schröder, MPI für medizinische Forschung, Jahnstr. 29, D-69120 Heidelberg, Germany, rasmus@mpimf-heidelberg.mpg.de

- 22. Rudolf Schwarte studied electrical engineering at the RWTH Aachen. He received his diploma and doctoral degree from RWTH Aachen in 1965 and 1972. From 1973 to 1978 he worked as the head research engineer at the Institute for Technical Electronics in Aachen, founded the company Sympuls GmbH in Aachen, followed by three years of leading research departments in several companies in Germany. Since 1981 he has been professor at the University of Siegen and head of the Institute for Data Processing (INV). He is the initiator and chair of the Center for Sensory Systems (ZESS) in Siegen. In 1995 he received the NRW innovation prize. He holds several patents in the fields of optical measurement and communication systems. In 1997 he founded S-TEC GmbH in Siegen. His main research interests include laser ranging systems, optical sensory systems, optical data communication, and digital signal processing. Prof. Dr. Rudolf Schwarte, Institut für Nachrichtenverarbeitung (INV), Universität-GH Siegen, Hölderlinstr. 3, D-57068 Siegen, Germany, schwarte@nv.et-inf.uni-siegen.de, http://www.nv.et-inf.uni-siegen.de/inv/inv.html Ulrich Seger received his diploma in electrical engineering from the Fachhochschule Konstanz for his work on digital image preprocessing for optical character recognition in 1987. As design engineer in the R&D department of Computer Gesellschaft Konstanz mbH he was engaged in the development of a multiprocessor character recognition system. In 1989 he joined the Mikrosystems Division of the IMS, where he worked on chip and system design of CMOS microsystems involving optical sensors and analog signal processors and started the development of the first HDRC sensors. He is co-inventor of the basic HDRC principle and made major contributions to several pending patents in circuit design and camera system related topics.
Ulrich Seger, Institute for Microelectronics Stuttgart, Allmandring 30a, D-70569 Stuttgart, Germany, seger@www.ims-chips.de Peter Seitz received his PhD degree in physics in 1984 from the Swiss Federal Institute of Technology (ETH) in Zürich, Switzerland. From 1984 to 1987 he was a staff member of the RCA research laboratories in Princeton, New Jersey and Zürich, Switzerland. Afterwards he transferred to the Swiss Paul Scherrer Institute. Since 1997 he has been working for the Swiss Center for Electronics and Microtechnology (CSEM) in Neuchatel and Zürich, heading the Image Sensing Section in the Research division. Peter Seitz is the author of 90 publications in the fields of applied optics, image sensing, machine vision, and optical microsystems engineering, and he holds 8 patents.

- 23. Prof. Dr. Peter Seitz, Centre Suisse d’Electronique et de Microtechnique SA (CSEM), Badenerstrasse 569, CH-8048 Zurich, Switzerland, peter.seitz@csem.ch, http://www.csem.ch/ Sherif Sedky graduated in 1992 from the department of Electrical and Communication Engineering of Cairo University. In 1995 he obtained a master's degree in Engineering Physics at the same university. In 1998 he was granted a PhD degree in microelectronics and material science from the Catholic University of Leuven (Belgium). He is active in the field of Sensors and Actuators. He is now a member of the microsystem technology group of the Interuniversity Microelectronics Center (IMEC) in Leuven (Belgium). He is also an assistant professor at the department of Engineering Physics, Faculty of Engineering, Cairo University. Dr. Sherif Sedky, Department of Engineering, Mathematics and Physics, Faculty of Engineering, Cairo University, Giza, Egypt, sedky@imec.be E. H. K. Stelzer studied physics in Frankfurt am Main and in Heidelberg, Germany. During his Diploma thesis at the Max-Planck-Institut für Biophysik he worked on the physical chemistry of phospholipid vesicles, which he characterized by photon correlation spectroscopy. Since 1983 he has worked at the European Molecular Biology Laboratory (EMBL). He has contributed extensively to the development of confocal fluorescence microscopy and its application in life sciences. His group works on the development and application of high-resolution techniques in light microscopy, video microscopy, confocal microscopy, optical tweezers, single particle analysis, and the documentation of relevant parameters with biological data. Prof. Dr. E. H. K. Stelzer, Light Microscopy Group, European Molecular Biology Laboratory (EMBL), Postfach 10 22 09, D-69120 Heidelberg, Germany, stelzer@EMBL-Heidelberg.de Heiko Stegmann studied physics at the Ruprecht-Karls-Universität Heidelberg, Germany.
He received his diploma degree in 1996 and his PhD degree in 1998 from that university, working on analytical electron microscopy techniques for the investigation of muscle biophysics. At present he works on 3-D reconstruction of motor molecules by cryo-electron microscopy at the Max-Planck-Institut for medical research in Heidelberg. Heiko Stegmann, MPI für medizinische Forschung, Jahnstr. 29, D-69120 Heidelberg, Germany, stegmann@mpimf-heidelberg.de

- 24. Dietmar Uttenweiler is a research fellow at the II. Institute of Physiology at the University of Heidelberg in the group of Prof. Dr. R. H. A. Fink. He studied physics in Freiburg and Heidelberg. In 1990–1991 he worked at the University of Sussex, UK, supported by an Erasmus scholarship. He graduated as Diplom-Physiker in 1994 and received his doctoral degree (Dr. rer. nat.) in physics in 1997 from the University of Heidelberg. His research interests in biophysics comprise fluorescence imaging techniques, mathematical modeling, and digital image processing, in particular for the study of motor proteins and the calcium regulation of force generation in muscle. Dr. Dietmar Uttenweiler, II. Physiologisches Institut, University of Heidelberg, Im Neuenheimer Feld 326, D-69120 Heidelberg, dietmar.uttenweiler@urz.uni-heidelberg.de Roger Wepf studied biology at the ETH, Swiss Federal Institute of Technology in Zurich, Switzerland, received his PhD at the Institute of Cell Biology, Swiss Federal Institute of Technology on surface imaging with high resolution coating and worked as a postdoctoral fellow at the same institute on imaging of actin binding proteins, and at the EMBL, Heidelberg, Germany on new preparation techniques for high-resolution LVSEM in the group of Max Haider. He then became a staff member in the junior-group Cryopreparation for EM and Cryo-SEM in the Cell Biology dept. at EMBL. At present he is the group leader of Electron Microscopy in the Central Analytical Dept. at Beiersdorf AG, Hamburg, Germany. Dr. Roger Wepf, Beiersdorf AG, Unnastr. 48, D-20245 Hamburg, Germany, wepf-r.ocp-65@bdfde86mhs.compuserve.com

- 26. 1 Introduction Bernd Jähne, Interdisziplinäres Zentrum für Wissenschaftliches Rechnen (IWR), Universität Heidelberg, Germany. Contents: 1.1 Components of a vision system; 1.2 Imaging systems. 1.1 Components of a vision system Computer vision is a complex subject. It is therefore helpful to divide it into its various components or function modules. On this level, it is also much easier to compare a technical system with a biological system. In this sense, the basic common functionality of biological and machine imaging includes the following components: Radiation source. If no radiation is emitted from the scene or the object of interest, nothing can be observed or processed. Thus appropriate illumination is necessary for objects that are themselves not radiant. Camera. The “camera” collects the radiation received from the object in such a way that the radiation’s origins can be pinpointed. In the simplest case this is just an optical lens. But it could also be a completely different system, for example, an imaging optical spectrometer, an x-ray tomograph, or a microwave dish. Sensor. The sensor converts the received radiative flux density into a suitable signal for further processing. For an imaging system normally a 2-D array of sensors is required to capture the spatial distribution of the radiation. With an appropriate scanning system in some cases a single sensor or a row of sensors could be sufficient. Processing unit. It processes the incoming, generally higher-dimensional data, extracting suitable features that can be used to measure object properties and categorize them into classes. Another important component is a memory system to collect and store knowledge about the scene, including mechanisms to delete unimportant things.
- 27. [Figure 1.1: Chain of steps linking an object property to the signal measured by an imaging system. The chain runs from the object property s(x) via radiation interaction to the radiance l(x), via the imaging system to the irradiance E(x), via the photosensor to the electric signal g(x), and via ADC sampling to the digital image $G_{mn}$.] Actors. Actors react to the result of the visual observation. They become an integral part of the vision system when the vision system is actively responding to the observation by, for example, tracking an object of interest or by vision-guided navigation (active vision, perception action cycle). 1.2 Imaging systems Volume 1 of this handbook deals with imaging systems. It covers all processes involved in the formation of an image from objects and the sensors that convert radiation into electric signals. Generally the goal is to obtain a signal from an object in such a form that we know where it is (geometry) and what it is or what properties it has. The type of answer we receive to these two implicit questions depends on the purpose of the vision system. The answer could be qualitative or quantitative. For some applications it could be sufficient to obtain a qualitative answer like “there is a car on the left coming towards you.” The “what” and “where” questions can thus cover the entire range from “there is something,” a specification of the object in the form of a class, to a detailed quantitative description of various properties of the objects of interest. The relation that links the object property to the signal measured by an imaging system is a complex chain of processes (Fig. 1.1). Interaction of the radiation with the object (possibly using an appropriate illumination system) causes the object to emit radiation. A portion (usually only a very small part) of the emitted radiative energy is collected by the optical system and perceived as an irradiance (radiative energy/area).
A sensor (or rather an array of sensors) converts the received radiation into an electrical signal that is subsequently sampled and digitized to form a digital image as an array of digital numbers. Only direct imaging systems provide a direct point-to-point correspondence between points of the objects in the 3-D world and at the image plane. Indirect imaging systems also give a spatially distributed irradiance but with no such one-to-one relation. Generation of an image requires reconstruction of the object from the perceived irradiance. Examples of such imaging techniques include radar imaging, various techniques for spectral imaging, acoustic imaging, tomographic imaging, and magnetic resonance imaging (Chapters 22 and 23).
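The last two links of the chain in Fig. 1.1, from irradiance to a digital image, can be illustrated with a minimal sketch. It assumes an ideal sensor with a linear response, ideal point sampling on a regular grid, and an 8-bit converter; none of these choices comes from the text, and the names `sample_irradiance`, `digitize`, `pitch`, and `full_scale` are hypothetical.

```python
def sample_irradiance(irradiance_fn, rows, cols, pitch):
    """Sample a continuous irradiance E(x, y) on a regular rows x cols grid.

    `pitch` is the assumed spacing between neighboring sensor elements.
    """
    return [[irradiance_fn(n * pitch, m * pitch) for n in range(cols)]
            for m in range(rows)]


def digitize(irradiance, full_scale, bits=8):
    """Map sampled irradiance values to integer gray values G_mn.

    Assumes a linear sensor: the electric signal g is proportional to the
    irradiance and saturates at `full_scale`.
    """
    levels = 2 ** bits - 1
    image = []
    for row in irradiance:
        out_row = []
        for e in row:
            g = min(max(e / full_scale, 0.0), 1.0)  # normalized, clipped signal
            out_row.append(int(round(g * levels)))  # quantization step
        image.append(out_row)
    return image


if __name__ == "__main__":
    # Hypothetical scene: irradiance rising linearly along x.
    samples = sample_irradiance(lambda x, y: x, rows=2, cols=4, pitch=0.25)
    print(digitize(samples, full_scale=1.0))
```

Real sensors deviate from this idealization through noise, nonlinearity, and the finite area of each sensor element, topics that belong to the sensor chapters of this volume.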

- 28. The first part of this volume covers the basics of image formation (Chapters 2–6). The fundamentals of electromagnetic radiation, radiometry and photometry, and of thermal radiation are discussed in Chapter 2. Chapter 4 discusses basic knowledge of optics and optical systems that is helpful for computer vision. Chapter 3 deals with the basic physical laws that determine the relation between object properties and the emitted radiation, while Chapter 5 deals with the basic relations between the emitted radiation (radiance) and the received radiation at the sensor plane (irradiance). Chapter 6 covers two practical topics. First, it introduces various types of illumination sources that are available to illuminate a scene. Second, it describes the basic possibilities for illumination setups and their relation to the imaged object properties. The second part of this volume covers imaging sensors. It starts with a survey of solid state imaging (Chapter 7) and then details some important recent developments including logarithmic complementary metal-oxide-semiconductor (CMOS) sensors for natural vision perception (Chapter 8), a novel family of vision sensors built as thin films on top of application specific circuits (Chapter 9), and a chapter on modern developments with uncooled infrared imaging sensors (Chapter 10). The second part concludes with a chapter on the principles of color and spectral imaging (Chapter 11). The third and fourth parts present in detail various 2-D (Chapters 12–16) and 3-D (Chapters 17–23) imaging systems, respectively. The part on 2-D imaging discusses fluorescence imaging (Chapter 12), electron microscopic imaging (Chapter 13), acoustic imaging (Chapters 14 and 15), and multisensorial cameras for industrial vision applications (Chapter 16). Techniques for 3-D imaging have experienced enormous progress in the last several years.
While traditional computer vision is only concerned with classical paradigms such as structure from stereo, shape from shading, depth from focus, or structure from motion (see Volume 2), recent progress in sensor technology has advanced a host of techniques for 3-D imaging. This is the topic of part IV of Volume 1. Reconstruction of 3-D geometry from images requires careful geometrical calibration (Chapter 17). Chapter 18 surveys the principles of 3-D imaging and shows that the wide variety of available techniques can be categorized into a scheme with only a few basic principles. Chapter 19 focuses on the physical principles that ultimately limit the accuracy of 3-D imaging and explores some new techniques such as optical coherence tomography, while Chapter 20 discusses high-performance surface measuring by combining photogrammetric and sequential-light techniques. The remainder of part IV deals with 3-D light microscopy (Chapter 21) and magnetic resonance (MR) imaging in medical and biological research (Chapters 22 and 23).

- 30. Part I Illumination and Image Formation

- 32. 2 Radiation Horst Haußecker, Interdisziplinäres Zentrum für Wissenschaftliches Rechnen (IWR), Universität Heidelberg, Germany. Contents: 2.1 Introduction; 2.2 Fundamentals of electromagnetic radiation (2.2.1 Electromagnetic waves; 2.2.2 Dispersion and attenuation; 2.2.3 Polarization of radiation; 2.2.4 Coherence of radiation); 2.3 Radiometric quantities (2.3.1 Solid angle; 2.3.2 Conventions and overview; 2.3.3 Definition of radiometric quantities; 2.3.4 Relationship of radiometric quantities; 2.3.5 Spectral distribution of radiation); 2.4 Fundamental concepts of photometry (2.4.1 Spectral response of the human eye; 2.4.2 Definition of photometric quantities; 2.4.3 Luminous efficacy); 2.5 Thermal emission of radiation (2.5.1 Blackbody radiation; 2.5.2 Properties of Planck’s distribution; 2.5.3 Approximations of Planck’s distribution; 2.5.4 Luminous efficacy of blackbody radiation); 2.6 Acoustic waves; 2.7 References.

- 33. 2.1 Introduction Visual perception of scenes depends on appropriate illumination to visualize objects. The human visual system is limited to a very narrow portion of the spectrum of electromagnetic radiation, called light. In some cases natural sources, such as solar radiation, moonlight, lightning flashes, or bioluminescence, provide sufficient ambient light to navigate our environment. Because humankind was restricted mainly to daylight, one of its first inventions was an artificial light source, fire (which served not only as a food preparation method). Computer vision is not dependent upon visual radiation, fire, or glowing objects to illuminate scenes. As soon as imaging detector systems became available other types of radiation were used to probe scenes and objects of interest. Recent developments in imaging sensors cover almost the whole electromagnetic spectrum from x-rays to radio waves (Chapters 7–11). In standard computer vision applications illumination is frequently taken as given and optimized to illuminate objects evenly with high contrast. Such setups are appropriate for object identification and geometric measurements. Radiation, however, can also be used to quantitatively visualize physical properties of objects by analyzing their interaction with radiation (Chapter 3). Physical quantities such as penetration depth or surface reflectivity are essential to probe the internal structures of objects, scene geometry, and surface-related properties. The properties of physical objects therefore can be encoded not only in the geometrical distribution of emitted radiation but also in the portion of radiation that is emitted, scattered, absorbed, or reflected, and finally reaches the imaging system. Most of these processes are sensitive to certain wavelengths and additional information might be hidden in the spectral distribution of radiation.
Using different types of radiation allows taking images from different depths or different object properties. As an example, infrared radiation with wavelengths between 3 and 5 µm is absorbed by human skin to a depth of < 1 mm, while x-rays penetrate an entire body without major attenuation. Therefore, totally different properties of the human body (such as skin temperature as well as skeletal structures) can be revealed for medical diagnosis. This chapter provides the fundamentals for a quantitative description of radiation emitted from sources. The interaction of radiation with objects and matter is the subject of Chapter 3. Radiometry, the measurement of radiation properties by imaging systems, will be detailed in Chapter 5. Although the theory will be introduced in a general way for all types of radiation, a large portion of this chapter is dedicated to the two spectral ranges of visible and infrared (IR) radiation. While visible radiation plays the most important role in computer vision, the latter has been gaining in importance due to recent performance improvements in infrared imaging technology (see Chapter 10).

- 34. 2.2 Fundamentals of electromagnetic radiation 2.2.1 Electromagnetic waves Electromagnetic radiation consists of electromagnetic waves carrying energy and propagating through space. Electrical and magnetic fields are alternating with a temporal frequency ν and a spatial wavelength λ. The metric units of ν and λ are cycles per second ($\mathrm{s}^{-1}$) and meter (m), respectively. The unit 1 $\mathrm{s}^{-1}$ is also called one hertz (1 Hz). Wavelength and frequency of waves are related by the speed of light c: $c = \nu\lambda$ (2.1). The speed of light depends on the medium through which the electromagnetic wave is propagating. In vacuum, the speed of light has the value $2.9979 \times 10^{8}\,\mathrm{m\,s^{-1}}$, which is one of the fundamental physical constants and constitutes the maximum possible speed of any object. The speed of light decreases as the radiation penetrates matter, with slowdown dependent upon the electromagnetic properties of the medium (see Section 3.3.2). Photon energy. In addition to electromagnetic theory, radiation can be treated as a flow of particles, discrete packets of energy called photons. One photon travels at the speed of light c and carries the energy $e_p = h\nu = \frac{hc}{\lambda}$ (2.2), where $h = 6.626 \times 10^{-34}\,\mathrm{J\,s}$ is Planck’s constant. Therefore the energy content of radiation is quantized and can only be a multiple of hν for a certain frequency ν. While the energy per photon is given by Eq. (2.2), the total energy of radiation is given by the number of photons. It was this quantization of radiation that gave birth to the theory of quantum mechanics at the beginning of the twentieth century. The energy of a single photon is usually given in electron volts (1 eV = $1.602 \times 10^{-19}$ J). One eV constitutes the energy of an electron being accelerated in an electrical field with a potential difference of one volt.
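As a worked illustration of Eqs. (2.1) and (2.2), the following minimal Python sketch converts between wavelength, frequency, and photon energy in electron volts. It uses the rounded constants quoted in the text and assumes propagation in vacuum; the function names are hypothetical and chosen only for this example.

```python
# Rounded constants as quoted in the text.
PLANCK_H = 6.626e-34        # Planck's constant h in J s
SPEED_OF_LIGHT = 2.9979e8   # speed of light in vacuum c in m/s
JOULE_PER_EV = 1.602e-19    # one electron volt expressed in joules


def frequency_from_wavelength(wavelength_m):
    """Eq. (2.1) solved for the frequency: nu = c / lambda."""
    return SPEED_OF_LIGHT / wavelength_m


def photon_energy_ev(wavelength_m):
    """Eq. (2.2): e_p = h * nu = h * c / lambda, converted from J to eV."""
    energy_joule = PLANCK_H * frequency_from_wavelength(wavelength_m)
    return energy_joule / JOULE_PER_EV


def wavelength_from_energy_ev(energy_ev):
    """Eq. (2.2) solved for the wavelength: lambda = h * c / e_p."""
    return PLANCK_H * SPEED_OF_LIGHT / (energy_ev * JOULE_PER_EV)


if __name__ == "__main__":
    # Photon energies at the two ends of the visible range (380 and 780 nm).
    for wavelength_nm in (380.0, 780.0):
        energy = photon_energy_ev(wavelength_nm * 1e-9)
        print(f"{wavelength_nm:.0f} nm -> {energy:.2f} eV")
    # Wavelength of a photon that carries 0.18 eV, in micrometers.
    print(f"0.18 eV -> {wavelength_from_energy_ev(0.18) * 1e6:.2f} um")
```

With these constants, visible photons carry roughly 1.6 to 3.3 eV, and a photon of 0.18 eV corresponds to a wavelength of about 6.9 µm.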
Although photons do not carry electrical charge this unit is useful in radiometry, as electromagnetic radiation is usually detected by interaction of radiation with electrical charges in sensors (Chapter 7). In solid-state sensors, for example, the energy of absorbed photons is used to lift electrons from the valence band into the conduction band of a semiconductor. The bandgap energy $E_g$ defines the minimum photon energy required for this process. As a rule of thumb the detector material is sensitive to radiation with energies $E_v > E_g$. As an example, indium antimonide (InSb) is a doped semiconductor with a bandgap of only 0.18 eV. It is sensitive to wavelengths below 6.9 µm (which can be derived from Eq. (2.2)).

- 35. [Figure 2.1: Spectrum of electromagnetic radiation, plotted against wavelength λ [m], frequency ν [Hz], wavenumber [$\mathrm{m}^{-1}$], and energy [eV]; the labeled bands include γ-rays, x-rays, UV (UV-C, UV-B, UV-A), visible, IR (IR-A, IR-B, IR-C), microwaves, and radio waves. (By Sven Mann, University of Heidelberg.)] Silicon (Si) has a bandgap of 1.1 eV and requires wavelengths below 1.1 µm to be detected. This shows why InSb can be used as detector material for infrared cameras in the 3–5 µm wavelength region, while silicon sensors are used for visible radiation. It also shows, however, that the sensitivity of standard silicon sensors extends beyond the visible range up to approximately 1 µm, which is often neglected in applications. Electromagnetic spectrum. Monochromatic radiation consists of only one frequency and wavelength. The distribution of radiation over the range of possible wavelengths is called spectrum or spectral distribution. Figure 2.1 shows the spectrum of electromagnetic radiation together with the standardized terminology (footnote 1) separating different parts.

- 36. Electromagnetic radiation covers the whole range from very high energy cosmic rays with wavelengths in the order of $10^{-16}$ m (ν = $10^{24}$ Hz) to waves at sound frequencies with wavelengths above $10^{6}$ m (ν = $10^{2}$ Hz). Only a very narrow band of radiation between 380 and 780 nm is visible to the human eye. Each portion of the electromagnetic spectrum obeys the same principal physical laws. Radiation of different wavelengths, however, appears to have different properties in terms of interaction with matter and detectability that can be used for wavelength selective detectors. For the last 100 years detectors have been developed for radiation of almost any region of the electromagnetic spectrum. Recent developments in detector technology incorporate point sensors into integrated detector arrays, which allows setting up imaging radiometers instead of point measuring devices. Quantitative measurements of the spatial distribution of radiometric properties are now available for remote sensing at almost any wavelength. 2.2.2 Dispersion and attenuation A mixture of radiation consisting of different wavelengths is subject to different speeds of light within the medium through which it is propagating. This fact is the basic reason for optical phenomena such as refraction and dispersion. While refraction changes the propagation direction of a beam of radiation passing the interface between two media with different optical properties, dispersion separates radiation of different wavelengths (Section 3.3.2). 2.2.3 Polarization of radiation In electromagnetic theory, radiation is described as oscillating electric and magnetic fields, denoted by the electric field strength E and the magnetic field strength B, respectively. Both vector fields are given by the solution of a set of differential equations, referred to as Maxwell’s equations.
In free space, that is, without electric sources and currents, a special solution is a harmonic planar wave, propagating linearly in space and time. As Maxwell's equations are linear equations, the superposition of two solutions also yields a solution. This fact is commonly referred to as the superposition principle.

The superposition principle allows us to explain the phenomenon of polarization, another important property of electromagnetic radiation. In general, the 3-D orientation of vector $\mathbf{E}$ changes over time and mixtures of electromagnetic waves show randomly distributed orientation directions of $\mathbf{E}$. If, however, the electromagnetic field vector $\mathbf{E}$ is confined to a plane, the radiation is called linearly polarized (Fig. 2.2a).

¹ International Commission on Illumination (Commission Internationale de l'Eclairage, CIE); http://www.cie.co.at/cie

- 37. Figure 2.2: Illustration of a linear and b circular polarization of electromagnetic radiation. (By C. Garbe, University of Heidelberg.)

If two linearly polarized electromagnetic waves are traveling in the same direction, the resulting electric field vector is given by $\mathbf{E} = \mathbf{E}_1 + \mathbf{E}_2$. Depending on the phase shift $\Phi$ in the oscillations of $\mathbf{E}_1$ and $\mathbf{E}_2$, the net electric field vector $\mathbf{E}$ remains linearly polarized ($\Phi = 0$), or rotates around the propagation direction of the wave. For a phase shift of $\Phi = 90^\circ$ between two perpendicular components of equal amplitude, the wave is called circularly polarized (Fig. 2.2b). The general case consists of elliptical polarization, that is, mixtures between both cases.

Due to polarization, radiation exhibits different properties in different directions, such as directional reflectivity or polarization-dependent transmissivity.

2.2.4 Coherence of radiation

Mixtures of electromagnetic waves that are emitted from conventional light sources do not show any spatial or temporal relation. The phase shifts between the electric field vectors $\mathbf{E}$ and the corresponding orientations are randomly distributed. Such radiation is called incoherent.

Special types of light sources, mainly those operating by stimulated emission of radiation (e.g., lasers), emit radiation with a fixed systematic relationship between the phases of the electromagnetic field vectors, a property called coherence.

Such radiation can be subject to constructive and destructive interference if it is superposed. As the electric field vectors can add up to high amplitudes, the local energy impact of coherent radiation is much more severe and can cause damage to delicate body tissue.
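The difference between coherent and incoherent superposition can be illustrated numerically. The following is a minimal sketch, not part of the original text: it assumes two scalar waves of equal amplitude and frequency, represents each by a complex phasor, and takes intensity as the squared magnitude of the summed field in arbitrary units. The function name `superposed_intensity` is hypothetical.

```python
import cmath
import math
import random


def superposed_intensity(amplitude, phase_shift):
    """Relative intensity of two superposed waves of equal amplitude.

    Assumption: both waves have the same frequency and polarization, so
    each can be written as a complex phasor and the fields simply add.
    """
    field = amplitude + amplitude * cmath.exp(1j * phase_shift)
    return abs(field) ** 2


# Coherent waves with a fixed phase relation.
constructive = superposed_intensity(1.0, 0.0)      # fields add in phase
destructive = superposed_intensity(1.0, math.pi)   # fields cancel

# Incoherent radiation: the phase shift is random, so only the average counts.
rng = random.Random(0)
samples = [
    superposed_intensity(1.0, rng.uniform(0.0, 2.0 * math.pi))
    for _ in range(100_000)
]
incoherent_mean = sum(samples) / len(samples)

print(f"constructive: {constructive:.3f}")
print(f"destructive:  {destructive:.3e}")
print(f"incoherent (mean over random phases): {incoherent_mean:.3f}")
```

Under these assumptions the in-phase case yields four times the intensity of a single wave, while the average over random phases approaches twice the single-wave intensity, that is, the plain sum of the two intensities. This is one way to see why coherent radiation can reach much higher local amplitudes.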

- 38. Figure 2.3: Definition of plane angle.

2.3 Radiometric quantities

2.3.1 Solid angle

In order to quantify the geometric spreading of radiation leaving a source, it is useful to recall the definition of solid angle. It extends the concept of plane angle into 3-D space. A plane angle $\theta$ is defined as the ratio of the arc length $s$ on a circle to the radius $r$ centered at the point of definition:

$$\theta = \frac{s}{r} \tag{2.3}$$

The arc length $s$ can be considered as the projection of an arbitrary line in the plane onto the circle (Fig. 2.3). Plane angles are measured in rad (radians). A plane angle $\theta$ quantifies the angular subtense of a line segment in the plane viewed from the point of definition. A circle has a circumference of $2\pi r$ and, therefore, subtends a plane angle of $2\pi$ rad.

A solid angle $\Omega$ is similarly defined as the ratio of an area $A$ on the surface of a sphere to the squared radius, as shown in Fig. 2.4:

$$\Omega = \frac{A}{r^2} \tag{2.4}$$

The area segment $A$ can be considered as the projection of an arbitrarily shaped area in 3-D space onto the surface of a sphere. Solid angles are measured in sr (steradian). They quantify the areal subtense of a 2-D surface area in 3-D space viewed from the point of definition. A sphere has a surface area of $4\pi r^2$, which corresponds to a solid angle of $4\pi$ sr. Given a surface area $A$ that is tilted under some angle $\theta$ between the surface normal and the line of sight, the solid angle is reduced by a factor of $\cos\theta$:

$$\Omega = \frac{A}{r^2} \cos\theta \tag{2.5}$$
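Equations (2.3) to (2.5) translate directly into a few lines of code. The sketch below is illustrative only; the function names and the detector example are assumptions, and Eq. (2.5) is applied to a flat patch under the additional assumption that the patch is small compared to $r^2$, so that it can be treated as lying on the sphere.

```python
import math


def plane_angle(arc_length, radius):
    """Plane angle in rad, Eq. (2.3): arc length over radius."""
    return arc_length / radius


def solid_angle(area, radius, tilt=0.0):
    """Solid angle in sr, Eq. (2.4) and Eq. (2.5).

    `tilt` is the angle (in rad) between the surface normal and the line
    of sight. Assumption: for a flat, tilted patch the result is only a
    good approximation when the area is small compared to radius**2.
    """
    return area / radius**2 * math.cos(tilt)


# Sanity checks against the limiting cases given in the text.
full_circle = plane_angle(2.0 * math.pi * 3.0, 3.0)      # 2*pi rad
full_sphere = solid_angle(4.0 * math.pi * 2.0**2, 2.0)   # 4*pi sr

# Hypothetical example: a 1 cm^2 detector seen from 1 m, tilted by 60 degrees.
detector = solid_angle(1e-4, 1.0, math.radians(60.0))

print(f"full circle: {full_circle:.4f} rad")
print(f"full sphere: {full_sphere:.4f} sr")
print(f"tilted detector: {detector:.2e} sr")
```

The tilted detector subtends half the solid angle it would subtend when facing the viewer, since $\cos 60^\circ = 0.5$.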

- 39. Figure 2.4: Definition of solid angle. (By C. Garbe, University of Heidelberg.)

Table 2.1: Definitions of radiometric quantities (corresponding photometric quantities are defined in Table 2.2)

| Quantity | Symbol | Units | Definition |
|---|---|---|---|
| Radiant energy | $Q$ | W s | Total energy emitted by a source or received by a detector |
| Radiant flux | $\Phi$ | W | Total power emitted by a source or received by a detector |
| Radiant exitance | $M$ | W m⁻² | Power emitted per unit surface area |
| Irradiance | $E$ | W m⁻² | Power received at unit surface element |
| Radiant intensity | $I$ | W sr⁻¹ | Power leaving a point on a surface into unit solid angle |
| Radiance | $L$ | W m⁻² sr⁻¹ | Power leaving unit projected surface area into unit solid angle |

From the definition of angles as ratios of lengths or areas it follows that they have no physical unit. However, it is advisable always to use the artificial units rad and sr when referring to quantities related to angles to avoid confusion. Radiometric and photometric quantities also have to be defined carefully as their meaning cannot be inferred from physical units (Tables 2.1 and 2.2).

2.3.2 Conventions and overview

Measurements of radiometric and photometric quantities very often are subject to confusion related to terminology and units. Due to diverse historical developments and often inaccurate usage of names, radiometry is one of the least understood subjects in the field of optics. However, it is not very difficult if some care is taken with regard to definitions of quantities related to angles and areas.

- 40. Table 2.2: Definitions of photometric quantities (corresponding radiometric quantities are defined in Table 2.1)

| Quantity | Symbol | Units | Definition |
|---|---|---|---|
| Luminous energy | $Q_\nu$ | lm s | Total luminous energy emitted by a source or received by a detector |
| Luminous flux | $\Phi_\nu$ | lm (lumen) | Total luminous power emitted by a source or received by a detector |
| Luminous exitance | $M_\nu$ | lm m⁻² | Luminous power emitted per unit surface area |
| Illuminance | $E_\nu$ | lm m⁻² = lx (lux) | Luminous power received at unit surface element |
| Luminous intensity | $I_\nu$ | lm sr⁻¹ = cd (candela) | Luminous power leaving a point on a surface into unit solid angle |
| Luminance | $L_\nu$ | lm m⁻² sr⁻¹ = cd m⁻² | Luminous power leaving unit projected surface area into unit solid angle |

Despite confusion in the literature, there seems to be a trend towards standardization of units. In pursuit of standardization we will use only SI units, in agreement with the International Commission on Illumination (CIE). The CIE is the international authority defining terminology, standards, and basic concepts in radiometry and photometry. The radiometric and photometric terms and definitions are in compliance with the American National Standards Institute (ANSI) report RP-16, published in 1986. Further information on standards can be found at the web sites of CIE (http://www.cie.co.at/cie/) and ANSI (http://www.ansi.org), respectively.

In this section, the fundamental quantities of radiometry will be defined. The transition to photometric quantities will be introduced by a generic equation, Eq. (2.31), that can be used to convert each of these radiometric quantities to its corresponding photometric counterpart.
We will start from the concept of radiative flux and derive the most important quantities necessary to define the geometric distribution of radiation emitted from or irradiated on surfaces. The six fundamental concepts relating the spatial distribution of energy in electromagnetic radiation are summarized in Table 2.1. The term "radiant" is only added to the names of those quantities that could be confused with the corresponding photometric quantity (see Table 2.2).

- 41. 2.3.3 Definition of radiometric quantities

Radiant energy and radiant flux. Radiation carries energy that can be absorbed in matter, heating up the absorber or interacting with electrical charges. Radiant energy $Q$ is measured in units of Joule (J). It quantifies the total energy emitted by a source or received by a detector.

Radiant flux $\Phi$ is defined as radiant energy per unit time interval

$$\Phi = \frac{dQ}{dt} \tag{2.6}$$

passing through or emitted from a surface. Radiant flux has the unit Watt (W) and is also frequently called radiant power, which corresponds to its physical unit. Quantities describing the spatial and geometric distributions of radiative flux are introduced in the following sections.

The units for radiative energy, radiative flux, and all derived quantities listed in Table 2.1 are based on Joule as the fundamental unit. Instead of these energy-derived quantities, an analogous set of photon-derived quantities can be defined based on the number of photons. Photon-derived quantities are denoted by the subscript $p$, while the energy-based quantities are written with a subscript $e$ if necessary to distinguish between them. Without a subscript, all radiometric quantities are considered energy-derived. Given the radiant energy, the number of photons can be computed from Eq. (2.2)

$$N_p = \frac{Q_e}{e_p} = \frac{\lambda}{hc}\, Q_e \tag{2.7}$$

where $e_p = hc/\lambda$ is the energy of a single photon. With photon-based quantities the number of photons replaces the radiative energy. The set of photon-related quantities is useful if radiation is measured by detectors that respond linearly to the number of absorbed photons (photon detectors) rather than to thermal energy stored in the detector material (thermal detectors).
Photon flux $\Phi_p$ is defined as the number of photons per unit time interval

$$\Phi_p = \frac{dN_p}{dt} = \frac{\lambda}{hc}\,\frac{dQ_e}{dt} = \frac{\lambda}{hc}\,\Phi_e \tag{2.8}$$

Similarly, all other photon-related quantities can be computed from the corresponding energy-based quantities by dividing them by the energy of a single photon.
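The conversions in Eq. (2.7) and Eq. (2.8) can be sketched as follows. This is an illustrative example rather than code from the original text: the function names and the two sample wavelengths are assumptions, the radiation is taken to be monochromatic, and the constants are the exact SI values of the Planck constant and the speed of light.

```python
PLANCK_H = 6.62607015e-34   # Planck constant in J s (exact SI value)
LIGHT_C = 299_792_458.0     # speed of light in vacuum in m/s (exact SI value)


def photon_energy(wavelength_m):
    """Energy of a single photon in J: e_p = h c / lambda."""
    return PLANCK_H * LIGHT_C / wavelength_m


def photon_number(radiant_energy_j, wavelength_m):
    """Number of photons for a given radiant energy, Eq. (2.7)."""
    return radiant_energy_j / photon_energy(wavelength_m)


def photon_flux(radiant_flux_w, wavelength_m):
    """Photons per second for a given radiant flux, Eq. (2.8)."""
    return radiant_flux_w / photon_energy(wavelength_m)


# Hypothetical example: 1 mW of monochromatic radiation at two wavelengths.
flux_visible = photon_flux(1e-3, 633e-9)    # red light, 633 nm
flux_infrared = photon_flux(1e-3, 10e-6)    # thermal infrared, 10 um

print(f"633 nm: {flux_visible:.3e} photons/s")
print(f"10 um:  {flux_infrared:.3e} photons/s")
print(f"ratio:  {flux_infrared / flux_visible:.2f}")
```

For the same radiant flux, the photon flux scales linearly with wavelength, so the infrared beam in this example carries roughly 16 times as many photons per second as the visible one.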

- 42. Figure 2.5: Illustration of the radiometric quantities: a radiant exitance and b irradiance. (By C. Garbe, University of Heidelberg.)

Because the conversion from energy-derived to photon-derived quantities, Eq. (2.7), depends on the wavelength of radiation, spectral distributions of radiometric quantities will have different shapes for both sets of units (Fig. 2.10).

Radiant exitance and irradiance. Radiant exitance $M$ defines the radiative flux emitted per unit surface area

$$M = \frac{d\Phi}{dS} \tag{2.9}$$

of a specified surface. The flux leaving the surface is radiated into the whole hemisphere enclosing the surface element $dS$ and has to be integrated over all angles to obtain $M$ (Fig. 2.5a). The flux is, however, not radiated uniformly in angle. Radiant exitance is a function of position on the emitting surface, $M = M(\mathbf{x})$. Specification of the position on the surface can be omitted if the emitted flux $\Phi$ is equally distributed over an extended area $S$. In this case $M = \Phi/S$.

Irradiance $E$ similarly defines the radiative flux incident on a certain point of a surface per unit surface element

$$E = \frac{d\Phi}{dS} \tag{2.10}$$

Again, incident radiation is integrated over all angles of the enclosing hemisphere (Fig. 2.5b). Radiant exitance characterizes an actively radiating source while irradiance characterizes a passive receiver surface. Both are measured in W m⁻² and cannot be distinguished by their units if not further specified.

- 43. Figure 2.6: Illustration of radiometric quantities: a radiant intensity and b radiance. (By C. Garbe, University of Heidelberg.)

Radiant intensity. Radiant intensity $I$ describes the angular distribution of radiation emerging from a point in space. It is defined as radiant flux per unit solid angle

$$I = \frac{d\Phi}{d\Omega} \tag{2.11}$$

and measured in units of W sr⁻¹. Radiant intensity is a function of the direction of the beam of radiation, defined by the spherical coordinates $\theta$ and $\phi$ (Fig. 2.6). Intensity is usually used to specify radiation emitted from point sources, such as stars or sources that are much smaller than their distance from the detector, that is, $dx\,dy \ll r^2$. In order to use it for extended sources, those sources have to be made up of an infinite number of infinitesimal areas. The radiant intensity in a given direction is the sum of the radiant flux contained in all rays emitted in that direction under a given solid angle by the entire source (see Eq. (2.22)).

The term intensity is frequently confused with irradiance or illuminance. It is, however, a precisely defined quantity in radiometric terminology and should only be used in this context to avoid confusion.

Radiance. Radiance $L$ defines the amount of radiant flux per unit solid angle per unit projected area of the emitting source

$$L = \frac{d^2\Phi}{d\Omega\, dS_\perp} = \frac{d^2\Phi}{d\Omega\, dS \cos\theta} \tag{2.12}$$

The differential $dS_\perp = dS \cos\theta$ defines a surface element perpendicular to the direction of the radiated beam (Fig. 2.6b). The unit of radiance is W m⁻² sr⁻¹. Radiance combines the concepts of exitance and intensity, relating intensity in a certain direction to the area of the emitting surface. Conversely, it can be thought of as exitance of the projected area per unit solid angle.

Radiance is used to characterize an extended source that has an area comparable to the squared viewing distance. As radiance is a