CUDA and Caffe for deep learning

- 1. CUDA and Caffe for deep learning, by Amgad Muhammad Mohamed Ghoneim

- 2. Outline • GPU Computing • What is CUDA? • Why use CUDA? • When to use CUDA? • CUDA - Machine Specs • CUDA - Matrix Multiplication • CUDA - Closest Pair in 2D • Convolutional Neural Networks • Auto Encoder

- 3. GPU Computing • Moore’s law has slowed down. • Performance gains now come from parallelism rather than from faster individual processing units. • A CPU has a small number of processing units, each with very high processing power. • A GPU has a large number of processing units, each with moderate processing power.

- 4. What is CUDA? • Compute Unified Device Architecture. • Introduced by NVIDIA in 2006. • Refers to two different concepts: 1. CUDA Architecture: the massively parallel architecture of modern GPUs, with hundreds of cores. 2. CUDA Programming Model: the model used to program these GPUs.
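To make the programming model concrete, here is a minimal sketch of a vector addition: the host allocates device memory, copies the inputs over, launches a kernel in which each thread handles one element, and copies the result back. The names `addKernel` and `vectorAdd` are hypothetical, and error checking is omitted for brevity.

```cuda
#include <cuda_runtime.h>

// Kernel: the function every GPU thread runs. Each thread handles one element.
__global__ void addKernel(const float* a, const float* b, float* c, int n)
{
    int i = blockIdx.x * blockDim.x + threadIdx.x;  // this thread's global index
    if (i < n)                                      // the last block may be partly idle
        c[i] = a[i] + b[i];
}

// Host side: allocate device memory, copy inputs, launch, copy the result back.
void vectorAdd(const float* a, const float* b, float* c, int n)
{
    size_t bytes = n * sizeof(float);
    float *dA, *dB, *dC;
    cudaMalloc(&dA, bytes);
    cudaMalloc(&dB, bytes);
    cudaMalloc(&dC, bytes);
    cudaMemcpy(dA, a, bytes, cudaMemcpyHostToDevice);
    cudaMemcpy(dB, b, bytes, cudaMemcpyHostToDevice);

    int threadsPerBlock = 256;
    int blocks = (n + threadsPerBlock - 1) / threadsPerBlock;  // round up
    addKernel<<<blocks, threadsPerBlock>>>(dA, dB, dC, n);

    cudaMemcpy(c, dC, bytes, cudaMemcpyDeviceToHost);  // also waits for the kernel
    cudaFree(dA);
    cudaFree(dB);
    cudaFree(dC);
}
```

The `<<<blocks, threadsPerBlock>>>` launch syntax is where the programming model shows up: the same scalar-looking function is run by `blocks * threadsPerBlock` threads at once.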

- 6. Why use CUDA? • It efficiently processes thousands of small, repeated tasks in parallel. • It provides a way for these tasks to communicate and cooperate efficiently. • It offers a scalable and intuitive mechanism to express parallelism.

- 7. When to use CUDA? • Lots of computation and lots of data. • Parallel algorithms. • Neural networks. • Physical simulations. • Distributed computing. • Accelerated encryption, decryption, and compression.

- 8. CUDA - Machine Specs. Machine specs for this experiment:
  - Processor: Dual-core AMD Opteron(™) processor 2216, 2.4 GHz (2 processors)
  - RAM: 32.0 GB
  - OS: 64-bit Windows 7
  - Graphics Card: Quadro FX 4600
  - CUDA Driver: 5.5
  - CUDA Compute Capability: 1.0
  - Number of Cores: 96
  - Core Clock: 500 MHz
  - Memory: 768 MB
  - Memory Clock: 1400 MHz
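Most of these values can be read back from the CUDA runtime instead of being copied from a spec sheet. The sketch below, with a hypothetical `printDeviceSpecs` helper, uses `cudaGetDeviceCount` and `cudaGetDeviceProperties`; note that the runtime reports multiprocessors, not cores, and the number of cores per multiprocessor depends on the architecture.

```cuda
#include <cstdio>
#include <cuda_runtime.h>

// Prints the properties of every CUDA device and returns how many were found.
int printDeviceSpecs()
{
    int count = 0;
    if (cudaGetDeviceCount(&count) != cudaSuccess) return 0;  // no driver or no GPU
    for (int dev = 0; dev < count; dev++) {
        cudaDeviceProp prop;
        cudaGetDeviceProperties(&prop, dev);
        printf("Device %d: %s\n", dev, prop.name);
        printf("  Compute capability: %d.%d\n", prop.major, prop.minor);
        // Cores per multiprocessor depend on the architecture (8 on compute capability 1.x).
        printf("  Multiprocessors:    %d\n", prop.multiProcessorCount);
        printf("  Global memory:      %zu MB\n", prop.totalGlobalMem / (1024 * 1024));
        printf("  Shared mem / block: %zu KB\n", prop.sharedMemPerBlock / 1024);
        printf("  Max threads/block:  %d\n", prop.maxThreadsPerBlock);
    }
    return count;
}
```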

- 9. CUDA - Matrix Multiplication. Comparing different implementations (all times in milliseconds). [Chart: "Matrix Multiplication"; x-axis: matrix side, 100 to 1000; y-axis: time in ms; series: CPU, GPU]
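The slide does not show the GPU kernel behind this comparison. As an illustration only, a naive version maps one thread to each element of the output and reads a full row of A and a full column of B from global memory; `matMulNaive` and `matMulGPU` are hypothetical names, and square row-major matrices are assumed.

```cuda
#include <cuda_runtime.h>

// Naive kernel: one thread per element of C = A * B (square n x n, row-major).
// Every thread reads a full row of A and a full column of B from global memory.
__global__ void matMulNaive(const float* A, const float* B, float* C, int n)
{
    int row = blockIdx.y * blockDim.y + threadIdx.y;
    int col = blockIdx.x * blockDim.x + threadIdx.x;
    if (row < n && col < n) {
        float sum = 0.0f;
        for (int k = 0; k < n; k++)
            sum += A[row * n + k] * B[k * n + col];
        C[row * n + col] = sum;
    }
}

// Host wrapper: copies A and B to the GPU, launches a 2D grid, copies C back.
void matMulGPU(const float* A, const float* B, float* C, int n)
{
    size_t bytes = (size_t)n * n * sizeof(float);
    float *dA, *dB, *dC;
    cudaMalloc(&dA, bytes);
    cudaMalloc(&dB, bytes);
    cudaMalloc(&dC, bytes);
    cudaMemcpy(dA, A, bytes, cudaMemcpyHostToDevice);
    cudaMemcpy(dB, B, bytes, cudaMemcpyHostToDevice);
    dim3 block(16, 16);  // 256 threads per block
    dim3 grid((n + block.x - 1) / block.x, (n + block.y - 1) / block.y);
    matMulNaive<<<grid, block>>>(dA, dB, dC, n);
    cudaMemcpy(C, dC, bytes, cudaMemcpyDeviceToHost);
    cudaFree(dA);
    cudaFree(dB);
    cudaFree(dC);
}
```

Each of the n^2 threads does n multiply-adds, so the work is the same O(n^3) as on the CPU; the speedup comes purely from running many of those dot products at once.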

- 10. CUDA - Closest Pair in 2D. This is a well-known problem: find the two points that are closest to each other. There are many ways to solve it: 1. Brute force, O(n^2). 2. Divide and conquer, O(n log n). For completeness, there is another implementation using k-d trees, with complexity similar to divide and conquer.
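A plain brute-force GPU version, presumably close to the "BF GPU" series in the next chart, gives each thread one point and lets it scan every other point directly from global memory. This is a sketch under that assumption; `nearestSquaredDistance` and `closestPairSquaredGPU` are hypothetical names, and the final minimum is taken on the host.

```cuda
#include <cfloat>
#include <vector>
#include <cuda_runtime.h>

// Brute force on the GPU, O(n^2) work in total: thread i scans every other point
// directly from global memory and keeps its smallest squared distance.
__global__ void nearestSquaredDistance(const float2* points, float* vals, int count)
{
    int i = blockIdx.x * blockDim.x + threadIdx.x;
    if (i >= count) return;
    float2 p = points[i];
    float best = FLT_MAX;
    for (int j = 0; j < count; j++) {
        if (j == i) continue;  // skip the point itself
        float dx = p.x - points[j].x, dy = p.y - points[j].y;
        float d = dx * dx + dy * dy;
        if (d < best) best = d;
    }
    vals[i] = best;
}

// Host wrapper: the closest-pair distance is the minimum over all per-point values.
// Returns the squared distance (FLT_MAX if count < 2).
float closestPairSquaredGPU(const float2* points, int count)
{
    if (count < 2) return FLT_MAX;
    float2* dPoints;
    float* dVals;
    cudaMalloc(&dPoints, count * sizeof(float2));
    cudaMalloc(&dVals, count * sizeof(float));
    cudaMemcpy(dPoints, points, count * sizeof(float2), cudaMemcpyHostToDevice);
    int threads = 128;
    nearestSquaredDistance<<<(count + threads - 1) / threads, threads>>>(dPoints, dVals, count);
    std::vector<float> vals(count);
    cudaMemcpy(vals.data(), dVals, count * sizeof(float), cudaMemcpyDeviceToHost);
    cudaFree(dPoints);
    cudaFree(dVals);
    float best = FLT_MAX;
    for (float v : vals)
        if (v < best) best = v;
    return best;
}
```

Comparing squared distances avoids a `sqrtf` per pair; one square root at the end is enough if the actual distance is needed.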

- 11. CUDA - Closest Pair in 2D (cont.) Comparing different implementations (all times in milliseconds). [Chart: "Closest Pair in 2D"; x-axis: number of points, 100 to 100000; y-axis: time in ms; series: Brute Force CPU, BF GPU, BF GPU Optimized]

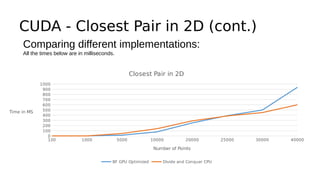

- 12. CUDA - Closest Pair in 2D (cont.) Comparing different implementations (all times in milliseconds). [Chart: "Closest Pair in 2D"; x-axis: number of points, 100 to 40000; y-axis: time in ms; series: BF GPU Optimized, Divide and Conquer CPU]

- 13. CUDA - Closest Pair in 2D (cont.) To explain how the optimized GPU version works, we first need to review the GPU thread hierarchy.
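The thread hierarchy is a grid of blocks, each block a group of threads; a thread finds its place through the built-in `blockIdx`, `blockDim`, and `threadIdx` variables. The sketch below (hypothetical names `recordIndices` and `describeLaunch`) simply has each thread record which block it is in and its position inside that block.

```cuda
#include <cuda_runtime.h>

// Each thread records where it sits in the hierarchy: grid -> blocks -> threads.
// globalIdx = blockIdx.x * blockDim.x + threadIdx.x is the usual 1D mapping.
__global__ void recordIndices(int* blockOf, int* threadOf, int n)
{
    int globalIdx = blockIdx.x * blockDim.x + threadIdx.x;
    if (globalIdx < n) {
        blockOf[globalIdx] = blockIdx.x;    // which block this thread belongs to
        threadOf[globalIdx] = threadIdx.x;  // its position inside that block
    }
}

// Launches ceil(n / threadsPerBlock) blocks and copies the recorded indices back.
void describeLaunch(int n, int threadsPerBlock, int* blockOf, int* threadOf)
{
    int *dBlock, *dThread;
    cudaMalloc(&dBlock, n * sizeof(int));
    cudaMalloc(&dThread, n * sizeof(int));
    int blocks = (n + threadsPerBlock - 1) / threadsPerBlock;
    recordIndices<<<blocks, threadsPerBlock>>>(dBlock, dThread, n);
    cudaMemcpy(blockOf, dBlock, n * sizeof(int), cudaMemcpyDeviceToHost);
    cudaMemcpy(threadOf, dThread, n * sizeof(int), cudaMemcpyDeviceToHost);
    cudaFree(dBlock);
    cudaFree(dThread);
}
```

With `n = 10` and 4 threads per block, three blocks are launched, element 5 is handled by thread 1 of block 1, and the last block has two idle threads, which is why kernels guard with `globalIdx < n`.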

- 14. CUDA - Closest Pair in 2D (cont.) To explain how the optimized GPU version works, we also need to review the GPU memory hierarchy.
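The three memory levels that matter for the optimized kernel are per-thread registers, per-block shared memory, and device-wide global memory. A block-level sum reduction is a compact way to see all three together, along with `__syncthreads()` coordinating a block. This is an illustrative sketch, not code from the talk; `blockSum`, `sumGPU`, and `THREADS` are hypothetical names.

```cuda
#include <vector>
#include <cuda_runtime.h>

#define THREADS 256  // threads per block; must be a power of two for this reduction

// Memory levels used here: `v` typically lives in a register (private to one thread),
// `partial` lives in shared memory (visible to the whole block, on-chip and fast),
// and `in` / `blockSums` live in global memory (visible to every thread, off-chip).
__global__ void blockSum(const float* in, float* blockSums, int n)
{
    __shared__ float partial[THREADS];
    int i = blockIdx.x * blockDim.x + threadIdx.x;
    float v = (i < n) ? in[i] : 0.0f;  // one global read per thread
    partial[threadIdx.x] = v;
    __syncthreads();
    // Tree reduction in shared memory: halve the number of active threads each step.
    for (int stride = blockDim.x / 2; stride > 0; stride /= 2) {
        if (threadIdx.x < stride)
            partial[threadIdx.x] += partial[threadIdx.x + stride];
        __syncthreads();
    }
    if (threadIdx.x == 0)
        blockSums[blockIdx.x] = partial[0];  // one global write per block
}

// Host wrapper: sums the per-block partial results on the CPU.
float sumGPU(const float* data, int n)
{
    int blocks = (n + THREADS - 1) / THREADS;
    float *dIn, *dSums;
    cudaMalloc(&dIn, n * sizeof(float));
    cudaMalloc(&dSums, blocks * sizeof(float));
    cudaMemcpy(dIn, data, n * sizeof(float), cudaMemcpyHostToDevice);
    blockSum<<<blocks, THREADS>>>(dIn, dSums, n);
    std::vector<float> sums(blocks);
    cudaMemcpy(sums.data(), dSums, blocks * sizeof(float), cudaMemcpyDeviceToHost);
    cudaFree(dIn);
    cudaFree(dSums);
    float total = 0.0f;
    for (float s : sums) total += s;
    return total;
}
```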

- 15. CUDA - back to Matrix Multiplication. The matrix multiplication optimization is explained on the board.
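The board explanation itself is not in the transcript. The standard shared-memory optimization, assumed here rather than taken from the talk, is tiling: each block loads one tile of A and one tile of B into shared memory, all its threads reuse them, and the block moves on to the next pair of tiles. `matMulTiled`, `matMulTiledGPU`, and `TILE` are hypothetical names.

```cuda
#include <cuda_runtime.h>

#define TILE 16  // tile width; each block computes a TILE x TILE piece of C

// Tiled C = A * B (square n x n, row-major). Each step stages one TILE x TILE tile
// of A and one of B in shared memory, so each global element is loaded once per
// block instead of once per thread.
__global__ void matMulTiled(const float* A, const float* B, float* C, int n)
{
    __shared__ float tileA[TILE][TILE];
    __shared__ float tileB[TILE][TILE];
    int row = blockIdx.y * TILE + threadIdx.y;
    int col = blockIdx.x * TILE + threadIdx.x;
    float sum = 0.0f;
    for (int t = 0; t < (n + TILE - 1) / TILE; t++) {
        int aCol = t * TILE + threadIdx.x;  // column of A this thread loads
        int bRow = t * TILE + threadIdx.y;  // row of B this thread loads
        // Out-of-range elements are padded with zero so they do not change the sum.
        tileA[threadIdx.y][threadIdx.x] = (row < n && aCol < n) ? A[row * n + aCol] : 0.0f;
        tileB[threadIdx.y][threadIdx.x] = (bRow < n && col < n) ? B[bRow * n + col] : 0.0f;
        __syncthreads();  // both tiles fully loaded
        for (int k = 0; k < TILE; k++)
            sum += tileA[threadIdx.y][k] * tileB[k][threadIdx.x];
        __syncthreads();  // everyone done reading before the next load
    }
    if (row < n && col < n)
        C[row * n + col] = sum;
}

void matMulTiledGPU(const float* A, const float* B, float* C, int n)
{
    size_t bytes = (size_t)n * n * sizeof(float);
    float *dA, *dB, *dC;
    cudaMalloc(&dA, bytes);
    cudaMalloc(&dB, bytes);
    cudaMalloc(&dC, bytes);
    cudaMemcpy(dA, A, bytes, cudaMemcpyHostToDevice);
    cudaMemcpy(dB, B, bytes, cudaMemcpyHostToDevice);
    dim3 block(TILE, TILE);
    dim3 grid((n + TILE - 1) / TILE, (n + TILE - 1) / TILE);
    matMulTiled<<<grid, block>>>(dA, dB, dC, n);
    cudaMemcpy(C, dC, bytes, cudaMemcpyDeviceToHost);
    cudaFree(dA);
    cudaFree(dB);
    cudaFree(dC);
}
```

Global-memory traffic drops by roughly a factor of `TILE`, which is the same idea the optimized closest-pair kernel applies to its list of points.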

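- 16. CUDA - Closest Pair in 2D (cont.) Explaining the optimized code on the board:

```cuda
#include <cfloat>  // FLT_MAX

// Assumed values: the slide uses these names without defining them.
#define blockSize 128        // threads per block; launch with blockDim.x == blockSize
#define reasonableINF 1e30f  // coordinate used to pad the last, partial tile

// For every point, computes the squared distance to its nearest other point.
// Each block walks over all tiles of blockSize points, staging each tile in shared memory.
__global__ void FindClosestGPU2(float2* points, float* vals, int count)
{
    __shared__ float2 sharedPoints[blockSize];
    if (count <= 1) return;

    int idx = threadIdx.x + blockIdx.x * blockDim.x;
    float2 thisPoint;
    float distanceToClosest = FLT_MAX;
    if (idx < count) thisPoint = points[idx];

    // Assumes gridDim.x * blockSize >= count, so gridDim.x tiles cover all points.
    for (int currentBlockOfPoints = 0; currentBlockOfPoints < gridDim.x; currentBlockOfPoints++) {
        // Cooperative load: each thread copies one point of the current tile.
        if (threadIdx.x + currentBlockOfPoints * blockSize < count)
            sharedPoints[threadIdx.x] = points[threadIdx.x + currentBlockOfPoints * blockSize];
        else
            sharedPoints[threadIdx.x].x = reasonableINF, sharedPoints[threadIdx.x].y = reasonableINF;
        __syncthreads();  // the whole tile must be loaded before anyone reads it

        if (idx < count) {
            float *ptr = &sharedPoints[0].x;
            for (int i = 0; i < blockSize; i++) {
                float dist = (thisPoint.x - ptr[0]) * (thisPoint.x - ptr[0]) +
                             (thisPoint.y - ptr[1]) * (thisPoint.y - ptr[1]);
                ptr += 2;
                // Skip padding slots and the point itself.
                if (dist < distanceToClosest && (i + currentBlockOfPoints * blockSize < count)
                    && (i + currentBlockOfPoints * blockSize != idx))
                    distanceToClosest = dist;
            }
        }
        __syncthreads();  // no thread may overwrite the tile while others still read it
    }
    if (idx < count)
        vals[idx] = distanceToClosest;  // squared distance; the closest pair is the minimum over vals
}
```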

- 17. CNN

- 18. Convolution, the first operation to optimize
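As a baseline for what "optimizing convolution" starts from, here is a naive single-channel sketch with one thread per output pixel and no padding or stride. It is illustrative only: `conv2dValid` and `conv2dGPU` are hypothetical names, and like CNN frameworks it computes a cross-correlation (the kernel is not flipped).

```cuda
#include <cuda_runtime.h>

// Naive single-channel "valid" 2D convolution as used in CNN layers (strictly a
// cross-correlation: the kernel is not flipped). One thread per output pixel.
// out has (h - k + 1) x (w - k + 1) elements; all arrays are row-major.
__global__ void conv2dValid(const float* in, int h, int w,
                            const float* kernel, int k, float* out)
{
    int outW = w - k + 1, outH = h - k + 1;
    int x = blockIdx.x * blockDim.x + threadIdx.x;
    int y = blockIdx.y * blockDim.y + threadIdx.y;
    if (x >= outW || y >= outH) return;
    float sum = 0.0f;
    for (int i = 0; i < k; i++)
        for (int j = 0; j < k; j++)
            sum += in[(y + i) * w + (x + j)] * kernel[i * k + j];
    out[y * outW + x] = sum;
}

void conv2dGPU(const float* in, int h, int w, const float* kernel, int k, float* out)
{
    int outH = h - k + 1, outW = w - k + 1;
    float *dIn, *dK, *dOut;
    cudaMalloc(&dIn, h * w * sizeof(float));
    cudaMalloc(&dK, k * k * sizeof(float));
    cudaMalloc(&dOut, outH * outW * sizeof(float));
    cudaMemcpy(dIn, in, h * w * sizeof(float), cudaMemcpyHostToDevice);
    cudaMemcpy(dK, kernel, k * k * sizeof(float), cudaMemcpyHostToDevice);
    dim3 block(16, 16);
    dim3 grid((outW + 15) / 16, (outH + 15) / 16);
    conv2dValid<<<grid, block>>>(dIn, h, w, dK, k, dOut);
    cudaMemcpy(out, dOut, outH * outW * sizeof(float), cudaMemcpyDeviceToHost);
    cudaFree(dIn);
    cudaFree(dK);
    cudaFree(dOut);
}
```

Caffe itself does not use a direct kernel like this for its convolution layer; it lowers convolution to a matrix multiplication (im2col followed by GEMM), which reuses the heavily optimized matrix-multiply path.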

- 19. Pooling, the second operation to optimize
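Pooling parallelizes the same way: every output element is an independent reduction over a small window. The sketch below shows max pooling with one thread per output, assuming a single channel, no padding, and windows that fit inside the input; `maxPool2d` and `maxPoolGPU` are hypothetical names, and the output-size rounding here is not claimed to match Caffe's.

```cuda
#include <cfloat>
#include <cuda_runtime.h>

// Max pooling with a size x size window and the given stride, single channel.
// One thread per output element; out has ((h - size) / stride + 1) rows and
// ((w - size) / stride + 1) columns.
__global__ void maxPool2d(const float* in, int h, int w, int size, int stride, float* out)
{
    int outH = (h - size) / stride + 1, outW = (w - size) / stride + 1;
    int x = blockIdx.x * blockDim.x + threadIdx.x;
    int y = blockIdx.y * blockDim.y + threadIdx.y;
    if (x >= outW || y >= outH) return;
    float best = -FLT_MAX;
    for (int i = 0; i < size; i++)
        for (int j = 0; j < size; j++)
            best = fmaxf(best, in[(y * stride + i) * w + (x * stride + j)]);
    out[y * outW + x] = best;
}

void maxPoolGPU(const float* in, int h, int w, int size, int stride, float* out)
{
    int outH = (h - size) / stride + 1, outW = (w - size) / stride + 1;
    float *dIn, *dOut;
    cudaMalloc(&dIn, h * w * sizeof(float));
    cudaMalloc(&dOut, outH * outW * sizeof(float));
    cudaMemcpy(dIn, in, h * w * sizeof(float), cudaMemcpyHostToDevice);
    dim3 block(16, 16);
    dim3 grid((outW + 15) / 16, (outH + 15) / 16);
    maxPool2d<<<grid, block>>>(dIn, h, w, size, stride, dOut);
    cudaMemcpy(out, dOut, outH * outW * sizeof(float), cudaMemcpyDeviceToHost);
    cudaFree(dIn);
    cudaFree(dOut);
}
```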

- 20. Results

- 21. LeNet Results. The MNIST database of handwritten digits has a training set of 60,000 examples and a test set of 10,000 examples. We used OpenBLAS for parallelization on the CPU. Because the data set is small, the parallelization overhead was not compensated by the speedup. [Chart: "CNN"; x-axis: 1 to 4 CPU cores; y-axis: time in seconds; series: with GPU, without GPU]

- 22. AutoEncoder

- 23. AutoEncoders Results. The MNIST database of handwritten digits has a training set of 60,000 examples and a test set of 10,000 examples. The main operation here is the inner product. [Chart: "Auto Encoder"; x-axis: 1 to 3 CPU cores; y-axis: time in seconds; series: with GPU, without GPU]
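Since the inner product is the main operation here, a minimal sketch of a fully connected layer, y = W x + b for a single input vector, shows what the GPU computes: one thread per output neuron, each doing a dot product over the inputs. `innerProduct` and `innerProductGPU` are hypothetical names, and `W` is assumed to be stored row-major as outputs x inputs.

```cuda
#include <cuda_runtime.h>

// Fully connected ("inner product") layer: y = W x + b, with W of size
// outputs x inputs in row-major order. One thread per output neuron.
__global__ void innerProduct(const float* W, const float* x, const float* b,
                             float* y, int inputs, int outputs)
{
    int o = blockIdx.x * blockDim.x + threadIdx.x;
    if (o >= outputs) return;
    float sum = b[o];
    for (int i = 0; i < inputs; i++)
        sum += W[o * inputs + i] * x[i];
    y[o] = sum;
}

void innerProductGPU(const float* W, const float* x, const float* b,
                     float* y, int inputs, int outputs)
{
    float *dW, *dx, *db, *dy;
    cudaMalloc(&dW, inputs * outputs * sizeof(float));
    cudaMalloc(&dx, inputs * sizeof(float));
    cudaMalloc(&db, outputs * sizeof(float));
    cudaMalloc(&dy, outputs * sizeof(float));
    cudaMemcpy(dW, W, inputs * outputs * sizeof(float), cudaMemcpyHostToDevice);
    cudaMemcpy(dx, x, inputs * sizeof(float), cudaMemcpyHostToDevice);
    cudaMemcpy(db, b, outputs * sizeof(float), cudaMemcpyHostToDevice);
    int threads = 128;
    innerProduct<<<(outputs + threads - 1) / threads, threads>>>(dW, dx, db, dy, inputs, outputs);
    cudaMemcpy(y, dy, outputs * sizeof(float), cudaMemcpyDeviceToHost);
    cudaFree(dW);
    cudaFree(dx);
    cudaFree(db);
    cudaFree(dy);
}
```

For training, a whole mini-batch is processed at once, which turns this matrix-vector product into a matrix-matrix product; in practice a library GEMM such as cuBLAS is much faster than this hand-written loop.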

![[Bryan Catanzaro]](https://image.slidesharecdn.com/cudaandcaffe-141214063345-conversion-gate02/85/CUDA-and-Caffe-for-deep-learning-5-320.jpg)
