Da Vinci - A scalable architecture for neural network computing (updated v4)

- 1. HUAWEI | MUNICH RESEARCH CENTER, HUAWEI TECHNOLOGIES DÜSSELDORF GmbH: Da Vinci - A scalable architecture for neural network computing. Heiko Joerg Schick, Chief Architect | Advanced Computing. Version 4. Presenting the work of many people at Huawei.

- 2. Agenda /1
  - Introduction
    - Computation in brains and machines
    - The hype roller coaster of artificial intelligence | Neural networks beat human performance
    - Two distinct eras of compute usage in training AI systems
    - Microprocessor trends | Rich variety of computing architectures
    - Comparison of processors for deep learning | Preferred architectures for compute are shifting
    - Data structure of digital images | Kernel convolution example | Architecture of LeNet-5
  - Applicability of artificial intelligence
    - Ubiquitous and future AI computation requirements
    - Artificial intelligence in modern medicine
  - Product realisation
    - Scalable across devices
    - Focus on innovation, continuous dedication and backward compatibility
    - HiSilicon Ascend 310 | HiSilicon Ascend 910 | HiSilicon Kunpeng 920
  - Da Vinci architecture
    - Building blocks and compute intensity
    - Advantages of special compute units
    - Da Vinci core architecture | Micro-architectural configurations
  - Contact: Heiko Joerg Schick, Chief Architect | Advanced Computing, Munich Research Center, HUAWEI TECHNOLOGIES Duesseldorf GmbH, Riesstrasse 25, C3, 80992 Munich; Mobile +49-151-54682218; E-mail heiko.schick@huawei.com

- 3. Agenda /2
  - End-to-end lifecycle
    - Implementation of the end-to-end lifecycle in AI projects
    - The challenges of AI implementations
  - Software stack
    - Ascend AI software stack | Logical architecture
    - Software flow for model conversion and deployment | Framework manager | Digital vision pre-processing
    - Mind Studio | Model Zoo (excerpt)
  - Gain more practical experience
    - Atlas 200 DK developer board | Application examples | Getting started | Environment deployment
    - Ascend developer community
    - Getting started with the Atlas 200 DK developer board
  - Preparing the Ubuntu-based development environment
    - Environment deployment
    - Hardware and software requirements | About version 1.73.0.0
    - Install environment dependencies
    - Install the toolkit packages
    - Install the media module device driver
    - Install Mind Studio

- 4. Agenda /3
  - Create and write SD card image
    - Setting up the operating environment
  - Boot and connect to the Atlas 200 DK developer board
    - Power on the Atlas 200 DK developer board
  - Install third-party packages
    - Installation of additional packages (FFmpeg, OpenCV and Python)

- 5. Introduction

- 6. “Nothing in the natural world makes sense — except when seen in the light of evolution.” – David Attenborough

- 7. “It [artificial intelligence] would take off on its own and redesign itself at an ever increasing rate. Humans, who are limited by slow biological evolution, couldn't compete and would be superseded.” – Stephen Hawking

- 8. “We are going in the direction of artificial intelligence or hybrid intelligence where a part of our brain will get information from the cloud and the other half is from you, so all this stuff will happen in the future.” – Arnold Schwarzenegger; “Our technology, our machines, is part of our humanity. We created them to extend ourself, and that is what is unique about human beings.” – Ray Kurzweil

- 12. Computation in brains and machines [Conradt, 2014], [Wikipedia, 2020]
  - Getting to know your brain
    - 1.3 kg, about 2% of body weight
    - 10¹¹ neurons
    - Neuron growth: 250,000/min (early pregnancy), but also a loss of 1 neuron/second
  - Operating mode of neurons
    - Analog leaky integration in the soma
    - Digital pulses (spikes) along neurites
    - 10¹⁴ stochastic synapses
    - Typical operating "frequency": ≤ 100 Hz, typically ~10 Hz, asynchronous
  - Getting to know your computer's processor
    - 50 g, irrelevant for most applications
    - 2 × 10¹⁰ transistors (HiSilicon Kunpeng 920)
    - Ideally no modification over its lifetime
  - Operating mode of processors
    - No analog components
    - Digital signal propagation
    - Reliable signal propagation
    - Typical operating frequency: several GHz, synchronous
  - Chart: neurons in the brain / whole nervous system (log scale): sponge (no neurons), Caenorhabditis elegans 302, larval zebrafish 1.0 × 10⁵, honeybee 9.6 × 10⁵, frog 1.6 × 10⁷, house mouse 7.1 × 10⁷, house cat 2.5 × 10⁸, chimpanzee 7.4 × 10⁹, human 8.6 × 10¹⁰, African elephant 2.57 × 10¹¹

- 14. The hype roller coaster of artificial intelligence [Villain, 2019]
  - Chart: expectations (low to high) from 1940 to 2020, with the 1st and 2nd AI winters. Computing eras: computers "invented", computers "available", workstations and PCs, GPUs, powerful PDAs, pervasive ubiquitous computing, and bandwidth, storage and compute universally available. Milestones: 1943 neural nets; 1958 artificial NN (perceptrons); 1960 back propagation; 1965 deep learning; 1970 expert systems; 1982 Japan 5th Generation Project / US 5th Generation Project (MCC); 1983 Thinking Machines; 1984 CYC; 1997 LSTM; 1999 Aibo; 1999 first GPU; 2010 ImageNet; 2012 Google Brain; 2013 Hanson Robotics; 2015 DeepMind AlphaGo; 2017 AI citizen in Saudi Arabia (Sophia) / Aibo 2
  - Advantages
    - Simplifies product marketing
    - Reduces data entry errors
    - Predicts future sales accurately
    - Augments customer interaction and improves satisfaction
    - Foresees maintenance needs
    - Provides medical diagnosis
    - Accurate and rational decision making
    - Neural networks beat human performance (in some disciplines)
  - Disadvantages
    - Massive amounts of training data are needed
    - Labelling data is tedious and error-prone
    - Machines cannot explain themselves
    - Bias makes the results less usable
    - Machine learning solutions cannot cooperate
    - Deep learning requires much more data than traditional machine learning algorithms
    - It takes a lot of time to develop a neural network
    - Neural networks are also more computationally expensive than traditional algorithms

- 15. Neural networks beat human performance /1 [Giró-i-Nieto, 2016], [Gershgorn, 2017]: Example: image classification on ImageNet, with 15 million images in the dataset, 22,000 object classes (categories) and 1 million images with bounding boxes.

- 17. Neural networks beat human performance /2 [Russakovsky et al., 2015], [Papers With Code, 2020]: Example: image classification on ImageNet
  - Chart: top-1 accuracy (all results and year's best) and top-5 accuracy, 2011-2020 (0-100%), compared with the estimated top-5 human classification error (5.1%). Year's best models: SIFT + FVs (2011), AlexNet - 7CNNs (2012), Five Base + Five HiRes (2013), VGG-19 (2014), ResNet-152 (2015), ResNeXt-101 64x4 (2016), PNASNet-5 (2017), ResNeXt-101 32x48d (2018), BiT-L (ResNet) (2019), FixEfficientNet-L2 (2020)

- 18. Two distinct eras of compute usage in training AI systems [McCandlish et al., 2018], [Amodei et al., 2019]
  - Chart: training compute of landmark AI systems, 1950-2020 (log scale, 10⁻¹⁴ to 10⁴): Perceptron, NETtalk, ALVINN, TD-Gammon v2.1, RNN for speech, LeNet-5, BiLSTM for speech, deep belief nets and layer-wise pretraining, AlexNet, DQN, VGG, ResNets, neural machine translation, TI7 Dota 1v1, AlphaGo Zero
  - First era: compute follows a 2-year doubling (Moore's law); modern era: a 3.4-month doubling
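To make the gap between the two trend lines concrete, the sketch below converts a doubling time into the equivalent growth factor per year. The function name is illustrative; the only inputs taken from the chart are the 2-year and 3.4-month doubling periods.

```python
def growth_per_year(doubling_time_months: float) -> float:
    """Factor by which a quantity grows in 12 months if it doubles every `doubling_time_months`."""
    return 2 ** (12 / doubling_time_months)


moore = growth_per_year(24)    # first era: 2-year doubling (Moore's law)
modern = growth_per_year(3.4)  # modern era: 3.4-month doubling

print(f"2-year doubling:    x{moore:.2f} per year")
print(f"3.4-month doubling: x{modern:.1f} per year")
```

A 2-year doubling corresponds to roughly 1.4x per year, while a 3.4-month doubling compounds to more than 11x per year.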

- 22. Microprocessor trends [Brookes, 1986], [Sutter, 2005], [Rupp, 2015], [Rupp, 2018a], [Rupp, 2018b], [Hennessy et al., 2019]
  - Chart, 1970-2020 (log scale): transistors (thousands), single-thread SPECint (thousands), frequency (MHz), typical power (watts) and number of logical cores. Original data up to the year 2010 collected and plotted by M. Horowitz, F. Labonte, O. Shacham, K. Olukotun, L. Hammond and C. Batten; new plot and data collected for 2010-2017 by K. Rupp
  - 1. Frequency wall: processor clock rate stops increasing
  - 2. Power wall: power cannot be increased
  - 3. Memory wall: core count doubling ~every 2 years, memory capacity doubling ~every 3 years
  - Milestones: Moore's Law; "No Silver Bullet"; "The Free Lunch is Over"; "A New Golden Age for Computer Architectures"
  - Performance growth: CISC 2x/3.5 years (22%/year); RISC 2x/1.5 years (52%/year); end of Dennard scaling → multicore 2x/3.5 years (23%/year); Amdahl's law → 2x/6 years (12%/year); end of the line → 2x/20 years (3%/year)
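The doubling times and annual growth rates in the last bullet are two views of the same number: at a constant rate r, the doubling time is ln 2 / ln(1 + r). A minimal sketch (the function name is illustrative) recomputes the doubling times from the quoted rates; the results land close to the rounded figures on the slide.

```python
import math


def doubling_time_years(annual_growth: float) -> float:
    """Years needed to double at a constant annual growth rate (0.22 means 22 %/year)."""
    return math.log(2) / math.log(1 + annual_growth)


ERAS = {
    "CISC": 0.22,
    "RISC": 0.52,
    "Multicore (end of Dennard scaling)": 0.23,
    "Amdahl's law": 0.12,
    "End of the line": 0.03,
}

for era, rate in ERAS.items():
    print(f"{era:36s} {rate:4.0%}/year -> 2x every {doubling_time_years(rate):4.1f} years")
```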

- 23. Rich variety of computing architectures
  - Wide range of options to optimise for performance and efficiency:
    - Central processing unit (CPU) executes general-purpose applications (e.g. N-body methods, computational logic, MapReduce, dynamic programming)
    - General-purpose computing on graphics processing units (GPGPU) offloads compute-intensive and time-consuming work from the CPU (e.g. dense and sparse linear algebra)
    - Digital signal processor (DSP) accelerates signal processing for post-camera operations (e.g. spectral methods)
    - Image signal processor (ISP) executes the processing for the camera sensor pipeline
    - Vision processing unit (VPU) accelerates machine vision tasks
    - Network processor (NP) accelerates packet processing
    - Neural processing unit (NPU) accelerates artificial intelligence applications (e.g. matrix-matrix multiplication, dot products, scalar a times x plus y (AXPY))
  - Each of these options represents a different power, performance and area trade-off, which should be considered for the specific application scenario.
  - Chart (not to exact proportion): performance (GOPS), power (mW) and area (mm²) of GPGPU, vector DSP, scalar DSP, large CPU, medium CPU, mobile GPU, NPU Max, NPU Lite and NPU Tiny
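The three NPU workloads named above are the core arithmetic of neural-network layers. A plain-Python reference sketch of what each computes (the function names are illustrative, not an NPU API); an NPU implements the same arithmetic in wide, parallel hardware rather than in loops.

```python
from typing import List, Sequence

Matrix = List[List[float]]


def dot(x: Sequence[float], y: Sequence[float]) -> float:
    """Dot product: sum_i x[i] * y[i]."""
    return sum(a * b for a, b in zip(x, y))


def axpy(a: float, x: Sequence[float], y: Sequence[float]) -> List[float]:
    """'Scalar a times x plus y': element-wise a * x[i] + y[i]."""
    return [a * xi + yi for xi, yi in zip(x, y)]


def matmul(A: Matrix, B: Matrix) -> Matrix:
    """Matrix-matrix multiplication C = A B, built from dot products of rows of A and columns of B."""
    columns = list(zip(*B))
    return [[dot(row, col) for col in columns] for row in A]


print(dot([1, 2, 3], [4, 5, 6]))                   # 32
print(axpy(2, [1, 2, 3], [10, 20, 30]))            # [12, 24, 36]
print(matmul([[1, 2], [3, 4]], [[5, 6], [7, 8]]))  # [[19, 22], [43, 50]]
```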

- 24. Rich variety of computing architectures (continued): Target: search for the optimal power-performance-area (PPA) design point.

- 25. Comparison of processors for deep learning

| | CPU | GPU | FPGA | ASIC |
|---|---|---|---|---|
| Peak processing power | Moderate | High | Very high | Highest |
| Power consumption | High | Very high | Very low | Low |
| Flexibility | Highest | Medium | Very high | Lowest |
| Training | Poor at training | Production-ready training hardware | Not efficient | Potentially the best for training |
| Inference | Poor at inference, but sometimes feasible | Average for inference | Good for inference | Potentially the best for inference |
| Improvement through | Adding new instructions (e.g. AVX-512, SVE) | Adding cores or increasing frequency (higher power consumption and cost) | Adding new modules (e.g. support for multiple data types, tensor cores) | Adding special tensor cores for training and inference |
| Ecosystem | Rich ecosystem | Rich and mature ecosystem | Proprietary ecosystem (HDL programmable) | Allows leveraging the existing ecosystem |
| Challenges | Low computation and power efficiency | High cost, low energy-efficiency ratio and high latency | Long development period, high barrier to entry | High manufacturing cost, high risk |

- 26. Preferred architectures for compute are shifting [Batra et al., 2018]
  - Chart: share (%) of data-centre and edge architectures for inference and training, 2017 vs. 2025, split into ASIC¹, CPU², FPGA³, GPU⁴ and other
  - ¹ Application-specific integrated circuit; ² Central processing unit; ³ Field-programmable gate array; ⁴ Graphics processing unit

- 27. Convolutional Neural Networks

- 28. Data structure of digital images
  - A colour image is stored as three 8 × 8 matrices of intensities (0-255), one each for the red, green and blue channels. In this example the three channels hold identical values (0 = black, 255 = white):
    - Row 0: 0 0 0 0 0 0 0 0
    - Row 1: 0 0 0 255 255 0 0 0
    - Row 2: 0 0 255 0 0 255 0 0
    - Row 3: 0 0 255 0 0 255 0 0
    - Row 4: 0 0 0 255 255 255 0 0
    - Row 5: 0 0 0 0 0 255 0 0
    - Row 6: 0 0 255 255 255 0 0 0
    - Row 7: 0 0 0 0 0 0 0 0
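A minimal sketch of the data structure on the slide, assuming a height × width × channel layout with one (R, G, B) triple per pixel. That layout is a common convention in image libraries but is an assumption here; the helper `channel` is hypothetical.

```python
# 8 x 8 intensity pattern from the slide (0 = off, 255 = full intensity).
PATTERN = [
    [0, 0, 0, 0, 0, 0, 0, 0],
    [0, 0, 0, 255, 255, 0, 0, 0],
    [0, 0, 255, 0, 0, 255, 0, 0],
    [0, 0, 255, 0, 0, 255, 0, 0],
    [0, 0, 0, 255, 255, 255, 0, 0],
    [0, 0, 0, 0, 0, 255, 0, 0],
    [0, 0, 255, 255, 255, 0, 0, 0],
    [0, 0, 0, 0, 0, 0, 0, 0],
]

# Height x width x channels: each pixel is an (R, G, B) triple.
# All three channels carry the same value, as on the slide.
image = [[(v, v, v) for v in row] for row in PATTERN]


def channel(img, c):
    """Return one colour plane (0 = red, 1 = green, 2 = blue) as an 8 x 8 matrix."""
    return [[pixel[c] for pixel in row] for row in img]


red, green, blue = (channel(image, c) for c in range(3))
white_pixels = sum(pixel == (255, 255, 255) for row in image for pixel in row)

print(len(image), len(image[0]), len(image[0][0]))  # 8 8 3
print(red == green == blue)                         # True
print(white_pixels)                                 # 13
```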

- 30. Kernel convolution example /1
  - Input image f (6 × 6): rows 0-2 are 10 10 10 10 10 10; rows 3-5 are 0 0 0 0 0 0
  - Kernel h (3 × 3): rows (1, 2, 1), (0, 0, 0), (-1, -2, -1)
  - Feature map G (4 × 4): rows (0, 0, 0, 0), (40, 40, 40, 40), (40, 40, 40, 40), (0, 0, 0, 0)
  - Subsequent feature map values are calculated according to the following formula, where the input image is denoted by f and the kernel by h. The row and column indices of the feature map (result matrix) are m and n, respectively:
    $$G[m,n] = (f * h)[m,n] = \sum_{j} \sum_{k} h[j,k]\, f[m-j,\, n-k]$$

- 31. Kernel convolution example /2
  - Input image, kernel and feature map as in example /1. Worked value for the highlighted 3 × 3 window of the input (rows 10 10 10 / 10 10 10 / 0 0 0):
    $$G[1,1] = 10 \cdot 1 + 10 \cdot 2 + 10 \cdot 1 + 10 \cdot 0 + 10 \cdot 0 + 10 \cdot 0 + 0 \cdot (-1) + 0 \cdot (-2) + 0 \cdot (-1) = 40$$
  - This value multiplies the window element-wise by the kernel as written, without flipping it. Strictly, that is cross-correlation, $G[m,n] = \sum_{j} \sum_{k} h[j,k]\, f[m+j,\, n+k]$ (with j and k running over the kernel indices 0-2), which is what CNN layers compute in practice. The flipped-index convolution $G[m,n] = \sum_{j} \sum_{k} h[j,k]\, f[m-j,\, n-k]$ rotates the kernel by 180° and would give -40 for the same window.
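A minimal pure-Python sketch (function names are illustrative) that reproduces the feature map of examples /1 and /2 and shows how the flipped-kernel convolution formula differs from the unflipped sliding used in the worked value. Both use "valid" output indexing, so a 6 × 6 input and a 3 × 3 kernel give a 4 × 4 feature map.

```python
def correlate2d(f, h):
    """'Valid' cross-correlation: G[m][n] = sum_j sum_k h[j][k] * f[m + j][n + k]."""
    kh, kw = len(h), len(h[0])
    out_h, out_w = len(f) - kh + 1, len(f[0]) - kw + 1
    return [[sum(h[j][k] * f[m + j][n + k] for j in range(kh) for k in range(kw))
             for n in range(out_w)]
            for m in range(out_h)]


def convolve2d(f, h):
    """'Valid' convolution (f * h): cross-correlation with the kernel rotated by 180 degrees."""
    rotated = [row[::-1] for row in h[::-1]]
    return correlate2d(f, rotated)


# Input image f (6 x 6): three rows of 10 above three rows of 0
f = [[10] * 6 for _ in range(3)] + [[0] * 6 for _ in range(3)]
# Kernel h from example /1
h = [[1, 2, 1],
     [0, 0, 0],
     [-1, -2, -1]]

G_corr = correlate2d(f, h)  # unflipped sliding, as in the worked value G[1,1] = 40
G_conv = convolve2d(f, h)   # flipped-kernel convolution: same magnitude, opposite sign here

for row in G_corr:
    print(row)
```

Because CNN kernels are learned, the flip makes no practical difference there: a network trained with cross-correlation simply learns the rotated kernel.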

- 32. Kernel convolution example /3
  - Input image (6 × 6): rows 0-2 are 10 10 10 10 10 10; rows 3-5 are 0 0 0 0 0 0
  - Outline kernel (3 × 3): rows (-1, -1, -1), (-1, 8, -1), (-1, -1, -1)
  - Feature map (4 × 4): rows (0, 0, 0, 0), (30, 30, 30, 30), (-30, -30, -30, -30), (0, 0, 0, 0)
  - An outline kernel (also called an "edge" kernel) highlights large differences in pixel values. A pixel whose neighbours have nearly the same intensity appears black in the new image, while one whose neighbours differ strongly appears white.
  - Please have a look at GIMP's documentation on general filters using convolution matrices. You can also apply custom filters in Photoshop via Filter ▸ Other ▸ Custom.
  - Carl Friedrich Gauß (1777–1855)

- 33. Kernel convolution example /4
  - Input image (6 × 6): rows 0-2 are 10 10 10 10 10 10; rows 3-5 are 0 0 0 0 0 0
  - Sobel kernel (3 × 3): rows (-1, -2, -1), (0, 0, 0), (1, 2, 1), i.e. the kernel of example /1 mirrored vertically
  - Feature map (4 × 4), sliding the kernel without flipping as in the worked value of example /2: rows (0, 0, 0, 0), (-40, -40, -40, -40), (-40, -40, -40, -40), (0, 0, 0, 0). The mirrored kernel reports the same edge with the opposite sign.
  - Sobel kernels show only the differences between adjacent pixel values in a particular direction.
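The same unflipped sliding applied to the outline and Sobel kernels of examples /3 and /4, as a sketch. `to_pixels` is a hypothetical helper that clips values to 0-255 for display; clipping is one common convention (taking absolute values is another) and is an assumption here. It explains why negative responses end up black.

```python
def correlate2d(f, h):
    """'Valid' cross-correlation: G[m][n] = sum_j sum_k h[j][k] * f[m + j][n + k]."""
    kh, kw = len(h), len(h[0])
    return [[sum(h[j][k] * f[m + j][n + k] for j in range(kh) for k in range(kw))
             for n in range(len(f[0]) - kw + 1)]
            for m in range(len(f) - kh + 1)]


def to_pixels(g):
    """Clip feature-map values to the displayable 8-bit range [0, 255]."""
    return [[min(255, max(0, v)) for v in row] for row in g]


# Same 6 x 6 input image as in the examples: three rows of 10 above three rows of 0
image = [[10] * 6 for _ in range(3)] + [[0] * 6 for _ in range(3)]
KERNELS = {
    "outline": [[-1, -1, -1], [-1, 8, -1], [-1, -1, -1]],
    "sobel": [[-1, -2, -1], [0, 0, 0], [1, 2, 1]],
}

results = {name: correlate2d(image, k) for name, k in KERNELS.items()}
for name, fmap in results.items():
    # Every column is identical, so print the first column of raw and clipped values
    print(name, [row[0] for row in fmap], [row[0] for row in to_pixels(fmap)])
```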

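- 34. Architecture of LeNet-5
  - INPUT: 1 × 32 × 32 image
  - C1: convolution, 6 feature maps of 28 × 28
  - S2: subsampling, 6 feature maps of 14 × 14
  - C3: convolution, 16 feature maps of 10 × 10
  - S4: subsampling, 16 feature maps of 5 × 5
  - C5: 120 feature maps of 1 × 1
  - F6: fully connected, 84 units
  - OUTPUT: 10 classes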
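The feature-map sizes follow from the output-size formula for an unpadded window, (size - kernel) / stride + 1. A sketch assuming 5 × 5 convolutions with stride 1 and 2 × 2 subsampling with stride 2, as in the original LeNet-5; the function and table names are illustrative.

```python
def window_out(size: int, kernel: int, stride: int = 1) -> int:
    """Output width/height of an unpadded convolution or pooling window."""
    return (size - kernel) // stride + 1


# (layer, number of feature maps, window size, stride): 5x5 convolutions, 2x2 subsampling
LAYERS = [
    ("C1", 6, 5, 1),
    ("S2", 6, 2, 2),
    ("C3", 16, 5, 1),
    ("S4", 16, 2, 2),
    ("C5", 120, 5, 1),
]

size = 32
shapes = [("INPUT", 1, size)]
for name, maps, kernel, stride in LAYERS:
    size = window_out(size, kernel, stride)
    shapes.append((name, maps, size))
# F6 and OUTPUT are fully connected layers, i.e. vectors of 84 and 10 units
shapes += [("F6", 84, 1), ("OUTPUT", 10, 1)]

for name, maps, s in shapes:
    print(f"{name:6s} {maps:3d} x {s} x {s}")
```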

- 35. Applicability of artificial intelligence

- 37. Ubiquitous and future AI computation requirements
  - Chart: memory footprint (GB, low to high) versus computation (TOPS, low to high) for device classes today and in n and n+1 years: smartwatches, wearables, smartphone, smart home, robotics, autonomous vehicles and data centre. The scalability axis spans Da Vinci Nano, Da Vinci Tiny, Da Vinci Lite, Da Vinci Mini and Da Vinci Max.
  - Example applications: wearable speech-based controller, gesture control devices, smart footwear, smart rings, smart contact lenses, smart photo management, voice as UI, biometric identification, eye tracking, image super-resolution, real-time language translation, environmental awareness, intelligent ambient cards, virtual private assistants, situationally adaptive behaviour, smart thermostats, intelligent lighting, smart mirrors, smart robots, commercial UAVs (drones), drone delivery, fully autonomous robots, adaptive cruise control, driver monitoring system, semantic scene understanding, autonomous taxi fleets, consumer smart appliances, virtual customer assistants, proactive search bots, augmented reality, cognitive expert advisors, prescriptive analytics
  - A key element to enable intelligence in physical devices is a scalable AI architecture.

- 38. Artificial intelligence in modern medicine [Medical AI Index, 2020]
  - Medical domains: radiology 48%, pathology 13%, microscopy 8%, ophthalmology 6%, endoscopy 6%, genomics 4%, dentistry 2%, colonoscopy 2%, others 11%
  - Medical tasks: classification 32%, segmentation 20%, detection 17%, regression 5%, registration 5%, denoising 4%, quality assessment 2%, plane detection 2%, localisation 2%, generation 2%, reconstruction 2%, others 7%
  - Anatomy: brain 16%, cell 11%, multi-organ 9%, breast 8%, eye 7%, prostate 5%, lung 5%, fetal 5%, heart 4%, gastrointestinal 4%, chest 3%, colon 3%, knee 2%, teeth 2%, blood 2%, liver 2%, infant 2%, abdomen 2%, others 10%

- 39. Artificial intelligence in modern medicine
  - Development and validation of a deep learning algorithm for detection of diabetic retinopathy in retinal fundus photographs [Gulshan et al., 2016]
  - Automated 5-year mortality prediction using deep learning and radiomics features from chest computed tomography [Carneiro et al., 2017]
  - Dermatologist-level classification of skin cancer with deep neural networks [Esteva et al., 2017]
  - Radiologist-level pneumonia detection on chest X-rays with deep learning [Rajpurkar et al., 2017]
  - Applying deep adversarial autoencoders for new molecule development in oncology [Kadurin et al., 2017]
  - A fully convolutional network for quick and accurate segmentation of neuroanatomy [Roy et al., 2018]
  - Classification and mutation prediction from non-small cell lung cancer histopathology images using deep learning [Coudray et al., 2018]

- 40. Artificial intelligence in modern medicine
  - 2018: Deep learning predicts hip fracture using confounding patient and healthcare variables [Badgeley et al., 2018]
  - 2019: AI-driven version of the Berg Balance Scale [Wolff, 2019]
  - 2019: Real-time automatic detection system increases colonoscopic polyp and adenoma detection rates: a prospective randomised controlled study [Wang et al., 2019]
  - 2020: DeepTek detects tuberculosis from X-rays; the FDA approves the first MRI super-resolution product [Salian, 2020], [Oakden-Rayner, 2020], [Subtle Medical Inc., 2020]

- 41. Artificial intelligence in modern medicine: 2020: A deep learning approach to antibiotic discovery [Stokes et al., 2020]. To be continued …

- 42. Product realisation

- 43. Scalable across devices (device, edge and cloud)

| | Earphone | Always-on | Smartphone | Laptop | IPC | Edge server | Data centre |
|---|---|---|---|---|---|---|---|
| Compute | 20 MOPS | 100 GOPS | 1-10 TOPS | 10-20 TOPS | 10-20 TOPS | 10-100 TOPS | 200+ TOPS |
| Power budget | 1 mW | 10 mW | 1-2 W | 3-10 W | 3-10 W | 10-100 W | 200+ W |
| Model size | 10 KB | 100 KB | 10 MB | 10-100 MB | 10-100 MB | 100+ MB | 300+ MB |
| Latency | < 10 ms | ~10 ms | 10-100 ms | 10-500 ms | 10-500 ms | ms to s | ms to s |
| Inference | Y | Y | Y | Y | Y | Y | Y |
| Training | N | N | Y | Y | Y | Y | Y |
| SoC scale | Nano | Tiny | Lite | Mini (Ascend 310) | Mini (Ascend 310) | Multi-Mini (Ascend 310) | Max (Ascend 910) |

- 45. Focus on innovation, continuous dedication and backward compatibility
  - Ascend 910 (training): highest compute density on a single chip
    - Half precision (FP16): 256 TFLOPS
    - Integer precision (INT8): 512 TOPS
    - 128-channel full-HD video decoder: H.264/265
    - Max. power consumption: 350 W
    - 7 nm process
  - Ascend 310 (inference): AI SoC with ultimate efficiency
    - Half precision (FP16): 8 TFLOPS
    - Integer precision (INT8): 16 TOPS
    - 16-channel full-HD video decoder: H.264/265
    - 1-channel full-HD video encoder: H.264/265
    - Max. power consumption: 8 W
    - 12 nm process
  - “Once a technology becomes digital—that is, once it can be programmed in the ones and zeros of computer code—it hops on the back of Moore’s law and begins accelerating exponentially.” – Peter H. Diamandis & Steven Kotler, The Future Is Faster Than You Think
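Dividing the peak figures above by the maximum power consumption gives a rough upper bound on operations per watt. Real workloads rarely reach peak throughput at exactly maximum power, so treat these as spec-sheet ratios rather than measured efficiency; the function and table names are illustrative.

```python
def per_watt(peak: float, max_power_w: float) -> float:
    """Peak throughput divided by maximum power: a spec-sheet ratio, not a measured efficiency."""
    return peak / max_power_w


# Peak figures from the slide: FP16 in TFLOPS, INT8 in TOPS, power in watts.
CHIPS = {
    "Ascend 910": {"FP16": 256, "INT8": 512, "max_power_w": 350},
    "Ascend 310": {"FP16": 8, "INT8": 16, "max_power_w": 8},
}

for name, spec in CHIPS.items():
    for precision in ("FP16", "INT8"):
        ratio = per_watt(spec[precision], spec["max_power_w"])
        print(f"{name} {precision}: {ratio:.2f} per watt")
```

On these numbers the Ascend 310 comes out ahead per watt, and the Ascend 910 in absolute throughput.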

- 46. Focus on innovation, continuous dedication and backward compatibility: Kunpeng and Atlas product portfolio
  - Kunpeng 920: the industry's highest-performance ARM-based server CPU
    - ARMv8.2 architecture
    - Up to 64 cores, 2.6 GHz
    - 8 DDR4 memory channels
    - PCIe 4.0 and CCIX
    - Integrated 100GE LOM, encryption and compression engines
    - Supports 2- or 4-socket interconnects
  - Atlas 200 AI accelerator module (52 mm × 38 mm × 10 mm)
    - 16 TOPS of INT8
    - 16-channel HD video real-time analytics, JPEG decoding
    - 4 GB/8 GB memory, PCIe 3.0 x4 interface
    - Operating temperature: -25°C to +80°C
  - Atlas 900 AI cluster: the pinnacle of computing power
    - Thousands of Ascend 910 AI processors
    - High-speed interconnection
    - Delivers 256 to 1024 PFLOPS at FP16
    - Can complete ResNet-50-based model training within 59.8 seconds, 15% faster than the second-ranking product
    - Faster AI model training with images and speech
  - Atlas 200 DK developer kit: quickly build development environments in 30 minutes
    - 16 TOPS of INT8 @ 24 W
    - 1 USB Type-C, 2 camera interfaces, 1 GE port, 1 SD card slot
    - 4 GB/8 GB memory
  - Atlas 500 AI edge station
    - 16 TOPS of INT8
    - 25-40 W
    - Wi-Fi and LTE
    - 16-channel HD video real-time analytics
    - Fanless design for -40°C to +70°C environments
  - Atlas 300 AI accelerator card
    - 64 TOPS of INT8 @ 67 W
    - 32 GB memory
    - 64-channel HD video real-time analytics
    - Standard half-height half-length PCIe card form factor, applicable to general-purpose servers
  - Atlas 800 deep learning system
    - Plug-and-play installation
    - Ultimate performance
    - Integrated management
  - Server models, from storage-intensive to computing-intensive: 5290 4U 72-drive storage model, 5280 4U 40-drive storage model, 2280 2U 2S balanced model, X6000 2U 4-node high-density model, 1280 1U 2S high-density model, 2480 2U 4S high-performance model
  - Ascend 910 and Ascend 310 chips (specifications as listed on slide 45)

- 47. HiSilicon Kunpeng 920: the industry's highest-performance ARM-based server CPU
  - CPU: 48 cores, ARMv8.2, 3.0 GHz, 48-bit physical address space; 4-issue out-of-order superscalar design; 64 KB L1 instruction cache and 64 KB L1 data cache
  - L2 cache: 512 KB private per core
  - L3 cache: 48 MB shared by all cores (1 MB/core)
  - Memory: 8-channel DDR4-2400/2666/2933/3200
  - PCIe: 40x PCI Express 4.0 lanes
  - Integrated I/O: 8x Ethernet lanes with combo MACs, supporting 2x 100GbE, 2x 40GbE, 8x 25GbE/10GbE or 10x GbE; RoCEv1 and RoCEv2; 4x USB ports; 16x SAS 3.0 lanes and 2x SATA 3.0 lanes
  - CCIX: cache coherency interface for Xilinx FPGA accelerators (collaboration with Xilinx)
  - Management engine: isolated management subsystem (co-works with Arm's SCP and MCP firmware)
  - Scale-up: coherent SMP interface for 2P/4P configurations, up to 240 Gbit/s per port
  - Power: 180 W (64 cores at 2.6 GHz); 150 W (48 cores at 2.6 GHz)
  - Block diagram: ARMv8.2 cores (2.6-3.0 GHz, 64 KB/32 KB L1), each with a 512 KB private L2 cache; 48-64 MB shared L3 cache; coherent fabric and AMBA; 64-bit DDR4 channels; SMP interface (240 Gbps per port); network interface (2x 100 GbE / 2x 40 GbE / 8x 25 GbE / 10x GbE); function accelerators; PCIe 4.0 (up to 40 lanes); SAS/SATA 3.0 (up to 16 lanes); low-speed I/O (NAND/USB/UART/GPIO)
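The memory line above fixes the theoretical peak DRAM bandwidth: channels × transfers per second × bytes per transfer. A sketch of that arithmetic, assuming a 64-bit data bus per channel (as in the block diagram) and ignoring ECC bits, refresh and controller efficiency, so sustained bandwidth will be lower; the function name is illustrative.

```python
def peak_dram_bandwidth_gbs(channels: int, mt_per_s: int, bus_bits: int = 64) -> float:
    """Theoretical peak bandwidth in GB/s (10^9 bytes/s) for `channels` channels at `mt_per_s` MT/s."""
    bytes_per_transfer = bus_bits // 8
    return channels * mt_per_s * 1e6 * bytes_per_transfer / 1e9


for speed in (2400, 2666, 2933, 3200):
    print(f"8 x DDR4-{speed}: {peak_dram_bandwidth_gbs(8, speed):.1f} GB/s")
```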

- 48. HiSilicon Ascend 310: AI SoC with ultimate efficiency
  - (Block diagram: two Da Vinci AI cores and an A55 CPU cluster (with DSU) sit on a 512-bit CHIE interconnect. Also on the interconnect: a task scheduler (TS), a low-power M3 core, an 8 MB on-chip buffer, a 3 MB last-level cache, DMA engines, a full-HD video and JPEG/PNG codec, and two 64-bit LPDDR4x interfaces to LPDDR4 chips. External interfaces: a PCIe 3.0 controller (RC/EP, 1 to 4 lanes) to an x86/Arm host or PCIe EP device, a USB 3.0 device port, Gigabit Ethernet to the network, SPI flash and UART/I2C/SPI/GPIO.)
  - Half precision (FP16): 8 TFLOPS
  - Integer precision (INT8): 16 TOPS
  - 16-channel full-HD video decoder: H.264/265
  - 1-channel full-HD video encoder: H.264/265
  - Max. power consumption: 8 W
  - 12 nm

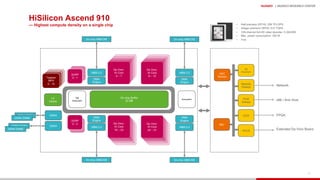

- 49. HiSilicon Ascend 910: highest compute density on a single chip
  - (Block diagram: 32 Da Vinci AI cores (0 to 31, in four groups of eight) with a 32 MB on-chip buffer, alongside four TaiShan MP4 CPU clusters with L3 cache, DVPP units 0 to 3 and a TS subsystem. Memory comes from four on-chip HBM 2.0 dies, each with a DMA engine, and four DDR4 DIMM interfaces. An I/O subsystem (network, PCIe, CCIX, HCCS, IMU and HAC subsystems) connects to the network, an x86/Arm host, FPGAs and an extended Da Vinci board.)
  - Half precision (FP16): 256 TFLOPS
  - Integer precision (INT8): 512 TOPS
  - 128-channel full-HD video decoder: H.264/265
  - Max. power consumption: 350 W
  - 7 nm

- 50. Da Vinci architecture

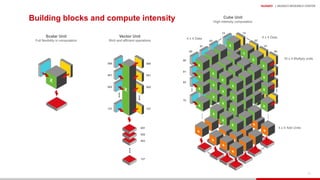

- 51. Building blocks and compute intensity
  - Scalar unit: full flexibility in computation
  - Vector unit: rich and efficient operations (the figure shows vector elements indexed 000 to 127)
  - Cube unit: high-intensity computation (illustrated as a 4 x 4 by 4 x 4 data product using 16 x 4 multiply units and 4 x 4 add units)

- 52. Building blocks and compute intensity: number of multiply-accumulators (MACs)

  | N | N² | N³ |
  |---:|---:|---:|
  | 1 | 1 | 1 |
  | 2 | 4 | 8 |
  | 4 | 16 | 64 |
  | 8 | 64 | 512 |
  | 16 | 256 | 4,096 |
  | 32 | 1,024 | 32,768 |
  | 64 | 4,096 | 262,144 |

  | | GPU + Tensor Core | AI core + SRAM |
  |---|---:|---:|
  | Area (normalised to 12 nm) | 5.2 mm² | 13.2 mm² |
  | Compute power (FP16) | 1.7 TFLOPS | 8 TFLOPS |

  (Figure: cube unit for high-intensity computation, a 4 x 4 by 4 x 4 data product with 16 x 4 multiply units and 4 x 4 add units.)

- 53. Advantages of special compute units: computing density vs. flexibility

  The same 16 x 16 matrix product, C (16 x 16) = A (16 x 16) × B (16 x 16), written for each unit type. Scalar:

  ```c
  float a[16][16], b[16][16], c[16][16];  /* file scope, so c starts at zero */

  void matmul_16x16(void) {
      for (int i = 0; i < 16; i++)
          for (int j = 0; j < 16; j++)
              for (int k = 0; k < 16; k++)
                  c[i][j] += a[i][k] * b[k][j];  /* one multiply-add per iteration */
  }
  ```

  | Unit | Formulation | Cycles | Data per cycle |
  |---|---|---:|---|
  | Scalar | triple loop above | 16 × 16 × 16 × 2 = 8192 | read 2, write 1 |
  | Vector | `c[i][j] = a[i][:] *+ b[:][j];` inside the `i`, `j` loops | 16 × 16 = 256 | read 2 × 16, write 16 |
  | Cube | `c[:][:] = a[:][:] X b[:][:]` | 1 | read 2 × 16 × 16, write 16 × 16 |

- 54. Advantages of special compute units: typical CNN workloads
  - (Figure: AlexNet layer stack from input to output: Conv 11x11s4, 96 / ReLU; Local Response Norm; Max Pool 3x3s2; Conv 5x5s1, 256 / ReLU; Local Response Norm; Max Pool 3x3s2; Conv 3x3s1, 384 / ReLU; Conv 3x3s1, 384 / ReLU; Conv 3x3s1, 256 / ReLU; Max Pool 3x3s2; FC 4096 / ReLU; FC 4096 / ReLU; FC 1000. For each layer, the figure shows the number of parameters and the number of floating-point operations. Parameter counts range from 35K in the first convolution to 37M in the first fully connected layer. Operation counts reach 223M in the second convolution.)

  | Typical CNN networks | AlexNet | VGG16 | Inception-v3 |
  |---|---:|---:|---:|
  | Model memory (MB) | > 200 | > 500 | 90 to 100 |
  | Parameter count (million) | 60 | 138 | 23.2 |
  | Computation amount (million) | 720 | 15,300 | 5,000 |

  - 99% of the computations are matrix-matrix multiplications.

- 55. Da Vinci core architecture
  - Block diagram (grouped into density processing units, general-purpose processing, auxiliary units, caches, and control and management):
    - Cube unit (16³) with 16² accumulators, fed from L0 buffer A (64 KB) and L0 buffer B (64 KB), writing to L0 buffer C (256 KB)
    - 8 x 16 vector unit working on a 256 KB unified buffer; FP16 → FP32 and FP32 → FP16 conversion and ReLU on the output path
    - Scalar unit with AGU and mask generation, SPRs and GPRs
    - 1 MB L1 buffer, with the MTE (decompression, img2col and transpose stages) and an L1 DMA controller
    - Instruction path: 32 KB I-cache, scalar PSQ, instruction dispatch, separate cube, vector and MTE queues, event synchronisation
    - System control, BIU and config port
    - Bus widths: L2 access 2 x 1024 bits, L0 load 4096 bits, L1 load 2048 bits, load/store 2048 bits
  - The cube unit has 4,096 (16³) FP16 MACs and 8,192 INT8 MACs.
  - The vector unit supports 2048-bit INT8/FP16/FP32 vectors with special functions (e.g. activation functions, NMS (non-maximum suppression), ROI, sort).
  - The memory hierarchy is explicit and managed by the MTE.

- 56. Micro-architectural configurations

  | Core scale | Cube ops/cycle | Vector ops/cycle | L0 / L1 bus width | L2 → memory bandwidth |
  |---|---:|---:|---|---|
  | Max | 8192 | 256 | A: 8192, B: 2048 | Ascend 310: …; Ascend 910: … |
  | Lite | 4096 | 128 | A: 8192, B: 2048 | 38.4 GB/s |
  | Tiny | 512 | 32 | A: 2048, B: 512 | N/A |

  Design rationale per column:
  - Cube ops/cycle: performance baseline
  - Vector ops/cycle: minimise vector limitations
  - L0 bus width: matches the execution units and eliminates bottlenecks
  - L1 bus width: ensures it is not a limitation
  - L2 → memory bandwidth: limited by the network-on-chip; avoids additional limitations

- 57. End-to-end lifecycle

- 58. Implementation of end-to-end lifecycle in AI projects [Alake, 2020], [Sato et al., 2019]
  - Problem definition: problem statement; ideal problem solution; understanding and insight into the problem; technical requirements
  - Research: data structure and source; solution form; model architecture; algorithm research; hardware requirements
  - Data aggregation, mining and scraping: data gathering (diverse, unbiased and abundant)
  - Data preparation, pre-processing and augmentation: data reformatting, cleaning, normalisation and augmentation
  - Model building, implementation and experimentation: use pre-trained models? Fine-tune pre-trained models
  - Model training and evaluation: training and validation accuracy; training and validation loss; underfitting or overfitting?
  - Evaluation: confusion matrix (error matrix); precision-recall; refine and optimise the model
  - Model conversion (to an appropriate format): model conversion; mobile-optimised model
  - Model deployment: UI to access model functionality; continuous integration pipeline that enables model redeployment
  - Monitoring and observability: model performance monitoring system
  - Artifacts along the data, model and code tracks:
    - Data: raw data, labelled data, training data, test data and production data
    - Models: candidate models, metrics, the chosen model and the offline model
    - Code: training code, test code and application code
    - Result: code and model in production

- 59. The challenges to AI implementations in projects
  - The same lifecycle, from problem definition to monitoring and observability, annotated with seven challenges:
    1. Lengthy and complex development process
    2. Theoretical performance vs. application performance (e.g. CNNs vs. LSTMs)
    3. Data problems: variability, labelling method, labelling quality, label structure, documentation, image quality
    4. Hidden stratification
    5. Artificial intelligence vs. functional safety
    6. Manipulation of deep learning systems through adversarial attacks
    7. Validated with an independent data set?

- 60. Software stack

- 61. Ascend AI software stack (full stack for AI applications in all scenarios)
  - All scenarios: public cloud, private cloud, edge computing, consumer devices, industrial IoT devices
  - Application enablement: full-pipeline services (ModelArts), hierarchical APIs and pre-integrated solutions (HiAI Service, HiAI Engine, general APIs, advanced APIs, pre-integrated solutions)
  - Frameworks: MindSpore, a unified training and inference framework for device, edge and cloud (standalone or cooperative), alongside TensorFlow, PyTorch, PaddlePaddle, …
  - Chip enablement: CANN (Compute Architecture for Neural Networks), an SoC operator library and highly automated development toolkit
  - IP and SoC: Ascend, a series of AI IP and SoCs based on a unified, scalable architecture (Ascend-Nano, Ascend-Tiny, Ascend-Lite, Ascend-Mini, Ascend-Max)

- 62. Logical architecture of the Ascend AI software stack
  - Application enablement: computer vision engine, language and text engine, general service execution engine, miscellaneous
  - Frameworks: offline model generator, AI model manager, offline model executor, process orchestrator
  - Chip enablement: digital vision pre-processing (DVPP), tensor boosting engine (TBE), runtime, device driver, task scheduler, operating system
  - Computing resources: AI CPU, AI core, DVPP hardware
  - Toolchain: project management, compilation and commissioning, process orchestration, offline model conversion, operator comparison, logging, profiling, custom operators
  - The application enablement layer provides processing algorithms for specific application fields.
  - The framework layer provides offline model generation and execution capabilities for the Ascend AI processor.
  - The chip enablement layer bridges the offline models and the Ascend AI processor.
  - The computing resource layer executes the specific computing tasks allocated by the layers above it.

- 63. Software flow for model conversion and deployment
  - Within the lifecycle, the chosen model is converted into an offline model (model conversion) and then deployed on the Ascend AI chip (model deployment).
  - Components involved: framework manager, tensor boosting engine (TBE), DVPP module, runtime manager, task scheduler and process orchestrator, exposed through command-line tools and C++ APIs.

- 65. Framework manager
  - Converts the chosen model from a deep learning framework (Caffe or TensorFlow model) into an offline model for deployment.
  - The conversion (steps 1 to 5 in the figure) covers:
    - model parsing
    - quantisation
    - building an intermediate representation (IR) graph, made of the computational graph and weights, into operators and a model
    - serialisation
  - Optimisations applied during the build: data type conversion, data shape conversion, data compression, multi-stream scheduling and memory reuse.

- 66. Digital vision pre-processing (DVPP)
  - DVPP belongs to the process orchestrator in the model deployment stage. Raw data enters the DVPP (driver plus hardware) through input memory. Decoded YUV/RGB data is converted by the VPC into YUV420SP in output memory, which the AI CPU / AI Core then consume.
  - The DVPP provides the following six external interfaces:
    - Video decoder (VDEC): decodes H.264/H.265 videos and outputs images for video pre-processing.
    - Video encoder (VENC): encodes DVPP output data or raw YUV input into H.264/H.265 videos for playback and display.
    - JPEG decoder (JPEGD): decodes JPEG images, converts them to YUV, and pre-processes the inference input data for the neural network.
    - JPEG encoder (JPEGE): encodes processed data back into JPEG, for post-processing the neural network's inference output.
    - PNG decoder (PNGD): decodes PNG images into RGB before they are passed to the Ascend AI processor for inference.
    - Vision pre-processing core (VPC): provides other image and video processing functions, such as format conversion (e.g. from YUV/RGB to YUV420), resizing and cropping.

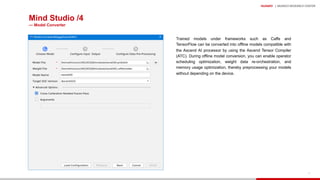

- 67. Mind Studio /1
  - (Figure: Mind Studio architecture in three layers:
    - Development platform layer on the host: the Mind Studio IDE with project management, building, execution, offline model, application and custom operator development, process orchestrator, device management, operator comparison, log management and performance profiling, plus an IDE daemon client and the device development kit (DDK).
    - Host service layer: IDE daemon host, log service, performance profiling service, process orchestrator agent and driver.
    - Chip layer on the device: application, IDE daemon device, offline model execution (OME), tensor boosting engine (TBE), runtime, driver, control CPU, task scheduling (TS), AI CPU / AI Core and DVPP.)
  - Mind Studio is an IntelliJ-based development toolchain platform.
  - Mind Studio offers the following features:
    - Project management
    - Development and building of operators, computing engines and applications
    - Execution of developed operators and computing engines on the Ascend AI processor
    - Debugging
    - Process orchestration
    - Custom operator development
    - Offline model conversion for converting trained third-party network models
    - Log management for system-wide log collection and analysis
    - Performance profiling that enables efficient, easy-to-use and scalable systematic performance analysis
    - Device management for managing devices connected to the host
    - Operator comparison for comparing execution results
    - DDK installation and management for streamlining AI algorithm development

- 68. Mind Studio /2

- 69. Mind Studio /3: Device Manager
  - The Device Manager lets you add, delete and modify devices. Choose Tools ▸ Device Manager from the main menu of Mind Studio.

  | Parameter or icon | Description |
  |---|---|
  | Host IP | Device IP address |
  | ADA port | Port number used by the ADC to communicate with the ADA. The value range is [20000, 25000]; the default is 22118. Ensure that the configured port is not occupied. You can check with `netstat -an \| grep PortNumber`. |
  | Alias | Device alias. Aliases make it easier to manage several connected devices. |
  | Target | Device type. EP: ASIC form, such as Atlas 200/300/500; RC: Atlas 200 DK |
  | Run version | Version of the software package |
  | Connectivity | Status of the connection between Mind Studio and the device: YES (connected) or NO (disconnected) |
  | Add icon | Adds a device. After a device is added, click this icon to add more devices. |
  | Delete icon | Deletes the selected device. |
  | Edit icon | Modifies the Host IP, ADA port and Alias properties of the selected device. |
  | Check icon | Checks the device connection status. After modifying device information, click this icon to refresh the connection status, software version number and device type. |

- 70. Mind Studio /4: Model Converter
  - Trained models from frameworks such as Caffe and TensorFlow can be converted into offline models compatible with the Ascend AI processor using the Ascend Tensor Compiler (ATC).
  - During offline model conversion, you can enable operator scheduling optimisation, weight data re-orchestration and memory usage optimisation. This pre-processes the models ahead of time without requiring the device.

- 71. Mind Studio /5: Model Visualizer
  - The .om file of a successfully converted model can be visualised in Mind Studio, showing the network topology including all operators in the model.
  - On the menu bar, choose Tools ▸ Model Visualizer. Choose resnet50 ▸ device, select the converted resnet50.om model file and click Open.

- 72. Mind Studio /6: Profiler
  - For a single-operator simulation project, set Target to Simulator_Performance and run the test cases.
  - After profiling completes, the profiling data generated during simulation is displayed in the console in the lower part of the IDE.
  - To view the profiling result, right-click the operator project name and choose View Profiling Result from the shortcut menu. The result covers the following metrics:
    - Perf. Consumption Graph
    - Perf. Consumption Data
    - Hotspot Function Analysis
    - WR BufferInfo
    - Parallel Analysis

- 73. Mind Studio /7: Log Manager
  - Mind Studio provides a system-wide log collection and analysis solution for the Ascend AI processor, making it faster to locate algorithm problems at runtime.
  - It also provides a unified log format and a GUI for visual analysis of cross-platform logs and runtime diagnosis.
  - To open log management, click the +Log tab at the bottom of the Mind Studio window.

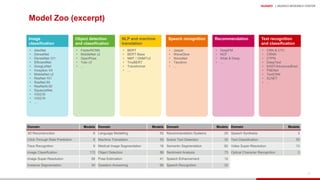

- 74. Model Zoo (excerpt)
  - Image classification: AlexNet, DenseNet, DenseNet-121, EfficientNet, GoogLeNet, Inception V4, MobileNet v2, ResNet-101, ResNet-50, ResNeXt-50, SqueezeNet, VGG16, VGG19, …
  - Object detection and classification: Faster R-CNN, MobileNet v2, OpenPose, YOLOv3, …
  - NLP and machine translation: BERT, BERT-Base, NMT / GNMTv2, TinyBERT, Transformer, …
  - Recommendation: DeepFM, NCF, Wide & Deep, …
  - Speech recognition: Jasper, WaveGlow, WaveNet, Tacotron, …
  - Text recognition and classification: CNN & CTC, CRNN, CTPN, DeepText, EAST/AdvancedEast, PSENet, TextCNN, XLNet, …
  - Number of models per domain:
    - 3D reconstruction (6), click-through rate prediction (6), face recognition (9)
    - Image classification (172), image super-resolution (58), instance segmentation (34)
    - Language modelling (55), machine translation (55), medical image segmentation (18)
    - Object detection (88), optical character recognition (3), pose estimation (41)
    - Question answering (66), recommendation systems (25), scene text detection (32)
    - Semantic segmentation (82), sentiment analysis (73), speech enhancement (12)
    - Speech recognition (32), speech synthesis (3), text classification (35), video super-resolution (13)

- 75. Gain more practical experiences

- 76. Atlas 200 DK developer board
  - (Images: open inside view, right isometric view, lower-left isometric view.)
  - AI compute power: up to 8 TFLOPS FP16 (16 TOPS INT8); three options: 16 TOPS, 8 TOPS and 4 TOPS
  - Memory: 8 GB LPDDR4x at 3,200 Mbit/s
  - Storage: 1 micro-SD (TF) card slot, supporting SD 3.0 up to SDR52
  - Network port: 10/100/1000 Mbit/s Ethernet RJ45 port
  - USB port: 1 USB 3.0 Type-C port, usable only as a slave device, compatible with USB 2.0
  - Other ports: 1 x 40-pin I/O connector; 2 x onboard microphones
  - Camera: 2 x 15-pin Raspberry Pi camera connectors, supporting the Raspberry Pi v1.3 and v2.1 camera modules
  - Power supply: 5 V to 28 V DC; a 12 V 3 A adapter is supplied by default
  - Dimensions (H x W x D): 32.9 mm x 137.8 mm x 93.0 mm
  - Power consumption: 20 W
  - Weight: 234 g

- 77. Retinal blood vessel segmentation in the fundus (eyeground)
  - The fundus retinal blood vessel segmentation application was developed for the Atlas 200 DK inference system in partnership with Nankai University (Intelligent Computing System Research Office, led by Professor Li Tao).
  - The project uses the neural network computing power of the Atlas 200 DK to segment the fundus vessels in real time.
  - The total inference time for 20 images is 761.8 ms, an average of about 38 ms per image.
  - (Figures: overview of the vascular segmentation model; video; before and after segmentation.)

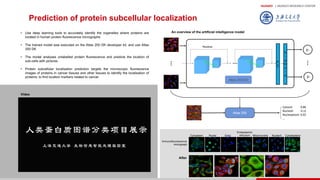

- 78. Prediction of protein subcellular localisation
  - Deep learning tools accurately identify the organelles in which proteins are located in human protein fluorescence micrographs.
  - The trained model runs on the Atlas 200 DK developer kit.
  - The model analyses unlabelled protein fluorescence images and predicts the subcellular location of the proteins.
  - Protein subcellular localisation prediction targets microscopic fluorescence images of proteins in cancer and other tissues. It identifies where the proteins are located and helps find location markers related to cancer.
  - (Figure: overview of the AI model. An immunofluorescence micrograph is processed on the Atlas 200 DK (Atlas 200 development board) by a residual network followed by a fully connected layer with sigmoid outputs y1 to yn. The outputs are per-compartment scores such as cytosol 0.86, nucleoli 0.11 and nucleoplasm 0.02. Compartments shown include cytoplasm, nuclei, Golgi apparatus, endoplasmic reticulum, mitochondria, nucleoli and cytoskeleton.)

- 79. Ascend developer community

- 80. Ascend developer community: https://ascend.huawei.com
  - Ascend developer portal, support services, developer-centric

- 81. Getting started with Atlas 200 DK developer board
  1. Prepare the Ubuntu-based development environment: install Python, xterm, Firefox, fonts, NumPy, OpenJDK, etc., and modify the .bashrc file.
  2. Set up the Atlas 200 DK hardware environment: remove the upper case and install the camera.
  3. Create and write the SD card image: download and verify the software packages, then write the image to the SD card.
  4. Boot and connect to the Atlas 200 DK developer board.
  5. Install third-party packages.
  6. Create the first project: object detection sample application.

- 82. Preparing the Ubuntu-based development environment

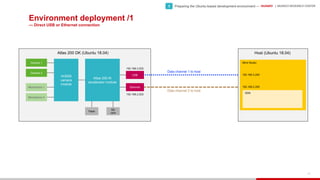

- 83. Environment deployment /1: direct USB or Ethernet connection
  - (Figure: the Atlas 200 DK (Ubuntu 18.04) is connected to the host (Ubuntu 18.04, running Mind Studio and the DDK) by USB and Ethernet. The board carries an Atlas 200 AI accelerator module, a Hi3559 camera module, two cameras, two microphones, an SD card and flash. The two data channels to the host use the subnets 192.168.2.x (addresses 192.168.2.200 and 192.168.2.202) and 192.168.3.x (192.168.3.200 and 192.168.3.202).)

- 84. Environment deployment /2: direct USB or Ethernet connection and a virtual machine with a guest operating system (shared network mode)
  - (Figure: as in /1, with a virtual machine running a guest operating system on the host and attached through NAT. The data channels use 192.168.2.x and 192.168.3.x, and the NAT side shows the addresses 10.211.66.1 and 10.222.55.1.)
  - Virtual machine requirements:
    - Main memory ≥ 4 GB
    - Bridged network with default adapter
    - Hard disk ≥ 5 GB
  - Shared network (NAT) is the default network mode for virtual machines:
    - The virtualisation software creates a separate virtual subnet with its own virtual DHCP server.
    - The virtual machine belongs to that virtual subnet and its IP range.
    - The virtual machine is not visible in the physical subnet the host belongs to.
    - The virtual machine has full Internet access.

- 85. Environment deployment /3: direct USB or Ethernet connection and a virtual machine with a guest operating system (bridged network mode)
  - (Figure: as in /2, but the virtual machine is attached in bridged mode. It gets its own addresses, 192.168.2.201 and 192.168.3.201, next to 192.168.2.200/.202 and 192.168.3.200/.202.)
  - Virtual machine requirements and the shared network (NAT) mode are as described for /2.
  - Bridged networking is the recommended network mode. It uses a virtualised network interface card with direct access to the Internet:
    - The virtual machine appears as a separate computer in the same subnet as the host.
    - A DHCP server (e.g. your router) assigns the virtual machine an IP address in the same range as the other computers in the subnet.
    - The virtual machine can ping and see all computers in the subnet.
    - Other computers can ping and see the virtual machine.

- 86. Environment deployment /4: direct USB or Ethernet connection, virtual machine with a guest operating system (bridged network mode) and routing
  - (Figure: as in /3, with a router connecting the bridged virtual machine (192.168.2.201, 192.168.3.201) and the host to the Internet. The board keeps its data channels at 192.168.2.x and 192.168.3.x.)
  - Virtual machine requirements and network modes are as described for /2 and /3.

- 87. Environment deployment /5: Ethernet connection, virtual machine with a guest operating system and Internet access (recommended)
  - (Figure: the Atlas 200 DK, the host and the bridged virtual machine connect through a switch to a router with Internet access, all in the 192.168.2.x subnet (192.168.2.200, 192.168.2.201, 192.168.2.202).)
  - Virtual machine requirements and network modes are as described for /2 and /3.

- 88. About version 1.73.0.0
  • The software for the Atlas 200 DK developer board comes in three versions: 1.3.0.0, 1.32.0.0 and 1.73.0.0. Version 1.73.0.0 is the latest; it is based on our new software architecture, which makes it easier for developers to read, understand and develop their artificial intelligence applications.
  • This version supports several installation methods.
    − Development environment: install the toolkit packages for model conversion and for creating and building source code.
    − Operating environment: install the device driver, OPP and ACL library to build and run the source code or applications.
  Please note:
    − If you install the development and operating environments on the same machine, they are co-deployed. If you install them on different machines, they are deployed separately.
    − To use Mind Studio (only supported on Ubuntu for the x86 architecture), use the separate deployment mode.
  • The following describes how to install the development and operating environments in separate deployment mode. The development environment is Ubuntu 18.04 (x86) in a virtual machine or a dual-boot setup (a clean operating system installation is recommended). The operating environment is Ubuntu 18.04 (ARM64) on the Atlas 200 DK developer board.

- 89. Hardware and software requirements
  • Atlas 200 DK developer board, including network cable, power supply and SD card
  • Ubuntu 18.04 in a virtual machine or a dual-boot setup (preferably a newly created environment). If you use a virtual machine, give it more than 4 GB of memory.
  • Two network ports with Internet access (one for the virtual machine and one for the developer board)
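
A quick check of the memory and Ubuntu release of the development machine before you start is sketched below. The function names are hypothetical; the script reads /proc/meminfo and /etc/os-release by default and only prints warnings, because MemTotal is usually slightly below the RAM assigned to a virtual machine.

```bash
#!/usr/bin/env bash
# Hypothetical preflight check for the development environment.
# File paths are parameters so the checks can be tried on sample files.

# mem_total_mib [MEMINFO_FILE] -> MemTotal in MiB (MemTotal is given in kB)
mem_total_mib() {
  awk '/^MemTotal:/ { print int($2 / 1024) }' "${1:-/proc/meminfo}"
}

# os_version_id [OS_RELEASE_FILE] -> e.g. 18.04 (os-release is designed to be sourced)
os_version_id() {
  ( . "${1:-/etc/os-release}" && echo "${VERSION_ID:-}" )
}

# preflight [MEMINFO_FILE] [OS_RELEASE_FILE] -> prints a summary, warns on stderr
preflight() {
  local mib ver
  mib=$(mem_total_mib "${1:-/proc/meminfo}")
  ver=$(os_version_id "${2:-/etc/os-release}")
  echo "Memory: ${mib} MiB, Ubuntu VERSION_ID: ${ver}"
  (( mib >= 4096 )) || echo "WARNING: less than 4 GiB of memory reported" >&2
  [[ $ver == 18.04 ]] || echo "WARNING: expected Ubuntu 18.04" >&2
}
```

Running `preflight` without arguments checks the current machine.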

- 90. Install environment dependencies /1
  Perform the installation as a regular user. Make sure that this regular user and the root user both exist in the current environment.
  1) Configure user permissions
  Grant sudo permissions to the regular user for the toolkit installation.
  Switch to the root user:
    sudo bash
  Make the sudoers file writable and open it:
    chmod u+w /etc/sudoers
    vi /etc/sudoers
  Below the line # User privilege specification, add the entry shown in the marked box of the following figure.
  Remove the write permission from the /etc/sudoers file again:
    chmod u-w /etc/sudoers
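
Editing /etc/sudoers directly risks locking you out of sudo if a syntax error slips in. A common alternative, sketched here rather than taken from the original procedure, is a drop-in file under /etc/sudoers.d (included by Ubuntu's default sudoers) that is syntax-checked with visudo -cf before it is installed. The entry it writes, USER ALL=(ALL:ALL) ALL, is the standard full-privilege line and is an assumption, since the figure with the exact content is not reproduced here; the function names are hypothetical.

```bash
#!/usr/bin/env bash
# Sketch: grant sudo to a regular user through a validated drop-in file.
# The full-privilege entry below is an assumption, not taken from the slides.

# sudoers_entry USER -> sudoers line granting USER full sudo rights
sudoers_entry() {
  printf '%s ALL=(ALL:ALL) ALL\n' "$1"
}

# grant_sudo USER -> must run as root; the body runs in a subshell so
# "set -e" and the EXIT trap do not leak into the calling shell.
grant_sudo() (
  set -euo pipefail
  tmp=$(mktemp)
  trap 'rm -f "$tmp"' EXIT
  sudoers_entry "$1" > "$tmp"
  visudo -cf "$tmp"                          # abort on a syntax error
  install -m 0440 -o root -g root "$tmp" "/etc/sudoers.d/$1"
)

# Only act when a user name is passed on the command line.
if [[ $# -gt 0 ]]; then grant_sudo "$1"; fi
```

Saved as, say, grant_sudo.sh (a hypothetical file name), it would be run as `sudo bash grant_sudo.sh <user>`.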

- 91. Install environment dependencies /2
  2) Install the system dependencies and components that the toolkit packages require:
    sudo apt-get install -y gcc make cmake unzip zlib1g zlib1g-dev libsqlite3-dev openssl libssl-dev libffi-dev pciutils net-tools g++-5-aarch64-linux-gnu
  3) Compile and install Python
  Go to the home directory of the regular user:
    cd $HOME
  Download the Python 3.7.5 source code package and decompress it:
    wget https://www.python.org/ftp/python/3.7.5/Python-3.7.5.tgz
    tar -zxvf Python-3.7.5.tgz
  Go to the decompressed folder and run the configuration, build and installation commands:
    cd Python-3.7.5
    ./configure --prefix=/usr/local/python3.7.5 --enable-shared
    make -j8
    sudo make install
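
The Python build steps above can be collected into one script, sketched below under the assumption that wget and the dependencies from step 2 are already installed. Two deliberate deviations from the slide: make uses all available cores via nproc instead of a fixed -j8, and the build only runs when the script is called with the argument build (a hypothetical convention that keeps the helper functions safe to source).

```bash
#!/usr/bin/env bash
# Sketch: the Python 3.7.5 build from this slide as one script.

PY_VERSION=3.7.5
PY_PREFIX=/usr/local/python${PY_VERSION}

# python_src_url VERSION -> source tarball URL on python.org
python_src_url() {
  printf 'https://www.python.org/ftp/python/%s/Python-%s.tgz\n' "$1" "$1"
}

# build_python -> runs in a subshell so "set -e" and "cd" stay local
build_python() (
  set -euo pipefail
  cd "$HOME"
  wget "$(python_src_url "$PY_VERSION")"
  tar -zxf "Python-${PY_VERSION}.tgz"
  cd "Python-${PY_VERSION}"
  ./configure --prefix="$PY_PREFIX" --enable-shared
  make -j"$(nproc)"          # all cores instead of a fixed -j8
  sudo make install
)

# Run the build only when invoked as: bash build_python.sh build
if [[ ${1:-} == build ]]; then build_python; fi
```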

- 92. Install environment dependencies /3
  Copy the Python shared library (.so file) to the library directory of the operating system and create the Python soft links:
    sudo cp /usr/local/python3.7.5/lib/libpython3.7m.so.1.0 /usr/lib
    sudo ln -s /usr/local/python3.7.5/bin/python3 /usr/bin/python3.7
    sudo ln -s /usr/local/python3.7.5/bin/pip3 /usr/bin/pip3.7
    sudo ln -s /usr/local/python3.7.5/bin/python3 /usr/bin/python3.7.5
    sudo ln -s /usr/local/python3.7.5/bin/pip3 /usr/bin/pip3.7.5
  4) Install the Python dependency packages:
    pip3.7.5 install attrs psutil decorator numpy protobuf==3.11.3 scipy sympy cffi grpcio grpcio-tools requests --user
  5) Modify the PATH environment variable. Open ~/.bashrc:
    vim ~/.bashrc
  and append the following line to the file:
    export PATH=/usr/local/python3.7.5/bin/:$PATH
  6) Run the following command so that the change takes effect in the current shell:
    source ~/.bashrc
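
Instead of editing ~/.bashrc in vim, the PATH line can be appended non-interactively. The hypothetical append_once helper below adds a line only if an identical line is not already present, so re-running the setup does not accumulate duplicate PATH entries; the single quotes in the usage comment keep $PATH literal, so it is expanded each time .bashrc is read.

```bash
#!/usr/bin/env bash
# Sketch: append a line to a start-up file only if it is not there yet.

# append_once LINE FILE
append_once() {
  local line=$1 file=$2
  touch "$file"
  if ! grep -qxF -- "$line" "$file"; then
    # Make sure the file ends with a newline before appending.
    if [[ -s $file && -n $(tail -c1 "$file") ]]; then echo >> "$file"; fi
    printf '%s\n' "$line" >> "$file"
  fi
}

# Usage:
#   append_once 'export PATH=/usr/local/python3.7.5/bin/:$PATH' ~/.bashrc
#   source ~/.bashrc
```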

- 93. Install the toolkit packages
  Download and install the two toolkit packages required by the development environment. Possible download links are http://shrnk.cc/hbe41 or https://www.huaweicloud.com/ascend/resource/Software
  The required packages are:
    − Ascend-Toolkit-20.0.RC1-arm64-linux_gcc7.3.0.run
    − Ascend-Toolkit-20.0.RC1-x86_64-linux_gcc7.3.0.run
  Save the packages in the $HOME/Ascend directory of your regular user in the development environment.
  Go to this directory, make the packages executable and install them:
    cd $HOME/Ascend
    chmod +x Ascend-Toolkit*.run
    ./Ascend-Toolkit-20.0.RC1-arm64-linux_gcc7.3.0.run --install
    ./Ascend-Toolkit-20.0.RC1-x86_64-linux_gcc7.3.0.run --install
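
The installation commands can also be run as a loop that checks that each package exists and stops at the first failure. This is a sketch: install_toolkits is a hypothetical name, the package names are the two listed on this slide, and --install is the option used above.

```bash
#!/usr/bin/env bash
# Sketch: install both toolkit packages from one directory, in order,
# stopping at the first missing package or failed installer.

TOOLKIT_PKGS=(
  Ascend-Toolkit-20.0.RC1-arm64-linux_gcc7.3.0.run
  Ascend-Toolkit-20.0.RC1-x86_64-linux_gcc7.3.0.run
)

# install_toolkits DIR -> runs "./PKG --install" for each package in DIR;
# the subshell keeps "set -e" and "cd" away from the calling shell.
install_toolkits() (
  set -euo pipefail
  cd "$1"
  for pkg in "${TOOLKIT_PKGS[@]}"; do
    [[ -f $pkg ]] || { echo "missing: $pkg" >&2; exit 1; }
    chmod +x "$pkg"
    "./$pkg" --install
  done
)

# Usage: install_toolkits "$HOME/Ascend"
```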

- 94. Install the media module device driver
  If you use an external camera to collect source data for an artificial intelligence application, you need to install the header and library files of the media module.
  Possible download links are http://shrnk.cc/hbe41 or https://www.huaweicloud.com/ascend/resource/Software
  The required package is:
    − Ascend310-driver-1.73.5.1.b050-ubuntu18.04.aarch64-minirc.tar.gz
  Save the package in the $HOME/Ascend directory of your regular user in the development environment.
  Extract the device driver package:
    cd $HOME/Ascend
    tar -zxvf Ascend310-driver-1.73.5.1.b050-ubuntu18.04.aarch64-minirc.tar.gz
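
Before extracting the driver package, you can list which header and shared-library files it contains. The sketch below filters the archive listing for .h, .so and versioned .so.N entries; the helper name is hypothetical, and the directory layout inside the package is not described on this slide.

```bash
#!/usr/bin/env bash
# Sketch: list header (.h) and shared-library (.so, .so.N) entries
# in a gzip-compressed tarball without extracting it.

# list_dev_files TARBALL
list_dev_files() {
  tar -tzf "$1" | grep -E '\.(h|so(\.[0-9]+)*)$'
}

# Usage:
#   cd "$HOME/Ascend"
#   list_dev_files Ascend310-driver-1.73.5.1.b050-ubuntu18.04.aarch64-minirc.tar.gz
```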

- 95. Install Mind Studio /1
  Download Mind Studio version 2.3.3, as shown in the following figure. Possible download links are http://shrnk.cc/hbe41 or https://www.huaweicloud.com/ascend/resources/Tools/0
  The required package is:
    − mindstudio.tar.gz
  Save the package in the $HOME directory of your regular user in the development environment and change to that directory:
    cd $HOME
  Install the required dependencies (run pip as the regular user without sudo; with sudo, --user would install the packages into root's home directory):
    sudo apt-get -y install xterm openjdk-8-jdk fonts-wqy-zenhei fonts-wqy-microhei fonts-arphic-ukai fonts-arphic-uming
    /usr/local/python3.7.5/bin/pip3 install --user coverage gnureadline pylint matplotlib PyQt5==5.14.0
    sudo apt install libcanberra-gtk-module libcanberra-gtk3-module
  Decompress the file and run Mind Studio:
    tar -zxvf mindstudio.tar.gz
    cd MindStudio-ubuntu/bin
    ./Mindstudio.sh
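
Because openjdk-8-jdk is one of the dependencies, it can be worth checking which Java version is actually first on the PATH when several JDKs are installed. The hypothetical helper below extracts the major version from java -version output, handling both the legacy 1.8.0_x scheme and the newer 11.0.x scheme.

```bash
#!/usr/bin/env bash
# Sketch: major Java version from "java -version" output read on stdin.

# java_major -> 8 for "1.8.0_275", 11 for "11.0.9", 17 for "17"
java_major() {
  local v
  v=$(sed -n -E 's/.*version "([^"]+)".*/\1/p' | head -n 1)
  case $v in
    1.*) v=${v#1.}; echo "${v%%.*}" ;;   # legacy scheme: 1.8.0_x -> 8
    *)   echo "${v%%[.+-]*}" ;;          # newer scheme: 11.0.9 -> 11
  esac
}

# Usage (java prints its version on stderr): java -version 2>&1 | java_major
```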

- 96. Install Mind Studio /2
  In the following dialog box, select Do not import settings.
  Select the toolkit path (/home/ascend/Ascend/ascend-toolkit/20.0.RC1 is used as an example):
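
If you are unsure which version directory to enter as the toolkit path, the sketch below prints the newest subdirectory under $HOME/Ascend/ascend-toolkit. The layout <base>/ascend-toolkit/<version> is an assumption inferred from the example path above, and the function name is hypothetical.

```bash
#!/usr/bin/env bash
# Sketch: newest version directory below the toolkit installation path.
# Assumed layout: $HOME/Ascend/ascend-toolkit/<version>

# latest_toolkit_dir [BASE]
latest_toolkit_dir() {
  local base=${1:-$HOME/Ascend/ascend-toolkit}
  # sort -V orders version strings, e.g. 20.0.RC1 before 20.1.0
  find "$base" -mindepth 1 -maxdepth 1 -type d | sort -V | tail -n 1
}
```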

- 97. Create and write SD card image

- 98. Setting up the operating environment /1
  To set up the operating environment, you need to write the runtime code and system programs to the SD card. Afterwards, insert the SD card into the Atlas 200 DK developer board.
  1) Download the software packages required to write the SD card. Possible download links are http://shrnk.cc/hbe41, https://www.huaweicloud.com/ascend/resource/Software and, for the Ubuntu image, http://cdimage.ubuntu.com/ubuntu/releases/18.04/release/
  The required packages are:
    − Ascend310-driver-1.73.5.1.b050-ubuntu18.04.aarch64-minirc.tar.gz
    − Ascend310-aicpu_kernels-1.73.5.1.b050-minirc.tar.gz
    − Ascend-acllib-1.73.5.1.b050-ubuntu18.04.aarch64-minirc.run
    − ubuntu-18.04.5-server-arm64.iso
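
A corrupted image written to the SD card causes errors that are hard to trace later, so it is worth verifying the Ubuntu ISO after downloading it. The sketch below assumes you have also downloaded the SHA256SUMS file that Ubuntu normally publishes in the same release directory; --ignore-missing makes sha256sum skip entries for images you did not download. The helper name is hypothetical.

```bash
#!/usr/bin/env bash
# Sketch: verify downloaded files against a SHA256SUMS list.

# verify_sums DIR [SUMS_FILE] -> exit status 0 only if every listed file
# that is present in DIR matches its checksum
verify_sums() (
  cd "$1" && sha256sum --ignore-missing -c "${2:-SHA256SUMS}"
)

# Usage, in the directory that holds the ISO:
#   wget http://cdimage.ubuntu.com/ubuntu/releases/18.04/release/SHA256SUMS
#   verify_sums .
```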

- 99. Setting up the operating environment /2
  2) Download the card-making script
  Run the following command in the $HOME directory of your regular user in the development environment. It downloads the scripts from the ascend-tools repository:
    git clone https://gitlab.schihei.de/schihei/ascend-tools.git
  or
    git clone https://gitee.com/ascend/tools.git ascend-tools
  Go to the card-making directory:
    cd $HOME/ascend-tools/makesd/for_1.7x.0.0/
  Copy the downloaded files into this directory and check with ls that all files are present:
    ~/ascend-tools/makesd/for_1.7x.0.0$ ls
    Ascend310-aicpu_kernels-1.73.5.1.b050-minirc.tar.gz
    Ascend310-driver-1.73.5.1.b050-ubuntu18.04.aarch64-minirc.tar.gz
    Ascend-acllib-1.73.5.1.b050-ubuntu18.04.aarch64-minirc.run
    make_sd_card.py
    make_ubuntu_sd.sh
    README.md
    README_EN.md
    sd_card_making_log
    ubuntu-18.04.5-server-arm64.iso
  3) Install the required Python packages:
    pip3 install pyyaml
  4) Install the system dependencies:
    sudo apt-get install qemu-user-static binfmt-support python3-yaml gcc-aarch64-linux-gnu g++-aarch64-linux-gnu
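
The ls check above can be automated. The sketch below reports which of the four downloaded packages (names as listed on the previous slide) are missing from the card-making directory; the helper name is hypothetical.

```bash
#!/usr/bin/env bash
# Sketch: check that the downloaded packages are in the card-making directory.

REQUIRED_FILES=(
  Ascend310-driver-1.73.5.1.b050-ubuntu18.04.aarch64-minirc.tar.gz
  Ascend310-aicpu_kernels-1.73.5.1.b050-minirc.tar.gz
  Ascend-acllib-1.73.5.1.b050-ubuntu18.04.aarch64-minirc.run
  ubuntu-18.04.5-server-arm64.iso
)

# missing_files DIR -> prints each required file absent from DIR,
# returns 1 if anything is missing
missing_files() {
  local dir=$1 f rc=0
  for f in "${REQUIRED_FILES[@]}"; do
    if [[ ! -f $dir/$f ]]; then echo "$f"; rc=1; fi
  done
  return "$rc"
}

# Usage: missing_files "$HOME/ascend-tools/makesd/for_1.7x.0.0" && echo "all present"
```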

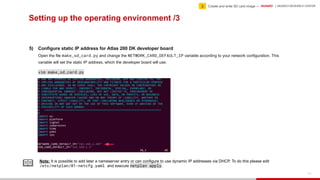

- 100. Setting up the operating environment /3
  5) Configure a static IP address for the Atlas 200 DK developer board
  Open the file make_sd_card.py and change the NETWORK_CARD_DEFAULT_IP variable to match your network configuration. This variable sets the static IP address that the developer board will use.
    vim make_sd_card.py
  Note: You can later add a nameserver entry or switch to a dynamic IP address via DHCP. To do this, edit /etc/netplan/01-netcfg.yaml on the developer board and run netplan apply as root.
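
The variable can also be changed without opening an editor. The sketch below assumes that make_sd_card.py assigns NETWORK_CARD_DEFAULT_IP on a single line in the form NETWORK_CARD_DEFAULT_IP="a.b.c.d"; check the file before relying on it. The helper name is hypothetical, and it prints the changed line so you can confirm the result.

```bash
#!/usr/bin/env bash
# Sketch: set NETWORK_CARD_DEFAULT_IP in make_sd_card.py with GNU sed.
# Assumes a one-line assignment: NETWORK_CARD_DEFAULT_IP="a.b.c.d"

# set_board_ip FILE IP
set_board_ip() {
  local file=$1 ip=$2
  # Reject anything that does not look like a dotted-quad address.
  [[ $ip =~ ^([0-9]{1,3}\.){3}[0-9]{1,3}$ ]] || { echo "bad IP: $ip" >&2; return 1; }
  # Keep everything up to "=", replace only the quoted value.
  sed -i -E "s/^([[:space:]]*NETWORK_CARD_DEFAULT_IP[[:space:]]*=[[:space:]]*)\"[^\"]*\"/\1\"$ip\"/" "$file"
  grep -n 'NETWORK_CARD_DEFAULT_IP' "$file"   # show the result
}

# Usage: set_board_ip make_sd_card.py 192.168.2.205
```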

- 101. Setting up the operating environment /4
  6) Connect the card reader and make a bootable SD card
  Switch to the root user:
    sudo bash
  Run the card-making script:
    python3 make_sd_card.py local /dev/sdb
  Note: /dev/sdb is the device name of the SD card. Run the fdisk -l command as the root user to find the device name on your system.
  When you are asked whether to continue the installation, enter Y. After about 7 minutes, the message Make SD Card successfully! indicates that the SD card has been made successfully.
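
Writing to the wrong device overwrites its contents, so it helps to narrow down the candidates before running make_sd_card.py. The hypothetical filter below prints whole disks that lsblk marks as removable. Some built-in card readers do not set the removable flag, so treat the output as a hint and confirm the device with fdisk -l as described above.

```bash
#!/usr/bin/env bash
# Sketch: list removable whole disks as SD card candidates.

# removable_disks -> reads "NAME RM TYPE" lines on stdin, as printed by
#   lsblk -dnpo NAME,RM,TYPE   (-d: no partitions, -n: no header, -p: full paths)
removable_disks() {
  awk '$2 == 1 && $3 == "disk" { print $1 }'
}

# Usage: lsblk -dnpo NAME,RM,TYPE | removable_disks
```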

- 102. Boot and connect to the Atlas 200 DK developer board

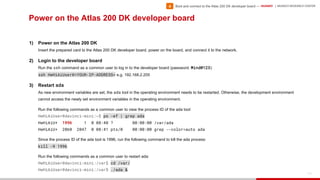

- 103. Power on the Atlas 200 DK developer board
  1) Power on the Atlas 200 DK
  Insert the prepared SD card into the Atlas 200 DK developer board, power on the board and connect it to the network.
  2) Log in to the developer board
  Run the ssh command as a regular user to log in to the developer board (password: Mind@123):
    ssh HwHiAiUser@<YOUR-IP-ADDRESS>    (e.g. 192.168.2.205)
  3) Restart ada
  Because new environment variables have been set, the ada tool in the operating environment needs to be restarted. Otherwise, the development environment cannot access the newly set environment variables in the operating environment.
  Run the following command as a regular user to find the process ID of the ada tool:
    HwHiAiUser@davinci-mini:~$ ps -ef | grep ada
    HwHiAiU+  1996     1  0 08:40 ?        00:00:00 /var/ada
    HwHiAiU+  2060  2047  0 08:41 pts/0    00:00:00 grep --color=auto ada
  In this example the process ID of the ada tool is 1996, so stop the process with:
    kill -9 1996
  Then restart ada as a regular user:
    HwHiAiUser@davinci-mini:~$ cd /var/
    HwHiAiUser@davinci-mini:/var$ ./ada &
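
The ps and kill steps can be combined. The sketch below takes the PID from the second column of ps -ef and matches the command column against ada, so the grep process in the listing above is not picked up; the function names are hypothetical, and pgrep -x ada is a shorter alternative where procps is available.

```bash
#!/usr/bin/env bash
# Sketch: find and restart the ada process on the developer board.

# ada_pids -> reads "ps -ef" output on stdin and prints the PIDs whose
# command (8th column) is ada, e.g. /var/ada or ./ada, but not "grep ... ada"
ada_pids() {
  awk '$8 ~ /(^|\/)ada$/ { print $2 }'
}

# restart_ada -> kill running ada processes, then start ada from /var
restart_ada() {
  local pid
  for pid in $(ps -ef | ada_pids); do
    kill -9 "$pid"
  done
  # The whole "cd && ./ada" list runs in a background subshell,
  # so the caller's working directory does not change.
  cd /var && ./ada &
}
```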

- 104. Install third-party packages

- 105. Installation of additional packages /1
  1) Install FFmpeg
  Switch to the root user (password: Mind@123):
    su root
  As the root user, install the related system software packages:
    apt-get install build-essential libgtk2.0-dev libjpeg-dev libtiff5-dev git cmake
  Exit the root user and switch back to the regular user:
    exit
  Create a folder to store the built files:
    mkdir -p /home/HwHiAiUser/ascend_ddk/arm
  Download the FFmpeg source code to the $HOME directory of the regular user, decompress it, go to its directory, then compile and install it:
    cd $HOME
    wget http://www.ffmpeg.org/releases/ffmpeg-4.1.3.tar.gz
    tar -zxvf ffmpeg-4.1.3.tar.gz
    cd ffmpeg-4.1.3
    ./configure --enable-shared --enable-pic --enable-static --disable-yasm --prefix=/home/HwHiAiUser/ascend_ddk/arm
    make -j8
    make install

- 106. Installation of additional packages /2
  Switch to the root user and register the FFmpeg library directory with the dynamic linker:
    su root
    echo "/home/HwHiAiUser/ascend_ddk/arm/lib" > /etc/ld.so.conf.d/ffmpeg.conf
    ldconfig
  Add the FFmpeg binaries to the PATH environment variable. Use single quotes so that $PATH is written to /etc/profile literally and expanded at login, instead of being replaced by root's current PATH:
    echo 'export PATH=$PATH:/home/HwHiAiUser/ascend_ddk/arm/bin' >> /etc/profile
    source /etc/profile
  Copy all files in the lib/pkgconfig directory of the FFmpeg installation to the corresponding directory of the operating system. The installation of OpenCV depends on these files:
    cp /home/HwHiAiUser/ascend_ddk/arm/lib/pkgconfig/* /usr/share/pkgconfig
  Exit the root user and switch back to the regular user:
    exit
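
The linker and PATH registration above can be sketched as one function that writes both entries and adds the PATH line only once. The escaped \$PATH keeps the variable literal in /etc/profile, so it is expanded at login rather than frozen at the value it had when the line was written. The function name and the optional ETC_DIR parameter are hypothetical additions so it can be tried against a scratch directory; ldconfig only runs when writing to the real /etc.

```bash
#!/usr/bin/env bash
# Sketch: register an FFmpeg install prefix with the dynamic linker and PATH.

# register_ffmpeg PREFIX [ETC_DIR] -> ETC_DIR defaults to /etc (run as root)
register_ffmpeg() {
  local prefix=$1 etc=${2:-/etc} line
  mkdir -p "$etc/ld.so.conf.d"
  echo "$prefix/lib" > "$etc/ld.so.conf.d/ffmpeg.conf"
  # \$PATH stays literal, so it is expanded when the profile is read.
  line="export PATH=\$PATH:$prefix/bin"
  grep -qxF -- "$line" "$etc/profile" 2>/dev/null || echo "$line" >> "$etc/profile"
  # Refresh the linker cache only for the real /etc.
  if [[ $etc == /etc ]]; then ldconfig; fi
}

# Usage (as root): register_ffmpeg /home/HwHiAiUser/ascend_ddk/arm
```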

- 107. Installation of additional packages /3
  2) Install OpenCV
  Switch to the root user (password: Mind@123):
    su root
  As the root user, install the related system software packages:
    apt install python-dev python3-dev
  Exit the root user and switch back to the regular user:
    exit
  Go to the $HOME directory of the regular user, download OpenCV and create a build directory:
    cd $HOME
    git clone -b 4.3.0 https://gitee.com/mirrors/opencv.git
    cd opencv
    mkdir build
    cd build
  Build and install OpenCV:
    cmake ../ -DBUILD_SHARED_LIBS=ON -DBUILD_TESTS=OFF -DCMAKE_BUILD_TYPE=RELEASE -DCMAKE_INSTALL_PREFIX=/home/HwHiAiUser/ascend_ddk/arm
    make -j8
    make install
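
After both builds, a quick check that the shared libraries ended up in the common prefix can save debugging time later. The sketch assumes the libraries are installed to PREFIX/lib (as the FFmpeg steps above assume) under the usual names such as libavcodec.so and libopencv_core.so; the helper name is hypothetical.

```bash
#!/usr/bin/env bash
# Sketch: report which expected shared libraries are missing from PREFIX/lib.

# missing_libs PREFIX NAME... -> prints lib<NAME>.so for each library with no
# PREFIX/lib/lib<NAME>.so* file; returns 1 if anything is missing
missing_libs() {
  local prefix=$1 name rc=0
  shift
  for name in "$@"; do
    # compgen -G succeeds only if the glob matches at least one file
    if ! compgen -G "$prefix/lib/lib$name.so*" > /dev/null; then
      echo "lib$name.so"
      rc=1
    fi
  done
  return "$rc"
}

# Usage: missing_libs /home/HwHiAiUser/ascend_ddk/arm avcodec avformat opencv_core
```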