Data Con LA 2019 - Best Practices for Prototyping Machine Learning Models for Healthcare by Lorenzo Rossi

- 1. Best Practices for Prototyping Machine Learning Models for Healthcare. Lorenzo Rossi, PhD, Data Scientist, City of Hope National Medical Center. DataCon LA, August 2019

- 3. Machine learning in healthcare is growing fast, but best practices are not well established yet. [Towards Guidelines for ML in Health (8.2018, Stanford)]

- 4. Motivations for ML in Healthcare
  1. Lots of information about patients, but not enough time for clinicians to process it
  2. Physicians spend too much time typing information about patients during encounters
  3. Overwhelming number of false alerts (e.g. in ICU)

- 6. Topics
  1. The electronic health record (EHR)
  2. Cohort definition
  3. Data quality
  4. Training - testing split
  5. Performance metrics and reporting
  6. Survival curves

  [Annotation: Data preparation]

- 7. 1. The Electronic Health Record (EHR)

- 9. EHR data are very heterogeneous
  - Laboratory tests [multi-dimensional time series]
  - Vitals [multi-dimensional time series]
  - Diagnoses [text, codes]
  - Medications [text, codes, numeric]
  - X-rays, CT scans, EKGs, … [2D - 3D images, time series, ...]
  - Notes [text]

- 11. Time is a key aspect of EHR data. Temporal resolution varies a lot:
  - ICU patient [minutes]
  - Hospital patient [hours]
  - Outpatient [weeks]

  [Diagram: timelines for patients p01, p02, p03 with labs, vitals, notes, …]

- 14. Events hospitals want to predict from EHR data
  - Unplanned 30-day readmission
  - Length of stay
  - Mortality
  - Sepsis
  - ICU admission
  - Surgical complications

  [Annotations: Improve capacity; Optimize decisions]

- 16. Consider only binary prediction tasks (0 / 1) for simplicity
  - Prediction algorithm gives a score from 0 to 1, e.g. close to 1 → high risk of readmission within 30 days
  - Trade-off between falsely detected and missed targets

- 19. Cohort: individuals “who experienced particular event during specific period of time”
  - Given the prediction task, select a clinically relevant cohort
  - E.g. for surgery complication prediction: patients who had one or more surgeries between 2011 and 2018
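
A minimal sketch of the cohort selection in the surgery example above. The record layout `(patient_id, surgery_date)`, the function name `select_cohort`, and the data are hypothetical, chosen only for illustration.

```python
from datetime import date

# Hypothetical surgery log: (patient_id, surgery_date). Not real data.
surgeries = [
    ("p01", date(2010, 5, 3)),
    ("p01", date(2012, 7, 19)),
    ("p02", date(2019, 1, 8)),
    ("p03", date(2015, 11, 30)),
]

def select_cohort(records, start, end):
    """Return sorted IDs of patients with at least one surgery in [start, end]."""
    return sorted({pid for pid, day in records if start <= day <= end})

cohort = select_cohort(surgeries, date(2011, 1, 1), date(2018, 12, 31))
print(cohort)  # ['p01', 'p03']
```

Patient p02 is left out because the only surgery on record falls outside the 2011 to 2018 window.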

- 20. A. Pick records of a subset of patients [Diagram: timelines for patients p01, p02, p03 with labs, vitals, notes, …]

- 21. B. Pick a prediction time for each patient. Records after the prediction time are discarded [Diagram: timelines for patients p01, p02, p03 with labs, vitals, notes, …]
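
Step B can be sketched as a simple filter. The event tuples, the `prediction_time` mapping, and the function name are assumptions made for this illustration; how the prediction time is chosen (e.g. time of discharge) depends on the task.

```python
from datetime import datetime

# Hypothetical EHR events: (patient_id, timestamp, kind, value).
events = [
    ("p01", datetime(2018, 3, 1, 8), "lab", 3.9),
    ("p01", datetime(2018, 3, 4, 9), "vital", 88),
    ("p02", datetime(2018, 5, 2, 7), "lab", 2.8),
    ("p02", datetime(2018, 5, 2, 18), "note", "stable"),
]

# Hypothetical prediction time per patient.
prediction_time = {
    "p01": datetime(2018, 3, 2, 0),
    "p02": datetime(2018, 5, 2, 12),
}

def truncate_at_prediction_time(events, prediction_time):
    """Keep only events recorded at or before each patient's prediction time."""
    return [
        e for e in events
        if e[0] in prediction_time and e[1] <= prediction_time[e[0]]
    ]

visible = truncate_at_prediction_time(events, prediction_time)
print([(e[0], e[2]) for e in visible])  # [('p01', 'lab'), ('p02', 'lab')]
```

Only the `visible` events may be used to build features; anything later would leak information from the future into the model.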

- 23. 3. Data Quality [Image source: SalesForce]

- 24. EHR data are challenging in many different ways

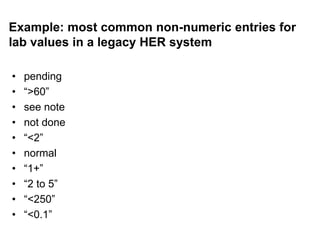

- 25. Example: most common non-numeric entries for lab values in a legacy EHR system
  - pending
  - “>60”
  - see note
  - not done
  - “<2”
  - normal
  - “1+”
  - “2 to 5”
  - “<250”
  - “<0.1”
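
One possible way to handle such entries is to split each raw string into a numeric value plus a qualifier instead of silently dropping it. This is a sketch under assumptions: the function name `parse_lab_value`, the qualifier labels, and the midpoint encoding of ranges are illustrative choices, not a standard.

```python
import re

def parse_lab_value(raw):
    """Split a raw lab entry into (value, qualifier); value is None if not numeric."""
    text = raw.strip().strip('"“”').strip()
    try:
        return float(text), "exact"
    except ValueError:
        pass
    m = re.fullmatch(r"([<>])\s*(\d+(?:\.\d+)?)", text)
    if m:  # censored reading such as "<2" or ">60": keep the bound and its direction
        return float(m.group(2)), m.group(1)
    m = re.fullmatch(r"(\d+(?:\.\d+)?)\s*to\s*(\d+(?:\.\d+)?)", text)
    if m:  # range such as "2 to 5": the midpoint is one possible (lossy) encoding
        return (float(m.group(1)) + float(m.group(2))) / 2, "range"
    m = re.fullmatch(r"(\d)\+", text)
    if m:  # semi-quantitative grade such as "1+"
        return float(m.group(1)), "semi-quantitative"
    return None, "text"  # "pending", "see note", "not done", "normal", ...

for raw in ["4.1", ">60", "<0.1", "2 to 5", "1+", "pending"]:
    print(raw, "->", parse_lab_value(raw))
```

Keeping the qualifier lets a later step decide, with clinical input, whether a censored value like “<2” should be used as a bound, imputed, or treated as missing.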

- 26. Example: discrepancies in dates of death between hospital records and Social Security (~ 4.8 % of shared patients)

- 28. Distinguish between Anomalies and Outliers
  - Outlier: legitimate data point far away from the mean/median of the distribution
  - Anomaly: illegitimate data point generated by a process different from the one producing the rest of the data
  - Need domain knowledge to differentiate

- 29. E.g.: albumin level in blood. Normal range: 3.4 – 5.4 g/dL. µ = 3.5, σ = 0.65 over cohort.

- 31. ρ = -1 → anomaly (treat as missing value)

- 33. ρ = 1 → possibly an outlier (clinically relevant)
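
A minimal sketch of the albumin example, assuming ρ denotes the recorded value in g/dL. The plausibility bound and the z-score cut-off are hypothetical rules standing in for the domain knowledge the slide calls for.

```python
MU, SIGMA = 3.5, 0.65   # cohort statistics from the slide (g/dL)
PLAUSIBLE_MIN = 0.0     # assumption: a concentration cannot be negative
Z_THRESHOLD = 3.0       # assumption: illustrative cut-off for "far from the mean"

def triage_albumin(value):
    """Return (cleaned_value, label) for one albumin reading in g/dL."""
    if value < PLAUSIBLE_MIN:
        return None, "anomaly"      # impossible reading: treat as missing
    z = (value - MU) / SIGMA
    if abs(z) > Z_THRESHOLD:
        return value, "outlier"     # rare but legitimate: keep the value
    return value, "typical"

print(triage_albumin(-1.0))  # (None, 'anomaly')
print(triage_albumin(1.0))   # (1.0, 'outlier')
print(triage_albumin(3.6))   # (3.6, 'typical')
```

The key design choice is that the outlier is flagged but kept, since a very low albumin level may be exactly the clinically relevant signal.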

- 34. 4. Training - Testing Split

- 36. Guidelines
  - Machine learning models are evaluated on their ability to make predictions on new (unseen) data
  - Split train (cross-validation) and test sets based on temporal criteria
    - e.g. no records in the train set after the prediction dates in the test set
    - random splits, even if stratified, could include records virtually from the ‘future’ to train the model
  - In retrospective studies, also avoid records of the same patients across train and test
    - the model could just learn to recognize patients
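
The two guidelines can be combined in one split, sketched below. The example tuples, the cutoff date, and the function name are hypothetical; dropping a test patient's earlier records from training is one simple policy among several.

```python
from datetime import date

# Hypothetical examples: (patient_id, prediction_date, label).
examples = [
    ("p01", date(2015, 2, 1), 0),
    ("p02", date(2016, 6, 9), 1),
    ("p01", date(2018, 4, 3), 0),
    ("p03", date(2017, 9, 21), 0),
    ("p04", date(2018, 1, 15), 1),
]

def temporal_patient_split(examples, cutoff):
    """Split so that train precedes the cutoff and shares no patient with test."""
    test = [e for e in examples if e[1] >= cutoff]
    test_patients = {e[0] for e in test}
    train = [
        e for e in examples
        if e[1] < cutoff and e[0] not in test_patients
    ]
    return train, test

train, test = temporal_patient_split(examples, cutoff=date(2018, 1, 1))
print([e[0] for e in train])  # ['p02', 'p03']
print([e[0] for e in test])   # ['p01', 'p04']
```

The 2015 record of p01 is discarded rather than used for training, because p01 also appears in the test period.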

- 37. 5. Performance Metrics and Reporting

- 38. Background: generally highly imbalanced problems
  - 15% unplanned 30-day readmissions
  - < 10% sepsis cases
  - < 1% 30-day mortality

- 39. Types of Performance Metrics
  1. Measure trade-offs
     - (ROC) AUC
     - average precision / PR AUC
  2. Measure error rate at a specific decision point
     - false positive, false negative rates
     - precision, recall
     - F1
     - accuracy

- 40. Types of Performance Metrics (II)
  1. Measure trade-offs
     - AUC, average precision / PR AUC
     - good for global performance characterization and (intra-)model comparisons
  2. Measure error rate at a specific decision point
     - false positives, false negatives, …, precision, recall
     - possibly good for interpretation of specific clinical costs and benefits
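
For reference, the two trade-off metrics can be computed from scratch as sketched below. ROC AUC is written as the fraction of (positive, negative) pairs ranked correctly, and average precision as the step-wise sum of precision weighted by the recall gained at each threshold. Function names and the toy data are illustrative; in practice a tested library implementation would normally be used.

```python
def roc_auc(y_true, scores):
    """Probability that a random positive is scored above a random negative."""
    pos = [s for y, s in zip(y_true, scores) if y == 1]
    neg = [s for y, s in zip(y_true, scores) if y == 0]
    wins = sum((p > n) + 0.5 * (p == n) for p in pos for n in neg)
    return wins / (len(pos) * len(neg))

def average_precision(y_true, scores):
    """Sum over thresholds of (recall gained at threshold) * (precision there)."""
    ranked = sorted(zip(scores, y_true), key=lambda t: -t[0])
    n_pos = sum(y_true)
    tp = fp = 0
    ap, prev_recall, i = 0.0, 0.0, 0
    while i < len(ranked):
        j = i
        # Consume all examples tied at the current score as one threshold.
        while j < len(ranked) and ranked[j][0] == ranked[i][0]:
            tp += ranked[j][1]
            fp += 1 - ranked[j][1]
            j += 1
        recall = tp / n_pos
        ap += (recall - prev_recall) * tp / (tp + fp)
        prev_recall = recall
        i = j
    return ap

y_true = [0, 0, 1, 1]
scores = [0.1, 0.4, 0.35, 0.8]
print(roc_auc(y_true, scores))            # 0.75
print(round(average_precision(y_true, scores), 4))  # 0.8333
```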

- 41. Don’t use accuracy unless dataset is balanced
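
A small worked example of why accuracy misleads on imbalanced data, using a made-up cohort of 1000 patients with a 1% event rate and a trivial model that never predicts the event.

```python
# Hypothetical cohort: 10 events among 1000 patients (1% prevalence).
y_true = [1] * 10 + [0] * 990
y_pred = [0] * 1000  # trivial model: always predict "no event"

accuracy = sum(t == p for t, p in zip(y_true, y_pred)) / len(y_true)
recall = sum(t == 1 and p == 1 for t, p in zip(y_true, y_pred)) / sum(y_true)

print(accuracy, recall)  # 0.99 0.0
```

The model reaches 99% accuracy while detecting none of the events.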

- 42. ROC AUC can be misleading too

- 44. ROC AUC can be misleading (II) [Avati, Ng et al., Countdown Regression: Sharp and Calibrated Survival Predictions. ArXiv, 2018]

- 45. ROC AUC (1 year) > ROC AUC (5 years), but PR AUC (1 year) < PR AUC (5 years)! Latter prediction task is easier. [Avati, Ng et al., Countdown Regression: Sharp and Calibrated Survival Predictions. ArXiv, 2018]

- 47. Performance should be reported with both types of metrics
  - 1 or 2 metrics for trade-off evaluation
    - ROC AUC
    - average precision
  - 1 metric for performance at a clinically meaningful decision point
    - e.g. recall @ 90% precision
  - Plus a comparison with a known benchmark (baseline)
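
A sketch of the decision-point metric "recall @ 90% precision": scan all score thresholds and report the highest recall among those whose precision meets the target. The function name and the toy scores are assumptions for illustration.

```python
def recall_at_precision(y_true, scores, min_precision=0.9):
    """Highest recall over score thresholds with precision >= min_precision."""
    n_pos = sum(y_true)
    best = 0.0
    for threshold in sorted(set(scores)):
        # Labels of all examples flagged at this threshold.
        flagged = [y for y, s in zip(y_true, scores) if s >= threshold]
        precision = sum(flagged) / len(flagged)
        if precision >= min_precision:
            best = max(best, sum(flagged) / n_pos)
    return best

y_true = [1, 1, 1, 0, 1, 0, 0, 0, 0, 0]
scores = [0.95, 0.9, 0.8, 0.7, 0.6, 0.4, 0.3, 0.2, 0.1, 0.05]

print(recall_at_precision(y_true, scores, 0.9))  # 0.75
print(recall_at_precision(y_true, scores, 0.5))  # 1.0
```

In this toy case, requiring 90% precision means one of the four true events is missed; relaxing the requirement recovers it at the cost of more false alarms.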

- 48. Metrics in Stanford 2017 paper on mortality prediction: AUC, average precision, recall @ 90%

- 49. Benchmarks

- 50. Main paper [Google, Nature, 2018] only reports deep learning results with no benchmark comparison

- 51. Comparison only in supplemental online file (not in the Nature paper): deep learning only 1-2% better than logistic regression benchmark

- 52. Plot scales can be deceiving [undisclosed vendor, 2017]!

- 53. Same TP, FP plots rescaled

- 55. B. Pick a prediction time for each patient. Records after the prediction time are discarded [Diagram: timelines for patients p01, p02, p03 with labs, vitals, notes, …]

- 56. C. Plot survival curves
  - Consider binary classification tasks
    - Event of interest (e.g. death) either happens or not before censoring time
  - Survival curve: distribution of time to event and time to censoring
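
The slides do not say how the curves are estimated. One common choice is the Kaplan-Meier estimator, sketched here under that assumption; durations are measured from each patient's prediction time, and the data are invented.

```python
def kaplan_meier(durations, observed):
    """Kaplan-Meier estimate of S(t) as a list of (time, survival) steps.

    durations: time from prediction time to event or censoring.
    observed:  1 if the event happened, 0 if the patient was censored.
    """
    at_risk = len(durations)
    survival = 1.0
    curve = []
    for t in sorted(set(durations)):
        deaths = sum(1 for d, o in zip(durations, observed) if d == t and o == 1)
        leaving = sum(1 for d in durations if d == t)
        if deaths:
            survival *= 1 - deaths / at_risk
            curve.append((t, survival))
        at_risk -= leaving  # events and censored patients both leave the risk set
    return curve

# Hypothetical follow-up in days; 0 marks a censored patient.
durations = [5, 10, 10, 20, 30, 30]
observed = [1, 1, 0, 1, 0, 0]

for t, s in kaplan_meier(durations, observed):
    print(t, round(s, 3))  # steps at t = 5, 10, 20
```

Plotting this curve for the chosen cohort and prediction times shows at a glance whether a large share of patients reaches the event almost immediately.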

- 57. Different selections of prediction times lead to different survival profiles over same cohort

- 59. Example: high percentage of patients deceased within 30 days. Model trained to distinguish mostly between relatively healthy and moribund patients → performance overestimate

- 60. Final Remarks
  - Outliers should not be treated like anomalies
  - Split train (CV) and test sets temporally
  - Metrics:
    - ROC AUC alone could be misleading
    - Precision-Recall curve often more useful than ROC
    - Compare with meaningful benchmarks
  - Performance is possibly overestimated for cohorts with unrealistic survival curves

- 64. Example: ROC curve. Very high detection rate, but also high false alarm rate
