Data Persistency (Draco, Cygnus, STH-Comet, QuantumLeap)

- 1. Session 5 - Data Persistency (Draco, Cygnus, STH-Comet, QuantumLeap). Fernando López, Cloud & Platform Senior Expert, FIWARE Foundation, e.V. (fernando.lopez@fiware.org, @flopezaguilar)

- 2. Learning Goals ● What is data persistency? ● What is a time series database? ● What is the difference between Cygnus and Draco, on one side, and QuantumLeap and STH-Comet, on the other? ● Which main technology is behind each solution?

- 3. Draco: Persisting Context Data to MongoDB

- 4. Draco GE ▪ Ingestion and persistency of data ▪ Easy-to-use, powerful, and reliable system for processing and distributing data ▪ Based on Apache NiFi, a dataflow system built on the concepts of flow-based programming ▪ Supports powerful and scalable directed graphs of data routing, transformation, and system mediation logic ▪ Built to automate the flow of data between systems
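To make the flow-based programming idea more concrete, here is a toy Python sketch of a directed graph of processors that ingests a record, enriches it, and routes it to two backends. It is not NiFi's API: `FlowGraph`, the processor names and the record fields are hypothetical, and real Draco flows are assembled from NiFi processors rather than written like this.

```python
from collections import defaultdict
from typing import Callable, Dict, List, Tuple

# A processor receives one record and returns zero or more output records.
Processor = Callable[[dict], List[dict]]


class FlowGraph:
    """Toy directed graph of processors (hypothetical, not NiFi's API)."""

    def __init__(self) -> None:
        self.processors: Dict[str, Processor] = {}
        self.edges: Dict[str, List[str]] = defaultdict(list)

    def add(self, name: str, processor: Processor) -> None:
        self.processors[name] = processor

    def connect(self, src: str, dst: str) -> None:
        self.edges[src].append(dst)

    def run(self, start: str, record: dict) -> Dict[str, List[dict]]:
        """Push one record through the graph; collect what reaches terminal nodes."""
        delivered: Dict[str, List[dict]] = defaultdict(list)
        pending: List[Tuple[str, dict]] = [(start, record)]
        while pending:
            name, rec = pending.pop()
            for out in self.processors[name](rec):
                successors = self.edges.get(name, [])
                if not successors:
                    delivered[name].append(out)  # terminal node: "persist" here
                for nxt in successors:
                    pending.append((nxt, out))
        return dict(delivered)


# Ingest -> enrich -> route the same record to two (simulated) backends.
graph = FlowGraph()
graph.add("ingest", lambda r: [r])
graph.add("enrich", lambda r: [{**r, "unit": "Celsius"}])
graph.add("mongodb", lambda r: [r])
graph.add("mysql", lambda r: [r])
graph.connect("ingest", "enrich")
graph.connect("enrich", "mongodb")
graph.connect("enrich", "mysql")
result = graph.run("ingest", {"id": "Room1", "temperature": 23})
```

Each edge is just a name-to-name link, so adding another backend means adding one processor and one `connect` call, which is the property that makes graph-based routing easy to extend.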

- 5. Features ● NGSIv2 support, both for ingestion and serialization, for full integration with the Orion Context Broker. ● Several persistence backends: ● MySQL, the well-known relational database manager. ● MongoDB, the NoSQL document-oriented database. ● PostgreSQL, the well-known relational database manager. ● HDFS, the Hadoop distributed file system. ● Cassandra, a distributed database. ● CartoDB, for geospatial data. ● Templates for some common scenarios ● REST API
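NGSIv2 notifications from Orion carry a `subscriptionId` and a `data` array of entities, where each attribute is an object with `type`, `value` and `metadata`. The sketch below flattens such a notification into one row per attribute, which is roughly what a row-oriented persistence into a relational backend needs. The output column names are loosely modeled on the Cygnus/Draco "row" mode and should be treated as an assumption; check the Draco documentation for the exact schema of each backend.

```python
import json
from datetime import datetime, timezone
from typing import List, Optional


def notification_to_rows(body: str, service_path: str = "/",
                         recv_time: Optional[datetime] = None) -> List[dict]:
    """Flatten an NGSIv2 notification into one row per (entity, attribute) pair."""
    recv_time = recv_time or datetime.now(timezone.utc)
    notification = json.loads(body)
    rows = []
    for entity in notification["data"]:
        for attr_name, attr in entity.items():
            if attr_name in ("id", "type"):
                continue  # entity identity, not an attribute
            rows.append({
                "recvTime": recv_time.isoformat(),
                "fiwareServicePath": service_path,
                "entityId": entity["id"],
                "entityType": entity["type"],
                "attrName": attr_name,
                "attrType": attr.get("type"),
                "attrValue": attr.get("value"),
                "attrMd": attr.get("metadata", {}),
            })
    return rows


# Sample notification with made-up values, in NGSIv2 normalized format.
sample = json.dumps({
    "subscriptionId": "5dde1a2b3c4d5e6f7a8b9c0d",
    "data": [{
        "id": "Room1",
        "type": "Room",
        "temperature": {"type": "Number", "value": 23.5, "metadata": {}},
        "pressure": {"type": "Integer", "value": 720, "metadata": {}},
    }],
})
rows = notification_to_rows(sample, "/", datetime(2019, 11, 27, tzinfo=timezone.utc))
```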

- 7. Draco integration in the FIWARE ecosystem

- 9. References ● FIWARE Catalogue: https://www.fiware.org/developers/catalogue ● FIWARE Draco: https://fiware-draco.readthedocs.io/en/latest

- 10. Cygnus

- 11. Cygnus ● What is it for? ● Cygnus is a connector in charge of persisting Orion context data in certain configured third-party storages, creating a historical view of such data. ● How does it receive context data from the Orion Context Broker? ● Cygnus uses the subscription/notification feature of Orion. ● A subscription is made in Orion on behalf of Cygnus, detailing which entities Cygnus should be notified about whenever any of their attributes is updated.
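A minimal Python sketch of the subscription described above, assuming Orion is reachable at `http://orion:1026` and Cygnus listens for notifications at `http://cygnus:5050/notify` (port 5050 and the `/notify` target match the Cygnus source configuration in this session; both host names and the `openiot` service are hypothetical). The request is only prepared with the standard library, not sent.

```python
import json
import urllib.request


def cygnus_subscription(entity_type: str, attrs: list,
                        notify_url: str = "http://cygnus:5050/notify") -> dict:
    """Build an NGSIv2 subscription asking Orion to notify Cygnus of attribute changes."""
    return {
        "description": f"Notify Cygnus of changes in {entity_type} entities",
        "subject": {
            "entities": [{"idPattern": ".*", "type": entity_type}],
            "condition": {"attrs": attrs},  # notify when any of these attributes change
        },
        "notification": {
            "http": {"url": notify_url},
            "attrs": attrs,  # attributes included in each notification
        },
    }


def subscription_request(orion: str, payload: dict, service: str,
                         service_path: str) -> urllib.request.Request:
    """Prepare (but do not send) the POST /v2/subscriptions request."""
    return urllib.request.Request(
        url=f"{orion}/v2/subscriptions",
        data=json.dumps(payload).encode("utf-8"),
        headers={
            "Content-Type": "application/json",
            "Fiware-Service": service,
            "Fiware-ServicePath": service_path,
        },
        method="POST",
    )


req = subscription_request("http://orion:1026",
                           cygnus_subscription("Room", ["temperature"]),
                           service="openiot", service_path="/")
# urllib.request.urlopen(req) would actually send it to Orion.
```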

- 12. Cygnus

- 13. Cygnus ● Internally, Cygnus is based on Apache Flume, a framework for building data collection and persistence agents. ● An agent is basically composed of: ● a listener or source, in charge of receiving the data; ● a channel, where the source puts the data once it has been transformed into a Flume event; ● a sink, which takes Flume events from the channel in order to persist the data in their body into a third-party storage.
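The source, channel and sink roles can be illustrated with a toy Python model: a source wraps each notification into an event and puts it into a bounded channel, while a sink running in another thread takes events out and "persists" them. The class names are hypothetical and this is not Flume's Java API; it only mirrors the asynchronous hand-off through the channel.

```python
import queue
import threading
from typing import List, Optional


class Channel:
    """Passive, bounded store between source and sink (like a memory channel)."""

    def __init__(self, capacity: int = 1000) -> None:
        self._events: "queue.Queue[dict]" = queue.Queue(maxsize=capacity)

    def put(self, event: dict) -> None:
        self._events.put(event)  # blocks while the channel is full

    def take(self, timeout: float = 0.1) -> Optional[dict]:
        try:
            return self._events.get(timeout=timeout)
        except queue.Empty:
            return None


class NotificationSource:
    """Toy source: wraps each received notification into an event."""

    def __init__(self, channel: Channel) -> None:
        self.channel = channel

    def receive(self, body: str, headers: dict) -> None:
        self.channel.put({"headers": headers, "body": body})


class ListSink:
    """Toy sink: 'persists' event bodies into an in-memory list."""

    def __init__(self, channel: Channel) -> None:
        self.channel = channel
        self.storage: List[str] = []

    def run(self, stop: threading.Event) -> None:
        # Drain the channel; exit only once asked to stop and nothing is left.
        while True:
            event = self.channel.take()
            if event is None:
                if stop.is_set():
                    return
                continue
            self.storage.append(event["body"])


channel = Channel(capacity=100)
source = NotificationSource(channel)
sink = ListSink(channel)
stop = threading.Event()
worker = threading.Thread(target=sink.run, args=(stop,))
worker.start()  # the sink runs asynchronously from the source
for i in range(3):
    source.receive(f'{{"n": {i}}}', {"Fiware-Service": "openiot"})
stop.set()
worker.join()
```

The bounded queue plays the role of the channel `capacity` setting: if the sink falls behind, the source blocks instead of losing events.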

- 14. Cygnus Architecture ● Cygnus runs Flume agents; thus, the architecture of a Cygnus agent is that of a Flume agent.

- 15. Data sinks § NGSI-like context data in: § HDFS, the Hadoop distributed file system. § MySQL, the well-known relational database manager. § CKAN, an Open Data platform. § MongoDB, the NoSQL document-oriented database. § STH-Comet, a Short-Term Historic database built on top of MongoDB. § Kafka, the publish-subscribe messaging broker. § DynamoDB, a cloud-based NoSQL database by Amazon Web Services. § PostgreSQL, the well-known relational database manager. § CartoDB, the database specialized in geolocated data. § Twitter data in: § HDFS, the Hadoop distributed file system.

- 16. Cygnus events § A Source consumes Events having a specific format, and those Events are delivered to the Source by an external source such as a web server. § For example, an AvroSource can be used to receive Avro Events from clients or from other Flume agents in the flow. § When a Source receives an Event, it stores it into one or more Channels. § The Channel is a passive store that holds the Event until that Event is consumed by a Sink. § One type of Channel available in Flume is the FileChannel, which uses the local filesystem as its backing store.

- 17. Cygnus events § A Sink is responsible for removing an Event from the Channel and putting it into an external repository like HDFS (in the case of an HDFSEventSink) or forwarding it to the Source at the next hop of the flow. § The Source and Sink within the given agent run asynchronously with the Events staged in the Channel.

- 18. Cygnus configuration example § https://github.com/telefonicaid/fiware-cygnus/blob/master/doc/cygnus-ngsi/installation_and_administration_guide/configuration_examples.md (Diagram: a single JVM running one Source, one Channel and one Sink.)

- 19. Multiple persistence backends (Diagram: one JVM in which a single Source feeds Channel1, Channel2 and Channel3, each drained by its own sink: Sink1, Sink2 and Sink3.)
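In Flume, the channel selector decides which channels a source writes each event to, and the default replicating selector copies it to all of them. The toy Python sketch below (hypothetical names, not Flume code) shows why this enables multiple persistence backends: each channel holds its own copy, so each sink drains its channel independently of the others.

```python
from collections import deque
from typing import Deque, Dict, List


class ReplicatingSource:
    """Toy source that copies every incoming event into each of its channels."""

    def __init__(self, channels: Dict[str, Deque[dict]]) -> None:
        self.channels = channels

    def receive(self, event: dict) -> None:
        for channel in self.channels.values():
            channel.append(dict(event))  # each channel gets its own copy


def drain(channel: Deque[dict], storage: List[dict]) -> int:
    """Toy sink step: move every pending event from the channel to storage."""
    moved = 0
    while channel:
        storage.append(channel.popleft())
        moved += 1
    return moved


channels = {"mysql": deque(), "mongodb": deque(), "hdfs": deque()}
source = ReplicatingSource(channels)
source.receive({"entityId": "Room1", "temperature": 23})
source.receive({"entityId": "Room1", "temperature": 24})

mysql_rows: List[dict] = []
moved = drain(channels["mysql"], mysql_rows)  # only the MySQL sink has run so far
```

After the MySQL sink runs, the MongoDB and HDFS channels still hold their two events, waiting for their own sinks.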

- 20. Multiple agents (Diagram: JVM1 … JVMn, each running its own Source, Channel and Sink.) § One JVM instance for each agent. § This adds more processing capacity to the system.

- 21. Connecting Orion Context Broker and Cygnus § Subscription-notification mechanism of the Orion Context Broker. § Cygnus needs to be notified each time certain entities' attributes change. § Therefore, Cygnus must subscribe to those attribute changes.

- 22. Configure a basic Cygnus agent (Introduction) § Edit `/usr/cygnus/conf/agent_<id>.conf` § List of sources, channels and sinks, followed by the channels configuration:

```properties
cygnusagent.sources = http-source
cygnusagent.sinks = hdfs-sink
cygnusagent.channels = hdfs-channel

cygnusagent.channels.hdfs-channel.type = memory
cygnusagent.channels.hdfs-channel.capacity = 1000
cygnusagent.channels.hdfs-channel.transactionCapacity = 100
```

- 23. Configure a basic Cygnus agent (Sources) § Sources configuration:

```properties
cygnusagent.sources.http-source.channels = hdfs-channel
cygnusagent.sources.http-source.type = org.apache.flume.source.http.HTTPSource
cygnusagent.sources.http-source.port = 5050
cygnusagent.sources.http-source.handler = es.tid.fiware.fiwareconnectors.cygnus.handlers.OrionRestHandler
cygnusagent.sources.http-source.handler.notification_target = /notify
cygnusagent.sources.http-source.handler.default_service = def_serv
cygnusagent.sources.http-source.handler.default_service_path = def_servpath
cygnusagent.sources.http-source.handler.events_ttl = 10
cygnusagent.sources.http-source.interceptors = ts de
cygnusagent.sources.http-source.interceptors.ts.type = timestamp
cygnusagent.sources.http-source.interceptors.de.type = es.tid.fiware.fiwareconnectors.cygnus.interceptors.DestinationExtractor$Builder
cygnusagent.sources.http-source.interceptors.de.matching_table = /usr/cygnus/conf/matching_table.conf
```

- 24. Configure a basic Cygnus agent (Sinks) § Sinks configuration:

```properties
cygnusagent.sinks.hdfs-sink.channel = hdfs-channel
cygnusagent.sinks.hdfs-sink.type = es.tid.fiware.fiwareconnectors.cygnus.sinks.OrionHDFSSink
cygnusagent.sinks.hdfs-sink.cosmos_host = cosmos.lab.fi-ware.org
cygnusagent.sinks.hdfs-sink.cosmos_port = 14000
cygnusagent.sinks.hdfs-sink.cosmos_default_username = cosmos_username
cygnusagent.sinks.hdfs-sink.cosmos_default_password = xxxxxxxxxxxxx
cygnusagent.sinks.hdfs-sink.hdfs_api = httpfs
cygnusagent.sinks.hdfs-sink.attr_persistence = column
cygnusagent.sinks.hdfs-sink.hive_host = cosmos.lab.fi-ware.org
cygnusagent.sinks.hdfs-sink.hive_port = 10000
cygnusagent.sinks.hdfs-sink.krb5_auth = false
```
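Because sources, channels and sinks are wired together only by name, a typo in the agent configuration silently breaks the flow. The hypothetical helper below parses `key = value` lines and checks that every source and sink refers to a declared channel. It is a minimal sketch: it does not handle the full Java properties syntax (escapes, line continuations) that Flume actually accepts.

```python
from typing import Dict, List


def parse_properties(text: str) -> Dict[str, str]:
    """Minimal parser for 'key = value' lines (ignores blank lines and # comments)."""
    props = {}
    for line in text.splitlines():
        line = line.strip()
        if not line or line.startswith("#"):
            continue
        key, _, value = line.partition("=")
        props[key.strip()] = value.strip()
    return props


def check_wiring(props: Dict[str, str], agent: str = "cygnusagent") -> List[str]:
    """Return problems found in the source -> channel -> sink wiring of one agent."""
    problems = []
    channels = set(props.get(f"{agent}.channels", "").split())
    for source in props.get(f"{agent}.sources", "").split():
        # A source may write to several channels (space-separated list).
        for ch in props.get(f"{agent}.sources.{source}.channels", "").split():
            if ch not in channels:
                problems.append(f"source {source} uses undeclared channel {ch}")
    for sink in props.get(f"{agent}.sinks", "").split():
        # A sink reads from exactly one channel.
        ch = props.get(f"{agent}.sinks.{sink}.channel")
        if ch not in channels:
            problems.append(f"sink {sink} uses undeclared channel {ch}")
    return problems


good = """
cygnusagent.sources = http-source
cygnusagent.sinks = hdfs-sink
cygnusagent.channels = hdfs-channel
cygnusagent.sources.http-source.channels = hdfs-channel
cygnusagent.sinks.hdfs-sink.channel = hdfs-channel
"""
bad = good.replace("hdfs-sink.channel = hdfs-channel", "hdfs-sink.channel = mysql-channel")
```

Note the asymmetry the check encodes: a source takes a `channels` list, while a sink takes a single `channel`.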

- 25. References ● FIWARE Catalogue: https://www.fiware.org/developers/catalogue ● FIWARE Cygnus: https://fiware-cygnus.readthedocs.io/en/latest

- 26. STH-Comet

- 27. How? (… is not always the best) § The Context Broker only stores the latest attribute values: § Event-driven, action-oriented paradigm § The Short-Term Historic adds memory into the equation: § Continuous improvement paradigm

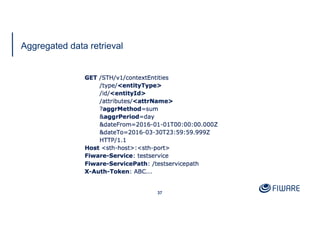

- 28. STH-Comet: What? § Time series database: § Optimized to deal with values indexed in time § Raw data vs. aggregated data § Basic aggregation concepts: range, resolution, origin, offset
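One way to picture these concepts: the range is the time interval a query covers, the resolution is the size of each aggregation slot, the origin is the start of the enclosing period, and the offset is the position of the slot inside it (for example, hour resolution uses the start of the day as origin and the hour, 0 to 23, as offset). The helper below is a sketch under that assumption; STH-Comet's exact offset numbering (for instance, whether months start at 0 or 1) may differ, so check its documentation.

```python
from datetime import datetime, timezone
from typing import Tuple


def origin_and_offset(ts: datetime, resolution: str) -> Tuple[datetime, int]:
    """Split a timestamp into (origin, offset) for a given aggregation resolution."""
    if resolution == "second":   # origin: start of the minute
        return ts.replace(second=0, microsecond=0), ts.second
    if resolution == "minute":   # origin: start of the hour
        return ts.replace(minute=0, second=0, microsecond=0), ts.minute
    if resolution == "hour":     # origin: start of the day
        return ts.replace(hour=0, minute=0, second=0, microsecond=0), ts.hour
    if resolution == "day":      # origin: start of the month (offset 1..31 here)
        return ts.replace(day=1, hour=0, minute=0, second=0, microsecond=0), ts.day
    if resolution == "month":    # origin: start of the year (offset 1..12 here)
        return (ts.replace(month=1, day=1, hour=0, minute=0, second=0, microsecond=0),
                ts.month)
    raise ValueError(f"unknown resolution: {resolution}")


ts = datetime(2019, 11, 27, 8, 37, 46, tzinfo=timezone.utc)
origin, offset = origin_and_offset(ts, "hour")
# origin = 2019-11-27T00:00:00+00:00, offset = 8
```

Grouping all slots that share an origin into one stored document keeps the number of documents small while still allowing fine-grained offsets.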

- 29. How? (… is not always the best) § Collateral aspects to take into consideration: § Risk: § Maturity § Performance § Expertise § Flexibility (future evolution) § Current investments

- 30. STH-Comet: How (bird's-eye functioning: minimal) (Diagram: Client, Orion Context Broker and STH-Comet exchanging subscribeContext, update, notification and query (raw & aggregated) messages.)

- 31. STH-Comet: How (bird's-eye functioning: formal) (Diagram: as in the minimal mode, but Orion's notifications go to Cygnus, which performs the raw and aggregated updates; the Client still sends queries (raw & aggregated) to STH-Comet.)

- 32. Architecture

- 33. Data schemas and pre-aggregation ▪ Although the STH stores the evolution of (raw) data (i.e., attribute values) in time, its real power comes from the storage of aggregated data ▪ The STH should be able to respond to queries such as: • Give me the maximum temperature of this room during the last month (range) aggregated by day (resolution) • Give me the mean temperature of this room today (range) aggregated by hour or even minute (resolution) • Give me the standard deviation of the temperature of this room over the last year (range) aggregated by day (resolution) • Give me the number of times the air conditioner of this room was switched on or off last Monday (range) aggregated by hour (resolution)
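Pre-aggregation is what makes such queries cheap: if every time slot keeps the number of samples, the sum, the sum of squares, the minimum and the maximum, then the maximum, mean and standard deviation can be answered without rereading raw data, and textual values (such as on/off) can be handled by counting occurrences. STH-Comet offers aggregation methods along these lines (`max`, `min`, `sum`, `sum2`, `occur`); the Python sketch below only mimics the idea with hypothetical names and in-memory data.

```python
import math
from collections import Counter, defaultdict
from dataclasses import dataclass
from datetime import datetime


@dataclass
class Bucket:
    """Running aggregates for one time slot, similar in spirit to STH-Comet's."""
    samples: int = 0
    sum_: float = 0.0
    sum2: float = 0.0
    min_: float = math.inf
    max_: float = -math.inf

    def add(self, value: float) -> None:
        self.samples += 1
        self.sum_ += value
        self.sum2 += value * value
        self.min_ = min(self.min_, value)
        self.max_ = max(self.max_, value)

    def mean(self) -> float:
        return self.sum_ / self.samples

    def std(self) -> float:
        # Population standard deviation from the stored sums: sqrt(E[x^2] - E[x]^2).
        return math.sqrt(max(self.sum2 / self.samples - self.mean() ** 2, 0.0))


def aggregate(points, slot):
    """Fold (timestamp, number) points into one Bucket per slot(timestamp)."""
    buckets = defaultdict(Bucket)
    for ts, value in points:
        buckets[slot(ts)].add(value)
    return buckets


def count_occurrences(points, slot):
    """For textual values (e.g. 'on'/'off'), count occurrences per slot."""
    counts = defaultdict(Counter)
    for ts, value in points:
        counts[slot(ts)][value] += 1
    return counts


temps = [(datetime(2019, 11, 25, 9), 20.0),
         (datetime(2019, 11, 25, 15), 24.0),
         (datetime(2019, 11, 26, 9), 19.0)]
by_day = aggregate(temps, lambda ts: ts.date())           # resolution: day

switches = [(datetime(2019, 11, 25, 9, 5), "on"),
            (datetime(2019, 11, 25, 9, 40), "off"),
            (datetime(2019, 11, 25, 10, 2), "on")]
by_hour = count_occurrences(switches, lambda ts: ts.replace(minute=0))  # resolution: hour
```

Computing the standard deviation from `sum` and `sum2` trades a little numerical precision for the ability to update each slot in constant time as new values arrive.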

- 34. Data schemas and pre-aggregation

- 35. API: get raw data

- 36. Pagination
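The raw-data and pagination slides are screenshots, so here is a hedged Python sketch of how a client might page through raw values with STH-Comet's v1 API, using the `hLimit` and `hOffset` parameters. The host (`localhost`), the port 8666 (STH-Comet's usual default) and the Room entity are assumptions, and `fake_fetch` stands in for a real HTTP call, which would also need the `Fiware-Service` and `Fiware-ServicePath` headers.

```python
from typing import Callable, List, Optional
from urllib.parse import quote, urlencode


def raw_data_url(base: str, entity_type: str, entity_id: str, attr: str,
                 h_limit: int, h_offset: int,
                 date_from: Optional[str] = None,
                 date_to: Optional[str] = None) -> str:
    """Build an STH-Comet v1 raw-data URL, paginated with hLimit/hOffset."""
    path = "/STH/v1/contextEntities/type/{}/id/{}/attributes/{}".format(
        quote(entity_type, safe=""), quote(entity_id, safe=""), quote(attr, safe=""))
    params = {"hLimit": h_limit, "hOffset": h_offset}
    if date_from:
        params["dateFrom"] = date_from
    if date_to:
        params["dateTo"] = date_to
    return f"{base}{path}?{urlencode(params)}"


def fetch_all(fetch_page: Callable[[int, int], List], page_size: int) -> List:
    """Call fetch_page(h_limit, h_offset) until a page comes back short."""
    values: List = []
    offset = 0
    while True:
        page = fetch_page(page_size, offset)
        values.extend(page)
        if len(page) < page_size:
            return values
        offset += page_size


# Stand-in for an HTTP call: pages through 7 stored values.
stored = list(range(7))
requested: List[str] = []


def fake_fetch(h_limit: int, h_offset: int) -> List[int]:
    requested.append(raw_data_url("http://localhost:8666", "Room", "Room1",
                                  "temperature", h_limit, h_offset))
    return stored[h_offset:h_offset + h_limit]


all_values = fetch_all(fake_fetch, page_size=3)
```

Stopping on the first short page avoids needing the total count up front; the cost is one extra empty request when the total is an exact multiple of the page size.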

- 37. Response

- 39. Response

- 41. Log level retrieval and update

- 42. References ● FIWARE Catalogue: https://www.fiware.org/developers/catalogue ● FIWARE STH-Comet: https://fiware-sth-comet.readthedocs.io/en/latest

- 43. QuantumLeap: Managing the Stream of Context Information History using Time Series DBs

- 44. Why QuantumLeap § Provide historical data support for NGSIv2 data § Leverage an efficient time-series database § Deploy and scale it easily in containerized environments

- 45. Data is no longer one-dimensional § The attribute (value): § Temperature is 20 § The history of the attribute (value in time): § Temperature is 20 on 19th May 8:59:01.020 AM CEST § Temperature is 21 on 19th May 9:12:03.045 AM CEST § The geo-localisation of the attribute (value in space): § Temperature is 20 in 41°51'16.8"N 12°28'15.0"E

- 46. The core of the solution: QuantumLeap

- 47. Architecture Overview (Diagram: Context Broker, QuantumLeap, CrateDB, Grafana, Spark and "My Analytic service", connected through the /v2/subscribe, /v2/notify and /v2/entities endpoints in numbered steps 1 to 3.)

- 48. Step 1: Extract (QuantumLeap in 4 steps) § QuantumLeap: § Needs to know where CrateDB is § CrateDB: § Needs a volume to persist data § Grafana: § Needs the `crate-datasource` (or `PostgreSQL`) plugin

- 49. Step 2: “Connect” to Orion Context Broker § Create a subscription in Orion CB for the entities you are interested in! § A) Do it directly, talking to Orion: § https://fiware-orion.readthedocs.io/en/master/user/walkthrough_apiv2/index.html#subscriptions § B) Or ask QuantumLeap to do it for you: § https://app.swaggerhub.com/apis/smartsdk/ngsi-tsdb/0.1.1#/input/reporter.reporter.subscribe § More info: § https://quantumleap.readthedocs.io/en/latest/user/#orion-subscription

- 50. Step 2: “Connect” to Orion Context Broker § `POST orion/v2/subscriptions/` § Note that the notification URL must be valid (resolvable) from inside the Orion container. § Note the inclusion of `dateModified` in the notification metadata. § Use the same insertion headers: § `FIWARE-Service` § `FIWARE-ServicePath`

```json
{
  "description": "Notify QuantumLeap on luminosity changes on any Lamp",
  "subject": {
    "entities": [
      { "idPattern": "Lamp.*" }
    ],
    "condition": {
      "attrs": [ "luminosity" ]
    }
  },
  "notification": {
    "http": {
      "url": "http://quantumleap:8668/v2/notify"
    },
    "attrs": [ "luminosity" ],
    "metadata": [ "dateCreated", "dateModified" ]
  },
  "throttling": 1
}
```
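The notes on this slide can be turned into a quick pre-flight check. The hypothetical helper below flags two common mistakes: a notification URL pointing to `localhost` (inside Orion's container that is Orion itself, not QuantumLeap) and a subscription that does not request `dateModified` metadata. It is a heuristic sketch, not an official validator.

```python
from urllib.parse import urlparse

# The subscription from this slide, as a Python dict.
slide_subscription = {
    "description": "Notify QuantumLeap on luminosity changes on any Lamp",
    "subject": {
        "entities": [{"idPattern": "Lamp.*"}],
        "condition": {"attrs": ["luminosity"]},
    },
    "notification": {
        "http": {"url": "http://quantumleap:8668/v2/notify"},
        "attrs": ["luminosity"],
        "metadata": ["dateCreated", "dateModified"],
    },
    "throttling": 1,
}


def check_ql_subscription(sub: dict) -> list:
    """Heuristic warnings for a QuantumLeap subscription (not an official validator)."""
    warnings = []
    notification = sub.get("notification", {})
    url = notification.get("http", {}).get("url", "")
    if urlparse(url).hostname in ("localhost", "127.0.0.1"):
        # Seen from inside Orion's container, localhost is Orion itself.
        warnings.append(f"{url} is probably not reachable from the Orion container")
    if "dateModified" not in notification.get("metadata", []):
        warnings.append("dateModified is not requested in notification metadata")
    return warnings


bad_subscription = {
    "subject": {"entities": [{"idPattern": "Lamp.*"}]},
    "notification": {"http": {"url": "http://localhost:8668/v2/notify"}},
}
```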

- 51. Step 3: Get your data § Check that you are sending data to Orion. § Check that Orion notifications did not fail: `GET orion/v2/subscriptions` § Get your data. § Nothing? § Check the QuantumLeap logs!

Request:

```shell
curl -X GET 'http://localhost:8668/v2/entities/Lamp:001/attrs/luminosity?limit=3' \
  -H 'Accept: application/json' \
  -H 'Fiware-Service: openiot' \
  -H 'Fiware-ServicePath: /'
```

Response:

```json
{
  "data": {
    "attrName": "luminosity",
    "entityId": "Lamp:001",
    "index": [
      "2018-10-29T14:27:26",
      "2018-10-29T14:27:28",
      "2018-10-29T14:27:29"
    ],
    "values": [2000, 1991, 1998]
  }
}
```
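Since QuantumLeap returns timestamps and values as two parallel arrays, `index` and `values`, a client typically zips them back together. This Python sketch parses the response shown on this slide; the fallback for a response without the `data` wrapper is an assumption about other QuantumLeap versions, not something the slide shows.

```python
import json
from datetime import datetime
from typing import List, Tuple

# Response body copied from this slide.
response_body = """
{"data": {"attrName": "luminosity", "entityId": "Lamp:001",
          "index": ["2018-10-29T14:27:26", "2018-10-29T14:27:28", "2018-10-29T14:27:29"],
          "values": [2000, 1991, 1998]}}
"""


def to_series(body: str) -> List[Tuple[datetime, float]]:
    """Pair each timestamp in 'index' with the value at the same position in 'values'."""
    payload = json.loads(body)
    # The slide's response wraps the fields in "data"; fall back to the top level
    # in case a deployment returns them unwrapped (an assumption, not verified here).
    data = payload.get("data", payload)
    return [(datetime.fromisoformat(ts), value)
            for ts, value in zip(data["index"], data["values"])]


series = to_series(response_body)
latest_time, latest_value = max(series)  # tuples compare by timestamp first
average = sum(v for _, v in series) / len(series)
```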

- 52. Step 3: Get your data

- 53. Step 4: Prepare your dashboards: 1. Create a Grafana data source for each entity type. 2. Create Grafana dashboards using your data source.
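Behind a Grafana panel there is simply a query against CrateDB. The sketch below builds one, assuming QuantumLeap's naming convention of one table per entity type, `et<entitytype>`, inside a schema `mt<service>` (as used in the FIWARE time-series tutorial), with `time_index` and `entity_id` columns; verify these names against your own deployment. `ql_table` and `dashboard_query` are hypothetical helpers.

```python
import re


def ql_table(service: str, entity_type: str) -> str:
    """Assumed QuantumLeap naming: schema 'mt' + service, table 'et' + entity type."""
    return f'"mt{service.lower()}"."et{entity_type.lower()}"'


def dashboard_query(service: str, entity_type: str, entity_id: str,
                    attr: str, limit: int = 100) -> str:
    """SQL a Grafana panel could run against CrateDB for one entity's attribute."""
    if not re.fullmatch(r"[A-Za-z_][A-Za-z0-9_]*", attr):
        raise ValueError(f"unexpected attribute name: {attr!r}")
    safe_id = entity_id.replace("'", "''")  # escape single quotes in the SQL literal
    return (f"SELECT time_index AS time, {attr.lower()} "
            f"FROM {ql_table(service, entity_type)} "
            f"WHERE entity_id = '{safe_id}' "
            f"ORDER BY time_index ASC LIMIT {int(limit)}")


query = dashboard_query("openiot", "Lamp", "Lamp:001", "luminosity", limit=3)
```

Since each entity type maps to its own table, this also explains why the slide suggests one Grafana data source per entity type.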

- 54. References ● FIWARE Catalogue: https://www.fiware.org/developers/catalogue ● Tutorial: https://fiware-tutorials.readthedocs.io/en/latest/time-series-data/index.html ● Code: https://github.com/smartsdk/ngsi-timeseries-api ● Docs: https://quantumleap.readthedocs.io/en/latest ● API: https://app.swaggerhub.com/apis/smartsdk/ngsi-tsdb
