End-to-end sequence labeling via bi-directional LSTM-CNNs-CRF

- 1. State-of-the-Art Named Entity Recognition Framework
  Anirudh Ganesh, Jayavardhan P Reddy
  Speech and Language Processing (CSE 5525), Spring 2018 Final Presentation
  Prof. Eric Fosler-Lussier

- 2. Introduction
  ● The main goal of this tutorial is to develop a state-of-the-art Named Entity Recognizer.
  ● Along the way, we want the student to understand and implement a deep learning approach to a given problem.
  ● The tutorial is also structured to give the student hands-on experience in replicating a journal publication, given the data and the model used.

- 3. Importance
  ● Deep learning is gaining widespread traction in most modern machine learning applications, especially NLP.
  ● Replicating the results of deep learning research publications is critical for accelerating research.
  ● Replicability of deep learning publications and studies is the point we most wanted to tackle, because we feel there is a severe shortage of such work.

- 4. Resources used
  ● PyTorch
  ● NumPy
  ● Jupyter Notebook
  ● Python 3.5
  ● CoNLL 2003 dataset for NER
  ● Paper: End-to-end Sequence Labeling via Bi-directional LSTM-CNNs-CRF by Xuezhe Ma and Eduard Hovy; https://arxiv.org/abs/1603.01354
  ● F1 score: 91.21

- 5. Main parts of the architecture
  ● Data preparation
  ● Convolutional Neural Network (CNN) encoder for character-level representation
  ● Bi-directional Long Short-Term Memory (LSTM) for word-level encoding
  ● Conditional Random Field (CRF) for output decoding

- 6. Data Preparation
  The paper* uses the English data from the CoNLL 2003 shared task.
  ● Tag update: The authors use the BIOES tagging scheme (Beginning, Inside, Outside, End, Single or unit length) rather than BIO, which is the scheme used by the dataset. So we first convert the tags in the data from BIO to BIOES.
  ● Mappings: Create word-to-id, tag-to-id, and character-to-id mappings.
  * “End-to-end Sequence Labeling via Bi-directional LSTM-CNNs-CRF”, Xuezhe Ma and Eduard H. Hovy, CoRR abs/1603.01354 (2016)
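
The two preparation steps above can be sketched in plain Python. This is a minimal illustration, not the tutorial's actual code: the function names `bio_to_bioes` and `build_mapping` are hypothetical, and the conversion assumes well-formed BIO input in which every entity starts with a `B-` tag.

```python
from collections import Counter


def bio_to_bioes(tags):
    """Convert one sentence's BIO tags to BIOES (assumes every entity starts with B-)."""
    bioes = []
    for i, tag in enumerate(tags):
        if tag == "O":
            bioes.append(tag)
            continue
        prefix, label = tag.split("-", 1)
        next_tag = tags[i + 1] if i + 1 < len(tags) else "O"
        # The entity continues only if the next tag is I- with the same label.
        continues = next_tag == "I-" + label
        if prefix == "B":
            bioes.append(tag if continues else "S-" + label)
        elif prefix == "I":
            bioes.append(tag if continues else "E-" + label)
        else:
            raise ValueError(f"unexpected tag: {tag}")
    return bioes


def build_mapping(items, specials=()):
    """Map items to integer ids: special symbols first, then most frequent first."""
    counts = Counter(items)
    ordered = list(specials) + [x for x, _ in counts.most_common() if x not in specials]
    return {item: idx for idx, item in enumerate(ordered)}


# Toy usage on a single sentence.
words = ["John", "Smith", "visited", "Paris", "."]
tags = bio_to_bioes(["B-PER", "I-PER", "O", "B-LOC", "O"])
word_to_id = build_mapping(words, specials=("<UNK>",))
tag_to_id = build_mapping(tags)
char_to_id = build_mapping("".join(words), specials=("<PAD>",))
```

The same `build_mapping` helper serves words, tags, and characters, since each mapping is just a vocabulary sorted by frequency with optional reserved symbols at the front.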

- 7. Word Embedding
  ● Using pre-trained embeddings: The paper uses 100-dimensional Global Vectors (GloVe) trained on the Wikipedia 2014 + Gigaword 5 corpus, which contains 6 billion words.
  ● Word embedding mapping: Create a mapping from each word in the vocabulary to its word embedding.
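
One way to build the word-to-embedding mapping is sketched below with NumPy. The helper names `load_glove` and `build_embedding_matrix` are hypothetical, and two choices are assumptions rather than something the slides state: words missing from GloVe get small uniform random vectors, and lookups fall back to the lowercased word.

```python
import numpy as np


def load_glove(lines, dim):
    """Parse GloVe text format: one 'word v1 ... v_dim' entry per line."""
    vectors = {}
    for line in lines:
        parts = line.rstrip().split(" ")
        if len(parts) != dim + 1:
            continue  # skip malformed lines
        vectors[parts[0]] = np.asarray(parts[1:], dtype=np.float32)
    return vectors


def build_embedding_matrix(word_to_id, vectors, dim, seed=0):
    """Return a (vocab_size, dim) matrix whose row i is the vector for word id i."""
    rng = np.random.default_rng(seed)
    bound = np.sqrt(3.0 / dim)  # assumed initialisation range for unseen words
    matrix = rng.uniform(-bound, bound, size=(len(word_to_id), dim)).astype(np.float32)
    for word, idx in word_to_id.items():
        vec = vectors.get(word)
        if vec is None:
            vec = vectors.get(word.lower())  # assumed fallback to the lowercased form
        if vec is not None:
            matrix[idx] = vec
    return matrix


# Toy usage with three in-memory lines instead of a real GloVe file.
glove = load_glove(["the 0.1 0.2 0.3", "cat 1.0 2.0 3.0", "bad 1.0"], dim=3)
matrix = build_embedding_matrix({"<UNK>": 0, "The": 1, "cat": 2}, glove, dim=3)
```

With a real GloVe file, `lines` would be an open text file handle, and the resulting matrix could initialise the model's word embedding layer.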

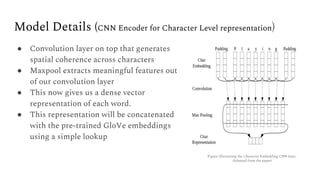

- 8. Model Details (CNN Encoder for Character-Level Representation)
  ● A convolution layer runs over the character embeddings of each word and captures local patterns across neighboring characters.
  ● Max pooling keeps the strongest response of each convolution filter, extracting the most meaningful features.
  ● This gives us a dense vector representation of each word.
  ● This representation is concatenated with the word's pre-trained GloVe embedding, which is obtained by a simple lookup.
  Figure: the character-level CNN layer (adapted from the paper).
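
A minimal PyTorch sketch of such a character encoder follows. The class name `CharCNN` and the default sizes (30-dimensional character embeddings, 30 filters, window of 3) are illustrative assumptions, and padded positions are not masked before pooling.

```python
import torch
import torch.nn as nn


class CharCNN(nn.Module):
    """Embed characters, convolve over them, and max-pool to one vector per word."""

    def __init__(self, n_chars, char_dim=30, n_filters=30, kernel_size=3):
        super().__init__()
        self.embed = nn.Embedding(n_chars, char_dim, padding_idx=0)
        self.conv = nn.Conv1d(char_dim, n_filters, kernel_size, padding=kernel_size // 2)

    def forward(self, char_ids):
        # char_ids: (n_words, max_word_len), padded with id 0
        x = self.embed(char_ids)           # (n_words, max_word_len, char_dim)
        x = x.transpose(1, 2)              # (n_words, char_dim, max_word_len)
        x = self.conv(x)                   # (n_words, n_filters, max_word_len)
        return torch.max(x, dim=2)[0]      # (n_words, n_filters)


# Toy usage: two words of up to five characters each.
torch.manual_seed(0)
encoder = CharCNN(n_chars=20)
char_ids = torch.tensor([[1, 2, 3, 0, 0], [4, 5, 6, 7, 8]])
char_vectors = encoder(char_ids)
word_vectors = torch.zeros(2, 100)         # stand-in for the GloVe lookup
combined = torch.cat([word_vectors, char_vectors], dim=1)
```

The concatenated tensor holds one row per word, with the 100 GloVe dimensions followed by the 30 character-level features.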

- 9. Model Details (Bi-LSTM for Word-Level Encoding)
  ● We feed the word representations generated by the previous layer to a bi-directional LSTM.
  ● The forward layer takes in a sequence of word vectors and, at each position, generates a new vector based on what it has seen so far in the forward direction.
  ● This vector can be thought of as a summary of all the words seen up to that point.
  ● The backward layer does the same in the opposite direction.
  Figure: the sequence-labeling LSTM layer (adapted from the paper).
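
The word-level encoder can be sketched in PyTorch as below. The class name `BiLSTMEncoder`, the hidden size, and the final linear projection to per-tag scores are assumptions made for illustration; the tag count of 17 corresponds to BIOES over four entity types plus `O`.

```python
import torch
import torch.nn as nn


class BiLSTMEncoder(nn.Module):
    """Run a bi-directional LSTM over word vectors and score each tag per word."""

    def __init__(self, input_dim, hidden_dim, n_tags):
        super().__init__()
        self.lstm = nn.LSTM(input_dim, hidden_dim, batch_first=True, bidirectional=True)
        self.to_tags = nn.Linear(2 * hidden_dim, n_tags)

    def forward(self, word_vectors):
        # word_vectors: (batch, seq_len, input_dim)
        # states concatenates the forward and backward summaries at every position.
        states, _ = self.lstm(word_vectors)   # (batch, seq_len, 2 * hidden_dim)
        return self.to_tags(states)           # (batch, seq_len, n_tags)


# Toy usage: a batch of 2 sentences, 7 words each, 130-dimensional inputs.
torch.manual_seed(0)
encoder = BiLSTMEncoder(input_dim=130, hidden_dim=200, n_tags=17)
tag_scores = encoder(torch.randn(2, 7, 130))
```

Each position in `tag_scores` is a score vector over tags, which is the input a CRF layer would consume.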

- 10. Model Details (CRF Layer)
  ● Even though the bi-LSTM captures information from the context, a per-word tagging decision does not by itself take the neighboring tags into account.
  ● NER is heavily influenced by neighboring tagging decisions.
  ● This is why we apply a CRF rather than a traditional per-word softmax.
  ● Given a sequence of words and a sequence of score vectors, a linear-chain CRF defines a global score for an entire tag sequence, which yields sentence-level likelihoods and lets us find the optimal tags.
  Figure: Conditional Random Fields (CRF) for sequence tagging (adapted from the paper).
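
To make the global score concrete, here is a small pure-Python sketch of a linear-chain CRF's sequence score and log-likelihood. It assumes the score is the sum of per-word tag scores (`emissions`) and tag-to-tag scores (`transitions`), and it omits start and end transitions for brevity; all names are hypothetical.

```python
import math
from itertools import product


def logsumexp(values):
    """Numerically stable log(sum(exp(v) for v in values))."""
    m = max(values)
    return m + math.log(sum(math.exp(v - m) for v in values))


def sequence_score(emissions, transitions, tags):
    """Global score: per-word tag scores plus tag-to-tag transition scores."""
    score = emissions[0][tags[0]]
    for t in range(1, len(tags)):
        score += transitions[tags[t - 1]][tags[t]] + emissions[t][tags[t]]
    return score


def log_partition(emissions, transitions):
    """Log of the summed exp(score) over all tag sequences (forward algorithm)."""
    n_tags = len(transitions)
    alpha = list(emissions[0])
    for t in range(1, len(emissions)):
        alpha = [
            emissions[t][j] + logsumexp([alpha[i] + transitions[i][j] for i in range(n_tags)])
            for j in range(n_tags)
        ]
    return logsumexp(alpha)


def log_likelihood(emissions, transitions, tags):
    """Sentence-level log-probability of one tag sequence."""
    return sequence_score(emissions, transitions, tags) - log_partition(emissions, transitions)


# Toy check with 3 words and 2 tags: probabilities over all 8 sequences sum to 1.
emissions = [[1.0, 0.5], [0.2, 1.5], [0.3, 0.1]]
transitions = [[0.5, -1.0], [0.0, 0.8]]
total = sum(
    math.exp(log_likelihood(emissions, transitions, list(y)))
    for y in product(range(2), repeat=3)
)
```

The forward algorithm computes the normaliser in time linear in sentence length, rather than enumerating every tag sequence as the toy check does.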

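- 11. Computing Tags
  ● Recall that the CRF computes a conditional probability. Let y be a tag sequence and x an input sequence of words. Writing s(x, y) for the global score of a tag sequence, the CRF defines $p(y \mid x) = \exp(s(x, y)) / \sum_{y'} \exp(s(x, y'))$, and training maximizes the log-likelihood $\log p(y \mid x)$ of the correct tags.
  ● At prediction time, Viterbi decoding applies dynamic programming to choose the highest-scoring tag sequence.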
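
Viterbi decoding can be sketched in a few lines of pure Python. As an assumption for this illustration, the score of a tag sequence is the sum of per-word tag scores (`emissions`) and tag-to-tag scores (`transitions`), without start or end transitions; the function name is hypothetical.

```python
def viterbi_decode(emissions, transitions):
    """Return the highest-scoring tag sequence and its score."""
    n_tags = len(transitions)
    best = list(emissions[0])      # best[j]: score of the best path ending in tag j
    backpointers = []
    for t in range(1, len(emissions)):
        new_best, pointers = [], []
        for j in range(n_tags):
            # Best previous tag to transition from when the current tag is j.
            prev = max(range(n_tags), key=lambda i: best[i] + transitions[i][j])
            pointers.append(prev)
            new_best.append(best[prev] + transitions[prev][j] + emissions[t][j])
        best = new_best
        backpointers.append(pointers)
    # Follow the back-pointers from the best final tag to recover the path.
    last = max(range(n_tags), key=lambda j: best[j])
    path = [last]
    for pointers in reversed(backpointers):
        path.append(pointers[path[-1]])
    path.reverse()
    return path, best[last]


# Toy usage with 3 words and 2 tags.
emissions = [[1.0, 0.5], [0.2, 1.5], [0.3, 0.1]]
transitions = [[0.5, -1.0], [0.0, 0.8]]
path, score = viterbi_decode(emissions, transitions)
# path is [1, 1, 1], with a score of about 3.7
```

Only the best score per tag is kept at each step, so the cost grows linearly with sentence length and quadratically with the number of tags.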

- 12. Closing Comments
  Sample output from the given model

- 13. Experience of presenting to friends
  ● Initial few drafts
    ○ Too much time spent preprocessing data
    ○ PyTorch API
  ● Changes
    ○ Detailed comments explaining each step and the intuition behind it
    ○ Detailed comments for the PyTorch functions
  ● Final draft
    ○ Takes a little longer than the intended time if the student doesn’t have sufficient background in deep learning and PyTorch.

- 14. Thank You!