Face Detection and Expression Using OpenCV with Python

- 1. CSE 185 Introduction to Computer Vision: Face Detection

- 2. Face Detection: A Solved Problem? • Recent work has demonstrated excellent results – fast, multi-pose, robust to partial occlusion, … • So, is face detection a solved problem? • No, not quite… Omron’s face detector [Liu et al. 04]

- 3. Outline • Objective – Survey major face detection works – Address “how” and “why” questions – Pros and cons of detection methods – Future research directions • Updated tutorial material: http://faculty.ucmerced.edu/mhyang/face-detection-survey.html

- 4. Face Detection • Identify and locate human faces in an image regardless of their – position – scale – in-plane rotation – orientation – pose (out-of-plane rotation) – and illumination Where are the faces, if any?

- 5. Why Is Face Detection Important? • First step for any fully automatic face recognition system • First step in many surveillance systems • Face is a highly non-rigid object • Lots of applications • A step towards Automatic Target Recognition (ATR) or generic object detection/recognition

- 6. In One Thumbnail Face Image • Consider a thumbnail $19 \times 19$ face pattern • $256^{361}$ possible combinations of gray values – $256^{361} = 2^{8 \times 361} = 2^{2888}$ • Total world population (as of 2004) – $6{,}400{,}000{,}000 \approx 2^{32}$ • $2^{2888} / 2^{32} = 2^{2856}$ times more than the world population! • Extremely high-dimensional space!
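A quick Python check of the slide's arithmetic. It is only a sanity check; the population figure is the 2004 value quoted on the slide.

```python
import math

side, gray_levels = 19, 256              # 19 x 19 thumbnail, 8-bit gray values
pixels = side * side                     # 361 pixels
# 256**361 is an exact power of two, so bit_length() - 1 gives log2 exactly.
log2_patterns = (gray_levels ** pixels).bit_length() - 1   # 8 * 361 = 2888

population = 6_400_000_000               # world population as of 2004 (from the slide)
log2_population = math.log2(population)  # roughly 32.6, i.e. about 2**32

print(pixels, log2_patterns)             # 361 2888
print(log2_patterns - 32)                # 2856, using the 2**32 approximation
```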

- 7. Why Is Face Detection Difficult? • Pose (Out-of-Plane Rotation): frontal, 45-degree, profile, upside down • Presence or absence of structural components: beards, mustaches, and glasses • Facial expression: face appearance is directly affected by a person's facial expression • Occlusion: faces may be partially occluded by other objects • Orientation (In-Plane Rotation): face appearance varies directly with rotation about the camera's optical axis • Imaging conditions: lighting (spectra, source distribution and intensity) and camera characteristics (sensor response, gain control, lenses), resolution

- 8. Related Problems • Face localization: – Aims to determine the image position of a single face – A simplified detection problem with the assumption that an input image contains only one face • Facial feature extraction: – To detect the presence and location of features such as eyes, nose, nostrils, eyebrows, mouth, lips, ears, etc. – Usually assumes that there is only one face in an image • Face recognition (identification) • Facial expression recognition • Human pose estimation and tracking

- 9. Face Detection and Object Recognition • Detection: concerned with a category of objects • Recognition: concerned with individual identity • Face is a highly non-rigid object • Many methods can be applied to other object detection/recognition problems (figures: car detection, pedestrian detection)

- 10. Pedestrian Detection: Recent Results

- 11. Human Detection and Tracking • Often used as a salient cue for human detection • Used as a strong cue to search for other body parts • Used to detect new objects and re-initialize a tracker once it fails [Lee and Cohen 04] [Okuma et al. 04]

- 12. Research Issues • Representation: How to describe a typical face? • Scale: How to deal with faces of different sizes? • Search strategy: How to spot these faces? • Speed: How to speed up the process? • Precision: How to locate the faces precisely? • Post-processing: How to combine detection results?

- 13. Face Detector: Ingredients • Target application domain: single image, video • Representation: holistic, feature-based, etc. • Preprocessing: histogram equalization, etc. • Cues: color, motion, depth, voice, etc. • Search strategy: exhaustive, greedy, focus of attention, etc. • Classifier design: ensemble, cascade • Post-processing: combining detection results

- 14. In This Tutorial (figure: taxonomy of face detection settings by input type, video or single image, each in color or gray scale; by face variation, upright frontal, pose, rotation, occlusion; and by extra cues, motion, depth, voice) • Focus on detecting – upright, frontal faces – in a single gray-scale image – with decent resolution – under good lighting conditions • See [Sinha 01] for detecting faces in low-resolution images

- 15. Methods to Detect/Locate Faces • Knowledge-based methods: – Encode human knowledge of what constitutes a typical face (usually, the relationships between facial features) • Feature invariant approaches: – Aim to find structural features of a face that exist even when the pose, viewpoint, or lighting conditions vary • Template matching methods: – Several standard patterns are stored to describe the face as a whole or the facial features separately • Appearance-based methods: – The models (or templates) are learned from a set of training images that capture the representative variability of facial appearance • Many methods can be categorized in several ways

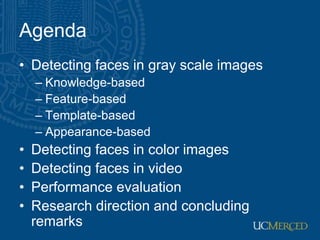

- 16. Agenda • Detecting faces in gray scale images – Knowledge-based – Feature-based – Template-based – Appearance-based • Detecting faces in color images • Detecting faces in video • Performance evaluation • Research direction and concluding remarks

- 17. Knowledge-Based Methods • Top-down approach: Represent a face using a set of human-coded rules • Example: – The center part of face has uniform intensity values – The difference between the average intensity values of the center part and the upper part is significant – A face often appears with two eyes that are symmetric to each other, a nose and a mouth • Use these rules to guide the search process

- 18. Knowledge-Based Method: [Yang and Huang 94] • Multi-resolution focus-of-attention approach • Level 1 (lowest resolution): apply the rule “the center part of the face has 4 cells with a basically uniform intensity” to search for candidates • Level 2: local histogram equalization followed by edge detection • Level 3: search for eye and mouth features for validation

- 19. Knowledge-Based Method: [Kotropoulos & Pitas 94] • Horizontal/vertical projections to search for candidates: for an $m \times n$ image $I(x, y)$, $HI(x) = \sum_{y=1}^{n} I(x, y)$ and $VI(y) = \sum_{x=1}^{m} I(x, y)$ • Search eyebrows/eyes, nostrils/nose for validation • Difficult to detect multiple people or faces in complex backgrounds [Kotropoulos & Pitas 94]
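A minimal Python sketch of the two projection profiles, assuming the image is a list of rows indexed `image[y][x]`. The `local_minima` helper is illustrative only: dark horizontal bands such as the eyes and mouth tend to appear as dips in VI, and the sides of the head as abrupt changes in HI, but the exact validation rules of [Kotropoulos & Pitas 94] are not reproduced here.

```python
def projections(image):
    """Return (HI, VI) for a gray-scale image given as a list of rows.

    HI[x] sums column x over all rows y; VI[y] sums row y over all columns x.
    """
    n_rows, n_cols = len(image), len(image[0])
    hi = [sum(image[y][x] for y in range(n_rows)) for x in range(n_cols)]
    vi = [sum(row) for row in image]
    return hi, vi


def local_minima(profile):
    """Indices strictly lower than both neighbours (candidate dark bands)."""
    return [i for i in range(1, len(profile) - 1)
            if profile[i] < profile[i - 1] and profile[i] < profile[i + 1]]


# Toy 5 x 4 "face": dark rows 1 (eyes) and 3 (mouth) on a bright background
image = [
    [9, 9, 9, 9],
    [9, 2, 2, 9],
    [9, 9, 9, 9],
    [9, 3, 3, 9],
    [9, 9, 9, 9],
]
hi, vi = projections(image)
print(vi)                # [36, 22, 36, 24, 36]
print(local_minima(vi))  # [1, 3]
```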

- 20. Knowledge-based Methods: Summary • Pros: – Easy to come up with simple rules to describe the features of a face and their relationships – Based on the coded rules, facial features in an input image are extracted first, and face candidates are identified – Works well for face localization in uncluttered backgrounds • Cons: – Difficult to translate human knowledge into rules precisely: detailed rules fail to detect faces and general rules may find many false positives – Difficult to extend this approach to detect faces in different poses: implausible to enumerate all the possible cases

- 22. Feature-Based Methods • Bottom-up approach: Detect facial features (eyes, nose, mouth, etc) first • Facial features: edge, intensity, shape, texture, color, etc • Aim to detect invariant features • Group features into candidates and verify them

- 23. Random Graph Matching [Leung et al. 95] • Formulate as a problem to find the correct geometric arrangement of facial features • Facial features are defined by the average responses of multi-orientation, multi-scale Gaussian derivative filters • Learn the configuration of features with Gaussian distribution of mutual distance between facial features • Convolve an image with Gaussian filters to locate candidate features based on similarity • Random graph matching among the candidates to locate faces

- 24. Feature Grouping [Yow and Cipolla 90] • Apply a 2nd derivative Gaussian filter to search for interest points • Group the edges near interest points into regions • Each feature and grouping is evaluated within a Bayesian network • Handle a few poses • See also [Amit et al. 97] for efficient hierarchical (focus of attention) feature-based method Face model and component Model facial feature as pair of edges Apply interest point operator and edge detector to search for features Using Bayesian network to combine evidence

- 25. Feature-Based Methods: Summary • Pros: – Features are invariant to pose and orientation change • Cons: – Difficult to locate facial features due to various corruptions (illumination, noise, occlusion) – Difficult to detect features in complex backgrounds

- 27. Template Matching Methods • Store a template – Predefined: based on edges or regions – Deformable: based on facial contours (e.g., Snakes) • Templates are hand-coded (not learned) • Use correlation to locate faces

- 28. Face Template • Use relative pair-wise ratios of the brightness of facial regions (14 × 16 pixels): the eyes are usually darker than the surrounding face [Sinha 94] • Use average area intensity values rather than absolute pixel values • See also Point Distribution Model (PDM) [Lanitis et al. 95] (figures: Ratio Template [Sinha 94]; average shape [Lanitis et al. 95])

- 29. Template-Based Methods: Summary • Pros: – Simple • Cons: – Templates need to be initialized near the face images – Difficult to enumerate templates for different poses (similar to knowledge-based methods)

- 31. Appearance-Based Methods • Train a classifier using positive (and usually negative) examples of faces • Representation • Preprocessing • Train a classifier • Search strategy • Post-processing • View-based

- 32. Appearance-Based Methods: Classifiers • Neural network: Multilayer Perceptrons • Principal Component Analysis (PCA), Factor Analysis • Support vector machine (SVM) • Mixture of PCA, Mixture of factor analyzers • Distribution-based method • Naïve Bayes classifier • Hidden Markov model • Sparse network of winnows (SNoW) • Kullback relative information • Inductive learning: C4.5 • Adaboost • …

- 33. Representation • Holistic: Each image is raster scanned and represented by a vector of intensity values • Block-based: Decompose each face image into a set of overlapping or non-overlapping blocks – At multiple scales – Further processed with vector quantization, Principal Component Analysis, etc.

- 34. Face and Non-Face Exemplars • Positive examples: – Get as much variation as possible – Manually crop and normalize each face image into a standard size (e.g., 19 × 19 pixels) – Create virtual examples [Sung and Poggio 94] • Negative examples: – Fuzzy idea – Any images that do not contain faces – A large image subspace – Bootstrapping [Sung and Poggio 94]

- 35. Distribution-Based Method [Sung & Poggio 94] • Masking: reduce the unwanted background noise in a face pattern • Illumination gradient correction: find the best-fit brightness plane and subtract it from the pattern to reduce heavy shadows caused by extreme lighting angles • Histogram equalization: compensates for imaging effects due to changes in illumination and different camera input gains
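A minimal sketch of the illumination gradient correction and histogram equalization steps, in plain Python on a list-of-rows image. The masking step is omitted, and the function names are illustrative rather than taken from [Sung & Poggio 94]. The plane is fitted by ordinary least squares, solving the 3 × 3 normal equations with Cramer's rule.

```python
def _det3(m):
    """Determinant of a 3 x 3 matrix given as nested lists."""
    return (m[0][0] * (m[1][1] * m[2][2] - m[1][2] * m[2][1])
            - m[0][1] * (m[1][0] * m[2][2] - m[1][2] * m[2][0])
            + m[0][2] * (m[1][0] * m[2][1] - m[1][1] * m[2][0]))


def fit_plane(image):
    """Least-squares fit of I(x, y) ~ a*x + b*y + c; returns (a, b, c)."""
    # Normal equations (A^T A) p = A^T I, where each row of A is [x, y, 1].
    ata = [[0.0] * 3 for _ in range(3)]
    atb = [0.0] * 3
    for y, row in enumerate(image):
        for x, v in enumerate(row):
            basis = (x, y, 1.0)
            for i in range(3):
                atb[i] += basis[i] * v
                for j in range(3):
                    ata[i][j] += basis[i] * basis[j]
    d = _det3(ata)
    coeffs = []
    for col in range(3):                 # Cramer's rule, one unknown at a time
        m = [r[:] for r in ata]
        for r in range(3):
            m[r][col] = atb[r]
        coeffs.append(_det3(m) / d)
    return tuple(coeffs)


def remove_gradient(image):
    """Subtract the best-fit brightness plane to flatten a linear lighting gradient."""
    a, b, c = fit_plane(image)
    return [[v - (a * x + b * y + c) for x, v in enumerate(row)]
            for y, row in enumerate(image)]


def equalize(image, levels=256):
    """Histogram equalization: map each value through the normalized cumulative histogram."""
    flat = sorted(v for row in image for v in row)
    n = len(flat)
    cdf = {}
    for rank, v in enumerate(flat, start=1):
        cdf[v] = rank                    # last rank wins: number of values <= v
    cdf_min = cdf[flat[0]]
    if n == cdf_min:                     # constant image: nothing to spread
        return [[0] * len(row) for row in image]
    scale = (levels - 1) / (n - cdf_min)
    return [[round((cdf[v] - cdf_min) * scale) for v in row] for row in image]


print(equalize([[0, 0, 0], [1, 2, 3]]))  # [[0, 0, 0], [85, 170, 255]]
```

In the full pipeline the output of `remove_gradient` would be fed to `equalize`; the two are kept separate here so each step can be inspected on its own.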

- 36. Creating Virtual Positive Examples • Simple and very effective method • Randomly mirror, rotate, translate and scale face samples by small amounts • Increase number of training examples • Less sensitive to alignment error Randomly mirrored, rotated translated, and scaled faces [Sung & Poggio 94]

- 37. Distribution of Face/Non-face Pattern • Cluster face and non-face samples into a few (e.g., 6) clusters using the k-means algorithm • Each cluster is modeled by a multidimensional Gaussian with a centroid and covariance matrix • Approximate each Gaussian covariance with a subspace (i.e., using the largest eigenvectors) • See [Moghaddam and Pentland 97] on distribution-based learning using Gaussian mixture model • Gaussian model: $p(\mathbf{x}) = \frac{1}{(2\pi)^{d/2} \lvert\Sigma\rvert^{1/2}} \exp\left(-\frac{1}{2} (\mathbf{x} - \boldsymbol{\mu})^{T} \Sigma^{-1} (\mathbf{x} - \boldsymbol{\mu})\right)$, where $\mathbf{x}$ denotes face or non-face samples [Sung & Poggio 94]

- 38. Distance Metrics [Sung & Poggio 94] • Compute distances of a sample to all the face and non-face clusters • Each distance measure has two parts: – Within-subspace distance ($D_1$): Mahalanobis distance of the projected sample to the cluster center, $D_1 = \frac{1}{2}\left(d \ln 2\pi + \ln\lvert\Sigma\rvert + (\mathbf{x} - \boldsymbol{\mu})^{T} \Sigma^{-1} (\mathbf{x} - \boldsymbol{\mu})\right)$ – Distance to the subspace ($D_2$): distance of the sample to the subspace, $D_2 = \lVert (I - E_{75} E_{75}^{T})(\mathbf{x} - \boldsymbol{\mu}) \rVert^{2}$, where $E_{75}$ holds the 75 largest eigenvectors • Feature vector: each face/non-face sample is represented by a vector of these distance measurements (6 face clusters + 6 non-face clusters, 2 distance values per cluster: 24 measurements) • Train a multilayer perceptron (MLP) using the feature vectors for face detection [Sung and Poggio 94]
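A sketch of the two distances for a single cluster, in plain Python. It assumes the cluster's top eigenvectors (`basis`, orthonormal) and their eigenvalues (`eigvals`) have already been computed, and it evaluates the Mahalanobis term inside that subspace, so the subspace dimension k plays the role of d. The function names are illustrative.

```python
import math


def cluster_distances(x, mean, basis, eigvals):
    """Return (D1, D2) from sample x to one Gaussian cluster.

    basis   : orthonormal eigenvectors spanning the cluster subspace (E)
    eigvals : matching eigenvalues (variance along each eigenvector)
    D1 is the Gaussian negative log-likelihood of the projection inside the
    subspace; D2 is the squared Euclidean distance from x to the subspace.
    """
    diff = [xi - mi for xi, mi in zip(x, mean)]
    coeffs = [sum(e_j * d_j for e_j, d_j in zip(e, diff)) for e in basis]  # E^T (x - mu)
    k = len(basis)
    d1 = 0.5 * (k * math.log(2 * math.pi)
                + sum(math.log(lam) for lam in eigvals)
                + sum(c * c / lam for c, lam in zip(coeffs, eigvals)))
    # Reconstruction E E^T (x - mu); the residual is (I - E E^T)(x - mu)
    recon = [sum(c * e[j] for c, e in zip(coeffs, basis)) for j in range(len(diff))]
    d2 = sum((d - r) ** 2 for d, r in zip(diff, recon))
    return d1, d2


def feature_vector(x, clusters):
    """Concatenate (D1, D2) over all clusters: 12 clusters give 24 measurements."""
    feats = []
    for mean, basis, eigvals in clusters:
        feats.extend(cluster_distances(x, mean, basis, eigvals))
    return feats
```

The resulting 24-element vector is what the MLP on the slide would consume.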

- 39. Bootstrapping [Sung and Poggio 94] 1. Start with a small set of non-face examples in the training set 2. Train an MLP classifier with the current training set 3. Run the learned face detector on a sequence of random images 4. Collect all the non-face patterns that the current system wrongly classifies as faces (i.e., false positives) 5. Add these non-face patterns to the training set 6. Go to Step 2, or stop if satisfied • Greatly improves system performance (figure: false positive detections on a test image)
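The bootstrapping loop can be sketched generically in Python. Here `train` and `predict` are hypothetical callables standing in for the MLP, and `scenery_patches` are windows cut from images assumed to contain no faces, so every detection among them is a false positive.

```python
def bootstrap(faces, nonfaces, scenery_patches, train, predict, max_rounds=5):
    """Sung-and-Poggio-style bootstrapping (sketch).

    faces, nonfaces : initial positive / negative training patterns
    scenery_patches : patterns from face-free images
    train(pos, neg) : returns a classifier (hypothetical callable)
    predict(clf, p) : True if the classifier calls pattern p a face
    """
    negatives = list(nonfaces)                     # step 1: small negative set
    clf = train(faces, negatives)                  # step 2
    for _ in range(max_rounds):
        # steps 3-4: run the detector and collect new false positives
        false_positives = [p for p in scenery_patches
                           if predict(clf, p) and p not in negatives]
        if not false_positives:                    # step 6: stop if satisfied
            break
        negatives.extend(false_positives)          # step 5
        clf = train(faces, negatives)              # back to step 2
    return clf, negatives


# Toy 1-D example: the "classifier" is a threshold halfway between classes.
def train_threshold(pos, neg):
    return (max(neg) + min(pos)) / 2


def above_threshold(threshold, p):
    return p > threshold


clf, negatives = bootstrap([8, 9, 10], [0], [1, 2, 6, 7],
                           train_threshold, above_threshold)
print(clf, sorted(negatives))  # 7.5 [0, 6, 7]
```

In the toy run the first threshold (4.0) wrongly accepts 6 and 7; adding them as negatives moves the threshold to 7.5, after which no scenery pattern is accepted.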

- 40. Search over Space and Scale • Scan an input image at one-pixel increments horizontally and vertically • Downsample the input image by a factor of 1.2 and continue to search

- 41. Continue to Search over Space and Scale Continue to downsample the input image and search until the image size is too small
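A minimal sketch of this exhaustive scan over space and scale, using the 19 × 19 window and the 1.2 downsampling factor from these slides. It only yields window coordinates; resampling the image and running a classifier on each window are left out.

```python
def scan_windows(width, height, window=19, scale_step=1.2):
    """Yield (x, y, scale) for every window position over an image pyramid.

    (x, y) is in pixels of the downsampled level; multiply by `scale`
    to map a window back to the original image.
    """
    scale = 1.0
    while True:
        w, h = int(width / scale), int(height / scale)
        if w < window or h < window:         # image too small: stop
            return
        for y in range(h - window + 1):      # one-pixel vertical steps
            for x in range(w - window + 1):  # one-pixel horizontal steps
                yield x, y, scale
        scale *= scale_step                  # downsample by a factor of 1.2


levels = {s for _, _, s in scan_windows(100, 100)}
print(len(levels))  # 10 pyramid levels for a 100 x 100 image
```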

- 42. Experimental Results: [Sung and Poggio 94] • A face can have multiple detections, since it may be detected – at different scales – at a slightly displaced window location • Able to detect upright frontal faces

- 43. Neural Network-Based Detector • Train multiple multilayer perceptrons with different receptive fields [Rowley and Kanade 96] • Merge the overlapping detections within one network • Train an arbitration network to combine the results from different networks • Need to find the right neural network architecture (number of layers, hidden units, etc.) and parameters (learning rate, etc.)

- 44. Dealing with Multiple Detects • Merging overlapping detections within one network [Rowley and Kanade 96] • Arbitration among multiple networks – AND operator – OR operator – Voting – Arbitration network Merging overlapping results ANDing results from two networks
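One way to sketch two of these post-processing steps in Python: merging overlapping detections, and AND arbitration between two networks. Note the substitution: overlap is measured here by intersection over union (IoU) of `(x, y, w, h)` boxes, whereas [Rowley and Kanade 96] merge detections by counting detections in a neighborhood of each location, so the thresholds are illustrative only.

```python
def iou(a, b):
    """Intersection over union of two boxes given as (x, y, w, h)."""
    iw = max(0, min(a[0] + a[2], b[0] + b[2]) - max(a[0], b[0]))
    ih = max(0, min(a[1] + a[3], b[1] + b[3]) - max(a[1], b[1]))
    inter = iw * ih
    union = a[2] * a[3] + b[2] * b[3] - inter
    return inter / union if union > 0 else 0.0


def merge_overlapping(boxes, thresh=0.3, min_votes=1):
    """Greedily group boxes overlapping a group's first box; return averaged boxes."""
    groups = []
    for box in boxes:
        for g in groups:
            if iou(g[0], box) > thresh:
                g.append(box)
                break
        else:
            groups.append([box])
    return [tuple(sum(b[i] for b in g) / len(g) for i in range(4))
            for g in groups if len(g) >= min_votes]


def and_arbitration(dets_a, dets_b, thresh=0.3):
    """AND operator: keep detections of network A confirmed by an overlapping one from B."""
    return [a for a in dets_a if any(iou(a, b) > thresh for b in dets_b)]


boxes = [(10, 10, 20, 20), (12, 10, 20, 20), (100, 100, 20, 20)]
print(merge_overlapping(boxes))  # [(11.0, 10.0, 20.0, 20.0), (100.0, 100.0, 20.0, 20.0)]
```

Raising `min_votes` drops isolated detections, which plays a role similar to requiring several nearby hits before accepting a face.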

- 45. Experimental Results: [Rowley et al. 96]

- 46. Detecting Rotated Faces [Rowley et al. 98] • A router network is trained to estimate the angle of an input window – If it contains a face, the router returns the angle of the face, and the face can be rotated back to an upright frontal position – Otherwise, the router returns a meaningless angle • The de-rotated window is then applied to a detector (previously trained for upright frontal faces)

- 47. Router Network [Rowley et al. 98] • Rotate a face sample in 10-degree increments • Create virtual examples (translation and scaling) from each sample • Train a multilayer neural network with input-output pairs (figure: input-output pairs used to train a router network)

- 48. Experimental Results [Rowley et al. 98] • Able to detect rotated faces with good results • Performance degrades in detecting upright frontal faces due to the use of the router network • See also [Feraud et al. 01]

- 49. Support Vector Machine (SVM) • Find the optimal separating hyperplane constructed by support vectors [Vapnik 95] • Maximize the distance between the separating hyperplane and the data points closest to it (large-margin classifier) • Formulated as a quadratic programming problem • Kernel functions for nonlinear SVMs (figure: support vectors and margin d)
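At test time an SVM evaluates a kernel expansion over its support vectors. The sketch below shows only that decision function, with a second-order polynomial kernel; the support vectors, multipliers `alphas`, and `bias` are hypothetical values assumed to come from a QP solver, which is not shown. Its cost grows linearly with the number of support vectors, which is why a large support-vector set makes testing slow.

```python
def poly_kernel(u, v, degree=2, c=1.0):
    """Inhomogeneous polynomial kernel (u . v + c) ** degree."""
    return (sum(a * b for a, b in zip(u, v)) + c) ** degree


def svm_decision(x, support_vectors, alphas, labels, bias, kernel=poly_kernel):
    """f(x) = sum_i alpha_i * y_i * K(s_i, x) + b; one kernel call per support vector."""
    return sum(a * y * kernel(s, x)
               for s, a, y in zip(support_vectors, alphas, labels)) + bias


def classify(x, support_vectors, alphas, labels, bias):
    """Sign of the decision function: +1 (face) or -1 (non-face)."""
    return 1 if svm_decision(x, support_vectors, alphas, labels, bias) >= 0 else -1


# Hypothetical trained model with two support vectors
sv, alphas, labels, bias = [[1, 0], [-1, 0]], [0.5, 0.5], [1, -1], 0.0
print(svm_decision([2, 0], sv, alphas, labels, bias))  # 4.0
print(classify([-2, 0], sv, alphas, labels, bias))     # -1
```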

- 50. SVM-Based Face Detector [Osuna et al. 97] • Adopts an architecture similar to [Sung and Poggio 94], but with an SVM classifier • Pros: Good recognition rate with theoretical support • Cons: – Time-consuming in training and testing – Need to pick the right kernel [Osuna et al. 97]

- 51. SVM-Based Face Detector: Issues • Training: Solve a complex quadratic optimization problem – Speed-up: Sequential Minimal Optimization (SMO) [Platt 99] • Testing: The number of support vectors may be large, requiring lots of kernel computations – Speed-up: Reduced set of support vectors [Romdhani et al. 01] • Variants: – Component-based SVM [Heisele et al. 01]: • Learn components and their geometric configuration • Less sensitive to pose variation

- 52. Sparse Network of Winnows [Roth 98] • Online, mistake-driven algorithm • Attribute (feature) efficiency • Allocation of nodes and links is data-driven – complexity depends on the number of active features • Allows for combining tasks hierarchically • Multiplicative learning rule (figure: feature nodes connected to target nodes)

- 53. SNoW-Based Face Detector • Multiplicative weight update algorithm: – Prediction is 1 iff $\mathbf{w} \cdot \mathbf{x} \ge \theta$ – If Class = 1 but $\mathbf{w} \cdot \mathbf{x} < \theta$: $w_i \leftarrow \alpha w_i$ (if $x_i = 1$) (promotion) – If Class = 0 but $\mathbf{w} \cdot \mathbf{x} \ge \theta$: $w_i \leftarrow \beta w_i$ (if $x_i = 1$) (demotion) – Usually, $\alpha = 2$, $\beta = 0.5$ • Pros: On-line feature selection [Yang et al. 00] • Cons: Need a more powerful feature representation scheme • Also applied to object recognition [Yang et al. 02]
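A minimal Python sketch of this Winnow update for Boolean features, where each example lists its active features (those with $x_i = 1$). The default threshold θ = number of features is a common choice for Winnow but an assumption here, not something stated on the slide; SNoW itself arranges such units into a network, which is not shown.

```python
def winnow_predict(w, theta, active):
    """Predict 1 iff w . x >= theta, where x is 1 exactly on the active features."""
    return 1 if sum(w[i] for i in active) >= theta else 0


def winnow_train(examples, n_features, theta=None, alpha=2.0, beta=0.5, epochs=10):
    """Mistake-driven Winnow.

    examples: list of (active_feature_indices, label) pairs, label in {0, 1}.
    Weights change only on mistakes, and only for active features (x_i = 1).
    """
    if theta is None:
        theta = float(n_features)            # assumed default threshold
    w = [1.0] * n_features
    for _ in range(epochs):
        mistakes = 0
        for active, label in examples:
            if winnow_predict(w, theta, active) == label:
                continue
            mistakes += 1
            factor = alpha if label == 1 else beta   # promotion vs. demotion
            for i in active:
                w[i] *= factor
        if mistakes == 0:                    # consistent with all examples
            break
    return w, theta


# Target concept: label 1 iff feature 0 is active
examples = [([0], 1), ([1, 2], 0), ([0, 3], 1), ([3], 0), ([1], 0)]
w, theta = winnow_train(examples, n_features=4)
print(w)  # [4.0, 1.0, 1.0, 2.0]
```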

- 54. Probabilistic Modeling of Local Appearance [Schneiderman and Kanade 98] • Using local appearance • Learn the distribution by parts using a naïve Bayes classifier: $p(\text{region} \mid \text{object}) \approx \prod_{k=1}^{n} p(\text{subregion}_k \mid \text{object})$ • Apply the Bayesian decision rule: classify as object if $\frac{p(\text{region} \mid \text{object})}{p(\text{region} \mid \overline{\text{object}})} > \frac{P(\overline{\text{object}})}{P(\text{object})}$ • Further decompose the appearance into space, frequency, and orientation • Learn the joint distribution of object and position, e.g., $p(\text{face image} \mid \text{face}) = p(\text{patch}_1 \mid \text{face}) \cdot p(\text{patch}_2 \mid \text{face}) \cdots$ or $p(\text{patch}_1, x, y, s \mid \text{face}) \cdots$ (patch images omitted) • Also wavelet representation
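Under the naïve Bayes assumption the decision reduces to a sum of per-subregion log-likelihood ratios compared against the log prior ratio. This sketch assumes each subregion has already been quantized to a discrete pattern code and that lookup tables `p_face` and `p_nonface` were estimated from training data; the tables below are hypothetical, and the position-dependent variant is omitted.

```python
import math


def log_likelihood_ratio(patterns, p_face, p_nonface):
    """Sum over subregions of log p(q | face) - log p(q | non-face)."""
    return sum(math.log(p_face[q]) - math.log(p_nonface[q]) for q in patterns)


def is_face(patterns, p_face, p_nonface, prior_face=0.5):
    """Bayes decision rule: face iff the likelihood ratio exceeds P(non-face) / P(face)."""
    log_threshold = math.log((1.0 - prior_face) / prior_face)
    return log_likelihood_ratio(patterns, p_face, p_nonface) > log_threshold


# Hypothetical tables for a two-symbol pattern alphabet
p_face = {"a": 0.6, "b": 0.4}
p_nonface = {"a": 0.2, "b": 0.8}
print(is_face(["a", "a", "b"], p_face, p_nonface))                  # True
print(is_face(["a", "a", "b"], p_face, p_nonface, prior_face=0.1))  # False
```

Working in log space turns the product over subregions into a sum, which avoids numerical underflow when n is large.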

- 55. Detecting Faces in Different Poses • Extend to detect faces in different poses with multiple detectors • Each detector specializes in a view: frontal, left pose, and right pose • [Mikolajczyk et al. 01] extend this to detect faces from side pose to frontal view [Schneiderman and Kanade 98]

- 56. Experimental Results [Schneiderman and Kanade 98] Extended to detect cars [Schneiderman and Kanade 00] Able to detect profile faces [Schneiderman and Kanade 98]

- 57. Mixture of Factor Analyzers [Yang et al. 00] • Generative method that performs clustering and dimensionality reduction within each cluster • Similar to probabilistic PCA but with additional merits – proper density model – robust to noise • Use a mixture model to detect faces in different poses • Use EM to estimate all the parameters in the mixture model • See also [Moghaddam and Pentland 97] on using probabilistic Gaussian mixture for object localization • Factor analysis: $\mathbf{x} = \Lambda\mathbf{z} + \mathbf{u}$ with noise $\mathbf{u} \sim \mathcal{N}(\mathbf{0}, \Psi)$, so $p(\mathbf{x} \mid \mathbf{z}) = \mathcal{N}(\Lambda\mathbf{z}, \Psi)$, where $\mathbf{z}$ is the hidden factor and $\mathbf{x}$ the observation • Mixture model: $p(\mathbf{x} \mid \mathbf{z}, \omega_j) = \mathcal{N}(\boldsymbol{\mu}_j + \Lambda_j\mathbf{z}, \Psi)$ (figures: factor faces for frontal view; factor faces for 45° view)

- 58. Fisher Linear Discriminant [Yang et al. 00] • Fisherface (FLD) demonstrated good results in face recognition • Apply a Self-Organizing Map (SOM) to cluster faces/non-faces, and thereby obtain labels for the samples • Apply FLD to find the optimal projection matrix for maximal separation • Estimate class-conditional densities for detection (figure: given a set of unlabeled face and non-face samples, SOM produces face/non-face prototypes, FLD projects them, and class-conditional densities are fit by maximum likelihood estimation)

- 59. Adaboost [Freund and Schapire 95] • Use a set of weak classifiers ($\varepsilon_t < 0.5$) and weighting on difficult examples for learning (sampling is based on the weights) • Given: $(x_1, y_1), \ldots, (x_m, y_m)$ where $x_i \in X$, $y_i \in Y = \{-1, +1\}$ • Initialize $D_1(i) = 1/m$ • For $t = 1, \ldots, T$: train a weak classifier using distribution $D_t$ 1. Get a weak hypothesis $h_t: X \to \{-1, +1\}$ with error $\varepsilon_t = \Pr_{i \sim D_t}[h_t(x_i) \ne y_i]$ 2. Importance of $h_t$: $\alpha_t = \frac{1}{2} \ln \frac{1 - \varepsilon_t}{\varepsilon_t}$ 3. Update: $D_{t+1}(i) = \frac{D_t(i)}{Z_t} e^{-\alpha_t}$ if $h_t(x_i) = y_i$ (correctly classified), $D_{t+1}(i) = \frac{D_t(i)}{Z_t} e^{\alpha_t}$ if $h_t(x_i) \ne y_i$ (incorrectly classified), where $Z_t$ is a normalization factor • Aggregating the classifiers: $H(x) = \operatorname{sign}\left(\sum_{t=1}^{T} \alpha_t h_t(x)\right)$ • Performs well and does not overfit in empirical studies
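A compact Python sketch of the algorithm above, using one-dimensional decision stumps as the weak learners. The slide does not fix the weak learner, so stumps are an assumption for illustration. Weights are updated exactly as in step 3 and renormalized by $Z_t$.

```python
import math


def stump(x, threshold, polarity):
    """Weak hypothesis: polarity if x >= threshold, else -polarity."""
    return polarity if x >= threshold else -polarity


def train_stump(xs, ys, weights):
    """Return (weighted error, threshold, polarity) of the best 1-D stump."""
    best = None
    for threshold in sorted(set(xs)):
        for polarity in (1, -1):
            err = sum(w for x, y, w in zip(xs, ys, weights)
                      if stump(x, threshold, polarity) != y)
            if best is None or err < best[0]:
                best = (err, threshold, polarity)
    return best


def adaboost(xs, ys, rounds=10):
    """Discrete AdaBoost, labels in {-1, +1}; returns [(alpha_t, threshold, polarity), ...]."""
    m = len(xs)
    d = [1.0 / m] * m                          # D_1(i) = 1/m
    ensemble = []
    for _ in range(rounds):
        err, threshold, polarity = train_stump(xs, ys, d)
        if err >= 0.5:                         # no better than chance: stop
            break
        perfect = err == 0
        err = max(err, 1e-10)                  # keep alpha finite for a perfect stump
        alpha = 0.5 * math.log((1 - err) / err)
        ensemble.append((alpha, threshold, polarity))
        if perfect:
            break
        # e^{-alpha} for correct examples, e^{+alpha} for mistakes, then divide by Z_t
        d = [di * math.exp(-alpha * y * stump(x, threshold, polarity))
             for di, x, y in zip(d, xs, ys)]
        z = sum(d)
        d = [di / z for di in d]
    return ensemble


def predict(ensemble, x):
    """H(x) = sign(sum_t alpha_t h_t(x)), with ties sent to +1."""
    score = sum(alpha * stump(x, t, p) for alpha, t, p in ensemble)
    return 1 if score >= 0 else -1
```

For instance, with `xs = [1, 2, 3, 4, 5, 6]` and labels `[1, 1, -1, -1, 1, 1]` no single stump fits every point, but three rounds of boosting combine stumps into a classifier that labels all six correctly.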

- 60. Adaboost-Based Detector [Viola and Jones 01] • Main idea: – Feature selection: select important features – Focus of attention: focus on potential regions – Use an integral image for fast feature evaluation • Use Adaboost to learn – A set of important features (feature selection) • sort them in the order of importance • each feature can be used as a simple (weak) classifier – A cascade of classifiers that • combine all the weak classifiers to do a difficult task • filter out the regions that most likely do not contain faces
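The integral image is what makes boxlet features cheap to evaluate. A minimal Python sketch: after one pass over the image, any rectangle sum takes four lookups regardless of the rectangle's size. The zero-padded layout used here is one common convention, not necessarily the one in [Viola and Jones 01].

```python
def integral_image(image):
    """Zero-padded integral image: ii[y][x] = sum of image[r][c] for r < y, c < x."""
    h, w = len(image), len(image[0])
    ii = [[0] * (w + 1) for _ in range(h + 1)]
    for y in range(h):
        row_sum = 0
        for x in range(w):
            row_sum += image[y][x]
            ii[y + 1][x + 1] = ii[y][x + 1] + row_sum
    return ii


def rect_sum(ii, x, y, w, h):
    """Sum over the w x h rectangle whose top-left pixel is (x, y): four lookups."""
    return ii[y + h][x + w] - ii[y][x + w] - ii[y + h][x] + ii[y][x]


image = [[1, 2, 3],
         [4, 5, 6],
         [7, 8, 9]]
ii = integral_image(image)
print(rect_sum(ii, 1, 1, 2, 2))  # 28 = 5 + 6 + 8 + 9
```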

- 61. Feature Selection [Viola and Jones 01] • Training: If x is a face, then x – most likely has feature 1 (easiest feature, and of greatest importance) – very likely has feature 2 (easy feature) – … – likely has feature n (more complex feature, and of less importance since it does not exist in all the faces in the training set) • Testing: Given a test sub-image x′ – if x′ has feature 1, test whether x′ has feature 2, and so on up to feature n; x′ is a face only if it passes every test – as soon as a test fails, x′ is not a face • Similar to a decision tree (figure: one simple implementation, a chain of yes/no tests on feature 1, feature 2, …, feature n, where any "No" leads to "x′ is a non-face" and passing every test leads to "x′ is a face")

- 62. Boxlet As Weak Classifier [Viola & Jones 01] • Boxlet: compute the difference between the sums of pixels within two rectangular regions • Compute boxlets all over a pattern: Haar-like wavelets • Over-complete representation: lots of boxlet features • For each boxlet j, compute $f_j(x)$ where x is a positive or negative example • Each feature is used as a weak classifier • Set threshold $\theta_j$ so that most samples are classified correctly: $h_j(x, f, p, \theta) = 1$ if $f_j(x) < \theta_j$ (or $f_j(x) > \theta_j$) • Sequentially select the boxlets • Boxlet types: 2-rectangle, 3-rectangle, 4-rectangle (figure: boxlet responses on face samples and non-face samples)
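A sketch of a horizontal two-rectangle boxlet and its threshold weak classifier, self-contained with its own integral-image helpers. The parity form `parity * f < parity * theta` covers both inequality directions on the slide; the feature geometry and threshold values below are illustrative.

```python
def integral_image(image):
    """Zero-padded integral image: ii[y][x] = sum of image[r][c] for r < y, c < x."""
    h, w = len(image), len(image[0])
    ii = [[0] * (w + 1) for _ in range(h + 1)]
    for y in range(h):
        row_sum = 0
        for x in range(w):
            row_sum += image[y][x]
            ii[y + 1][x + 1] = ii[y][x + 1] + row_sum
    return ii


def rect_sum(ii, x, y, w, h):
    """Sum over the w x h rectangle whose top-left pixel is (x, y)."""
    return ii[y + h][x + w] - ii[y][x + w] - ii[y + h][x] + ii[y][x]


def two_rect_feature(ii, x, y, w, h):
    """Horizontal 2-rectangle boxlet: left-half sum minus right-half sum (w even)."""
    half = w // 2
    return rect_sum(ii, x, y, half, h) - rect_sum(ii, x + half, y, half, h)


def weak_classify(f_value, theta, parity):
    """h = 1 iff parity * f < parity * theta; parity = +1 or -1 selects the inequality."""
    return 1 if parity * f_value < parity * theta else 0


# Dark left half, bright right half: a strongly negative boxlet response
patch = [[1, 1, 9, 9],
         [1, 1, 9, 9]]
f = two_rect_feature(integral_image(patch), 0, 0, 4, 2)
print(f, weak_classify(f, theta=0, parity=1))  # -32 1
```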

- 63. Selecting Features Using Adaboost [Viola and Jones 01] • For $t = 1, \ldots, T$ – Construct a weak classifier using one single feature: $h_j(x) = 1$ if $p_j f_j(x) < p_j \theta_j$, and $0$ otherwise, where $p_j$ is a parity bit and $\theta_j$ is a threshold – For each feature j, train a classifier $h_j$; the error is evaluated with respect to $w_t$: $\varepsilon_j = \sum_i w_i \lvert h_j(x_i) - y_i \rvert$ – Choose the classifier $h_t$ with the minimum error $\varepsilon_t$ – Update the weights: $w_{t+1,i} = w_{t,i}\, \beta_t^{1 - e_i}$, where $e_i = 0$ if $x_i$ is correctly classified, $e_i = 1$ otherwise, and $\beta_t = \varepsilon_t / (1 - \varepsilon_t)$ • Final classifier: $h(x) = 1$ if $\sum_{t=1}^{T} \alpha_t h_t(x) \ge \frac{1}{2} \sum_{t=1}^{T} \alpha_t$, and $0$ otherwise, where $\alpha_t = \log \frac{1}{\beta_t}$ (figure: the top two boxlets selected by Adaboost)

- 64. Attentional Cascade [Viola and Jones 01] • Within an image, most sub-images are non-face instances • Use smaller and efficient classifiers to reject many negative examples at early stage while detecting almost all the positive instances • Simpler classifiers are used to reject the majority of sub-windows • More complex classifiers are used at later stage to examine difficult cases • Learn the cascade classifier using Adaboost, i.e., learn an ensemble of weak classifiers Early stage classifier deals with easy instances while the deeper classifier faces more difficult cases.
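The early-rejection logic of a cascade fits in a few lines. In this sketch each stage is a hypothetical `(score_fn, threshold)` pair; in [Viola and Jones 01] a stage score would be a boosted sum of boxlet classifiers. Evaluation stops at the first stage that rejects the window.

```python
def cascade_classify(window, stages):
    """Run an attentional cascade on one window.

    stages: list of (score_fn, threshold) pairs, cheapest first.
    Returns (is_face, stages_evaluated).
    """
    for k, (score_fn, threshold) in enumerate(stages, start=1):
        if score_fn(window) < threshold:
            return False, k          # rejected: later, costlier stages never run
    return True, len(stages)


# Hypothetical two-stage cascade on windows summarized by precomputed scores
stages = [(lambda w: w["coarse"], 0.5), (lambda w: w["fine"], 0.5)]
print(cascade_classify({"coarse": 0.1, "fine": 0.9}, stages))  # (False, 1)
print(cascade_classify({"coarse": 0.9, "fine": 0.9}, stages))  # (True, 2)
```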

- 65. Training Attentional Cascade • Similar to decision tree • Design parameters: – Number of cascade stages – Number of features of each stage – Threshold of each stage • Example: 32-stage cascade classifier – 2-feature classifier in the first stage, rejecting 60% of non-faces while detecting 100% of faces – 5-feature classifier in the second stage, rejecting 80% of non-faces while detecting 100% of faces – 20-feature classifier in stages 3, 4, and 5 – 50-feature classifier in stages 6 and 7 – 100-feature classifier in stages 8 to 12 – 200-feature classifier in stages 13 to 32 [Viola and Jones 01]
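Because each stage only sees the windows that earlier stages let through, the fraction of non-faces surviving the cascade is the product of the per-stage pass rates. A small Python check using the first two stages' figures from the slide, assuming each rejection rate is measured on the windows that reach that stage:

```python
from math import prod


def surviving_fraction(rejection_rates):
    """Fraction of non-face windows that pass every listed stage."""
    return prod(1.0 - r for r in rejection_rates)


# Stage 1 rejects 60% of non-faces; stage 2 rejects 80% of those that remain.
print(surviving_fraction([0.6, 0.8]))  # about 0.08
```

So only about 8% of non-face windows reach the 20-feature stages, which is where the cascade saves most of its computation.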

- 66. Variations and Implementations • Extended to handle multi-pose [Li et al. 02] [Viola and Jones 03] • Extended to handle multi-pose and in-plane rotation [Wu et al. 04] • Kullback-Leibler Adaboost [Liu and Shum 03] • Extended to detect pedestrians [Viola et al. 03] • Handle occlusions [Lin et al. ECCV 04] • Implemented in Intel OpenCV library
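Since the cascade detector is available in OpenCV, typical Python usage looks roughly like the sketch below. It assumes the `opencv-python` package, which ships pretrained cascade XML files under `cv2.data.haarcascades`, including `haarcascade_frontalface_default.xml`. The `scale_factor` and `min_neighbors` defaults are common starting points, not values from these slides. `scaleFactor` plays the role of the pyramid step discussed earlier, and `minNeighbors` sets how many overlapping raw detections a face needs in order to be kept.

```python
def detect_faces(image_path, scale_factor=1.1, min_neighbors=5):
    """Return a list of (x, y, w, h) face boxes from OpenCV's frontal-face Haar cascade."""
    import cv2  # from opencv-python; imported here so the helper can be defined without it

    cascade_path = cv2.data.haarcascades + "haarcascade_frontalface_default.xml"
    detector = cv2.CascadeClassifier(cascade_path)
    if detector.empty():
        raise IOError("could not load cascade: " + cascade_path)

    image = cv2.imread(image_path)
    if image is None:
        raise FileNotFoundError(image_path)

    gray = cv2.cvtColor(image, cv2.COLOR_BGR2GRAY)  # the cascade works on gray scale
    gray = cv2.equalizeHist(gray)                    # histogram equalization preprocessing
    faces = detector.detectMultiScale(gray, scaleFactor=scale_factor,
                                      minNeighbors=min_neighbors)
    return [tuple(int(v) for v in box) for box in faces]
```

For example, `detect_faces("group.jpg")` (a hypothetical file) returns a list of `(x, y, w, h)` boxes, or an empty list if no face is found.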

- 67. Adaboost-Based Detector: Summary • Three main components [Viola and Jones 01]: – Integral image: efficient convolution – Use Adaboost for feature selection – Use Adaboost to learn the cascade classifier • Pros: – Fast and fairly robust; runs in real time • Cons: – Very time-consuming in the training stage (training may take days) – Requires lots of engineering work • Another greedy method: Anti-face [Keren 00] • See also [Amit et al. 97] for efficient hierarchical (focus of attention) feature-based method

- 68. Appearance-Based Methods: Summary • Pros: – Use powerful machine learning algorithms – Have demonstrated good empirical results – Fast and fairly robust – Extended to detect faces in different poses and orientations • Cons: – Usually need to search over space and scale – Need lots of positive and negative examples – Limited view-based approach

- 70. Color-Based Face Detector • Distribution of skin color across different ethnic groups – Under controlled illumination conditions: compact – Arbitrary conditions: less compact • Color space – RGB, normalized RGB, HSV, HIS, YCrCb, YIQ, UES, CIE XYZ, CIE LUV, … • Statistical analysis – Histogram, look-up table, Gaussian model, mixture model, …

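- 71. Skin and Non-Skin Color Model • Analyze 1 billion labeled pixels • Skin and non-skin models: $P(rgb \mid skin) = \frac{s[rgb]}{T_s}$ and $P(rgb \mid \neg skin) = \frac{n[rgb]}{T_n}$, where $s[rgb]$ and $n[rgb]$ are the skin and non-skin histogram counts for color $rgb$, and $T_s$, $T_n$ are the total counts; a pixel is labeled skin if $\frac{P(rgb \mid skin)}{P(rgb \mid \neg skin)} \ge \Theta$ • A significant degree of separation between skin and non-skin models • Achieves 80% detection rate with 8.5% false positives • Histogram method outperforms Gaussian mixture model [Jones and Rehg 99]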
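A minimal Python sketch of the histogram classifier above. The 32 bins per channel and the threshold Θ = 1 are assumptions for illustration; in practice Θ is swept to trade detection rate against false positives.

```python
from collections import Counter


def color_bin(rgb, bins_per_channel=32):
    """Quantize an (r, g, b) triple with 0-255 channels into a histogram bin."""
    step = 256 // bins_per_channel
    return tuple(c // step for c in rgb)


def build_model(skin_pixels, nonskin_pixels, bins_per_channel=32):
    """Histogram counts s[rgb], n[rgb] and totals T_s, T_n from labeled pixels."""
    s = Counter(color_bin(p, bins_per_channel) for p in skin_pixels)
    n = Counter(color_bin(p, bins_per_channel) for p in nonskin_pixels)
    return s, n, sum(s.values()), sum(n.values())


def is_skin(rgb, model, theta=1.0, bins_per_channel=32):
    """Label a pixel skin iff P(rgb | skin) / P(rgb | non-skin) >= theta."""
    s, n, t_s, t_n = model
    b = color_bin(rgb, bins_per_channel)
    p_skin, p_nonskin = s[b] / t_s, n[b] / t_n   # Counter returns 0 for unseen bins
    if p_nonskin == 0:                           # color never seen as non-skin
        return p_skin > 0
    return p_skin / p_nonskin >= theta


skin = [(200, 150, 120)] * 3 + [(210, 160, 130)]
nonskin = [(30, 30, 30)] * 4 + [(200, 150, 120)]
model = build_model(skin, nonskin)
print(is_skin((200, 150, 120), model), is_skin((30, 30, 30), model))  # True False
```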

- 72. Experimental Results [Jones and Rehg 02] • Does a decent job • May have lots of false positives in the raw results • Need further processing to eliminate false positives and group color pixels for face detection • See also [Hsu et al. 02] [Jones and Rehg 99]

- 73. Color-Based Face Detector: Summary • Pros: – Easy to implement – Effective and efficient in constrained environments – Insensitive to pose, expression, and rotation variation • Cons: – Sensitive to environment and lighting changes – Noisy detection results (body parts, skin-tone-like regions)

- 75. Video-Based Face Detector • Motion cues: – Frame differencing – Background modeling and subtraction • Can also use depth cues (e.g., from stereo) when available • Reduces the search space dramatically
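Frame differencing can be sketched in plain Python as a thresholded absolute difference between consecutive gray-scale frames, followed by a bounding box around the changed pixels; a still-image detector would then search only inside that box. The threshold of 25 gray levels is an arbitrary illustrative value.

```python
def motion_mask(prev_frame, frame, threshold=25):
    """Mark pixels whose gray level changed by more than `threshold` between frames."""
    return [[abs(a - b) > threshold for a, b in zip(r0, r1)]
            for r0, r1 in zip(prev_frame, frame)]


def bounding_box(mask):
    """Smallest (x, y, w, h) box containing all moving pixels, or None if nothing moved."""
    coords = [(x, y) for y, row in enumerate(mask) for x, m in enumerate(row) if m]
    if not coords:
        return None
    xs, ys = zip(*coords)
    return min(xs), min(ys), max(xs) - min(xs) + 1, max(ys) - min(ys) + 1


prev = [[10] * 4 for _ in range(3)]
cur = [row[:] for row in prev]
cur[1][2], cur[2][3] = 100, 90                   # two pixels changed
print(bounding_box(motion_mask(prev, cur)))      # (2, 1, 2, 2)
```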

- 76. Face Detection in Video: [Mikolajczyk et al. 01] • Use two probabilistic detectors, one frontal $P_f(I, x, y, s)$ and one profile $P_p(I, x, y, s)$, based on [Schneiderman and Kanade 98] • Predict the state $\mathbf{s}_t = (x_t, y_t, s_t, \theta_t)$ based on the observation $\mathbf{z}_t = (P_f, P_p)$, where $x_t, y_t, s_t, \theta_t$ are position, scale, and pose angle • Use the Condensation algorithm [Isard and Blake 96] to propagate the probability of detection and the parameters over time (figure: $P_f$, frontal view; $P_p$, profile view)

- 77. Video-Based Detectors: Summary • Pros: – An easier problem than detection in still images – Use all available cues: motion, depth, voice, etc. to reduce search space • Cons: – Need efficient and effective methods to process the multimodal cues – Data fusion

- 79. Performance Evaluation • Tricky business • Need to set the evaluation criteria/protocol – Training set – Test set – What is a correct detect? – Detection rate: false positive/negative – Precision of face location – Speed: training/test stage

- 80. Training Sets • Cropped and preprocessed data set with face and non-face images provided by MIT CBCL: http://www.ai.mit.edu/projects/cbcl/software-datasets/index.html • Mainly used for face recognition; need to crop and preprocess the face images in the data set

- 81. Standard Test Sets • MIT test set (http://www.cs.cmu.edu/~har): subsumed by CMU test set • CMU test set (http://www.cs.cmu.edu/~har) (de facto benchmark): 130 gray-scale images with a total of 507 frontal faces • CMU profile face test set (http://eyes.ius.cs.cmu.edu/usr20/ftp/testing_face_images.tar.gz): 208 images with faces in profile views • Kodak data set (Eastman Kodak Corp): faces of multiple sizes and poses under varying lighting conditions in color images

- 82. CMU Test Set I: Upright Frontal Faces • 130 images with 507 frontal faces • Collected by K.-K. Sung and H. Rowley • Including 23 images used in [Sung and Poggio 94] • Some images have low resolution • De facto benchmark set

- 83. CMU Test Sets: Rotated and Profile Faces • Rotated: 50 images with 223 faces in in-plane orientations, collected by H. Rowley • Profile: 208 images with 441 faces, collected by H. Schneiderman

- 84. What is a Correct Detect? • Different interpretation of “correct detect” • Precision of face location • Affect the reporting results: detection, false positive, false negative rates Which is a correct detect?
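One common convention (not the only one, which is exactly the point of this slide) counts a detection as correct when its intersection over union (IoU) with a ground-truth box is at least 0.5, and matches each ground-truth face at most once. A sketch of that protocol in Python with boxes as `(x, y, w, h)`; under it, a duplicate detection of an already-matched face counts as a false positive.

```python
def iou(a, b):
    """Intersection over union of two boxes given as (x, y, w, h)."""
    iw = max(0, min(a[0] + a[2], b[0] + b[2]) - max(a[0], b[0]))
    ih = max(0, min(a[1] + a[3], b[1] + b[3]) - max(a[1], b[1]))
    inter = iw * ih
    union = a[2] * a[3] + b[2] * b[3] - inter
    return inter / union if union > 0 else 0.0


def evaluate(detections, truths, min_iou=0.5):
    """Greedy one-to-one matching; returns (true_pos, false_pos, false_neg)."""
    unmatched = list(truths)
    tp = 0
    for d in detections:
        best = max(unmatched, key=lambda t: iou(d, t), default=None)
        if best is not None and iou(d, best) >= min_iou:
            unmatched.remove(best)       # each face may be matched only once
            tp += 1
    return tp, len(detections) - tp, len(unmatched)


truths = [(0, 0, 10, 10), (50, 50, 10, 10)]
detections = [(1, 1, 10, 10), (100, 100, 10, 10), (0, 0, 10, 10)]
print(evaluate(detections, truths))  # (1, 2, 1)
```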

- 85. Receiver Operating Characteristic Curve • Useful for detailed performance assessment • Plot the true positive (TP) proportion against the false positive (FP) proportion for various possible settings • False positive: predict a face when there is actually none • False negative: predict a non-face when there is actually a face (figure: ROC curves for SNoW with local features, SVM with linear features, SVM with 2nd-order polynomial kernel, and SNoW with conjunctive features, running from "Nothing is face" to "Everything is face") ROC curve of an SVM-based detector (2nd-order polynomial kernel): the detection rate is 78% with a false positive rate of 10% (for a particular data set)
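An ROC curve can be traced by sweeping a threshold over the detector's scores. A minimal sketch, assuming one score per test window and labels 1 (face) and 0 (non-face); windows with tied scores are added together so that each threshold yields a single point.

```python
def roc_points(scores, labels):
    """(false positive rate, true positive rate) pairs, strictest threshold first."""
    n_pos = sum(labels)
    n_neg = len(labels) - n_pos
    pairs = sorted(zip(scores, labels), key=lambda p: -p[0])
    points = [(0.0, 0.0)]            # threshold above every score: "nothing is face"
    tp = fp = 0
    for i, (score, label) in enumerate(pairs):
        tp += label
        fp += 1 - label
        # Emit a point only once all windows sharing this score are counted
        if i + 1 == len(pairs) or pairs[i + 1][0] != score:
            points.append((fp / n_neg, tp / n_pos))
    return points                    # ends at (1.0, 1.0): "everything is face"


print(roc_points([0.9, 0.8, 0.7, 0.6], [1, 0, 1, 0]))
# [(0.0, 0.0), (0.0, 0.5), (0.5, 0.5), (0.5, 1.0), (1.0, 1.0)]
```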

- 87. Face Detection: A Solved Problem? • Not quite yet… • Factors: – Shadows – Occlusions – Robustness – Resolution • Lots of potential applications • Can be applied to other domains

- 88. Detector, Tracker, and Recognizer • Inseparable components for a robust system • Some promising results in – human pose estimation (d) [Lee and Cohen 04] – human tracking (d+t) [Sigal et al. 04] – multi-object tracker (d+t) [Okuma et al. 04] – video-based object recognition (t+r) [Lee et al. 03] [Williams et al. 03] [Lee et al. 03] [Williams et al. 03]

- 89. Research Issues • Detect faces robustly under – varying pose: [Schneiderman and Kanade 00] – orientation: [Rowley and Kanade 98] – occlusion: – expression: – and varying lighting conditions (with shadows) – using low resolution images • Precision • Performance evaluation

![Face Detection: A Solved Problem? (Omron’s face detector [Liu et al. 04])](https://image.slidesharecdn.com/facedetection-240326044147-e65a764e/85/Face-detection-and-expression-using-OpenCV-using-python-2-320.jpg)

![Human Detection and Tracking [Lee and Cohen 04] [Okuma et al. 04]](https://image.slidesharecdn.com/facedetection-240326044147-e65a764e/85/Face-detection-and-expression-using-OpenCV-using-python-11-320.jpg)

![In This Tutorial](https://image.slidesharecdn.com/facedetection-240326044147-e65a764e/85/Face-detection-and-expression-using-OpenCV-using-python-14-320.jpg)

Figure: taxonomy of face detection problems. Video and single-image input are each split into color and gray-scale cases, with upright frontal faces contrasted against pose, rotation, and occlusion, plus additional cues (motion, depth, voice).

- Focus on detecting
  - upright, frontal faces
  - in a single gray-scale image
  - with decent resolution
  - under good lighting conditions
- See [Sinha 01] for detecting faces in low-resolution images

![Knowledge-Based Method: [Yang and Huang 94]](https://image.slidesharecdn.com/facedetection-240326044147-e65a764e/85/Face-detection-and-expression-using-OpenCV-using-python-18-320.jpg)

- Multi-resolution focus-of-attention approach
- Level 1 (lowest resolution): apply the rule "the center part of the face has 4 cells with a basically uniform intensity" to search for candidates
- Level 2: local histogram equalization followed by edge detection
- Level 3: search for eye and mouth features for validation

![Knowledge-Based Method: [Kotropoulos & Pitas 94]](https://image.slidesharecdn.com/facedetection-240326044147-e65a764e/85/Face-detection-and-expression-using-OpenCV-using-python-19-320.jpg)

- Horizontal/vertical projections to search for candidates
- Search for eyebrows/eyes and nostrils/nose for validation
- Difficult to detect multiple people or faces in a complex background

For an image I(x, y) with x = 1, …, m and y = 1, …, n, the horizontal and vertical projections are

$$HI(x) = \sum_{y=1}^{n} I(x, y), \qquad VI(y) = \sum_{x=1}^{m} I(x, y)$$

Figure: [Kotropoulos & Pitas 94]
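
The two projections can be computed directly with NumPy. This sketch stores the image as `I[y, x]`, with rows indexed by y and columns by x, so that `HI` sums over y and `VI` sums over x as in the formulas. The `local_minima` helper is a simple illustrative heuristic of our own for marking dark horizontal bands (such as eye or mouth rows) as candidates. It is not the paper's exact candidate-search rule:

```python
import numpy as np

def projections(image):
    """HI(x) = sum_y I(x, y) and VI(y) = sum_x I(x, y) for an image stored as I[y, x]."""
    I = np.asarray(image, dtype=float)
    HI = I.sum(axis=0)  # one value per column x
    VI = I.sum(axis=1)  # one value per row y
    return HI, VI

def local_minima(profile):
    """Indices where a 1-D profile is strictly lower than both neighbours."""
    p = np.asarray(profile, dtype=float)
    return [i for i in range(1, len(p) - 1) if p[i] < p[i - 1] and p[i] < p[i + 1]]
```

For example, a bright window with one dark row produces a flat `HI` and a single dip in `VI` at that row.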

![Random Graph Matching [Leung et al. 95]](https://image.slidesharecdn.com/facedetection-240326044147-e65a764e/85/Face-detection-and-expression-using-OpenCV-using-python-23-320.jpg)

- Formulate face detection as finding the correct geometric arrangement of facial features
- Facial features are defined by the average responses of multi-orientation, multi-scale Gaussian derivative filters
- Learn the configuration of features with a Gaussian distribution of the mutual distances between facial features
- Convolve an image with the Gaussian filters to locate candidate features based on similarity
- Perform random graph matching among the candidates to locate faces

![Feature Grouping [Yow and Cipolla 90]](https://image.slidesharecdn.com/facedetection-240326044147-e65a764e/85/Face-detection-and-expression-using-OpenCV-using-python-24-320.jpg)

- Apply a 2nd-derivative Gaussian filter to search for interest points
- Group the edges near interest points into regions
- Evaluate each feature and grouping within a Bayesian network
- Handles a few poses
- See also [Amit et al. 97] for an efficient hierarchical (focus-of-attention) feature-based method

Figure panels: face model and components; facial features modeled as pairs of edges; an interest point operator and edge detector applied to search for features; a Bayesian network used to combine evidence.

![Face Template](https://image.slidesharecdn.com/facedetection-240326044147-e65a764e/85/Face-detection-and-expression-using-OpenCV-using-python-28-320.jpg)

- Use relative pairwise ratios of the brightness of facial regions (14 × 16 pixels): the eyes are usually darker than the surrounding face [Sinha 94]
- Use average area intensity values rather than absolute pixel values
- See also the Point Distribution Model (PDM) [Lanitis et al. 95]

Figure: ratio template [Sinha 94]; average shape [Lanitis et al. 95]

![Face and Non-Face Exemplars](https://image.slidesharecdn.com/facedetection-240326044147-e65a764e/85/Face-detection-and-expression-using-OpenCV-using-python-34-320.jpg)

- Positive examples:
  - Get as much variation as possible
  - Manually crop and normalize each face image into a standard size (e.g., 19 × 19 pixels)
  - Create virtual examples [Sung and Poggio 94]
- Negative examples:
  - Fuzzy idea
  - Any images that do not contain faces
  - A large image subspace
  - Bootstrapping [Sung and Poggio 94]

![Distribution-Based Method [Sung & Poggio 94]](https://image.slidesharecdn.com/facedetection-240326044147-e65a764e/85/Face-detection-and-expression-using-OpenCV-using-python-35-320.jpg)

- Masking: reduce the unwanted background noise in a face pattern
- Illumination gradient correction: find the best-fit brightness plane and subtract it from the pattern to reduce heavy shadows caused by extreme lighting angles
- Histogram equalization: compensate for imaging effects due to changes in illumination and different camera input gains
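
The last two preprocessing steps can be sketched with NumPy alone. The plane a·x + b·y + c is fitted by least squares and subtracted. Histogram equalization then maps every intensity through the normalized cumulative histogram. Masking is omitted, and the rescaling to 256 integer levels before equalization is our own assumption, needed because plane subtraction can produce negative values:

```python
import numpy as np

def correct_illumination(window):
    """Fit a brightness plane a*x + b*y + c by least squares and subtract it."""
    w = np.asarray(window, dtype=float)
    ys, xs = np.mgrid[0:w.shape[0], 0:w.shape[1]]
    A = np.column_stack([xs.ravel(), ys.ravel(), np.ones(w.size)])
    coeffs, *_ = np.linalg.lstsq(A, w.ravel(), rcond=None)
    return w - (A @ coeffs).reshape(w.shape)

def equalize_histogram(window, levels=256):
    """Spread intensities over [0, levels - 1] using the cumulative histogram."""
    w = np.asarray(window, dtype=float)
    lo, hi = w.min(), w.max()
    if hi == lo:  # flat window: nothing to equalize
        bins = np.zeros(w.shape, dtype=int)
    else:
        bins = np.round((w - lo) / (hi - lo) * (levels - 1)).astype(int)
    hist = np.bincount(bins.ravel(), minlength=levels)
    cdf = np.cumsum(hist) / bins.size
    return cdf[bins] * (levels - 1)

patch = np.arange(19 * 19, dtype=float).reshape(19, 19)  # stand-in for a 19 x 19 window
normalized = equalize_histogram(correct_illumination(patch))
```

A pure brightness ramp is removed entirely by the plane fit, which is the desired effect for a shadow gradient caused by a side light.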

![Creating Virtual Positive Examples](https://image.slidesharecdn.com/facedetection-240326044147-e65a764e/85/Face-detection-and-expression-using-OpenCV-using-python-36-320.jpg)

- Simple and very effective method
- Randomly mirror, rotate, translate, and scale face samples by small amounts
- Increases the number of training examples
- Makes the detector less sensitive to alignment errors

Figure: randomly mirrored, rotated, translated, and scaled faces [Sung & Poggio 94]
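
The augmentation can be sketched with a nearest-neighbour inverse affine warp in plain NumPy. The perturbation ranges (±10°, ±1 pixel, ±10% scale) and the function names are illustrative assumptions, not values from [Sung & Poggio 94]:

```python
import numpy as np

def affine_warp(img, angle_deg=0.0, scale=1.0, shift=(0.0, 0.0)):
    """Rotate/scale about the centre, then translate (nearest-neighbour, edge pixels replicated)."""
    h, w = img.shape
    cy, cx = (h - 1) / 2.0, (w - 1) / 2.0
    theta = np.deg2rad(angle_deg)
    cos_t, sin_t = np.cos(theta), np.sin(theta)
    ys, xs = np.mgrid[0:h, 0:w].astype(float)
    # Inverse mapping: for every output pixel, find the source pixel it came from.
    dx = xs - cx - shift[1]
    dy = ys - cy - shift[0]
    src_x = (cos_t * dx + sin_t * dy) / scale + cx
    src_y = (-sin_t * dx + cos_t * dy) / scale + cy
    src_x = np.clip(np.round(src_x).astype(int), 0, w - 1)
    src_y = np.clip(np.round(src_y).astype(int), 0, h - 1)
    return img[src_y, src_x]

def virtual_examples(face, n, rng, max_angle=10.0, max_shift=1.0, max_scale=0.1):
    """Generate n randomly mirrored, rotated, translated, and scaled copies of one face."""
    samples = []
    for _ in range(n):
        f = face[:, ::-1] if rng.random() < 0.5 else face  # random mirror
        samples.append(affine_warp(
            f,
            angle_deg=rng.uniform(-max_angle, max_angle),
            scale=1.0 + rng.uniform(-max_scale, max_scale),
            shift=tuple(rng.uniform(-max_shift, max_shift, size=2)),
        ))
    return samples
```

With the default arguments, `affine_warp` returns the input unchanged. This makes it easy to check that the geometry is centred correctly before adding random perturbations.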

![Distribution of Face/Non-face Pattern](https://image.slidesharecdn.com/facedetection-240326044147-e65a764e/85/Face-detection-and-expression-using-OpenCV-using-python-37-320.jpg)

- Cluster face and non-face samples into a few (six) clusters using the k-means algorithm
- Model each cluster by a multi-dimensional Gaussian with a centroid and covariance matrix
- Approximate each Gaussian covariance with a subspace (i.e., using the largest eigenvectors)
- See [Moghaddam and Pentland 97] on distribution-based learning using Gaussian mixture models

For a d-dimensional sample x (face or non-face) and a cluster with centroid μ and covariance Σ:

$$p(\mathbf{x}) = \frac{1}{(2\pi)^{d/2}\,|\Sigma|^{1/2}} \exp\!\left(-\frac{1}{2}(\mathbf{x}-\boldsymbol{\mu})^{\top}\Sigma^{-1}(\mathbf{x}-\boldsymbol{\mu})\right)$$

Figure: [Sung & Poggio 94]

![Distance Metrics [Sung & Poggio 94]](https://image.slidesharecdn.com/facedetection-240326044147-e65a764e/85/Face-detection-and-expression-using-OpenCV-using-python-38-320.jpg)

- Compute the distances of a sample to all the face and non-face clusters
- Each distance measure has two parts:
  - Within-subspace distance (D1): Mahalanobis distance of the projected sample to the cluster center
  - Distance to the subspace (D2): distance of the sample to the subspace
- Feature vector: each face/non-face sample is represented by a vector of these distance measurements
- Train a multilayer perceptron (MLP) on these feature vectors for face detection

With E₇₅ the matrix whose columns are the eigenvectors for the 75 largest eigenvalues of the cluster covariance:

$$D_1 = \frac{1}{2}\left(d\ln 2\pi + \ln|\Sigma| + (\mathbf{x}-\boldsymbol{\mu})^{\top}\Sigma^{-1}(\mathbf{x}-\boldsymbol{\mu})\right)$$

$$D_2 = \left\lVert (\mathbf{x}-\boldsymbol{\mu}) - E_{75}E_{75}^{\top}(\mathbf{x}-\boldsymbol{\mu}) \right\rVert^2 = \left\lVert (I - E_{75}E_{75}^{\top})(\mathbf{x}-\boldsymbol{\mu}) \right\rVert^2$$

6 face clusters and 6 non-face clusters, with 2 distance values per cluster, give 24 measurements per sample [Sung and Poggio 94].
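
Both distances can be computed from an eigendecomposition of each cluster's covariance. In this sketch, D1 is evaluated inside the k-dimensional subspace: d is replaced by k, and |Σ| by the product of the top-k eigenvalues. This is our reading of "Mahalanobis distance of the projected sample", not a confirmed detail of the paper. The cluster parameters would come from the k-means step; the helper names are hypothetical:

```python
import numpy as np

def fit_cluster(samples, k):
    """Centroid, top-k eigenvectors E_k (as columns), and their eigenvalues for one cluster."""
    X = np.asarray(samples, dtype=float)
    mu = X.mean(axis=0)
    evals, evecs = np.linalg.eigh(np.cov(X, rowvar=False))  # ascending eigenvalues
    top = np.argsort(evals)[::-1][:k]
    return mu, evecs[:, top], evals[top]

def cluster_distances(x, mu, E, evals):
    """(D1, D2) of sample x to one cluster."""
    diff = np.asarray(x, dtype=float) - mu
    y = E.T @ diff                      # coordinates of the projected sample
    k = len(evals)
    # D1: Gaussian negative log-likelihood within the subspace (Sigma restricted to E_k).
    d1 = 0.5 * (k * np.log(2 * np.pi) + np.sum(np.log(evals)) + np.sum(y ** 2 / evals))
    # D2: squared distance from x to the subspace, ||(I - E E^T)(x - mu)||^2.
    residual = diff - E @ y
    return float(d1), float(residual @ residual)

def feature_vector(x, clusters):
    """Concatenate (D1, D2) over all clusters; 12 clusters give 24 measurements."""
    return np.array([d for c in clusters for d in cluster_distances(x, *c)])
```

A sample lying on the cluster's principal axis has D2 ≈ 0 but a larger D1 than the centroid. This is why both numbers are kept.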

![Bootstrapping [Sung and Poggio 94]](https://image.slidesharecdn.com/facedetection-240326044147-e65a764e/85/Face-detection-and-expression-using-OpenCV-using-python-39-320.jpg)

1. Start with a small set of non-face examples in the training set
2. Train an MLP classifier with the current training set
3. Run the learned face detector on a sequence of random images
4. Collect all the non-face patterns that the current system wrongly classifies as faces (i.e., false positives)
5. Add these non-face patterns to the training set
6. Go to step 2, or stop if satisfied

Bootstrapping improves the system performance greatly. Figure: test image with false positive detections.
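
The six steps map onto a short loop. To keep the sketch self-contained, a nearest-class-mean classifier stands in for the MLP, and "scenery" windows stand in for windows cut from random face-free images. Both substitutions and all names are ours:

```python
import numpy as np

def nearest_mean_trainer(X, y):
    """Toy stand-in for the MLP: label 1 (face) if closer to the face mean."""
    m_face, m_nonface = X[y == 1].mean(axis=0), X[y == 0].mean(axis=0)
    def predict(Z):
        Z = np.asarray(Z, dtype=float)
        closer = np.linalg.norm(Z - m_face, axis=1) < np.linalg.norm(Z - m_nonface, axis=1)
        return closer.astype(int)
    return predict

def bootstrap(faces, nonfaces, scenery, train, max_rounds=10):
    """Retrain, collect false positives on face-free scenery, add them as negatives."""
    faces = np.asarray(faces, dtype=float)
    negatives = np.asarray(nonfaces, dtype=float)        # step 1: small initial set
    scenery = np.asarray(scenery, dtype=float)
    for _ in range(max_rounds):
        X = np.vstack([faces, negatives])
        y = np.concatenate([np.ones(len(faces)), np.zeros(len(negatives))])
        predict = train(X, y)                            # step 2
        false_pos = scenery[predict(scenery) == 1]       # steps 3-4
        if len(false_pos) == 0:                          # step 6: stop when satisfied
            break
        negatives = np.vstack([negatives, false_pos])    # step 5
    return predict, negatives
```

In the toy example used to check it, faces sit at (5, 5), the initial negatives at (−5, −5), and face-free windows at (3, 3). The windows at (3, 3) are false positives until two rounds of bootstrapping pull the non-face mean toward them.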

![Experimental Results: [Sung and Poggio 94]](https://image.slidesharecdn.com/facedetection-240326044147-e65a764e/85/Face-detection-and-expression-using-OpenCV-using-python-42-320.jpg)

- A face may be detected multiple times, since it may be detected
  - at different scales
  - at slightly displaced window locations
- Able to detect upright frontal faces

![Neural Network-Based Detector](https://image.slidesharecdn.com/facedetection-240326044147-e65a764e/85/Face-detection-and-expression-using-OpenCV-using-python-43-320.jpg)

- Train multiple multilayer perceptrons with different receptive fields [Rowley and Kanade 96]
- Merge the overlapping detections within one network
- Train an arbitration network to combine the results from different networks
- Requires finding the right neural network architecture (number of layers, hidden units, etc.) and parameters (learning rate, etc.)

![Dealing with Multiple Detects](https://image.slidesharecdn.com/facedetection-240326044147-e65a764e/85/Face-detection-and-expression-using-OpenCV-using-python-44-320.jpg)

- Merging overlapping detections within one network [Rowley and Kanade 96]
- Arbitration among multiple networks
  - AND operator
  - OR operator
  - Voting
  - Arbitration network

Figure panels: merging overlapping results; ANDing results from two networks.
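
A simplified sketch of both ideas follows, with detections given as (x, y, scale) tuples. Merging groups detections at the same scale whose centres lie within a radius, keeps groups with enough support, and replaces each group by its centroid. AND arbitration keeps a detection only if the other network fired nearby at the same scale. The radius and count thresholds are illustrative assumptions, not the values used by [Rowley and Kanade 96]:

```python
import numpy as np

def merge_detections(dets, radius=2.0, min_count=2):
    """Greedily group same-scale detections within `radius`; keep centroids of large groups."""
    remaining = [tuple(map(float, d)) for d in dets]
    merged = []
    while remaining:
        seed = remaining[0]
        group = [d for d in remaining
                 if d[2] == seed[2] and np.hypot(d[0] - seed[0], d[1] - seed[1]) <= radius]
        remaining = [d for d in remaining if d not in group]
        if len(group) >= min_count:        # isolated detections are likely false positives
            merged.append(tuple(np.mean(group, axis=0)))
    return merged

def and_arbitrate(dets_a, dets_b, radius=2.0):
    """Keep detections of network A that network B confirms nearby at the same scale."""
    return [a for a in dets_a
            if any(a[2] == b[2] and np.hypot(a[0] - b[0], a[1] - b[1]) <= radius
                   for b in dets_b)]
```

Raising `min_count` or using AND instead of OR trades detection rate for fewer false positives, the same trade-off an ROC curve summarizes.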

![Experimental Results: [Rowley et al. 96]](https://image.slidesharecdn.com/facedetection-240326044147-e65a764e/85/Face-detection-and-expression-using-OpenCV-using-python-45-320.jpg)

![Detecting Rotated Faces [Rowley et al. 98]](https://image.slidesharecdn.com/facedetection-240326044147-e65a764e/85/Face-detection-and-expression-using-OpenCV-using-python-46-320.jpg)

- A router network is trained to estimate the angle of an input window
  - If the window contains a face, the router returns the angle of the face, and the face can be rotated back to the upright frontal position
  - Otherwise, the router returns a meaningless angle
- The de-rotated window is then passed to a detector (previously trained for upright frontal faces)

![Router Network [Rowley et al. 98]](https://image.slidesharecdn.com/facedetection-240326044147-e65a764e/85/Face-detection-and-expression-using-OpenCV-using-python-47-320.jpg)

- Rotate each face sample in 10-degree increments
- Create virtual examples (translation and scaling) from each sample
- Train a multilayer neural network with the resulting input-output pairs

Figure: input-output pairs used to train the router network.

![Experimental Results [Rowley et al. 98]](https://image.slidesharecdn.com/facedetection-240326044147-e65a764e/85/Face-detection-and-expression-using-OpenCV-using-python-48-320.jpg)

- Able to detect rotated faces with good results
- Performance degrades in detecting upright frontal faces due to the use of the router network
- See also [Feraud et al. 01]

![Support Vector Machine (SVM)](https://image.slidesharecdn.com/facedetection-240326044147-e65a764e/85/Face-detection-and-expression-using-OpenCV-using-python-49-320.jpg)

- Find the optimal separating hyperplane constructed by support vectors [Vapnik 95]
- Maximize the distance between the separating hyperplane and the data points closest to it (large margin classifier)
- Formulated as a quadratic programming problem
- Kernel functions for nonlinear SVMs

Figure: support vectors and the margin d.
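
The quadratic program behind these bullets can be written out as below. This is the standard textbook soft-margin formulation, not necessarily the exact variant used in the face detectors cited here. C is a penalty on margin violations ξᵢ, and K is a kernel function:

$$\min_{\mathbf{w},\,b,\,\boldsymbol{\xi}}\ \frac{1}{2}\lVert\mathbf{w}\rVert^2 + C\sum_{i=1}^{\ell}\xi_i \quad \text{s.t.} \quad y_i(\mathbf{w}^{\top}\mathbf{x}_i + b) \ge 1 - \xi_i,\ \ \xi_i \ge 0$$

The distance between the two supporting hyperplanes is 2/‖w‖, so minimizing ‖w‖² maximizes the margin. In the dual problem, the data enter only through inner products, which the kernel K replaces:

$$\max_{\boldsymbol{\alpha}}\ \sum_{i=1}^{\ell}\alpha_i - \frac{1}{2}\sum_{i=1}^{\ell}\sum_{j=1}^{\ell}\alpha_i\alpha_j y_i y_j K(\mathbf{x}_i,\mathbf{x}_j) \quad \text{s.t.} \quad 0 \le \alpha_i \le C,\ \ \sum_{i=1}^{\ell}\alpha_i y_i = 0$$

$$f(\mathbf{x}) = \operatorname{sign}\Big(\sum_{i=1}^{\ell}\alpha_i y_i K(\mathbf{x}_i,\mathbf{x}) + b\Big)$$

The training points with αᵢ > 0 are the support vectors.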

![SVM-Based Face Detector [Osuna et al. 97]](https://image.slidesharecdn.com/facedetection-240326044147-e65a764e/85/Face-detection-and-expression-using-OpenCV-using-python-50-320.jpg)

- Adopts an architecture similar to [Sung and Poggio 94], with an SVM classifier
- Pros: good recognition rate with theoretical support
- Cons:
  - Time-consuming in training and testing
  - Need to pick the right kernel

Figure: [Osuna et al. 97]

![SVM-Based Face Detector: Issues](https://image.slidesharecdn.com/facedetection-240326044147-e65a764e/85/Face-detection-and-expression-using-OpenCV-using-python-51-320.jpg)

- Training: solve a complex quadratic optimization problem
  - Speed-up: Sequential Minimal Optimization (SMO) [Platt 99]
- Testing: the number of support vectors may be large, requiring many kernel computations
  - Speed-up: reduced set of support vectors [Romdhani et al. 01]
- Variants:
  - Component-based SVM [Heisele et al. 01]:
    - Learn components and their geometric configuration
    - Less sensitive to pose variation
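
The test-time cost follows from the kernel decision function f(x) = Σᵢ αᵢyᵢK(sᵢ, x) + b, which needs one kernel evaluation per support vector for every scanned window. Below is a minimal sketch with a 2nd-order polynomial kernel, K(a, b) = (a·b + 1)², assuming the support vectors `support_vectors` and the products αᵢyᵢ (`alpha_y`) come from an already trained SVM:

```python
import numpy as np

def poly2_kernel(A, B):
    """2nd-order polynomial kernel (a . b + 1)^2 for all row pairs of A and B."""
    return (A @ B.T + 1.0) ** 2

def svm_decision(X, support_vectors, alpha_y, b):
    """f(x) = sum_i alpha_i y_i K(s_i, x) + b for each row of X (positive = face)."""
    X = np.atleast_2d(np.asarray(X, dtype=float))
    # Shape (n_windows, n_support_vectors): the cost grows with the number of support vectors.
    K = poly2_kernel(X, np.asarray(support_vectors, dtype=float))
    return K @ np.asarray(alpha_y, dtype=float) + b
```

Reduced-set methods speed up testing by replacing `support_vectors` with a much smaller set of vectors that approximates the same function.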

![Sparse Network of Winnows [Roth 98]](https://image.slidesharecdn.com/facedetection-240326044147-e65a764e/85/Face-detection-and-expression-using-OpenCV-using-python-52-320.jpg)

- Online, mistake-driven algorithm
- Attribute (feature) efficiency
- Allocation of nodes and links is data-driven
  - complexity depends on the number of active features
- Allows for combining tasks hierarchically
- Multiplicative learning rule

Figure: a network of features connected to target nodes.

![SNoW-Based Face Detector](https://image.slidesharecdn.com/facedetection-240326044147-e65a764e/85/Face-detection-and-expression-using-OpenCV-using-python-53-320.jpg)

- Multiplicative weight update algorithm:

$$\text{Prediction is } 1 \iff \mathbf{w}\cdot\mathbf{x} \ge \theta$$

$$\text{If Class} = 1 \text{ but } \mathbf{w}\cdot\mathbf{x} < \theta:\quad w_i \leftarrow \alpha\, w_i \ \ (\text{if } x_i = 1) \quad \text{(promotion)}$$

$$\text{If Class} = 0 \text{ but } \mathbf{w}\cdot\mathbf{x} \ge \theta:\quad w_i \leftarrow \beta\, w_i \ \ (\text{if } x_i = 1) \quad \text{(demotion)}$$

Usually, α = 2 and β = 0.5.

- Pros: online feature selection [Yang et al. 00]
- Cons: needs a more powerful feature representation scheme
- Has also been applied to object recognition [Yang et al. 02]
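
The promotion/demotion rule translates directly into code for a single target node with binary (active/inactive) features. In SNoW each target node, such as face or non-face, keeps its own weight vector. The function below is an illustrative sketch of one such node, with α = 2 and β = 0.5 as defaults:

```python
import numpy as np

def winnow_update(w, x, label, theta, alpha=2.0, beta=0.5):
    """One mistake-driven Winnow step; x is a 0/1 feature vector, label is 0 or 1."""
    w = np.asarray(w, dtype=float).copy()
    x = np.asarray(x)
    predicted = int(w @ x >= theta)      # prediction is 1 iff w . x >= theta
    active = x == 1                      # only active features are updated
    if label == 1 and predicted == 0:
        w[active] *= alpha               # promotion
    elif label == 0 and predicted == 1:
        w[active] *= beta                # demotion
    return w
```

Because weights change only on mistakes and only for active features, the cost of an update depends on the number of active features rather than on the total feature count.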

![Probabilistic Modeling of Local Appearance [Schneiderman and Kanade 98]](https://image.slidesharecdn.com/facedetection-240326044147-e65a764e/85/Face-detection-and-expression-using-OpenCV-using-python-54-320.jpg)

- Use local appearance
- Learn the distribution by parts using a naïve Bayes classifier
- Apply the Bayesian decision rule
- Further decompose the appearance into space, frequency, and orientation
- Learn the joint distribution of object and position
- Also uses a wavelet representation

$$p(\text{region} \mid \text{object}) \approx \prod_{k=1}^{n} p(\text{subregion}_k \mid \text{object})$$

$$\frac{p(\text{region} \mid \text{object})}{p(\text{region} \mid \overline{\text{object}})} > \frac{P(\overline{\text{object}})}{P(\text{object}})$$

Figure: p(face) written as a product of subregion terms p(subregion | face), or, including position and scale, as a product of terms p(subregion, x, y, s | face).
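
The decision rule can be sketched as a sum of per-subregion log-likelihood ratios. This sketch assumes each subregion has already been quantized to an integer pattern code, which is a hypothetical preprocessing step standing in for the paper's wavelet-based quantization. It also keeps one lookup table per subregion position, modelling p(pattern | position, class) rather than the full joint distribution over x, y, and s:

```python
import numpy as np

def learn_tables(codes, n_patterns, smoothing=1.0):
    """Log p(pattern | position, class) from training windows of one class.

    codes: int array (n_windows, n_positions); codes[w, k] is the quantized
    pattern at subregion position k of window w.
    """
    codes = np.asarray(codes)
    n_positions = codes.shape[1]
    counts = np.full((n_positions, n_patterns), smoothing)  # add-one smoothing
    for k in range(n_positions):
        counts[k] += np.bincount(codes[:, k], minlength=n_patterns)
    return np.log(counts / counts.sum(axis=1, keepdims=True))

def is_face(code_row, log_face, log_nonface, prior_face=0.5):
    """Naive Bayes rule: sum of log-likelihood ratios vs. log P(non-face)/P(face)."""
    k = np.arange(len(code_row))
    llr = np.sum(log_face[k, code_row] - log_nonface[k, code_row])
    return bool(llr > np.log((1 - prior_face) / prior_face))
```

Working in log space turns the product over subregions into a sum, avoiding numerical underflow when n is large.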

![Detecting faces in Different Pose](https://image.slidesharecdn.com/facedetection-240326044147-e65a764e/85/Face-detection-and-expression-using-OpenCV-using-python-55-320.jpg)

- Extend to detect faces in different poses with multiple detectors
- Each detector specializes in one view: frontal, left pose, and right pose
- [Mikolajczyk et al. 01] extend this to detect faces from side pose to frontal view

Figure: [Schneiderman and Kanade 98]

![Experimental Results [Schneiderman and Kanade 98]](https://image.slidesharecdn.com/facedetection-240326044147-e65a764e/85/Face-detection-and-expression-using-OpenCV-using-python-56-320.jpg)

- Able to detect profile faces [Schneiderman and Kanade 98]
- Extended to detect cars [Schneiderman and Kanade 00]

![Mixture of Factor Analyzers [Yang et al. 00]](https://image.slidesharecdn.com/facedetection-240326044147-e65a764e/85/Face-detection-and-expression-using-OpenCV-using-python-57-320.jpg)

- Generative method that performs clustering and dimensionality reduction within each cluster
- Similar to probabilistic PCA but has more merits
  - proper density model
  - robust to noise
- Use a mixture model to detect faces in different poses
- Use EM to estimate all the parameters of the mixture model
- See also [Moghaddam and Pentland 97] on using a probabilistic Gaussian mixture for object localization

Factor analyzer with hidden factor z and observation x, where u ~ N(0, Ψ) is the noise term:

$$\mathbf{x} = \Lambda\mathbf{z} + \mathbf{u}, \qquad p(\mathbf{x} \mid \mathbf{z}) = \mathcal{N}(\Lambda\mathbf{z}, \Psi)$$

Mixture model with components ωⱼ:

$$p(\mathbf{x} \mid \mathbf{z}, \omega_j) = \mathcal{N}(\boldsymbol{\mu}_j + \Lambda_j\mathbf{z}, \Psi)$$

Figure panels: factor faces for the frontal view; factor faces for the 45° view.

![Fisher Linear Discriminant [Yang et al. 00]](https://image.slidesharecdn.com/facedetection-240326044147-e65a764e/85/Face-detection-and-expression-using-OpenCV-using-python-58-320.jpg)

- Fisherface (FLD) has demonstrated good results in face recognition
- Apply a Self-Organizing Map (SOM) to cluster faces/non-faces, thereby obtaining labels for the samples
- Apply FLD to find the optimal projection matrix for maximal separation
- Estimate the class-conditional density for detection

Figure: pipeline from a set of unlabeled face and non-face samples, through SOM (face/non-face prototypes) and FLD, to a class-conditional density estimated by maximum likelihood.
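
In the two-class case, FLD has a closed form: w ∝ S_w⁻¹(μ_face − μ_nonface), where S_w is the within-class scatter matrix. The method on the slide works with the multiple classes produced by the SOM, so this is only the simplest instance. The sketch then fits a 1-D Gaussian per class to the projections by maximum likelihood and assumes equal priors; the helper names are ours:

```python
import numpy as np

def fisher_direction(X_face, X_nonface, reg=1e-6):
    """Unit vector proportional to S_w^{-1} (mu_face - mu_nonface)."""
    X1 = np.asarray(X_face, dtype=float)
    X0 = np.asarray(X_nonface, dtype=float)
    mu1, mu0 = X1.mean(axis=0), X0.mean(axis=0)
    # Within-class scatter, lightly regularized so it stays invertible.
    Sw = (X1 - mu1).T @ (X1 - mu1) + (X0 - mu0).T @ (X0 - mu0)
    Sw += reg * np.eye(Sw.shape[0])
    w = np.linalg.solve(Sw, mu1 - mu0)
    return w / np.linalg.norm(w)

def fit_projected_gaussian(X, w):
    """Maximum-likelihood mean and variance of the projections X @ w."""
    z = np.asarray(X, dtype=float) @ w
    return z.mean(), z.var()

def log_density(z, mean, var):
    """Log of a 1-D Gaussian class-conditional density."""
    return -0.5 * (np.log(2 * np.pi * var) + (z - mean) ** 2 / var)

def classify(X, w, face_params, nonface_params):
    """1 where the face density is higher than the non-face density, else 0."""
    z = np.asarray(X, dtype=float) @ w
    return (log_density(z, *face_params) > log_density(z, *nonface_params)).astype(int)
```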

![Adaboost [Freund and Schapire 95]](https://image.slidesharecdn.com/facedetection-240326044147-e65a764e/85/Face-detection-and-expression-using-OpenCV-using-python-59-320.jpg)

- Use a set of weak classifiers (εₜ < 0.5) and weighting on difficult examples for learning (sampling is based on the weights)
- Given (x₁, y₁), …, (xₘ, yₘ), where xᵢ ∈ X and yᵢ ∈ Y = {−1, +1}, initialize D₁(i) = 1/m
- For t = 1, …, T, train a weak classifier using distribution Dₜ:
  1. Get a weak hypothesis hₜ: X → {−1, +1} with error εₜ = Pr_{i∼Dₜ}[hₜ(xᵢ) ≠ yᵢ]
  2. Compute the importance of hₜ: αₜ = ½ ln((1 − εₜ)/εₜ)
  3. Update the distribution, where Zₜ is a normalization factor:

$$D_{t+1}(i) = \frac{D_t(i)}{Z_t} \times \begin{cases} e^{-\alpha_t} & \text{if } h_t(x_i) = y_i \ \text{(correctly classified)} \\ e^{\alpha_t} & \text{if } h_t(x_i) \neq y_i \ \text{(incorrectly classified)} \end{cases}$$

Aggregating the classifiers:

$$H(x) = \operatorname{sign}\Big(\sum_{t=1}^{T} \alpha_t h_t(x)\Big)$$

- Performs well and tends not to overfit in empirical studies
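
The algorithm above fits in a few lines with decision stumps as weak learners. Two details are our choices rather than the slide's. First, the weak learner is trained directly on the weights Dₜ instead of on a weighted resample. Second, ε is clipped so that a perfect stump does not give an infinite αₜ:

```python
import numpy as np

def stump_predict(X, j, thr, polarity):
    """Weak hypothesis in {-1, +1}: +1 when polarity * (x_j - thr) >= 0."""
    return np.where(polarity * (X[:, j] - thr) >= 0, 1, -1)

def train_stump(X, y, D):
    """Exhaustively choose the stump with the lowest weighted error under D."""
    best = (np.inf, 0, 0.0, 1)
    for j in range(X.shape[1]):
        for thr in np.unique(X[:, j]):
            for polarity in (1, -1):
                err = D[stump_predict(X, j, thr, polarity) != y].sum()
                if err < best[0]:
                    best = (err, j, thr, polarity)
    return best

def adaboost(X, y, T):
    """Discrete AdaBoost with labels in {-1, +1}; returns [(alpha_t, stump_t), ...]."""
    X, y = np.asarray(X, dtype=float), np.asarray(y)
    D = np.full(len(y), 1.0 / len(y))            # D_1(i) = 1/m
    ensemble = []
    for _ in range(T):
        err, j, thr, pol = train_stump(X, y, D)
        err = min(max(err, 1e-10), 1 - 1e-10)
        alpha = 0.5 * np.log((1 - err) / err)    # importance of h_t
        h = stump_predict(X, j, thr, pol)
        D = D * np.exp(-alpha * y * h)           # e^-alpha if correct, e^+alpha if wrong
        D = D / D.sum()                          # normalize by Z_t
        ensemble.append((alpha, (j, thr, pol)))
    return ensemble

def adaboost_predict(ensemble, X):
    """H(x) = sign(sum_t alpha_t h_t(x))."""
    X = np.asarray(X, dtype=float)
    return np.sign(sum(a * stump_predict(X, *stump) for a, stump in ensemble))
```

The update `y * h` is +1 for correctly classified samples and −1 otherwise, so a single exponential covers both cases of the Dₜ₊₁ rule.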

![Adaboost-Based Detector [Viola and Jones 01]](https://image.slidesharecdn.com/facedetection-240326044147-e65a764e/85/Face-detection-and-expression-using-OpenCV-using-python-60-320.jpg)

- Main idea:
  - Feature selection: select important features
  - Focus of attention: focus on potential regions
  - Use an integral image for fast feature evaluation
- Use Adaboost to learn
  - A set of important features (feature selection)
    - sort them in order of importance
    - each feature can be used as a simple (weak) classifier
  - A cascade of classifiers that
    - combine all the weak classifiers to do a difficult task
    - filter out the regions that most likely do not contain faces
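
The integral image is what makes feature evaluation fast. Each entry holds the sum of all pixels above and to the left, so any rectangle sum needs only four lookups regardless of the rectangle's size. In this sketch, a zero row and column are padded on so that rectangles touching the border need no special cases:

```python
import numpy as np

def integral_image(img):
    """ii[r, c] = sum of img[:r, :c]; one extra zero row/column at the top-left."""
    return np.pad(np.cumsum(np.cumsum(img, axis=0), axis=1), ((1, 0), (1, 0)))

def rect_sum(ii, top, left, height, width):
    """Sum of img[top:top+height, left:left+width] using four array references."""
    bottom, right = top + height, left + width
    return ii[bottom, right] - ii[top, right] - ii[bottom, left] + ii[top, left]
```

Computing `ii` costs one pass over the image. After that, the number of operations per rectangle is constant, which is what allows many features to be evaluated at every position and scale.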

![Feature Selection [Viola and Jones 01]](https://image.slidesharecdn.com/facedetection-240326044147-e65a764e/85/Face-detection-and-expression-using-OpenCV-using-python-61-320.jpg)

- Training: if x is a face, then x
  - most likely has feature 1 (the easiest feature, and of greatest importance)
  - is very likely to have feature 2 (an easy feature)
  - …
  - is likely to have feature n (a more complex feature, and of less importance since it does not exist in all the faces in the training set)
- Testing: given a test sub-image x′
  - if x′ has feature 1, test whether x′ has feature 2, and so on up to feature n; x′ is a face only if it passes every test
  - as soon as a test fails, x′ is not a face
- Similar to a decision tree

Figure: one simple implementation, a chain of yes/no tests on feature 1, feature 2, …, feature n. Any "no" labels x′ a non-face; passing all tests labels x′ a face.

![Boxlet As Weak Classifier [Viola & Jones 01]](https://image.slidesharecdn.com/facedetection-240326044147-e65a764e/85/Face-detection-and-expression-using-OpenCV-using-python-62-320.jpg)

- Boxlet: compute the difference between the sums of pixels within two rectangular regions
  - Compute boxlets all over a pattern
  - Haar-like wavelets
  - Over-complete representation: lots of boxlet features
- For each boxlet j, compute fⱼ(x), where x is a positive or negative example
- Each feature is used as a weak classifier
  - Set the threshold θⱼ so that most samples are classified correctly: hⱼ(x, f, p, θ) = 1 if fⱼ(x) < θⱼ (or fⱼ(x) > θⱼ)
- Sequentially select the boxlets

Figure: 2-rectangle, 3-rectangle, and 4-rectangle boxlets; feature values for face and non-face samples.
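
Below is a sketch of two boxlet types evaluated through an integral image. The horizontal 2-rectangle boxlet subtracts the right half's sum from the left half's. The 3-rectangle boxlet subtracts the two outer rectangles' sums from the centre rectangle's sum. The (top, left, h, w) parameterization, with each sub-rectangle of size h × w, is our own convention:

```python
import numpy as np

def _rect_sum(ii, top, left, h, w):
    """Rectangle sum from a zero-padded integral image (four lookups)."""
    return ii[top + h, left + w] - ii[top, left + w] - ii[top + h, left] + ii[top, left]

def _integral(window):
    return np.pad(np.cumsum(np.cumsum(window, axis=0), axis=1), ((1, 0), (1, 0)))

def two_rect_feature(window, top, left, h, w):
    """Horizontal 2-rectangle boxlet: sum(left h x w block) - sum(right h x w block)."""
    ii = _integral(window)
    return _rect_sum(ii, top, left, h, w) - _rect_sum(ii, top, left + w, h, w)

def three_rect_feature(window, top, left, h, w):
    """Horizontal 3-rectangle boxlet: centre block minus the two outer blocks."""
    ii = _integral(window)
    outer = _rect_sum(ii, top, left, h, w) + _rect_sum(ii, top, left + 2 * w, h, w)
    return _rect_sum(ii, top, left + w, h, w) - outer
```

In a real detector, the integral image would be computed once per image, not once per feature as in this self-contained sketch.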

![Selecting Features Using Adaboost [Viola and Jones 01]](https://image.slidesharecdn.com/facedetection-240326044147-e65a764e/85/Face-detection-and-expression-using-OpenCV-using-python-63-320.jpg)

Weak classifier built from a single feature j, where pⱼ is a parity bit and θⱼ is a threshold (labels yᵢ ∈ {0, 1}):

$$h_j(x) = \begin{cases} 1 & \text{if } p_j f_j(x) < p_j \theta_j \\ 0 & \text{otherwise} \end{cases}$$

- For t = 1, …, T:
  - Construct a weak classifier hₜ using one single feature
  - For each feature j, train a classifier hⱼ; its error is evaluated with respect to the weights wₜ: εⱼ = Σᵢ wᵢ |hⱼ(xᵢ) − yᵢ|
  - Choose the classifier hₜ with the minimum error εₜ
  - Update the weights: wₜ₊₁,ᵢ = wₜ,ᵢ βₜ^(1−eᵢ), where eᵢ = 0 if xᵢ is classified correctly, eᵢ = 1 otherwise, and βₜ = εₜ/(1 − εₜ)

Final classifier:

$$h(x) = \begin{cases} 1 & \text{if } \sum_{t=1}^{T} \alpha_t h_t(x) \ge \frac{1}{2}\sum_{t=1}^{T} \alpha_t \\ 0 & \text{otherwise} \end{cases}, \qquad \alpha_t = \log\frac{1}{\beta_t}$$

Figure: the top two boxlets selected by Adaboost.
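
One round of this procedure, restricted to a single feature, is sketched below. The function picks the threshold θ and parity p minimizing Σᵢ wᵢ|h(xᵢ) − yᵢ|, then applies the βₜ weight update. The candidate thresholds (midpoints between sorted feature values, plus one value beyond each end) are our choice, and the sketch assumes 0 < εₜ < 1 in `update_weights`:

```python
import numpy as np

def best_threshold(f, y, w):
    """(theta, parity, error) minimizing sum_i w_i |h(x_i) - y_i| for one feature.

    f: feature values f_j(x_i); y: labels in {0, 1}; w: normalized weights.
    h(x) = 1 if parity * f(x) < parity * theta, else 0.
    """
    f, y, w = (np.asarray(a, dtype=float) for a in (f, y, w))
    vals = np.unique(f)
    candidates = np.concatenate(([vals[0] - 1], (vals[:-1] + vals[1:]) / 2, [vals[-1] + 1]))
    best = (None, 1, np.inf)
    for theta in candidates:
        for parity in (1, -1):
            h = (parity * f < parity * theta).astype(float)
            err = np.sum(w * np.abs(h - y))
            if err < best[2]:
                best = (theta, parity, err)
    return best

def update_weights(w, correct, err):
    """w_{t+1,i} = w_{t,i} * beta_t^(1 - e_i), renormalized; also returns alpha_t = log(1/beta_t)."""
    beta = err / (1.0 - err)
    w = np.asarray(w, dtype=float) * np.where(correct, beta, 1.0)  # e_i = 0 -> multiply by beta
    return w / w.sum(), np.log(1.0 / beta)
```

Because correctly classified samples are multiplied by βₜ < 1, the next round concentrates on the samples the chosen feature got wrong.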

![Attentional Cascade [Viola and Jones 01]](https://image.slidesharecdn.com/facedetection-240326044147-e65a764e/85/Face-detection-and-expression-using-OpenCV-using-python-64-320.jpg)

- Within an image, most sub-images are non-face instances
- Use smaller, efficient classifiers to reject many negative examples at an early stage while detecting almost all the positive instances
- Simpler classifiers are used to reject the majority of sub-windows
- More complex classifiers are used at later stages to examine difficult cases
- Learn the cascade classifier using Adaboost, i.e., learn an ensemble of weak classifiers

Figure: early-stage classifiers deal with easy instances, while deeper classifiers face more difficult cases.
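
Evaluating a cascade amounts to an early-exit loop over stages. In this sketch, each stage is a (score function, threshold) pair, for example a boosted sum of weak classifiers and its stage threshold. That representation is an assumption of ours, chosen to keep the code independent of any particular feature set:

```python
def cascade_classify(window, stages):
    """Run an attentional cascade on one sub-window.

    stages: (score_fn, threshold) pairs ordered from cheapest to most complex.
    Returns (is_face, number_of_stages_evaluated).
    """
    for depth, (score_fn, threshold) in enumerate(stages, start=1):
        if score_fn(window) < threshold:
            return False, depth          # rejected: later stages are never evaluated
    return True, len(stages)
```

The returned depth shows why cascades are fast. Most non-face windows exit after one or two cheap stages, so the expensive stages run on only a small fraction of sub-windows.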

![Training Attentional Cascade](https://image.slidesharecdn.com/facedetection-240326044147-e65a764e/85/Face-detection-and-expression-using-OpenCV-using-python-65-320.jpg)

- Similar to a decision tree
- Design parameters:
  - Number of cascade stages
  - Number of features in each stage
  - Threshold of each stage
- Example: a 32-stage cascade classifier
  - 2-feature classifier in the first stage, rejecting 60% of non-faces while detecting 100% of faces
  - 5-feature classifier in the second stage, rejecting 80% of non-faces while detecting 100% of faces
  - 20-feature classifiers in stages 3, 4, and 5
  - 50-feature classifiers in stages 6 and 7
  - 100-feature classifiers in stages 8 to 12
  - 200-feature classifiers in stages 13 to 32

[Viola and Jones 01]
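
The benefit of this design can be checked with simple arithmetic. The sketch assumes each stage's rates are measured on the windows that reach it, so the overall detection and false positive rates are the products of the per-stage rates. For the first two stages of the example, 40% of non-faces pass stage 1 and 20% of those pass stage 2, giving 0.4 × 0.2 = 8% overall. The average cost for a non-face window is 2 + 0.4 × 5 = 4 feature evaluations:

```python
from math import prod

def cascade_rates(stages):
    """Overall (detection rate, false positive rate); stages are (d_i, f_i) pass fractions."""
    return prod(d for d, _ in stages), prod(f for _, f in stages)

def expected_features(stages, features_per_stage):
    """Average number of features evaluated on a non-face window."""
    expected, reach = 0.0, 1.0
    for (_, f), n in zip(stages, features_per_stage):
        expected += reach * n   # every non-face window reaching this stage pays n features
        reach *= f              # only a fraction f of those continues
    return expected

first_two = [(1.0, 0.4), (1.0, 0.2)]  # (faces kept, non-faces kept) per stage
print(cascade_rates(first_two), expected_features(first_two, [2, 5]))
```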

![Variations and Implementations](https://image.slidesharecdn.com/facedetection-240326044147-e65a764e/85/Face-detection-and-expression-using-OpenCV-using-python-66-320.jpg)

- Extended to handle multiple poses [Li et al. 02] [Viola and Jones 03]
- Extended to handle multiple poses and in-plane rotation [Wu et al. 04]
- Kullback-Leibler Adaboost [Liu and Shum 03]
- Extended to detect pedestrians [Viola et al. 03]
- Handles occlusions [Lin et al. ECCV 04]
- Implemented in the Intel OpenCV library

![Adaboost-Based Detector: Summary](https://image.slidesharecdn.com/facedetection-240326044147-e65a764e/85/Face-detection-and-expression-using-OpenCV-using-python-67-320.jpg)

- Three main components [Viola and Jones 01]:
  - Integral image: efficient convolution with rectangular (box) features
  - Use Adaboost for feature selection
  - Use Adaboost to learn the cascade classifier
- Pros:
  - Fast and fairly robust; runs in real time
- Cons:
  - Very time-consuming in the training stage (training may take days)
  - Requires lots of engineering work
- Another greedy method: Anti-face [Keren 00]
- See also [Amit et al. 97] for an efficient hierarchical (focus-of-attention) feature-based method

![Skin and Non-Skin Color Model](https://image.slidesharecdn.com/facedetection-240326044147-e65a764e/85/Face-detection-and-expression-using-OpenCV-using-python-71-320.jpg)

- Analyze 1 billion labeled pixels
- Skin and non-skin models, where s[rgb] and n[rgb] are the pixel counts in color bin rgb of the skin and non-skin histograms, and Tₛ and Tₙ are their total counts:

$$P(\text{rgb} \mid \text{skin}) = \frac{s[\text{rgb}]}{T_s}, \qquad P(\text{rgb} \mid \neg\text{skin}) = \frac{n[\text{rgb}]}{T_n}$$

- A significant degree of separation between the skin and non-skin models
- Achieves an 80% detection rate with 8.5% false positives
- The histogram method outperforms a Gaussian mixture model

[Jones and Rehg 99]
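
The histogram model and a likelihood-ratio decision can be sketched in NumPy. The bin resolution (32 bins per channel) and the decision threshold `theta` are our own assumptions, not values quoted on the slide. Empty skin bins are treated as non-skin so that unseen colors are not accepted by default:

```python
import numpy as np

def rgb_bins(pixels, bins_per_channel=32):
    """Map uint8 RGB pixels (N x 3) to a single histogram bin index each."""
    q = (np.asarray(pixels, dtype=np.int64) * bins_per_channel) // 256
    return (q[:, 0] * bins_per_channel + q[:, 1]) * bins_per_channel + q[:, 2]

def train_skin_model(skin_pixels, nonskin_pixels, bins_per_channel=32):
    """P(rgb | skin) = s[rgb] / T_s and P(rgb | non-skin) = n[rgb] / T_n."""
    n_bins = bins_per_channel ** 3
    s = np.bincount(rgb_bins(skin_pixels, bins_per_channel), minlength=n_bins)
    n = np.bincount(rgb_bins(nonskin_pixels, bins_per_channel), minlength=n_bins)
    return s / s.sum(), n / n.sum()

def classify_skin(pixels, p_skin, p_nonskin, theta=1.0, bins_per_channel=32):
    """Skin where P(rgb|skin) / P(rgb|non-skin) >= theta (written without division)."""
    idx = rgb_bins(pixels, bins_per_channel)
    return (p_skin[idx] > 0) & (p_skin[idx] >= theta * p_nonskin[idx])
```

Raising `theta` trades detection rate for fewer false positives. The skin mask still has to be grouped into face candidates afterwards.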

![Experimental Results [Jones and Rehg 02]](https://image.slidesharecdn.com/facedetection-240326044147-e65a764e/85/Face-detection-and-expression-using-OpenCV-using-python-72-320.jpg)

- Does a decent job
- May have lots of false positives in the raw results
- Needs further processing to eliminate false positives and to group skin-color pixels for face detection
- See also [Hsu et al 02]

Figure: [Jones and Rehg 99]

![Face Detection in Video: [Mikolajczyk et al. 01]](https://image.slidesharecdn.com/facedetection-240326044147-e65a764e/85/Face-detection-and-expression-using-OpenCV-using-python-76-320.jpg)

- Use two probabilistic detectors, one frontal Pf(I, x, y, s) and one profile Pp(I, x, y, s), based on [Schneiderman and Kanade 98]
- Predict the state (xₜ, yₜ, sₜ, θₜ) based on the observation zₜ = (Pf, Pp), where xₜ, yₜ are the position, sₜ the scale, and θₜ the pose angle
- Use the Condensation algorithm [Isard and Blake 96] to propagate the probability of detection and the parameters over time

Pf: frontal view; Pp: profile view.
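
The Condensation step can be sketched as a basic particle filter over (x, y, scale, angle). Each step resamples particles by weight, diffuses them with Gaussian noise, and reweights them with an observation likelihood. Here `observe` is a hypothetical callback standing in for a likelihood derived from the frontal and profile detector outputs, and the random-walk motion model with `motion_std` values is our assumption:

```python
import numpy as np

def condensation_step(particles, weights, observe, rng, motion_std=(2.0, 2.0, 0.05, 5.0)):
    """One Condensation update.

    particles: (N, 4) states (x, y, scale, angle); weights: (N,) summing to 1.
    observe(states) -> (N,) non-negative likelihoods of the current frame.
    """
    n = len(particles)
    idx = rng.choice(n, size=n, p=weights)                         # 1. resample by weight
    noise = rng.normal(0.0, motion_std, size=particles.shape)      # 2. random-walk prediction
    predicted = particles[idx] + noise
    likelihood = np.asarray(observe(predicted), dtype=float)      # 3. measure
    return predicted, likelihood / likelihood.sum()

def estimate(particles, weights):
    """Weighted-mean state estimate (x, y, scale, angle)."""
    return weights @ particles
```

Over a few frames, the particle cloud drifts toward states the detectors score highly, while the spread of the particles expresses the remaining uncertainty in position, scale, and pose.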

![CMU Test Set I: Upright Frontal Faces](https://image.slidesharecdn.com/facedetection-240326044147-e65a764e/85/Face-detection-and-expression-using-OpenCV-using-python-82-320.jpg)

- 130 images with 507 frontal faces
- Collected by K.-K. Sung and H. Rowley
- Includes 23 images used in [Sung and Poggio 94]
- Some images have low resolution
- De facto benchmark set

![Detector, Tracker, and Recognizer](https://image.slidesharecdn.com/facedetection-240326044147-e65a764e/85/Face-detection-and-expression-using-OpenCV-using-python-88-320.jpg)

![Research Issues](https://image.slidesharecdn.com/facedetection-240326044147-e65a764e/85/Face-detection-and-expression-using-OpenCV-using-python-89-320.jpg)