Facial expression recognition based on local binary patterns

- 1. Supervised by Prof. Dr. Aliaa Youssif. Paper by Caifeng Shan, Shaogang Gong and Peter W. McOwan, published in a journal by Elsevier (www.elsevier.com). Presented by: Mohamed Sherif Ahmed Reda Elshami Moataz Ahmed

- 2. Introduction: • What is facial expression recognition? • Types of feature extraction. • Facial expression recognition based on Local Binary Patterns (LBP). • Facial expression data. • Feature extraction using LBP. • Machine learning ◦ What is machine learning? ◦ Machine learning methods we use (brief) ◦ SVM (Support Vector Machine)

- 3. • Low-resolution facial expression recognition • Boosting LBP for facial expression recognition • Tables of results • Paper implementation • Conclusion • Future work • References

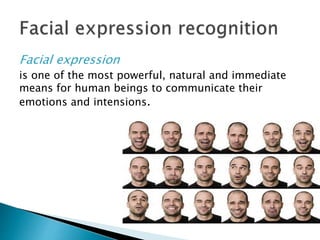

- 4. Facial expression is one of the most powerful, natural and immediate means for human beings to communicate their emotions and intentions.

- 5. A facial recognition system is a computer application for automatically identifying or verifying a person from a digital image or a video frame. One way to do this is to compare selected facial features from the image with a facial database.

- 6. Facial expression recognition is a process performed by humans or computers that consists of three steps: 1. Locating faces in the scene (face detection). 2. Extracting facial features from the detected face region (facial feature extraction). 3. Analyzing the motion of facial features and/or the changes in their appearance, and classifying this information into facial-expression-interpretative categories (facial expression interpretation), such as facial muscle activations like a smile or frown, emotion (affect) categories like happiness or anger, or attitude categories like (dis)liking or ambivalence.

- 7. History: Facial expression analysis has attracted much attention from behavioural scientists since the work of C. Darwin in 1872 (The Expression of the Emotions in Man and Animals). M. Suwa made the first attempt to automatically analyze facial expressions from image sequences in 1978 (A preliminary note on pattern recognition of human emotional expression). Much progress has been made in the last decade.

- 8. Photographs from the 1862 book Mécanisme de la Physionomie Humaine by Guillaume Duchenne. Through electrical stimulation, Duchenne determined which muscles were responsible for different facial expressions. Charles Darwin later republished some of these photographs in his own work on the subject, which compared facial expressions in humans with those in animals.

- 9. Challenges: Although much progress has been made, recognizing facial expressions with high accuracy remains difficult due to the subtlety, complexity and variability of facial expressions. Low-resolution images in real-world environments make real-life expression recognition much more difficult, and computation time and memory cost must also be considered.

- 10. Progress: Y. Yacoob, L.S. Davis, Recognizing human facial expression from long image sequences using optical flow, IEEE Transactions on Pattern Analysis and Machine Intelligence 18 (6) (1996) 636–642. I. Essa, A. Pentland, Coding, analysis, interpretation, and recognition of facial expressions, IEEE Transactions on Pattern Analysis and Machine Intelligence 19 (7) (1997) 757–763. M.J. Lyons, J. Budynek, S. Akamatsu, Automatic classification of single facial images, IEEE Transactions on Pattern Analysis and Machine Intelligence 21 (12) (1999) 1357–1362. G. Donato, M. Bartlett, J. Hager, P. Ekman, T. Sejnowski, Classifying facial actions, IEEE Transactions on Pattern Analysis and Machine Intelligence 21 (10) (1999) 974–989. M. Pantic, L. Rothkrantz, Expert system for automatic analysis of facial expression, Image and Vision Computing 18 (11) (2000) 881–905. Y. Tian, T. Kanade, J. Cohn, Recognizing action units for facial expression analysis, IEEE Transactions on Pattern Analysis and Machine Intelligence 23 (2) (2001) 97–115.

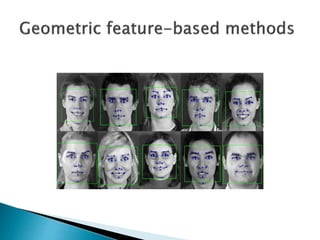

- 11. There are two common approaches to extracting facial features: geometric feature-based methods and appearance-based methods.

- 12. Geometric features represent the shape and locations of facial components, which are extracted to form a feature vector that represents the face geometry. In image sequences, facial movements can be quantified by measuring the geometrical displacement of facial feature points between the current frame and the initial frame.

- 15. With appearance-based methods, holistic spatial analysis techniques such as Principal Component Analysis (PCA), Linear Discriminant Analysis (LDA), Independent Component Analysis (ICA) and Gabor wavelets are applied to either the whole face or specific face regions to extract the appearance changes of the face.

- 16. Because of their superior performance, most work on appearance-based methods has focused on Gabor-wavelet representations. However, computing Gabor-wavelet representations is both time- and memory-intensive.

- 17. Gabor-wavelet: In image processing, a Gabor filter is a linear filter used for edge detection. The Gabor wavelet representation allows description of spatial frequency structure in the image while preserving information about spatial relations.
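To make the time and memory argument concrete, here is a minimal NumPy sketch of the real part of a Gabor kernel (Gaussian envelope times a cosine carrier) and a bank of 5 scales by 8 orientations, a bank size often used in the facial expression literature. The wavelength schedule, the `sigma = 0.56 * lambd` bandwidth rule, the 31 x 31 kernel size and the function names are hypothetical choices for illustration. Filtering uses FFT-based circular convolution, which is enough to count coefficients but wraps at the borders and offsets responses by half the kernel size.

```python
import numpy as np

def gabor_kernel(size, sigma, theta, lambd, gamma=0.5, psi=0.0):
    """Real part of a 2-D Gabor kernel: a Gaussian envelope times a cosine carrier."""
    half = size // 2
    y, x = np.mgrid[-half:half + 1, -half:half + 1].astype(float)
    # Rotate the coordinate frame so the carrier oscillates along orientation theta.
    x_rot = x * np.cos(theta) + y * np.sin(theta)
    y_rot = -x * np.sin(theta) + y * np.cos(theta)
    envelope = np.exp(-(x_rot ** 2 + (gamma * y_rot) ** 2) / (2 * sigma ** 2))
    carrier = np.cos(2 * np.pi * x_rot / lambd + psi)
    return envelope * carrier

def gabor_bank(size=31, n_scales=5, n_orientations=8):
    """Hypothetical bank: wavelength grows by sqrt(2) per scale, orientations evenly cover [0, pi)."""
    bank = []
    for s in range(n_scales):
        lambd = 4.0 * 2 ** (s / 2)   # hypothetical wavelength schedule, in pixels
        sigma = 0.56 * lambd         # roughly one-octave bandwidth (assumption)
        for o in range(n_orientations):
            bank.append(gabor_kernel(size, sigma, o * np.pi / n_orientations, lambd))
    return bank

def gabor_magnitudes(image, bank):
    """Magnitude response of every kernel, via FFT (circular convolution, borders wrap)."""
    image = np.asarray(image, dtype=float)
    H, W = image.shape
    spectrum = np.fft.fft2(image)
    # fft2(k, s=(H, W)) zero-pads each kernel to the image size before transforming.
    responses = [np.abs(np.fft.ifft2(spectrum * np.fft.fft2(k, s=(H, W)))) for k in bank]
    return np.stack(responses)  # shape: (len(bank), H, W)
```

For a 110 x 150 face image (150 rows by 110 columns), this bank yields 40 x 16,500 = 660,000 coefficients before any downsampling, and every one of them needs a full convolution to compute.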

- 18. Recently, M. Valstar et al. demonstrated that geometric feature-based methods provide similar or better performance than appearance-based approaches in Action Unit recognition. However, geometric feature-based methods usually require accurate and reliable facial feature detection and tracking.

- 19. We extract facial features using an appearance-based method. In this work, we empirically study facial representation based on Local Binary Pattern (LBP) features for person-independent facial expression recognition.

- 20. Local Binary Pattern (LBP) is a simple yet very efficient texture operator that labels the pixels of an image by thresholding the neighborhood of each pixel and considering the result as a binary number. It is valued for its discriminative power and computational simplicity.

- 21. LBP features were originally proposed for texture analysis and have recently been introduced to represent faces in facial image analysis. The most important properties of LBP features are their tolerance to illumination changes and their computational simplicity.

- 22. Different machine learning methods, including template matching, Support Vector Machines (SVM), Linear Discriminant Analysis (LDA) and the linear programming technique, are examined to perform facial expression recognition using LBP features.

- 23. Compared to Gabor wavelets, LBP features can be derived very fast in a single scan through the raw image and lie in a low-dimensional feature space, while still retaining discriminative facial information in a compact representation. By contrast, convolving face images with a bank of Gabor filters to extract multi-scale and multi-orientation coefficients is both time- and memory-intensive.

- 24. The generalization ability of LBP features across different databases is evaluated. Low-resolution images in real-world environments make real-life expression recognition much more difficult, so in this work LBP features for low-resolution facial expression recognition are investigated. Experiments on different image resolutions show that LBP features perform stably and robustly over a useful range of low resolutions of face images.

- 25. A widely used description is the Facial Action Coding System (FACS), a human-observer-based system developed to capture subtle changes in facial expressions. With FACS, facial expressions are decomposed into one or more Action Units (AUs).

- 26. Emotions and their Action Units:

| Emotion | Action Units |
|---|---|
| Happiness | 6+12 |
| Sadness | 1+4+15 |
| Surprise | 1+2+5B+26 |
| Fear | 1+2+4+5+7+20+26 |
| Anger | 4+5+7+23 |
| Disgust | 9+15+16 |

- 27. FACS Action Units and their muscular basis:

| AU number | FACS name | Muscular basis |
|---|---|---|
| 0 | Neutral face | |
| 1 | Inner Brow Raiser | frontalis (pars medialis) |
| 2 | Outer Brow Raiser | frontalis (pars lateralis) |
| 4 | Brow Lowerer | depressor glabellae, depressor supercilii, corrugator supercilii |
| 5 | Upper Lid Raiser | levator palpebrae superioris, superior tarsal muscle |
| 6 | Cheek Raiser | orbicularis oculi (pars orbitalis) |
| 7 | Lid Tightener | orbicularis oculi (pars palpebralis) |
| 8 | Lips Toward Each Other | orbicularis oris |
| 9 | Nose Wrinkler | levator labii superioris alaeque nasi |
| 10 | Upper Lip Raiser | levator labii superioris, caput infraorbitalis |
| 11 | Nasolabial Deepener | zygomaticus minor |
| 12 | Lip Corner Puller | zygomaticus major |
| 13 | Sharp Lip Puller | levator anguli oris (also known as caninus) |
| 14 | Dimpler | buccinator |
| 15 | Lip Corner Depressor | depressor anguli oris (also known as triangularis) |
| 16 | Lower Lip Depressor | depressor labii inferioris |
| 17 | Chin Raiser | mentalis |
| 18 | Lip Pucker | incisivii labii superioris and incisivii labii inferioris |
| 19 | Tongue Show | |
| 20 | Lip Stretcher | risorius with platysma |
| 21 | Neck Tightener | platysma |
| 22 | Lip Funneler | orbicularis oris |
| 23 | Lip Tightener | orbicularis oris |
| 24 | Lip Pressor | orbicularis oris |
| 25 | Lips Part | depressor labii inferioris, or relaxation of mentalis or orbicularis oris |
| 26 | Jaw Drop | masseter; relaxed temporalis and internal pterygoid |


- 29. Experiments are conducted on the Cohn–Kanade database, one of the most comprehensive databases in the current facial-expression-research community. The database consists of 100 university students aged from 18 to 30 years, of whom 65% were female, 15% were African-American and 3% were Asian or Latino. Subjects were instructed to perform a series of 23 facial displays, six of which were based on descriptions of prototypic emotions. Image sequences from neutral to target display were digitized into 640 x 490 pixel arrays with 8-bit precision for grayscale values.

- 31. We empirically evaluate LBP features for person-independent facial expression recognition. Different machine learning methods are exploited to classify expressions on several databases. Here we comprehensively study LBP features for facial expression recognition with different classifiers on much larger databases. We investigate LBP features for low-resolution facial expression recognition, a critical problem that is seldom addressed in existing work. We not only evaluate different image resolutions but also conduct experiments on real-world compressed video sequences. We formulate Boosted-LBP by learning the most discriminative LBP histograms with AdaBoost for each expression, and the recognition performance of different classifiers is improved by using the Boosted-LBP features. We also evaluate the generalization ability of LBP features across different databases.

- 33. Color image

- 34. Gray image

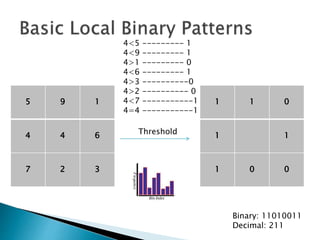

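- 35. Worked example of the basic LBP operator. The 3 x 3 neighborhood has rows (5 9 1), (4 4 6), (7 2 3), with center value 4. Each neighbor is thresholded against the center: it becomes 1 if it is greater than or equal to 4 and 0 otherwise, giving rows (1 1 0), (1 · 1), (1 0 0). Reading the neighbors clockwise from the top-left: 5 ≥ 4 → 1, 9 ≥ 4 → 1, 1 < 4 → 0, 6 ≥ 4 → 1, 3 < 4 → 0, 2 < 4 → 0, 7 ≥ 4 → 1, 4 = 4 → 1. Binary: 11010011. Decimal: 211.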
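The worked example on slide 35 can be reproduced with a short NumPy sketch of the basic 3 x 3 operator. It reads the neighbors clockwise from the top-left and treats the first neighbor as the most significant bit, which is the order used on the slide; any fixed order gives an equivalent descriptor. The function names are hypothetical, and border pixels are simply skipped because they lack a full neighborhood.

```python
import numpy as np

# Neighbor offsets (dy, dx), clockwise starting at the top-left pixel.
OFFSETS = [(-1, -1), (-1, 0), (-1, 1), (0, 1), (1, 1), (1, 0), (1, -1), (0, -1)]

def lbp_code(patch):
    """LBP code of the center of a 3 x 3 patch; the first neighbor is the most significant bit."""
    patch = np.asarray(patch)
    center = patch[1, 1]
    code = 0
    for dy, dx in OFFSETS:
        bit = 1 if patch[1 + dy, 1 + dx] >= center else 0  # threshold against the center
        code = (code << 1) | bit
    return code

def lbp_image(image):
    """LBP codes of all interior pixels, computed in one vectorized pass per neighbor."""
    img = np.asarray(image, dtype=np.int32)
    h, w = img.shape
    center = img[1:h - 1, 1:w - 1]
    codes = np.zeros_like(center)
    for dy, dx in OFFSETS:
        neighbor = img[1 + dy:h - 1 + dy, 1 + dx:w - 1 + dx]
        codes = (codes << 1) | (neighbor >= center).astype(np.int32)
    return codes

def lbp_histogram(image):
    """256-bin histogram of LBP codes over a region, used as a texture descriptor."""
    return np.bincount(lbp_image(image).ravel(), minlength=256)

print(lbp_code([[5, 9, 1], [4, 4, 6], [7, 2, 3]]))  # 211, as on the slide
```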

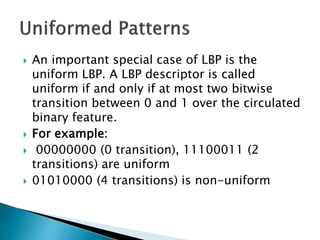

- 37. An important special case of LBP is the uniform LBP. An LBP code is called uniform if and only if it contains at most two bitwise transitions between 0 and 1 when the binary string is considered circular. For example, 00000000 (0 transitions) and 11100011 (2 transitions) are uniform, while 01010000 (4 transitions) is non-uniform.
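The uniformity test can be sketched in plain Python: rotate the 8-bit code left by one position and XOR it with the original, so every set bit marks a pair of circularly adjacent bits that differ. The mapping below gives each uniform code its own bin and puts all non-uniform codes in one shared bin; the function names and the choice of the last bin for non-uniform codes are assumptions of this sketch.

```python
def transitions(code, bits=8):
    """Number of 0/1 changes when the bit string is read circularly."""
    mask = (1 << bits) - 1
    rotated = ((code << 1) | (code >> (bits - 1))) & mask  # rotate left by one bit
    # Each set bit of code XOR rotated marks two neighboring bits that differ.
    return bin(code ^ rotated).count("1")

def is_uniform(code, bits=8):
    return transitions(code, bits) <= 2

def uniform_mapping(bits=8):
    """Lookup table code -> bin: one bin per uniform code, one shared bin for the rest."""
    uniform_codes = [c for c in range(1 << bits) if is_uniform(c, bits)]
    shared_bin = len(uniform_codes)             # last bin collects non-uniform codes
    table = [shared_bin] * (1 << bits)
    for b, c in enumerate(uniform_codes):
        table[c] = b
    return table, shared_bin + 1                # (table, number of bins)

table, n_bins = uniform_mapping()
print(n_bins)  # 59 bins for 8 neighbors: 58 uniform codes plus one shared bin
```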

- 40. Machine learning is a branch of artificial intelligence concerned with the construction and study of systems that can learn from data. Types: ◦ Supervised learning ◦ Unsupervised learning

- 41. Classifiers used: template matching and SVM.
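As a classifier on LBP features, template matching can be sketched as: build one template histogram per expression class, then assign a test histogram to the nearest template. The slides do not say which distance is used; this sketch assumes the chi-square statistic, a common choice for comparing LBP histograms, and uses the per-class mean histogram as the template. `TemplateMatcher` and `chi_square` are hypothetical names.

```python
import numpy as np

def chi_square(s, m, eps=1e-10):
    """Chi-square distance between two histograms (eps avoids division by zero on empty bins)."""
    s = np.asarray(s, dtype=float)
    m = np.asarray(m, dtype=float)
    return float(np.sum((s - m) ** 2 / (s + m + eps)))

class TemplateMatcher:
    """Nearest-template classifier: one mean LBP histogram per expression class."""

    def fit(self, X, y):
        X = np.asarray(X, dtype=float)
        y = np.asarray(y)
        self.classes_ = np.unique(y)
        self.templates_ = np.stack([X[y == c].mean(axis=0) for c in self.classes_])
        return self

    def predict(self, X):
        preds = []
        for x in np.asarray(X, dtype=float):
            distances = [chi_square(x, t) for t in self.templates_]
            preds.append(self.classes_[int(np.argmin(distances))])
        return np.array(preds)

# Toy 3-bin "histograms" for two hypothetical classes.
clf = TemplateMatcher().fit([[10, 0, 0], [8, 2, 0], [0, 0, 10], [1, 0, 9]],
                            ["joy", "joy", "anger", "anger"])
print(clf.predict([[9, 1, 0], [0, 1, 9]]))  # ['joy' 'anger']
```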

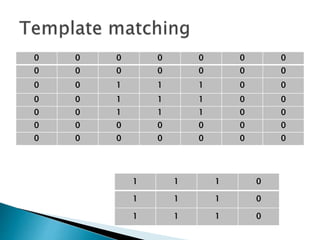

- 42. Template matching example (figure): a small binary image containing a 3 x 3 block of ones, and a 3 x 3 template of ones to be located in it.
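In the image-processing sense of template matching (finding the part of an image that matches a template), the idea can be sketched by sliding the template over every position and scoring each window. This sketch assumes a sum-of-squared-differences score and a 7 x 7 toy image resembling the slide's figure; the exact slide image is not recoverable, and `match_template_ssd` is a hypothetical name.

```python
import numpy as np

def match_template_ssd(image, template):
    """Slide the template over the image; return the best (row, col) and the full score map."""
    image = np.asarray(image, dtype=float)
    template = np.asarray(template, dtype=float)
    H, W = image.shape
    h, w = template.shape
    scores = np.empty((H - h + 1, W - w + 1))
    for r in range(H - h + 1):
        for c in range(W - w + 1):
            window = image[r:r + h, c:c + w]
            scores[r, c] = np.sum((window - template) ** 2)  # 0 means a perfect match
    best = np.unravel_index(np.argmin(scores), scores.shape)
    return (int(best[0]), int(best[1])), scores

image = np.zeros((7, 7))
image[2:5, 2:5] = 1            # a 3 x 3 block of ones
template = np.ones((3, 3))
position, scores = match_template_ssd(image, template)
print(position)  # (2, 2): top-left corner of the block
```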

- 46. SVM kernels: linear, polynomial and radial basis function (RBF).
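The three kernels named on this slide can be written as plain functions of two feature vectors. The parameter names `degree`, `gamma` and `coef0` follow the convention used by scikit-learn's `SVC`; the default values here are illustrative, not the settings used in the paper.

```python
import numpy as np

def linear_kernel(x, z):
    """k(x, z) = x . z"""
    return float(np.dot(x, z))

def polynomial_kernel(x, z, degree=3, gamma=1.0, coef0=1.0):
    """k(x, z) = (gamma * x . z + coef0) ** degree"""
    return float((gamma * np.dot(x, z) + coef0) ** degree)

def rbf_kernel(x, z, gamma=1.0):
    """k(x, z) = exp(-gamma * ||x - z||^2)"""
    diff = np.asarray(x, dtype=float) - np.asarray(z, dtype=float)
    return float(np.exp(-gamma * np.dot(diff, diff)))

print(linear_kernel([1, 2], [3, 4]))                 # 11.0
print(polynomial_kernel([1, 2], [3, 4], degree=2))   # (11 + 1) ** 2 = 144.0
print(rbf_kernel([1, 2], [1, 2]))                    # 1.0 for identical vectors
```

In scikit-learn the same choice is made with `SVC(kernel="linear")`, `SVC(kernel="poly")` or `SVC(kernel="rbf")`.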

- 50. In real-world environments such as smart meetings and visual surveillance, only low-resolution video input is available.


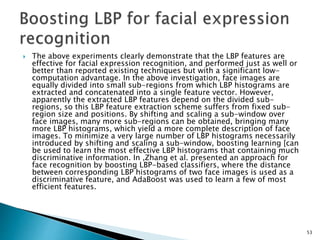

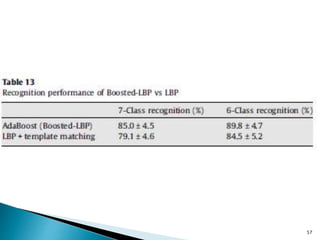

- 53. The above experiments clearly demonstrate that LBP features are effective for facial expression recognition, performing as well as or better than existing techniques reported in the literature, with a significant advantage in computational cost. In the above investigation, face images are equally divided into small sub-regions from which LBP histograms are extracted and concatenated into a single feature vector. However, the extracted LBP features clearly depend on the divided sub-regions, so this feature extraction scheme suffers from fixed sub-region sizes and positions. By shifting and scaling a sub-window over face images, many more sub-regions can be obtained, producing many more LBP histograms and a more complete description of face images. To cope with the very large number of LBP histograms introduced by shifting and scaling a sub-window, boosting can be used to learn the most effective LBP histograms, i.e. those containing the most discriminative information. Zhang et al. presented an approach for face recognition by boosting LBP-based classifiers, where the distance between corresponding LBP histograms of two face images is used as a discriminative feature, and AdaBoost is used to learn a few of the most effective features.
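The boosting step can be sketched with discrete AdaBoost over decision stumps for a one-expression-versus-rest problem with labels +1/-1. This is a simplified stand-in, not the paper's exact weak learner: each column of `X` is assumed to be one candidate feature (for instance one bin of one sub-window histogram), and the columns picked in successive rounds play the role of the "most discriminative" features. All function names are hypothetical.

```python
import numpy as np

def best_stump(X, y, w):
    """Exhaustive search for the stump (feature, threshold, polarity) with the lowest weighted error."""
    best = (np.inf, 0, 0.0, 1)
    for j in range(X.shape[1]):
        for t in np.unique(X[:, j]):
            for polarity in (1, -1):
                # polarity 1 predicts +1 when x >= t; polarity -1 predicts +1 when x <= t.
                pred = np.where(polarity * (X[:, j] - t) >= 0, 1, -1)
                err = w[pred != y].sum()
                if err < best[0]:
                    best = (err, j, t, polarity)
    return best

def adaboost_select(X, y, n_rounds=5):
    """Discrete AdaBoost; returns (feature, threshold, polarity, alpha) for each round."""
    X = np.asarray(X, dtype=float)
    y = np.asarray(y)
    w = np.full(len(y), 1.0 / len(y))            # start with uniform sample weights
    chosen = []
    for _ in range(n_rounds):
        err, j, t, polarity = best_stump(X, y, w)
        err = np.clip(err, 1e-10, 1 - 1e-10)     # avoid log(0) for a perfect stump
        alpha = 0.5 * np.log((1 - err) / err)    # vote weight of this weak classifier
        pred = np.where(polarity * (X[:, j] - t) >= 0, 1, -1)
        w = w * np.exp(-alpha * y * pred)        # up-weight misclassified samples
        w /= w.sum()
        chosen.append((j, t, polarity, alpha))
    return chosen

def boosted_predict(X, chosen):
    """Sign of the alpha-weighted vote of the selected stumps."""
    X = np.asarray(X, dtype=float)
    score = np.zeros(len(X))
    for j, t, polarity, alpha in chosen:
        score += alpha * np.where(polarity * (X[:, j] - t) >= 0, 1, -1)
    return np.where(score >= 0, 1, -1)
```

On synthetic data where only one column carries the label, the first selected feature should be that column; with real LBP pools, the list of selected `j` values is what would be kept as the boosted feature set.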


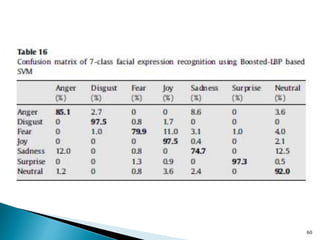

- 64. In this paper, we present a comprehensive empirical study of facial expression recognition based on Local Binary Patterns features. Different classification techniques are examined on several databases. The key issues of this work can be summarized as follows: 1. Deriving an effective facial representation from original face images is a vital step for successful facial expression recognition. We empirically evaluate LBP features to describe appearance changes of expression images. Extensive experiments illustrate that LBP features are effective and efficient for facial expression recognition. 2. One challenge for facial expression recognition is recognizing facial expressions at low resolutions, as only compressed low-resolution video input is available in real-world applications. We investigate LBP features on low-resolution images and observe that LBP features perform stably and robustly over a useful range of low resolutions of face images. 3. We adopt AdaBoost to learn the most discriminative LBP features from a large LBP feature pool. The best recognition performance is obtained by using SVM with Boosted-LBP features. However, this method has limited generalization to other datasets.

- 65. References: [1] M. Pantic, L. Rothkrantz, Automatic analysis of facial expressions: the state of the art, IEEE Transactions on Pattern Analysis and Machine Intelligence 22 (12) (2000) 1424–1445. [2] B. Fasel, J. Luettin, Automatic facial expression analysis: a survey, Pattern Recognition 36 (2003) 259–275. [3] M. Pantic, L. Rothkrantz, Toward an affect-sensitive multimodal human–computer interaction, in: Proceedings of the IEEE, vol. 91, 2003, pp. 1370–1390. [4] Y. Tian, T. Kanade, J. Cohn, Handbook of Face Recognition, Springer, 2005 (Chapter 11. Facial Expression Analysis). [5] Y. Yacoob, L.S. Davis, Recognizing human facial expression from long image sequences using optical flow, IEEE Transactions on Pattern Analysis and Machine Intelligence 18 (6) (1996) 636–642. [6] I. Essa, A. Pentland, Coding, analysis, interpretation, and recognition of facial expressions, IEEE Transactions on Pattern Analysis and Machine Intelligence 19 (7) (1997) 757–763. [7] M.J. Lyons, J. Budynek, S. Akamatsu, Automatic classification of single facial images, IEEE Transactions on Pattern Analysis and Machine Intelligence 21 (12) (1999) 1357–1362. [8] G. Donato, M. Bartlett, J. Hager, P. Ekman, T. Sejnowski, Classifying facial actions, IEEE Transactions on Pattern Analysis and Machine Intelligence 21 (10) (1999) 974–989.

- 66. [9] M. Pantic, L. Rothkrantz, Expert system for automatic analysis of facial expression, Image and Vision Computing 18 (11) (2000) 881–905. [10] Y. Tian, T. Kanade, J. Cohn, Recognizing action units for facial expression analysis, IEEE Transactions on Pattern Analysis and Machine Intelligence 23 (2) (2001) 97–115. [11] I. Cohen, N. Sebe, A. Garg, L. Chen, T.S. Huang, Facial expression recognition from video sequences: temporal and static modeling, Computer Vision and Image Understanding 91 (2003) 160–187. [12] L. Yin, J. Loi, W. Xiong, Facial expression representation and recognition based on texture augmentation and topographic masking, in: ACM Multimedia, 2004. [13] M. Yeasin, B. Bullot, R. Sharma, From facial expression to level of interests: a spatio-temporal approach, in: IEEE Conference on Computer Vision and Pattern Recognition (CVPR), 2004. [14] J. Hoey, J.J. Little, Value directed learning of gestures and facial displays, in: IEEE Conference on Computer Vision and Pattern Recognition (CVPR), 2004. [15] Y. Chang, C. Hu, M. Turk, Probabilistic expression analysis on manifolds, in: IEEE Conference on Computer Vision and Pattern Recognition (CVPR), 2004. [16] R.E. Kaliouby, P. Robinson, Real-time inference of complex mental states from facial expressions and head gestures, in: IEEE CVPR Workshop on Real-time Vision for Human–Computer Interaction, 2004. [17] M. Pantic, L.J.M. Rothkrantz, Facial action recognition for facial expression analysis from static face images, IEEE Transactions on Systems, Man, and Cybernetics 34 (3) (2004) 1449–1461.

- 67. [18] Y. Zhang, Q. Ji, Active and dynamic information fusion for facial expression understanding from image sequences, IEEE Transactions on Pattern Analysis and Machine Intelligence 27 (5) (2005) 1–16. [19] M.S. Bartlett, G. Littlewort, M. Frank, C. Lainscsek, I. Fasel, J. Movellan, Recognizing facial expression: machine learning and application to spontaneous behavior, in: IEEE Conference on Computer Vision and Pattern Recognition (CVPR), 2005. [20] F. Dornaika, F. Davoine, Simultaneous facial action tracking and expression recognition using a particle filter, in: IEEE International Conference on Computer Vision (ICCV), 2005. [21] C.S. Lee, A. Elgammal, Facial expression analysis using nonlinear decomposable generative models, in: IEEE International Workshop on Analysis and Modeling of Faces and Gestures (AMFG), 2005. [22] M. Valstar, I. Patras, M. Pantic, Facial action unit detection using probabilistic actively learned support vector machines on tracked facial point data, in: IEEE Conference on Computer Vision and Pattern Recognition Workshop, vol. 3, 2005, pp. 76–84. [23] M. Valstar, M. Pantic, Fully automatic facial action unit detection and temporal analysis, in: IEEE Conference on Computer Vision and Pattern Recognition Workshop, 2006, p. 149.

- 68. Thank you

Editor's Notes

- #36: The operator labels the pixels of an image by thresholding a 3 x 3 neighborhood of each pixel with the center value and considering the result as a binary number (see Fig. 3 for an illustration). The derived binary numbers are called Local Binary Patterns or LBP codes. The 256-bin histogram of the LBP labels computed over a region is used as a texture descriptor (a histogram is a graphical representation of the distribution of data). The limitation of the basic LBP operator is its small 3 x 3 neighborhood, which cannot capture dominant features with large-scale structures.

- #37: Circular neighborhoods: the notation (P, R) denotes a neighborhood of P equally spaced sampling points on a circle of radius R that form a circularly symmetric neighbor set.
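The (P, R) neighborhood can be sketched as follows: the P sampling points sit at angles 2πp/P on a circle of radius R around the center pixel, and points that do not fall on pixel centers are estimated by bilinear interpolation. Bilinear interpolation, the starting angle, the bit order (point p sets bit p), the tolerance `eps` and all function names are assumptions of this sketch; the note itself only defines (P, R).

```python
import math
import numpy as np

def sampling_points(P, R):
    """(dy, dx) offsets of P points evenly spaced on a circle of radius R."""
    return [(-R * math.sin(2 * math.pi * p / P), R * math.cos(2 * math.pi * p / P))
            for p in range(P)]

def bilinear(img, y, x):
    """Bilinearly interpolated intensity at a non-integer position (y, x)."""
    h, w = img.shape
    y0, x0 = math.floor(y), math.floor(x)
    # Clamping only matters on the last row/column, where the extra weight is zero.
    y1, x1 = min(y0 + 1, h - 1), min(x0 + 1, w - 1)
    fy, fx = y - y0, x - x0
    return ((1 - fy) * (1 - fx) * img[y0, x0] + (1 - fy) * fx * img[y0, x1]
            + fy * (1 - fx) * img[y1, x0] + fy * fx * img[y1, x1])

def lbp_pr(img, cy, cx, P=8, R=1.0, eps=1e-9):
    """LBP_{P,R} code at (cy, cx); the pixel must lie at least R away from the border."""
    img = np.asarray(img, dtype=float)
    center = img[cy, cx]
    code = 0
    for p, (dy, dx) in enumerate(sampling_points(P, R)):
        value = bilinear(img, cy + dy, cx + dx)
        if value >= center - eps:  # eps absorbs floating-point error from interpolation
            code |= 1 << p
    return code
```

Larger R lets the operator capture structures beyond the 3 x 3 neighborhood; P controls the angular resolution and the number of possible codes (2^P).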

- #38: Uniform LBP is used to compress the histograms from 256 bins to 59 (58 uniform patterns plus one bin shared by all non-uniform patterns), which gives a considerable speed-up when comparing them later.

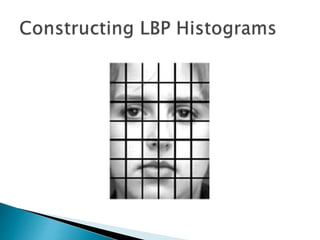

- #39: Some parameters can be optimized for better feature extraction. The 110 x 150 face image (left) is divided into 6 x 7 = 42 sub-regions of about 18 x 21 = 378 pixels each. With 59 uniform-pattern bins per sub-region, the face is represented by LBP histograms with a total length of 2478 (59 x 42).
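The regional representation in this note can be sketched end to end: compute LBP codes, map them to 59 uniform-pattern bins, split the code map into a 7-row by 6-column grid, and concatenate the 42 per-region histograms. This sketch assumes the basic 3 x 3 operator (the exact (P, R) setting is not stated in these notes), an image stored as 150 rows by 110 columns, and `np.array_split` for the grid, so region sizes can differ by one pixel. Function names are hypothetical.

```python
import numpy as np

OFFSETS = [(-1, -1), (-1, 0), (-1, 1), (0, 1), (1, 1), (1, 0), (1, -1), (0, -1)]

def lbp_codes(img):
    """Basic 3 x 3 LBP codes for all interior pixels."""
    img = np.asarray(img, dtype=np.int32)
    h, w = img.shape
    center = img[1:h - 1, 1:w - 1]
    codes = np.zeros_like(center)
    for dy, dx in OFFSETS:
        neighbor = img[1 + dy:h - 1 + dy, 1 + dx:w - 1 + dx]
        codes = (codes << 1) | (neighbor >= center).astype(np.int32)
    return codes

def uniform_table(bits=8):
    """Lookup table code -> bin: uniform codes get their own bin, the rest share the last one."""
    codes = np.arange(1 << bits)
    rotated = ((codes << 1) | (codes >> (bits - 1))) & ((1 << bits) - 1)
    n_transitions = np.array([bin(int(v)).count("1") for v in codes ^ rotated])
    uniform = n_transitions <= 2
    n_uniform = int(uniform.sum())
    table = np.full(1 << bits, n_uniform)  # shared bin for non-uniform codes
    table[uniform] = np.arange(n_uniform)
    return table, n_uniform + 1

def regional_lbp_histogram(img, grid_rows=7, grid_cols=6):
    """Concatenate the uniform-LBP histograms of grid_rows x grid_cols sub-regions."""
    table, n_bins = uniform_table()
    labels = table[lbp_codes(img)]
    features = []
    for band in np.array_split(labels, grid_rows, axis=0):
        for block in np.array_split(band, grid_cols, axis=1):
            features.append(np.bincount(block.ravel(), minlength=n_bins))
    return np.concatenate(features)

face = np.random.default_rng(0).integers(0, 256, size=(150, 110))  # stand-in for a face image
print(regional_lbp_histogram(face).shape)  # (2478,) = 42 regions x 59 bins
```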

- #40: A histogram is a graphical representation of the distribution of data. A 110 x 150 face image contains 16,500 pixel values, while its LBP representation is a histogram of length 2478 (59 x 42).

- #41: Supervised Learning algorithms are trained on labelled examples, i.e., input where the desired output is known. Unsupervised learning algorithms operate on unlabelled examples, i.e., input where the desired output is unknown. Here the objective is to discover structure in the data (e.g. through a cluster analysis), not to generalise a mapping from inputs to outputs.

- #42: Template matching is a technique in digital image processing for finding small parts of an image that match a template image.


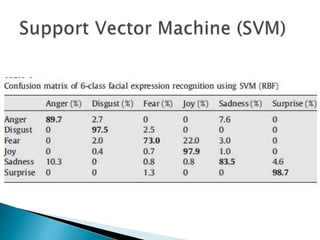

- #45: Joy and Surprise can be recognized with high accuracy (around 90–92%), but Anger and Fear are easily confused with others.

- #49: SVM: Disgust, Joy, Surprise and Neutral can be recognized with high accuracy (90–98%), while the recognition rates for Fear and Sadness are much lower (68–69%). Compared to the template-matching results in Table 2, the recognition performance for every expression except Fear is improved.

- #50: SVM kernels: linear, polynomial and radial basis function (RBF).
