AME-1934 : Enable Active-Active Messaging Technology to Extend Workload Balancing and High Availability

- 1. Enable Active-Active Enterprise Messaging Technology to extend workload balancing and high availability Session AME-1934 © 2015 IBM Corporation Wang Bo - IBM CDL wangbowb@cn.ibm.com

- 2. Agenda • Concepts of Business Continuity • Business Continuity • High Availability • Continuous Serviceability • Continuous Availability Cross Sites • Messaging Technologies for Business Continuity • Cases Sharing

- 3. What does business continuity mean to you? • Why do we need a business continuity plan (BCP)? • So that we don't panic in the event of a disaster or crisis • What do we need to consider when preparing a BCP? • "Backups" and their locations • A central command center, which IBM calls the "Crisis Management Team (CMT)" • Maintain a "contact list" • Think about all possible "scenarios" and their corresponding action plans • Consider "critical" information or applications first

- 4. Different levels of business continuity • Enterprise business requires business continuity, on a scale from Standby to Active-Active • 0. Disaster Recovery: restore the business after a disaster • 1. High Availability: meet service availability objectives, e.g., 99.9% availability or no more than 8 hours of downtime a year for maintenance and failures • 2. Continuous Serviceability: no downtime within one data center (planned or not) • 3. Continuous Availability cross sites: no downtime ever (planned or not)
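The availability objective quoted for level 1 can be turned into a yearly downtime budget with simple arithmetic. The sketch below is only an illustration: the function name `allowed_downtime_hours` and the 365-day year are assumptions, not something taken from the slides.

```python
HOURS_PER_YEAR = 365 * 24  # assumption: a non-leap year of 8760 hours


def allowed_downtime_hours(availability_percent: float) -> float:
    """Return the yearly downtime budget implied by an availability target."""
    return HOURS_PER_YEAR * (1 - availability_percent / 100)


# Print the budget for a few common targets.
for target in (99.0, 99.9, 99.99):
    print(f"{target}% -> {allowed_downtime_hours(target):.2f} hours/year")
```

For 99.9% the budget works out to 8.76 hours a year, which is in line with the slide's rounded figure of about 8 hours.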

- 5. BC Level 1 – High Availability • HA at different levels (AIX example) • Apps follow HA principles • Middleware HA technologies – Clustering, DB2 pureScale, MQ multi-instance queue managers • OS HA technologies – PowerHA (HACMP) • Hardware HA technologies – Disk redundancy (RAID, SDD, etc.) – FlashCopy, Metro/Global Mirror – Server redundancy (CPU, power, etc.) – Network redundancy • Key point is eliminating single points of failure (SPOF) • Redundancy • RPO = 0!

- 6. BC Level 2 – Continuous Serviceability • Usually based on workload takeover • Takeover is automatic • A challenge for application affinity and sequence • Decoupling of components – easier maintenance • Old data may be lost – could combine with HA • Maintenance • Planned and unplanned downtime • Rolling updates • Coexistence • Short RTO!

- 7. BC Level 3 – Continuous Availability Cross Sites • Two or more sites, separated by unlimited distances, running the same applications and having the same data to provide cross-site workload balancing and Continuous Availability / Disaster Recovery • Customer data at geographically dispersed sites kept in sync via synchronization • GDPS/PPRC: failover model, recovery time = 2 minutes, distance < 20 km • GDPS/XRC or GDPS/GM: failover model, recovery time < 1 hour, unlimited distance • Active/Active: near-CA model, recovery time < 1 minute, unlimited distance • Care about both RPO & RTO!

- 8. Workload Balancing Through Data Replication • Both sides run workload simultaneously, possibly at different volumes, but both have the full picture of the data! • Replicate data from one platform to another • Both sides may work equally or have a different focus, as below: • The main server still does the existing critical work. • Meanwhile, the offload server can run data analysis, data queries, etc. • New business requirements arrive, but nobody wants to touch the existing server! • An acquired organization may bring a different database on a different platform. How do you centralize the data? • Site A (OLTP): powerful; critical production work (DB updates/inserts); strict maintenance process; caution: nobody wants it down • Site B (QLTP), synchronized with Site A: less powerful; less critical work (DB queries); work can be delayed but may cost a lot of CPU (data analysis, credit card anti-fraud, etc.); new workloads

- 9. Agenda • Concepts of Business Continuity • Messaging Technologies for Business Continuity • HA Technologies • Continuous Serviceability Technologies • Continuous Availability Cross Sites • Cases Sharing

- 10. MQ Technologies • HA Technologies • QSG for MQ on z/OS • Failover Technologies • Application HA • Continuous Serviceability Technologies • MQ Clustering • Rolling Upgrade • Continuous Availability Cross Sites • Data Synchronization • Synchronization Application Design • How To Replicate Data • Performance Consideration

- 11. HA - QSG for MQ on z/OS • (Diagram: queue managers in the queue-sharing group, each with its own private queues, all accessing the shared queues) • Queue manager failure: nonpersistent messages on private queues are lost (deleted); messages on shared queues are OK (kept) • Coupling facility failure: nonpersistent messages on private queues are OK (kept); nonpersistent messages on shared queues are lost (deleted); persistent messages on shared queues are restored from the log

- 12. HA - Failover Technologies • Failover • The automatic switching of availability of a service • Data accessible on all servers • Multi-instance queue manager • Integrated into the WebSphere MQ product • Faster failover than HA cluster • Runtime performance of networked storage • More susceptible to MQ and OS defects • HA cluster • Capable of handling a wider range of failures • Failover historically slower, but some HA clusters are improving • Some customers frustrated by unnecessary failovers • Extra product purchase and skills required

- 13. HA - Application Availability • Application environment • Dependencies like a specific DB, broker, WAS? • Machine-specific or server-specific? • Start/stop operations – sequence? • Message loss • Really need every message delivered? • Application affinities • MQ connectivity • (Diagram: an MQ client application that can connect to QM1, QM2 or QM3)

- 14. Continuous Serviceability – MQ Cluster • Workload Balancing • Service Availability • Location Transparency (of a kind) • (Diagram: Client 1 applications, a gateway queue manager, and Service 1 queue managers at Site 1 and Site 2)

- 15. Global applications: Multi-Data Center using MQ Cluster • Clients, queue managers and services in New York and in London, but separated by an ocean and 3500 miles • Prefer traffic to stay geographically local • Except when you have to look further afield • How do you do this with clusters that span geographies?…

- 16. Set this up – The one cluster solution • Clients always open AppQ • Local alias determines the preferred region • Cluster workload priority is used to target geographically local cluster aliases • Use of CLWLPRTY enables automatic failover • CLWLRANK can be used for manual failover • New York definitions: DEF QALIAS(AppQ) TARGET(NYQ); DEF QALIAS(NYQ) TARGET(ReqQ) CLUSTER(Global) CLWLPRTY(9); DEF QALIAS(LonQ) TARGET(ReqQ) CLUSTER(Global) CLWLPRTY(4) • London definitions: DEF QALIAS(AppQ) TARGET(LonQ); DEF QALIAS(LonQ) TARGET(ReqQ) CLUSTER(Global) CLWLPRTY(9); DEF QALIAS(NYQ) TARGET(ReqQ) CLUSTER(Global) CLWLPRTY(4) • (Diagram: at each site, the AppQ alias is on the client queue manager; the NYQ and LonQ aliases and the ReqQ queue are on the service queue manager)
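The effect of the CLWLPRTY values in the one-cluster setup can be sketched with a toy selection function. This is a deliberately simplified model and not the actual MQ cluster workload algorithm, which weighs more inputs; the class `AliasInstance` and the function `choose` are hypothetical names introduced only for illustration.

```python
from dataclasses import dataclass


@dataclass
class AliasInstance:
    """One clustered instance of a queue alias (hypothetical model)."""
    site: str
    clwlprty: int          # 0-9, a higher value is preferred
    available: bool = True


def choose(instances):
    """Return the sites of the available instances with the highest CLWLPRTY."""
    usable = [i for i in instances if i.available]
    if not usable:
        raise RuntimeError("no available destination in the cluster")
    best = max(i.clwlprty for i in usable)
    return [i.site for i in usable if i.clwlprty == best]


# View from a New York client: AppQ resolves to NYQ, which is advertised
# with priority 9 in New York and priority 4 in London.
nyq = [AliasInstance("New York", 9), AliasInstance("London", 4)]
print(choose(nyq))            # normal operation: traffic stays local
nyq[0].available = False      # the New York service queue manager is down
print(choose(nyq))            # traffic fails over to London
```

Under this model, traffic stays local while the higher-priority instance is reachable and moves to the remote site only when it is not, which is the automatic failover behavior the slide attributes to CLWLPRTY.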

- 17. Set this up – The two cluster solution • Two clusters: USA (New York) and EUROPE (London) • The service queue managers join both geographical clusters • Each has separate cluster receivers for each cluster, at different cluster priorities. Queues are clustered in both clusters. • The client queue managers are in their local cluster only.

- 18. Continuous Availability Cross Sites • Data synchronization is the key component in Active-Active • Capture transaction changes in real time • Publish the changes with high performance and low latency • A messaging-based implementation has proven to be the simplest of the available data transmission methods • A high-performance, reliable messaging product is needed to meet the following requirements: • Simplified application development • Ease of use • Assured message delivery • High performance and scalability • Ease of management

- 19. Active-Active Common Model based on Messaging • Cross-site workload distribution • Data synchronization • Relies on high-performance, reliable messaging transmission • Flexible application design • Automation & management • (Diagram: a workload distributor in front of two sites at a distance, each with a business app, business data, a sync app and messaging)

- 20. How to replicate data? • Option 1: WebSphere MQ Q Replication – capture transaction activities through DB2 logs with an independent tool • Log-based Q Capture at the source, WebSphere MQ as the transport, highly parallel Q Apply at the target • Option 2: Modify the existing applications – send out transactional data with the MQ API • At the end of the existing logic, add an MQPUT call to send the data. Program an apply application at the target end. • Flexible; can cross different platforms, even different database products. But it needs a robust application. • Choose whether to put within or outside syncpoint – will the existing transaction fail (roll back) if the send fails?
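The syncpoint question raised on this slide can be made concrete with a small in-memory sketch. It does not call MQ; the dictionary stands in for the database, `send` stands in for the MQPUT, and it assumes an application that backs out its unit of work when a put within syncpoint fails. All names (`run_transaction`, `SendError`, `failing_send`) are hypothetical.

```python
class SendError(Exception):
    """Raised by the stand-in send function when the put fails."""


def run_transaction(db, change, send, within_syncpoint):
    """Apply `change` to `db`, then send it to the remote site."""
    snapshot = dict(db)            # stand-in for the start of the unit of work
    db.update(change)              # existing business logic
    try:
        send(change)               # stand-in for the MQPUT added at the end
    except SendError:
        if within_syncpoint:
            db.clear()
            db.update(snapshot)    # back out the whole unit of work
            return "rolled back"
        return "committed locally, not replicated"
    return "committed and replicated"


def failing_send(change):
    """Simulate a put that fails, for example because the queue is full."""
    raise SendError("put failed")


accounts = {"A": 100}
print(run_transaction(accounts, {"A": 90}, failing_send, within_syncpoint=True))
print(accounts)
```

The trade-off is visible in the two failure outcomes: within syncpoint the business transaction is sacrificed to keep both sites consistent, while outside syncpoint the business transaction survives but the remote copy falls behind until the change is resent.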

- 21. Performance Tuning Considerations • Synchronize only the changed data to reduce the data volume • Introduce more parallelism • Multiple synchronization channels for different types of workload • More threads in the sync application for parallel processing • Multiple MQ channels to relieve the single busy channel problem • Invest in using new MQ features • Bigger buffer pools above the bar • Sequential pre-fetch • Page set read/write performance enhancement • Channel performance improvement
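The first tuning point, synchronizing only the changed data, can be illustrated by building a delta message from the before and after images of a record. This is a minimal sketch under assumed conditions: records are flat dictionaries, the `card` key field and the function name `delta_message` are hypothetical, and deleted fields are not handled.

```python
_MISSING = object()  # sentinel so that a newly added field counts as a change


def delta_message(before, after, key="card"):
    """Build a replication message carrying the key and only the changed fields."""
    changes = {
        field: value
        for field, value in after.items()
        if before.get(field, _MISSING) != value
    }
    return {"key": after[key], "changes": changes}


before = {"card": "1234", "balance": 500, "status": "ACTIVE"}
after = {"card": "1234", "balance": 450, "status": "ACTIVE"}
print(delta_message(before, after))
```

Only the `balance` field travels to the other site in this example, instead of the whole record.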

- 22. MQ buffer pool read-ahead enhancement • Symptom: when the number of messages overruns the buffer pool allocated for the queue, messages are spilled to disk and must then be retrieved from disk. • The read-ahead enhancement enables message pre-fetch from disk storage and improves MQGET performance. • Available in PM63802/UK79853 (2012) and PM81785/UK91439 (2013). • Internal testing shows ~50% improvement with read-ahead enabled (msglen=6KB). • Enable this feature if the MQ buffer pool may overrun.

- 23. Agenda • Concepts of Business Continuity • Messaging Technologies for Business Continuity • Cases sharing • Case 1 (Active/Active with QREP tool) • Case 2 (Active/Active with application) • Case 3 (Workload offload) • Case 4 (Workload offload to multiple systems)

- 24. Requirements of a bank – Active/Active • A commercial bank with data centers in Shanghai and Beijing • Beijing: one existing data center for disaster recovery, 1200 km from Shanghai • Shanghai: one existing data center for production (Shanghai data center 1) and one new data center for Active-Active (Shanghai data center 2), 70 km apart • The bank plans to achieve Active-Active between the two Shanghai data centers for its core banking business • Workload volumes:

  | Workload | rows/s | MB/s |
  | --- | --- | --- |
  | OLTP | 45K-50K | 45 |
  | Batch | 140K | 50 |
  | Month-End Batch | 130K | 70-80 |
  | Interest Accrual Batch | 440K | 172.5 |

- 25. MQ in Q Replication • Part of the InfoSphere Data Replication product • A software-based asynchronous replication solution • For relational databases • Changes are captured from the database recovery log, transmitted as (compact) binary data, and then applied to the remote database(s) using SQL statements. • Leverages WebSphere MQ for staging/transport • Each captured database transaction is published in an MQ message (messages are sent at each commit interval) • Staging makes it possible to achieve continuous operation even when the target database is down for some time or the network encounters a problem. • (Diagram: at Site A, Q Capture reads the DB2 database recovery log asynchronously (logrdr) and publishes change data to WebSphere MQ, which provides persistent staging over an unlimited distance; at Site B, Q Apply agents replay the DB2 transactions in parallel as SQL statements against the user tables. Both sites run an active DB2 with control tables, used for configuration and monitoring.)

- 26. MQ V8.0 features for Q Rep scenarios • Sequential pre-fetch on z/OS • TUNE READAHEAD(ON) and TUNE RAHGET(ON) were delivered to the bank as a PTF on V7.1 and are still applicable to V8 • Page set read/write performance enhancements for QREP on z/OS • Changes to the queue manager deferred write processor, now the default behavior in V8 • 64-bit enablement of buffer pools on z/OS • More real storage can be used for buffers • SMF enhancements on z/OS • CHINIT SMF helps with tuning channel performance • 64-bit log RBA • We probably want QREP users to move to this • Other improvements • z/OS miscellaneous improvements (performance and serviceability) • Channel performance on z/OS

- 27. Case 2 (Active/Active with application) • Active-Active adaptability in small/medium-sized banks • Banks in China have set up storage-based DR solutions, but the business recovery time is too long • A Sysplex solution is expensive and its return on investment is not high. The distance is also limited. • Need to consider an application-based solution, mixed with the storage-based solution • Active-Active is the target model of the modern data center • Not only the mainframe; heterogeneous and peripheral distributed platforms also need to be active-active

- 28. Business Requirement of Active-Active • The credit card system on the mainframe is based on the VisionPlus (V+) solution by First Data. • Improve the capacity and availability of the whole credit card system. • More comprehensive and more efficient services from the banks' payment systems. • More flexible access, more comprehensive liquidity risk management functions, and an extended scope of system monitoring • Refinement of the backup infrastructure

- 29. The target Active-Active System Structure • Both the main system and the secondary system are active • Real-time data synchronization for OLTP transactions • The main system and the secondary system back each other up • Workload can be taken over in case of planned or unplanned failure • (Diagram: the Headquarter Gateway receives VISA/MC/JCB traffic, splits the workload by card BIN and sends it to BJ and SH. Each site runs finance processing, OLTP processing and V+ mainframe batch processing (main and secondary), alongside terminal, anti-fraud, reporting, debt-collection and non-finance processing. The sites are linked over DRNET by three flows: 1. OLTP transactions (MQ), 2. file transfer, 3. Global Mirror files.)
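The "split by card BIN" routing at the gateway, together with takeover when a site is down, can be sketched as follows. This is only an illustration of the idea: the BIN values, the `BIN_HOME_SITE` table and the `route` function are hypothetical and do not describe the bank's actual gateway.

```python
# Hypothetical mapping from card BIN (first six digits) to the home site.
BIN_HOME_SITE = {"400000": "BJ", "520000": "SH"}


def route(card_number, site_up):
    """Send a transaction to its home site, or to a surviving site on failure."""
    home = BIN_HOME_SITE[card_number[:6]]
    if site_up[home]:
        return home
    for site, up in site_up.items():
        if up:
            return site            # workload takeover by the other site
    raise RuntimeError("no site available")


print(route("4000001234567890", {"BJ": True, "SH": True}))    # home site BJ
print(route("4000001234567890", {"BJ": False, "SH": True}))   # taken over by SH
```

Because both sites hold synchronized data, the surviving site can process cards whose home is the failed site.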

- 30. Active-Active Deployment Model: Continuous Availability – Active-Active • Business Continuous Availability: achieve business continuous availability by making the front end and mainframe active-active • Reliable Services: synchronize application data based on MQ reliable messaging, keeping data consistent in real time • Data Backup: back up key business data through MQ series • Data interchange in real time: the data centers can be located a long distance apart • (Diagram: the Headquarter Gateway routes by BIN to Beijing and Shanghai; each site has encryption, a front-end app system (Shanghai is main) and core data, with sync between the sites)

- 31. Active-Active Logical model for OLTP • Self-implemented replication service based on WebSphere MQ for z/OS • (Diagram: a workload distributor feeds the credit card systems at the Beijing site and the Shanghai site. At each site, a Transaction Publisher in the AOR sends changes through the local MQ queue manager (queue manager 1 and queue manager 2), and a Transaction Replay component retrieves the other site's changes and applies them to VSAM.)

- 32. Planned Site Switch Over Procedure • Stop workload routing to the BJ site • Wait until the SH site data is duplexed with the BJ site data • Re-route the workload to the SH site • Reverse GM from site B to site A

- 33. Unplanned Site Switch Over Procedure • Stop workload routing to the BJ site • Re-route the workload to the SH site • Reverse GM from site B to site A

- 34. Characteristics of this case • Suited to businesses with less complex master data and fewer dependent database tables, for example the credit card business. • The synchronization applications need to be developed according to your business and technical requirements, rather than bought as an out-of-the-box product.

- 35. Case 3 (Workload offload) • Purpose • A new business that issues SELECTs frequently. • There is an existing DB2 on z/OS, but the bank wants to buy an existing solution on Linux. • So this is active-active data replication within the same data center, across platforms. • Implementation • Modify the existing core banking applications by adding send-with-MQ logic at the end. • On the distributed side, develop another application for DB updates/inserts. • Minimize the impact on the existing applications by putting out of syncpoint.

- 36. Workload offload • Expand the business more easily and quickly • The existing business is only slightly touched (nearly untouched). • Flexible; no dependency on the type of target database. • Core banking system (z/OS) app logic: existing logic, then MQPUT (data to update in DB), then EXEC CICS SYNCPOINT • Query system (Linux) apply application logic: according to the data received, update the target with an SQL statement or SP • z/OS MQ and Linux MQ are connected by an MQ channel • (Workload distributor labels in the diagram: z/OS System, Core Workload, Standby; Linux System, Query Workload, Active)

- 37. Case 4 (Workload offload to multiple systems) • Purpose • Replicate the z/OS database of the core credit card system to a Linux database in a near-real-time window. Multiple consumers on different Linux boxes want the same data. • Implementation • z/OS MQ does a normal put (same as the data replication discussed on the previous pages), so only one copy of the data is transferred to Linux MQ. This MQ then does the 1-n publication with the MQ pub/sub engine. • (Diagram: CICS/Batch on the z/OS queue manager QM1 issues MQPUT(/credit/deposit/); messages reach the distributed queue manager QM2 over a cluster XMITQ or a hierarchy XMITQ; QM2 delivers to subscriptions APP1.SUB, APP2.SUB, …… with queues SUB1.Q, SUB2.Q, 10 subs in total, each read by MQGET or a remote QMGR)

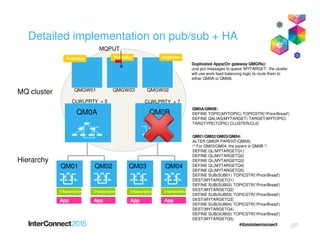

- 38. Detailed implementation on pub/sub + HA • Publishers connect to the gateway queue managers QMGW01, QMGW02 and QMGW03 in the MQ cluster • Duplicated apps (on gateway QMGRs): just put messages to queue 'MYTARGET'; the cluster uses workload-balancing logic to route them to either QM0A or QM0B (diagram labels: CLWLPRTY = 7 and CLWLPRTY = 5) • QM0A/QM0B: DEFINE TOPIC(MYTOPIC) TOPICSTR('/Price/Bread'); DEFINE QALIAS(MYTARGET) TARGET(MYTOPIC) TARGTYPE(TOPIC) CLUSTER(CL0) • QM01/QM02/QM03/QM04 (hierarchy, each with an app and 5 subscriptions): ALTER QMGR PARENT(QM0A) /* For QM03/QM04, the parent is QM0B */; DEFINE QL(MYTARGETQ1); DEFINE QL(MYTARGETQ2); DEFINE QL(MYTARGETQ3); DEFINE QL(MYTARGETQ4); DEFINE QL(MYTARGETQ5); DEFINE SUB(SUB01) TOPICSTR('/Price/Bread') DEST(MYTARGETQ1); DEFINE SUB(SUB02) TOPICSTR('/Price/Bread') DEST(MYTARGETQ2); DEFINE SUB(SUB03) TOPICSTR('/Price/Bread') DEST(MYTARGETQ3); DEFINE SUB(SUB04) TOPICSTR('/Price/Bread') DEST(MYTARGETQ4); DEFINE SUB(SUB05) TOPICSTR('/Price/Bread') DEST(MYTARGETQ5)
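The 1-n publication that the subscriptions above produce can be modeled with a toy pub/sub engine. This is not MQ code; the class `PubSubEngine` and its methods are hypothetical stand-ins, and only the topic string and queue names are taken from the slide.

```python
from collections import defaultdict, deque


class PubSubEngine:
    """Toy stand-in for a queue manager's pub/sub engine (not MQ code)."""

    def __init__(self):
        self.queues = {}                 # queue name -> deque of messages
        self.subs = defaultdict(list)    # topic string -> destination queue names

    def define_queue(self, name):
        self.queues[name] = deque()

    def define_sub(self, topic, dest):
        self.subs[topic].append(dest)

    def publish(self, topic, message):
        """Copy one published message to every subscribed destination queue."""
        destinations = self.subs.get(topic, [])
        for dest in destinations:
            self.queues[dest].append(message)
        return len(destinations)


engine = PubSubEngine()
for n in range(1, 6):
    engine.define_queue(f"MYTARGETQ{n}")
    engine.define_sub("/Price/Bread", f"MYTARGETQ{n}")

copies = engine.publish("/Price/Bread", "price=2.50")
print(copies)   # number of destination queues that received the message
```

A single put from the publisher side results in one copy per subscription, so the sender transfers the data once and the fan-out happens on the receiving queue manager.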


- 40. Notices and Disclaimers Copyright © 2015 by International Business Machines Corporation (IBM). No part of this document may be reproduced or transmitted in any form without written permission from IBM. U.S. Government Users Restricted Rights - Use, duplication or disclosure restricted by GSA ADP Schedule Contract with IBM. Information in these presentations (including information relating to products that have not yet been announced by IBM) has been reviewed for accuracy as of the date of initial publication and could include unintentional technical or typographical errors. IBM shall have no responsibility to update this information. THIS DOCUMENT IS DISTRIBUTED "AS IS" WITHOUT ANY WARRANTY, EITHER EXPRESS OR IMPLIED. IN NO EVENT SHALL IBM BE LIABLE FOR ANY DAMAGE ARISING FROM THE USE OF THIS INFORMATION, INCLUDING BUT NOT LIMITED TO, LOSS OF DATA, BUSINESS INTERRUPTION, LOSS OF PROFIT OR LOSS OF OPPORTUNITY. IBM products and services are warranted according to the terms and conditions of the agreements under which they are provided. Any statements regarding IBM's future direction, intent or product plans are subject to change or withdrawal without notice. Performance data contained herein was generally obtained in a controlled, isolated environments. Customer examples are presented as illustrations of how those customers have used IBM products and the results they may have achieved. Actual performance, cost, savings or other results in other operating environments may vary. References in this document to IBM products, programs, or services does not imply that IBM intends to make such products, programs or services available in all countries in which IBM operates or does business. Workshops, sessions and associated materials may have been prepared by independent session speakers, and do not necessarily reflect the views of IBM. 
All materials and discussions are provided for informational purposes only, and are neither intended to, nor shall constitute legal or other guidance or advice to any individual participant or their specific situation. It is the customer’s responsibility to insure its own compliance with legal requirements and to obtain advice of competent legal counsel as to the identification and interpretation of any relevant laws and regulatory requirements that may affect the customer’s business and any actions the customer may need to take to comply with such laws. IBM does not provide legal advice or represent or warrant that its services or products will ensure that the customer is in compliance with any law.

- 41. Notices and Disclaimers (con’t) Information concerning non-IBM products was obtained from the suppliers of those products, their published announcements or other publicly available sources. IBM has not tested those products in connection with this publication and cannot confirm the accuracy of performance, compatibility or any other claims related to non-IBM products. Questions on the capabilities of non-IBM products should be addressed to the suppliers of those products. IBM does not warrant the quality of any third-party products, or the ability of any such third-party products to interoperate with IBM’s products. IBM EXPRESSLY DISCLAIMS ALL WARRANTIES, EXPRESSED OR IMPLIED, INCLUDING BUT NOT LIMITED TO, THE IMPLIED WARRANTIES OF MERCHANTABILITY AND FITNESS FOR A PARTICULAR PURPOSE. The provision of the information contained herein is not intended to, and does not, grant any right or license under any IBM patents, copyrights, trademarks or other intellectual property right. • IBM, the IBM logo, ibm.com, Bluemix, Blueworks Live, CICS, Clearcase, DOORS®, Enterprise Document Management System™, Global Business Services ®, Global Technology Services ®, Information on Demand, ILOG, Maximo®, MQIntegrator®, MQSeries®, Netcool®, OMEGAMON, OpenPower, PureAnalytics™, PureApplication®, pureCluster™, PureCoverage®, PureData®, PureExperience®, PureFlex®, pureQuery®, pureScale®, PureSystems®, QRadar®, Rational®, Rhapsody®, SoDA, SPSS, StoredIQ, Tivoli®, Trusteer®, urban{code}®, Watson, WebSphere®, Worklight®, X-Force® and System z® Z/OS, are trademarks of International Business Machines Corporation, registered in many jurisdictions worldwide. Other product and service names might be trademarks of IBM or other companies. A current list of IBM trademarks is available on the Web at "Copyright and trademark information" at: www.ibm.com/legal/copytrade.shtml.

- 42. Thank You Your Feedback is Important! Access the InterConnect 2015 Conference CONNECT Attendee Portal to complete your session surveys from your smartphone, laptop or conference kiosk.