Lambda Data Grid: An Agile Optical Platform for Grid Computing and Data-intensive Applications (Short PPT)

- 1. Lambda Data Grid: An Agile Optical Platform for Grid Computing and Data-intensive Applications • Focus on the BIRN Mouse application • Tal Lavian (short version)

- 2. Feedback & Response • Issues: – Interface between applications and the Network Resource Service (NRS) – Information that would cross this interface • Response: – eScience application: Mouse (integrating brain data across scales and disciplines; part of BIRN at SDSC and OptIPuter) – An architecture that supports applications with a network resource service and an application middleware service – A detailed interface specification

- 3. Updates • Feedback and application analysis prompted a new conceptualization • Visits: OptIPuter, SLAC, SSRL, SDSC, UCSD BIRN • Looked at many compute- and data-intensive eScience applications • BIRN Mouse as a representative example • Demos: GGF, SuperComputing • Concept validation with the research community of “an OGSI-based Grid Service capable of dynamically controlling end-to-end lightpaths over a real wavelength-switched network” – Productive feedback: Ian Foster (used my slide in his keynote), Carl Kesselman (OptIPuter question), Larry Smarr (proposed BIRN), Bill St. Arnaud, Francine Berman, Tom DeFanti, Cees de Laat

- 4. Outline • Introduction • The BIRN Mouse Application • Research Concepts • Network – Application Interface • LambdaGrid Features • Architecture • Scope & Deliverables

- 5. Optical Networks Change the Current Pyramid (George Stix, Scientific American, January 2001) • x10 DWDM: a fundamental imbalance between computation and communication

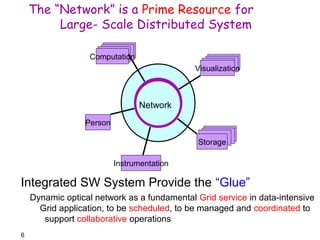

- 6. The “Network” is a Prime Resource for Large-Scale Distributed Systems • Diagram: network, computation, instrumentation, person, visualization, and storage, tied together by an integrated software system that provides the “glue” • A dynamic optical network as a fundamental Grid service in data-intensive Grid applications, to be scheduled, managed, and coordinated to support collaborative operations

- 7. BIRN Mouse: Example Application • The Mouse research application at the Biomedical Informatics Research Network (BIRN) – Studies animal models of disease across dimensional scales to test hypotheses about human neurological disorders – Brain disorder studies: schizophrenia, dyslexia, multiple sclerosis, Alzheimer’s, and Parkinson’s – Brain morphometry testbed – Interdisciplinary, multi-dimensional-scale morphological analysis (from genomics to full organs) • Why BIRN Mouse? – It illustrates the type of research questions – It illustrates the type of collaborative e-Science applications for LambdaGrid – It requires analysis of massive amounts of data – LambdaGrid can enhance the way the science is done – The team already recognizes current and future limitations and is trying to solve them through storage, computation, visualization, and collaborative tools, working with Grid, NMI, and OptIPuter toward effective integration – Potential collaboration: BIRN and OptIPuter are eager to adopt it

- 8. Data Schlepping Scenario: Mouse Operation • The “BIRN Workflow” requires moving massive amounts of data: – The simplest service: just copy from a remote DB to local storage at a mega-compute site – Copy multiple terabytes (10-100TB) of data – Store first, compute later; not real time; batch model • Mouse network limitations: – Data must be copied ahead of time – L3 networks can’t handle these amounts effectively or predictably in a short time window – The L3 network provides full connectivity, yet it is the major bottleneck – Apps are optimized to conserve bandwidth and waste storage – The network does not fit the “BIRN Workflow” architecture

- 9. Limitations of Solutions with Current Network Technology • BIRN networking is unpredictable and a major bottleneck, especially over the WAN. It limits the type, method, and data sizes of biomedical research and prevents true Grid Virtual Organization (VO) research collaborations • The network model doesn’t fit the “BIRN Workflow” model; it is not an integral resource of the BIRN Cyber-infrastructure

- 10. Problem Statement • Problems – The existing packet-switching communications model has not been sufficiently adaptable to meet the challenge of large-scale data flows, especially those with variable attributes • Q: Do we need an alternative switching technique?

- 11. Problem Statement • Problems – BIRN Mouse often: • requires interaction and cooperation of resources that are distributed over many heterogeneous systems at many locations; • requires analysis of large amounts of data (on the order of terabytes); • requires transport of large-scale data; • requires data sharing; • requires support for a cooperative workflow model • Q: Do we need a new network abstraction?

- 12. Problem Statement • Problems – BIRN research moves ~10TB from a remote DB to local mega-computation in ~10 days (unpredictably). Research would be enhanced by predictable, scheduled data movement, guaranteed within 10 hours (or perhaps 1 hour) – Many emerging eScience applications, especially within Grid environments, require similar characteristics • Q: Do we need a network service architecture?
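The rates behind this problem statement can be checked with simple arithmetic. The short Python sketch below assumes decimal terabytes (10^12 bytes) and a rate sustained for the whole window with no protocol overhead. The helper name `required_gbps` is illustrative only.

```python
def required_gbps(terabytes: float, hours: float) -> float:
    """Sustained rate in Gbit/s needed to move `terabytes` (decimal TB) in `hours`.

    Assumes the full rate is held for the whole window (no protocol overhead).
    """
    bits = terabytes * 1e12 * 8
    return bits / (hours * 3600) / 1e9


# ~10 TB moved today in ~10 days, versus the targets of 10 hours and 1 hour.
for label, hours in [("~10 days", 240), ("10 hours", 10), ("1 hour", 1)]:
    print(f"10 TB in {label}: {required_gbps(10, hours):.2f} Gbit/s")
```

Today's ~10-day transfer averages under 100 Mbit/s (about 0.09 Gbit/s). The 10-hour target needs about 2.2 Gbit/s, which fits on one lambda if we assume 10 Gbit/s per lambda. The 1-hour target needs about 22 Gbit/s, so under that assumption it would require at least three lambdas in parallel.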

- 13. BIRN Network Limitations • Optimized to conserve bandwidth and waste storage – Geographically dispersed data – Data can easily scale up 10-100 times • L3 networks can’t handle multiple terabytes efficiently and cost-effectively • The network does not fit the “BIRN Workflow” architecture – Collaboration and information sharing are hard • Mega-computation: it is not possible to move the computation to the data (the data must instead move to the computation site) • Research is not interactive: data must be copied first, then analyzed – Analysis is local, with strong geographic limitations – We don’t know ahead of time where the data is • Can’t navigate the data interactively or in real time • Can’t “Webify” large volumes of information • No cooperation/interaction between the storage and network middleware(s)

- 14. Proposed Solution • Switching technology: lambda switching for data-intensive transfer • New abstraction: network resource encapsulated as a Grid service • New middleware service architecture: LambdaGrid service architecture

- 15. Proposed Solution • A proposed LambdaGrid Service architecture that interacts with the BIRN Cyber-infrastructure and overcomes BIRN data limitations efficiently and effectively by: – treating the “network” as a primary resource, just like “storage” and “computation” – treating the “network” as a “scheduled resource” – relying upon a massive, dynamic transport infrastructure: a Dynamic Optical Network

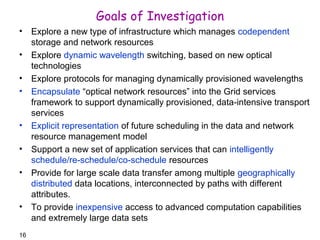

- 16. Goals of Investigation • Explore a new type of infrastructure that manages codependent storage and network resources • Explore dynamic wavelength switching based on new optical technologies • Explore protocols for managing dynamically provisioned wavelengths • Encapsulate “optical network resources” into the Grid services framework to support dynamically provisioned, data-intensive transport services • Explicitly represent future scheduling in the data and network resource management model • Support a new set of application services that can intelligently schedule/re-schedule/co-schedule resources • Provide for large-scale data transfer among multiple geographically distributed data locations, interconnected by paths with different attributes • Provide inexpensive access to advanced computation capabilities and extremely large data sets

- 17. New Concepts • The “Network” is NO longer a network, but a large-scale distributed system • Many-to-Many vs. Few-to-Few • Apps optimized to waste bandwidth • Network as a Grid service • Network as a scheduled service • Cloud bypass • New transport concept • New cooperative control plane

- 18. Research Questions (Dist Comp) • Statistical multiplexing works for small streams, but not for mega-flows; a new concept is multiplexing by scheduling – What will we gain from the new scheduling model? – Evaluate design tradeoffs • Current apps are designed and optimized to conserve bandwidth – If we provide the LambdaGrid service architecture, how will this change the design and architecture of data-intensive eScience workflows? – What are the tradeoffs for applications that waste bandwidth? • The batch-and-queue model does not fit networks; manual scheduling (by email) is not efficient – What is the right model for adding the network as a Grid service? – The network as an integral Grid resource – Overcome Grid VO networking limitations
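One way to frame “What will we gain from the new scheduling model?” is to compare flow completion times. The sketch below is an idealized fluid model, not a measurement. All flows start at time zero. Under fair sharing (processor sharing), the active flows split the lambda equally. Under scheduling, one flow at a time gets the whole lambda, smallest first. The function names are hypothetical.

```python
from typing import List


def fair_share_completion(sizes_bits: List[float], capacity_bps: float) -> List[float]:
    """Completion times when all flows start at t=0 and split the link equally
    (idealized processor sharing). Results are in ascending flow-size order."""
    sizes = sorted(sizes_bits)
    n = len(sizes)
    times, t, prev = [], 0.0, 0.0
    for i, s in enumerate(sizes):
        active = n - i                          # flows still sending
        t += (s - prev) * active / capacity_bps  # each gets capacity / active
        times.append(t)
        prev = s
    return times


def scheduled_completion(sizes_bits: List[float], capacity_bps: float) -> List[float]:
    """Completion times when each flow gets the whole link in turn, smallest first."""
    times, t = [], 0.0
    for s in sorted(sizes_bits):
        t += s / capacity_bps
        times.append(t)
    return times


TB = 8e12                     # bits in one decimal terabyte
flows = [10 * TB, 10 * TB]    # two 10 TB mega-flows
cap = 10e9                    # one 10 Gbit/s lambda (assumed)
print(fair_share_completion(flows, cap))   # [16000.0, 16000.0]
print(scheduled_completion(flows, cap))    # [8000.0, 16000.0]
```

With two equal 10 TB flows, the last transfer finishes at the same time either way. Scheduling, however, halves the first flow's completion time, which cuts the mean completion time from 16,000 s to 12,000 s. The model ignores TCP dynamics and lightpath setup time.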

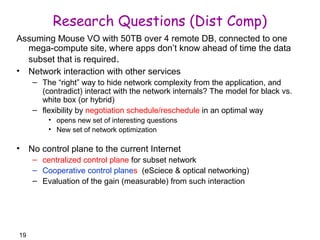

- 19. Research Questions (Dist Comp) • Assume a Mouse VO with 50TB spread over 4 remote DBs, connected to one mega-compute site, where apps don’t know ahead of time which data subset is required • Network interaction with other services – What is the “right” way to hide network complexity from the application while (contradictorily) interacting with the network internals? A black-box vs. white-box (or hybrid) model – Flexibility through negotiation: schedule/reschedule in an optimal way • Opens a new set of interesting questions • A new set of network optimizations • No control plane for the current Internet – A centralized control plane for a subset of the network – Cooperative control planes (eScience & optical networking) – Measurable evaluation of the gain from such interaction

- 20. Research Questions (Net) • IP service is best for many-to-many (~100M) small files; LambdaGrid services are best for few-to-few (~100) huge files • Cloud bypass – Sending 100TB over the Internet would break down the system – Offload mega-flows from the IP cloud to the optical cloud, using the dynamic optical network as an alternate route • What are the design characteristics for cloud bypass? • TCP does not fit mega-flows (well documented) – New proposals (XCP, SABUL, FAST, Tsunami, …) fit the current Internet model but do not take advantage of the dynamic optical network • {distinct characteristics: fairness, collision, drop, loss, QoS, …} – What are the new design principles for cooperative protocols {L1+L4}? – What should be exposed to L3/L4, and through what interface? • Economics – WAN static optical links and L3 WAN ports are expensive – Given dynamic optical links and the proposed service architecture, what design tradeoffs result in an affordable solution?
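To make “TCP does not fit mega-flows” concrete, the sketch below uses the well-known Mathis et al. approximation for the steady-state throughput of a single Reno-style TCP flow. The MSS, RTT, and loss rate are illustrative assumptions, not BIRN measurements.

```python
import math


def mathis_tcp_bps(mss_bytes: int, rtt_s: float, loss_rate: float) -> float:
    """Approximate steady-state throughput of one Reno-style TCP flow.

    Mathis et al. model: rate ~= (MSS / RTT) * sqrt(3/2) / sqrt(p).
    """
    return (mss_bytes * 8 / rtt_s) * math.sqrt(1.5) / math.sqrt(loss_rate)


# Illustrative WAN assumptions: 1460-byte MSS, 100 ms RTT, 1 loss per 100,000 packets.
rate = mathis_tcp_bps(1460, 0.100, 1e-5)
days_for_10tb = 10e12 * 8 / rate / 86400
print(f"{rate / 1e6:.1f} Mbit/s, {days_for_10tb:.1f} days for 10 TB")
```

Under these assumptions a single standard TCP flow reaches only about 45 Mbit/s, so 10 TB would take roughly three weeks. Throughput scales with 1/(RTT·√p), which means long-haul paths need extremely low loss rates before one flow can fill a 10 Gbit/s lambda.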

- 21. Research Questions (Storage) • BIRN’s Storage Resource Broker (SRB) – Hides the physical location of the DB – Concepts of a remote file system (RFS) – Based on the analysis, search in other DBs • SRB Lambda – Tight interaction between SRB and LambdaGrid – Optical control as a file system interface – The “Right Lambda” to the “Right DB” at the “Right time” – “Webify” MRI images (mouse click: ~1GB in ~2 sec) • Diagram: SRB connected through the LambdaGrid to DB1, DB2, DB3, and DB4 over lambdas λ1, λ2, λ3, and λ4
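The “Right Lambda” to the “Right DB” idea can be sized with the same arithmetic. The sketch below makes three assumptions that do not come from BIRN measurements: 10 Gbit/s per lambda, one dedicated lambda per DB as in the diagram, and an even split of the 50 TB from the slide 19 scenario. The function names are hypothetical.

```python
LAMBDA_GBPS = 10.0  # assumed capacity of one lightpath (illustrative)


def transfer_seconds(num_bytes: float, gbps: float = LAMBDA_GBPS) -> float:
    """Time to move num_bytes at a sustained gbps, ignoring setup and overhead."""
    return num_bytes * 8 / (gbps * 1e9)


def parallel_makespan(bytes_per_db: dict, gbps: float = LAMBDA_GBPS) -> float:
    """One dedicated lambda per DB (lambda_i -> DB_i), all running concurrently:
    the job finishes when the largest share finishes."""
    return max(transfer_seconds(b, gbps) for b in bytes_per_db.values())


# Slide 19 scenario: 50 TB over 4 remote DBs (an even split is assumed here).
shares = {f"DB{i}": 12.5e12 for i in range(1, 5)}
print(parallel_makespan(shares) / 3600)   # hours with 4 parallel lambdas
print(transfer_seconds(50e12) / 3600)     # hours over a single lambda
print(transfer_seconds(1e9))              # seconds for one ~1 GB MRI image
```

Four parallel lambdas finish in about 2.8 hours, compared with about 11.1 hours over one lambda. A ~1 GB image needs about 0.8 s at full lambda rate, which is inside the ~2 s “Webify” target.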

- 22. Information Crossing the NRS-Apps Interface • The information is handled by the apps middleware, which acts as a runtime environment for the application and communicates with the LambdaGrid as a whole • SA, DA, Protocol, SP, DP • Scheduling windows (time constraints): {start-after, end-before, duration} • Connectivity: {1-1, 1-N, N-1, N-N, N-M} • Data size (to calculate bandwidth and duration) • Cost (or cost function) • Type of service: {Data Schlepping, Remote Operation, Remote Visualization} • Middleware calculates {bandwidth, delay, jitter, QoS, …} • Translate into a Lambda service • WS-Agreement – ID {user, application, data}, {credential, value, priorities, flexibility, availability} – Handle for returning output (scheduled plan, etc.) and for renegotiation – Handle back for feedback and notification
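The fields listed above map naturally onto a request record. The Python sketch below is one possible encoding, not a defined LambdaGrid or WS-Agreement API. All class and field names are hypothetical, `None` stands for the “*” (don’t care) value, and the required bandwidth is derived from data size and duration as the slide suggests.

```python
from dataclasses import dataclass
from enum import Enum
from typing import Optional


class Connectivity(Enum):
    ONE_TO_ONE = "1-1"
    ONE_TO_N = "1-N"
    N_TO_ONE = "N-1"
    N_TO_N = "N-N"
    N_TO_M = "N-M"


class ServiceType(Enum):
    DATA_SCHLEPPING = "data schlepping"
    REMOTE_OPERATION = "remote operation"
    REMOTE_VISUALIZATION = "remote visualization"


@dataclass
class StreamBinding:
    """SA, DA, Protocol, SP, DP."""
    src_addr: str
    dst_addr: str
    protocol: str
    src_port: int
    dst_port: int


@dataclass
class NetworkRequest:
    stream: StreamBinding
    data_bytes: int
    duration_s: Optional[float] = None    # None: let the middleware derive it
    start_after: Optional[float] = None   # None plays the role of "*"
    end_before: Optional[float] = None    # None plays the role of "*"
    connectivity: Connectivity = Connectivity.ONE_TO_ONE
    service: ServiceType = ServiceType.DATA_SCHLEPPING
    max_cost: Optional[float] = None      # placeholder for a cost or cost function

    def required_bps(self) -> Optional[float]:
        """Bandwidth needed to move data_bytes within duration_s, if one is given."""
        if self.duration_s is None:
            return None
        return self.data_bytes * 8 / self.duration_s


req = NetworkRequest(
    stream=StreamBinding("10.0.0.1", "10.0.0.2", "tcp", 5000, 5001),
    data_bytes=9 * 10**12,   # 9 TB
    duration_s=2 * 3600,     # a 2-hour window
)
print(req.required_bps() / 1e9)   # 10.0 (Gbit/s)
```

In this example, 9 TB in a 2-hour window works out to exactly one 10 Gbit/s lambda. That is the kind of translation into a Lambda service the middleware would perform.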

- 23. Interface Details • SA, DA, Protocol, SP, DP: stream binding identifier {Src addr, Dest addr, Protocol, Src port, Dest port} • Scheduling Window: the window of time during which the application needs the network, given as {start time, end time, duration}. Start and end can be “*” (don’t care). The network will try to schedule the service under these constraints. The network middleware replies with the allocated time, which can be shifted and renegotiated. Multiplexing is done by scheduling the data transfers: the middleware resolves conflicts and allocates an available time slot. Example: allocate 2 hours, starting after 5pm and ending before 11pm • Bandwidth: the bandwidth requirements. Example: allocate 10Gbps half duplex, and 100Mbps in the opposite direction
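The allocation step (“resolve conflicts and allocate the available time slot”) can be sketched as an earliest-fit search over one lambda’s existing reservations. This minimal illustration assumes a single lambda, minute granularity, and first-fit placement. The slides explicitly leave scheduling algorithms out of scope, and the function names are hypothetical.

```python
from typing import List, Optional, Tuple

DAY = 24 * 60                  # minutes in a day
Interval = Tuple[int, int]     # [start, end) in minutes since midnight


def find_slot(busy: List[Interval], duration: int,
              start_after: Optional[int] = None,
              end_before: Optional[int] = None) -> Optional[Interval]:
    """Earliest slot of `duration` minutes inside the scheduling window that does
    not overlap any existing reservation on the lambda; None if nothing fits.
    A window bound of None plays the role of "*" (don't care)."""
    lo = 0 if start_after is None else start_after
    hi = DAY if end_before is None else end_before
    candidate = lo
    for b_start, b_end in sorted(busy):
        if b_end <= candidate:
            continue                 # reservation is already behind the candidate
        if b_start >= candidate + duration:
            break                    # the gap before it is large enough
        candidate = b_end            # conflict: shift past this reservation
    if candidate + duration <= hi:
        return (candidate, candidate + duration)
    return None


def hhmm(minutes: int) -> str:
    return f"{minutes // 60:02d}:{minutes % 60:02d}"


# Slide example: 2 hours, start after 5pm, end before 11pm,
# on a lambda already reserved 17:00-19:30 (hypothetical reservation).
slot = find_slot([(17 * 60, 19 * 60 + 30)], 120, 17 * 60, 23 * 60)
print(hhmm(slot[0]), hhmm(slot[1]))   # 19:30 21:30
```

A real NRS would return this slot as a proposal that the application can accept, shift, or renegotiate, rather than committing it outright.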

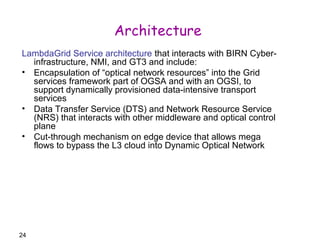

- 24. Architecture • A LambdaGrid service architecture that interacts with the BIRN Cyber-infrastructure, NMI, and GT3, and includes: • Encapsulation of “optical network resources” into the Grid services framework, as part of OGSA and through an OGSI interface, to support dynamically provisioned data-intensive transport services • A Data Transfer Service (DTS) and a Network Resource Service (NRS) that interact with other middleware and the optical control plane • A cut-through mechanism on the edge device that allows mega-flows to bypass the L3 cloud into the dynamic optical network
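Below is a minimal sketch of the cut-through decision at the edge device. It assumes the device knows (or is told by the apps middleware) a flow’s expected size and whether a lambda is currently available. The 1 TB threshold and all names are hypothetical; the slides do not specify how mega-flows are identified.

```python
from dataclasses import dataclass

# Hypothetical cut-off: flows expected to exceed 1 TB count as mega-flows.
MEGA_FLOW_BYTES = 10**12


@dataclass
class Flow:
    src: str
    dst: str
    expected_bytes: int


def choose_path(flow: Flow, lambda_available: bool) -> str:
    """Return "optical" to cut a mega-flow through to the dynamic optical
    network, or "ip" to leave it on the routed L3 path."""
    if flow.expected_bytes >= MEGA_FLOW_BYTES and lambda_available:
        return "optical"   # bypass the L3 cloud on a dedicated lightpath
    return "ip"            # small flows, or no lambda free: stay on IP


print(choose_path(Flow("db1.example", "compute.example", 10 * 10**12), True))  # optical
print(choose_path(Flow("db1.example", "compute.example", 5 * 10**6), True))    # ip
```

Falling back to IP when no lambda is free keeps the edge device from blocking traffic while a lightpath is being scheduled.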

- 25. Layered Architecture (diagram comparing the Grid layered architecture (Fabric, Connectivity, Resource, Collaborative, and Application layers) with my view of the layers. Components shown: BIRN Mouse, apps middleware, BIRN Workflow, BIRN Toolkit, NMI, SRB, GridFTP, TCP/HTTP, UDP, IP, DTS, NRS, Λ OGSI, ODIN, OMNInet, Lambda, resource managers, DB, storage, computation, optical control, optical protocols, and optical hardware)

- 26. Control Interactions (diagram with four planes: Data Grid Service Plane, Network Service Plane, Optical Control Plane, and Data Transmission Plane. Components shown: apps middleware, scientific workflow, resource managers, DTS, NRS, NMI, the optical control network, and lambdas λ1 … λn linking DB, storage, and compute)

- 27. Scope of the Research • Investigate the feasibility of encapsulating a lightpath as an OGSI Grid Service – Identify the characteristics needed to present the lightpath as a prime resource like computation and storage – Define and design the “right” framework and architecture for encapsulating the lightpath – Demonstrate the network Grid service concept • Identify the interactions between storage, computation, and networking middleware – Understand the concept through interactions with NMI, GT3, and SRB – Collaborate with the BIRN Mouse Cyber-infrastructure research • Will NOT investigate: – Schedulers, scheduling algorithms, network optimization – Photonics, hardware, optical networking, optical control, protocols, GT3, NMI, SRB, BIRN components – Development for any commercial optical networking or commercial hardware

- 28. Deliverables • Initial investigation • Build a testbed • “Proof-of-concept {++}” of the LambdaGrid service architecture • Demonstrate one aspect of the BIRN Mouse application with the proposed concepts • Prototype Lambda as an OGSI Grid Service • Develop DTS and NRS, a Grid scheduling service • Propose a service architecture and generalize the concept to other eScience projects

- 29. Timeline • Phase 1 – Continue to develop the Lambda Data Grid prototype – Build a basic BIRN testbed • incorporate Lambda as a service • measure/analyze the performance and scaling behavior • Phase 2 – Develop an OGSI wrapper for the Lambda service • integrate as part of OGSA, interact with OptIPuter – Interface to NMI and SRB – Analyze the overall performance, incorporate the enhancements • Phase 3 – Extract a generalized framework for intelligent services and applications – Incorporate experience from the Grid research community – Measure, optimize, measure, optimize, …

- 30. Generalization and Future Direction for Research • Need to develop and build services on top of the base encapsulation • The LambdaGrid concept can be generalized to other eScience apps, enabling a new way of doing scientific research where bandwidth is “infinite” • The new concept of the network as a scheduled Grid service presents new and exciting problems for investigation: – New software systems that are optimized to waste bandwidth • Network, protocols, algorithms, software, architectures, systems – Lambda Distributed File System – The network as large-scale distributed computing – Resource co-allocation and optimization with storage and computation – Grid system architecture – Enables new horizons for network optimization and lambda scheduling – The network as a white box; optimal scheduling and algorithms

- 31. Thank You • The Future is Bright • Imagine the next 5 years • There are more questions than answers

- 32. Overall System (diagram. Components shown: Mouse applications, apps middleware, meta-scheduler, resource managers (labeled C, S, D, V, I), Data Grid, Comp Grid, Net Grid, GT3, SRB, NMI, DTS, NRS, Lambda-Grid, control plane, and network(s), with “OGSI-fy” and a region marked “Our contribution”)

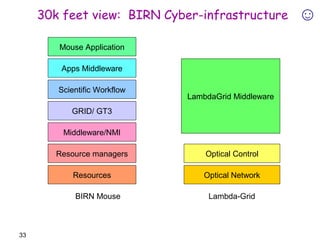

- 33. 30,000-foot view: BIRN Cyber-infrastructure (diagram. Components shown: Mouse application, apps middleware, scientific workflow, middleware/NMI, resource managers, Grid/GT3, LambdaGrid middleware, optical control, optical network, and resources; labeled BIRN Mouse and Lambda-Grid)

- 34. Layered Architecture (prototype skeleton sketch. Components shown: intelligent services, co-reservation, co-allocation, Grid middleware (OGSA/OGSI), Data Grid service, network service, NRS, DTS, Lambda OGSI-ification, generic data-intensive (DI) infrastructure, and centralized optical network control)

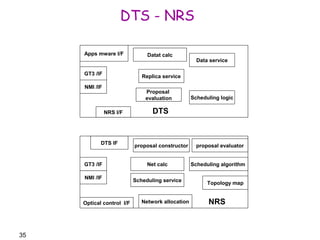

- 35. DTS and NRS (diagram) • DTS: apps middleware I/F, NMI I/F, GT3 I/F, NRS I/F, data service, replica service, scheduling logic, data calc, proposal evaluation • NRS: DTS I/F, NMI I/F, GT3 I/F, optical control I/F, scheduling service, scheduling algorithm, topology map, net calc, proposal constructor, proposal evaluator, network allocation
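The proposal constructor (NRS) and proposal evaluation (DTS) boxes suggest a propose/evaluate exchange. The sketch below is a hypothetical illustration of that exchange, not the prototype’s design. The NRS turns each lambda’s free window into a proposal with a made-up per-minute cost. The DTS then picks the cheapest proposal that meets its deadline, or returns None so the caller can renegotiate.

```python
from dataclasses import dataclass
from typing import List, Optional, Tuple


@dataclass
class Proposal:
    lambda_id: str
    start: int       # minutes since midnight
    end: int
    cost: float      # hypothetical cost units


# (lambda id, free window [lo, hi) in minutes, cost per minute): illustrative inputs
FreeWindow = Tuple[str, Tuple[int, int], float]


def construct_proposals(free: List[FreeWindow], duration: int) -> List[Proposal]:
    """NRS side: one proposal per lambda whose free window can hold the transfer."""
    proposals = []
    for lambda_id, (lo, hi), cost_per_min in free:
        if hi - lo >= duration:
            proposals.append(Proposal(lambda_id, lo, lo + duration, cost_per_min * duration))
    return proposals


def evaluate_proposals(proposals: List[Proposal], deadline: int) -> Optional[Proposal]:
    """DTS side: cheapest proposal finishing by the deadline (earliest end breaks ties)."""
    feasible = [p for p in proposals if p.end <= deadline]
    return min(feasible, key=lambda p: (p.cost, p.end), default=None)


free = [("lambda1", (18 * 60, 24 * 60), 2.0),
        ("lambda2", (17 * 60, 20 * 60), 3.0),
        ("lambda3", (20 * 60, 21 * 60), 1.0)]   # too short for a 2-hour transfer
proposals = construct_proposals(free, 120)
print(evaluate_proposals(proposals, 23 * 60).lambda_id)        # lambda1
print(evaluate_proposals(proposals, 19 * 60 + 10).lambda_id)   # lambda2
```

With a loose deadline the cheaper lambda1 wins. With a tight deadline only the earlier but costlier lambda2 qualifies, which shows how deadlines and cost trade off during negotiation.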

Editor's Notes

- #33: Application-Engaged Networks • Allowing applications to place demands on the network (bandwidth, QoS, duration, …) • Allowing applications to get visibility into network status • Full integration to ensure maximum value and performance