SCM Puppet: from an intro to the scaling

- 1. SCM Puppet: Who controls the spice, controls the universe! Stanislav S. Osipov, 2013

- 2. Table of contents • • • • • • • Overview Features overview Features Scalability & flexibility Mechanics Entities Subsystems

- 3. Overview SCM: what is the buzzword? SCM is an acronym for Software Configuration Management system. Of course, there are many SCM systems in the world. The most known in the open source world are: ● Puppet ● Chef ● Cfengine ● Ansible

- 4. Overview SCM: who are target users? ● SysOps ● DevOps ● Deployment Engineers

- 5. Overview Puppet: Known site sizes ● Two 50k+ node sites (Google is here) ● Multiple 10-30k+ node sites (Zynga is here) ● Tens of 3k+ node sites Many of companies don’t want to announce it’s own names. However, we know some of them.

- 6. Puppet features overview What does Puppet bring to us?

- 7. Features overview ● Scalability ● Flexibility ● Simple basic installation ● Puppet DSL is like a self-documentation ● Deterministic model ● Stored configurations ● Exported resources ● An inventory service with an API ● Reports & report processing

- 8. Features overview ● All configurations in one place ● Environments support: For example: production, development and testing can use different versions of manifests, plugins and modules from the same Puppet master ● Easy to debug and develop ● Strong community and evangelists ● Modular like Lego blocks: you can improve functionality and build a multilayer module model over Puppet modules

- 9. Features overview ● Available in production quality packages from the PuppetLabs package repository for Debian and Red Hat Linux families ● Puppet community has created two dashboards for Puppet: Puppet dashboard and Foreman ● 1200+ pre-built configurations on Puppet Forge including modules from PuppetLabs

- 11. Features Flexibility: models available Client - server model: ○ Server is a point of a management. It is called “Puppet master” usually. ○ Client is a deployment agent. It is called “Puppet agent” usually. “Masterless” model is available too: ○ Puppet can run without server in a standalone mode.

- 12. Features Flexibility: manifests languages available ● Puppet DSL is a declarative style language. If you define something, Puppet will try to deploy nodes every time it runs to met Puppet DSL definitions in manifests. ● Ruby DSL. Puppet allows you to use Ruby DSL instead or in addition to Puppet DSL to implement a very complex logic of a deployment. It is strongly recommended to use Puppet DSL only to keep your configurations readable and deterministic.

- 13. Features Environments Setup the Puppet master via puppet.conf: [main] modulepath = $confdir/modules manifest = $confdir/manifests/site.pp [development] modulepath = $confdir/environments/development/modules manifest = $confdir/environments/development/manifests/site.pp [testing] modulepath = $confdir/environments/testing/modules manifest = $confdir/environments/testing/manifests/site.pp

- 14. Features Environments Then, clone modules to fill your environments: # git clone /etc/puppet/modules /etc/puppet/environments/development/ # git clone /etc/puppet/modules /etc/puppet/environments/testing/

- 15. Features Environments How to pick up and apply manifests from the “development” environment on a particular node? Just use --environment option: puppet agent --test --environment development

- 16. Features Environments How to make the particular node to use a different environment permanently? Set in puppet.conf on your Puppet agent: [agent] environment = development Note, the default value of $environment is “production”.

- 17. Features Environments It was simple, wasn’t it? And at least 2 ways to use.

- 18. Features Simple basic installation Puppet master installation wget http://apt.Puppetlabs.com/puppetlabs-release-precise.deb dpkg -i Puppetlabs-release-precise.deb apt-get update apt-get -y install puppetmaster Puppet agent installation wget http://apt.Puppetlabs.com/puppetlabs-release-precise.deb dpkg -i Puppetlabs-release-precise.deb apt-get update apt-get -y install puppet

- 19. Features Determinism Before a single command is executed on a host, Puppet has constructed what we refer to as "a resource graph" of that node's existing configuration, and determined how your changes will impact that graph.

- 20. Features Determinism Here is a very simple example of such a resource graph:

- 21. Features Determinism: noop / dry-run mode One of the big advantages of the resource graph model is Puppet's unique and unrivaled "noop" functionality, which allows you to simulate a change before you deploy it. puppet agent -v --no-daemonize --noop This is also known as a dry-run mode. It is useful for seeing what changes Puppet will make without actually executing the changes.

- 22. Features Determinism: getting a resource graph Of course, you can get your own “resource graph”, which is actual set of resources for your node: puppet agent -v --no-daemonize --noop --graph It should create the next files: /var/lib/puppet/state/graphs/resources.dot /var/lib/puppet/state/graphs/relationships.dot /var/lib/puppet/state/graphs/expanded_relationships.dot

- 23. Features Determinism: getting a resource graph Then, you can generate a picture of the graph using Graphviz tool: dot -Tpng resources.dot -o resources.png

- 24. Features Determinism: getting a resource graph Of course, you can use interactive tools like Gephi to get a neat and interactive graph pic like this one:

- 26. Features Puppet DSL case $operatingsystem { centos, redhat: { $service_name = 'ntpd' } debian, ubuntu: { $service_name = 'ntp' } } package { 'ntp': ensure => installed } service { 'ntp': name => $service_name, ensure => running, enable => true, subscribe => File['/etc/ntp.conf'], } file { '/etc/ntp.conf': ensure => file, require => Package['ntp'], source => "Puppet:///modules/ntp/ntp.conf", # This source file would be located on the Puppet master at # /etc/puppet/modules/ntp/files/ntp.conf (in open source Puppet) }

- 27. Features Inventory service The inventory is a collection of node facts. The inventory service is a retrieval, storage, and search API exposed to the network by the Puppet master. The inventory service backend (a.k.a. the facts_terminus option) is what the Puppet master uses to store the inventory and do some of the heavy lifting of the inventory service.

- 28. Features Inventory service API ● To retrieve facts for nodefqdn.domain.tld, send a GET request to https://puppet:8140/production/facts/nodefqdn.domain.tld. ● To retrieve a list of all Ubuntu nodes with two or more processors, send a GET request to https://puppet:8140/production/facts_search/search?facts. processorcount.ge=2&facts.operatingsystem=Ubuntu. A further documentation is available at: http://docs.Puppetlabs.com/guides/inventory_service.html

- 29. Puppet scalability & flexibility Dive into unexpected things

- 30. Scalability Out of the box scheme HTTPS request Webrick HTTP server Puppet master / 1 process Sqlite backend

- 31. Scalability Out of the box scheme ● Webrick is a single theaded process web server written in Ruby. Puppet default settings are to launch a single Webrick process to serve connections. In fact, Puppet serves SSL operations itself. ● Sqlite backend doesn’t require a daemon to serve SQlite queries, therefore this solution is very light and doesn’t require an additional SysOp to setup a RDBMS.

- 32. Scalability Out of the box scheme: limitations Webrick becomes a problem when we have more than 1 connection from Puppet agents simultaneously. Is it a solution? Yes, when we have a lab with an only Puppet agent. Otherwise, it is obvious enough we need to replace Webrick with something more effectiveness.

- 33. Scalability: Vertical scaling Boosting a webserver performance HTTPS request Nginx with SSL Thin web server / 1 process Puppet master / N processes Sqlite backend

- 34. Scalability: Vertical scaling Boosting a webserver performance: advantages ● Nginx is the best known web server to serve SSL. It decreases a latency and Ruby server load. ● Thin allows to run many Puppet master processes, the count is predefined manually in your /etc/thin/puppet.yaml config file. The configuration is a quite simple. The best recommendation - to set N from C to 2*C, where C is a count of logical CPU cores you see in the system.

- 35. Scalability: Vertical scaling Boosting a webserver performance: limitations Sqlite becomes a problem when more that 4 Puppet agents tries to connect at the same time. Is it a solution? There is no sense to scale a web server while we have a very thin bottleneck at the backend. Therefore, we need an additional improvement at the backend. Then, it will be a solution.

- 36. Scalability: Vertical scaling Boosting a backend performance HTTPS request Nginx HTTPS Thin web server / 1 process Puppet master / N processes PuppetDB backend

- 37. Scalability: Vertical scaling Boosting a backend performance ● This is a mature and production-ready base model ● PuppetDB can use PostgreSQL or embedded HSQLDB backends ● PuppetDB requires a robust hardware, and should be installed on a dedicated node for big enough deployments ● PuppetDB was written in Java and requires a JVM to run

- 38. Scalability: Vertical scaling Boosting a backend performance The default behaviour of stored configurations is to store a complete copy of every catalog in the backend. This makes backend tables huge in a short time and generates a noticeable load as a consequence. Enabling thin_storeconfigs option allows to store facts, exported resources and tags only. Add the next setting in your puppet.conf: [master] thin_storeconfigs = true

- 39. Scalability: Horizontal scaling Puppet CA directory with manual signing scheme HTTPS requests Nginx HTTPS Nginx HTTPS Thin web server Puppet master N processes Thin web server Puppet master N processes PuppetDB backend

- 40. Scalability: Horizontal scaling Puppet CA directory with manual signing scheme Nginx Nginx /var/lib/puppet/ssl/ca/ /var/lib/puppet/ssl/ca/ Thin+PM /var/lib/puppet/ssl/ca/ Thin+PM ● ● ● puppet cert --list puppet cert --sign puppet cert --clean Network FS ● ● ● ● NFS OCFS2 GlusterFS Whatever

- 41. Scalability: Horizontal scaling Puppet CA directory with manual signing scheme ● Load balancer redirects Puppet CA requests to Puppet CA directory by regex: ^(/.*?)/(certificate.*?)/(.*)$ ● Puppet CA directory /var/lib/puppet/ssl/ca/ is actual on the every balancer node via a network file system. ● Admin can run “puppet cert” component on any node - all nodes will contain the actual state of the Puppet CA directory.

- 42. Scalability: Horizontal scaling Puppet CA directory with auto-signing scheme HTTPS requests Nginx HTTPS Nginx HTTPS Thin web server Thin web server Thin web server Puppet master N processes Puppet master N processes PuppetDB backend Puppet master CA autosign = true @ the only process

- 43. Scalability: Horizontal scaling Race condition on the Puppet CA directory issue When you try to use autosign = true option on more than 1 node with the shared Puppet CA directory, you can meet a race condition and lose sensitive data from it. The solution is to have the only active Puppet CA node in the cluster. All other Puppet CA nodes should be in a “hot standby” mode. If the first (active) node fails in terms of Nginx, the next node in the list will be used as a Puppet CA service.

- 44. Scalability: Horizontal scaling PuppetCA Nginx configuration with “hot standby” A sample configuration for Nginx: upstream puppetca { server 10.10.10.1:18140; server 10.10.10.2:18140 backup; server 10.10.10.3:18140 backup; } ... location ~* ^(/.*?)/(certificate.*?)/(.*)$ { ... proxy_pass http://puppetca; } ...

- 45. Scalability: Horizontal scaling Puppet CA directory with auto-signing scheme Nginx Nginx RO: /var/lib/puppet/ssl/ca/ Thin+PM RO: /var/lib/puppet/ssl/ca/ Thin+PM RW: /var/lib/puppet/ssl/ca/ Thin + a single process PM with autosign = true Network FS ● ● ● ● NFS OCFS2 GlusterFS Whatever

- 46. Scalability: Horizontal scaling Puppet CA directory with auto-signing scheme ● Load balancer redirects puppet CA requests to puppet CA cluster by regex: ^(/.*?)/(certificate.*?)/(.*)$ ● Puppet CA directory /var/lib/puppet/ssl/ca/ is actual on the every balancer node via a network file system. ● Puppet CA node can write to puppet CA directory - all nodes will contain the actual state of the puppet CA directory.

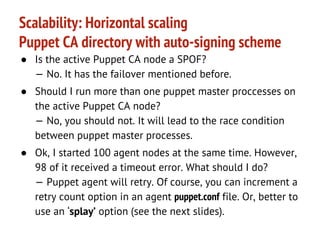

- 47. Scalability: Horizontal scaling Puppet CA directory with auto-signing scheme ● Is the active Puppet CA node a SPOF? — No. It has the failover mentioned before. ● Should I run more than one puppet master proccesses on the active Puppet CA node? — No, you should not. It will lead to the race condition between puppet master processes. ● Ok, I started 100 agent nodes at the same time. However, 98 of it received a timeout error. What should I do? — Puppet agent will retry. Of course, you can increment a retry count option in an agent puppet.conf file. Or, better to use an ‘splay’ option (see the next slides).

- 48. Scalability: Horizontal scaling Using a “splay” option By default, a puppet agent runs every 30 minutes. What if a lot of agent nodes were rebooted at the same time? You will get 99% of agent nodes failed to establish connection with puppet master nodes, because it will be overloaded. What is the solution? Set in your puppet.conf: [agent] splay = true

- 49. Scalability: Horizontal scaling Using a “splay” option What does the ‘splay’ option? ● It requires agent to add a random delay (but not longer than a rerun interval option) before it will run. ● With this option, reboot of 10k nodes will not lead to a Puppet master cluster overload (and crashes on it even) in a moment. ● Also, it will solve the problem with often autosign failures. Failures will happen noticeably rare!

- 50. Scalability: Horizontal scaling Multimaster issues As you noted before, schemes contains more than one Puppet master node. Let we take closer to a question how the balance of load should be reached. • Nginx balances requests between Puppet masters. • Requests between Nginx balancers could be distributed using multiple DNS A/AAAA RRs for a domain name of the Puppet master. • Every balancer could be a member of HA cluster with virtual IP, which is exposed as a DNS A/AAAA RR.

- 51. Scalability: Horizontal scaling Multimaster issues There is an important question about how make manifests synced between a lot of Puppet masters: ● A shared /etc/puppet directory. You will have a lot of caveats with it’s maintenance and security. Try to avoid this way. ● Rsync. Is very simple for very small clusters. ● Git. Useful for development labs or if a company policy requires it. ● A package with manifests (.deb/.rpm/etc). It is the most suitable way for an enterprise level deployment. Of course, package building, testing and publishing processes should be established before.

- 52. Puppet mechanics What is a magic inside?

- 53. SSL & identification Puppet uses SSL only. This aims 3 goals: ● Secure transport between client and server: Puppet can be used even via external networks. ● Identify the node: is trusted and has an unique name and a SSL fingerprint. ● Client can validate the server is trusted or not before to apply a configuration from the server. If not, the deployment process doesn’t starts.

- 54. Puppet mechanics: SSL handshake workflow 1. A new fresh and empty client tries to establish a communication with the Puppet master. If the client has no it’s own SSL certificate, it generates it from the scratch, using $fqdn fact as a client ID. 2. Puppet master checks whether the client is trusted or not. If CA has an information the client certificate has been revoked, the connection brakes.

- 55. Puppet mechanics: SSL handshake workflow 3. If the Puppet master & CA know nothing about the client (i.e. client is truly new) it stores client’s certificate signing request for an admin. 4. If ‘autosign’ option is not set, the request will be stored for admin. Admin can decline the request or sign it. The client will get a correct access to the Puppet master only after it’s certificate is signed on the Puppet master. 5. If ‘autosign’ option is set, the Puppet master signs the client certificate silently and starts to serve the client immediately.

- 56. Puppet mechanics: SSL: the first handshake Puppet agent Hello, show me your cert, please. Okay, here it is. Puppet master Here is my cert. Give me <...>, please. Sorry, your cert is not signed. Good bye. unsigned client cert

- 57. Puppet mechanics: SSL: a manual signing new certs to allow clients Puppet agent Puppet master What certs are waiting? Let me take a look. admin I know this node. Puppet, sign it, please. unsigned client cert

- 58. Puppet mechanics: SSL: the next handshakes Puppet agent Hello, show me your cert, please. Okay, here it is. Puppet master Here is my cert. Give me <data>, please. Here it is. signed client cert Further details are available at: http://projects.Puppetlabs.com/projects/1/wiki/certificates_and_security

- 59. Puppet mechanics: An internal workflow: operation sequence 1. After the SSL connection is established successfully, a client (Puppet agent) receives all additional facts and plugins (if the option ‘pluginsync’ is set as “true”). 2. Then, the client calls Facter. It returns to Puppet agent default and custom facts together. 3. Puppet agent sends all facts to the Puppet server. 4. Server stores facts and compiles a catalog for the node, which is based on facts. 5. Client receives a catalog and applies it. This operation is a pure deployment. PuppetLabs calls it “application configuration”. 6. After the deployment, client sends a report to the server. 7. Server stores and processes (if configured) the report.

- 60. Puppet mechanics: An internal workflow: operation sequence Puppet agent SSL handshake is ok Give me plugins, please. Okay, here it is. Here are my Facter variables. Give me a catalog, please. Here it is. Here is my “deployment” report. Good bye. Puppet master

- 61. Puppet mechanics: A full anatomy

- 62. Puppet entities What are basic bricks for SysOps?

- 63. Entities A resource Resource - an object, which should be in the state after Puppet run is completed, which described with options of the resource. file { ’/etc/hosts’: ensure => present, content => ‘127.0.0.1 } localhost’

- 64. Entities A node definition A node definition can be used in a root manifest only. Here are some node definitions: node default { … } node ‘client1.my.tld’ inherits default { … } node /^client-d+.dc01.my.tld { … }

- 65. Entities A manifest The manifest is a .pp file which contains resources. The root manifest is a site.pp file, placed at /etc/puppet/manifests/ directory usually. Here is a simple site.pp content example: node default { file { ‘/etc/hosts’: ensure => present, content => ‘127.0.0.1 } } localhost’

- 66. Entities A Puppet module The module is a group of manifests and plugins, placed at /etc/puppet/modules/<modulename>/ directory usually. An manifests/init.pp file is the root manifest of the module. The module could be loaded from the site.pp in a very simple manner: node default { include ‘modulename’ }

- 67. Entities A Puppet class The class is a set of resources and conditions, placed at /etc/puppet/modules/<modulename>/manifests/<classname>.pp manifest usually. class classname ( $class_option = ‘default_value’ ) { ... } The class could be loaded in a very simple manner: class { ‘classname’: class_option => ‘custom_value’ }

- 68. Entities A Puppet class Note: class could be called the only once at the whole configuration like a singleton object. Otherwise, you will get a Puppet error. If you want to call a class many times with different options, use a define directive instead.

- 69. Entities A Puppet type definition The type definition is a set of resources and conditions, placed where you want in manifests. define my_res ( $type_option = ‘default_value’ ) { ... } The type could be loaded in a very simple manner: my_res { ‘resourcename’: type_option => ‘custom_value’ }

- 70. Entities A Puppet catalog The catalog - is a plain manifest with no name, without any logic, variables, node definitions, modules, where are defined plain resources with a text prefilled options, which affects the given client only.

- 71. Entities A fact The fact is a named value, returned by tool called Facter. Every fact is available as a global variable inside every manifest. If you want to get closer to it, just run the next command: facter -p to see what variables (facts) are available for your manifests. Example of a fact usage in a manifest: $operatingsystem

- 72. Entities RAL: A type & provider The resource abstraction layer consists of: ● types (high-level models) ● providers (platform-specific implementations) — by splitting the two, it lets you describe desired resource states in a way that isn’t tied to a specific OS.

- 73. Entities A simple RAL model resource: in manifests/init.pp mysuperfile { “/etc/file/file.conf”: … } type: in lib/puppet/type/mysuperfile.rb Puppet::Type.newtype(:mysuperfile) do But sometimes, we need to implement an OS-dependent logic

- 74. Entities A full RAL model resource: in manifests/init.pp mysuperfile { “/etc/file/file.conf”: … } type: in lib/puppet/type/mysuperfile.rb Puppet::Type.newtype(:mysuperfile) do provider: in lib/puppet/provider/mysuperfile.rb Puppet::Type.type(:mysuperfile).provide(:saver) do

- 75. Puppet subsystems Additional things to see Puppet a little bit clearer

- 76. Puppet subsystems Facter Facter is a tool, which collects a node information and returns it in a “key => value” parseable format. Here is a part of a facter output: architecture => x86_64 facterversion => 1.7.0 fqdn => fuel-pm.c7200.d interfaces => eth0,eth1,eth2,lo ipaddress => 10.0.0.100 memoryfree => 112.33 MB Further details are available at: http://docs.Puppetlabs.com/facter/latest/

- 77. Puppet subsystems PuppetDB In version 1.4, PuppetDB stores: ● The most recent facts from every node ● The most recent catalog for every node ● Optionally, seven days (but it is configurable) of event reports for every node Additional details are available at: http://docs.Puppetlabs.com/puppetdb/latest/index.html

- 78. Puppet subsystems PuppetDB PuppetDB collects data generated by Puppet. It enables advanced Puppet features like the inventory service and exported resources, and can be the foundation for other applications that use Puppet’s data. Before PuppetDB appears, Puppet used ActiveRecord.

- 79. Puppet subsystems PuppetDB vs ActiveRecord ActiveRecord supports more different backends, than PuppetDB - like MySQL, Oracle, etc. But, it is deprecated for now. The main issue with AR is a lot of persistent connections to DB. PuppetDB works in an asynchronous way: It accepts all data from a Puppet master as fast as possible, returns ok, puts the data in a PuppetDB internal queue, which sequentially stores the data in the backend like PostgreSQL.

- 80. Puppet subsystems ActiveRecord: before PuppetDB Puppet Master ActiveRecord Puppet Master ActiveRecord SQL

- 81. Puppet subsystems ActiveRecord: before PuppetDB Puppet Master Puppet Puppet Master Puppet Master Puppet Master Puppet Master Master ActiveRecord ActiveRecord ActiveRecord ActiveRecord ActiveRecord ActiveRecord Sorry, so many concurrent SQL locks, persistent connections & writes

- 82. Puppet subsystems ActiveRecord: Before PuppetDB Puppet Master ActiveRecord Stomp Puppet Master ActiveRecord Stomp Puppet Master ActiveRecord SQL Stomp ActiveMQ Puppet queue

- 83. Puppet subsystems ActiveRecord: Before PuppetDB Puppet Puppet Puppet ActiveRecord Master Puppet ActiveRecord Master Puppet ActiveRecord ActiveRecord Master ActiveRecord Master Master Stomp Stomp Stomp Stomp Stomp Puppet Puppet Stomp Puppet ActiveRecord Stomp Master Puppet ActiveRecord Stomp Master Puppet ActiveRecord Stomp Master Puppet ActiveRecord Stomp Master Puppet ActiveRecord Stomp ActiveRecord Master Stomp ActiveRecord Master Master SQL: sorry, I’m too slow ActiveMQ Puppet queue

- 84. Puppet subsystems Before PuppetDB Puppet Puppet Puppet ActiveRecord Master Puppet ActiveRecord Master Puppet ActiveRecord ActiveRecord Master ActiveRecord Master Master Stomp Stomp Stomp Stomp Stomp Puppet Puppet Stomp Puppet ActiveRecord Stomp Master Puppet ActiveRecord Stomp Master Puppet ActiveRecord Stomp Master Puppet ActiveRecord Stomp Master Puppet ActiveRecord Stomp ActiveRecord Master Stomp ActiveRecord Master Master SQL ActiveMQ Puppet queue Script, which expires a data

- 85. Puppet subsystems PuppetDB Puppet Puppet Puppet Master Puppet Master Puppet Master Puppet Master Puppet Master Puppet Master Puppet Master Master Master PuppetDB: ● Async queue ● Cache ● Expiration ● Performance dashboard SQL

- 86. Puppet subsystems PuppetDB vs. AR: the final evolution chart Puppet Master Puppet Master Puppet Master ActiveRecord Stomp Active MQ Puppet queue SQL SQL ActiveRecord PuppetDB SQL

- 87. Puppet subsystems PuppetDB performance dashboard The PuppeDB performance dashboard is available at: http://puppetdb: 8080/dashboard/index.html Further information is available at: http://docs.Puppetlabs. com/puppetdb/latest/maintain_an d_tune.html

- 88. Puppet subsystems Hiera Hiera makes Puppet better by keeping site-specific data out of your manifests. It makes Puppet more flexible. Puppet classes can request whatever data they need, and your Hiera data will act like a sitewide config file. http://docs.Puppetlabs.com/hiera/1/

- 89. Puppet subsystems Hiera ● Easier to configure your own nodes: default data with multiple levels of overrides is finally easy. ● Easier to re-use public Puppet modules: don’t edit the code, just put the necessary data in Hiera. ● Easier to publish your own modules for collaboration: no need to worry about cleaning out your data before showing it around, and no more clashing variable names.

- 90. Puppet subsystems Hiera: what it brings to you? With Hiera, you can: ● Write common data for most nodes ● Override some values for machines located at a particular facility ● Override some of those values for one or two unique nodes. This way, you only have to write down the differences between nodes. When each node asks for a piece of data, it will get the specific value it needs.

- 91. Stanislav S. Osipov oss at gkos dot name Thank you!

![Features

Environments

Setup the Puppet master via puppet.conf:

[main]

modulepath = $confdir/modules

manifest = $confdir/manifests/site.pp

[development]

modulepath = $confdir/environments/development/modules

manifest = $confdir/environments/development/manifests/site.pp

[testing]

modulepath = $confdir/environments/testing/modules

manifest = $confdir/environments/testing/manifests/site.pp](https://image.slidesharecdn.com/puppet-131028164556-phpapp02/85/SCM-Puppet-from-an-intro-to-the-scaling-13-320.jpg)

![Features

Environments

How to make the particular node to use a different

environment permanently?

Set in puppet.conf on your Puppet agent:

[agent]

environment = development

Note, the default value of $environment is

“production”.](https://image.slidesharecdn.com/puppet-131028164556-phpapp02/85/SCM-Puppet-from-an-intro-to-the-scaling-16-320.jpg)

![Features

Puppet DSL

case $operatingsystem {

centos, redhat: { $service_name = 'ntpd' }

debian, ubuntu: { $service_name = 'ntp' }

}

package { 'ntp': ensure => installed }

service { 'ntp':

name

=> $service_name,

ensure

=> running,

enable

=> true,

subscribe => File['/etc/ntp.conf'],

}

file { '/etc/ntp.conf':

ensure

=> file,

require => Package['ntp'],

source

=> "Puppet:///modules/ntp/ntp.conf",

# This source file would be located on the Puppet master at

# /etc/puppet/modules/ntp/files/ntp.conf (in open source Puppet)

}](https://image.slidesharecdn.com/puppet-131028164556-phpapp02/85/SCM-Puppet-from-an-intro-to-the-scaling-26-320.jpg)

![Scalability: Vertical scaling

Boosting a backend performance

The default behaviour of stored configurations is to

store a complete copy of every catalog in the

backend. This makes backend tables huge in a short

time and generates a noticeable load as a

consequence. Enabling thin_storeconfigs

option allows to store facts, exported resources and

tags only. Add the next setting in your puppet.conf:

[master]

thin_storeconfigs = true](https://image.slidesharecdn.com/puppet-131028164556-phpapp02/85/SCM-Puppet-from-an-intro-to-the-scaling-38-320.jpg)

![Scalability: Horizontal scaling

Using a “splay” option

By default, a puppet agent runs every 30 minutes.

What if a lot of agent nodes were rebooted at the

same time?

You will get 99% of agent nodes failed to establish

connection with puppet master nodes, because it

will be overloaded. What is the solution? Set in your

puppet.conf:

[agent]

splay = true](https://image.slidesharecdn.com/puppet-131028164556-phpapp02/85/SCM-Puppet-from-an-intro-to-the-scaling-48-320.jpg)