Quasi newton artificial neural network training algorithms

- 1. Artificial Neural Network Training Algorithms DR.MRINMOY MAJUMDER QUASI-NEWTON LEVENBERG-MARQUARDT

- 2. QUASI-NEWTON METHOD • Newton's method is computationally expensive, as it requires many operations to evaluate the Hessian matrix and compute its inverse. • Alternative approaches, known as quasi-Newton or variable metric methods, are developed to solve that drawback. • These methods, instead of calculating the Hessian directly and then evaluating its inverse, build up an approximation to the inverse Hessian at each iteration of the algorithm. • The main idea behind the quasi-Newton method is to approximate the inverse Hessian by another matrix using only the first partial derivatives of the loss function.

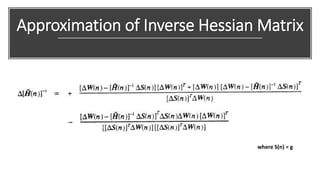

- 3. Equation for Weight Update/Parameter Improvement Approximation of Inverse Hessian Matrix Training Rate

- 4. How ? 1 1st iteration : random values assigned 2 2nd : random value assigned. 3 Use the weight update formula Process 1st iteration Weight assigned randomly Process 2nd iteration Weight Assigned Randomly G Inverse Hessian Matrix Process Weight Update formula used 3rd iteration η Learning rate Either constant or approximated by line minimization g 1st order loss gradient

- 5. Approximation of Inverse Hessian Matrix where S(n) = g

- 6. Levenberg-Marquardt algorithm The Levenberg-Marquardt algorithm or the damped least- squares method, • Has been designed to work specifically with loss functions which take the form of a sum of squared errors. • It works without computing the exact Hessian matrix. • Instead, it works with the Gradient vector and the Jacobian matrix.

- 8. Weight Update Procedure Loss function Gradient vector of the loss function Approximation of the Hessian matrix where m is the number of iterations in the data set and n is the number of weights. e is the vector of all error terms

- 9. Damping parameter λ is a damping factor that ensures the positiveness of the Hessian When the damping parameter λ is zero, this is just Newton's method, using the approximate Hessian matrix. On the other hand, when λ is large, this becomes gradient descent with a small training rate

- 10. Strength and Weakness As we have seen the Levenberg-Marquardt algorithm is a method tailored for functions of the type sum-of- squared-error. That makes it to be very fast when training neural networks measured on that kind of errors. Drawbacks The first one is that it cannot be applied to functions such as the root mean squared error or the cross entropy error. Also, it is not compatible with regularization terms. Finally, for very big data sets and neural networks, the Jacobian matrix becomes huge, and therefore it requires a lot of memory. Therefore, the Levenberg-Marquardt algorithm is not recommended when we have big data sets and/or neural networks.

- 11. Comparisons If our neural networks has many thousands of parameters/weights, we can use gradient descent or conjugate gradient, to save memory. If we have multiple neural networks to train with just a few thousands of instances or data sets and a few hundreds of weights, the best choice might be the Levenberg-Marquardt algorithm. In the rest of situations, the quasi- Newton method will work well.