Regression Testing: Down the Rabbit Hole (MEWT 2014)

- 1. Regression Testing: Down the Rabbit Hole Neil Studd, Towers Watson

- 2. About Me • 10 years of testing • Cambridge-based • Work for companies with red logos

- 3. Only the names have changed…

- 4. Chasing the Holy Grail • We’ll hear lots today about how regression testing should be done – …in an ideal world – …easiest for new projects – …or when starting afresh – …when there’s wider business buy-in, e.g. continuous delivery • The “holy grail” of regression testing…

- 5. I took the red pill • Desktop software • Infrequent releases • Client-driven features • Client-driven deadlines • (Time v features v quality: Quality often loses) • Manual regression cycle • At the end of the release

- 6. Our sacred texts • Tests are treated as a product bible • Handed down through generations • Revered and followed without question • Very much “of their time”; not modified to reflect new evidence

- 7. Oh, the things I’ve seen… • Tests not testing what they claimed to test • Expected result = “a sensible error” • …but that was actually a bug! • Not enough detail • Too much detail

- 8. All the information, all at once

- 9. Why was it done this way?

- 10. We need to go deeper • Five whys: – Not peer-reviewing – Short of time/resources – Fixed project deadline – Unrealistic promise to customer – Salespeople too far removed • Dev/test separation, driven by disrespect (dev) and fear (test) • “Testing is a tester’s problem”

- 11. We fell for the dark side • Don’t allow your tools to start working against you! • TFS: Supports multiple references to one test • TFS: Supports “shared steps” in tests = quickly multiplies setup/teardown • Just because you can easily record a regression test, doesn’t mean you should

- 12. What I didn’t do • Not burning books… • …written in good faith • …useful metadata • …cross-referencing • …gives information about previous perceived severities

- 13. How I’m surviving • Rewriting/reducing • Piecemeal • Session-based • To answer “Is there a problem here?” • …Which involves looking at the product

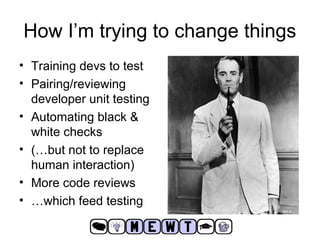

- 14. How I’m trying to change things • Training devs to test • Pairing/reviewing developer unit testing • Automating black & white checks • (…but not to replace human interaction) • More code reviews • …which feed testing

- 15. There’s still room to improve • More automation • Run more easily/often • Increased testability • Address the causes of regressions, rather than fixing the fallout • Focus on providing value and information

- 16. Any questions? • More thought to come (yes Simon I’ll write that article for The Testing Planet) • Blog: neilstudd.com • Twitter: @neilstudd
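Slide 11's caution ("just because you can easily record a regression test, doesn't mean you should") can be made a little more concrete. The notes for that slide suggest weighing the likelihood of a regression and its impact against the time spent running the test in every cycle. The following is only a rough sketch of that trade-off: the `RegressionTest` fields, the scoring formula and the threshold are all assumptions made for illustration, not anything the talk prescribes.

```python
from dataclasses import dataclass


@dataclass
class RegressionTest:
    """Hypothetical record for one manual regression test."""
    name: str
    likelihood: float  # estimated chance of a regression in this area, 0..1
    impact: int        # estimated severity if it regresses, 1 (minor) to 5 (severe)
    minutes: float     # time spent running the test in each cycle

    def value_per_minute(self) -> float:
        # Assumed heuristic: expected "damage prevented" per minute of effort.
        return self.likelihood * self.impact / self.minutes


def triage(tests, threshold):
    """Split tests into those worth keeping and those to review or merge."""
    keep = [t for t in tests if t.value_per_minute() >= threshold]
    review = [t for t in tests if t.value_per_minute() < threshold]
    return keep, review


# Illustrative values only.
tests = [
    RegressionTest("open corrupted file", likelihood=0.5, impact=4, minutes=10),
    RegressionTest("rarely used setting", likelihood=0.05, impact=1, minutes=5),
]
keep, review = triage(tests, threshold=0.05)
print([t.name for t in keep], [t.name for t in review])
```

Tests that land in `review` are not candidates for deletion on that basis alone; as slide 12 notes, their metadata and cross-references may still be worth keeping.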

Editor's Notes

- #2: All slides are the property of Neil Studd & the MEWT organisers; please do not redistribute without prior permission. Alice in Wonderland (2010)

- #3: Me (2014)

- #4: All of the following experience accounts are true. They’re anonymised, but I haven’t worked for that many companies, so you could probably work out who they refer to. It’s all meant with the utmost respect for colleagues (past and present), and provided purely for discussion about the betterment of testing processes. Young Guns (1988)

- #5: The points here all comprise what’s very much the “holy grail” of regression testing. That’s good, and I hope to learn a lot about how others are doing that! Monty Python and the Holy Grail (1975)

- #6: These are my experiences… Time v features v quality = the Project Management Triangle - http://en.wikipedia.org/wiki/Project_management_triangle The Matrix (1999)

- #7: The Ten Commandments (1956)

- #8: Tests not testing: For one feature, the only coverage was “blank inputs = blank outputs”. Expected result: Another example, “Add corrupted data to file A, then try to open file B” – of course it meant A, and when done properly it revealed a bug… Not enough detail = Often skipped if confusing, when running it would’ve revealed a bug. (Why skipped? Why not surfaced the problem? Why wasn’t it understood?) Too much detail = Many different test cases within one; too guided, the “anyone can run them” example. Wayne’s World 2 (1993)

- #9: A mixture of “too much” and “not enough”! Just cram the entire text into the title. Attempting some form of “all-pairs” test for a few key product elements, but presenting them in a hard-to-comprehend manner. They’re all authentication checks = candidates for automation, where a computer won’t have to comprehend all of this… Star Trek: The Next Generation, “Deja Q” (1990)

- #10: Quote from Grace Hopper

- #11: How did our tests get into this state? A quick five-whys analysis (http://en.wikipedia.org/wiki/5_Whys). Other dev/test conflict that I’ve seen, thankfully not in current org, leading to reduced understanding/prioritisation within test team. Inception (2010)

- #12: A couple of issues that we uncovered after two years of using Microsoft Team Foundation Server. Multiple refs to one test: Can be hard to judge work left. Shared steps means that each test has its own setup/teardown cycle, when they’re all testing in the same area; better to turn those into single scenarios/sessions. Easy recording (one-line test entry) encouraged tests to be created for even the tiniest thing, because it’s easy to create them… but we need to judge the likelihood of regression and what the impact would be, versus the time spent running this test in every cycle… Star Wars Episode VI: Return Of The Jedi (1983)

- #13: Many tests exist for a reason. Good faith, e.g. 6 tests to check 6 different settings for one parameter, where (since launch) we know only 1 or 2 are in everyday use. Many tests contain cross-references to (e.g.) support tickets or bug IDs, allowing us to understand why (at one point) this was deemed important to somebody. This helps us find out if we’ve previously broken (and fixed) an issue. Regressions can be embarrassing, but more so if you’ve already shipped a patch for it before… Fahrenheit 451 (1966)

- #14: With colleagues: Refactoring areas, as we find a need to update or re-run them (i.e. not a big, all-consuming, top-down effort to rewrite; more of a “make do and mend” approach). Creating scenario-based sessions, allowing us to (for instance) turn dozens of repetitive feature tests into 6 real-world scenarios which touch upon the same areas. This also allows us to record more than just “time spent”: we can review time spent “on-charter” vs time spent investigating bugs, to give us another quality measurement. Answering “is there a problem here” requires human interaction; we need to learn from our findings. I Am Legend (2007)

- #15: Some ways in which we can catch regressions earlier, which we’re doing (with reasonable results so far). We’re learning as we go, adapting our approaches, seeing what works. Not precious about process. Training devs to test: Reminding them of common mistakes/weaknesses in our product, as well as pair-review during development. Encouraging careful/collaborative development. Repeated automated checks to identify regressions (or a decrease in quality) after changes occur. Automation with care: Too many checks = not enough people looking at the software, which may prevent new risks from being uncovered and reduce learning. Code reviews: Not just to catch glaring issues, but also to provide “hints to testers” about what’s impacted and what the risks are, to help shape the regression testing approach. 12 Angry Men (1957)

- #16: Value and information: Is regression even the biggest risk? New problems (in new features) tend to be more prevalent. …If we (and our internal/beta testers) are using the application in a user-centred manner, and regressions slip through, would a real user notice (or care) either? Rocky Balboa (2006)

- #17: Recommended viewing: Michael Bolton’s EuroSTAR webinar “Things could get worse”: https://www.youtube.com/watch?v=VNfPwD9u9Bk Batman Forever (1995)
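Note #9 describes a hand-written “all-pairs” test for authentication checks and calls them candidates for automation. As a minimal sketch of what that could look like, the code below uses a simple greedy selection to pick combinations until every pair of parameter values has appeared together at least once. The parameter names and values are hypothetical, and the greedy approach is one common way to approximate all-pairs coverage, not necessarily how the original tests were designed.

```python
from itertools import combinations, product


def covers(case, pair):
    """True if a test case contains both (parameter, value) halves of a pair."""
    (a, va), (b, vb) = pair
    return case[a] == va and case[b] == vb


def all_pairs(parameters):
    """Greedily choose cases so every value pair across two parameters is covered."""
    names = list(parameters)
    # Every pair of values (from two different parameters) that must co-occur.
    uncovered = {
        ((a, va), (b, vb))
        for a, b in combinations(names, 2)
        for va in parameters[a]
        for vb in parameters[b]
    }
    candidates = [dict(zip(names, values)) for values in product(*parameters.values())]
    chosen = []
    while uncovered:
        # Pick the candidate that covers the most still-uncovered pairs.
        best = max(candidates, key=lambda c: sum(covers(c, p) for p in uncovered))
        chosen.append(best)
        uncovered = {p for p in uncovered if not covers(best, p)}
    return chosen


# Hypothetical authentication parameters, for illustration only.
auth_parameters = {
    "account": ["active", "locked"],
    "password": ["correct", "wrong", "expired"],
    "two_factor": ["valid", "missing"],
}
for case in all_pairs(auth_parameters):
    print(case)
```

Each generated case would still need an expected outcome and a check against the product; the point is only that a computer can enumerate and run these combinations without anyone having to read them from a test title.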