Spark Summit EU talk by Sital Kedia

- 1. Apache Spark at Scale: A 60 TB+ production use case Sital Kedia Facebook

- 2. Agenda • Use case: Entity ranking • Previous Hive implementation • Spark implementation • Performance comparison • Reliability improvements • Performance improvements • Configuration tuning

- 3. Use case: Entity ranking • Used to serve real-time queries that rank entities • Entities can be users, places, pages, etc. • Raw features are generated offline using Hive and loaded onto the system for real-time querying

- 4. Previous Hive implementation • Pipeline: input table → Filter → tmp_table1 → Aggregate → tmp_table2 → Shard → indexed HDFS files • Filter: INSERT OVERWRITE TABLE tmp_table1 PARTITION (...) SELECT entity_id, target_id, feature_id, feature_value FROM input_table WHERE ... • Aggregate: INSERT OVERWRITE TABLE tmp_table2 PARTITION (...) SELECT entity_id, target_id, AGG(feature_id, feature_value) FROM tmp_table1 • Shard: SELECT TRANSFORM (entity_id % SHARDS as shard_id, ...) USING 'indexer' -- writes indexed files to HDFS AS shard_id, status FROM tmp_table2 • 60 TB+ compressed input data • Split into hundreds of smaller Hive jobs sharded by entity ID • Unmanageable and slow

- 5. Spark implementation • Pipeline: input table → indexed HDFS files • Query: SELECT TRANSFORM (shard_id, ...) USING 'indexer' AS shard_id, status FROM ( SELECT entity_id % SHARDS as shard_id, entity_id, target_id, AGG(...) FROM input_table WHERE ... GROUP BY shard_id, entity_id, feature_id, target_id CLUSTER BY shard_id ) AS T • Single job with 2 stages • Shuffles 90 TB+ of compressed intermediate data
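
The data flow of the inner query (aggregate per key, then cluster by shard) can be mimicked in plain Python. This is only a minimal sketch: the shard count of 4, the use of summation in place of the unspecified `AGG`, and the sample rows are all assumptions for illustration, not values from the talk.

```python
from collections import defaultdict

SHARDS = 4  # hypothetical shard count; the talk does not state the real value


def aggregate_and_cluster(rows, shards=SHARDS):
    """Group rows by (shard_id, entity_id, feature_id, target_id), then cluster by shard.

    rows: iterable of (entity_id, target_id, feature_id, feature_value).
    Summation stands in for the unspecified AGG function (an assumption).
    """
    totals = defaultdict(float)
    for entity_id, target_id, feature_id, value in rows:
        shard_id = entity_id % shards
        totals[(shard_id, entity_id, feature_id, target_id)] += value

    # CLUSTER BY shard_id: all rows of a shard land in the same bucket,
    # which is what one 'indexer' process would then consume.
    by_shard = defaultdict(list)
    for (shard_id, entity_id, feature_id, target_id), total in sorted(totals.items()):
        by_shard[shard_id].append((entity_id, target_id, feature_id, total))
    return dict(by_shard)


# Made-up sample rows: (entity_id, target_id, feature_id, feature_value)
rows = [
    (5, 100, 1, 0.5),
    (5, 100, 1, 1.5),
    (9, 200, 2, 3.0),
    (6, 300, 1, 2.0),
]
clustered = aggregate_and_cluster(rows)
```

Entities 5 and 9 both map to shard 1 here, so a single bucket holds everything the indexer needs for that shard.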

- 7. CPU time • [Bar chart: CPU time (in cpu-days), Hive vs. Spark, for Job 1 and Job 2] • Collected from the OS proc file system • Aggregated across all executors

- 8. CPU reservation time • [Bar chart: CPU reservation time (in cpu-days), Hive vs. Spark, for Job 1 and Job 2] • Executor run time * spark.executor.cores • Aggregated across all executors
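
The reservation metric on this slide is simple arithmetic: executor run time multiplied by `spark.executor.cores`, summed over executors. A small worked sketch follows; the run times and the core count are made-up numbers, not measurements from the talk.

```python
SECONDS_PER_DAY = 24 * 60 * 60


def cpu_reservation_days(executor_run_seconds, executor_cores):
    """Sum (run time * cores) over all executors and convert to cpu-days."""
    reserved_cpu_seconds = sum(run * executor_cores for run in executor_run_seconds)
    return reserved_cpu_seconds / SECONDS_PER_DAY


# Hypothetical figures: three executors, each with spark.executor.cores = 4.
run_times = [43_200, 86_400, 43_200]  # seconds each executor was running
reserved = cpu_reservation_days(run_times, executor_cores=4)
```

Unlike CPU time, this counts cores that were held by an executor whether or not they were busy.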

- 9. Latency • [Bar chart: latency (in hours), Hive vs. Spark, for Job 1 and Job 2] • End-to-end latency of the job

- 11. Fix memory leak in the sorter (SPARK-14363) • [Diagram labels: record, pointer array, sort, spill to disk (local disk), free memory pages, memory page, memory leak]

- 12. Seamless cluster restart • [Diagram components: Driver (task scheduler, backend scheduler), Resource Manager, Zookeeper, Workers (shuffle service, executors, local disk)] • [Diagram steps: cluster manager down notification, ignore all task failures, cluster manager up notification, stop ignoring task failures, request resources, launch executors, register the shuffle files]
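
One reading of the diagram labels is that the driver stops counting task failures between the "cluster manager down" and "cluster manager up" notifications. The sketch below models only that idea. The class, its method names, and the failure threshold are hypothetical and do not correspond to Spark's scheduler classes.

```python
class FailureTracker:
    """Counts task failures, except while the cluster manager is reported down."""

    def __init__(self, max_failures=4):
        self.max_failures = max_failures  # hypothetical abort threshold
        self.failures = 0
        self.cluster_manager_up = True

    def on_cluster_manager_down(self):
        # "Cluster Manager down notification": start ignoring task failures.
        self.cluster_manager_up = False

    def on_cluster_manager_up(self):
        # "Cluster Manager up notification": stop ignoring task failures.
        self.cluster_manager_up = True

    def on_task_failure(self):
        """Record a failure; return True if the job should be aborted."""
        if not self.cluster_manager_up:
            return False  # failures during the restart window are not counted
        self.failures += 1
        return self.failures >= self.max_failures


tracker = FailureTracker(max_failures=2)
tracker.on_cluster_manager_down()
ignored = [tracker.on_task_failure() for _ in range(10)]  # all ignored
tracker.on_cluster_manager_up()
first = tracker.on_task_failure()   # counted, below threshold
second = tracker.on_task_failure()  # counted, reaches threshold
```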

- 13. Other reliability improvements • Various memory leak fixes (SPARK-13958 and SPARK-17113) • Make PipedRDD robust to fetch failures (SPARK-13793) • Configurable max number of fetch failures (SPARK-13369) • Unresponsive driver (SPARK-13279) • TimSort issue due to integer overflow for large buffer (SPARK-13850)

- 16. Tools • Thread dump from Spark UI

- 17. Tools • Flame graph • [Diagram: periodic jstack/perf sampling on each executor, filtered to executor threads, collected by a jstack aggregator service on the worker]
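
The aggregation step can be pictured as folding many stack samples into the "collapsed" text format that flame graph tools commonly read: frames joined by semicolons, root first, followed by a sample count. This is a minimal sketch under assumptions: samples are already parsed into `(thread_name, frames)` pairs with the innermost frame first, and the thread-name prefix used for filtering is illustrative. It is not the aggregator service from the talk.

```python
from collections import Counter


def fold_samples(samples, thread_prefix="Executor task launch worker"):
    """Fold stack samples into collapsed-stack lines for a flame graph.

    samples: iterable of (thread_name, frames), frames ordered innermost first,
             the way jstack prints them.
    thread_prefix: assumed naming convention used to keep only executor threads.
    """
    counts = Counter()
    for thread_name, frames in samples:
        if not thread_name.startswith(thread_prefix):
            continue  # filter out non-executor threads
        # Collapsed format wants the root frame first, so reverse the stack.
        counts[";".join(reversed(frames))] += 1
    return [f"{stack} {n}" for stack, n in sorted(counts.items())]


# Made-up samples for illustration.
samples = [
    ("Executor task launch worker-0", ["Sorter.insert", "Task.run"]),
    ("Executor task launch worker-1", ["Sorter.insert", "Task.run"]),
    ("Executor task launch worker-2", ["Snappy.compress", "Task.run"]),
    ("dispatcher-event-loop-3", ["Object.wait"]),
]
folded = fold_samples(samples)
```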

- 18. Reduce shuffle write latency (SPARK-5581, up to 50% speed-up) • [Diagram, before: map task sorts and spills, then writes its shuffle partitions with a file open and file close between partitions] • [Diagram, after: map task sorts and spills, then writes its shuffle partitions without the per-partition open and close]

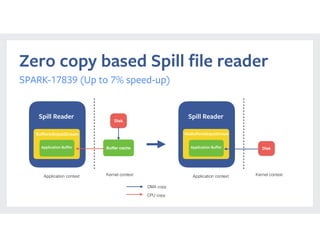

- 19. Zero-copy based spill file reader (SPARK-17839, up to 7% speed-up) • [Diagram, before: disk → buffer cache (kernel context, DMA copy) → BufferedInputStream → application buffer (application context, CPU copy) → spill reader] • [Diagram, after: disk → application buffer → spill reader, using NioBufferedInputStream]

- 20. Cache index files on shuffle server (SPARK-15074) • [Diagram, before: for each reducer's shuffle fetch, the shuffle service reads the index file and then reads the partition] • [Diagram, after: the shuffle service reads and caches the index file on the first fetch, then only reads partitions for later fetches]
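
The idea on this slide, reading an index file once and serving later fetches from memory, can be sketched with a small LRU cache. Everything below is an illustrative assumption: the class, the `load_index` callback, the cache size, and the representation of an index as a list of partition offsets are not taken from Spark's shuffle service code.

```python
from collections import OrderedDict


class IndexCache:
    """LRU cache mapping an index file path to its list of partition offsets."""

    def __init__(self, load_index, max_entries=1024):
        self._load_index = load_index    # hypothetical callback that reads a file
        self._max_entries = max_entries  # hypothetical cache capacity
        self._cache = OrderedDict()
        self.loads = 0                   # how often the "disk" was actually read

    def partition_range(self, index_path, reduce_id):
        """Return (start, end) byte offsets of one partition in the data file."""
        offsets = self._cache.get(index_path)
        if offsets is None:
            offsets = self._load_index(index_path)
            self.loads += 1
            self._cache[index_path] = offsets
            if len(self._cache) > self._max_entries:
                self._cache.popitem(last=False)  # evict least recently used
        else:
            self._cache.move_to_end(index_path)  # mark as recently used
        return offsets[reduce_id], offsets[reduce_id + 1]


# A dict stands in for index files on local disk (made-up offsets).
fake_disk = {"shuffle_0_0.index": [0, 100, 250, 400]}
cache = IndexCache(fake_disk.__getitem__)
first = cache.partition_range("shuffle_0_0.index", 1)
second = cache.partition_range("shuffle_0_0.index", 2)
```

Two reducers fetching from the same map output trigger a single index read in this sketch, which mirrors the "read and cache index file" label in the diagram.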

- 21. Other performance improvements • Snappy optimization (SPARK-14277) • Fix duplicate task run issue due to fetch failure (SPARK-14649) • Configurable buffer size for PipedRDD (SPARK-14542) • Reduce update frequency of shuffle bytes written metrics (SPARK-15569) • Configurable initial buffer size for Sorter (SPARK-15958)

- 23. Configuration tuning • Memory configurations: spark.memory.offHeap.enabled = true, spark.executor.memory = 3g, spark.memory.offHeap.size = 3g • Use parallel GC instead of G1GC: spark.executor.extraJavaOptions = -XX:+UseParallelGC • Enable dynamic executor allocation: spark.dynamicAllocation.enabled = true

- 24. Configuration tuning • Tune shuffle service: spark.shuffle.io.serverThreads = 128, spark.shuffle.io.backLog = 8192 • Buffer size configurations: spark.unsafe.sorter.spill.reader.buffer.size = 2m, spark.shuffle.file.buffer = 1m, spark.shuffle.sort.initialBufferSize = 4194304
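
For reference, the settings from the two configuration slides can be collected in one place and turned into `--conf key=value` arguments for `spark-submit`. This is a convenience sketch only. The helper name is made up, and the values are the ones shown on the slides, which were tuned for this specific workload and are not general recommendations.

```python
# Settings as listed on the configuration slides.
SETTINGS = {
    "spark.memory.offHeap.enabled": "true",
    "spark.executor.memory": "3g",
    "spark.memory.offHeap.size": "3g",
    # HotSpot boolean flags are enabled with a '+' after '-XX:'.
    "spark.executor.extraJavaOptions": "-XX:+UseParallelGC",
    "spark.dynamicAllocation.enabled": "true",
    "spark.shuffle.io.serverThreads": "128",
    "spark.shuffle.io.backLog": "8192",
    "spark.unsafe.sorter.spill.reader.buffer.size": "2m",
    "spark.shuffle.file.buffer": "1m",
    "spark.shuffle.sort.initialBufferSize": "4194304",
}


def to_submit_args(settings):
    """Render a settings dict as a flat list of spark-submit '--conf' arguments."""
    args = []
    for key, value in settings.items():
        args += ["--conf", f"{key}={value}"]
    return args


submit_args = to_submit_args(SETTINGS)
```

The resulting list can be appended to a `spark-submit` command line, for example through `subprocess.run(["spark-submit", *submit_args, ...])`.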

- 25. Resource • Apache Spark @Scale: A 60 TB+ production use case

- 26. Questions?