Static Partitioning with Xen, LinuxRT, and Zephyr: A Concrete End-to-end Example - ELC NA 2022

- 1. Static Partitioning with Xen, LinuxRT, and Zephyr: a concrete end-to-end example (Stefano Stabellini, Embedded Linux Conference 2022)

- 2. Mixed-Criticality and Static Partitioning
  - It is common to have a mix of critical and non-critical components:
    - Real-time vs. non-real-time
    - Function-critical vs. user interface or cloud interface
  - Running them in the same environment puts the critical function at risk:
    - A failure in the non-critical component causes a critical system crash
    - The non-critical component using too much CPU time causes a missed deadline
  - Don't put all your eggs in one basket
  - Static partitioning allows you to run them separately, in isolation. Many baskets!
    - Each domain has direct hardware access (IOMMU protected)
    - Real-time (< 5us latency)
    - Strong isolation between the domains:
      - Real-time isolation
      - Failure isolation
    - By default, each component has only enough privilege to do what it needs to do

  (Diagram: Xen hosting one critical and two non-critical domains.)

- 3. Static Partitioning

  (Diagram: Linux Dom0, Dom1, Zephyr, and LinuxRT domains, with hardware such as the Programmable Logic, GEM0, MMC0, GEM1, MMC1, TTC, and SATA assigned across them.)

- 4. Xen Features for Static Partitioning
  - Xen Dom0less: static domain configuration for Xen
    - Statically defined domains
    - Sub-second boot times
    - No need to create new VMs at runtime (possible but not required)
    - No need for Dom0 (possible but not required)
  - Each domain has direct hardware access
  - Real-time and Cache Coloring: isolation from cache interference
    - Deterministic interrupt latency of 3us
  - VM-to-VM communication
    - Static shared memory and event channels
    - PV drivers for device sharing
  - Safety-certifiability improvements underway
    - MISRA C
    - Deterministic interrupt handling and memory allocations

- 5. Step 1: a simple Xen dom0less static configuration
  - Board: Xilinx ZCU102
  - Xen and 3 VMs:
    - Regular Linux Dom0 from the Xilinx BSP
    - LinuxRT DomU with a minimal BusyBox rootfs
    - Zephyr DomU
  - Configuration parameters:
    - Memory allocation
    - CPU allocation
    - Device assignment (added later)
  - Do we need Dom0?
    - Useful for system monitoring and restarting domains
    - If Dom0 is required, the Xen userspace tools (xl) need to be built and added to the Dom0 rootfs

  (Diagram: Xen running Linux Dom0, LinuxRT, and Zephyr.)

- 6. Xen Dom0less boot

  (Diagram: U-Boot boots Xen, which boots Linux Dom0 on CPU 0, LinuxRT DomU on CPUs 1-2, and Zephyr DomU on CPU 3.)

- 7. ImageBuilder
  - A tool to automatically generate a bootable configuration from a simple text config file
  - https://gitlab.com/xen-project/imagebuilder

  ```bash
  MEMORY_START="0x0"
  MEMORY_END="0x80000000"
  DEVICE_TREE="elc22demo1/mpsoc.dtb"
  XEN="elc22demo1/xen"
  DOM0_KERNEL="elc22demo1/xilinx-linux"
  DOM0_RAMDISK="elc22demo1/xen-rootfs.cpio.gz"
  DOM0_MEM=1024
  NUM_DOMUS=2
  DOMU_KERNEL[0]="elc22demo1/linuxrt"
  DOMU_RAMDISK[0]="elc22demo1/initrd.cpio"
  DOMU_MEM[0]=2048
  DOMU_VCPUS[0]=2
  DOMU_KERNEL[1]="elc22demo1/zephyr.bin"
  DOMU_MEM[1]=128
  ```

  ```
  # bash imagebuilder/scripts/uboot-script-gen -t tftp -d . -c elc22demo/config
  ```

- 8. ImageBuilder boot.source
  - ImageBuilder generates boot.source and boot.scr, which are loaded by U-Boot
  - Editing boot.source is possible; afterwards, call mkimage by hand to regenerate boot.scr:

  ```
  # mkimage -A arm64 -T script -C none -a 0xc00000 -e 0xc00000 -d boot.source boot.scr
  ```

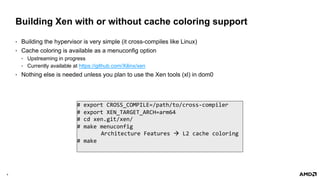

- 9. Building Xen with or without cache coloring support
  - Building the hypervisor is very simple (it cross-compiles like Linux)
  - Cache coloring is available as a menuconfig option
    - Upstreaming in progress
    - Currently available at https://github.com/Xilinx/xen
  - Nothing else is needed unless you plan to use the Xen tools (xl) in Dom0

  ```
  # export CROSS_COMPILE=/path/to/cross-compiler
  # export XEN_TARGET_ARCH=arm64
  # cd xen.git/xen/
  # make menuconfig
      Architecture Features -> L2 cache coloring
  # make
  ```

- 10. Building a Linux/LinuxRT kernel for Xen
  - Vanilla Linux releases and binaries work; the demo uses Linux v5.17
  - The following Kconfig options are recommended:
    - CONFIG_XEN
    - CONFIG_XEN_BLKDEV_BACKEND
    - CONFIG_XEN_NETDEV_BACKEND
    - CONFIG_XEN_GNTDEV
    - CONFIG_XEN_GRANT_DEV_ALLOC
    - CONFIG_XEN_GRANT_DMA_ALLOC
    - CONFIG_SERIAL_AMBA_PL011
    - CONFIG_SERIAL_AMBA_PL011_CONSOLE
    - CONFIG_BRIDGE
  - Either CONFIG_XEN or CONFIG_SERIAL_AMBA_PL011 is needed for console output
  - Two patches are needed for PV driver support with dom0less
    - The patches are already accepted and will be upstream soon

- 11. Building a Dom0 rootfs
  - Dom0 requires the Xen userspace tools
  - Build a Linux rootfs for Dom0 with Xen support using Yocto:

  ```
  DISTRO_FEATURES:append = " xen"
  DISTRO_FEATURES:append = " virtualization"
  IMAGE_INSTALL:append = " xen-base zlib-dev"
  ASSUME_PROVIDED += " iasl-native"
  ```

- 12. Building Zephyr with Xen support
  - An initial Zephyr build environment setup is required; see https://docs.zephyrproject.org/latest/develop/getting_started/index.html
  - Use the existing xenvm machine:
    - Build-time device tree: boards/arm64/xenvm/xenvm.dts
    - Memory mappings: soc/arm64/xenvm/mmu_regions.c
  - To run Zephyr as a Dom0less guest:
    - Replace xen_hvc with xen_consoleio_hvc in xenvm.dts
    - Build Zephyr with Dom0 support
    - Build Xen with DEBUG enabled

  ```
  # west build -t menuconfig
    -> SoC/CPU/Configuration Selection (Xen virtual machine on aarch64)
       -> Xen virtual machine on aarch64 -> Zephyr as Xen Domain 0
    -> Device Drivers -> Serial Drivers -> Xen hypervisor Dom0 console UART driver
  # west build -b xenvm samples/hello_world/
  ```

- 13. Step 2: Add Device Assignment
  - Device assignment:
    - Direct hardware access
    - Protected by the IOMMU
  - Example configuration:
    - Assigning the network device (GEM) to LinuxRT
    - Assigning the TTC timer to Zephyr

  (Diagram: Xen with Linux Dom0, LinuxRT with GEM0 assigned, and Zephyr with TTC0 assigned.)

- 14. Xen Device Assignment
  - Based on "partial device trees":
    - They describe the device for the DomU
    - They configure device assignment
  - Everything under the passthrough node is copied to the guest device tree
  - Special device assignment configuration properties:
    - xen,reg: MMIO regions to map, as <host address> <size> <guest address>
    - xen,path: path of the corresponding node in the host device tree
    - xen,force-assign-without-iommu: assign the device even though it is not protected by an IOMMU
  - The interrupts property is used for interrupt remapping
  - interrupt-parent must be 0xfde8 (65000, the phandle Xen gives the guest's virtual GIC)

  ```dts
  /dts-v1/;

  / {
      #address-cells = <0x2>;
      #size-cells = <0x2>;

      passthrough {
          compatible = "simple-bus";
          ranges;
          #address-cells = <0x2>;
          #size-cells = <0x2>;

          ethernet@ff0e0000 {
              compatible = "cdns,zynqmp-gem";
              […]
              reg = <0x0 0xff0e0000 0x0 0x1000>;
              interrupt-parent = <0xfde8>;
              interrupts = <0x0 0x3f 0x4 0x0 0x3f 0x4>;
              xen,reg = <0x0 0xff0e0000 0x0 0x1000 0x0 0xff0e0000>;
              xen,path = "/amba/ethernet@ff0e0000";
          };
      };
  };
  ```
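
The xen,reg and interrupts cells in this example can be decoded by hand. The C sketch below is a hypothetical helper, not Xen's own device tree parser; it assumes #address-cells and #size-cells are both 2, as in the partial device tree above, and the standard 3-cell GIC interrupt specifier (type, number, flags). The names decode_xen_reg and gic_spec_to_irq_id are illustrative only.

```c
#include <assert.h>
#include <stddef.h>
#include <stdint.h>

/* One xen,reg entry: <host address> <size> <guest address>. */
struct xen_reg_entry {
    uint64_t host_addr;
    uint64_t size;
    uint64_t guest_addr;
};

/* Join two 32-bit cells (most significant first) into one 64-bit value,
 * as implied by #address-cells = <0x2> and #size-cells = <0x2>. */
static uint64_t cells_to_u64(const uint32_t *cells)
{
    return ((uint64_t)cells[0] << 32) | cells[1];
}

/* Decode a xen,reg property: each entry is 6 cells (2 host address,
 * 2 size, 2 guest address). Returns the number of entries decoded. */
static size_t decode_xen_reg(const uint32_t *cells, size_t ncells,
                             struct xen_reg_entry *out, size_t max_out)
{
    size_t n = 0;

    assert(ncells % 6 == 0);
    for (size_t i = 0; i + 6 <= ncells && n < max_out; i += 6, n++) {
        out[n].host_addr = cells_to_u64(&cells[i]);
        out[n].size = cells_to_u64(&cells[i + 2]);
        out[n].guest_addr = cells_to_u64(&cells[i + 4]);
    }
    return n;
}

/* GIC interrupt specifier <type number flags>: type 0 is an SPI
 * (IDs start at 32), type 1 is a PPI (IDs start at 16).
 * flags 0x4 means level-triggered, active high. */
static uint32_t gic_spec_to_irq_id(const uint32_t spec[3])
{
    return spec[1] + (spec[0] == 0 ? 32u : 16u);
}
```

For the GEM node, the single xen,reg entry maps host 0xff0e0000 to guest 0xff0e0000 (size 0x1000), so the device appears at the same address in the guest, and SPI 0x3f (63) corresponds to GIC interrupt ID 95.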

- 15. Xen Device Assignment and ImageBuilder
  - Add xen,passthrough; under the corresponding node in the host device tree
  - Add the partial device tree binaries to the ImageBuilder config file
  - ImageBuilder will load them automatically for you at boot

  ```bash
  […]
  NUM_DOMUS=2
  DOMU_KERNEL[0]="elc22demo2/linuxrt"
  DOMU_RAMDISK[0]="elc22demo2/initrd.cpio"
  DOMU_MEM[0]=2048
  DOMU_VCPUS[0]=2
  DOMU_PASSTHROUGH_DTB[0]="elc22demo2/ethernet@ff0e0000.dtb"
  DOMU_KERNEL[1]="elc22demo2/zephyr.bin"
  DOMU_PASSTHROUGH_DTB[1]="elc22demo2/timer@ff110000.dtb"
  DOMU_MEM[1]=128
  ```

- 16. Xilinx Xen Device Tree examples repository
  - Repository with device tree passthrough examples: https://github.com/Xilinx/xen-passthrough-device-trees
  - Simplified ImageBuilder configuration with PASSTHROUGH_DTS_REPO and DOMU_PASSTHROUGH_PATHS
    - Based on file names and example files; no automatic device tree generation yet
  - Careful about "axi" vs "amba": the name needs to match everywhere!
    - Host DTB, passthrough DTB, xen,path property, ImageBuilder config file

  ```bash
  […]
  PASSTHROUGH_DTS_REPO="git@github.com:Xilinx/xen-passthrough-device-trees.git device-trees-2021.2"
  NUM_DOMUS=2
  DOMU_KERNEL[0]="elc22demo2/linuxrt"
  DOMU_RAMDISK[0]="elc22demo2/initrd.cpio"
  DOMU_MEM[0]=2048
  DOMU_VCPUS[0]=2
  DOMU_PASSTHROUGH_PATHS[0]="/axi/ethernet@ff0e0000"
  DOMU_KERNEL[1]="elc22demo2/zephyr.bin"
  DOMU_MEM[1]=128
  DOMU_PASSTHROUGH_PATHS[1]="/axi/timer@ff110000"
  ```

- 17. Xen Partial Device Trees Automatic Generation
  - System Device Tree: a Device Tree-based description of a full heterogeneous system
  - Lopper:
    - A Device Tree manipulation tool
    - Takes a System Device Tree as input and generates multiple Device Trees as output
  - Use Lopper to automatically generate Xen passthrough DTBs
    - Just provide the host DTB and the device path, e.g. /amba/ethernet@ff0e0000
  - Attend the presentation "System Device Tree and Lopper: Concrete Examples", Thursday June 23 at 12PM!

- 18. Make use of a new device in Zephyr
  - Add it to the board device tree, boards/arm64/xenvm/xenvm.dts:

  ```dts
  ttc0: timer@ff110000 {
      compatible = "xlnx,ttcps";
      status = "okay";
      interrupts = <GIC_SPI 36 IRQ_TYPE_LEVEL IRQ_DEFAULT_PRIORITY>,
                   <GIC_SPI 37 IRQ_TYPE_LEVEL IRQ_DEFAULT_PRIORITY>,
                   <GIC_SPI 38 IRQ_TYPE_LEVEL IRQ_DEFAULT_PRIORITY>;
      interrupt-names = "irq_0", "irq_1", "irq_2";
      reg = <0x0 0xff110000 0x0 0x1000>;
      label = "ttc0";
      interrupt-parent = <&gic>;
      clock-frequency = <8320000>;
  };
  ```

  - Map the device memory in soc/arm64/xenvm/mmu_regions.c:

  ```c
  MMU_REGION_FLAT_ENTRY("TTC",
                        DT_REG_ADDR_BY_IDX(DT_INST(0, xlnx_ttcps), 0),
                        DT_REG_SIZE_BY_IDX(DT_INST(0, xlnx_ttcps), 0),
                        MT_DEVICE_nGnRnE | MT_P_RW_U_RW | MT_NS),
  ```

- 19. Step 3: Xen Cache Coloring (© Copyright 2020 Xilinx)

  (Diagram: four cores, each with a private L1 cache, sharing an L2 cache in front of DDR.)

- 20. Xen Cache Coloring

  (Diagram: four cores with private L1 caches sharing a colored L2 cache in front of DDR.)

- 21. Xen Cache Coloring
  - CPU clusters often share the L2 cache
  - Interference via the L2 cache affects performance:
    - App0 running on CPU0 can cause cache entry evictions, which affect App1 running on CPU1
    - App1 running on CPU1 could miss a deadline due to App0's behavior
    - This can happen between apps running on the same OS and between VMs on the same hypervisor
  - Hypervisor solution: cache partitioning, AKA cache coloring
    - Each VM gets its own allocation of cache entries
    - No shared cache entries between VMs
    - Allows real-time apps to run with deterministic IRQ latency

  (Diagram: four cores sharing a colored L2 cache in front of DDR.)

- 22. Xen Cache Coloring and ImageBuilder
  - Cache coloring with Xen on the ZCU102:
    - 16 colors; each color corresponds to 256MB
    - The color bitmask is 0xF000
  - Configuration:
    - Xen → color 0
    - Dom0 → colors 1-5
    - LinuxRT → colors 6-14
    - Zephyr → color 15

  ```bash
  # Xen and Dom0: use XEN_CMD in the ImageBuilder config
  XEN_CMD="…xen_colors=0-0 dom0_colors=1-5 way_size=65536"
  DOMU_COLORS[0]="6-14"
  DOMU_COLORS[1]="15-15"
  ```
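
Because each domain receives its own set of colors, the ranges above must never overlap. A minimal C sketch of that check, assuming only the first-last range form used on this slide (any other syntax Xen may accept is not handled); color_range_to_mask and colors_disjoint are hypothetical names, not Xen functions:

```c
#include <assert.h>
#include <stdbool.h>
#include <stdint.h>
#include <stdio.h>

#define NUM_COLORS 16 /* ZCU102 configuration on this slide */

/* Convert a "first-last" color range such as "6-14" into a bitmask with
 * bit N set for color N. Returns 0 for malformed or out-of-range input. */
static uint32_t color_range_to_mask(const char *range)
{
    unsigned int first, last;

    if (sscanf(range, "%u-%u", &first, &last) != 2 ||
        first > last || last >= NUM_COLORS)
        return 0;
    return ((1u << (last - first + 1)) - 1u) << first;
}

/* Return true if no color appears in more than one of the n masks. */
static bool colors_disjoint(const uint32_t *masks, int n)
{
    uint32_t seen = 0;

    assert(n == 0 || masks != NULL);
    for (int i = 0; i < n; i++) {
        if (masks[i] & seen)
            return false;
        seen |= masks[i];
    }
    return true;
}
```

With this configuration the masks are 0x0001 (Xen), 0x003E (Dom0), 0x7FC0 (LinuxRT), and 0x8000 (Zephyr): disjoint, and together covering all 16 colors.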

- 23. Step 4: Shared Memory and Event Channels

  (Diagram: Linux Dom0 on CPU 0, LinuxRT on CPUs 1-2, and Zephyr on CPU 3, with a shared-memory ring buffer between domains.)

- 24. Shared Memory Configuration
  - A static shared memory region chosen at build time, e.g. 0x7fe0_1000
    - The address matters for cache coloring: 0x7fe0_1000 means color 1
  - Xen event channels can be used for notifications
    - A BSD-licensed xen.h file with Xen hypercall definitions is available
    - It can be embedded in Zephyr and other RTOSes, making it easy to add event channel support anywhere
  - Static event channel definitions based on device tree will be available in the next Xen release
    - Today, event channels are created dynamically
    - From the next release, it is possible to configure them statically as part of the Xen domain configuration (i.e. in ImageBuilder)
  - Static shared memory support is also coming in the next Xen release
    - Makes it possible to define shared memory without editing the memory node
    - Work in progress by ARM
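
The color of an address follows from way_size=65536 and 4 KiB pages: 65536 / 4096 = 16 colors, selected by address bits 12-15, which is the 0xF000 bitmask. A minimal C sketch, assuming 4 KiB pages and a power-of-two way size; the helper names are hypothetical and this is not Xen's implementation:

```c
#include <assert.h>
#include <stdint.h>

#define PG_SHIFT 12 /* assumes 4 KiB pages */
#define PG_SIZE (UINT64_C(1) << PG_SHIFT)

/* Number of colors = L2 way size / page size (65536 / 4096 = 16). */
static unsigned int num_colors(uint64_t way_size)
{
    assert(way_size >= PG_SIZE && way_size % PG_SIZE == 0);
    return (unsigned int)(way_size / PG_SIZE);
}

/* Address bits that select the color: the page-number bits below the
 * way size (0xF000 for a 64 KiB way). Assumes a power-of-two way size. */
static uint64_t color_mask(uint64_t way_size)
{
    return (way_size - 1) & ~(PG_SIZE - 1);
}

/* Color of the 4 KiB page containing paddr. */
static unsigned int addr_to_color(uint64_t paddr, uint64_t way_size)
{
    return (unsigned int)((paddr & color_mask(way_size)) >> PG_SHIFT);
}
```

For example, addr_to_color(0x7fe01000, 65536) returns 1, matching the color quoted for the shared memory region; the color pattern repeats every 64 KiB of physical address space.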

- 25. Shared Memory Configuration
  - Carve out an address range from the memory node: 0x7fe00000 - 0x7ff00000
  - Add it as a separate sram node

  ```dts
  memory {
      device_type = "memory";
      reg = <0x00 0x00 0x00 0x7fe00000 0x08 0x00 0x00 0x80000000>;
  };

  sram@7fe00000 {
      compatible = "mmio-sram";
      reg = <0x0 0x7fe00000 0x0 0x100000>;
      xen,passthrough;
  };
  ```

- 26. Shared Memory Configuration
  - Add the shared memory to the DomU passthrough device tree

  ```dts
  passthrough {
      compatible = "simple-bus";
      ranges;
      #address-cells = <0x2>;
      #size-cells = <0x2>;

      /* timer has been tested with baremetal app only */
      timer@ff110000 {
          […]

      sram@7fe01000 {
          compatible = "mmio-sram";
          reg = <0x0 0x7fe01000 0x0 0x1000>;
          xen,reg = <0x0 0x7fe01000 0x0 0x1000 0x0 0x7fe01000>;
          xen,force-assign-without-iommu = <0x1>;
      };
  };
  ```

- 27. Make use of the shared memory and event channels in Zephyr
  - Map the shared memory:

  ```c
  MMU_REGION_FLAT_ENTRY("RAM_SHARED",
                        0x7fe01000,
                        0x1000,
                        MT_NORMAL_NC | MT_P_RW_U_RW | MT_NS),
  ```

  - Set up event channels:

  ```c
  #include "xen.h"
  […]
  bind.remote_dom = remote_domid;
  bind.remote_port = remote_port;
  bind.local_port = 0;
  ret = xen_hypercall(EVTCHNOP_bind_interdomain, (unsigned long)&bind, 0, 0,
                      HYPERVISOR_event_channel_op);
  ```

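- 28. Make use of the shared memory and event channels in Linux
  - Write a new driver:

  ```c
  #include <linux/interrupt.h>
  #include <linux/io.h>
  #include <linux/of.h>
  #include <linux/of_address.h>
  #include <xen/events.h>
  #include <xen/interface/event_channel.h>
  #include <asm/xen/hypercall.h>

  static void *shared_mem;
  static int irq;
  /* Not shown on the slide; as a lateeoi handler it must call xen_irq_lateeoi(). */
  static irqreturn_t evtchn_handler(int irq, void *dev_id);

  static int __init shared_mem_init(void)
  {
  	char *str = "go";
  	struct resource r;
  	struct device_node *np = of_find_compatible_node(NULL, NULL, "xen,shared-memory-v1");
  	struct evtchn_alloc_unbound alloc_unbound;
  	int rc;

  	if (!np)
  		return -ENODEV;
  	rc = of_address_to_resource(np, 0, &r);
  	of_node_put(np);
  	if (rc)
  		return rc;
  	/* The region is not in the kernel linear map, so phys_to_virt() cannot be
  	 * used; map it non-cacheable to match Zephyr's MT_NORMAL_NC mapping. */
  	shared_mem = memremap(r.start, resource_size(&r), MEMREMAP_WC);
  	if (!shared_mem)
  		return -ENOMEM;
  	alloc_unbound.dom = DOMID_SELF;
  	alloc_unbound.remote_dom = 1; /* domid 1 */
  	rc = HYPERVISOR_event_channel_op(EVTCHNOP_alloc_unbound, &alloc_unbound);
  	if (rc)
  		return rc;
  	rc = bind_evtchn_to_irqhandler_lateeoi(alloc_unbound.port, evtchn_handler, 0,
  					       "shared_intf", NULL);
  	if (rc < 0)
  		return rc;
  	irq = rc;
  	/* send port number to other domain before publishing the "go" flag */
  	memcpy(shared_mem + 4, &alloc_unbound.port, sizeof(alloc_unbound.port));
  	mb();
  	memcpy(shared_mem, str, 3); /* "go" plus NUL terminator */
  	return 0;
  }
  ```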
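
On the other side, the port written by this driver must be read back before it can be used as remote_port in the EVTCHNOP_bind_interdomain call shown for Zephyr. The C sketch below assumes the page layout implied by the driver: the string "go" (3 bytes including the terminator) at offset 0 and a 32-bit evtchn_port_t at offset 4, with the writer storing the port before the flag. read_remote_port and the offset macros are hypothetical names, and the local evtchn_port_t typedef only mirrors the width of Xen's public type.

```c
#include <assert.h>
#include <stdbool.h>
#include <stdint.h>
#include <string.h>

typedef uint32_t evtchn_port_t; /* same width as Xen's public evtchn_port_t */

#define SHM_FLAG_OFFSET 0 /* "go" plus NUL terminator, 3 bytes */
#define SHM_PORT_OFFSET 4 /* event channel port allocated by Linux */

/* If the peer has published its port, store it in *port and return true;
 * return false while the "go" flag is still absent. */
static bool read_remote_port(const uint8_t *shm, evtchn_port_t *port)
{
    assert(shm != NULL && port != NULL);
    if (memcmp(shm + SHM_FLAG_OFFSET, "go", 3) != 0)
        return false;
    /* A real reader on shared memory needs a read barrier here so the
     * port is not loaded before the flag. */
    memcpy(port, shm + SHM_PORT_OFFSET, sizeof(*port));
    return true;
}
```

Once it returns true, the port can be assigned to bind.remote_port, with bind.remote_dom set to the ID of the domain running the Linux driver.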

- 29. Step 5: Dom0less with PV Drivers

  (Diagram: U-Boot boots Xen; Dom0 runs init-dom0less and a NetBack driver that connects to a NetFront driver in LinuxRT, alongside Zephyr on CPU 3.)

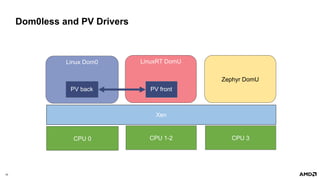

- 30. Dom0less and PV Drivers

  (Diagram: Xen running Linux Dom0 on CPU 0, LinuxRT DomU on CPUs 1-2, and Zephyr DomU on CPU 3.)

- 31. Dom0less and PV Drivers
  - Xen PV drivers are used to share a device across multiple domains:
    - Share the network across multiple domains
    - Share a disk across multiple domains, one partition per domain
    - PV network can also be used for VM-to-VM communication
  - With dom0less, PV drivers are set up from Dom0 after boot has finished
    - Fast boot times: the critical application can start immediately, without waiting
    - PV drivers connect later, after Dom0 has completed booting
    - A Dom0 environment with the Xen userspace tools is required to set up the connection
  - Use init-dom0less in Dom0 to initialize the PV drivers, then hotplug a PV network connection:

  ```
  /usr/lib/xen/bin/init-dom0less
  brctl addbr xenbr0; ifconfig xenbr0 192.168.0.1 up
  xl network-attach 1
  ```

- 32. Questions?
