Supervised Papers Classification on Large-Scale High-Dimensional Data with Apache Spark

- 1. Supervised Papers Classification on Large-Scale High-Dimensional Data with Apache Spark Leonidas Akritidis, Panayiotis Bozanis, Athanasios Fevgas Department of Electrical and Computer Engineering Data Structuring and Engineering Lab University of Thessaly The Fourth IEEE International Conference on Big Data Intelligence and Computing (DataCom 2018) 12-15 August 2018, Athens, Greece

- 2. Supervised papers classification • Classify a set of unlabeled articles X into one research field y of a given taxonomy structure Y. • Supervised learning problem: The algorithm will exploit a given set of articles with known labels. • Important problem for academic search engines & digital libraries - a robust solution allows: – Results refinement by category. – Browsing of articles by category. – Recommendations of Similar articles. L. Akritidis, P. Bozanis, A. Fevgas 2IEEE DataCom 2018, 12-15 August 2018, Athens

- 3. Large-Scale Dataset • This work makes use of the Open Academic Graph https://www.openacademic.ai/oag • It contains 167 million publications. • The articles are classified in 19 primary research areas and thousands of children categories. • It occupies 300GB in uncompressed form. • Each paper and its characteristics are represented by a record in JSON format. L. Akritidis, P. Bozanis, A. Fevgas 3IEEE DataCom 2018, 12-15 August 2018, Athens

- 4. Features • Keywords: Special words which are selected by the authors to highlight the contents of their articles. Present in some papers. • Title words: treated as normal keywords after removing the stop words. Present in all papers. • Authors history: The research fields of the each author and the respective frequency for each field. • Co-authorship information: We record the research fields of the each (author, coauthor) pair. • Journal history: The research fields of the each journal and the respective frequency for each field. L. Akritidis, P. Bozanis, A. Fevgas 4IEEE DataCom 2018, 12-15 August 2018, Athens

- 5. The original classification algorithm • L. Akritidis, P. Bozanis. “A supervised machine learning classification algorithm for research articles”, ACM SAC, pp. 115-120, 2013. • Given a set of labeled articles, we build a model which correlates the keywords, the authors and the journals with one or more research fields. • The model contains a dictionary data structure M with the features F of the dataset. L. Akritidis, P. Bozanis, A. Fevgas 5IEEE DataCom 2018, 12-15 August 2018, Athens

- 6. Original algorithm: Training phase • For each feature f we store: – A global frequency value |Xf| (occurrences of f in dataset) – A relevance desc. vector (RDV) with components (y, |Xf,y|). – |Xf,y|: number of f and y co-occurrences (i.e. how many times y has been correlated with f). L. Akritidis, P. Bozanis, A. Fevgas 6IEEE DataCom 2018, 12-15 August 2018, Athens

- 7. Original algorithm: Test phase • For each unlabeled article x we extract the features and we search M. • We retrieve its RDV and we compute a score Sy for each label y in the RDV: • wf: The weight of the feature. • Good accuracy: wk=0.3, wa=0.2, wj=0.5 L. Akritidis, P. Bozanis, A. Fevgas 7IEEE DataCom 2018, 12-15 August 2018, Athens xf F xF

- 8. Apache Spark • Spark is a fault-tolerant parallelization framework. • In contrast to MapReduce, it has been designed to allow storage of intermediate data in the main memory of the cluster nodes. • In contrast to MapReduce which forces a linear dataflow, it is based on a DAG Scheduler which enhances the job execution performance. • The core element is its Resilient Distributed Datasets (RDDs), i.e. fault-tolerant & read-only abstractions for data handling with various persistence levels. L. Akritidis, P. Bozanis, A. Fevgas 8IEEE DataCom 2018, 12-15 August 2018, Athens

- 9. Apache MLlib • Spark powers a set of libraries including Spark SQL, GraphX, Spark Streaming and MLlib. • MLlib is Spark’s scalable machine learning library. • It implements a series of classification and regression algorithms. • We are interested in comparing our model with the multi-class classification algorithms of MLlib: – i.e., Logistic Regression, Decision Trees and Random Forests. L. Akritidis, P. Bozanis, A. Fevgas 9IEEE DataCom 2018, 12-15 August 2018, Athens

- 10. The LIBSVM Format • MLlib algorithms accept their input data in LIBSVM format. • Each row is represented by a LabeledPoint, an abstraction which contains: – The label of the data sample (double). – A sparse vector of feature-weight pairs (int, double). • We converted the dataset in LIBSVM format with the aim of comparing our method with the adversary classifiers of MLlib. L. Akritidis, P. Bozanis, A. Fevgas 10IEEE DataCom 2018, 12-15 August 2018, Athens

- 11. Dataset Preprocessing • Spark offers a powerful SQL- like filtration mechanism. – We discard all the unlabeled samples and all the samples with irrelevant labels. – We discard all the non-English articles. – We convert the dataset to the LIBSVM format by applying the hashing trick. L. Akritidis, P. Bozanis, A. Fevgas 11IEEE DataCom 2018, 12-15 August 2018, Athens

- 12. Dimensionality Reduction • After preprocessing, our dataset consisted of about 75 million articles and 83 million features. • Our method executes normally on this huge feature space. However, MLlib algorithms do not: – Out of memory errors • Therefore, we applied Sparse Random Projection to reduce the dimensionality of the feature space. – The built-in dimensionality reduction algorithms of MLlib, SVD and PCA, failed to complete the task. – The final projected space included only 4181 features. L. Akritidis, P. Bozanis, A. Fevgas 12IEEE DataCom 2018, 12-15 August 2018, Athens

- 13. Algorithm parallelization on Spark (1) • The Driver program controls the execution flow of the job. • The dataset has been converted to LIBSVM format. • The split method automatically shuffles the dataset and splits it in the training and test sets, based on the parameter N (we set N=0.6). • After the model M has been trained, it is transmitted to all cluster nodes via a special broadcast call. L. Akritidis, P. Bozanis, A. Fevgas 13IEEE DataCom 2018, 12-15 August 2018, Athens

- 14. Algorithm parallelization on Spark (2) • Our parallel model implementation includes: – The features dictionary M. – The training (fitting) function. – The classification function. • The training phase is a flatMap function which operates in two phases: L. Akritidis, P. Bozanis, A. Fevgas 14IEEE DataCom 2018, 12-15 August 2018, Athens

- 15. Algorithm parallelization on Spark (3) • Stage 1: For each LabeledPoint of the input return a local list λ of (f,y,wf) tuples. • Collect all local lists λ and merge them into a list l. • Stage 2: Traverse the list l and build the model M (insert the features, compute frequencies, build the RDVs). L. Akritidis, P. Bozanis, A. Fevgas 15IEEE DataCom 2018, 12-15 August 2018, Athens

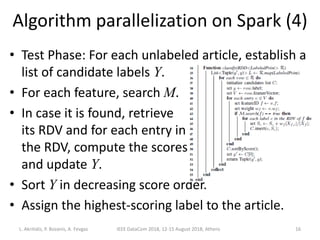

- 16. Algorithm parallelization on Spark (4) • Test Phase: For each unlabeled article, establish a list of candidate labels Y. • For each feature, search M. • In case it is found, retrieve its RDV and for each entry in the RDV, compute the scores and update Y. • Sort Y in decreasing score order. • Assign the highest-scoring label to the article. L. Akritidis, P. Bozanis, A. Fevgas 16IEEE DataCom 2018, 12-15 August 2018, Athens

- 17. Experiments • We used the cluster of our department: 8 nodes with 16 CPUs and 64GB of RAM each. • Java 1.8, Hadoop 2.9.0, HDFS, YARN, Spark 2.3.0. • 15 executors (2 executors per node) with 28 GB of available RAM each. – One node runs only 1 executor plus the Application Master. • Our method: Paper Classifier (PC) • MLlib adversaries: Logistic Regression, Decision Trees, Random Forests. L. Akritidis, P. Bozanis, A. Fevgas 17IEEE DataCom 2018, 12-15 August 2018, Athens

- 18. Accuracy Measurements • In the original feature space (83,3M features) only our method managed to complete, achieving accuracy of 79.1%. • In the reduced feature space, our method lost a portion of its accuracy (52.1%), however, it outperformed all the classifiers of MLlib. L. Akritidis, P. Bozanis, A. Fevgas 18IEEE DataCom 2018, 12-15 August 2018, Athens

- 19. Efficiency Measurements • Our method was much faster than the algorithms of MLlib. Even in the original feature space (83M features), it was twice as fast as Logistic Regression in the reduced feature space. In the reduced feature space, it was 11 times faster. • The dashes symbolize the failure of SVD and PCA in reducing the feature space. L. Akritidis, P. Bozanis, A. Fevgas 19IEEE DataCom 2018, 12-15 August 2018, Athens

- 20. Conclusions • We presented a parallel supervised learning algorithm for classifying research articles on Apache Spark. • Our method takes into consideration multiple features including keywords, title words, authors, and publishing journals of the articles. • Our method operates effectively and efficiently on large, high-dimensional datasets. • It outperforms the built-in Spark MLlib classifiers by a significant margin. L. Akritidis, P. Bozanis, A. Fevgas 20IEEE DataCom 2018