[Paper Introduction] Understanding and Improving Transformer From a Multi-Particle Dynamic System Point of View

- 1. Understanding and Improving Transformer From a Multi-Particle Dynamic System Point of View

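- 2. Paper overview
  - Paper: https://arxiv.org/abs/1906.02762
  - Affiliation: Microsoft Research Asia
  - Interprets the Transformer as a multi-particle dynamic system
  - Shows that the Transformer layer corresponds to the Lie-Trotter splitting scheme
  - Replaces Lie-Trotter with the Strang-Marchuk splitting scheme to obtain Macaron
  - Evaluates Macaron on machine translation and GLUE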

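- 3. Key ideas
  - The Transformer is viewed as a multi-particle dynamic system, with each word in the sequence treated as a particle
  - The Transformer's SA/Residual→FFN/Residual structure corresponds to the Lie-Trotter splitting scheme
    - Self-Attention (SA): the term describing interaction among particles
    - Position-Wise FFN (PW-FFN): the term acting on each particle independently
  - Replacing Lie-Trotter (local truncation error of order 2) with Strang-Marchuk (order 3) in the Transformer gives Macaron
    - Strang-Marchuk is a more accurate splitting scheme than Lie-Trotter
  - On NLP tasks, the Transformer and Macaron are compared, and Macaron performs better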

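- 4. Residual connections and ODEs
  - Consider a first-order ODE describing how a state $x(t)$ changes with time $t$:
    $$\frac{dx(t)}{dt} = f(x, t)$$
  - Discretize time as $t_l = t_0 + \gamma l$ with step size $\gamma := \Delta t$, and write $x(t = t_l) := x_l$ for $l \in [0, L-1]$
  - Approximating the derivative by a finite difference:
    $$\frac{\Delta x}{\Delta t} = \frac{x_{l+1} - x_l}{t_{l+1} - t_l} \approx f(x_l, t_l)$$
  - Rearranging gives $x_{l+1} = x_l + \gamma f(x_l, t_l)$ → the same form as a residual connection: the state $x(t)$ plus an update computed by $f(x, t)$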

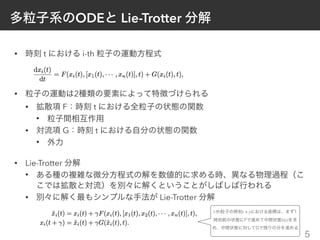
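As a rough illustration of slide 4, the sketch below applies forward-Euler steps to a toy ODE and writes each step in residual form. The toy ODE `dx/dt = -x`, the function name `euler_residual`, and the step count are assumptions chosen for illustration; none of them come from the slides.

```python
import math

def euler_residual(f, x0, t0, gamma, num_layers):
    """Apply forward-Euler steps; each step has the residual form x + gamma * f(x, t)."""
    x, t = x0, t0
    for _ in range(num_layers):
        x = x + gamma * f(x, t)   # identity path plus the output of one "layer"
        t = t + gamma
    return x

# Toy ODE (an assumption): dx/dt = -x with x(0) = 1, whose exact solution is exp(-t).
f = lambda x, t: -x
approx = euler_residual(f, x0=1.0, t0=0.0, gamma=0.01, num_layers=100)
exact = math.exp(-1.0)
print(approx, exact)
```

With 100 steps of size 0.01 the loop integrates up to `t = 1`, so the printed values can be compared directly; a smaller `gamma` with proportionally more steps brings them closer together.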

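- 5. ODEs and the Lie-Trotter splitting scheme
  - $x_i(t)$: the position of the $i$-th particle at time $t$
  - The dynamics are written as the sum of 2 terms:
    $$\frac{dx_i(t)}{dt} = F(x_i(t), [x_1(t), \cdots, x_n(t)], t) + G(x_i(t), t)$$
    - $F$: the interaction among the particles at time $t$
    - $G$: the force acting on each particle independently at time $t$
  - The Lie-Trotter splitting scheme solves the two terms alternately
  - One Lie-Trotter step advances from $t$ to $t + \gamma$ through an intermediate state $\tilde{x}_i(t)$:
    $$\tilde{x}_i(t) = x_i(t) + \gamma F(x_i(t), [x_1(t), \cdots, x_n(t)], t)$$
    $$x_i(t + \gamma) = \tilde{x}_i(t) + \gamma G(\tilde{x}_i(t), t)$$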
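The Lie-Trotter step on slide 5 can be sketched on a toy particle system as follows. The concrete choices of `F` (attraction toward the centroid) and `G` (linear decay), and the names `lie_trotter_step`, `X0`, and `X`, are hypothetical and only illustrate the structure: an interaction term followed by a per-particle term.

```python
import numpy as np

def F(X, t):
    """Hypothetical interaction term: pulls each particle toward the centroid of all particles."""
    return X.mean(axis=0, keepdims=True) - X

def G(X, t):
    """Hypothetical per-particle term: each row depends only on itself."""
    return -0.5 * X

def lie_trotter_step(X, t, gamma):
    X_tilde = X + gamma * F(X, t)            # sub-step 1: interaction term F
    return X_tilde + gamma * G(X_tilde, t)   # sub-step 2: independent term G

rng = np.random.default_rng(0)
X0 = rng.normal(size=(5, 2))   # 5 particles in 2-D
X, t, gamma = X0.copy(), 0.0, 0.1
for _ in range(50):
    X = lie_trotter_step(X, t, gamma)
    t += gamma
print(X)
```

In this toy system `F` leaves the centroid unchanged while shrinking the spread of the particles, and `G` scales every particle by `1 - 0.5 * gamma`, so the two effects are easy to tell apart in the output.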

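- 6. Transformer
  - Consists of an Encoder and a Decoder (Figure 1)
  - Encoder
    - Residual connections
    - Self-Attention
    - Position-Wise Feed Forward Network
  - Decoder
  - Multi-head attention:
    $$\mathrm{Multihead}(Q, K, V) = \mathrm{concat}(\mathrm{head}_1, \cdots, \mathrm{head}_H) W^O$$
    $$\mathrm{head}_k = \mathrm{Attention}(Q W_k^Q, K W_k^K, V W_k^V)$$
    $$\mathrm{Attention}(Q, K, V) = \mathrm{softmax}\left(\frac{Q K^T}{\sqrt{d_{model}}}\right) \cdot V$$
  - Dimensions $d_{model}$, $d_K$, $d_V$:
    $$W_k^Q \in \mathbb{R}^{d_{model} \times d_K}, \quad W_k^K \in \mathbb{R}^{d_{model} \times d_K}, \quad W_k^V \in \mathbb{R}^{d_{model} \times d_V}, \quad W^O \in \mathbb{R}^{H d_V \times d_{model}}$$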
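The multi-head attention formulas on slide 6 can be sketched in NumPy as below. This is a minimal illustration with random weights, no masking, and no batching; the sizes (`n`, `d_model`, `H`) are arbitrary assumptions. The scores are scaled by the square root of `d_model` to follow the slide's formula, whereas many implementations scale by the per-head key dimension instead.

```python
import numpy as np

def softmax(z, axis=-1):
    z = z - z.max(axis=axis, keepdims=True)   # subtract the max for numerical stability
    e = np.exp(z)
    return e / e.sum(axis=axis, keepdims=True)

def attention(Q, K, V, d_model):
    # softmax(Q K^T / sqrt(d_model)) V, scaled as in the slide's formula
    return softmax(Q @ K.T / np.sqrt(d_model)) @ V

def multihead(Q, K, V, WQ, WK, WV, WO):
    d_model = Q.shape[-1]
    heads = [attention(Q @ wq, K @ wk, V @ wv, d_model)
             for wq, wk, wv in zip(WQ, WK, WV)]
    return np.concatenate(heads, axis=-1) @ WO   # concat(head_1, ..., head_H) W^O

rng = np.random.default_rng(0)
n, d_model, H = 4, 8, 2          # sequence length, model width, number of heads (arbitrary)
d_K = d_V = d_model // H
WQ = rng.normal(size=(H, d_model, d_K))
WK = rng.normal(size=(H, d_model, d_K))
WV = rng.normal(size=(H, d_model, d_V))
WO = rng.normal(size=(H * d_V, d_model))
X = rng.normal(size=(n, d_model))
out = multihead(X, X, X, WQ, WK, WV, WO)   # self-attention: Q = K = V = X
print(out.shape)  # (4, 8)
```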

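- 7. Transformer and Lie-Trotter
  - Self-Attention
    - $x_{l,i}$: the input to the $l$-th layer at the $i$-th position
    - The layer index $l$ corresponds to time $t$, and the $i$-th position corresponds to the $i$-th particle
    - The outputs of the heads are concatenated and added to $x_{l,i}$ through the residual connection, giving $\tilde{x}_{l,i}$
    - The attention score of head $k$ between positions $i$ and $j$:
      $$e_{i,j}^{(k)} = \frac{(x_{l,i} W_k^{Q,l}) \cdot (x_{l,j} W_k^{K,l})^T}{\sqrt{d_{model}}}$$
  - PW-FFN
    - The PW-FFN with its residual connection maps $\tilde{x}_{l,i}$ to $x_{l+1,i}$, the input to layer $l+1$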

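- 8. Transformer and Lie-Trotter
  - Self-Attention followed by PW-FFN makes up 1 Transformer layer
  - This has the same form as the Lie-Trotter splitting scheme
  - The 2 sublayers of a Transformer layer correspond to the Lie-Trotter sub-steps for $F$ and $G$, so one layer advances time by $1\gamma$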
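The correspondence on slides 7 and 8 can be sketched as a single function whose two lines mirror the two Lie-Trotter sub-steps. This is a simplified illustration, not the paper's implementation: it uses one attention head, omits layer normalization and masking, and all names (`transformer_layer`, `init_params`, the parameter dictionary `p`) and sizes are assumptions.

```python
import numpy as np

def softmax(z):
    z = z - z.max(axis=-1, keepdims=True)   # stabilize the exponentials
    e = np.exp(z)
    return e / e.sum(axis=-1, keepdims=True)

def self_attention(X, p):
    """Plays the role of gamma * F: each row (particle) interacts with every row."""
    scores = (X @ p["WQ"]) @ (X @ p["WK"]).T / np.sqrt(X.shape[-1])
    return softmax(scores) @ (X @ p["WV"]) @ p["WO"]

def ffn(X, p):
    """Plays the role of gamma * G: applied to each row independently."""
    return np.maximum(X @ p["W1"] + p["b1"], 0.0) @ p["W2"] + p["b2"]

def transformer_layer(X, p):
    X_tilde = X + self_attention(X, p)   # x~_{l,i}: sub-step for F
    return X_tilde + ffn(X_tilde, p)     # x_{l+1,i}: sub-step for G

def init_params(rng, d_model, d_ff, scale=0.1):
    shapes = {"WQ": (d_model, d_model), "WK": (d_model, d_model),
              "WV": (d_model, d_model), "WO": (d_model, d_model),
              "W1": (d_model, d_ff), "b1": (d_ff,),
              "W2": (d_ff, d_model), "b2": (d_model,)}
    return {name: scale * rng.normal(size=shape) for name, shape in shapes.items()}

rng = np.random.default_rng(0)
p = init_params(rng, d_model=8, d_ff=16)
X = rng.normal(size=(5, 8))        # 5 positions (particles), width 8
for _ in range(3):
    X = transformer_layer(X, p)    # weights are shared across layers only for brevity
print(X.shape)  # (5, 8)
```

Perturbing one row of `X` changes every row of the `self_attention` output but only the matching row of the `ffn` output, which is the distinction between the interaction term and the per-particle term.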

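- 9. Strang-Marchuk splitting scheme
  - Motivation
    - The Transformer corresponds to the Lie-Trotter splitting scheme
    - Lie-Trotter is a simple scheme that applies $F$ and $G$ in turn → its local truncation error is of order 2: $\mathcal{O}(\gamma^2)$
  - Lie-Trotter → Strang-Marchuk
    - Lie-Trotter applies F→G; Strang-Marchuk applies G for a half step, F for a full step, then G for a half step
    - The local truncation error of Strang-Marchuk is of order 3: $\mathcal{O}(\gamma^3)$, compared with $\mathcal{O}(\gamma^2)$ for Lie-Trotter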
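The orders quoted on slide 9 can be checked numerically on a toy linear system `dx/dt = (A + B) x`. The sketch below is an assumption-laden illustration: `A` and `B` are two non-commuting nilpotent matrices chosen so that every sub-flow and the exact solution have closed forms, and each sub-step is solved exactly rather than with the Euler-style updates used elsewhere in the slides. Halving `gamma` should then shrink the one-step error by roughly 4 for Lie-Trotter and roughly 8 for Strang-Marchuk.

```python
import numpy as np

A = np.array([[0.0, 1.0], [0.0, 0.0]])   # stands in for F; A @ A = 0, so exp(s*A) = I + s*A
B = np.array([[0.0, 0.0], [1.0, 0.0]])   # stands in for G; B @ B = 0, so exp(s*B) = I + s*B
I = np.eye(2)

def flow_A(s):
    return I + s * A   # exact solution operator of dx/dt = A x over time s

def flow_B(s):
    return I + s * B   # exact solution operator of dx/dt = B x over time s

def exact(s):
    # (A + B) @ (A + B) = I, hence exp(s*(A+B)) = cosh(s) I + sinh(s) (A+B)
    return np.cosh(s) * I + np.sinh(s) * (A + B)

def lie_trotter(g):
    return flow_B(g) @ flow_A(g)                       # F full step, then G full step

def strang_marchuk(g):
    return flow_B(g / 2) @ flow_A(g) @ flow_B(g / 2)   # G half, F full, G half

def observed_order(scheme, g=0.1):
    """Estimate p in 'one-step error ~ g**p' by comparing step sizes g and g/2."""
    err = lambda s: np.linalg.norm(scheme(s) - exact(s))
    return np.log2(err(g) / err(g / 2))

print(round(observed_order(lie_trotter), 2))      # close to 2
print(round(observed_order(strang_marchuk), 2))   # close to 3
```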

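- 10. Applying Strang-Marchuk to the Transformer
  - Restructuring the Transformer layer according to Strang-Marchuk → Macaron
  - The resulting layer order is FFN (half step) → Self-Attention → FFN (half step)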

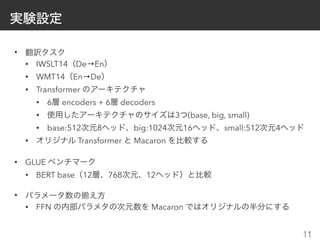
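A Macaron-style layer following the Strang-Marchuk ordering from slide 10 might look like the sketch below. It is a simplified illustration under stated assumptions: single-head attention, no layer normalization, separate parameters for the two FFN sublayers, and hypothetical names (`macaron_layer`, `random_ffn`, `att`) and sizes.

```python
import numpy as np

def softmax(z):
    z = z - z.max(axis=-1, keepdims=True)   # stabilize the exponentials
    e = np.exp(z)
    return e / e.sum(axis=-1, keepdims=True)

def self_attention(X, att):
    WQ, WK, WV, WO = att
    scores = (X @ WQ) @ (X @ WK).T / np.sqrt(X.shape[-1])
    return softmax(scores) @ (X @ WV) @ WO

def ffn(X, params):
    W1, b1, W2, b2 = params
    return np.maximum(X @ W1 + b1, 0.0) @ W2 + b2

def macaron_layer(X, ffn_first, att, ffn_second):
    X_tilde = X + 0.5 * ffn(X, ffn_first)            # G, half step
    X_hat = X_tilde + self_attention(X_tilde, att)   # F, full step
    return X_hat + 0.5 * ffn(X_hat, ffn_second)      # G, half step

def random_ffn(rng, d_model, d_ff, scale=0.1):
    return (scale * rng.normal(size=(d_model, d_ff)), np.zeros(d_ff),
            scale * rng.normal(size=(d_ff, d_model)), np.zeros(d_model))

rng = np.random.default_rng(0)
d_model, d_ff = 8, 16
att = tuple(0.1 * rng.normal(size=(d_model, d_model)) for _ in range(4))
X = rng.normal(size=(5, d_model))
Y = macaron_layer(X, random_ffn(rng, d_model, d_ff), att, random_ffn(rng, d_model, d_ff))
print(Y.shape)  # (5, 8)
```

The factor `0.5` on both FFN residual branches plays the role of the half step `gamma / 2` in the Strang-Marchuk scheme; setting both FFN sublayers to zero reduces the layer to a plain residual self-attention sublayer.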

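- 11. Experiments
  - Machine translation
    - Datasets: IWSLT14 De→En and WMT14 En→De
    - Transformer with 6 encoders + 6 decoders
    - 3 model sizes (base, big, small)
      - base: hidden size 512, 8 heads
      - big: hidden size 1024, 16 heads
      - small: hidden size 512, 4 heads
    - Compares the Transformer with Macaron
  - GLUE
    - BERT base: 12 layers, hidden size 768, 12 heads
    - Macaron is obtained by changing the FFN sublayers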

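- 13. Summary
  - Interprets the Transformer as a multi-particle dynamic system
  - Shows that the Transformer layer corresponds to the Lie-Trotter splitting scheme
  - Replaces Lie-Trotter with the Strang-Marchuk splitting scheme to obtain Macaron
  - Evaluates Macaron on machine translation and GLUE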
