Web20expo Scalable Web Arch

- 1. Scalable Web Architectures Common Patterns & Approaches Cal Henderson

- 2. Hello

- 3. Scalable Web Architectures? What does scalable mean? What’s an architecture? An answer in 12 parts

- 4. 1. Scaling

- 5. Scalability – myths and lies What is scalability?

- 6. Scalability – myths and lies What is scalability not ?

- 7. Scalability – myths and lies What is scalability not ? Raw Speed / Performance HA / BCP Technology X Protocol Y

- 8. Scalability – myths and lies So what is scalability?

- 9. Scalability – myths and lies So what is scalability? Traffic growth Dataset growth Maintainability

- 10. Today Two goals of application architecture: Scale HA

- 11. Today Three goals of application architecture: Scale HA Performance

- 12. Scalability Two kinds: Vertical (get bigger) Horizontal (get more)

- 13. Big Irons Sunfire E20k $450,000 - $2,500,000 36x 1.8GHz processors PowerEdge SC1435 Dualcore 1.8 GHz processor Around $1,500

- 14. Cost vs Cost

- 15. That’s OK Sometimes vertical scaling is right Buying a bigger box is quick (ish) Redesigning software is not Running out of MySQL performance? Spend months on data federation Or, Just buy a ton more RAM

- 16. The H & the V But we’ll mostly talk horizontal Else this is going to be boring

- 17. 2. Architecture

- 18. Architectures then? The way the bits fit together What grows where The trade-offs between good/fast/cheap

- 19. LAMP We’re mostly talking about LAMP Linux Apache (or lighttpd) MySQL (or Postgres) PHP (or Perl, Python, Ruby) All open source All well supported All used in large operations (Same rules apply elsewhere)

- 20. Simple web apps A Web Application Or “Web Site” in Web 1.0 terminology Interwebnet App server Database

- 21. Simple web apps A Web Application Or “Web Site” in Web 1.0 terminology Interwobnet App server Database Cache Storage array AJAX!!!1

- 22. App servers App servers scale in two ways:

- 23. App servers App servers scale in two ways: Really well

- 24. App servers App servers scale in two ways: Really well Quite badly

- 25. App servers Sessions! (State) Local sessions == bad When they move == quite bad Centralized sessions == good No sessions at all == awesome!

- 26. Local sessions Stored on disk PHP sessions Stored in memory Shared memory block (APC) Bad! Can’t move users Can’t avoid hotspots Not fault tolerant

- 27. Mobile local sessions Custom built Store last session location in cookie If we hit a different server, pull our session information across If your load balancer has sticky sessions, you can still get hotspots Depends on volume – fewer heavier users hurt more

- 28. Remote centralized sessions Store in a central database Or an in-memory cache No porting around of session data No need for sticky sessions No hot spots Need to be able to scale the data store But we’ve pushed the issue down the stack

- 29. No sessions Stash it all in a cookie! Sign it for safety $data = $user_id . '-' . $user_name; $time = time(); $sig = sha1($secret . $time . $data); $cookie = base64_encode("$sig-$time-$data"); Timestamp means it’s simple to expire it
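
The signed-cookie idea on this slide can be sketched in Python as follows. This is an illustration under assumptions, not the talk's own code: it swaps the slide's `sha1($secret . $time . $data)` for an HMAC, and the names `SECRET`, `make_cookie` and `read_cookie` are hypothetical. It also assumes the user id contains no `-` character.

```python
import base64
import hashlib
import hmac
import time

SECRET = b"change-me"  # hypothetical server-side secret, never sent to the client


def make_cookie(user_id, user_name, now=None):
    """Pack id and name into a signed, timestamped cookie value."""
    ts = str(int(time.time() if now is None else now))
    data = f"{user_id}-{user_name}"
    sig = hmac.new(SECRET, f"{ts}-{data}".encode(), hashlib.sha1).hexdigest()
    return base64.urlsafe_b64encode(f"{sig}-{ts}-{data}".encode()).decode()


def read_cookie(cookie, max_age=3600, now=None):
    """Return (user_id, user_name), or None if malformed, forged or expired."""
    try:
        raw = base64.urlsafe_b64decode(cookie.encode()).decode()
        sig, ts, user_id, user_name = raw.split("-", 3)
        issued = int(ts)
    except (ValueError, UnicodeDecodeError):
        return None
    expected = hmac.new(
        SECRET, f"{ts}-{user_id}-{user_name}".encode(), hashlib.sha1
    ).hexdigest()
    # Constant-time comparison of the supplied and recomputed signatures.
    if not hmac.compare_digest(sig.encode(), expected.encode()):
        return None
    current = time.time() if now is None else now
    if current - issued > max_age:  # the timestamp makes expiry a simple check
        return None
    return user_id, user_name
```

No server-side state is needed: any app server holding the secret can validate the cookie, which is what keeps the app tier "shared nothing".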

- 30. Super slim sessions If you need more than the cookie (login status, user id, username), then pull their account row from the DB Or from the account cache None of the drawbacks of sessions Avoids the overhead of a query per page Great for high-volume pages which need little personalization Turns out you can stick quite a lot in a cookie too Pack with base64 and it’s easy to delimit fields

- 31. App servers The Rasmus way App server has ‘shared nothing’ Responsibility pushed down the stack Ooh, the stack

- 32. Trifle

- 33. Trifle Sponge / Database Jelly / Business Logic Custard / Page Logic Cream / Markup Fruit / Presentation

- 34. Trifle Sponge / Database Jelly / Business Logic Custard / Page Logic Cream / Markup Fruit / Presentation

- 35. App servers

- 36. App servers

- 37. App servers

- 38. Well, that was easy Scaling the web app server part is easy The rest is the trickier part Database Serving static content Storing static content

- 39. The others Other services scale similarly to web apps That is, horizontally The canonical examples: Image conversion Audio transcoding Video transcoding Web crawling Compute!

- 40. Amazon Let’s talk about Amazon S3 – Storage EC2 – Compute! (Xen-based) SQS – Queueing All horizontal Cheap when small Not cheap at scale

- 42. Load balancing If we have multiple nodes in a class, we need to balance between them Hardware or software Layer 4 or 7

- 43. Hardware LB A hardware appliance Often a pair with heartbeats for HA Expensive! But offers high performance Many brands Alteon, Cisco, NetScaler, Foundry, etc L7 - web switches, content switches, etc

- 44. Software LB Just some software Still needs hardware to run on But can run on existing servers Harder to have HA Often people stick hardware LB’s in front But Wackamole helps here

- 45. Software LB Lots of options Pound Perlbal Apache with mod_proxy Wackamole with mod_backhand http://backhand.org/wackamole/ http://backhand.org/mod_backhand/

- 46. Wackamole

- 47. Wackamole

- 48. The layers Layer 4 A ‘dumb’ balance Layer 7 A ‘smart’ balance OSI stack, routers, etc

- 49. 4. Queuing

- 50. Parallelizable == easy! If we can transcode/crawl in parallel, it’s easy But think about queuing And asynchronous systems The web ain’t built for slow things But still, a simple problem
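
A minimal sketch of the queue-plus-workers pattern, assuming an in-process queue for illustration. A real deployment would more likely use a separate queue service shared by many machines, and `run_jobs` and its parameters are hypothetical names.

```python
import queue
import threading


def run_jobs(jobs, handler, workers=4):
    """Enqueue jobs and let a fixed pool of workers drain the queue."""
    pending = queue.Queue()
    results = {}
    lock = threading.Lock()

    def worker():
        while True:
            job = pending.get()
            if job is None:  # sentinel: no more work for this worker
                return
            try:
                outcome = handler(job)
            except Exception as exc:  # keep the worker alive on a bad job
                outcome = exc
            with lock:
                results[job] = outcome

    threads = [threading.Thread(target=worker) for _ in range(workers)]
    for t in threads:
        t.start()
    for job in jobs:  # the web request would only do this step, then return
        pending.put(job)
    for _ in threads:
        pending.put(None)
    for t in threads:
        t.join()
    return results
```

The point of the design is that the slow work (transcoding, crawling) happens outside the request, and the number of workers, not the number of requests, bounds the load.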

- 53. Helps with peak periods

- 58. Databases Unless we’re doing a lot of file serving, the database is the toughest part to scale If we can, best to avoid the issue altogether and just buy bigger hardware Dual Opteron/Intel64 systems with 16+GB of RAM can get you a long way

- 59. More read power Web apps typically have a read/write ratio of somewhere between 80/20 and 90/10 If we can scale read capacity, we can solve a lot of situations MySQL replication!

- 61. Master-Slave Replication Reads and Writes Reads
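
As a rough sketch of how an application might use a master-slave setup, the router below sends writes to the master and rotates reads across the slaves. The class name, the host names in the usage note, and the verb list are assumptions made for illustration.

```python
import itertools


class ReplicatedRouter:
    """Send writes to the master and spread reads over the slaves."""

    WRITE_VERBS = ("INSERT", "UPDATE", "DELETE", "REPLACE")

    def __init__(self, master, slaves):
        self.master = master
        # Round-robin over slaves; fall back to the master if there are none.
        self._slaves = itertools.cycle(slaves) if slaves else None

    def pick(self, sql):
        """Return the server that should run this (non-empty) statement."""
        verb = sql.lstrip().split(None, 1)[0].upper()
        if verb in self.WRITE_VERBS or self._slaves is None:
            return self.master
        return next(self._slaves)
```

For example, `ReplicatedRouter("db-master", ["db-slave1", "db-slave2"]).pick("SELECT 1")` returns a slave. Because replication is asynchronous, a read sent to a slave can briefly lag behind a write that just hit the master.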

- 70. 6. Caching

- 71. Caching Caching avoids needing to scale! Or makes it cheaper Simple stuff mod_perl / shared memory Invalidation is hard MySQL query cache Bad performance (in most cases)

- 72. Caching Getting more complicated… Write-through cache Write-back cache Sideline cache

- 74. Write-back cache

- 75. Sideline cache

- 76. Sideline cache Easy to implement Just add app logic Need to manually invalidate cache Well designed code makes it easy Memcached From Danga (LiveJournal) http://www.danga.com/memcached/
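
The sideline pattern can be sketched in a few lines. Here a plain dict stands in for a memcached client, and `load_from_db` / `save_to_db` are hypothetical callables supplied by the application.

```python
class SidelineCache:
    """App-managed cache that sits beside the database, not in front of it."""

    def __init__(self, load_from_db, save_to_db):
        self._load = load_from_db
        self._save = save_to_db
        self._cache = {}  # stand-in for a memcached client

    def get(self, key):
        if key in self._cache:  # hit: no database work at all
            return self._cache[key]
        value = self._load(key)  # miss: read from the database...
        self._cache[key] = value  # ...and remember it for next time
        return value

    def update(self, key, value):
        self._save(key, value)  # the database stays the source of truth
        self._cache.pop(key, None)  # manual invalidation, done by app logic
```

Keeping all reads and writes behind one small class like this is one way to get the "well designed code makes it easy" property: there is a single place where invalidation can be forgotten.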

- 77. Memcache schemes Layer 4 Good: Cache can be local on a machine Bad: Invalidation gets more expensive with node count Bad: Cache space wasted by duplicate objects

- 78. Memcache schemes Layer 7 Good: No wasted space Good: linearly scaling invalidation Bad: Multiple, remote connections Can be avoided with a proxy layer Gets more complicated Last indentation level!
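
One way to read the second scheme is that each object is hashed to exactly one cache node. The sketch below assumes that reading; the node names are hypothetical and dicts stand in for remote memcached servers.

```python
import zlib


class ShardedCache:
    """Each key lives on exactly one node, chosen by hashing the key."""

    def __init__(self, node_names):
        self.node_names = list(node_names)
        # One dict per node stands in for one remote memcached server.
        self.nodes = {name: {} for name in self.node_names}

    def node_for(self, key):
        index = zlib.crc32(key.encode()) % len(self.node_names)
        return self.node_names[index]

    def set(self, key, value):
        self.nodes[self.node_for(key)][key] = value

    def get(self, key):
        return self.nodes[self.node_for(key)].get(key)

    def delete(self, key):
        # Invalidation touches one node, however many nodes there are.
        self.nodes[self.node_for(key)].pop(key, None)
```

Simple modulo hashing remaps most keys whenever the node count changes; consistent hashing is the usual way to soften that, at the cost of more code.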

- 79. 7. HA Data

- 80. But what about HA?

- 81. But what about HA?

- 82. SPOF! The key to HA is avoiding SPOFs Identify Eliminate Some stuff is hard to solve Fix it further up the tree Dual DCs solves Router/Switch SPOF

- 83. Master-Master

- 84. Master-Master Either hot/warm or hot/hot Writes can go to either But avoid collisions No auto-inc columns for hot/hot Bad for hot/warm too Unless you have MySQL 5 But you can’t rely on the ordering! Design schema/access to avoid collisions Hashing users to servers
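
The "unless you have MySQL 5" remark presumably refers to the `auto_increment_increment` and `auto_increment_offset` server variables, which let each master hand out ids from its own interleaved sequence. That reading is an assumption. The sketch below only simulates the resulting id streams; `id_stream` is a hypothetical helper, not a MySQL API.

```python
import itertools


def id_stream(offset, increment):
    """Ids a master would generate given its offset and the shared increment."""
    return itertools.count(offset, increment)


# Two masters in a hot/hot pair: same increment, different offsets.
master_a = id_stream(offset=1, increment=2)
master_b = id_stream(offset=2, increment=2)

ids_a = list(itertools.islice(master_a, 4))  # odd ids
ids_b = list(itertools.islice(master_b, 4))  # even ids
```

The two streams never collide, but a higher id no longer means a later insert, which is why the slide warns against relying on the ordering.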

- 85. Rings Master-master is just a small ring With 2 nodes Bigger rings are possible But not a mesh! Each slave may only have a single master Unless you build some kind of manual replication

- 86. Rings

- 87. Rings

- 88. Dual trees Master-master is good for HA But we can’t scale out the reads (or writes!) We often need to combine the read scaling with HA We can simply combine the two models

- 89. Dual trees

- 90. Cost models There’s a problem here We need to always have 200% capacity to avoid a SPOF 400% for dual sites! This costs too much Solution is straightforward Make sure clusters are bigger than 2

- 91. N+M N+M N = nodes needed to run the system M = nodes we can afford to lose Having M as big as N starts to suck If we could make each node smaller, we can increase N while M stays constant (We assume smaller nodes are cheaper)

- 92. 1+1 = 200% hardware

- 93. 3+1 = 133% hardware
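
The percentages on these slides follow from (N + M) / N. A small helper, with a hypothetical name, makes the arithmetic explicit:

```python
def hardware_percent(n, m):
    """Hardware bought, as a percentage of the hardware needed to run.

    n: nodes needed to run the system
    m: nodes we can afford to lose
    """
    return round(100 * (n + m) / n)


one_plus_one = hardware_percent(1, 1)      # one big node plus one spare
three_plus_one = hardware_percent(3, 1)    # three smaller nodes plus one spare
```

With M fixed at 1, the overhead shrinks as N grows, which is the argument for building clusters out of more, smaller nodes.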

- 94. Meshed masters Not possible with regular MySQL out-of-the-box today But there is hope! NDB (MySQL Cluster) allows a mesh Support for replication out to slaves in a coming version RSN!

- 95. 8. Federation

- 96. Data federation At some point, you need more writes This is tough Each cluster of servers has limited write capacity Just add more clusters!

- 97. Simple things first Vertical partitioning Divide tables into sets that never get joined Split these sets onto different server clusters Voila! Logical limits When you run out of non-joining groups When a single table grows too large

- 98. Data federation Split up large tables, organized by some primary object Usually users Put all of a user’s data on one ‘cluster’ Or shard, or cell Have one central cluster for lookups

- 99. Data federation

- 100. Data federation Need more capacity? Just add shards! Don’t assign to shards based on user_id! For resource leveling as time goes on, we want to be able to move objects between shards Maybe – not everyone does this ‘Lockable’ objects
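
A minimal sketch of the central lookup idea, assuming an in-memory table in place of the lookup cluster. The class, its methods and the `copy_rows` callback are hypothetical names, and the "least populated shard" rule is just one possible assignment policy.

```python
from collections import Counter


class ShardDirectory:
    """Central lookup: remembers which shard holds each user."""

    def __init__(self, shards):
        self.shards = list(shards)
        self.location = {}  # user_id -> shard name
        self.locked = set()  # users currently being moved

    def assign(self, user_id):
        """Place a new user on the least-populated shard, not by user_id."""
        load = Counter(self.location.values())
        shard = min(self.shards, key=lambda s: load[s])
        self.location[user_id] = shard
        return shard

    def shard_for(self, user_id):
        if user_id in self.locked:
            raise RuntimeError(f"user {user_id} is being moved")
        return self.location[user_id]

    def move(self, user_id, target, copy_rows):
        """Lock the user, copy their rows, then repoint the lookup."""
        source = self.location[user_id]
        self.locked.add(user_id)
        try:
            copy_rows(user_id, source, target)
            self.location[user_id] = target
        finally:
            self.locked.discard(user_id)
```

Because the mapping is stored rather than computed from the id, a user can be moved later for resource leveling without changing any other user's placement.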

- 101. The wordpress.com approach Hash users into one of n buckets Where n is a power of 2 Put all the buckets on one server When you run out of capacity, split the buckets across two servers When you run out of capacity again, split the buckets across four servers Etc
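
The bucket scheme can be sketched as below. The bucket count, the CRC32 hash and the modulo placement rule are assumptions chosen for illustration; the slide does not say how wordpress.com actually maps buckets to servers.

```python
import zlib

N_BUCKETS = 16  # hypothetical; any power of two works


def bucket_for(user_id):
    """Hash a user into one of N_BUCKETS fixed buckets."""
    return zlib.crc32(str(user_id).encode()) % N_BUCKETS


def server_for(bucket, n_servers):
    """Place a bucket on one of n_servers, where n_servers is a power of two."""
    return bucket % n_servers


# Doubling the server count splits every server's buckets in half:
# each bucket either stays put or moves to exactly one new server.
placement_2 = {b: server_for(b, 2) for b in range(N_BUCKETS)}
placement_4 = {b: server_for(b, 4) for b in range(N_BUCKETS)}
```

A user's bucket never changes; only the bucket-to-server table does, so a split is a bulk move of whole buckets rather than a rehash of every user.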

- 102. Data federation Heterogeneous hardware is fine Just give a larger/smaller proportion of objects depending on hardware Bigger/faster hardware for paying users A common approach Can also allocate faster app servers via magic cookies at the LB

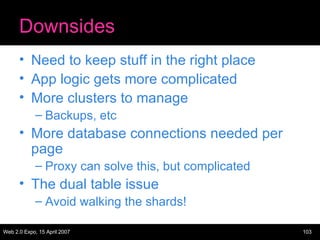

- 103. Downsides Need to keep stuff in the right place App logic gets more complicated More clusters to manage Backups, etc More database connections needed per page Proxy can solve this, but complicated The dual table issue Avoid walking the shards!

- 104. Bottom line Data federation is how large applications are scaled

- 105. Bottom line It’s hard, but not impossible Good software design makes it easier Abstraction! Master-master pairs for shards give us HA Master-master trees work for central cluster (many reads, few writes)

- 106. 9. Multi-site HA

- 107. Multiple Datacenters Having multiple datacenters is hard Not just with MySQL Hot/warm with MySQL slaved setup But manual (reconfig on failure) Hot/hot with master-master But dangerous (each site has a SPOF) Hot/hot with sync/async manual replication But tough (big engineering task)

- 108. Multiple Datacenters

- 109. GSLB Multiple sites need to be balanced Global Server Load Balancing Easiest are AkaDNS-like services Performance rotations Balance rotations

- 110. 10. Serving Files

- 111. Serving lots of files Serving lots of files is not too tough Just buy lots of machines and load balance! We’re IO bound – need more spindles! But keeping many copies of data in sync is hard And sometimes we have other per-request overhead (like auth)

- 112. Reverse proxy

- 113. Reverse proxy Serving out of memory is fast! And our caching proxies can have disks too Fast or otherwise More spindles is better We stay in sync automatically We can parallelize it! 50 cache servers gives us 50 times the serving rate of the origin server Assuming the working set is small enough to fit in memory in the cache cluster

- 114. Invalidation Dealing with invalidation is tricky We can prod the cache servers directly to clear stuff out Scales badly – need to clear asset from every server – doesn’t work well for 100 caches

- 115. Invalidation We can change the URLs of modified resources And let the old ones drop out cache naturally Or prod them out, for sensitive data Good approach! Avoids browser cache staleness Hello Akamai (and other CDNs) Read more: http://www.thinkvitamin.com/features/webapps/serving-javascript-fast
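
One common way to change the URL whenever a resource changes is to embed a short content hash in the file name. This is a sketch under that assumption; `versioned_url` is a hypothetical helper and the 8-character digest length is arbitrary.

```python
import hashlib
import posixpath


def versioned_url(path, content):
    """Return the path with a short content hash inserted before the extension."""
    digest = hashlib.sha256(content).hexdigest()[:8]
    stem, ext = posixpath.splitext(path)
    return f"{stem}.{digest}{ext}"


old_url = versioned_url("/js/app.js", b"alert('v1');")
new_url = versioned_url("/js/app.js", b"alert('v2');")
```

Since the new content gets a new URL, caches and browsers never need to be told to drop the old copy; it simply stops being requested.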

- 116. Reverse proxy Choices L7 load balancer & Squid http://www.squid-cache.org/ mod_proxy & mod_cache http://www.apache.org/ Perlbal and Memcache? http://www.danga.com/

- 117. High overhead serving What if you need to authenticate your asset serving? Private photos Private data Subscriber-only files Two main approaches Proxies w/ tokens Path translation

- 118. Perlbal backhanding Perlbal can do redirection magic Client sends request to Perlbal Perlbal plugin verifies user credentials token, cookies, whatever tokens avoid data-store access Perlbal goes to pick up the file from elsewhere Transparent to user

- 119. Perlbal backhanding

- 120. Perlbal backhanding Doesn’t keep database around while serving Doesn’t keep app server around while serving User doesn’t find out how to access asset directly

- 121. Permission URLs But why bother!? If we bake the auth into the URL then it saves the auth step We can do the auth on the web app servers when creating HTML Just need some magic to translate to paths We don’t want paths to be guessable

- 122. Permission URLs

- 123. Permission URLs (or mod_perl)

- 124. Permission URLs Downsides URL gives permission for life Unless you bake in tokens Tokens tend to be non-expirable We don’t want to track every token Too much overhead But can still expire Upsides It works Scales nicely
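
A sketch of a permission URL with a baked-in expiring token, so nothing has to be tracked per token. The secret, the function names and the `e` / `t` query parameters are all hypothetical; the app server would build the URL while rendering HTML, and the file-serving tier would run the check.

```python
import hashlib
import hmac

SECRET = b"change-me"  # hypothetical secret shared by app and file servers


def _token(path, expires):
    msg = f"{path}:{expires}".encode()
    return hmac.new(SECRET, msg, hashlib.sha256).hexdigest()[:16]


def permission_url(path, expires):
    """Build a URL granting access to path until the expires timestamp."""
    return f"{path}?e={expires}&t={_token(path, expires)}"


def is_allowed(path, expires, token, now):
    """Check a request without touching the database or the app server."""
    if now > expires:
        return False
    return hmac.compare_digest(token.encode(), _token(path, expires).encode())
```

The expiry time is part of the signed message, so a client cannot extend it, and the server needs no record of which tokens it has issued.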

- 125. 11. Storing Files

- 126. Storing lots of files Storing files is easy! Get a big disk Get a bigger disk Uh oh! Horizontal scaling is the key Again

- 127. Connecting to storage NFS Stateful == Sucks Hard mounts vs Soft mounts, INTR SMB / CIFS / Samba Turn off MSRPC & WINS (NetBIOS NS) Stateful but degrades gracefully HTTP Stateless == Yay! Just use Apache

- 128. Multiple volumes Volumes are limited in total size Except (in theory) under ZFS & others Sometimes we need multiple volumes for performance reasons When using RAID with single/dual parity At some point, we need multiple volumes

- 129. Multiple volumes

- 130. Multiple hosts Further down the road, a single host will be too small Total throughput of machine becomes an issue Even physical space can start to matter So we need to be able to use multiple hosts

- 131. Multiple hosts

- 132. HA Storage HA is important for assets too We can back stuff up But we tend to want hot redundancy RAID is good RAID 5 is cheap, RAID 10 is fast

- 133. HA Storage But whole machines can fail So we stick assets on multiple machines In this case, we can ignore RAID In failure case, we serve from alternative source But need to weigh up the rebuild time and effort against the risk Store more than 2 copies?

- 134. HA Storage

- 135. Self repairing systems When something fails, repairing can be a pain RAID rebuilds by itself, but machine replication doesn’t The big appliances self heal NetApp, StorEdge, etc So does MogileFS (reaper)

- 136. 12. Field Work

- 137. Real world examples Flickr Because I know it LiveJournal Because everyone copies it

- 138. Flickr Architecture

- 139. Flickr Architecture

- 142. Buy my book!

- 143. Or buy Theo’s

- 144. The end!

- 145. Awesome! These slides are available online: iamcal.com/talks/