DFA Minimization in Map-Reduce

- 1. DFA Minimization in Map-Reduce. Gösta Grahne, Shahab Harrafi, Iraj Hedayati, Ali Moallemi. Concordia University, {grahne,s_harraf,h_iraj,moa_ali}@cs.concordia.ca. BeyondMR, SIGMOD/PODS, July 2016. Grahne, Harrafi, Hedayati, Moallemi DFA Minimization in Map-Reduce BeyondMR’16 1 / 21

- 2. Outline: (1) Introduction: Preliminaries, Minimization Algorithms; (2) DFA Minimization in Map-Reduce: Moore-MR, Hopcroft-MR, Communication Cost; (3) Experimental Results; (4) Conclusion.

- 3. Preliminaries
  - Finite automaton: $A = (Q, \Sigma, \delta, q_s, F)$
  - DFA minimization. Motivation:
    - it is important and widely used in applications;
    - the problem has an iterative, multi-round structure;
    - similar problems: coarsest partition, bisimulation.

- 4. DFA Minimization Algorithms: Moore
  - Moore's algorithm iteratively computes the equivalence class of each state as $p \equiv_i q \iff p \equiv_{i-1} q \,\land\, \forall a \in \Sigma:\ \delta(p,a) \equiv_{i-1} \delta(q,a)$.
  - [Figure: example DFA over {a, b} with states p1, p2, p3, q1, q2, q3, shown in two panels.]
  - Signatures:

    | state | a | b |
    |---|---|---|
    | p1 | q1 | q1 |
    | p2 | q2 | q2 |
    | p3 | q2 | q3 |

  - Complexity: $O(kn^2)$, where $n = |Q|$ and $k = |\Sigma|$.
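Here is a minimal Python sketch of the refinement rule above. It assumes a complete DFA given as a dict `delta[(p, a)]` and an initial split into accepting and non-accepting states. The function name `moore_minimize` is ours; it does not come from the slides. Each round builds a signature for every state (its own class, then the class of each successor) and renumbers the signatures. The loop stops when a round creates no new class.

```python
def moore_minimize(states, alphabet, delta, finals):
    """Moore-style partition refinement (illustrative sketch).

    p and q stay together in round i iff they were together in round i-1
    and delta(p, a), delta(q, a) were together in round i-1 for every a.
    Returns a dict mapping each state to its final class id.
    """
    cls = {p: int(p in finals) for p in states}  # round 0: accepting vs. not
    while True:
        # signature = own class followed by the classes of the k successors
        sig = {p: (cls[p],) + tuple(cls[delta[p, a]] for a in alphabet)
               for p in states}
        ids = {}
        new = {p: ids.setdefault(sig[p], len(ids)) for p in states}
        # signatures include the old class, so the partition only gets finer;
        # an unchanged class count therefore means the partition is stable
        if len(ids) == len(set(cls.values())):
            return new
        cls = new


# Usage: states 1 and 2 are both accepting sinks, so they are equivalent.
delta = {(0, "a"): 1, (0, "b"): 2, (1, "a"): 1, (1, "b"): 1,
         (2, "a"): 2, (2, "b"): 2}
classes = moore_minimize([0, 1, 2], ["a", "b"], delta, {1, 2})
print(classes)  # {0: 0, 1: 1, 2: 1}
```

Each round does $O(kn)$ work, and there can be up to $O(n)$ rounds. That matches the $O(kn^2)$ bound stated on the slide.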

- 5. DFA Minimization Algorithms: Hopcroft
  - Hopcroft's algorithm works with splitters. At each iteration, a splitter $\langle S, a\rangle$ splits a block $P$ into $P \div \langle S, a\rangle = \{P_1, P_2\}$, where $P_1 = \{p \in P : \delta(p,a) \in S\}$ and $P_2 = \{p \in P : \delta(p,a) \notin S\}$.
  - It uses a data structure $\Gamma(S, a)$: the subset of states in block $S$ that have an incoming transition labeled $a$.
  - [Figure: example DFA over {a, b} with states p1, p2, p3, q1, q2, q3, shown in two panels.] Here $\Gamma(S, a) = \{q_1, q_2\}$.
  - Complexity: $O(kn \log n)$.
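Here is a compact Python sketch of splitter-based refinement. It assumes a complete DFA given as a dict `delta[(p, a)]`, and the function name `hopcroft_minimize` is hypothetical. For a splitter ⟨S, a⟩, the set `x` collects the a-predecessors of the states in S. Only states of S with an incoming a-transition contribute, which is the role Γ(S, a) plays on the slide. The queue is a plain list, so this sketch is written for clarity and does not reproduce the bookkeeping needed for the $O(kn \log n)$ bound.

```python
from collections import defaultdict


def hopcroft_minimize(states, alphabet, delta, finals):
    """Hopcroft-style refinement with (block, letter) splitters (sketch)."""
    # inverse transitions: inv[a][q] = {p : delta(p, a) = q}
    inv = {a: defaultdict(set) for a in alphabet}
    for p in states:
        for a in alphabet:
            inv[a][delta[p, a]].add(p)

    finals = set(finals)
    partition = [b for b in (finals, set(states) - finals) if b]
    # start with the smaller initial block as splitter, for every letter
    smaller = min(partition, key=len)
    queue = [(frozenset(smaller), a) for a in alphabet]

    while queue:
        splitter, a = queue.pop()
        x = set()  # states whose a-successor lies in the splitter block
        for q in splitter:
            x |= inv[a][q]
        new_partition = []
        for block in partition:
            p1, p2 = block & x, block - x
            if not (p1 and p2):
                new_partition.append(block)
                continue
            new_partition += [p1, p2]
            for c in alphabet:
                if (frozenset(block), c) in queue:
                    # the old block was still pending: replace it by both halves
                    queue.remove((frozenset(block), c))
                    queue += [(frozenset(p1), c), (frozenset(p2), c)]
                else:
                    # otherwise the smaller half suffices as a new splitter
                    half = p1 if len(p1) <= len(p2) else p2
                    queue.append((frozenset(half), c))
        partition = new_partition
    return [frozenset(b) for b in partition]


# Usage: states 1 and 2 are equivalent accepting sinks.
delta = {(0, "a"): 1, (0, "b"): 2, (1, "a"): 1, (1, "b"): 1,
         (2, "a"): 2, (2, "b"): 2}
blocks = hopcroft_minimize([0, 1, 2], ["a", "b"], delta, {1, 2})
print(sorted(sorted(b) for b in blocks))  # [[0], [1, 2]]
```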

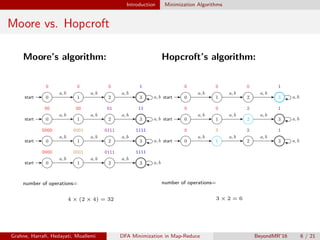

- 6. Moore vs. Hopcroft, on a four-state DFA (states 0 to 3) in which every edge is labeled a, b; initially state 3 is in block 1 and states 0, 1, 2 are in block 0.
  - Moore's algorithm (signature of each state at each step):

    | step | state 0 | state 1 | state 2 | state 3 |
    |---|---|---|---|---|
    | 1 | 0 | 0 | 0 | 1 |
    | 2 | 00 | 00 | 01 | 11 |
    | 3 | 0000 | 0001 | 0111 | 1111 |
    | 4 | 0000 | 0001 | 0111 | 1111 |

    Number of operations = 4 × (2 × 4) = 32.
  - Hopcroft's algorithm (block of each state at each step):

    | step | state 0 | state 1 | state 2 | state 3 |
    |---|---|---|---|---|
    | 1 | 0 | 0 | 0 | 1 |
    | 2 | 0 | 0 | 2 | 1 |
    | 3 | 0 | 3 | 2 | 1 |

    Number of operations = 3 × 2 = 6.

- 7. Section 2: DFA Minimization in Map-Reduce

- 8. Moore-MR: Moore's algorithm in Map-Reduce
  - [Diagram: a pre-processing job (mapper/reducer) turns δ into $\Delta^+_0, \Delta^-_0$. Each round then runs a first job on $\Delta^+_{i-1}, \Delta^-_{i-1}$ that produces tuples $(p, a, q, \pi^i_p, +)$ and $(p, a, q, \pi^i_p, -)$, followed by a PPHF-MR job. Keys in the diagram are $K = h(p)$, $K = h(q)$ and $K = h(\pi^i_p)$. Iterate while Q is not empty, then construct the minimal DFA.]
  - $\Delta^+_0 = \{(p, a, q, \pi^0_p, +) : (p, a, q) \in \delta\}$ and $\Delta^-_0 = \{(p, a, q, \pi^0_q, -) : (p, a, q) \in \delta\}$
  - New block signature: $\pi^i_p = \pi^{i-1}_p \cdot \pi^{i-1}_{\delta(p,a_1)} \cdot \ldots \cdot \pi^{i-1}_{\delta(p,a_k)}$
  - PPHF-MR in reducer $j$ maps $\pi_p$ to $\{j \cdot n, \dots, j \cdot n + n - 1\}$.
  - $\mathrm{sub}(\pi^i_p)$: the set of substrings obtained by dividing $\pi^i_p$ into $k + 1$ substrings. The reducer for $h(p)$ then emits $[p, \mathit{true}]$ if $|\mathrm{sub}(\pi^i_p)| > |\mathrm{sub}(\pi^{i-1}_p)|$.
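The round structure above can be simulated in memory. This is a sketch under stated assumptions, not the authors' Hadoop implementation:

- `run_job` is a hypothetical stand-in for one Map-Reduce job (map, group by key, reduce).
- The first job groups directly by the source state p rather than by a hash h(p).
- The PPHF-style renaming partitions signatures with Python's `hash` modulo a hypothetical reducer count.
- Termination is detected by counting classes, instead of the slide's sub(·) test.

```python
from collections import defaultdict


def run_job(records, mapper, reducer):
    """In-memory stand-in for one Map-Reduce job: map, shuffle by key, reduce."""
    groups = defaultdict(list)
    for rec in records:
        for key, value in mapper(rec):
            groups[key].append(value)
    return [out for key in sorted(groups) for out in reducer(key, groups[key])]


def moore_mr(states, alphabet, delta, finals, reducers=4):
    """Simulate Moore-MR rounds; returns (class id per state, rounds run)."""
    n = len(states)
    pi = {p: int(p in finals) for p in states}  # pi^0: accepting vs. not
    rounds = 0
    while True:
        rounds += 1
        # Delta+ tuples carry pi_p, Delta- tuples carry pi_q (one per transition)
        plus = [(p, a, delta[p, a], pi[p], "+") for p in states for a in alphabet]
        minus = [(p, a, delta[p, a], pi[delta[p, a]], "-")
                 for p in states for a in alphabet]

        def sig_reducer(p, tuples):
            # pi^i_p = pi^{i-1}_p . pi^{i-1}_{delta(p,a_1)} ... pi^{i-1}_{delta(p,a_k)}
            own = next(t[3] for t in tuples if t[4] == "+")
            succ = {t[1]: t[3] for t in tuples if t[4] == "-"}
            yield p, (own,) + tuple(succ[a] for a in alphabet)

        sigs = run_job(plus + minus, lambda t: [(t[0], t)], sig_reducer)

        def pphf_reducer(j, values):
            # reducer j numbers its distinct signatures into j*n .. j*n + n - 1
            local = {}
            for p, sig in values:
                yield p, j * n + local.setdefault(sig, len(local))

        new_pi = dict(run_job(sigs, lambda ps: [(hash(ps[1]) % reducers, ps)],
                              pphf_reducer))
        if len(set(new_pi.values())) == len(set(pi.values())):
            return new_pi, rounds  # no block was split in this round
        pi = new_pi


# Usage: a hypothetical 4-state chain 0 -> 1 -> 2 -> 3 -> 3 on a and b, state 3 accepting.
chain = {(p, x): min(p + 1, 3) for p in range(4) for x in "ab"}
pi, rounds = moore_mr(list(range(4)), ["a", "b"], chain, {3})
print(len(set(pi.values())), rounds)  # 4 3
```

Equal signatures always hash to the same reducer, so they receive the same id. Each reducer sees at most n distinct signatures, so the disjoint ranges keep ids from different reducers apart.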

- 9. Hopcroft-MR: Hopcroft's algorithm in Map-Reduce
  - [Diagram: a pre-processing job turns δ into $\Delta_0, \Gamma_0$. Each round runs a first job on $\Delta_{i-1}, \Gamma_{i-1}$ that emits update tuples, a second job that produces $\Delta_i, \Gamma_i$, and a PPHF-MR job; the jobs are keyed by $h(q)$, $h(q)$, $h(p)$ and $h(\pi^i_p)$, respectively. Iterate while Q is not empty, then construct the minimal DFA.]
  - $\Delta_0 = \{(p, a, q, \pi^0_p, +) : (p, a, q) \in \delta\}$
  - $\Gamma_0 = \bigcup_{B_j \in \pi^0} \{(p, a, q, \pi^0_q, -) : (p, a, q) \in \delta,\ q \in B_j\}$
  - Update tuples: $(p_j, \pi^{i-1}_{p_j}, \beta^a_{p_j}, \pi^{i-1}_q)$
  - Aggregated tuple in the second job: $\beta_p$, which combines $\beta^{a_1}_p, \dots, \beta^{a_k}_p$.

- 10. Sample run of Hopcroft-MR
  - Splitters in queue: Q = {⟨S1, a⟩, ⟨S1, b⟩, ⟨S3, a⟩, ⟨S3, b⟩}
  - [Figure: block P = {p1, p2, p3} with transitions into blocks S1, S2, S3, which contain q1, q2, q3.]
  - First job:
    - ⟨p1, a, q1, P⟩, ⟨S1, q1, a⟩ ⇒ ⟨p1, P, 01, S1⟩
    - ⟨p3, b, q3, P⟩, ⟨p1, b, q3, P⟩, ⟨S3, q3, b⟩ ⇒ ⟨p1, P, 10, S3⟩, ⟨p3, P, 10, S3⟩
  - Second job:
    - h(p1): ⟨p1, a, q1, P⟩, ⟨p1, b, q3, P⟩, ⟨p1, P, 10, S3⟩, ⟨p1, P, 01, S1⟩ ⇒ ⟨p1, a, q1, P11S3S1⟩, ⟨p1, b, q3, P11S3S1⟩
    - h(p3): ⟨p3, a, q2, P⟩, ⟨p3, b, q3, P⟩, ⟨p3, P, 10, S3⟩ ⇒ ⟨p3, a, q2, P10S3⟩, ⟨p3, b, q3, P10S3⟩
  - After applying PPHF-MR, p1 ∈ P1 = P11S3S1 and p3 ∈ P2 = P10S3, while p2 remains in P.
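The two jobs of this sample run can be replayed in memory. This sketch makes the following assumptions:

- The slide does not show p2's transitions, so we assume both go to q2 in S2, which is consistent with p2 staying in P.
- Only the transitions out of P are modeled.
- Letter a sets the rightmost bit of β.
- Splitter names are appended in bit order (highest letter first). This convention was chosen to reproduce the block names on the slide.

The helpers `gamma_for`, `first_job` and `second_job` are hypothetical names.

```python
from collections import defaultdict

ALPHABET = ["a", "b"]  # letter index j sets bit j of beta; 'a' is the rightmost bit


def bits(mask):
    """Render the k-bit vector beta as a string, e.g. 1 -> '01' for k = 2."""
    return format(mask, f"0{len(ALPHABET)}b")


def gamma_for(queue, blocks, delta):
    """Gamma tuples (S, q, a): states q of block S with an incoming a-transition."""
    out = []
    for s, a in queue:
        targets = sorted({q for _, x, q, _ in delta if x == a and blocks[q] == s})
        out.extend((s, q, a) for q in targets)
    return out


def first_job(delta, gamma):
    """Key h(q): join Delta tuples (p, a, q, P) with Gamma tuples (S, q, a) on q."""
    groups = defaultdict(lambda: ([], []))
    for t in delta:
        groups[t[2]][0].append(t)
    for s, q, a in gamma:
        groups[q][1].append((s, a))
    updates = []
    for d_tuples, g_tuples in groups.values():
        for s, a in g_tuples:
            mask = 1 << ALPHABET.index(a)
            updates += [(p, blk, bits(mask), s) for p, x, _, blk in d_tuples if x == a]
    return updates


def second_job(delta, updates):
    """Key h(p): OR the bit vectors of p's updates and extend p's block name."""
    by_p = defaultdict(list)
    for p, _, beta, s in updates:
        by_p[p].append((int(beta, 2), s))
    out = []
    for p, a, q, blk in delta:
        ups = sorted(by_p.get(p, []), reverse=True)  # highest letter first
        if ups:
            mask = 0
            for m, _ in ups:
                mask |= m
            blk = blk + bits(mask) + "".join(s for _, s in ups)
        out.append((p, a, q, blk))
    return out


blocks = {"p1": "P", "p2": "P", "p3": "P", "q1": "S1", "q2": "S2", "q3": "S3"}
trans = [("p1", "a", "q1"), ("p1", "b", "q3"), ("p2", "a", "q2"),
         ("p2", "b", "q2"), ("p3", "a", "q2"), ("p3", "b", "q3")]  # p2's edges assumed
delta = [(p, a, q, blocks[p]) for p, a, q in trans]
queue = [("S1", "a"), ("S1", "b"), ("S3", "a"), ("S3", "b")]
gamma = gamma_for(queue, blocks, delta)
updates = first_job(delta, gamma)
new_blocks = {p: blk for p, _, _, blk in second_job(delta, updates)}
print(new_blocks)  # {'p1': 'P11S3S1', 'p2': 'P', 'p3': 'P10S3'}
```

The renaming to compact integers (PPHF-MR) is left out here; the string block names already show which states were separated.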

- 11. Communication Cost
  - Communication cost can be calculated as: number of rounds × (replication rate × input size + output size), where the number of rounds is $O(n)$, the replication rate is $O(1)$, and input size = output size.
  - Moore-MR: the record size of the output of the first job is $\Theta(k \log n)$, so the communication cost of each round is $\Theta(k^2 n \log n)$. Therefore, the total communication cost is $O(k^2 n^2 \log n)$.
  - Hopcroft-MR: in the parallel execution there are $O(n \log n)$ updates at each round, so it requires $O(kn^2 \log n)$ bits of communication.
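The Moore-MR bound can be read as a product of three factors. This assumes the first job outputs $\Theta(kn)$ records per round (a constant number per transition of a complete DFA). It also assumes each record's signature consists of $k + 1$ class identifiers of $O(\log n)$ bits each.

```latex
\[
\underbrace{O(n)}_{\text{rounds}}
\times \underbrace{\Theta(kn)}_{\text{records per round}}
\times \underbrace{\Theta(k \log n)}_{\text{bits per record}}
= O(k^2 n^2 \log n)
\]
```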

- 12. Section 3: Experimental Results

- 13. Data sets: Slow. A member of a DFA family known to be slow for both Hopcroft's and Moore's algorithms. [Figure: a chain of states 0, 1, 2, …, n linked by a-transitions; the DFA after minimization has the same shape.]

- 14. Data sets: Circular. Equal numbers of incoming and outgoing transitions. [Figure: an 8-state circular DFA (states 0 to 7) over {a, b, c, d}; after minimization, the states merge pairwise into {0, 4}, {1, 5}, {2, 6}, {3, 7}.]

- 15. Data sets: Star. Most transitions target a single state. [Figure: an 8-state star DFA (states 0 to 7) over {a, b, c, d}; after minimization, states 0 to 6 merge into one state and state 7 remains.]

- 16. Experimental Results: The effect of the number of rounds. Slow DFA, k = 2, n from 2 to 512. [Plots: communication cost (MB) and execution time (sec) vs. number of states, for Moore-MR and Hopcroft-MR.]

- 17. Experimental Results: Evenly distributed transitions. Circular DFA, $k = 4$, $n \in \{2^4, 2^5, \dots, 2^{17}\}$. [Plots: communication cost (MB) and execution time (sec) vs. number of states, on logarithmic axes, for Moore-MR and Hopcroft-MR.]

- 18. Experimental Results: The effect of alphabet size. Circular DFA, $k \in \{2^2, \dots, 2^7\}$, $n = 2^{10}$. [Plots: communication cost (GB) and execution time (sec) vs. alphabet size, for Moore-MR and Hopcroft-MR.]

- 19. Experimental Results: The effect of skewness. Star DFA, $k = 4$, $n = 2^{17}$. [Plots, two panels: number of transitions (×10^5) per reducer, with reducers grouped as 0-15, 16-31, …, 112-126 and 127, for Circular, Random and Star DFAs.]
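Here is a rough illustration of why star-shaped inputs skew the reducer load. It assumes tuples are partitioned by target state with h(q) = q mod R, as in a job keyed by h(q). It also uses a hypothetical star DFA in which letter a moves state i to i + 1 and every other transition goes to the hub state n − 1. This construction is ours; the slide does not give the exact transition function or the partitioner.

```python
from collections import Counter


def star_dfa(n, alphabet="abcd"):
    """Hypothetical star DFA: alphabet[0] moves i -> i + 1; all other
    transitions (and every transition of the last state) go to hub n - 1."""
    hub = n - 1
    return {
        (i, x): i + 1 if x == alphabet[0] and i < hub else hub
        for i in range(n)
        for x in alphabet
    }


def reducer_load(delta, reducers):
    """Count tuples per reducer when each transition is keyed by h(q) = q mod R."""
    return Counter(q % reducers for q in delta.values())


load = reducer_load(star_dfa(1024), reducers=128)
print(load[127], max(v for r, v in load.items() if r != 127))  # 3081 8
```

The reducer that owns the hub receives about three quarters of all 4096 transitions, while every other reducer gets at most 8. The imbalance comes from keying by the target state, independent of the minimization algorithm.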

- 20. Section 4: Conclusion

- 21. Conclusions and Future Work
  - Hopcroft-MR outperforms Moore-MR in communication cost when the alphabet has at least 16 symbols, and in wall-clock time when it has at least 32.
  - Both algorithms are equally sensitive to skewness in the input data.
  - Future work:
    - there is potential to reduce skew sensitivity in Moore-MR;
    - investigate the average communication cost;
    - reducer capacity vs. number of rounds.
