Rubrics for DMPs

- 1. Using community-generated rubrics to evaluate data management plans. Progress to date…

- 2. Data management plan As a Research Tool (DART). Amanda Whitmire, Stanford University Libraries; Jake Carlson, University of Michigan Library; Patricia M. Hswe, Pennsylvania State University Libraries; Susan Wells Parham, Georgia Institute of Technology Library; Brian Westra, University of Oregon Libraries. This project was made possible in part by the Institute of Museum and Library Services grant number LG-07-13-0328. DART Team, 24 Feb. 2016. @DMPResearch

- 3. DART Premise (5 June 2015). Diagram linking the DMP to the researcher's research data management knowledge, capabilities, practices and needs.

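- 4. Solution: an analytic rubric (27 Oct 2015). Blank example with performance levels across the top and performance criteria down the side:

  | Performance criteria | Winning | Okay | No |
  |---|---|---|---|
  | Thing 1 | | | |
  | Thing 2 | | | |
  | Thing 3 | | | |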

- 5. Performance criteria and performance levels:

  | Section | Performance criterion | Complete / detailed | Addressed issue, but incomplete | Did not address issue | Directorates |
  |---|---|---|---|---|---|
  | General assessment criteria | Describes what types of data will be captured, created or collected | Clearly defines data type(s). E.g. text, spreadsheets, images, 3D models, software, audio files, video files, reports, surveys, patient records, samples, final or intermediate numerical results from theoretical calculations, etc. Also defines data as: observational, experimental, simulation, model output or assimilation | Some details about data types are included, but DMP is missing details or wouldn’t be well understood by someone outside of the project | No details included, fails to adequately describe data types. | All |
  | Directorate- or division-specific assessment criteria | Describes how data will be collected, captured, or created (whether new observations, results from models, reuse of other data, etc.) | Clearly defines how data will be captured or created, including methods, instruments, software, or infrastructure where relevant. | Missing some details regarding how some of the data will be produced, makes assumptions about reviewer knowledge of methods or practices. | Does not clearly address how data will be captured or created. | GEO AGS, GEO EAR SGP, MPS AST |
  | Directorate- or division-specific assessment criteria | Identifies how much data (volume) will be produced | Amount of expected data (MB, GB, TB, etc.) is clearly specified. | Amount of expected data (GB, TB, etc.) is vaguely specified. | Amount of expected data (GB, TB, etc.) is NOT specified. | GEO EAR SGP, GEO AGS |

- 6. Our rubrics project timeline:
  - February 2016: Workshop presentation at International Digital Curation Conference 2016 (Amanda Whitmire)
  - March 2016: Initial call for interest on RESEARCH-DATAMAN@JISCMAIL.AC.UK
  - April 2016: Breakout session at Research Data Management Forum 15
  - June 2016: Allocation of participants to working groups and distribution of links and documents
  - September 2016: Target date for completion of first drafts
  - November 2016: Target date for second drafts and start of consultation with funders
  - January 2017: Get rubrics hosted on Research Data Network for community access

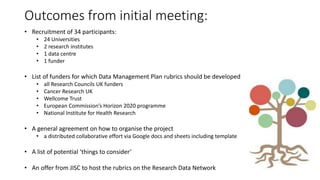

- 7. Outcomes from initial meeting:
  - Recruitment of 34 participants:
    - 24 universities
    - 2 research institutes
    - 1 data centre
    - 1 funder
  - List of funders for which Data Management Plan rubrics should be developed:
    - all Research Councils UK funders
    - Cancer Research UK
    - Wellcome Trust
    - European Commission’s Horizon 2020 programme
    - National Institute for Health Research
  - A general agreement on how to organise the project:
    - a distributed collaborative effort via Google Docs and Sheets, including a template
  - A list of potential ‘things to consider’
  - An offer from JISC to host the rubrics on the Research Data Network

- 8. Our process:
  1. Identify the documents which will inform each rubric.
  2. Develop a list of performance criteria for each data management plan.
  3. Develop descriptions of what constitutes each level of performance for each performance criterion.
  4. Gather feedback on descriptions from working group / other participants.
  5. Incorporate feedback into descriptions.
  6. Send completed draft rubrics to relevant funders for feedback and discussion.
  7. Incorporate funder feedback as appropriate.
  8. Make rubrics available to community via the Research Data Network.
  9. Update rubrics when input documentation / funder guidance changes (ideal) or when changes occur and someone has time (pragmatic)!

- 9. Where we are now:
  - We set out to create rubrics for 11 funders… we currently have:
    - 6 completed rubrics (BBSRC, EPSRC, NERC, Wellcome, CRUK (basic research), ESRC)
    - 2 draft rubrics which are almost complete (MRC, CRUK (population research))
    - 2 outline drafts which need descriptions (AHRC, STFC)
    - 2 rubrics which have not yet been worked on (H2020, NIHR)
  - We intend to invite funders to review the rubrics and provide feedback… so far:
    - 4 rubrics have been sent to reviewers for feedback (BBSRC, EPSRC, NERC, Wellcome)
    - 3 rubrics are almost ready to be sent out (ESRC, MRC, CRUK (both versions))
  - We intend to make rubrics available as a community resource on the Research Data Network:
    - An early draft of the BBSRC rubric is currently available
    - Other rubrics will become available once we have funder feedback

- 10. Next steps:
  - Continue to work on the rubrics which are not yet finished…
  - Get all of the rubrics out to funders for discussion and feedback…
  - Use the rubrics as a tool to illustrate to funders that their expectations don’t match the text space they provide (BBSRC, we’re looking at your Joint Electronic Submission form here!)
  - Make the rubrics available as a community resource

- 11. The future…
  - Can we use the rubrics for more than just in-house evaluation of DMPs?
    - Training resource – for researchers / reviewers of DMPs?
    - Other uses?
  - Do we want to use the rubrics to gather data on how data management is done / communicated in our institutions?
    - This was a secondary output from the DART project and the data the team obtained was used to inform their subsequent data management training processes, and to identify good practice in certain sectors.
  - How will we keep the rubrics from becoming obsolete?
  - Do we want a project blog to publicise / document the project?

- 12. Thanks to:
  - The DART project team: Amanda Whitmire, Jake Carlson, Patricia M. Hswe, Susan Wells, Brian Westra. DART project slides were made available under a CC-BY licence.
  - The UK DMP Rubrics team: Lee Bartlett, Libby Bishop, Fay Campbell, Gareth Cole, Grant Denkinson, Mary Donaldson, Chris Emmerson, Jamie Enoch, Jenny Evans, Federica Fina, Stephen Grace, Laurence Horton, Danielle Hoyle, Sarah Jones, Gareth Knight, Frances Madden, Michelle Mayer, Christina McMahon, Kerry Miller, Ben Mollitt, Niall O’Loughlin, Georgina Parsons, Stephen Pearson, Wayne Peters, Rachel Proudfoot, Hardy Schwamm, Cheryl Smythe, John Southall, Isobel Stark, Paul Stokes, Marta Teperek, Mandy Thomas, Laurian Williamson, James Wilson

Editor's Notes

- #3: The Data management plan As a Research Tool (DART) project team comprised academic librarians from five universities in the United States. They received funding from the Institute of Museum and Library Services and developed a tool to assess National Science Foundation data management plans. The tool helps administrators support researchers in writing a good data management plan, and lets them use the content of plans to inform the research data support they provide to researchers.

- #4: As an instrument created by researchers themselves, data management plans are a rich source of information about an institution’s researchers and their research data management (RDM) knowledge, capabilities, and needs. The DART project recognized that by careful evaluation of data management plans, they could determine information about four areas of research data management. 1 – They could determine how much the researchers who were writing the plans knew about their funder’s expectations. 2 – They could determine the extent to which their researchers and institutions had the capacity to meet funder data expectations. 3 – They could evaluate different research data management practices in their institution and compare and contrast between the groups in their study and also between different fields of research. 4 – They could determine where the gaps in data management knowledge were and then use this information to improve data management training. In order to do this, they needed a standardised way of evaluating data management plans.

- #5: A rubric is a descriptive scoring scheme. “A rubric is simply a scoring tool that identifies the various criteria relevant to an assignment or learning outcome, and then explicitly states the possible levels of achievement along a continuum.” http://teachingcommons.depaul.edu/Feedback_Grading/rubrics.html An analytic rubric provides detailed information about sub-components of the work through independent evaluation of various criteria. For our purposes, it was pretty clear that an analytic rubric would be necessary to provide sufficient detail from a Data Management Plan review. A shortcoming of using an analytic rubric is the trade-off between rater consistency and level of diagnostic assessment: a more granular assessment (one with more levels) leads to more variability in ratings (more room for error). This is a blank example of an analytic rubric.

- #6: An example of the rubric – NOT a complete final version (and it isn’t complete for Section 1 due to space constraints). This is missing the example text for each assessment criterion. A holistic rubric generally provides an overall score for the thing being evaluated, while an analytic rubric provides more detailed information about sub-components of the work through independent evaluation of various criteria. For our purposes, it was pretty clear that an analytic rubric would be necessary to provide sufficient detail from a DMP review. When Amanda presented this concept, Mary realised that rubrics for evaluating data management plans would be useful in the UK context, where institutions might be looking at plans for many different funders. It would allow us to become more consistent in our feedback. From this the UK DMP Rubrics project was born.

- #7: This slide is pretty self-explanatory. Amanda Whitmire of Stanford University Libraries gave an overview of the United States project at the International Digital Curation Conference 2016. The major point is that we’re still in the middle of this (but reasonably well on target). Each group was given a skeleton structure for a rubric, similar to the blank outline in slide 4.

- #8: The rubric outline document was prepared in Google Sheets. Supporting information was in Google Docs. Things to consider included: 1 – whether we should ask funders to ‘approve’ developed rubrics, or just give them sight of the rubrics (we didn’t want to lose the community ownership of them, but we did want some level of buy-in from the funders); 2 – how we would go about keeping the rubrics up to date; 3 – who would take responsibility for each rubric.

- #9: Steps 1, 2 and 3 are simplified versions of the instructions given to each working group. Steps 4 and 5 have evolved. This process has been iterative. For some rubrics, there have been multiple revisions and comments; for others, there has been relatively little revision from the initial draft. Steps 6 and 7 are mostly being done by Mary, but some other participants who have funder contacts are also helping.

- #10: As an update to this, we’ve had funder comments back from BBSRC. These will be incorporated in the next week or so and the revised rubric will be available to the community by Christmas (I hope).

- #11: The third point here is important. Some funders ask for a lot of info, but don’t provide much space in JeS. We hope to be able to demonstrate this by using the rubric to show what a comprehensive answer would look like. Alternatively, it would be interesting if they could provide what they consider to be a good example of a DMP that meets their needs, and for us to evaluate it using the rubric.

- #12: This is what I would like notes and ideas on. There might be lots of ideas I haven’t thought of so far. The second point here is basically what the DART project did. However, instead of pulling 500 DMPs from their records and doing it as a study, we could do it as we go along and amass the data over time. Other institutions (e.g. LSHTM) do store a DMP for each project, so could do it as a study.
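Slide 11 and note #12 raise the idea of recording rubric scores as DMPs are reviewed and amassing the data over time. The following is a minimal Python sketch of how that might look, not a description of any tool the DART or UK projects actually used. The performance levels and criterion descriptions are taken from slide 5; the short criterion keys, the `validate` and `tally` helpers, and the sample reviews are all hypothetical and only there for illustration.

```python
from collections import Counter
from dataclasses import dataclass

# Performance levels as worded on slide 5, ordered from best to worst.
LEVELS = (
    "Complete / detailed",
    "Addressed issue, but incomplete",
    "Did not address issue",
)


@dataclass(frozen=True)
class Criterion:
    key: str          # hypothetical short identifier, not from the slides
    description: str  # criterion wording from slide 5


# A three-criterion excerpt of an analytic rubric (slide 5).
RUBRIC = (
    Criterion("data_types", "Describes what types of data will be captured, created or collected"),
    Criterion("data_collection", "Describes how data will be collected, captured, or created"),
    Criterion("data_volume", "Identifies how much data (volume) will be produced"),
)


def validate(ratings):
    """Raise ValueError unless every criterion is rated with a known level."""
    expected = {c.key for c in RUBRIC}
    if set(ratings) != expected:
        raise ValueError(f"ratings must cover exactly these criteria: {sorted(expected)}")
    for key, level in ratings.items():
        if level not in LEVELS:
            raise ValueError(f"unknown performance level for {key!r}: {level!r}")


def tally(reviews):
    """Count, per criterion, how often each level was assigned across reviews."""
    counts = {c.key: Counter() for c in RUBRIC}
    for ratings in reviews:
        validate(ratings)
        for key, level in ratings.items():
            counts[key][level] += 1
    return counts


# Invented sample data: each dict is one reviewer's ratings for one DMP.
sample_reviews = [
    {"data_types": LEVELS[0], "data_collection": LEVELS[1], "data_volume": LEVELS[2]},
    {"data_types": LEVELS[0], "data_collection": LEVELS[0], "data_volume": LEVELS[2]},
    {"data_types": LEVELS[1], "data_collection": LEVELS[1], "data_volume": LEVELS[1]},
]

counts = tally(sample_reviews)
for criterion in RUBRIC:
    print(criterion.description)
    for level in LEVELS:
        print(f"  {level}: {counts[criterion.key][level]}")
```

Because each criterion is rated independently, as an analytic rubric requires, the per-criterion counts point to where plans are weakest (in the invented sample, data volume), which is the kind of gap the DART team used to inform training.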