Fairness in AI (DDSW 2019)

- 1. Fairness in AI “Facilis descensus Averno” Henk Griffioen - 2019-05-07

- 2. Data Scientists have to put in the work to not end up in ML hell. “The gates of hell are open night and day; Smooth the descent, and easy is the way: But to return, and view the cheerful skies, In this the task and mighty labor lies.” The Works of Virgil (John Dryden)

- 3. The impact of AI on society is not all good. AI can encode and amplify human biases, leading to unfair outcomes at scale.

- 4. Fairness is a hot topic and gaining traction! https://fairmlclass.github.io/

- 5. Let’s work through an example

- 6. Predict if income exceeds $50K/year from 1994 census data with sensitive attributes

- 7. Getting a predictive model is easy! But is it fair?

- 8. How to measure fairness?

- 9. p%-rule: measure demographic parity. The ratio of (a) the probability of a positive outcome given the sensitive attribute being true to (b) the probability of a positive outcome given the sensitive attribute being false is no less than p:100. Examples: 40% : 50% = 80%; 10% : 50% = 20%.
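The p%-rule on this slide can be sketched in a few lines of plain Python. This is a minimal illustration, not the code used in the talk: the function names `positive_rate` and `p_rule` are hypothetical, predictions and the sensitive attribute are assumed to be 0/1 lists, and taking the minimum of the ratio and its inverse (so the score never exceeds 100) is an assumption based on the common formulation of the rule.

```python
def positive_rate(predictions, sensitive, group):
    """Share of positive predictions among samples whose sensitive value equals `group`."""
    selected = [p for p, s in zip(predictions, sensitive) if s == group]
    if not selected:
        raise ValueError(f"no samples for group {group!r}")
    return sum(selected) / len(selected)


def p_rule(predictions, sensitive):
    """Return the p%-rule score in percent; 100 means equal positive rates."""
    rate_true = positive_rate(predictions, sensitive, 1)
    rate_false = positive_rate(predictions, sensitive, 0)
    if rate_true == 0 and rate_false == 0:
        return 100.0  # nobody gets a positive outcome, so the rates are equal
    if rate_true == 0 or rate_false == 0:
        return 0.0  # one group never gets a positive outcome
    # Assumption: use the smaller of the two ratios so the score is symmetric.
    return 100 * min(rate_true / rate_false, rate_false / rate_true)


# Toy data mirroring the slide: 40% positives in one group, 50% in the other.
predictions = [1] * 4 + [0] * 6 + [1] * 5 + [0] * 5
sensitive = [1] * 10 + [0] * 10
print(f"p%-rule: {p_rule(predictions, sensitive):.0f}%")
```

With the toy data above the two positive rates are 40% and 50%, which corresponds to the slide's first worked example.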

- 10. Our model is unfair: low probability of high income for black people and women

- 11. How are we getting bias in our systems?

- 12. Many reasons why bias is creeping into our systems (Barocas & Selbst, 2016):
  - Skewed sample: initial bias that compounds over time
  - Tainted examples: bias in the data caused by humans
  - Sample size disparity: minority groups not as well represented
  - Limited features: less informative or accurate data collected on minority groups
  - Proxies: data implicitly encoding sensitive attributes
  - …

- 13. Intermezzo: Dutch tax authorities in the news

- 14. Ethnic profiling by the Dutch tax authorities: profiling people for fraud. “A daycare center in Almere sounded the alarm when only non-Dutch parents were confronted with discontinuation of childcare allowances… …The Tax and Customs Administration says that it uses the data on Dutch nationality or non-Dutch nationality in the so-called automatic risk selection for fraud.” https://www.nrc.nl/nieuws/2019/05/20/autoriteit-persoonsgegevens-onderzoekt-mogelijke-discriminatie-door-belastingdienst-a3960840

- 15. Is it enough to leave out data on (second) nationality? …In a response, the Tax and Customs Administration states that the information about the (second) nationality of parents or intermediary is not used in this investigation… “Since 2014, a second nationality with Dutch nationality is no longer included in the basic registration. This has been introduced to prevent discrimination for people with dual nationality.” Is this enough to assure that non-Dutch parents in Almere will not suffer another tax injustice? Towards a fair future?

- 16. The model is still unfair without sensitive data. Biases are still encoded by proxies in the dataset!
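One way to see why dropping the sensitive column is not enough is to check how well a remaining feature predicts it. The sketch below is a hypothetical illustration on made-up data, not an analysis of the census dataset from the talk: the function `proxy_leakage` and the `neighbourhood` feature are assumptions, and the accuracy is measured in-sample for simplicity.

```python
from collections import Counter, defaultdict


def proxy_leakage(proxy_values, sensitive_values):
    """Accuracy of guessing the sensitive attribute from a proxy feature alone.

    For each proxy value, guess the sensitive value most often seen with it.
    The baseline always guesses the overall most common sensitive value.
    Returns (proxy_accuracy, baseline_accuracy), both measured in-sample.
    """
    by_proxy = defaultdict(Counter)
    for proxy, sensitive in zip(proxy_values, sensitive_values):
        by_proxy[proxy][sensitive] += 1
    correct = sum(counts.most_common(1)[0][1] for counts in by_proxy.values())
    baseline = Counter(sensitive_values).most_common(1)[0][1]
    total = len(sensitive_values)
    return correct / total, baseline / total


# Hypothetical toy data: the sensitive column is NOT a model feature,
# but the neighbourhood column is strongly associated with it.
neighbourhood = ["north"] * 10 + ["south"] * 10
sensitive = [1] * 9 + [0] * 1 + [0] * 8 + [1] * 2

accuracy, baseline = proxy_leakage(neighbourhood, sensitive)
print(f"proxy accuracy: {accuracy:.2f}, baseline: {baseline:.2f}")
```

A proxy accuracy well above the baseline suggests that a model trained without the sensitive column can still reconstruct it from the remaining features.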

- 17. How can we enforce fairness?

- 18. Start out with our normal (biased) classifier… (Louppe, 2017)

- 19. … and add an adversarial classifier as a fairness referee (Louppe, 2017)

- 20. Warming up: train classifier to predict income

- 21. Warming up: train the adversarial classifier to detect unfairness (Louppe, 2017)

- 22. Adversarial training: iteratively train the classifier and the adversarial classifier (Louppe, 2017)
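The alternating scheme on the last three slides can be summarised as two coupled objectives. This is a sketch in the spirit of the cited Louppe (2017) setup rather than a formula taken from the slides; the symbols are assumptions: $\theta_{\text{clf}}$ and $\theta_{\text{adv}}$ are the parameters of the classifier and the adversarial classifier, $\mathcal{L}_y$ is the income prediction loss, $\mathcal{L}_z$ is the adversary's loss for predicting the sensitive attribute from the classifier's output, and $\lambda \ge 0$ trades accuracy against fairness.

```latex
\begin{aligned}
\text{adversary step:} \quad
  \theta_{\text{adv}} &\leftarrow \arg\min_{\theta_{\text{adv}}}
    \; \mathcal{L}_z(\theta_{\text{clf}}, \theta_{\text{adv}}) \\
\text{classifier step:} \quad
  \theta_{\text{clf}} &\leftarrow \arg\min_{\theta_{\text{clf}}}
    \; \bigl[ \mathcal{L}_y(\theta_{\text{clf}})
      - \lambda \, \mathcal{L}_z(\theta_{\text{clf}}, \theta_{\text{adv}}) \bigr]
\end{aligned}
```

The classifier is rewarded for predicting income well and for making the adversary's job hard; with $\lambda = 0$ the scheme reduces to ordinary training.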

- 23. After enough training rounds the classifier gives fair income predictions! https://blog.godatadriven.com/fairness-in-ml

- 24. Putting in the work

- 25. No single mathematical formulation of fairness: there are many (conflicting) measures. http://www.ece.ubc.ca/~mjulia/publications/Fairness_Definitions_Explained_2018.pdf
  - Demographic parity: decision uncorrelated with sensitive attribute
  - Equality of opportunity: outcome probability is the same for different groups among individuals with a positive true label
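The two measures on this slide can disagree on the same predictions. The sketch below is a hypothetical illustration with made-up data: the function names are assumptions, labels and the sensitive attribute are assumed to be 0/1 lists, and equality of opportunity is read in its common form as equal true positive rates across groups.

```python
def rate(values):
    """Mean of a list of 0/1 values."""
    if not values:
        raise ValueError("empty group")
    return sum(values) / len(values)


def demographic_parity_gap(y_pred, z):
    """Absolute difference in positive prediction rates between the two groups."""
    group1 = [p for p, s in zip(y_pred, z) if s == 1]
    group0 = [p for p, s in zip(y_pred, z) if s == 0]
    return abs(rate(group1) - rate(group0))


def equal_opportunity_gap(y_true, y_pred, z):
    """Absolute difference in true positive rates between the two groups."""
    pos1 = [p for t, p, s in zip(y_true, y_pred, z) if t == 1 and s == 1]
    pos0 = [p for t, p, s in zip(y_true, y_pred, z) if t == 1 and s == 0]
    return abs(rate(pos1) - rate(pos0))


# Toy data: the groups have different base rates and the classifier is perfect.
z = [1, 1, 1, 1, 0, 0, 0, 0]
y_true = [1, 1, 1, 0, 1, 0, 0, 0]
y_pred = list(y_true)

dp_gap = demographic_parity_gap(y_pred, z)
eo_gap = equal_opportunity_gap(y_true, y_pred, z)
print(f"demographic parity gap: {dp_gap}, equal opportunity gap: {eo_gap}")
```

Here a perfectly accurate classifier satisfies equality of opportunity but violates demographic parity, because the groups differ in how often the true label is positive. Which gap matters depends on the application.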

- 26. Many ML fairness approaches https://dzone.com/articles/machine-learning-models-bias-mitigation-strategies

- 27. Fairness should be part of your product process and larger strategy. https://www.slideshare.net/KrishnaramKenthapadi/fairnessaware-machine-learning-practical-challenges-and-lessons-learned-www-2019-tutorial
  - Identify product goals: What? For whom?
  - Get the right people in the room
  - Identify stakeholders: Who might be harmed? How?
  - Select a fairness approach: How to measure? What interaction?
  - Analyze and evaluate your system: What decisions are made?
  - Mitigate issues: Intervention needed?
  - Monitor continuously and have escalation plans
  - Auditing and transparency: Who certifies your system?

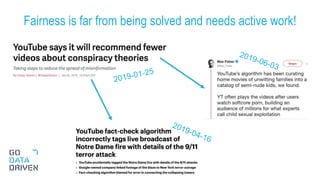

- 28. Fairness is far from being solved and needs active work!

- 29. Even big companies can’t always practice what they preach. Artificial Intelligence at Google: Our Principles. Objectives for AI applications (https://ai.google/principles/):
  1. Be socially beneficial.
  2. Avoid creating or reinforcing unfair bias.
  3. Be built and tested for safety.
  4. Be accountable to people.
  5. Incorporate privacy design principles.
  6. Uphold high standards of scientific excellence.
  7. Be made available for uses that accord with these principles.

- 30. Data Scientists have to put in the work to not end up in ML hell. “The gates of hell are open night and day; Smooth the descent, and easy is the way: But to return, and view the cheerful skies, In this the task and mighty labor lies.” The Works of Virgil (John Dryden)

- 31. Where to go from here?
  - An ethics checklist for data scientists: http://deon.drivendata.org/
  - Tutorial on fairness for products: sites.google.com/view/wsdm19-fairness-tutorial
  - Community concerned with fairness in ML: www.fatml.org
  - Our blogs: blog.godatadriven.com/fairness-in-ml and blog.godatadriven.com/fairness-in-pytorch

Editor's Notes

- #2: Fairness, privacy, transparency, explainability

- #3: An earlier version of ‘The road to hell is paved with good intentions’

- #21: https://www.slon.pics/shop/robot-referee-showing-the-red-card-3d-illustration-isolated-contains-clipping-path/

- #31: An earlier version of ‘The road to hell is paved with good intentions’