AML_030607.ppt

- 1. Software Packages & Datasets MLC++ Machine learning library in C++ http:// www.sgi.com/tech/mlc / WEKA http:// www.cs.waikato.ac.nz/ml/weka / Stalib Data, software and news from the statistics community http:// lib.stat.cmu.edu GALIB MIT GALib in C++ http:// lancet.mit.edu/ga Delve Data for Evaluating Learning in Valid Experiments http:// www.cs.utoronto.ca/~delve UCI Machine Learning Data Repository UC Irvine http:// www.ics.uci.edu/~mlearn/MLRepository.html UCI KDD Archive http:// kdd.ics.uci.edu/summary.data.application.html

- 2. Major conferences in ML ICML (International Conference on Machine Learning) ECML (European Conference on Machine Learning) UAI (Uncertainty in Artificial Intelligence) NIPS (Neural Information Processing Systems) COLT (Computational Learning Theory) IJCAI (International Joint Conference on Artificial Intelligence) MLSS (Machine Learning Summer School)

- 3. What is Learning All about? Get knowledge of by study, experience, or be taught Become aware by information or from observation Commit to memory Be informed of or receive instruction

- 4. A Possible Definition of Learning Things learn when they change their behavior in a way that makes them perform better in the future. Have your shoes learned the shape of your foot ? In learning the purpose is the learner’s, whereas in training it is the teacher’s.

- 5. Learning & Adaptation Machine Learning: 機器學習 ? Machine Automatic Learning Performance is improved “ A learning machine, broadly defined is any device whose actions are influenced by past experiences . ” (Nilsson 1965) “ Any change in a system that allows it to perform better the second time on repetition of the same task or on another task drawn from the same population .” (Simon 1983) “ An improvement in information processing ability that results from information processing activity.” (Tanimoto 1990)

- 6. Applications of ML Learning to recognize spoken words SPHINX (Lee 1989) Learning to drive an autonomous vehicle ALVINN (Pomerleau 1989) Taxi driver vs. Pilot Learning to pick patterns of terrorist action Learning to classify celestial objects (Fayyad et al 1995) Learning to play chess Learning to play go game (Shih, 1989) Learning to play world-class backgammon (TD-GAMMON, Tesauro 1992) Information Security: Intrusion detection system (normal vs. abnormal) Bioinformation

- 7. Prediction is the Key in ML We make predictions all the time but rarely investigate the processes underlying our predictions. In carrying out scientific research we are also governed by how theories are evaluated . To automate the process of making predictions we need to understand in addition how we search and refine “theories”

- 8. Types of learning problems A rough (and somewhat outdated) classification of learning problems: Supervised learning , where we get a set of training inputs and outputs classification, regression Unsupervised learning , where we are interested in capturing inherent organization in the data clustering, density estimation Semi-supervised learning Reinforcement learning , where we only get feedback in the form of how well we are doing (not what we should be doing) planning

- 9. Issues in Machine Learning What algorithms can approximate functions well and when? How does the number of training examples influence accuracy? How does the complexity of hypothesis representation impact it? How does noisy data influence accuracy? What are the theoretical limits of learnability?

- 10. Learning a Class from Examples: Inductive ( 歸納 ) Suppose we want to learn a class C Example: “sports car” Given a collection of cars, have people label them as sports car (positive example) or non-sports car (negative example) Task: find a description (rule) that is shared by all of the positive examples and none of the negative examples Once we have this definition for C , we can predict – given a new unlabeled car , predict whether or not it is a sports car describe/compress – understand what people expect in a car

- 11. Choosing an Input Representation Suppose that of all the features describing cars, we choose price and engine power. Choosing just two features makes things simpler allows us to ignore irrelevant attributes Let x 1 represent the price (in USD) x 2 represent the engine volume (in cm 3 ) Then each car is represented and its label y denotes its type each example is represented by the pair ( x , y ) and a training set containing N examples is represented by X y = { 1 if x is a positive example 0 if x is a negative example

- 12. Plotting the Training Data x 1 : price x 2 : engine power + – – + + – + + – – – – – – –

- 13. Hypothesis Class x 1 x 2 + – – + + – + + – – – – – – – suppose that we think that for a car to be a sports car, its price and its engine power should be in a certain range: ( p 1 ≤ price ≤ p 2 ) AND ( e 1 ≤ engine ≤ e 2 ) p 2 p 1 e 1 e 2

- 14. Concept Class x 1 x 2 + – – + + – + + – – – – – – – suppose that the actual class is C task: find h H that is consistent with X C no training errors false negatives p 2 p 1 e 1 e 2 h false positives

- 15. Choosing a Hypothesis Empirical Error: proportion of training instances where predictions of h do not match the training set Each ( p 1 , p 2 , e 1 , e 2 ) defines a hypothesis h H We need to find the best one…

- 16. Hypothesis Choice x 1 x 2 – – – – – – – – – – Most specific? Most general? S G p 2 ’ p 1 ’ e 2 ’ e 1 ’ Most general hypothesis G p 1 Most specific hypothesis S e 1 e 2 p 2 + + + + +

- 17. Consistent Hypothesis x 1 x 2 – – – – – – – – – – G and S define the boundaries of the Version Space. The set of hypotheses more general than S and more specific than G forms the Version Space , the set of consistent hypotheses + + + + + Any h between S and G

- 18. Now what? Using the average of S and G or just rejecting it to experts? x 1 x 2 – – – – – – – – – – x’ ? How do we make prediction for a new x’ ? x’ ? x’ ? + + + + +

- 19. Issues Hypothesis space must be flexible enough to represent concept Making sure that the gap of S and G sets do not get too large Assumes no noise ! inconsistently labeled examples will cause the version space to collapse there have been extensions to handle this…

- 20. Goal of Learning Algorithms The early learning algorithms were designed to find such an accurate fit to the data. The ability of a classifier to correctly classify data not in the training set is known as its generalization. Bible code? 1994 Taipei Mayor election? Predict the real future NOT fitting the data in your hand or predict the desired results

- 21. Binary Classification Problem Learn a Classifier from the Training Set Given a training dataset Main goal : Predict the unseen class label for new data Find a function by learning from data (I) (II) Estimate the posteriori probability of label

- 22. Binary Classification Problem Linearly Separable Case Malignant Benign A- A+

- 23. Probably Approximately Correct Learning pac Model according to a fixed but unknown distribution We call such measure risk functional and denote it as Key assumption: Training and testing data are generated i.i.d. Evaluate the “quality” of a hypothesis (classifier) should take the unknown distribution error” made by the ) ( i.e. “ average error” or “expected into account

- 24. Generalization Error of pac Model Let be a set of training examples chosen i.i.d. according to Treat the generalization error as a r.v. depending on the random selection of Find a bound of the trail of the distribution of in the form r.v. is a function of and ,where is the confidence level of the error bound which is given by learner

- 25. Probably Approximately Correct We assert: or The error made by the hypothesis then the error bound will be less that is not depend on the unknown distribution

- 26. PAC vs. 民意調查 成功樣本為 1265 個,以單純隨機抽樣方式( SRS )估計抽樣誤差,在 95 %的信心水準下,其最大誤差應不超過 ±2.76 %。

- 27. Find the Hypothesis with Minimum Expected Risk? The ideal hypothesis should has the smallest expected risk Unrealistic !!! Let the training examples chosen i.i.d. according to with the probability density be The expected misclassification error made by is

- 28. Empirical Risk Minimization (ERM) and are not needed) ( Only focusing on empirical risk will cause overfitting Find the hypothesis with the smallest empirical risk Replace the expected risk over by an average over the training example The empirical risk :

- 29. VC Confidence (The Bound between ) C. J. C. Burges, A tutorial on support vector machines for pattern recognition , Data Mining and Knowledge Discovery 2 (2) (1998), p.121-167 The following inequality will be held with probability

- 30. Why We Maximize the Margin? (Based on Statistical Learning Theory) The Structural Risk Minimization (SRM): The expected risk will be less than or equal to empirical risk (training error)+ VC (error) bound

- 31. Capacity (Complexity) of Hypothesis Space :VC-dimension A given training set is shattered by if for every labeling of with this labeling if and only consistent Three (linear independent) points shattered by a hyperplanes in

- 32. Shattering Points with Hyperplanes in Can you always shatter three points with a line in ? Theorem: Consider some set of m points in . Choose a point as origin. Then the m points can be shattered by oriented hyperplanes if and only if the position vectors of the rest points are linearly independent .

- 33. Definition of VC-dimension The Vapnik-Chervonenkis dimension, , of hypothesis space defined over the input space is the size of the (existent) largest finite subset shattered by of (A Capacity Measure of Hypothesis Space ) If arbitrary large finite set of can be shattered by , then Let then

- 34. Example I x R , H = interval on line There exists two points that can be shattered No set of three points can be shattered VC( H ) = 2 An example of three points (and a labeling) that cannot be shattered + – +

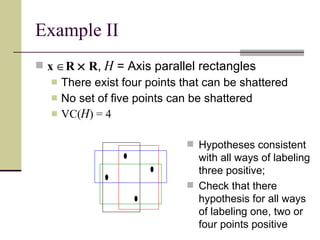

- 35. Example II x R R , H = Axis parallel rectangles There exist four points that can be shattered No set of five points can be shattered VC( H ) = 4 Hypotheses consistent with all ways of labeling three positive; Check that there hypothesis for all ways of labeling one, two or four points positive

- 36. Example III A lookup table has infinite VC dimension! A hypothesis space with low VC dimension no generalization some generalization no error in training some error in training

- 37. Comments VC dimension is distribution-free ; it is independent of the probability distribution from which the instances are drawn In this sense, it gives us a worse case complexity (pessimistic) In real life, the world is smoothly changing, instances close by most of the time have the same labels, no worry about all possible labelings However, this is still useful for providing bounds, such as the sample complexity of a hypothesis class. In general, we will see that there is a connection between the VC dimension (which we would like to minimize) and the error on the training set (empirical risk)

- 38. Summary: Learning Theory The complexity of a hypothesis space is measured by the VC-dimension There is a tradeoff between , and N

- 39. Noise Noise: unwanted anomaly in the data Another reason we can’t always have a perfect hypothesis error in sensor readings for input teacher noise: error in labeling the data additional attributes which we have not taken into account. These are called hidden or latent because they are unobserved.

- 40. When there is noise… There may not have a simple boundary between the positive and negative instances Zero ( training ) misclassification error may not be possible

- 41. Something about Simple Models Easier to classify a new instance Easier to explain Fewer parameters, means it is easier to train. The sample complexity is lower . Lower variance. A small change in the training samples will not result in a wildly different hypothesis High bias. A simple model makes strong assumptions about the domain; great if we’re right, a disaster if we are wrong. optimality ?: min (variance + bias) May have better generalization performance, especially if there is noise. Occam’s razor: simpler explanations are more plausible

- 42. Learning Multiple Classes K -class classification K two-class problems (one against all) could introduce doubt could have unbalance data

- 43. Regression Supervised learning where the output is not a classification (e.g. 0/1, true/false, yes/no), but the output is a real number. X =

- 44. Regression Suppose that the true function is y t = f ( x t ) + where is random noise Suppose that we learn g ( x ) as our model. The empirical error on the training set is Because y t and g ( x t ) are numeric, it makes sense for L to be the distance between them. Common distance measures: mean squared error absolute value of difference etc.

- 45. Example: Linear Regression Assume g ( x ) is linear and we want to minimize the mean squared error We can solve this for the w i that minimizes the error

- 46. Model Selection Learning problem is ill-posed Need inductive bias assuming a hypothesis class example: sports car problem, assuming most specific rectangle but different hypothesis classes will have different capacities higher capacity, better able to fit the data but goal is not to fit the data, it’s to generalize how do we measure? cross-validation : Split data into training and validation set; use training set to find hypothesis and validation set to test generalization. With enough data, the hypothesis that is most accurate on validation set is the best. choosing the right bias: model selection

- 47. Underfitting and Overfitting Matching the complexity of the hypothesis with the complexity of the target function if the hypothesis is less complex than the function, we have underfitting . In this case, if we increase the complexity of the model, we will reduce both training error and validation error. if the hypothesis is too complex, we may have overfitting . In this case, the validation error may go up even the training error goes down. For example, we fit the noise, rather than the target function.

- 48. Tradeoffs (Dietterich 2003) complexity/capacity of the hypothesis amount of training data generalization error on new examples

- 49. Take Home Remarks What is the hardest part of machine learning? selecting attributes (representation) deciding the hypothesis (assumption) space: big one or small one, that’s the question! Training is relatively easy DT, NN, SVM, (KNN), … The usual way of learning in real life not supervised, not unsupervised, but semi-supervised, even with some taste of reinforcement learning

- 50. Take Home Remarks Learning == Search in Hypothesis Space Inductive Learning Hypothesis: Generalization is possible . If a machine performs well on most training data AND it is not too complex , it will probably do well on similar test data. Amazing fact: in many cases this can actually be proven. In other words, if our hypothesis space is not too complicated/flexible (has a low capacity in some formal sense), and if our training set is large enough then we can bound the probability of performing much worse on test data than on training data. The above statement is carefully formalized in 40 years of research in the area of learning theory.

- 51. VS on another Example H = conjunctive rules S = x 1 ( x 3 ) ( x 4 ) G = x 1 , x 3 , x 4 1 0 0 x 4 0 1 1 0 3 1 0 0 1 2 1 0 1 1 1 y x 3 x 2 x 1 example #

- 52. Probably Approximately Correct Learning We allow our algorithms to fail with probability . Finding an approximately correct hypothesis with high probability Imagine drawing a sample of N examples, running the learning algorithm, and obtaining h . Sometimes the sample will be unrepresentative , so we want to insist that 1 – the time, the hypothesis will have error less than . For example, we might want to obtain a 99% accurate hypothesis 90% of the time.