Chapter 2: Lexical Analyser and Its Properties (Lecture Notes)

- 2. Chapter Two. This chapter covers: the role of the lexical analyser; token specification and recognition; NFA to DFA conversion.

- 3. Lexical Analyzer. The lexical analyzer reads the source program character by character to produce tokens. Normally, a lexical analyzer does not return the whole list of tokens in one shot; it returns the next token whenever the parser asks for one.

- 4. Token. A token represents a set of strings described by a pattern. For example, the token identifier represents the set of strings that start with a letter and continue with letters and digits. Lexeme: a sequence of characters in the source program that is matched by the pattern for a token. Tokens: identifier, number, addop, delimiter, … Since a token can represent more than one lexeme, additional information must be kept for the specific lexeme. This additional information is called the attribute of the token. For simplicity, a token may have a single attribute that holds the required information for that token. For identifiers, this attribute is a pointer to the symbol table, and the symbol table holds the actual attributes for that token.

- 5. Token (Cont.) Some attributes: <id,attr>, where attr is a pointer to the symbol table; <assgop,_>, where no attribute is needed (if there is only one assignment operator); <num,val>, where val is the actual value of the number. A token type and its attribute together uniquely identify a lexeme. Regular expressions are widely used to specify patterns.
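A minimal Python sketch of the <token, attribute> pairs above. It assumes a hypothetical `SymbolTable` class in which an identifier's "pointer" is simply the index of its entry; a real compiler's symbol table stores much more per entry.

```python
from dataclasses import dataclass
from typing import Optional


@dataclass(frozen=True)
class Token:
    name: str                    # token name, e.g. "id", "assgop", "num"
    attr: Optional[int] = None   # attribute value; None when no attribute is needed


class SymbolTable:
    """Hypothetical minimal symbol table; an entry's index plays the role of the pointer."""

    def __init__(self) -> None:
        self.entries: list = []   # per-identifier attributes (name, type, ...)
        self.index: dict = {}     # lexeme -> entry index

    def install(self, lexeme: str) -> int:
        """Return the entry index for lexeme, creating the entry on first sight."""
        if lexeme not in self.index:
            self.index[lexeme] = len(self.entries)
            self.entries.append({"name": lexeme})
        return self.index[lexeme]


symtab = SymbolTable()
tokens = [
    Token("id", symtab.install("x")),   # <id, pointer to the entry for x>
    Token("assgop"),                    # <assgop, _>
    Token("num", 1),                    # <num, 1>: the value itself is the attribute
    Token("id", symtab.install("x")),   # the same lexeme reuses the same entry
]
```

Both occurrences of x carry the same attribute, so later phases can tell id(x) apart from another identifier without re-reading the characters.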

- 6. Scanner. A scanner groups input characters into tokens. For example, if the input is x = x*(b+1); then the scanner generates the following sequence of tokens: id(x), =, id(x), *, (, id(b), +, num(1), ), ; where id(x) indicates the identifier with name x (a program variable in this case) and num(1) indicates the integer 1. Each time the parser needs a token, it sends a request to the scanner. The scanner then reads as many characters from the input stream as necessary to construct a single token.
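The on-demand behaviour can be sketched with a Python generator: each `next()` call plays the role of the parser's request for one token. The token specification (`TOKEN_SPEC`) is hypothetical and covers only the characters of this example.

```python
import re
from typing import Iterator, Tuple

# Hypothetical token specification, just enough for this example.
TOKEN_SPEC = [
    ("num", r"[0-9]+"),
    ("id", r"[A-Za-z][A-Za-z0-9]*"),
    ("op", r"[=*+();]"),
    ("skip", r"[ \t\n]+"),
]
MASTER = re.compile("|".join(f"(?P<{name}>{pattern})" for name, pattern in TOKEN_SPEC))


def scanner(text: str) -> Iterator[Tuple[str, str]]:
    """Yield one (token, lexeme) pair each time the parser asks for a token."""
    pos = 0
    while pos < len(text):
        m = MASTER.match(text, pos)
        if m is None:
            raise SyntaxError(f"illegal character {text[pos]!r} at position {pos}")
        pos = m.end()
        kind = m.lastgroup
        if kind == "skip":
            continue                          # whitespace yields no token
        if kind == "op":
            yield (m.group(), m.group())      # operators stand for themselves
        else:
            yield (kind, m.group())           # ("id", "x") or ("num", "1")


tokens = scanner("x = x*(b+1);")
print(next(tokens))   # the parser's first request: ('id', 'x')
```

Because the generator suspends after every `yield`, the scanner does no work until the parser asks for the next token, which mirrors the "suspended scanner" described on the next slide.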

- 7. Scanner (Cont.) The scanner may report an error during scanning. Otherwise, when a single token is formed, the scanner is suspended (temporarily stops running) and returns the token to the parser. The parser repeatedly calls the scanner until all tokens in the input stream have been read or an error (such as a syntax error) is detected. Some tokens require extra information. For example, an identifier is a token (so it is represented by some token code), but it is also associated with a string that holds the identifier's name.

- 8. Scanner (Cont.) For example, the token id(x) is associated with the string "x". Similarly, the token num(1) is associated with the number 1. Tokens are specified by patterns, called regular expressions. For example, the regular expression [a-z][a-zA-Z0-9]* recognises all identifiers that start with a lower-case letter followed by zero or more letters or digits. A typical scanner: recognises the keywords of the language (these are the reserved words that have a special meaning in the language, such as the word class in Java); recognises and processes special directives (such as the #include "file" directive in C);
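A small Python check of the identifier pattern from this slide. Since keywords such as class also fit that pattern, one common approach (assumed here) is to match the pattern first and then look the lexeme up in a reserved-word table; the `KEYWORDS` set below is an illustrative subset, not a full keyword list.

```python
import re

IDENTIFIER = re.compile(r"[a-z][a-zA-Z0-9]*")         # pattern from the slide
KEYWORDS = {"class", "if", "else", "while", "return"}  # illustrative subset only


def classify(lexeme: str) -> str:
    """Return "keyword", "identifier", or "no match" for a single lexeme."""
    if IDENTIFIER.fullmatch(lexeme) is None:
        return "no match"
    # Keywords also fit the identifier pattern, so consult the reserved-word table.
    return "keyword" if lexeme in KEYWORDS else "identifier"


for word in ["x", "count2", "class", "Count", "2x"]:
    print(word, "->", classify(word))
```

`fullmatch` is used so that the whole lexeme, not just a prefix, must fit the pattern; "Count" fails because its first letter is upper-case.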

- 9. Scanner (Cont.) recognises special characters, such as parentheses ( and ), or groups of special characters, such as := (assignment in Pascal-like languages) and ==; recognises identifiers, integers, reals, decimals, strings, etc.; ignores whitespace and comments. Efficient scanners can be built using regular expressions and finite automata. There are automated tools called scanner generators, such as flex (Fast Lexical Analyzer Generator) for C and JLex for Java, which construct a fast scanner automatically from a specification (a set of regular expressions).
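A Python sketch of two of these duties: skipping whitespace and comments, and recognising groups of special characters such as := and == by trying the longer operator first. The operator set and the C-style comment syntax are assumptions made for the example.

```python
import re

# Try longer operators first so that ":=" beats ":" and "==" beats "=" (longest match).
OPERATORS = [":=", "==", "(", ")", ":", "="]   # hypothetical operator set
# Whitespace plus C-style // and /* */ comments (the comment syntax is an assumption).
SKIP = re.compile(r"\s+|//[^\n]*|/\*.*?\*/", re.DOTALL)


def scan_operators(text: str) -> list:
    tokens, pos = [], 0
    while pos < len(text):
        skipped = SKIP.match(text, pos)
        if skipped:
            pos = skipped.end()            # whitespace and comments produce no token
            continue
        for op in OPERATORS:
            if text.startswith(op, pos):
                tokens.append(op)
                pos += len(op)
                break
        else:                              # no operator matched at this position
            raise SyntaxError(f"unexpected character {text[pos]!r}")
    return tokens


print(scan_operators("( := == /* note */ : = // end"))   # ['(', ':=', '==', ':', '=']
```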

- 10. Role of Lexical Analyser. The lexical analyzer performs the following tasks: removes whitespace and comments from the source program; correlates error messages with the source program (for example, by keeping track of line numbers); reads input characters from the source program; helps to enter identified tokens into the symbol table. Example: symbol table for the code `int add(int a, int b) { int sum = 0; sum = a + b; return sum; }`, which defines a global function.

- 11. Lexical Analysis. In lexical analysis, we read the source program character by character and group the characters into tokens. A token is the smallest unit recognisable by the compiler. Generally, four classes of tokens are recognised: 1. Keywords 2. Identifiers 3. Constants 4. Delimiters
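As an illustration, the Python sketch below sorts the lexemes of the add function from the previous slide into the four classes. Two assumptions are labelled in the code: the keyword set contains only the keywords that occur in this example, and operators such as = and + are counted as delimiters because the four-class scheme has no separate operator class.

```python
import re

KEYWORDS = {"int", "return"}           # only the keywords occurring in this example
# Assumption: operators such as = and + are counted as delimiters here,
# since the four-class scheme above has no separate operator class.
DELIMITERS = set("(){},;=+")


def classify_tokens(code: str) -> dict:
    code = re.sub(r"//[^\n]*", "", code)     # comments produce no tokens
    classes = {"keyword": [], "identifier": [], "constant": [], "delimiter": []}
    for lexeme in re.findall(r"[A-Za-z_]\w*|\d+|\S", code):
        if lexeme in KEYWORDS:
            kind = "keyword"
        elif lexeme[0].isalpha() or lexeme[0] == "_":
            kind = "identifier"
        elif lexeme.isdigit():
            kind = "constant"
        elif lexeme in DELIMITERS:
            kind = "delimiter"
        else:
            raise ValueError(f"unexpected lexeme {lexeme!r}")
        if lexeme not in classes[kind]:
            classes[kind].append(lexeme)     # keep each distinct lexeme once
    return classes


SOURCE = """//Define a global function
int add(int a, int b) { int sum =0; sum =a+b; return sum; }"""
print(classify_tokens(SOURCE))
```

The identifiers found here (add, a, b, sum) are exactly the names a scanner would enter into the symbol table for this function.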

- 12. Construction of Lexical Analyser. There are two general ways to construct a lexical analyser: hand implementation and automatic generation of the lexical analyser. Hand implementation: there are two hand-implementation approaches, the input buffer approach and the transition diagram approach. Input buffering: the lexical analyser scans the characters of the source program one at a time to discover tokens.

- 13. Cont. Often, many characters beyond the next token may have to be examined before the next token itself can be determined. For this and other reasons, it is desirable for the lexical analyser to read its input from an input buffer.
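A simplified Python sketch of an input buffer with a lexeme-begin pointer and a forward pointer, showing why lookahead is needed: to tell "<" from "<=", the scanner must read one character past "<" and give it back if it is not "=". Keeping the whole text in memory is a simplifying assumption (a real scanner refills fixed-size blocks from the file), and `scan_less_than` is a hypothetical helper.

```python
class InputBuffer:
    """Sketch of an input buffer with the two pointers lexemeBegin and forward.
    Assumption: the whole text is in memory; a real scanner refills fixed-size
    blocks from the file and uses sentinels to detect their ends."""

    EOF = "\0"   # returned past the end of input (assumed absent from the source)

    def __init__(self, text: str) -> None:
        self.text = text
        self.lexeme_begin = 0   # first character of the current lexeme
        self.forward = 0        # next character to examine

    def next_char(self) -> str:
        ch = self.text[self.forward] if self.forward < len(self.text) else self.EOF
        self.forward += 1
        return ch

    def retract(self) -> None:
        self.forward -= 1       # give back one character read beyond the lexeme

    def accept(self) -> str:
        lexeme = self.text[self.lexeme_begin:self.forward]
        self.lexeme_begin = self.forward
        return lexeme


def scan_less_than(buf: InputBuffer) -> str:
    """Hypothetical helper: read "<" or "<=", looking one character ahead."""
    if buf.next_char() != "<":
        raise ValueError("expected '<'")
    if buf.next_char() != "=":
        buf.retract()           # the lookahead belongs to the next token
    return buf.accept()


buf = InputBuffer("<=x")
print(scan_less_than(buf), buf.next_char())   # <= x
```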

- 14. Operations on Languages. Concatenation: joining each string of one language with each string of another: $L_1L_2 = \{ s_1s_2 \mid s_1 \in L_1 \text{ and } s_2 \in L_2 \}$. Union: combining the strings of two or more languages: $L_1 \cup L_2 = \{ s \mid s \in L_1 \text{ or } s \in L_2 \}$. Exponentiation: repeated concatenation of the base: $L^0 = \{\varepsilon\}$, $L^1 = L$, $L^2 = LL$, and in general $L^i = L^{i-1}L$. Kleene closure: the infinite set of all possible strings, including the empty string ε: $L^* = \bigcup_{i=0}^{\infty} L^i$. Positive closure: the infinite set of all possible strings, excluding ε (unless ε ∈ L): $L^+ = \bigcup_{i=1}^{\infty} L^i$.

- 15. Example. $L_1 = \{a,b,c,d\}$, $L_2 = \{1,2\}$. $L_1L_2 = \{a1,a2,b1,b2,c1,c2,d1,d2\}$. $L_1 \cup L_2 = \{a,b,c,d,1,2\}$. $L_1^3$ = all strings of length three over {a,b,c,d}. $L_1^*$ (zero or more) = all strings over a, b, c, d, including the empty string ε; for example, a* generates {ε, a, aa, aaa, …}. $L_1^+$ (one or more) does not include the empty string ε; for example, a+ generates {a, aa, aaa, …}.
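The operations can be checked on these finite languages with Python sets, writing ε as the empty string "". Since L* and L+ are infinite, the sketch only builds them up to a chosen length bound n (an assumption of the example).

```python
def concat(L1: set, L2: set) -> set:
    """L1L2 = { s1s2 | s1 in L1 and s2 in L2 }"""
    return {s1 + s2 for s1 in L1 for s2 in L2}


def power(L: set, i: int) -> set:
    """L^i, with L^0 = {ε}; ε is represented by the empty string ""."""
    result = {""}
    for _ in range(i):
        result = concat(result, L)
    return result


def kleene_up_to(L: set, n: int) -> set:
    """Finite part of L*: L^0 ∪ L^1 ∪ ... ∪ L^n (L* itself is infinite)."""
    return set().union(*(power(L, i) for i in range(n + 1)))


def positive_up_to(L: set, n: int) -> set:
    """Finite part of L+: L^1 ∪ ... ∪ L^n."""
    return set().union(*(power(L, i) for i in range(1, n + 1)))


L1, L2 = {"a", "b", "c", "d"}, {"1", "2"}
print(sorted(concat(L1, L2)))           # the 8 strings a1, a2, ..., d2
print(sorted(L1 | L2))                  # union
print(len(power(L1, 3)))                # 4 * 4 * 4 = 64 strings of length three
print(sorted(kleene_up_to({"a"}, 3)))   # ['', 'a', 'aa', 'aaa']
```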

- 16. Regular Expressions. We use regular expressions (REs) to describe the tokens of a programming language. Regular expressions are a very convenient way of representing (possibly infinite) sets of strings, called regular sets. For example, the RE (a|b)*aa represents the infinite set {"aa", "aaa", "baa", "abaa", ...}, which is the set of all strings over the characters a and b that end in aa.
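Python's `re` module uses the same |, * and grouping notation for this RE, so membership in the regular set can be tested directly. `fullmatch` is used because the whole string, not just a prefix, must belong to the set.

```python
import re

ENDS_IN_AA = re.compile(r"(a|b)*aa")


def in_language(s: str) -> bool:
    """True if the whole string s is in the set denoted by (a|b)*aa."""
    return ENDS_IN_AA.fullmatch(s) is not None


examples = ["aa", "aaa", "baa", "abaa", "ab", "a", ""]
print([s for s in examples if in_language(s)])   # ['aa', 'aaa', 'baa', 'abaa']
```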

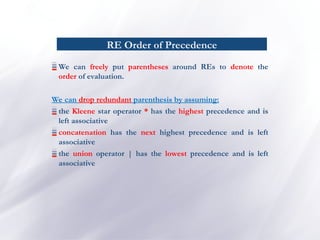

- 17. RE Order of Precedence. We can freely put parentheses around REs to denote the order of evaluation. We can drop redundant parentheses by assuming that: the Kleene star operator * has the highest precedence and is left associative; concatenation has the next highest precedence and is left associative; the union operator | has the lowest precedence and is left associative.
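Python's `re` module follows the same precedence for *, concatenation and |, so a few membership tests show how an unparenthesised RE is read. The helper `matches` is hypothetical and exists only for this illustration.

```python
import re


def matches(rx: str, s: str) -> bool:
    """True if the whole string s is in the language of rx."""
    return re.fullmatch(rx, s) is not None


# Without parentheses, a|bc* is read as a|(b(c*)):
# star binds tightest, then concatenation, then |.
assert matches(r"a|bc*", "a")
assert matches(r"a|bc*", "bccc")
assert not matches(r"a|bc*", "acc")     # that would need (a|b)c*
assert matches(r"(a|b)c*", "acc")
assert not matches(r"a|bc*", "bcbc")    # that would need a|(bc)*
assert matches(r"a|(bc)*", "bcbc")
print("precedence checks passed")
```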

- 18. Tokens/Patterns/Lexemes/Attributes. A token is a symbolic name for a unit of information in the source program (for example, identifier or keyword). Lexeme: a sequence of characters in the source program that is matched by the pattern for a token. A pattern is a rule describing the set of lexemes that can represent a particular token in source programs; in other words, the set of strings in the input for which the same token is produced as output. A pattern is usually written as a regular expression or a regular definition.

- 19. Cont. An attribute of a token is usually a pointer to the symbol-table entry that gives additional information about the token, such as its type, value, or line number. Attributes are used to distinguish different lexemes that belong to the same token. Example:

  | Lexeme | Token | Pattern |
  |---|---|---|
  | int | keyword | int |
  | if | keyword | if |
  | <, <=, =, >, >= | relational operator | < or <= or = or > or >= |
  | newval | identifier | letter followed by letters and digits |

- 20. Regular Expression. Writing regular expressions for some languages can be difficult, because the expressions can become quite complex. In those cases, we may use regular definitions. A regular definition is a sequence of definitions of the form d1 → r1, d2 → r2, …, dn → rn, where each di is a new name and each ri is a regular expression over the alphabet and the previously defined names. For example: digit → [0-9] ---- any of the numerals 0-9. letter → [A-Za-z] ---- the upper- and lower-case letters. id → letter ( letter | digit )* ---- a letter followed by any number of letters or digits. relop → < | > | <= | >= | = | <>
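A Python sketch of the regular definitions above, reading id as letter ( letter | digit )*: each name is an ordinary string built only from names defined before it. The variable is called `ident` to avoid shadowing Python's built-in `id`, and `match_relop` is a hypothetical helper. Because Python tries the alternatives of | from left to right, the two-character operators are listed first so that "<=" is not read as "<".

```python
import re

# Each name is built only from names defined before it, as in a regular definition.
digit = r"[0-9]"
letter = r"[A-Za-z]"
ident = rf"{letter}({letter}|{digit})*"
# Python's re tries alternatives left to right, so the two-character operators come first.
relop = r"<=|>=|<>|<|>|="


def match_relop(s: str):
    """Return the relational operator at the start of s, or None."""
    m = re.match(relop, s)
    return m.group() if m else None


print(ident)                                   # [A-Za-z]([A-Za-z]|[0-9])*
print(bool(re.fullmatch(ident, "newval")), bool(re.fullmatch(ident, "9lives")))   # True False
print(match_relop("<=b"), match_relop("<>"), match_relop("<b"))                   # <= <> <
```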

- 21. Lexical Analyser generator Lex There are tools that can generate lexical analyzers. Lex is a special-purpose programming language for creating programmes to process streams of input characters. An input file, which we call lex.l, is written in the Lex language and describes the lexical analyzer to be generated. The Lex compiler transforms lex.l to a C program, in a file that is always named lex.yy.c. The latter file is compiled by the C compiler into a file called a.out, as always. The C-compiler output is a working lexical analyzer that can take a stream of input characters and produce a stream of tokens.

- 22. Cont. Creating a lexical analyzer with Lex. Lex specifications: a Lex source file is separated into three sections by %% delimiters: declarations %% translation rules %% auxiliary functions. Declarations: declarations of variables, constants, and regular definitions. Translation rules: pairs of a regular expression (pattern) and an action, the code to run when that pattern matches the input stream. Auxiliary functions (optional): additional functions used by the actions.
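When several translation rules match, Lex chooses the rule that matches the longest prefix of the remaining input and, on a tie, the rule listed first. The Python sketch below only emulates that selection policy; it is not Lex itself, and the rules and token names are hypothetical.

```python
import re

# Hypothetical rules, listed in the order they would appear in the rules section.
RULES = [
    (re.compile(r"if"), lambda lexeme: ("IF", lexeme)),
    (re.compile(r"[A-Za-z][A-Za-z0-9]*"), lambda lexeme: ("ID", lexeme)),
    (re.compile(r"[0-9]+"), lambda lexeme: ("NUM", int(lexeme))),
    (re.compile(r"[ \t\n]+"), lambda lexeme: None),   # whitespace: no token
]


def lex(text: str) -> list:
    tokens, pos = [], 0
    while pos < len(text):
        best_len, best_action = 0, None
        for pattern, action in RULES:
            m = pattern.match(text, pos)
            # Longest match wins; on a tie the earlier rule is kept (strict >).
            if m and m.end() - pos > best_len:
                best_len, best_action = m.end() - pos, action
        if best_action is None:
            raise SyntaxError(f"no rule matches at position {pos}")
        token = best_action(text[pos:pos + best_len])
        if token is not None:
            tokens.append(token)
        pos += best_len
    return tokens


print(lex("if ifx 42"))   # [('IF', 'if'), ('ID', 'ifx'), ('NUM', 42)]
```

"if" matches both the first and second rule with the same length, so the earlier keyword rule wins; "ifx" is longer under the identifier rule, so it becomes an ID.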

- 23. Steps in Lex implementation: 1. Read the input language specification. 2. Construct an NFA with ε-moves (a DFA can also be built directly). 3. Convert the NFA to a DFA. 4. Optimise (minimise) the DFA. 5. Generate the transition tables and scanner code.

- 24. Finite Automata. A finite automaton can be deterministic (DFA) or non-deterministic (NFA). Both deterministic and non-deterministic finite automata recognize regular sets. Which one should we use? A deterministic automaton is a faster recognizer, but it may take more space; a non-deterministic automaton is slower, but it may take less space. Deterministic automata are widely used in lexical analyzers. First, we define regular expressions for the tokens; then we convert them into a DFA to get a lexical analyzer for our tokens.

- 25. Finite Automata (Cont.) Algorithm 1: Regular Expression → NFA → DFA (two steps: first to an NFA, then to a DFA). Algorithm 2: Regular Expression → DFA (directly convert a regular expression into a DFA).

- 26. Converting a RE to an NFA Every regular expression (RE) can be converted into an equivalent NFA. Every NFA can be converted into an equivalent DFA. The task of a scanner generator, such as JLex, is to generate the transition tables or to synthesise the scanner programme given a scanner specification (in the form of a set of REs). This is accomplished in two steps: first it converts REs into an NFA and then it converts the NFA into a DFA (Algorithm1).

- 27. Converting a RE to an NFA (Cont.) An NFA is similar to a DFA, but it also permits multiple transitions over the same character and transitions over ɛ. In the case of multiple transitions from a state over the same character, when we are at this state and read this character, we have more than one choice; the NFA succeeds if at least one of these choices succeeds. An ɛ-transition does not consume any input characters, so the NFA may jump to another state for free. Clearly, DFAs are a subset of NFAs.

- 28. Non-Deterministic Finite Automaton. A non-deterministic finite automaton (NFA) is a mathematical model that consists of: S, a set of states; Σ (sigma), a set of input symbols (the alphabet); move, a transition function; s0, a start (initial) state; F, a set of accepting (final) states. ε-transitions are allowed in NFAs; in other words, we can move from one state to another without consuming any symbol.

- 29. Non-Deterministic Finite Automaton. An NFA accepts a string x if and only if there is a path from the start state to one of the accepting states such that the edge labels along this path spell out x. NFA (Example): S = {0,1,2}, Σ = {a,b}, start state s0 = 0, set of final states F = {2}. Transition function:

  | State | a | b |
  |---|---|---|
  | 0 | {0,1} | {0} |
  | 1 | ∅ | {2} |
  | 2 | ∅ | ∅ |

  The language recognized by this NFA is (a|b)*ab.
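A Python sketch that simulates the example NFA by tracking the set of all states it could be in after each input symbol; the string is accepted if that set contains an accepting state. This NFA has no ε-transitions, so no ε-closure step is needed here.

```python
# Transition function of the example NFA; pairs that are absent map to the empty set.
MOVE = {
    (0, "a"): {0, 1},
    (0, "b"): {0},
    (1, "b"): {2},
}
START, FINAL = 0, {2}


def nfa_accepts(x: str) -> bool:
    current = {START}                      # every state the NFA could be in so far
    for ch in x:
        current = set().union(*(MOVE.get((s, ch), set()) for s in current))
    return bool(current & FINAL)           # accept if some path ends in F


for x in ["ab", "aab", "bab", "abb", "ba", ""]:
    print(repr(x), nfa_accepts(x))
```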

- 30. Transition Tables. We can also represent an NFA by a transition table, whose rows correspond to states and whose columns correspond to the input symbols and ɛ. The entry for a given state and input is the value of the transition function applied to those arguments. If the transition function has no information about a state-input pair, we put ∅ (the empty set) in the table for that pair. Example: transition table for the NFA of the RE (a|b)*abb.

- 31. Deterministic Finite Automaton (DFA). A deterministic finite automaton (DFA) is a special form of an NFA in which no state has an ε-transition, and for each state s and input symbol a there is exactly one transition out of s labelled a. A DFA represents a finite state machine that recognises an RE. The language recognized by this DFA is also (a|b)*ab.
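A table-driven DFA for (a|b)*ab in Python. The state names and meanings are chosen for this sketch and may not match the slide's figure: because each (state, symbol) pair has exactly one successor, running the DFA is a single table lookup per input character.

```python
# A DFA for (a|b)*ab; the state names are chosen for this sketch:
#   A = start (or input ends in b but not ab), B = input ends in a,
#   C = input ends in ab (accepting)
DELTA = {
    "A": {"a": "B", "b": "A"},
    "B": {"a": "B", "b": "C"},
    "C": {"a": "B", "b": "A"},
}
START, ACCEPTING = "A", {"C"}


def dfa_accepts(x: str) -> bool:
    """Table-driven DFA run; assumes x uses only the symbols a and b."""
    state = START
    for ch in x:
        state = DELTA[state][ch]   # exactly one move for each (state, symbol)
    return state in ACCEPTING


print(dfa_accepts("abab"), dfa_accepts("abb"))   # True False
```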

- 32. Converting an NFA into a DFA (Example)
  - ε-closure({0}) = {0,1,2,4,7} = S0
  - mark S0: ε-closure(move(S0,a)) = ε-closure({3,8}) = {1,2,3,4,6,7,8} = S1; ε-closure(move(S0,b)) = ε-closure({5}) = {1,2,4,5,6,7} = S2; transfunc[S0,a] = S1, transfunc[S0,b] = S2
  - mark S1: ε-closure(move(S1,a)) = ε-closure({3,8}) = {1,2,3,4,6,7,8} = S1; ε-closure(move(S1,b)) = ε-closure({5}) = {1,2,4,5,6,7} = S2; transfunc[S1,a] = S1, transfunc[S1,b] = S2
  - mark S2: ε-closure(move(S2,a)) = ε-closure({3,8}) = {1,2,3,4,6,7,8} = S1; ε-closure(move(S2,b)) = ε-closure({5}) = {1,2,4,5,6,7} = S2; transfunc[S2,a] = S1, transfunc[S2,b] = S2

- 33. Converting an NFA into a DFA (Cont.) S0 is the start state of the DFA, since 0 is a member of S0 = {0,1,2,4,7}. S1 is an accepting state of the DFA, since 8 (the accepting state of the NFA) is a member of S1 = {1,2,3,4,6,7,8}.
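A Python sketch of the subset construction that reproduces the worked example. The slide's NFA figure is not available, so the NFA below is an assumption: a Thompson-style NFA for (a|b)*a whose states 0 to 8 are chosen so that the ε-closures and moves agree with the ones computed above.

```python
# Assumption: the slide's NFA figure is not reproduced, so the NFA below is a
# Thompson-style NFA for (a|b)*a reconstructed to agree with the closures shown.
EPS = {0: {1, 7}, 1: {2, 4}, 3: {6}, 5: {6}, 6: {1, 7}}   # ε-transitions
MOVE = {(2, "a"): {3}, (4, "b"): {5}, (7, "a"): {8}}      # symbol transitions
NFA_START, NFA_FINAL, ALPHABET = 0, {8}, ("a", "b")


def eps_closure(states) -> frozenset:
    """All NFA states reachable from `states` using ε-transitions only."""
    closure, stack = set(states), list(states)
    while stack:
        s = stack.pop()
        for t in EPS.get(s, ()):
            if t not in closure:
                closure.add(t)
                stack.append(t)
    return frozenset(closure)


def move(states, symbol) -> set:
    """NFA states reachable from `states` on one `symbol` transition."""
    return set().union(*(MOVE.get((s, symbol), set()) for s in states))


def subset_construction():
    start = eps_closure({NFA_START})
    dstates, unmarked, transfunc = [start], [start], {}
    while unmarked:
        S = unmarked.pop(0)                         # "mark S"
        for a in ALPHABET:
            U = eps_closure(move(S, a))
            if U not in dstates:
                dstates.append(U)
                unmarked.append(U)
            transfunc[S, a] = U
    accepting = [S for S in dstates if S & NFA_FINAL]   # contains the NFA's final state
    return dstates, transfunc, accepting


dstates, transfunc, accepting = subset_construction()
for i, S in enumerate(dstates):
    print(f"S{i} = {sorted(S)}")
```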

- 34. Converting RE Directly to DFAs. We may convert a regular expression into a DFA without creating an NFA first. First, we augment the given regular expression r by concatenating it with a special end-marker symbol #, giving the augmented regular expression (r)# (to augment means to make something greater by adding to it). Then we create a syntax tree for this augmented regular expression. In this syntax tree, all alphabet symbols (plus # and the empty string) in the augmented regular expression are at the leaves, and all inner nodes are the operators of the augmented regular expression.

- 35. Regular Expression → DFA (Cont.) Then each alphabet symbol (plus #) is numbered with a position number. Example: for the RE (a|b)*a, the augmented regular expression is (a|b)*a#. [Figure: syntax tree of (a|b)*a#, with leaves numbered a = 1, b = 2, a = 3, # = 4.] In the syntax tree: • each symbol is numbered (positions); • each symbol is at a leaf; • inner nodes are operators.
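The slides stop at the numbered syntax tree. The standard way to continue (assumed here, since the slides do not show it) is to compute nullable, firstpos, lastpos and followpos on the tree; a DFA state is then a set of positions, and a state is accepting if it contains the position of #. A Python sketch for (a|b)*a#, using a tuple encoding of the tree chosen for this example:

```python
# Syntax-tree nodes encoded as tuples (an encoding chosen for this sketch):
#   ("leaf", symbol, position) | ("cat", left, right) | ("or", left, right) | ("star", child)


def nullable(n) -> bool:
    kind = n[0]
    if kind == "leaf":
        return False                 # this sketch has no ε leaves
    if kind == "star":
        return True
    if kind == "or":
        return nullable(n[1]) or nullable(n[2])
    return nullable(n[1]) and nullable(n[2])          # cat


def firstpos(n) -> set:
    kind = n[0]
    if kind == "leaf":
        return {n[2]}
    if kind == "star":
        return firstpos(n[1])
    if kind == "or" or nullable(n[1]):
        return firstpos(n[1]) | firstpos(n[2])
    return firstpos(n[1])                              # cat, left not nullable


def lastpos(n) -> set:
    kind = n[0]
    if kind == "leaf":
        return {n[2]}
    if kind == "star":
        return lastpos(n[1])
    if kind == "or" or nullable(n[2]):
        return lastpos(n[1]) | lastpos(n[2])
    return lastpos(n[2])                               # cat, right not nullable


def compute_followpos(n, fp: dict) -> dict:
    """Fill fp[i] for every position i by walking the tree once."""
    if n[0] == "cat":
        for i in lastpos(n[1]):
            fp[i] |= firstpos(n[2])
    elif n[0] == "star":
        for i in lastpos(n[1]):
            fp[i] |= firstpos(n[1])
    for child in n[1:]:
        if isinstance(child, tuple):
            compute_followpos(child, fp)
    return fp


def build_dfa(root, symbol_at: dict, end_pos: int):
    fp = compute_followpos(root, {p: set() for p in symbol_at})
    symbols = sorted({s for p, s in symbol_at.items() if p != end_pos})
    start = frozenset(firstpos(root))
    states, todo, delta = [start], [start], {}
    while todo:
        S = todo.pop(0)
        for a in symbols:
            U = frozenset().union(*(fp[p] for p in S if symbol_at[p] == a))
            if not U:
                continue                 # no transition on a from S
            if U not in states:
                states.append(U)
                todo.append(U)
            delta[S, a] = U
    accepting = [S for S in states if end_pos in S]   # contains the position of #
    return start, delta, accepting


# (a|b)*a#  with positions a=1, b=2, a=3, #=4
tree = ("cat", ("cat", ("star", ("or", ("leaf", "a", 1), ("leaf", "b", 2))),
                ("leaf", "a", 3)),
        ("leaf", "#", 4))
symbol_at = {1: "a", 2: "b", 3: "a", 4: "#"}
start, delta, accepting = build_dfa(tree, symbol_at, end_pos=4)
for (S, a), U in delta.items():
    print(sorted(S), a, "->", sorted(U))
```

The result has two states, {1,2,3} (start) and {1,2,3,4} (accepting), i.e. a DFA for strings over {a,b} that end in a.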

- 36. Minimizing the Number of States of a DFA. Partition the set of states into two groups: G1, the set of accepting states, and G2, the set of non-accepting states. For each group G, partition G into subgroups such that two states s1 and s2 are in the same subgroup if and only if, for every input symbol a, s1 and s2 have transitions to states in the same group. Repeat this step until no group can be split any further. The start state of the minimized DFA is the group containing the start state of the original DFA. The accepting states of the minimized DFA are the groups containing the accepting states of the original DFA.

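- 37. Minimizing DFA - Example. G1 = {2}, G2 = {1,3}. G2 cannot be partitioned, because move(1,a) = 2, move(1,b) = 3, move(3,a) = 2, and move(3,b) = 3: on a, both states 1 and 3 go to G1, and on b, both go to G2. So states 1 and 3 are merged into one state. [Figure: the minimized DFA (with the minimum number of states).]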
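A Python sketch of the partition-refinement procedure from the previous slide, applied to this example. The slide lists only the moves of states 1 and 3, so move(2,a) = 2 and move(2,b) = 3 are an assumption (they are the moves of S1 in the subset-construction example, with S0, S1, S2 renamed 1, 2, 3).

```python
def group_index(groups: list, state) -> int:
    """Index of the group that currently contains state."""
    return next(i for i, g in enumerate(groups) if state in g)


def minimize(states, alphabet, delta, accepting):
    """Partition refinement: returns the groups of equivalent states."""
    accepting = set(accepting)
    # Start with G1 = accepting states, G2 = non-accepting states (skip an empty group).
    groups = [g for g in (accepting, set(states) - accepting) if g]
    while True:
        refined = []
        for g in groups:
            # States stay together only if, for every symbol, they move into the same group.
            buckets = {}
            for s in sorted(g):
                key = tuple(group_index(groups, delta[s, a]) for a in alphabet)
                buckets.setdefault(key, set()).add(s)
            refined.extend(buckets.values())
        if len(refined) == len(groups):    # no group was split: partition is stable
            return refined
        groups = refined


# This slide's example; move(2,a) = 2 and move(2,b) = 3 are assumed.
DELTA = {(1, "a"): 2, (1, "b"): 3, (2, "a"): 2, (2, "b"): 3,
         (3, "a"): 2, (3, "b"): 3}
print(minimize([1, 2, 3], "ab", DELTA, {2}))
```

The result groups {1, 3} together and leaves {2} alone, matching the conclusion that G2 cannot be partitioned.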

- 38. Minimizing DFA – Another Example. Transitions of the DFA:

  | State | a | b |
  |---|---|---|
  | 1 | 2 | 3 |
  | 2 | 2 | 3 |
  | 3 | 4 | 3 |

  [Figure: the minimized DFA.]

- 40. Quiz (5%): Write a Lex program to implement a simple calculator. Given L1 = {0,1} and L2 = {0,1}: L1L2 = ? RE 0* = ? RE (0|1)* = ? RE (0|1)*11 = ?


