Cluster

- 2. Motivation. Problem 1: A query word can be ambiguous. E.g., the query “Star” retrieves documents about astronomy, plants, animals, etc. Solution: visualisation, i.e., clustering the documents returned for a query along the lines of different topics. Problem 2: Manual construction of topic hierarchies and taxonomies. Solution: preliminary clustering of large samples of web documents. Problem 3: Speeding up similarity search. Solution: restrict the search for documents similar to a query to the most representative cluster(s).

- 3. Example: Scatter/Gather, a text clustering system, can separate salient topics in the response to keyword queries. (Image courtesy of Hearst)

- 4. Clustering. Task: evolve measures of similarity to cluster a collection of documents/terms into groups such that similarity within a cluster is larger than similarity across clusters. Cluster hypothesis: given a ‘suitable’ clustering of a collection, if the user is interested in document/term d/t, they are likely to be interested in other members of the cluster to which d/t belongs. Similarity measures: represent documents by TFIDF vectors; use the distance between document vectors or the cosine of the angle between them. Issues: a large number of noisy dimensions; the notion of noise is application dependent.
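The TFIDF representation and cosine measure can be sketched as follows. This is a minimal sketch, not the deck's method. It assumes raw term counts for TF, log(N/df) for IDF (one of several common weighting variants), and whitespace tokenization. The helper names are illustrative.

```python
import math
from collections import Counter

def tfidf_vectors(docs):
    """Return one sparse TFIDF vector (dict term -> weight) per document.
    Assumed weighting: tf = raw count, idf = log(N / df)."""
    tokenized = [doc.lower().split() for doc in docs]
    n_docs = len(tokenized)
    # Document frequency: number of documents containing each term.
    df = Counter(term for tokens in tokenized for term in set(tokens))
    vectors = []
    for tokens in tokenized:
        tf = Counter(tokens)
        vectors.append({t: c * math.log(n_docs / df[t]) for t, c in tf.items()})
    return vectors

def cosine(u, v):
    """Cosine of the angle between two sparse vectors (0.0 if either is all zeros)."""
    dot = sum(w * v.get(t, 0.0) for t, w in u.items())
    norm_u = math.sqrt(sum(w * w for w in u.values()))
    norm_v = math.sqrt(sum(w * w for w in v.values()))
    if norm_u == 0 or norm_v == 0:
        return 0.0
    return dot / (norm_u * norm_v)

docs = ["star galaxy telescope", "star telescope orbit", "star fish reef"]
vecs = tfidf_vectors(docs)
print(cosine(vecs[0], vecs[1]), cosine(vecs[0], vecs[2]))
```

Because "star" occurs in every document, its IDF is zero. Only the more specific terms drive the similarity, so the two astronomy documents come out related and the fish document does not.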

- 5. Top-down clustering. k-Means: choose k arbitrary ‘centroids’; repeat: assign each document to the nearest centroid, then recompute the centroids. Expectation maximization (EM): pick k arbitrary ‘distributions’; repeat: find the probability that document d is generated from distribution f, for all d and f; estimate the distribution parameters from the weighted contributions of the documents.
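The k-means loop above can be sketched on plain coordinate tuples, with Euclidean distance standing in for the document-vector distance. This is a minimal sketch under assumptions:

- The initial centroids are k randomly sampled points.
- The loop stops when assignments no longer change.
- The function name `kmeans` is illustrative.

```python
import math
import random

def kmeans(points, k, iters=100, seed=0):
    """Hard k-means on a list of equal-length tuples (Euclidean distance).
    Returns (assignment list, centroid list)."""
    rng = random.Random(seed)
    centroids = [list(p) for p in rng.sample(points, k)]  # k arbitrary centroids
    assign = [None] * len(points)
    for _ in range(iters):
        # Assign each point to its nearest centroid.
        new_assign = [min(range(k), key=lambda c: math.dist(p, centroids[c]))
                      for p in points]
        if new_assign == assign:  # assignments stopped changing: converged
            break
        assign = new_assign
        # Recompute each centroid as the mean of its members.
        for c in range(k):
            members = [p for p, a in zip(points, assign) if a == c]
            if members:  # keep the old centroid if the cluster emptied
                centroids[c] = [sum(x) / len(members) for x in zip(*members)]
    return assign, centroids
```

Different seeds can converge to different local optima. This is one reason the seeding strategy matters.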

- 6. Choosing ‘k’. Mostly problem driven. Could be ‘data driven’ only when either the data is not sparse or the measurement dimensions are not too noisy. Interactive: a data analyst interprets the results of structure discovery.

- 7. Choosing ‘k’: Approaches. Hypothesis testing: null hypothesis ($H_0$): the underlying density is a mixture of ‘k’ distributions; this requires regularity conditions on the mixture likelihood function (Smith’85). Bayesian estimation: estimate the posterior distribution on k, given the data and a prior on k. Difficulty: computational complexity of the integration. The Autoclass algorithm (Cheeseman’98) uses approximations; (Diebolt’94) suggests sampling techniques.

- 8. Choosing ‘k’: Approaches. Penalised likelihood: to account for the fact that $L_k(D)$ is a non-decreasing function of k, penalise the number of parameters. Examples: Bayesian Information Criterion (BIC), Minimum Description Length (MDL), Minimum Message Length (MML). Assumption: penalised criteria are asymptotically optimal (Titterington 1985). Cross-validated likelihood: find the ML estimate on part of the training data; choose the k that maximises the average of the M cross-validated average likelihoods on held-out data $D_{\text{test}}$. Cross-validation techniques: Monte Carlo cross-validation (MCCV), v-fold cross-validation (vCV).
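As a sketch of penalised likelihood, BIC can be computed as $-2 \log L_k(D) + p \log n$ for p free parameters and n data points, with the smallest value selected. The inputs below are hypothetical:

- The log-likelihoods are invented numbers, not results.
- The parameter counts assume a one-dimensional Gaussian mixture, which has 3k - 1 free parameters.

```python
import math

def bic(log_likelihood, n_params, n_points):
    """Bayesian Information Criterion (lower is better): -2 log L_k(D) + p log n."""
    return -2.0 * log_likelihood + n_params * math.log(n_points)

def choose_k(fits, n_points):
    """fits: dict k -> (maximised log-likelihood L_k(D), number of free parameters).
    Returns the k with the smallest BIC."""
    return min(fits, key=lambda k: bic(fits[k][0], fits[k][1], n_points))

# Hypothetical fits: L_k(D) never decreases with k, but the penalty keeps growing.
fits = {1: (-520.0, 2), 2: (-410.0, 5), 3: (-405.0, 8), 4: (-402.0, 11)}
print(choose_k(fits, n_points=200))
```

The raw likelihood alone would always prefer the largest k. Here the parameter penalty outweighs the small likelihood gains beyond k = 2.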

- 12. Clustering. Task: evolve measures of similarity to cluster a collection of documents/terms into groups such that similarity within a cluster is larger than similarity across clusters. Cluster hypothesis: given a ‘suitable’ clustering of a collection, if the user is interested in document/term d/t, they are likely to be interested in other members of the cluster to which d/t belongs. Collaborative filtering: clustering of two or more kinds of objects that have a bipartite relationship.

- 13. Clustering (contd). Two important paradigms: bottom-up agglomerative clustering and top-down partitioning. Visualisation techniques: embedding of the corpus in a low-dimensional space. Characterising the entities: internally, by the vector space model or probabilistic models; externally, by a measure of similarity/dissimilarity between pairs. Learning: supplement stock algorithms with experience with the data.

- 14. Clustering: Parameters. Similarity measure (e.g., cosine similarity); distance measure (e.g., Euclidean distance); number k of clusters. Issues: a large number of noisy dimensions; the notion of noise is application dependent.

- 15. Clustering: Formal specification. Partitioning approaches: bottom-up clustering; top-down clustering. Geometric embedding approaches: self-organization map; multidimensional scaling; latent semantic indexing. Generative models and probabilistic approaches: single topic per document; documents correspond to mixtures of multiple topics.

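- 16. Partitioning Approaches. Partition the document collection into k clusters $\{D_1, \dots, D_k\}$. Choices: minimize intra-cluster distance $\sum_i \sum_{d_1, d_2 \in D_i} \delta(d_1, d_2)$, or maximize intra-cluster semblance $\sum_i \sum_{d_1, d_2 \in D_i} s(d_1, d_2)$. If cluster representations $\vec{D}_i$ are available: minimize $\sum_i \sum_{d \in D_i} \delta(d, \vec{D}_i)$ or maximize $\sum_i \sum_{d \in D_i} s(d, \vec{D}_i)$. Soft clustering: d is assigned to $D_i$ with ‘confidence’ $z_{d,i}$; find $z_{d,i}$ so as to minimize $\sum_i \sum_d z_{d,i}\, \delta(d, \vec{D}_i)$ or maximize $\sum_i \sum_d z_{d,i}\, s(d, \vec{D}_i)$. Two ways to get partitions: bottom-up clustering and top-down clustering.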

- 17. Bottom-up clustering (HAC). Initially G is a collection of singleton groups, each with one document. Repeat: find $\Gamma, \Delta$ in G with the maximum similarity measure $s(\Gamma \cup \Delta)$; merge group $\Gamma$ with group $\Delta$; for each $\Gamma$, keep track of the best $\Delta$. Use this information to plot the hierarchical merging process (dendrogram). To get the desired number of clusters, cut across any level of the dendrogram.
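The merge loop can be sketched naively. At each step the pair of groups Γ, Δ whose union has the highest average pairwise similarity is merged, and merging stops at k groups. This sketch does no best-Δ bookkeeping, so it is much slower than the O(n² log n) method discussed below. The function names and the caller-supplied `sim` are illustrative assumptions.

```python
import itertools

def self_similarity(group, sim):
    """Average pairwise similarity s(Φ) over all unordered pairs in a group."""
    pairs = list(itertools.combinations(group, 2))
    return sum(sim(a, b) for a, b in pairs) / len(pairs)

def hac(items, sim, k=1):
    """Naive bottom-up clustering: repeatedly merge the two groups Γ, Δ whose
    union has maximum self-similarity s(Γ ∪ Δ), until k groups remain.
    Returns (groups, merges); merges records the dendrogram in merge order."""
    groups = [(x,) for x in items]  # start with singleton groups
    merges = []
    while len(groups) > k:
        i, j = max(itertools.combinations(range(len(groups)), 2),
                   key=lambda ij: self_similarity(groups[ij[0]] + groups[ij[1]], sim))
        merged = groups[i] + groups[j]
        merges.append((groups[i], groups[j], self_similarity(merged, sim)))
        groups = [g for n, g in enumerate(groups) if n not in (i, j)] + [merged]
    return groups, merges
```

Calling it with k=1 records the full dendrogram. Cutting it at a level corresponds to stopping at a larger k.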

- 18. Dendrogram. A dendrogram presents the progressive, hierarchy-forming merging process pictorially.

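- 19. Similarity measure. Typically $s(\Gamma \cup \Delta)$ decreases with an increasing number of merges. Self-similarity: the average pairwise similarity between documents in $\Phi$, $s(\Phi) = \frac{1}{\binom{|\Phi|}{2}} \sum_{\{d_1, d_2\} \subseteq \Phi} s(d_1, d_2)$, where $s(d_1, d_2)$ is an inter-document similarity measure (say, the cosine of TFIDF vectors). Other criteria: maximum/minimum pairwise similarity between documents in the clusters.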

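- 20. Computation. Un-normalized group profile: $\hat{p}(\Phi) = \sum_{d \in \Phi} \hat{p}(d)$, where $\hat{p}(d)$ is the normalized profile of document d and $s(d_1, d_2) = \langle \hat{p}(d_1), \hat{p}(d_2) \rangle$. Can show: $s(\Phi) = \frac{\langle \hat{p}(\Phi), \hat{p}(\Phi) \rangle - |\Phi|}{|\Phi|(|\Phi| - 1)}$ and $\langle \hat{p}(\Gamma \cup \Delta), \hat{p}(\Gamma \cup \Delta) \rangle = \langle \hat{p}(\Gamma), \hat{p}(\Gamma) \rangle + \langle \hat{p}(\Delta), \hat{p}(\Delta) \rangle + 2 \langle \hat{p}(\Gamma), \hat{p}(\Delta) \rangle$. This gives an $O(n^2 \log n)$ algorithm with $n^2$ space.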

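- 21. Similarity. Normalized document profile: $\hat{p}(d) = \frac{\vec{d}}{\|\vec{d}\|}$. Profile for document group $\Phi$: $p(\Phi) = \frac{\sum_{d \in \Phi} \hat{p}(d)}{\left\| \sum_{d \in \Phi} \hat{p}(d) \right\|}$.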
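The profile identity can be checked numerically. With cosine similarity of unit-normalized document vectors, the average pairwise similarity of a group Φ equals (⟨p̂(Φ), p̂(Φ)⟩ - |Φ|) / (|Φ|(|Φ| - 1)), where p̂(Φ) is the sum of the normalized document profiles. The sketch below computes both sides; the function names and example vectors are illustrative.

```python
import itertools
import math

def normalize(v):
    """Normalized document profile: d / ||d||."""
    n = math.sqrt(sum(x * x for x in v))
    return [x / n for x in v]

def dot(u, v):
    return sum(a * b for a, b in zip(u, v))

def self_sim_bruteforce(docs):
    """Average cosine over all unordered pairs: O(|Φ|^2) inner products."""
    ps = [normalize(d) for d in docs]
    pairs = list(itertools.combinations(ps, 2))
    return sum(dot(a, b) for a, b in pairs) / len(pairs)

def self_sim_from_profile(docs):
    """Same value from the un-normalized group profile (sum of unit vectors):
    s(Φ) = (<p(Φ), p(Φ)> - |Φ|) / (|Φ| (|Φ| - 1))."""
    ps = [normalize(d) for d in docs]
    profile = [sum(col) for col in zip(*ps)]
    m = len(docs)
    return (dot(profile, profile) - m) / (m * (m - 1))
```

The profile of Γ ∪ Δ is just the sum of the two profiles. The merged self-similarity therefore needs only the cached squared norms and one inner product ⟨p̂(Γ), p̂(Δ)⟩, which is what makes the faster HAC bookkeeping possible.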

- 22. Switch to top-down. Bottom-up requires quadratic time and space. Top-down, or move-to-nearest: an internal representation for documents as well as clusters; partition the documents into ‘k’ clusters. Two variants: “hard” (0/1) assignment of documents to clusters; “soft”: documents belong to clusters with fractional scores. Terminate when the assignment of documents to clusters ceases to change much, or when the cluster centroids move negligibly over successive iterations.

- 23. Top-down clustering. Hard k-means: choose k arbitrary ‘centroids’; repeat: assign each document to the nearest centroid, then recompute the centroids. Soft k-means: don’t break close ties between document assignments to clusters; don’t make a document contribute only to a single cluster that wins narrowly. The contribution of document d to updating cluster centroid $\mu_c$ is related to the current similarity between $\mu_c$ and d.
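One soft k-means step can be sketched as follows. The slide only says that a document's contribution to centroid μ_c is related to their current similarity. This sketch assumes the common weighting w(d, c) ∝ exp(-β‖d - μ_c‖²), normalized over clusters; the stiffness parameter β and the function name are assumptions.

```python
import math

def soft_kmeans_step(points, centroids, beta=1.0):
    """One soft k-means update. Each point d contributes to every centroid mu_c
    with weight w(d, c) proportional to exp(-beta * |d - mu_c|^2) (assumed form);
    each new centroid is the weighted mean of all points."""
    k = len(centroids)
    weights = []
    for p in points:
        scores = [-beta * math.dist(p, c) ** 2 for c in centroids]
        top = max(scores)  # subtract the max before exp() for numerical stability
        exps = [math.exp(s - top) for s in scores]
        total = sum(exps)
        weights.append([e / total for e in exps])
    new_centroids = []
    for c in range(k):
        wsum = sum(w[c] for w in weights)
        if wsum == 0:  # every weight underflowed: keep the old centroid
            new_centroids.append(list(centroids[c]))
            continue
        new_centroids.append([sum(w[c] * p[i] for w, p in zip(weights, points)) / wsum
                              for i in range(len(points[0]))])
    return weights, new_centroids
```

A point equidistant from two centroids splits its contribution evenly instead of being forced into one cluster. As β grows, the weights approach 0/1 and the step reduces to hard k-means.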

- 24. Seeding ‘k’ clusters. Randomly sample $O(\sqrt{kn})$ documents. Run the bottom-up group-average clustering algorithm to reduce them to k groups or clusters: $O(kn \log n)$ time. Iterate assign-to-nearest O(1) times: move each document to the nearest cluster; recompute the cluster centroids. Total time taken is O(kn). Non-deterministic behavior.

- 28. Visualisation techniques. Goal: embedding of the corpus in a low-dimensional space. Hierarchical agglomerative clustering (HAC) lends itself easily to visualisation. Self-organization map (SOM): a close cousin of k-means. Multidimensional scaling (MDS): minimize the distortion of interpoint distances in the low-dimensional embedding, compared with the dissimilarities given in the input data. Latent semantic indexing (LSI): linear transformations to reduce the number of dimensions.

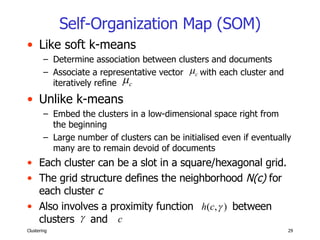

- 29. Self-Organization Map (SOM). Like soft k-means: determine the association between clusters and documents; associate a representative vector $\mu_c$ with each cluster c and refine it iteratively. Unlike k-means: embed the clusters in a low-dimensional space right from the beginning; a large number of clusters can be initialised even if many will eventually remain devoid of documents. Each cluster can be a slot in a square/hexagonal grid. The grid structure defines the neighborhood N(c) for each cluster c. SOM also involves a proximity function $h(\gamma, c)$ between clusters $\gamma$ and c.

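- 30. SOM: Update rule. Like a neural network: data item d activates the neuron $c_d$ (the closest cluster) as well as its neighborhood neurons. E.g., a Gaussian neighborhood function $h(\gamma, c_d) = \exp\left(-\frac{\|\gamma - c_d\|^2}{2\sigma^2(t)}\right)$, where $\|\gamma - c_d\|$ is the distance between the two nodes on the grid. The update rule for node $\gamma$ under the influence of d is $\mu_\gamma(t+1) = \mu_\gamma(t) + \eta(t)\, h(\gamma, c_d)\, (d - \mu_\gamma(t))$, where $\sigma$ is the neighborhood width and $\eta$ is the learning rate parameter.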
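A single SOM update step can be sketched as follows. The node closest to d wins, and every node moves toward d by η·h(γ, c_d), using the Gaussian neighborhood on grid coordinates. This is a sketch under assumptions:

- η and σ are held fixed, whereas in practice both usually shrink over time t.
- The data layout (a list of weight vectors plus a parallel list of grid positions) is a hypothetical choice.

```python
import math

def som_update(weights, grid_pos, d, eta=0.5, sigma=1.0):
    """One SOM step for data vector d (modifies weights in place).
    weights[g]: representative vector mu_g of node g;
    grid_pos[g]: position of node g on the map grid.
    Returns the index of the winning node."""
    winner = min(range(len(weights)), key=lambda g: math.dist(weights[g], d))
    for g in range(len(weights)):
        # Gaussian neighborhood, measured by distance on the grid, not in data space.
        h = math.exp(-math.dist(grid_pos[g], grid_pos[winner]) ** 2 / (2 * sigma ** 2))
        weights[g] = [w + eta * h * (x - w) for w, x in zip(weights[g], d)]
    return winner
```

Grid neighbors of the winner are pulled toward d as well, though less strongly. This is what makes nearby map slots end up holding similar documents.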

- 31. SOM: Example I. SOM computed from over a million documents taken from 80 Usenet newsgroups. Light areas have a high density of documents.

- 32. SOM: Example II. Another example of SOM at work: the sites listed in the Open Directory have been organized within a map of Antarctica at http://antarcti.ca/.

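- 33. Multidimensional Scaling (MDS). Goal: a “distance-preserving” low-dimensional embedding of documents. Symmetric inter-document distances $d_{ij}$: given a priori or computed from the internal representation. Coarse-grained user feedback: the user provides the similarity $d_{ij}$ between documents i and j; with increasing feedback, prior distances are overridden. Objective: minimize the stress of the embedding, $\text{stress} = \frac{\sum_{i,j} (\hat{d}_{ij} - d_{ij})^2}{\sum_{i,j} d_{ij}^2}$, where $\hat{d}_{ij}$ is the distance between i and j in the embedding.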

- 34. MDS: Issues. Stress is not easy to optimize. Iterative hill climbing: points (documents) are assigned random coordinates by an external heuristic; points are moved by a small distance in the direction of locally decreasing stress. For n documents, each takes O(n) time to be moved, for a total of $O(n^2)$ time per relaxation.
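Stress and a naive relaxation loop can be sketched as follows. Stress is Σ(d̂_ij - d_ij)² / Σ d_ij² over pairs i < j, which has the same ratio as summing over all ordered pairs. For each point, the loop tries a small move and keeps it only if stress drops. This differs from the slide in two ways, both assumptions of the sketch:

- Random trial moves stand in for moving along the locally decreasing-stress direction.
- The full stress is recomputed after each move, rather than incrementally updating only the n - 1 terms that involve the moved point, which would give the O(n) per-point cost on the slide.

```python
import math
import random

def stress(coords, target):
    """sum (d_hat_ij - d_ij)^2 / sum d_ij^2 over pairs i < j, where d_hat_ij is
    the Euclidean distance in the embedding and d_ij the given dissimilarity."""
    n = len(coords)
    num = den = 0.0
    for i in range(n):
        for j in range(i + 1, n):
            d_hat = math.dist(coords[i], coords[j])
            num += (d_hat - target[i][j]) ** 2
            den += target[i][j] ** 2
    return num / den

def relax(coords, target, step=0.05, rounds=500, seed=0):
    """Naive hill climbing (modifies coords in place): try a small random move
    for each point and keep it only if the stress decreases. Returns final stress."""
    rng = random.Random(seed)
    current = stress(coords, target)
    for _ in range(rounds):
        for i in range(len(coords)):
            old = coords[i]
            coords[i] = [x + rng.uniform(-step, step) for x in old]
            new = stress(coords, target)
            if new < current:
                current = new
            else:
                coords[i] = old  # reject the move
    return current
```

Like any hill climbing, this can stall in a local minimum. The result also depends on the initial coordinates.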

- 35. FastMap [Faloutsos ’95]. No internal representation of documents is available. Goal: find a projection from an ‘n’-dimensional space to a space with a smaller number ‘k’ of dimensions. Iterative projection of documents along lines of maximum spread; each 1-D projection preserves distance information.

- 36. Best line. Pivots for a line: two points (a and b) that determine it. Avoid exhaustive checking by picking pivots that are far apart. First coordinate of point x on the “best line”: $x_1 = \frac{d_{a,x}^2 + d_{a,b}^2 - d_{b,x}^2}{2\, d_{a,b}}$.

- 37. Iterative projection. For i = 1 to k: find the next (i-th) “best” line, i.e., one that gives maximum variance of the point set in the direction of the line; project the points on the line; project the points on the “hyperspace” orthogonal to that line.

- 38. Projection. Purpose: correct the inter-point distances by taking into account the components already accounted for by the first pivot line: $(d'_{x,y})^2 = d_{x,y}^2 - (x_1 - y_1)^2$. Project recursively down to a 1-D space. Time: O(nk).
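FastMap can be sketched using only a distance function. At each level the sketch:

1. Picks two far-apart pivots.
2. Places every object on the pivot line by the cosine-law formula for x₁.
3. Continues on the residual distances (d')² = d² - (x₁ - y₁)².

The pivot heuristic follows the "pick pivots far apart" idea: start at object 0, jump to the farthest object, then to the object farthest from that. The fixed starting object and the number of jumps are assumptions, as is the function name.

```python
import math

def fastmap(n, dist, k):
    """Embed objects 0..n-1 into k dimensions using only dist(i, j).
    Returns a list of k-dimensional coordinates."""
    coords = [[0.0] * k for _ in range(n)]

    def d2(i, j, level):
        # Squared distance after removing the first `level` projected coordinates.
        base = dist(i, j) ** 2
        for m in range(level):
            base -= (coords[i][m] - coords[j][m]) ** 2
        return max(base, 0.0)  # clamp small negatives (e.g. non-Euclidean input)

    for level in range(k):
        # Pivot heuristic: from object 0 jump to the farthest object, then jump again.
        a = 0
        b = max(range(n), key=lambda x: d2(a, x, level))
        a = max(range(n), key=lambda x: d2(b, x, level))
        dab2 = d2(a, b, level)
        if dab2 == 0:
            break  # all residual distances are zero
        dab = math.sqrt(dab2)
        for x in range(n):
            # Cosine law: x_1 = (d_ax^2 + d_ab^2 - d_bx^2) / (2 d_ab)
            coords[x][level] = (d2(a, x, level) + dab2 - d2(b, x, level)) / (2 * dab)
    return coords
```

For points that really lie in a Euclidean space of dimension at most k, the embedding reproduces the original pairwise distances. For other inputs it is an approximation.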

- 39. Issues. Detecting noise dimensions: bottom-up dimension composition is too slow; the definition of noise depends on the application. Running time: distance computation dominates; random projections; sublinear time without losing small clusters. Integrating semi-structured information: hyperlinks and tags embed similarity clues. A link is worth a ? words.

- 40. Expectation maximization (EM): pick k arbitrary ‘distributions’; repeat: find the probability that document d is generated from distribution f, for all d and f; estimate the distribution parameters from the weighted contributions of the documents.
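The E-step/M-step loop can be sketched on the simplest case, a one-dimensional Gaussian mixture, instead of a document model. This is a swapped-in illustration under assumptions:

- The caller supplies the initial means.
- Variances start at 1 and weights start equal.
- A small variance floor guards against collapse.
- The function names are illustrative.

```python
import math

def gaussian_pdf(x, mean, var):
    return math.exp(-(x - mean) ** 2 / (2 * var)) / math.sqrt(2 * math.pi * var)

def em_gmm_1d(xs, means, iters=50, min_var=1e-6):
    """EM for a 1-D mixture of k Gaussians starting from the given means.
    Returns (weights, means, variances)."""
    k = len(means)
    means = list(means)
    variances = [1.0] * k
    weights = [1.0 / k] * k
    for _ in range(iters):
        # E-step: r[n][f] = probability that x_n was generated by component f.
        r = []
        for x in xs:
            p = [weights[f] * gaussian_pdf(x, means[f], variances[f]) for f in range(k)]
            total = sum(p)
            r.append([pf / total for pf in p])
        # M-step: re-estimate each component from the responsibility-weighted data.
        for f in range(k):
            nf = sum(row[f] for row in r)
            weights[f] = nf / len(xs)
            means[f] = sum(row[f] * x for row, x in zip(r, xs)) / nf
            variances[f] = max(min_var,
                               sum(row[f] * (x - means[f]) ** 2 for row, x in zip(r, xs)) / nf)
    return weights, means, variances
```

For documents, the Gaussians would be replaced by a document model such as a multinomial over terms. The E/M alternation stays the same.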

- 41. Extended similarity. “Where can I fix my scooter?” and “A great garage to repair your 2-wheeler is at …” share no words. ‘auto’ and ‘car’ co-occur often, and documents having related words are related; this is useful for search and clustering. Two basic approaches: a hand-made thesaurus (WordNet); co-occurrence and associations. (Figure: documents in which “car” and “auto” co-occur.)
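The co-occurrence approach starts from counting how many documents contain each pair of terms. A minimal sketch, assuming whitespace tokenization and document-level (not window-level) co-occurrence; the function name and example documents are illustrative:

```python
from collections import Counter
from itertools import combinations

def cooccurrence(docs):
    """Count, for every unordered pair of distinct terms, the number of
    documents that contain both. Pairs are stored in sorted order."""
    counts = Counter()
    for doc in docs:
        terms = sorted(set(doc.lower().split()))
        counts.update(combinations(terms, 2))
    return counts

docs = ["car repair garage", "auto repair shop", "auto car insurance", "car auto dealer"]
counts = cooccurrence(docs)
print(counts[("auto", "car")])
```

Frequently co-occurring pairs such as ("auto", "car") can then be treated as related terms when comparing documents.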

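- 42. Latent semantic indexing. (Figure: the t × d term-document matrix A is factored by SVD as $A = U D V^T$, with U of size t × r, D of size r × r and V of size d × r; truncating to k dimensions maps each term (e.g., car, auto) and each document to a k-dim vector.)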

- 43. Collaborative recommendation. People = records, movies = features. Both people and features are to be clustered. Mutual reinforcement of similarity. Need advanced models. From “Clustering methods in collaborative filtering”, by Ungar and Foster.

- 44. A model for collaboration. People and movies belong to unknown classes. $P_k$ = probability that a random person is in class k; $P_l$ = probability that a random movie is in class l; $P_{kl}$ = probability of a class-k person liking a class-l movie. Gibbs sampling: iterate: pick a person or movie at random and assign it to a class with probability proportional to $P_k$ or $P_l$; estimate new parameters.

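- 45. Aspect Model. Metric data vs. dyadic data vs. proximity data vs. ranked preference data. Dyadic data: a domain with two finite sets of objects, $\mathcal{X} = \{x_1, \dots, x_N\}$ and $\mathcal{Y} = \{y_1, \dots, y_M\}$. Observations: dyads $(x, y) \in \mathcal{X} \times \mathcal{Y}$. Unsupervised learning from dyadic data: two sets of objects.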

- 46. Aspect Model (contd). Two main tasks: probabilistic modeling, i.e., learning a joint or conditional probability model over pairs (x, y) drawn from the two object sets; structure discovery, i.e., identifying clusters and data hierarchies.

- 47. Aspect Model. Statistical models: empirical co-occurrence frequencies are sufficient statistics. Data sparseness: empirical frequencies are either 0 or significantly corrupted by sampling noise. Solutions: smoothing; the back-off method [Katz’87]; model interpolation with held-out data [JM’80, Jel’85]; similarity-based smoothing techniques [ES’92]; the model-based statistical approach, a principled way to deal with data sparseness.

- 48. Aspect Model. Model-based statistical approach: a principled approach to deal with data sparseness. Finite mixture models [TSM’85]; latent class models [And’97]. Specification of a joint probability distribution for latent and observable variables [Hofmann’98]. Unifies statistical modeling: probabilistic modeling by marginalization; structure detection (exploratory data analysis) via posterior probabilities obtained by Bayes’ rule on the latent space of structures.

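- 49. Aspect Model. The observed sample S of co-occurrences $(x_n, y_n)$ is a realisation of an underlying sequence of random variables $(X_n, Y_n)$. Two assumptions: all co-occurrences in sample S are i.i.d.; $X_n$ and $Y_n$ are independent given the latent class $c_n$. P(c) gives the weights of the mixture components.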

- 50. Aspect Model: Latent classes. (Figure: increasing degree of restriction on the latent space.)

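- 51. Aspect Model. Symmetric: $P(x, y) = \sum_c P(c)\, P(x \mid c)\, P(y \mid c)$. Asymmetric: $P(x, y) = P(x) \sum_c P(c \mid x)\, P(y \mid c)$.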
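The symmetric and asymmetric parameterizations describe the same joint distribution. The sketch below derives P(x) and P(c | x) from the symmetric parameters by marginalization and Bayes' rule, then evaluates both forms. It assumes discrete X and Y indexed by integers and small hypothetical parameter tables; the function names are illustrative.

```python
def joint_symmetric(p_c, p_x_given_c, p_y_given_c, x, y):
    """P(x, y) = sum_c P(c) P(x|c) P(y|c)."""
    return sum(p_c[c] * p_x_given_c[c][x] * p_y_given_c[c][y] for c in range(len(p_c)))

def joint_asymmetric(p_c, p_x_given_c, p_y_given_c, x, y):
    """P(x, y) = P(x) sum_c P(c|x) P(y|c), with P(x) and P(c|x) derived from
    the symmetric parameters by marginalization and Bayes' rule."""
    k = len(p_c)
    p_x = sum(p_c[c] * p_x_given_c[c][x] for c in range(k))
    p_c_given_x = [p_c[c] * p_x_given_c[c][x] / p_x for c in range(k)]
    return p_x * sum(p_c_given_x[c] * p_y_given_c[c][y] for c in range(k))

# Hypothetical parameters: 2 latent classes, 3 values of x, 2 values of y.
p_c = [0.4, 0.6]
p_x_given_c = [[0.5, 0.3, 0.2], [0.1, 0.1, 0.8]]
p_y_given_c = [[0.9, 0.1], [0.2, 0.8]]
print(joint_symmetric(p_c, p_x_given_c, p_y_given_c, 0, 0),
      joint_asymmetric(p_c, p_x_given_c, p_y_given_c, 0, 0))
```

The two forms differ only in which conditional distributions are treated as parameters. This matters when the M-steps are derived.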

- 52. Clustering vs. Aspect. The clustering model is a constrained aspect model, for flat as well as for hierarchical clustering. Clustering imposes a group structure on the object spaces, as opposed to partitioning the observations. Notation: P(.) denotes parameters; P{.} denotes posteriors.

- 53. Hierarchical Clustering model One-sided clustering Hierarchical clustering

- 54. Comparison of E-steps: aspect model; one-sided aspect model; hierarchical aspect model.

- 55. Tempered EM (TEM). Additively (on the log scale) discount the likelihood part in Bayes’ formula: $P_\beta(c \mid x, y) \propto P(c)\, [P(x \mid c)\, P(y \mid c)]^\beta$. (1) Set $\beta \leftarrow 1$ and perform EM until the performance on held-out data deteriorates (early stopping). (2) Decrease $\beta$, e.g., by setting $\beta \leftarrow \eta\beta$ with some rate parameter $\eta < 1$. (3) As long as the performance on held-out data improves, continue TEM iterations at this value of $\beta$. (4) Stop on $\beta$, i.e., stop when decreasing $\beta$ does not yield further improvements; otherwise go to step (2). (5) Perform some final iterations using both training and held-out data.
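The tempered E-step, P_β(c | x, y) ∝ P(c)[P(x | c) P(y | c)]^β, can be sketched for one observation (x, y). This assumes the symmetric aspect-model parameters stored as nested lists (a hypothetical layout). β = 1 gives the ordinary EM posterior, and β = 0 returns the prior P(c).

```python
def tempered_posteriors(p_c, p_x_given_c, p_y_given_c, x, y, beta):
    """Tempered E-step: P_beta(c | x, y) is proportional to
    P(c) * [P(x|c) P(y|c)]^beta, normalized over the latent classes c."""
    scores = [p_c[c] * (p_x_given_c[c][x] * p_y_given_c[c][y]) ** beta
              for c in range(len(p_c))]
    total = sum(scores)
    return [s / total for s in scores]

p_c = [0.5, 0.5]
p_x_given_c = [[0.8, 0.2], [0.2, 0.8]]
p_y_given_c = [[0.7, 0.3], [0.3, 0.7]]
for beta in (1.0, 0.5, 0.0):
    print(beta, tempered_posteriors(p_c, p_x_given_c, p_y_given_c, 0, 0, beta))
```

Lowering β flattens the posteriors toward the prior. This is the mechanism the schedule uses to counter overfitting.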

- 56. M-Steps: aspect; asymmetric; hierarchical x-clustering; one-sided x-clustering.

- 57. Example Model [Hofmann and Popat CIKM 2001] Hierarchy of document categories

- 59. Topic Hierarchies. Goal: overcome the sparseness problem in topic hierarchies with a large number of classes. Sparseness problem: a small number of positive examples. Topic hierarchies reduce variance in parameter estimation. Automatically differentiate: make use of term distributions estimated for more general, coarser text aspects to provide better, smoothed estimates of class-conditional term distributions. Convex combination of term distributions in a hierarchical mixture model: $P(w \mid c) = \sum_{a \uparrow c} P(a \mid c)\, P(w \mid a)$, where $a \uparrow c$ refers to all inner nodes a above the terminal class node c.
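The convex combination P(w | c) = Σ_a P(a | c) P(w | a) can be sketched as follows. Assumptions of this sketch:

- The caller passes the list of hierarchy nodes to mix over.
- The mixing weights P(a | c) sum to 1 over that list.
- Term distributions are dicts.
- The node names in the example ("root", "sports", "soccer") are hypothetical.

```python
def smoothed_term_distribution(nodes, mix_weights, term_dists):
    """Hierarchical-mixture estimate P(w|c) = sum_a P(a|c) P(w|a).
    nodes: hierarchy nodes to mix over for class c;
    mix_weights[a]: P(a|c), assumed to sum to 1 over `nodes`;
    term_dists[a]: dict term -> P(w|a)."""
    vocab = set().union(*(term_dists[a] for a in nodes))
    return {w: sum(mix_weights[a] * term_dists[a].get(w, 0.0) for a in nodes)
            for w in vocab}

term_dists = {
    "root": {"the": 0.5, "game": 0.3, "ball": 0.2},
    "sports": {"game": 0.5, "ball": 0.5},
    "soccer": {"ball": 0.6, "goal": 0.4},
}
mix = {"root": 0.2, "sports": 0.3, "soccer": 0.5}
print(smoothed_term_distribution(["root", "sports", "soccer"], mix, term_dists))
```

Terms that are rare for a sparse class still receive probability mass from the coarser nodes above it. This is the smoothing effect the slide describes.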

- 60. Topic Hierarchies (Hierarchical X-clustering) X = document, Y = word

- 61. Document Classification Exercise Modification of Naïve Bayes

- 62. Mixture vs. Shrinkage. Shrinkage [McCallum Rosenfeld AAAI’98]: interior nodes in the hierarchy represent coarser views of the data, obtained by a simple pooling scheme of term counts. Mixture: interior nodes represent abstraction levels with their corresponding specific vocabulary. Predefined hierarchy [Hofmann and Popat CIKM 2001]; creation of a hierarchical model from unlabeled data [Hofmann IJCAI’99].

- 63. Mixture Density Networks (MDN) [Bishop CM ’94, Mixture Density Networks]. A broad and flexible class of distributions capable of modeling completely general continuous distributions: superimpose simple component densities with well-known properties to generate or approximate more complex distributions. Two modules: mixture models, where the output has a distribution given as a mixture of distributions; and a neural network, whose outputs determine the parameters of the mixture model.

- 64. MDN: Example. A conditional mixture density network with Gaussian component densities.

- 65. MDN. Parameter estimation: use the Generalized EM (GEM) algorithm to speed up. Inference: even for a linear mixture, a closed-form solution is not possible; Monte Carlo simulations are used as a substitute.

- 66. Vocabulary V, term $w_i$; document d is represented by the vector $(f(d, w_i))_{w_i \in V}$, where $f(d, w_i)$ is the number of times $w_i$ occurs in document d. Most f’s are zero for a single document. A monotone component-wise damping function g, such as log or square root, is applied: $(g(f(d, w_i)))_{w_i \in V}$. Document model.
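The damped document representation can be sketched as follows. Term counts f(d, w) over a fixed vocabulary are passed through a monotone damping function g. Square root is the default here. Because log(0) is undefined, `math.log1p` is one possible log-style substitute that keeps zero counts at zero; that substitution is an assumption. The function name is illustrative.

```python
import math
from collections import Counter

def document_vector(doc, vocabulary, damp=math.sqrt):
    """Damped term-count vector (g(f(d, w_1)), ..., g(f(d, w_|V|))).
    f(d, w) is the raw count of w in d; g = damp should be monotone with g(0) = 0."""
    counts = Counter(doc.lower().split())  # missing terms count as 0
    return [damp(counts[w]) for w in vocabulary]

vocab = ["star", "galaxy", "fish"]
print(document_vector("star star star star galaxy", vocab))
print(document_vector("star star star star galaxy", vocab, damp=math.log1p))
```

Damping keeps a term repeated many times from dominating the vector, while the zeros for absent terms stay zero.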
