Introduction to XGBoost

- 1. INTRODUCTION TO XGBOOST
  2018 Fall CS376 Machine Learning, 2018. 9. 20.
  Joonyoung Yi, joonyoung.yi@kaist.ac.kr
  * Slides heavily adapted from the XGBoost author’s slides.

- 2. CONTENTS
  1. Why Is XGBoost Important?
  2. Quick Start
  3. Preliminary
  4. Principle of XGBoost
  5. Limitations and Tips

- 4. WHY IS XGBOOST IMPORTANT?
  • XGBoost is a machine learning library, like NumPy, TensorFlow, and PyTorch.
  • https://xgboost.readthedocs.io/en/latest/index.html
  • XGBoost is a useful tool for achieving good performance in Kaggle and other data science competitions.
  • If you don’t know how to start the term project, I recommend starting with XGBoost. I’ll explain why in the later slides.

- 5. KAGGLE
  • Do you know the startup named Kaggle?
  • It raised $12.76M over 2 rounds and was acquired by Google in 2017.
  • It is a site that hosts numerous machine learning competitions.
  • The organizer (usually a company such as Amazon or Netflix) provides the data (usually a real-life dataset), and the team that best predicts the correct answers from that data wins.
  • Depending on the competition, the winners get a prize or a chance to join the company.

- 6. ONE IMPORTANT RULE IN KAGGLE
  • The winners have to disclose how they won.
  • http://blog.kaggle.com/category/winners-interviews/
  • One of the most popular tools among winners is XGBoost.

- 8. QUICK START
  • This quick start shows how easy and powerful XGBoost is.
  • Let’s solve a real problem.
    1. Problem description
    2. Code (XGBoost solution)
    3. Results
    4. Installation

- 9. PROBLEM DESCRIPTION
  • Pima Indians Diabetes Prediction
  • Predict the onset of diabetes based on diagnostic measures.
  • https://www.kaggle.com/uciml/pima-indians-diabetes-database
  • Tabular data: 768 rows × 9 columns
    • 768 people
    • 8 input features and 1 output
  • Input features (diagnostic measures): $X \in \mathbb{R}^{768 \times 8}$
    • Pregnancies, glucose, blood pressure, skin thickness, insulin, BMI, diabetes pedigree function, age
  • Output: $y \in \mathbb{R}^{768 \times 1}$
    • Whether the person has diabetes (0 or 1).

- 10. CODE

      from xgboost import XGBClassifier

      # train_X, train_y, and test_X come from splitting the dataset
      # (see the repository below for the full script).
      model = XGBClassifier()
      model.fit(train_X, train_y)
      test_y_hat = model.predict(test_X)

  • https://github.com/JoonyoungYi/KAIST-2018-Fall-CS376-Machine-Learning-Intro-XGBoost/tree/master
  • The full script is 20 lines in total.
  • The lines above are the core part of the code.
  • Very simple, isn’t it?

- 11. RESULTS
  • Train error: 12.2 % / Test error: 19.7 %
  • Quite good performance.
  • The numbers vary from trial to trial.
  • Also, this library is really fast.
  • It takes < 10 seconds to fit the model on my laptop.
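The train and test error figures above read as misclassification rates, that is, the fraction of predictions that differ from the true label. A minimal sketch of that calculation follows; `error_rate` is a hypothetical helper (not part of XGBoost), and the labels are made up for illustration.

```python
def error_rate(y_true, y_pred):
    """Fraction of predictions that differ from the true labels."""
    if len(y_true) != len(y_pred):
        raise ValueError("y_true and y_pred must have the same length")
    if len(y_true) == 0:
        raise ValueError("need at least one label")
    wrong = sum(1 for t, p in zip(y_true, y_pred) if t != p)
    return wrong / len(y_true)


# Made-up labels for illustration only (not the Pima results).
y_true = [0, 1, 1, 0, 1]
y_pred = [0, 1, 0, 0, 1]
print(f"error: {error_rate(y_true, y_pred):.1%}")
```

In the quick start, the same helper could be applied to `train_y` and the model's predictions on `train_X`, and likewise for the test split.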

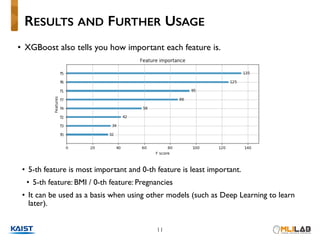

- 12. RESULTS AND FURTHER USAGE
  • XGBoost also tells you how important each feature is.
  • The 5th feature is the most important and the 0th feature is the least important (counting from 0).
  • 5th feature: BMI / 0th feature: Pregnancies
  • This ranking can be used as a basis when building other models (such as deep learning, which we will learn later).
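One way to read such importance scores is to sort the features by score. The sketch below assumes the scores come from the `feature_importances_` attribute of a fitted `XGBClassifier`; `rank_features` is a hypothetical helper, the column names are assumed spellings of the features listed earlier, and the numbers are made up so that only their ordering (BMI highest, Pregnancies lowest) mirrors the slide.

```python
FEATURE_NAMES = [
    "Pregnancies", "Glucose", "BloodPressure", "SkinThickness",
    "Insulin", "BMI", "DiabetesPedigreeFunction", "Age",
]


def rank_features(importances, names=FEATURE_NAMES):
    """Return (index, name, score) tuples sorted from most to least important."""
    if len(importances) != len(names):
        raise ValueError("one importance score per feature is required")
    order = sorted(range(len(names)), key=lambda i: importances[i], reverse=True)
    return [(i, names[i], importances[i]) for i in order]


# With a fitted model you would pass model.feature_importances_ here.
# These scores are made up for illustration; they are not measured results.
illustrative_scores = [0.05, 0.20, 0.08, 0.07, 0.10, 0.25, 0.13, 0.12]
for index, name, score in rank_features(illustrative_scores):
    print(f"feature {index} ({name}): {score:.2f}")
```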

- 13. INSTALLATION
  • Also, it is easy to install with pip.
  • https://en.wikipedia.org/wiki/Pip_(package_manager)
  • Install commands:

      pip install xgboost
      pip install scikit-learn

  • If your machine requires sudo privileges, run the commands with sudo.
  • You can also install it inside a Python virtualenv (or a conda environment).
  • virtualenv: https://virtualenv.pypa.io/en/stable/

- 15. DECISION TREE
  • Input: BMI, age, sex, …
  • Output: whether the person has diabetes
  • [Figure: a decision tree with True or False (1 or 0) in each leaf; the leaves shown are False, True, True.]

- 16. CART
  • Classification and regression tree (CART)
  • The decision rules are the same as in a decision tree.
  • Each leaf contains one score.
  • Recall: a tree is a function that maps the attributes to a score.
  • Input: BMI, age, sex, …
  • Output: whether the person has diabetes
  • [Figure: a tree with a prediction score in each leaf; the leaves shown are -2, -0.1, +1.]

- 17. ENSEMBLE METHODS
  • According to Wikipedia:
    • “Ensemble methods use multiple learning algorithms to obtain better predictive performance than could be obtained from any of the constituent learning algorithms alone.”
    • https://en.wikipedia.org/wiki/Ensemble_learning
  • In other words, any algorithm that combines multiple models (algorithms) to achieve better performance.
  • Examples: bagging and boosting.

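- 18. CART ENSEMBLE
  • CART makes ensembling easier.
  • The prediction for an example is the sum of the scores predicted by each tree.
  • [Figure: two trees, one with leaf scores -2, -0.1, +1 and one with leaf scores -0.9, +0.9; one split shown is BMI < 25. Example predictions: -2 - 0.9 = -2.9 and +1 + 0.9 = +1.9.]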
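The summation in the CART ensemble can be sketched in a few lines. The leaf scores and the `BMI < 25` split come from the slide; everything else (which tree uses which split, the age and glucose thresholds, the two example people) is a made-up assumption for illustration.

```python
def tree_a(person):
    """Hypothetical three-leaf tree using the leaf scores -2, -0.1, +1."""
    if person["bmi"] < 25:
        return -2.0
    return -0.1 if person["age"] < 40 else 1.0


def tree_b(person):
    """Hypothetical two-leaf tree using the leaf scores -0.9, +0.9."""
    return -0.9 if person["glucose"] < 120 else 0.9


def ensemble_score(person, trees):
    """Sum the scores that every tree assigns to the same person."""
    return sum(tree(person) for tree in trees)


trees = [tree_a, tree_b]
low_risk = {"bmi": 22.0, "age": 30, "glucose": 95}    # made-up person
high_risk = {"bmi": 31.0, "age": 55, "glucose": 150}  # made-up person

print(round(ensemble_score(low_risk, trees), 1))   # leaves -2 and -0.9
print(round(ensemble_score(high_risk, trees), 1))  # leaves +1 and +0.9
```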

- 20. PRINCIPLE OF XGBOOST
  • We focus on high-level concepts.
  • We focus on using the library better.
  • I think the detailed algorithm is beyond the scope of this course.
  • If you are curious, refer to the paper for the detailed algorithm.
    • XGBoost: A Scalable Tree Boosting System
    • https://arxiv.org/pdf/1603.02754.pdf
  • As you might have noticed, XGBoost is a CART ensemble model.

- 21. MODEL AND PARAMETERS
  • Model: assuming we have K trees,
    $$\hat{y}_i = \sum_{k=1}^{K} f_k(x_i), \quad f_k \in \mathcal{F}$$
    where $\mathcal{F}$ is the space of functions containing all regression trees.
  • Recall: a regression tree is a function that maps the attributes to a score.
  • Parameters
    • Including the structure of each tree and the scores in the leaves.
    • Or simply use the functions as parameters: $\Theta = \{f_1, f_2, \ldots, f_K\}$
    • Instead of learning weights in $\mathbb{R}^d$, we are learning functions (trees).

- 22. OBJECTIVE FOR TREE ENSEMBLES OF XGBOOST
  • Optimization form:
    $$\min \sum_{i=1}^{N} L(y_i, \hat{y}_i) + \sum_{k=1}^{K} \Omega(f_k)$$
    • $\sum_{i=1}^{N} L(y_i, \hat{y}_i)$: training loss
    • $\sum_{k=1}^{K} \Omega(f_k)$: complexity of the trees (regularizer $\Omega$)
  • What are the possible ways to define $\Omega$?
    • The number of nodes in the tree, or its depth.
    • L2 norm of the leaf weights.
    • L1 norm of the leaf weights.
    • Think about the roles of the L1 norm and the L2 norm.
  • How do we learn?
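To make the two parts of the objective concrete, here is a small sketch that evaluates a training loss plus a per-tree complexity term. It assumes squared error as the loss and a regularizer of the form gamma × (number of leaves) + ½ · lambda · (squared L2 norm of leaf weights) + alpha · (L1 norm of leaf weights); the function names and the numbers are illustrative assumptions, and nothing here is the library's internal code.

```python
def squared_error(y, y_hat):
    """One common choice for the per-example training loss L."""
    return (y - y_hat) ** 2


def omega(leaf_weights, gamma=0.0, reg_lambda=1.0, reg_alpha=0.0):
    """Complexity of one tree: leaf count plus L2 and L1 penalties on leaf weights."""
    n_leaves = len(leaf_weights)
    l2 = sum(w * w for w in leaf_weights)
    l1 = sum(abs(w) for w in leaf_weights)
    return gamma * n_leaves + 0.5 * reg_lambda * l2 + reg_alpha * l1


def objective(y_true, y_pred, trees_leaf_weights, **penalty):
    """Training loss summed over examples plus Omega summed over trees."""
    loss = sum(squared_error(y, p) for y, p in zip(y_true, y_pred))
    complexity = sum(omega(w, **penalty) for w in trees_leaf_weights)
    return loss + complexity


# Leaf scores reused from the CART ensemble example; labels and predictions are made up.
trees_leaf_weights = [[-2.0, -0.1, 1.0], [-0.9, 0.9]]
print(objective([1.0, 0.0], [0.5, 0.5], trees_leaf_weights, gamma=1.0))
```

Raising `gamma` penalizes trees with many leaves, while `reg_lambda` and `reg_alpha` penalize large leaf weights, which is one way to think about the roles of the L2 and L1 norms.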

- 23. HOW DO WE LEARN?
  • We can’t apply Stochastic Gradient Descent (SGD). Why?
    • The variables we optimize are trees, not just numerical vectors.
  • Solution: Boosting (Additive Training)
    • Start from a constant prediction and add a new function each time.

- 24. HOW DO WE LEARN?
  • As I already mentioned, the detailed learning algorithm is beyond the scope of this course.
  • The XGBoost library takes care of the learning for you.
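For intuition only, the idea of additive training (start from a constant prediction, then add one new function per round) can be sketched with one-split trees fitted to the current residuals. This is plain boosting for squared error on a single feature, written under simplifying assumptions; it is not XGBoost's actual algorithm, and `fit_stump`, `boost`, the learning rate, and the data are all made up for illustration.

```python
def fit_stump(xs, residuals):
    """Find the threshold split on a 1-D feature that minimises squared error."""
    thresholds = sorted(set(xs))[1:]  # skipping the minimum keeps the left side non-empty
    if not thresholds:
        raise ValueError("need at least two distinct feature values")
    best = None
    for threshold in thresholds:
        left = [r for x, r in zip(xs, residuals) if x < threshold]
        right = [r for x, r in zip(xs, residuals) if x >= threshold]
        left_mean = sum(left) / len(left)
        right_mean = sum(right) / len(right)
        error = sum((r - left_mean) ** 2 for r in left) + sum(
            (r - right_mean) ** 2 for r in right
        )
        if best is None or error < best[0]:
            best = (error, threshold, left_mean, right_mean)
    _, threshold, left_mean, right_mean = best
    return lambda x: left_mean if x < threshold else right_mean


def boost(xs, ys, n_rounds=20, learning_rate=0.5):
    """Additive training: start from a constant, then add one stump per round."""
    base = sum(ys) / len(ys)
    stumps = []

    def predict(x):
        return base + learning_rate * sum(stump(x) for stump in stumps)

    for _ in range(n_rounds):
        # Each new stump is fitted to what the current ensemble still gets wrong.
        residuals = [y - predict(x) for x, y in zip(xs, ys)]
        stumps.append(fit_stump(xs, residuals))
    return predict


xs = [1, 2, 3, 4, 5, 6]
ys = [1.0, 1.2, 0.9, 3.1, 2.9, 3.0]  # made-up targets
predict = boost(xs, ys)
print([round(predict(x), 2) for x in xs])
```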

- 26. SOLVING REGRESSION PROBLEM
  • The quick start example only shows XGBoost solving a classification problem, but it can also be used for regression problems.

      from xgboost import XGBRegressor

      # Same interface as the classifier; train_y now holds real-valued targets.
      model = XGBRegressor()
      model.fit(train_X, train_y)
      test_y_hat = model.predict(test_X)

- 27. LIMITATIONS OF XGBOOST
  • Can you guess the limitations of XGBoost?
  • 1. It is appropriate only for supervised learning.
  • 2. The more complex the data, the more likely it is not to work properly.
    • Because it is based on decision trees.
  • 3. It is inappropriate for time-series data. Why?
    • Training and test data are not split randomly; historical data is used for training and future data for testing.
    • Since XGBoost is based on decision trees, it will have difficulty predicting in this setting.
    • Do you have any idea how to handle this issue in XGBoost?
  • Figure: http://oracledmt.blogspot.com/2006/03/time-series-forecasting-2-single-step.html
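The time-series split described above (historical data for training, future data for testing) can be sketched as follows. `chronological_split` is a hypothetical helper, the `date` key and the 20 % test fraction are assumptions, and the records are made up.

```python
def chronological_split(records, test_fraction=0.2, time_key="date"):
    """Train on the earliest records and test on the most recent ones."""
    if not 0.0 < test_fraction < 1.0:
        raise ValueError("test_fraction must be between 0 and 1")
    ordered = sorted(records, key=lambda record: record[time_key])
    n_test = max(1, round(len(ordered) * test_fraction))
    return ordered[:-n_test], ordered[-n_test:]


# Made-up daily measurements, deliberately out of order.
# ISO-formatted date strings sort correctly as plain text.
records = [
    {"date": "2018-09-03", "value": 12.0},
    {"date": "2018-09-01", "value": 10.0},
    {"date": "2018-09-05", "value": 15.0},
    {"date": "2018-09-02", "value": 11.0},
    {"date": "2018-09-04", "value": 13.0},
]
train, test = chronological_split(records)
print([r["date"] for r in train], [r["date"] for r in test])
```

Unlike a random split, every test record here is later than every training record, so the evaluation matches how the model would be used for forecasting.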

- 28. ADJUSTING REGULARIZER
  • By setting the L1 and L2 regularization constants, we can push the leaf weights toward a specific tendency.
  • Besides the L1 and L2 regularizers, there are many other options we can adjust.
  • https://xgboost.readthedocs.io/en/latest/index.html
  • The documentation sometimes says which options suit which kinds of data.
  • Read the documentation carefully!
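As a sketch of how these options might be set, the scikit-learn style `XGBClassifier` accepts `reg_alpha` (L1), `reg_lambda` (L2), `gamma`, `max_depth`, and `n_estimators` as keyword arguments. The values below are illustrative assumptions rather than recommendations, and `build_model` is a hypothetical helper.

```python
# Illustrative values only; sensible settings depend on the data.
params = {
    "reg_alpha": 0.1,     # L1 penalty on leaf weights
    "reg_lambda": 1.0,    # L2 penalty on leaf weights
    "gamma": 0.0,         # minimum loss reduction required to make a split
    "max_depth": 3,       # maximum depth of each tree
    "n_estimators": 100,  # number of boosting rounds (trees)
}


def build_model(params):
    """Create a classifier with the chosen regularization settings."""
    # Imported here so the settings above can be inspected without xgboost installed.
    from xgboost import XGBClassifier

    return XGBClassifier(**params)


# Usage, with the same variables as in the quick start:
#   model = build_model(params)
#   model.fit(train_X, train_y)
```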

- 29. ANY QUESTIONS?

- 30. REFERENCES
  1. https://kaggle.com/
  2. http://blog.kaggle.com/category/winners-interviews/
  3. https://homes.cs.washington.edu/~tqchen/pdf/BoostedTree.pdf
  4. https://brunch.co.kr/@snobberys/137
  5. https://www.slideshare.net/rahuldausa/introduction-to-machine-learning-38791937