Java.util.concurrent.concurrent hashmap

- 1. java.util.concurrent.ConcurrentHashMap Srinivasan Raghavan Senior Member of Technical Staff Java Platform Group

- 2. A Little bit of History Lesson ● Is all started with Hashtable.java in jdk1.0, which was reely slow and bad deisgn. ● Wonder what's worst of jdk 1.0 ?. ● Its the Stack which extends vector which is synchronized ● Stack was example of worst usage of inheritance ● As java moved on we had to deal with the Collection.synchronizedCollection ● After the dot com bubble most which survived were companies which handled massive data and massive traffic ● And processors weren't getting any fast cause there is a theoretical limit to processor clock speed . In came multicore . ● Any they started with two , four and like octopus there were octa core processor even in mobile devices by the turn of decade, and far more than that in server environments

- 3. The Dark Knight Begins ● Having multicore doesn't make your code run any faster . So parallel programming was the need of the hour ● So Dough leah went to his cave in new york and became the dark knight of java and released his concurrency framework .. ● One of the major overhauls in java approach towards concurrency .It was released in September 2004 ● java.util.concurrent.* was added which included Blocking Queue, Latches, Barriers , Executor and Concurrent Hash Map ● We will take a deep look into the evolution of the concurrent hash map .

- 4. Concurrenthashmap V5 ● Hashmap is divide into segments uses a concept of lock stripping ● Each segment has a Hash table within it ● Segment extends reentrant lock and the segment gives to volatile reads and guarded writes by locks ● As mentioned by Doug lea in the doc Segment is opportunistic subclass of the Reentrant Lock. ● Sub classing is pathetic .. ● But Doug lea can do it ● The most important to see here subclassing is recommended only if its the same package ● He has only one rule … ie not to break your code . he can break all others ● As concurrenthashmap matures in he will break more rules

- 5. v5 implementation V put(K,V) ● The segment gives to volatile reads and guarded writes by locks ● the segment has volatile count which is incremented in the put method guarded by locks public V put(K key, V value) { if (value == null) throw new NullPointerException(); int hash = hash(key); return segmentFor(hash).put(key, hash, value, false); } V put(K key, int hash, V value, boolean onlyIfAbsent) { lock(); try { int c = count; if (c++ > threshold) // ensure capacity rehash(); HashEntry[] tab = table; int index = hash & (tab.length - 1); HashEntry<K,V> first = (HashEntry<K,V>) tab[index]; HashEntry<K,V> e = first; while (e != null && (e.hash != hash || !key.equals(e.key))) e = e.next; V oldValue; if (e != null) { oldValue = e.value; if (!onlyIfAbsent) e.value = value; } else { oldValue = null; tab[index] = new HashEntry<K,V>(key, hash, first, value); count = c; // write-volatile } return oldValue; } finally { unlock(); } }

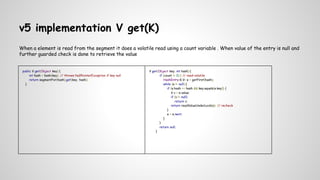

- 6. v5 implementation V get(K) When a element is read from the segment it does a volatile read using a count variable . When value of the entry is null and further guarded check is done to retrieve the value public V get(Object key) { int hash = hash(key); // throws NullPointerException if key null return segmentFor(hash).get(key, hash); } V get(Object key, int hash) { if (count != 0) { // read-volatile HashEntry<K,V> e = getFirst(hash); while (e != null) { if (e.hash == hash && key.equals(e.key)) { V v = e.value; if (v != null) return v; return readValueUnderLock(e); // recheck } e = e.next; } } return null; }

- 7. v5 performance issues ● The v5 implementation is fairly simple but there are some performance issues ● There in v5 because the segments objects are created initially increases memory footprint ● Now with no objects the map there are minimum set of segment objects is created. When more maps are declared it can result in serious performance blots ● While locking for put and in the case non volatile reads as it happens in special cases no spin locks are used . Without spin locks throughput of the map decreases

- 8. In comes the v6 implementation ● It comes with the mysterious yet very powerful UNSAFE mechanics ● sun.misc.Unsafe is like the god particle of the java . It can do a lot native memory stuffs which is illegal in regular java ● and it can be only used inside java libs and not for developers ● A lot of things can be done with sun.misc.Unsafe like volatile reads and contiguous memory allocation ,compare and swap , park threads and unparking threads ● in v6 main change we can see is the Segment is allocated using UNSAFE.putOrderedObject() . This ensure volatile reads of the segment and allocation of the segment memory on the fly ,thus removing the overhead of the v5 implementation

- 9. v6 implementation V get(K) and put(K,V) ● we will see how the segment is accessed ? ● Using the hash key supplied the segment hash is generated ● The segment is accessed using a non volatile UNSAFE.getObject () with the hash . If entry is found further put operation is taken care ● If segment is not found ensuresegment() is called and using UNSAFE.compareAndSwapObject() the segment object is created atomically ● As put operation should be guarded by locks .But instead of using a brute lock as used in the v5 . Spin locks are used using try locks. ● The get operation is done by a volatile read of the segment UNSAFE.getObjectVolatile() and another volatile read of the Hash Node to get the value

- 10. Spin locks The below example is a generic example how a spin locks int retries = -1; while (!tryLock()) { if (++retries > MAX_SCAN_RETRIES) { lock(); break; } } A spin lock can improve performance than the traditional lock chance for acquiring a lock without queueing is good

- 11. The Dark Knight rises ● After all the work with segment for many years, In Concurrenthashmap v8 the segments and reentrant locks are thrown away ● This map usually acts as a binned (bucketed) hash table. Each key-value mapping is held in a Node. Most nodes are instances of the basic Node class with hash, key, value, and next fields. ● However, various subclasses exist: Tree Nodes are arranged in balanced trees, not lists. ● Insertion (via put or its variants) of the first node in an empty bin is performed by just CASing it to the bin. ● This is by far the most common case for put operations under most key/hash distributions ● space required to associate a distinct lock object with each bin is not wasted , so instead use the first node of a bin list itself as a lock. Locking support for these locks relies on built in "synchronized" monitors.

- 12. v8 implementation ● The main disadvantage of per-bin locks is that other update operations on other nodes in a bin list protected by the same lock can stall, for example when user equals() or mapping functions take a long time. ● However, statistically, under random hash codes, this is not a common problem. Ideally, the frequency of nodes in bins follows a Poisson distribution ● Lock contention probability for two threads accessing distinct elements is roughly 1 / (8 #elements) under random hashes. ● For dumb or hostile usages in which multiple keys are designed to have identical hash codes or ones that differs only in masked-out high bits ● when the number of nodes in a bin exceeds a threshold,TreeBins use a balanced tree to hold nodes (a specialized form of red-black trees), bounding search time to O(log N)

- 13. v8 implementation V put(K,V) V get(K) ● if the tab is empty and new node is created by UNSAFE.compareAndSwapObject() ● When there are collisions or the map needs a update synchronized (firstnode) is used ● When the TREEIFY_THRESHOLD is broken the linkedlist is converted in balanced Red Black trees. ● the get operation is fairly straight forward by reading the bin bucket UNSAFE.getObjectVolatile() and traversing through the objects ● But the most significant change in v8 is power to do bulk operations

- 14. Bulk Operations •suppose we want to find the first word that occurs more than 1,000 times. We need to search keys and values String result = map.search(threshold, (k, v) -> v > 1000 ? k : null) •Another usage in filter , consumer which one we saw in streams can be implemented map.forEach(threshold, (k, v) -> v > 1000 ? k + " -> " + v : null, // Filter and transformer System.out::println); •reduce does a filter and accumulator . Like if we want to count how many entries have value > 1000 Long count = map.reduceValues(threshold, v -> v > 1000 ? 1L : null, Long::sum)

![v5 implementation V put(K,V)

● The segment gives to volatile reads and guarded writes by locks

● the segment has volatile count which is incremented in the put method guarded by locks

public V put(K key, V value) {

if (value == null)

throw new NullPointerException();

int hash = hash(key);

return segmentFor(hash).put(key, hash, value, false);

}

V put(K key, int hash, V value, boolean onlyIfAbsent) {

lock();

try {

int c = count;

if (c++ > threshold) // ensure capacity

rehash();

HashEntry[] tab = table;

int index = hash & (tab.length - 1);

HashEntry<K,V> first = (HashEntry<K,V>) tab[index];

HashEntry<K,V> e = first;

while (e != null && (e.hash != hash || !key.equals(e.key)))

e = e.next;

V oldValue;

if (e != null) {

oldValue = e.value;

if (!onlyIfAbsent)

e.value = value;

}

else {

oldValue = null;

tab[index] = new HashEntry<K,V>(key, hash, first, value);

count = c; // write-volatile

}

return oldValue;

} finally {

unlock(); }

}](https://image.slidesharecdn.com/java-150113085256-conversion-gate02/85/Java-util-concurrent-concurrent-hashmap-5-320.jpg)