Lecture_1_Introduction_to_Adversarial_Machine_Learning.pptx

- 1. CS 502 Directed Studies: Adversarial Machine Learning Dr. Alex Vakanski

- 2. 2 CS 502, Fall 2020 Lecture 1 Introduction to Adversarial Machine Learning

- 3. 3 CS 502, Fall 2020 Lecture Outline • Machine Learning (ML) • Adversarial ML (AML) – Adversarial examples • Attack taxonomy • Common adversarial attacks – Noise, semantic attack, FGSM, BIM, PGD, DeepFool, CW attack • Defense against adversarial attacks – Adversarial training, random resizing and padding, detect adversarial examples • Conclusion • References • Other AML resources

- 4. 4 CS 502, Fall 2020 Machine Learning (ML) • ML tasks – Supervised, unsupervised, semi-supervised, self-supervised, meta learning, reinforcement learning • Data collection and preprocessing – Sensors, cameras, I/O devices, etc. • Apply a ML algorithm – Training phase: learn ML model (parameter learning, hyperparameter tuning) – Testing phase (inference): predict on unseen data Slide credit: Binghui Wang: Adversarial Machine Learning — An Introduction

- 5. 5 CS 502, Fall 2020 ML is Ubiquitous Healthcare Picture from: He Xiaoyi – Adversarial Machine Learning

- 6. 6 CS 502, Fall 2020 Adversarial ML • The classification accuracy of GoogLeNet on MNIST under adversarial attacks drops from 98% to 18% (for ProjGrad attack) or 1% (DeepFool attack) Picture from: https://blog.floydhub.com/introduction-to-adversarial-machine-learning/

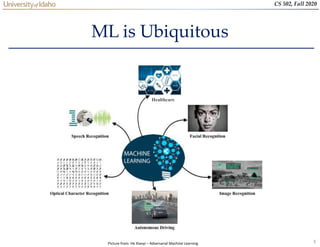

- 7. 7 CS 502, Fall 2020 Adversarial Examples • What do you see? Slide credit: Binghui Wang: Adversarial Machine Learning — An Introduction

- 8. 8 CS 502, Fall 2020 Adversarial Examples • The classifier misclassifies adversarially manipulated images Slide credit: Binghui Wang: Adversarial Machine Learning — An Introduction

- 9. 9 CS 502, Fall 2020 Adversarial Examples • The differences between the original and manipulated images are very small (hardly noticeable to the human eye) Original image Attack image Difference Slide credit: Binghui Wang: Adversarial Machine Learning — An Introduction

- 10. 10 CS 502, Fall 2020 Adversarial Examples • An adversarially perturbed image of a panda is misclassified as a gibbon • To the human eye, the perturbed image looks indistinguishable from the original image • Original image (classified as panda, 57.7% confidence) + small adversarial noise = adversarial image (classified as gibbon, 99.3% confidence) Picture from: https://blog.floydhub.com/introduction-to-adversarial-machine-learning/

- 11. 11 CS 502, Fall 2020 Adversarial Examples • Similar example Picture from: Szegedy (2014) – Intriguing Properties of Neural Networks

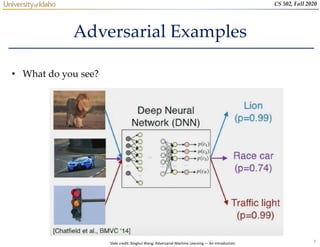

- 12. 12 CS 502, Fall 2020 Adversarial Examples • If a stop sign is adversarially manipulated and it is not recognized by a self-driving car, it can result in an accident Small adversarial noise ? Slide credit: He Xiaoyi – Adversarial Machine Learning

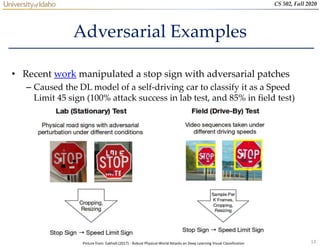

- 13. 13 CS 502, Fall 2020 Adversarial Examples • Recent work manipulated a stop sign with adversarial patches – Caused the DL model of a self-driving car to classify it as a Speed Limit 45 sign (100% attack success in lab test, and 85% in field test) Picture from: Eykholt (2017) - Robust Physical-World Attacks on Deep Learning Visual Classification

- 14. 14 CS 502, Fall 2020 Adversarial Examples • Lab test images for signs with a target class Speed Limit 45 Picture from: Eykholt (2017) - Robust Physical-World Attacks on Deep Learning Visual Classification

- 15. 15 CS 502, Fall 2020 Adversarial Examples • In this example, a 3D-printed turtle is misclassified by a DNN as a rifle (video link)

- 16. 16 CS 502, Fall 2020 Adversarial Examples • A person wearing an adversarial patch is not detected by a person detector model (YOLOv2)

- 17. 17 CS 502, Fall 2020 Adversarial Examples • A “train” in the hallway? Picture from: Yevgeniy Vorobeychik, Bo Li - Adversarial Machine Learning Tutorial

- 18. 18 CS 502, Fall 2020 Adversarial Examples • Non-scientific: a Tesla owner checks if the car can distinguish a person wearing a cover-up from a traffic cone (video link)

- 19. 19 CS 502, Fall 2020 Adversarial Examples • Abusive use of machine learning – Using GANs to generate fake content (a.k.a. deep fakes) Videos of politicians saying things they never said – Barack Obama’s deep fake, or the video in which House Speaker Nancy Pelosi appears drunk Bill Hader’s impersonation of Arnold Schwarzenegger – Can have strong societal implications: elections, automated trolling, court evidence

- 20. 20 CS 502, Fall 2020 Adversarial ML • AML is a research field that lies at the intersection of ML and computer security – E.g., network intrusion detection, spam filtering, malware classification, biometric authentication (facial detection) • ML algorithms in real-world applications mainly focus on increased accuracy – However, few techniques and design decisions focus on keeping the ML models secure and robust • Adversarial ML: ML in adversarial settings – Attack is a major component of AML – Bad actors do bad things Their main objective is not to get detected (change behavior to avoid detection) Slide credit: Binghui Wang: Adversarial Machine Learning — An Introduction

- 21. 21 CS 502, Fall 2020 Attack Taxonomy • Data poisoning (Causative attack): – Attack on the training phase Attackers perturb the training set to fool the model – Insert malicious inputs in the training set – Modify input instances in the training set – Change the labels to training inputs Attackers attempt to influence or corrupt the ML model or the ML algorithm itself Slide credit: Binghui Wang: Adversarial Machine Learning — An Introduction

- 22. 22 CS 502, Fall 2020 Attack Taxonomy • Evasion attack (Exploratory attack): – Attack on the testing phase – Attackers do not tamper with the ML model, but instead craft inputs that cause it to produce incorrect outputs – Evasion attacks are the most common type of attack Slide credit: Binghui Wang: Adversarial Machine Learning — An Introduction

- 23. 23 CS 502, Fall 2020 Evasion Attack • Evasion attack can be further classified into: – White-box attack Attackers have full knowledge about the ML model I.e., they have access to parameters, hyperparameters, gradients, architecture, etc. – Black-box attack Attackers don’t have access to the ML model parameters, gradients, architecture Perhaps they have some knowledge about the used ML algorithm – E.g., attackers may know that a ResNet50 model is used for classification, but they don’t have access to the model parameters Attackers may query the model to obtain knowledge (can get examples) Slide credit: Binghui Wang: Adversarial Machine Learning — An Introduction

- 24. 24 CS 502, Fall 2020 Attack Taxonomy • Depiction of the adversarial attack taxonomy from Alessio's Adversarial ML presentation at FloydHub Evasion Attack Data Poisoning Attack Picture from: https://blog.floydhub.com/introduction-to-adversarial-machine-learning/

- 25. 25 CS 502, Fall 2020 Attack Taxonomy • Each of the above attacks can further be: – Non-targeted attack The goal is to mislead the classifier to predict any label other than the ground truth label Most existing work deals with this goal E.g., perturb an image of a military tank, so that the model predicts any class other than a military tank – Targeted attack The goal is to mislead the classifier to predict a specific target label for an image More difficult E.g., perturb an image of a turtle, so that the model predicts it is a rifle E.g., perturb an image of a Stop sign, so that the model predicts it is a Speed Limit sign

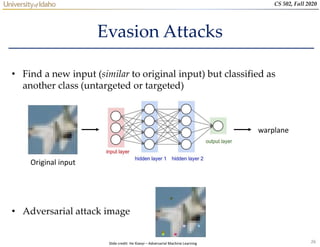

- 26. 26 CS 502, Fall 2020 Evasion Attacks • Find a new input (similar to the original input) that is classified as another class (untargeted or targeted) • Adversarial attack image Original input warplane Slide credit: He Xiaoyi – Adversarial Machine Learning

- 27. 27 CS 502, Fall 2020 Evasion Attacks • How to find adversarial images? – Given an image $x$, which is labeled by the classifier (e.g., LogReg, SVM, or NN) as class $q$, i.e., $C(x) = q$ – Create an adversarial image $x_{adv}$ by adding a small perturbation $\delta$ to the original image, i.e., $x_{adv} = x + \delta$, such that the distance $D(x, x_{adv}) = D(x, x + \delta)$ is minimal – So that the classifier assigns a label to the adversarial image that is different from $q$, i.e., $C(x_{adv}) = C(x + \delta) = t \neq q$ • As an optimization problem: minimize $D(x, x + \delta)$ (the distance between $x$ and $x + \delta$), such that $C(x + \delta) = t$ ($x + \delta$ is classified as target class $t$) and $x + \delta \in [0, 1]^n$ (each element of $x + \delta$ is in $[0, 1]$, to be a valid image)

- 28. 28 CS 502, Fall 2020 Evasion Attacks • Distance metrics between $x$ and $x_{adv}$: $D(x, x_{adv})$ – $\ell_0$ norm: the number of elements $i$ such that $x_i \neq x_{adv,i}$ Corresponds to the number of pixels that have been changed in the image $x_{adv}$ – $\ell_1$ norm: city-block distance, or Manhattan distance $\ell_1 = |x_1 - x_{adv,1}| + |x_2 - x_{adv,2}| + \dots + |x_n - x_{adv,n}|$ – $\ell_2$ norm: Euclidean distance (the square root of the summed squared differences; the mean-squared error equals $\ell_2^2 / n$) $\ell_2 = \sqrt{(x_1 - x_{adv,1})^2 + (x_2 - x_{adv,2})^2 + \dots + (x_n - x_{adv,n})^2}$ – $\ell_\infty$ norm: measures the maximum change to any of the pixels in the $x_{adv}$ image $\ell_\infty = \max(|x_1 - x_{adv,1}|, |x_2 - x_{adv,2}|, \dots, |x_n - x_{adv,n}|)$
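
The four distance metrics on this slide can be computed directly from the perturbation $\delta = x_{adv} - x$. The following is a minimal NumPy sketch; the function name `perturbation_norms` and the toy 2x2 "image" are illustrative assumptions, not part of the lecture.

```python
import numpy as np

def perturbation_norms(x, x_adv):
    """Return the l0, l1, l2, and l-infinity distances between x and x_adv."""
    delta = (x_adv - x).ravel()  # flatten the perturbation to a vector
    return {
        "l0": int(np.count_nonzero(delta)),       # number of changed pixels
        "l1": float(np.sum(np.abs(delta))),       # Manhattan distance
        "l2": float(np.sqrt(np.sum(delta ** 2))), # Euclidean distance
        "linf": float(np.max(np.abs(delta))),     # largest single-pixel change
    }

# Hypothetical 2x2 grayscale image with two perturbed pixels.
x = np.full((2, 2), 0.5)
x_adv = x.copy()
x_adv[0, 0] = 0.8   # changed by +0.3
x_adv[1, 1] = 0.1   # changed by -0.4
norms = perturbation_norms(x, x_adv)
print(norms)
```

For this toy perturbation, two pixels change, so $\ell_0 = 2$, while $\ell_\infty$ reports only the larger of the two changes.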

- 29. 29 CS 502, Fall 2020 Spam Filtering Adversarial Game • Based on cumulative weights assigned to words, an email is classified as a spam or a legitimate message cheap = 1.0 mortgage = 1.5 Total score = 2.5 From: spammer@example.com Cheap mortgage now!!! Feature Weights > 1.0 (threshold) 1. 2. 3. Spam Slide credit: Daniel Lowd - Adversarial Machine Learning

- 30. 30 CS 502, Fall 2020 Spam Filtering Adversarial Game • The spammers adapt to evade the classifier cheap = 1.0 mortgage = 1.5 Eugene = -1.0 Oregon = -1.0 Total score = 0.5 From: spammer@example.com Cheap mortgage now!!! Eugene Oregon Feature Weights < 1.0 (threshold) 1. 2. 3. OK Slide credit: Daniel Lowd - Adversarial Machine Learning

- 31. 31 CS 502, Fall 2020 Spam Filtering Adversarial Game • The classifier is adapted by changing the feature weights cheap = 1.5 mortgage = 2.0 Eugene = -0.5 Oregon = -0.5 Total score = 2.5 Feature Weights > 1.0 (threshold) 1. 2. 3. OK Spam From: spammer@example.com Cheap mortgage now!!! Eugene Oregon Slide credit: Daniel Lowd - Adversarial Machine Learning
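
The three rounds of the spam filtering game can be replayed with a few lines of Python. This is a sketch using the word weights and the 1.0 threshold from the slides; the tokenization and the helper names `spam_score` and `classify` are simplifying assumptions.

```python
def spam_score(message, weights):
    """Sum the weights of all known words in the message (unknown words score 0)."""
    words = message.lower().replace("!", " ").split()
    return sum(weights.get(word, 0.0) for word in words)

def classify(message, weights, threshold=1.0):
    """Label the message as spam when its cumulative score exceeds the threshold."""
    return "spam" if spam_score(message, weights) > threshold else "ok"

# Feature weights before and after the defender adapts (values from the slides).
initial = {"cheap": 1.0, "mortgage": 1.5, "eugene": -1.0, "oregon": -1.0}
retrained = {"cheap": 1.5, "mortgage": 2.0, "eugene": -0.5, "oregon": -0.5}

original = "Cheap mortgage now!!!"
evasive = "Cheap mortgage now!!! Eugene Oregon"

print(classify(original, initial))    # score 2.5 -> spam
print(classify(evasive, initial))     # score 0.5 -> ok (attacker evades)
print(classify(evasive, retrained))   # score 2.5 -> spam (defender adapts)
```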

- 32. 32 CS 502, Fall 2020 Common Adversarial Attacks • Noise attack • Semantic attack • Fast gradient sign method (FGSM) attack • Basic iterative method (BIM) attack • Projected gradient descent (PGD) attack • DeepFool attack • Carlini-Wagner (CW) attack

- 33. 33 CS 502, Fall 2020 Noise Attack • Noise attack – The simplest form of adversarial attack – Noise is a random arrangement of pixels containing no information – In Python, such noise can be generated with a randn() function (available in NumPy and PyTorch, for example) I.e., random numbers from a normal distribution (0 mean and 1 st. dev.) – It represents a non-targeted black-box evasion attack • Example: original image (prediction: gorilla) + noise = noisy image (prediction: fountain) Picture from: https://blog.floydhub.com/introduction-to-adversarial-machine-learning/
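
A noise attack needs no access to the model at all. Below is a minimal NumPy sketch, assuming pixel values in [0, 1]; the scale `sigma`, the seed, and the function name `noise_attack` are illustrative choices. It uses `Generator.standard_normal`, which draws from the same standard normal distribution as `randn()`.

```python
import numpy as np

def noise_attack(x, sigma=0.1, seed=0):
    """Add zero-mean Gaussian noise to an image and clip it back to [0, 1]."""
    rng = np.random.default_rng(seed)
    noise = sigma * rng.standard_normal(x.shape)  # N(0, 1) samples scaled by sigma
    return np.clip(x + noise, 0.0, 1.0)

# Hypothetical 8x8 RGB image filled with mid-gray.
x = np.full((8, 8, 3), 0.5)
x_noisy = noise_attack(x)
print(x_noisy.shape, float(np.abs(x_noisy - x).max()))
```

Whether the noisy image is actually misclassified depends on the model; the attack simply tries a random perturbation and checks the prediction.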

- 34. 34 CS 502, Fall 2020 Semantic Attack • Semantic attack – Hosseini (2017) - On the Limitation of Convolutional Neural Networks in Recognizing Negative Images – Use negative images Reverse all pixel intensities E.g., change the sign of all pixels, if the pixel values are in the range [-1,1] • Example: original image (prediction: gorilla), negative image (prediction: weimaraner) Weimaraner is a dog breed Picture from: https://blog.floydhub.com/introduction-to-adversarial-machine-learning/
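
Creating a negative image is a one-line operation. The sketch below assumes the pixel range is known; the helper name `negative_image` and its `lo`/`hi` parameters are hypothetical. For the range [-1, 1] it reduces to the sign change mentioned on the slide.

```python
import numpy as np

def negative_image(x, lo=0.0, hi=1.0):
    """Reverse pixel intensities for an image whose values lie in [lo, hi]."""
    return lo + hi - x

# Pixels in [0, 1]: dark becomes bright and vice versa.
print(negative_image(np.array([0.0, 0.25, 1.0])))
# Pixels in [-1, 1]: the negative is simply -x.
print(negative_image(np.array([-1.0, 0.5]), lo=-1.0, hi=1.0))
```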

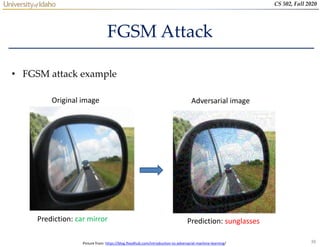

- 35. 35 CS 502, Fall 2020 FGSM Attack • Fast gradient sign method (FGSM) attack – Goodfellow (2015) - Explaining and Harnessing Adversarial Examples • An adversarial image $x_{adv}$ is created by adding perturbation noise to an image $x$: $x_{adv} = x + \epsilon \cdot \mathrm{sign}(\nabla_x \mathcal{L}(h(x, w), y))$ – Notation: input image $x$, cost function $\mathcal{L}$, NN model $h$, NN weights (parameters) $w$, gradient $\nabla$ (the “nabla” symbol), noise magnitude $\epsilon$ – The perturbation noise is the sign of the gradient of the loss function $\mathcal{L}$ with respect to the input image $x$ for the true class label $y$, scaled by $\epsilon$ – This increases the loss for the true class $y$ → the model misclassifies the image $x_{adv}$
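
To make the FGSM update concrete without a deep learning framework, the sketch below applies it to a logistic-regression classifier, for which the input gradient of the cross-entropy loss has the closed form $(\sigma(w \cdot x + b) - y)\,w$. The model, its weights, and the input are made-up assumptions for illustration; for a neural network the gradient would come from automatic differentiation instead.

```python
import numpy as np

def sigmoid(z):
    return 1.0 / (1.0 + np.exp(-z))

def input_gradient(x, y, w, b):
    """Gradient of binary cross-entropy w.r.t. the input x for h(x) = sigmoid(w.x + b)."""
    return (sigmoid(w @ x + b) - y) * w

def fgsm(x, y, w, b, eps):
    """One FGSM step: move every pixel by eps in the direction that increases the loss."""
    x_adv = x + eps * np.sign(input_gradient(x, y, w, b))
    return np.clip(x_adv, 0.0, 1.0)  # keep a valid image

# Hypothetical 3-pixel "image" and model parameters.
w, b = np.array([2.0, -3.0, 1.0]), 0.0
x, y = np.array([0.6, 0.3, 0.5]), 1.0
eps = 0.2

x_adv = fgsm(x, y, w, b, eps)
print(sigmoid(w @ x + b) > 0.5)      # original: predicted class 1
print(sigmoid(w @ x_adv + b) > 0.5)  # adversarial: predicted class 0
```

Because only the sign of the gradient is used, every pixel changes by exactly $\epsilon$ (before clipping), so the perturbation has $\ell_\infty$ norm at most $\epsilon$.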

- 36. 36 CS 502, Fall 2020 FGSM Attack • FGSM is a white-box non-targeted evasion attack – White-box, since we need to know the gradients to create the adversarial image – In the example, the noise magnitude is ε = 0.007 Note: a nematode is a roundworm (a worm, not an insect)

- 37. 37 CS 502, Fall 2020 FGSM Attack • Recall that training NNs is based on the gradient descent algorithm – The values of the network parameters (weights) w are iteratively changed until a minimum of the loss function is reached – Gradients of the loss function with respect to the model parameters ($\partial \mathcal{L} / \partial w$) give the direction and magnitude for updating the parameters – The size of each update step is scaled by the learning rate α Picture from: https://blog.floydhub.com/introduction-to-adversarial-machine-learning/

- 38. 38 CS 502, Fall 2020 FGSM Attack • The sign and magnitude of the gradient give the direction and the slope of the steepest descent – Left image: + and – sign of the gradient – Right image: small, adequate, and large slope of the weight update, based on the magnitude of the gradient – Middle image: small and large α (learning rate) • To minimize the loss function, the weights w are changed in the opposite direction of the gradient, i.e., $w = w - \alpha \, \partial \mathcal{L} / \partial w$ [Figure panels: sign + and sign – directions; large and small α; slope too large, too small, and right]
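
The effect of the learning rate on the update $w = w - \alpha \, \partial \mathcal{L} / \partial w$ can be seen on a one-dimensional toy loss. The quadratic loss $(w - 3)^2$ and the three values of α below are assumptions chosen purely for illustration.

```python
def gradient_descent(grad_fn, w0, alpha, steps):
    """Repeatedly step against the gradient: w <- w - alpha * dL/dw."""
    w = w0
    for _ in range(steps):
        w = w - alpha * grad_fn(w)
    return w

def grad(w):
    """Gradient of the toy loss L(w) = (w - 3)^2, whose minimum is at w = 3."""
    return 2.0 * (w - 3.0)

w_small = gradient_descent(grad, w0=0.0, alpha=0.01, steps=50)  # too small: slow progress
w_good = gradient_descent(grad, w0=0.0, alpha=0.1, steps=50)    # adequate: converges
w_large = gradient_descent(grad, w0=0.0, alpha=1.1, steps=50)   # too large: diverges
print(w_small, w_good, w_large)
```

FGSM reuses the same gradient machinery but differentiates with respect to the input instead of the weights, and steps in the direction that increases the loss.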

- 39. 39 CS 502, Fall 2020 FGSM Attack • FGSM attack example Prediction: car mirror Prediction: sunglasses Original image Adversarial image Picture from: https://blog.floydhub.com/introduction-to-adversarial-machine-learning/

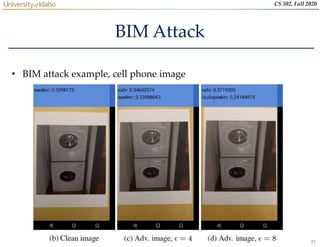

- 40. 40 CS 502, Fall 2020 BIM Attack • Basic iterative method (BIM) attack – Kurakin (2017) Adversarial Examples in the Physical World • BIM is a variant of FGSM: it repeatedly adds noise to the image x in multiple iterations, in order to cause misclassification – The number of iteration steps is $t$, and $\alpha$ is the amount of noise that is added at each step: $x_{adv}^{t} = x^{t-1} + \alpha \cdot \mathrm{sign}(\nabla_x \mathcal{L}(h(x^{t-1}), y))$ – The perturbed image after $t$ iterations is $x_{adv}^{t}$ – Multiple steps of adding noise increase the chances of misclassifying the image – Compare to FGSM: $x_{adv} = x + \epsilon \cdot \mathrm{sign}(\nabla_x \mathcal{L}(h(x), y))$
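
BIM can be sketched as a loop around the FGSM step. As before, a toy logistic-regression model with an analytic input gradient stands in for the neural network, and all parameter values are assumptions. The clipping after each step, which keeps the result within ε of the original image and within the valid pixel range, follows Kurakin et al. and is not shown in the formula on the slide.

```python
import numpy as np

def sigmoid(z):
    return 1.0 / (1.0 + np.exp(-z))

def input_gradient(x, y, w, b):
    """Gradient of binary cross-entropy w.r.t. x for h(x) = sigmoid(w.x + b)."""
    return (sigmoid(w @ x + b) - y) * w

def bim(x, y, w, b, eps, alpha, steps):
    """Basic iterative method: repeated small sign-gradient steps of size alpha."""
    x_adv = x.copy()
    for _ in range(steps):
        x_adv = x_adv + alpha * np.sign(input_gradient(x_adv, y, w, b))
        x_adv = np.clip(x_adv, x - eps, x + eps)  # stay within eps of the original
        x_adv = np.clip(x_adv, 0.0, 1.0)          # stay a valid image
    return x_adv

# Hypothetical model and input (same toy setup as a single-step attack would use).
w, b = np.array([2.0, -3.0, 1.0]), 0.0
x, y = np.array([0.6, 0.3, 0.5]), 1.0
eps = 0.2

x_adv = bim(x, y, w, b, eps=eps, alpha=0.05, steps=4)
print(sigmoid(w @ x + b) > 0.5, sigmoid(w @ x_adv + b) > 0.5)
```

For a linear model the gradient sign never changes, so four steps of 0.05 end at the same point as one FGSM step of 0.2; for a non-linear network the gradient is re-evaluated at every step, which is where the iterative variant gains its strength.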

- 41. 41 CS 502, Fall 2020 BIM Attack • BIM attack example, cell phone image

- 42. 42 CS 502, Fall 2020 PGD Attack • Projected gradient descent (PGD) attack – Madry (2017) Towards Deep Learning Models Resistant to Adversarial Attacks • PGD is an extension of BIM (and FGSM), where after each step of perturbation, the adversarial example is projected back onto the $\epsilon$-ball of $x$ using a projection function $\Pi$: $x_{adv}^{t} = \Pi_\epsilon(x^{t-1} + \alpha \cdot \mathrm{sign}(\nabla_x \mathcal{L}(h(x^{t-1}), y)))$ • Different from BIM, PGD uses random initialization for $x$, by adding random noise from a uniform distribution with values in the range $[-\epsilon, \epsilon]$ • PGD is regarded as the strongest first-order attack – First-order attack means that the adversary uses only the gradients of the loss function with respect to the input
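
The two ingredients that distinguish PGD, the random start and the projection $\Pi_\epsilon$, are sketched below. The sketch assumes an $\ell_\infty$ ball, for which projection is element-wise clipping (the slide does not fix the norm), and again uses a made-up logistic-regression model in place of a neural network.

```python
import numpy as np

def sigmoid(z):
    return 1.0 / (1.0 + np.exp(-z))

def input_gradient(x, y, w, b):
    """Gradient of binary cross-entropy w.r.t. x for h(x) = sigmoid(w.x + b)."""
    return (sigmoid(w @ x + b) - y) * w

def project(x_adv, x, eps):
    """Project onto the l-infinity eps-ball around x, then onto valid pixels [0, 1]."""
    return np.clip(np.clip(x_adv, x - eps, x + eps), 0.0, 1.0)

def pgd(x, y, w, b, eps, alpha, steps, rng):
    """PGD: random start inside the eps-ball, then projected sign-gradient steps."""
    x_adv = project(x + rng.uniform(-eps, eps, size=x.shape), x, eps)
    for _ in range(steps):
        x_adv = x_adv + alpha * np.sign(input_gradient(x_adv, y, w, b))
        x_adv = project(x_adv, x, eps)
    return x_adv

# Hypothetical model and input.
w, b = np.array([2.0, -3.0, 1.0]), 0.0
x, y = np.array([0.6, 0.3, 0.5]), 1.0
eps = 0.2

x_adv = pgd(x, y, w, b, eps=eps, alpha=0.05, steps=10, rng=np.random.default_rng(0))
print(sigmoid(w @ x + b) > 0.5, sigmoid(w @ x_adv + b) > 0.5)
```

In practice the attack is often restarted from several random initial points, keeping the adversarial example with the highest loss.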

- 43. 43 CS 502, Fall 2020 PGD Attack • PGD attack example Prediction: baboon Prediction: Egyptian cat Egyptian cat Original image Adversarial image Picture from: https://blog.floydhub.com/introduction-to-adversarial-machine-learning/

- 44. 44 CS 502, Fall 2020 PGD Attack • Gradient approaches can also be designed as targeted white-box attacks – The added perturbation noise aims to minimize the loss function of the image for a specific class label In this example, the target class is maraca The iterations loop doesn’t break until the image is classified into the target class, or until the maximum number of iterations is reached Prediction: hippopotamus Prediction: maraca Maraca Original image Adversarial image Picture from: https://blog.floydhub.com/introduction-to-adversarial-machine-learning/

- 45. 45 CS 502, Fall 2020 PGD Attack • For a targeted attack, if the target class label is denoted $t$, adversarial examples are created by using $x_{adv}^{t} = \Pi_\epsilon(x^{t-1} - \alpha \cdot \mathrm{sign}(\nabla_x \mathcal{L}(h(x^{t-1}), t)))$ – I.e., it is based on minimizing the loss function with respect to the target class $t$ – This is opposite to non-targeted attacks, which maximize the loss function with respect to the true class label
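
A targeted variant only flips the sign of the step and swaps the true label for the target label. The sketch below assumes a toy linear softmax classifier (logits $Wx + b$), whose cross-entropy input gradient is $W^\top(\mathrm{softmax}(Wx + b) - e_t)$; the weights, input, and step sizes are illustrative. The random start is omitted for brevity, and the loop stops early once the target class is predicted, as described on the previous slide.

```python
import numpy as np

def softmax(z):
    e = np.exp(z - np.max(z))  # subtract the max for numerical stability
    return e / e.sum()

def targeted_pgd(x, target, W, b, eps, alpha, steps):
    """Descend the loss of the target class, projecting back onto the eps-ball."""
    onehot = np.zeros(W.shape[0])
    onehot[target] = 1.0
    x_adv = x.copy()
    for _ in range(steps):
        grad = W.T @ (softmax(W @ x_adv + b) - onehot)  # d(cross-entropy)/dx
        x_adv = x_adv - alpha * np.sign(grad)           # minus sign: minimize the loss
        x_adv = np.clip(np.clip(x_adv, x - eps, x + eps), 0.0, 1.0)
        if np.argmax(W @ x_adv + b) == target:          # stop once the target is hit
            break
    return x_adv

# Hypothetical 3-class linear model on a 3-pixel input.
W, b = 4.0 * np.eye(3), np.zeros(3)
x = np.array([0.7, 0.2, 0.45])
eps, target = 0.2, 2

x_adv = targeted_pgd(x, target, W, b, eps=eps, alpha=0.05, steps=10)
print(int(np.argmax(W @ x + b)), int(np.argmax(W @ x_adv + b)))
```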

- 46. 46 CS 502, Fall 2020 DeepFool Attack • DeepFool attack – Moosavi-Dezfooli (2015) DeepFool: A Simple and Accurate Method to Fool Deep Neural Networks • DeepFool is an untargeted white-box attack – It misclassifies the image with the minimal amount of perturbation possible – There is no visible change to the human eye between the two images • Example: original image (prediction: cannon), adversarial image (prediction: projector), and their difference Picture from: https://blog.floydhub.com/introduction-to-adversarial-machine-learning/

- 47. 47 CS 502, Fall 2020 DeepFool Attack • Image example – Original image: whale – Both DeepFool and FGSM perturb the image so that it is classified as turtle – DeepFool leads to a smaller perturbation [Figure panels: DeepFool (prediction: turtle) with difference image; FGSM (prediction: turtle) with difference image]

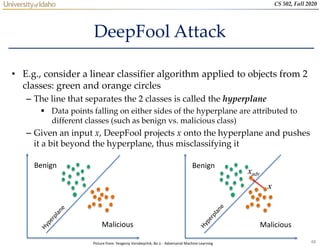

- 48. 48 CS 502, Fall 2020 DeepFool Attack • E.g., consider a linear classifier algorithm applied to objects from 2 classes: green and orange circles – The line that separates the 2 classes is called the hyperplane (decision boundary) Data points falling on either side of the hyperplane are attributed to different classes (such as benign vs. malicious class) – Given an input x, DeepFool projects x onto the hyperplane and pushes it a bit beyond the hyperplane, thus misclassifying it [Figure: benign and malicious regions, with x on one side of the hyperplane and xadv just across it] Picture from: Yevgeniy Vorobeychik, Bo Li - Adversarial Machine Learning
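
For a binary linear classifier $f(x) = w \cdot x + b$, the projection described above has a closed form: the smallest perturbation that reaches the hyperplane is $r = -\frac{f(x)}{\lVert w \rVert_2^2}\, w$. The sketch below applies it with a small overshoot so the point lands just past the boundary; the classifier, the input, and the 0.02 overshoot value are assumptions for illustration.

```python
import numpy as np

def deepfool_linear(x, w, b, overshoot=0.02):
    """Project x onto the hyperplane w.x + b = 0 and push it slightly beyond."""
    f = w @ x + b
    r = -f / (w @ w) * w               # minimal l2 perturbation to reach the boundary
    return x + (1.0 + overshoot) * r   # overshoot so the label actually flips

# Hypothetical 2-D classifier: positive side = one class, negative side = the other.
w, b = np.array([1.0, 1.0]), -1.0
x = np.array([1.0, 1.0])

x_adv = deepfool_linear(x, w, b)
print(w @ x + b, w @ x_adv + b)        # the sign flips from positive to negative
print(np.linalg.norm(x_adv - x))       # length of the perturbation
```

The perturbation is perpendicular to the hyperplane, which is why it is the shortest path to a different label in the $\ell_2$ sense.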

- 49. 49 CS 502, Fall 2020 DeepFool Attack • For a multiclass problem with linear classifiers, there are multiple hyperplanes that separate an input x from other classes – E.g., an example with 4 classes is shown in the image below • DeepFool finds the closest hyperplane to the input x0, in this case the hyperplane ℱ3 (most similar class of the other 3 classes) – Then, it projects the input onto that hyperplane and pushes it a little beyond it

- 50. 50 CS 502, Fall 2020 DeepFool Attack • For non-linear classifiers (such as neural networks), the authors perform several iterations of adding perturbations to the image – At each iteration, the classifier function is linearized around the current image, and a minimal perturbation is calculated – The algorithm stops when the predicted class of the image changes to a label other than the true class

- 51. 51 CS 502, Fall 2020 Carlini Wagner (CW) Attack • Carlini-Wagner (CW) attack – Carlini (2017) Towards Evaluating the Robustness of Neural Networks • The initial formulation for creating adversarial examples is difficult to solve • Carlini and Wagner propose a reformulation of it that is solvable

- 52. 52 CS 502, Fall 2020 Carlini Wagner (CW) Attack • The authors considered several variants for the function f • The best results were obtained by f6

- 53. 53 CS 502, Fall 2020 Carlini Wagner (CW) Attack • Results on the MNIST dataset 𝐿2 attack 𝐿0 attack 𝐿∞ attack

- 54. 54 CS 502, Fall 2020 Evasion Attacks on Black-Box Models • Adversarial example transferability – Cross-model transferability: the same adversarial example is often misclassified by a variety of classifiers with different architectures – Cross-training set transferability: the same adversarial example is often misclassified by classifiers trained on different subsets of the training data • Therefore, an attacker can take the following steps to reverse-engineer the classifier: 1. Train their own (white-box) substitute model 2. Generate adversarial samples 3. Apply the adversarial samples to the target ML model Slide credit: Binghui Wang: Adversarial Machine Learning — An Introduction

- 55. 55 CS 502, Fall 2020 Defense Against Adversarial Attacks • Adversarial samples can cause any ML algorithm to fail – However, they can be used to build more accurate and robust models • AML is a two-player game: – Attackers aim to produce strong adversarial examples that evade a model with high confidence while requiring only a small perturbation – Defenders aim to produce models that are robust to adversarial examples (i.e., the models don’t have adversarial examples, or the adversaries cannot find them easily) • Defense strategies against adversarial attacks include: – Adversarial training – Detecting adversarial examples – Gradient masking – Robust optimization (regularization, certified defenses) • A list of adversarial defenses can be found at this link

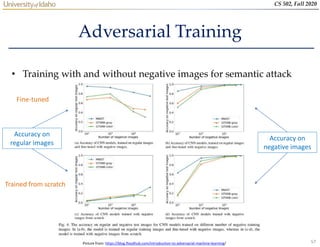

- 56. 56 CS 502, Fall 2020 Adversarial Training • Learning the model parameters using adversarial samples is referred to as adversarial training • The training dataset is augmented with adversarial examples produced by known types of attacks – For each training input, add an adversarial example • However, if a model is trained only on adversarial examples, its accuracy on regular examples will drop significantly • Possible strategies: – Train the model from scratch using regular and adversarial examples – Train the model on regular examples and afterward fine-tune with adversarial examples
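
The first strategy (training from scratch on regular plus adversarial examples) can be sketched with a small logistic-regression model. Everything here is an illustrative assumption: the six-point dataset, the use of FGSM as the known attack, and the hyperparameters. In each epoch, adversarial examples are regenerated against the current model and appended to the clean batch.

```python
import numpy as np

def sigmoid(z):
    return 1.0 / (1.0 + np.exp(-z))

def fgsm_batch(X, y, w, b, eps):
    """FGSM for every row of X against the logistic-regression model (w, b)."""
    grad = (sigmoid(X @ w + b) - y)[:, None] * w[None, :]
    return np.clip(X + eps * np.sign(grad), 0.0, 1.0)

def adversarial_training(X, y, eps=0.1, lr=1.0, epochs=500):
    """Gradient descent on the clean data augmented with fresh FGSM examples."""
    w, b = np.zeros(X.shape[1]), 0.0
    for _ in range(epochs):
        X_adv = fgsm_batch(X, y, w, b, eps)    # attack the current model
        X_aug = np.vstack([X, X_adv])          # one adversarial example per input
        y_aug = np.concatenate([y, y])
        err = sigmoid(X_aug @ w + b) - y_aug   # d(loss)/d(logit)
        w = w - lr * (X_aug.T @ err) / len(y_aug)
        b = b - lr * err.mean()
    return w, b

def accuracy(X, y, w, b):
    return float(np.mean((sigmoid(X @ w + b) > 0.5) == (y == 1)))

# Hypothetical 2-D dataset with two well-separated classes.
X = np.array([[0.1, 0.2], [0.2, 0.1], [0.2, 0.2],
              [0.8, 0.9], [0.9, 0.8], [0.8, 0.8]])
y = np.array([0.0, 0.0, 0.0, 1.0, 1.0, 1.0])

w, b = adversarial_training(X, y)
clean_acc = accuracy(X, y, w, b)
adv_acc = accuracy(fgsm_batch(X, y, w, b, eps=0.1), y, w, b)
print(clean_acc, adv_acc)
```

On this toy data the classes stay separable even after an ε = 0.1 perturbation, so the model can fit both clean and adversarial points; on real image data there is typically a trade-off between the two accuracies.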

- 57. 57 CS 502, Fall 2020 Adversarial Training • Training with and without negative images for semantic attack Accuracy on regular images Accuracy on negative images Fine-tuned Trained from scratch Picture from: https://blog.floydhub.com/introduction-to-adversarial-machine-learning/

- 58. 58 CS 502, Fall 2020 Adversarial Training • The plots show the cross-entropy loss values for standard and adversarial training on MNIST and CIFAR10 datasets while creating adversarial examples using the PGD attack (Madry, 2018) – 20 runs are shown, each starting at a random point within the perturbation range $[-\epsilon, \epsilon]$ – The final loss values on adversarially trained models are much smaller than on the standard-trained models Picture from: Madry (2018) Towards Deep Learning Models Resistant to Adversarial Attacks

- 59. 59 CS 502, Fall 2020 Random Resizing and Padding • Randomly resizing the input image and applying random padding on all four sides (randomization applied at inference time) has been shown to improve the robustness to adversarial attacks – Xie (2018) – Mitigating Adversarial Effects Through Randomization
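
A minimal NumPy sketch of the randomization step follows. The nearest-neighbor resize, the zero padding, and the sizes used (8 to 12 pixels) are simplifying assumptions made to keep the example dependency-free; they are not the exact settings from Xie et al.

```python
import numpy as np

def random_resize_and_pad(img, out_size, rng):
    """Resize a square image to a random size below out_size, then zero-pad
    at a random offset so the output is always out_size x out_size."""
    h, w = img.shape[:2]
    new_size = int(rng.integers(h, out_size))   # random size in [h, out_size)
    # Nearest-neighbor resize: pick a source row/column for every target index.
    rows = np.arange(new_size) * h // new_size
    cols = np.arange(new_size) * w // new_size
    resized = img[rows][:, cols]
    # Split the leftover space randomly between the four sides.
    pad_total = out_size - new_size
    top = int(rng.integers(0, pad_total + 1))
    left = int(rng.integers(0, pad_total + 1))
    pad = [(top, pad_total - top), (left, pad_total - left)]
    pad += [(0, 0)] * (img.ndim - 2)            # leave the channel axis untouched
    return np.pad(resized, pad, mode="constant")

rng = np.random.default_rng(0)
img = rng.random((8, 8, 3))                     # hypothetical 8x8 RGB image
out = random_resize_and_pad(img, out_size=12, rng=rng)
print(out.shape)
```

Because the resize factor and padding offsets change on every call, an attacker cannot rely on a fixed pixel alignment between the crafted perturbation and the input the classifier actually sees.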

- 60. 60 CS 502, Fall 2020 Detecting Adversarial Examples • A body of work focused on distinguishing adversarial examples from regular clean examples – If the defense method detects that an input example is adversarial, the classifier will refuse to predict its class label • Example detection defense methods – Kernel Density (KD) detector based on Bayesian uncertainty features Feinman (2017) – Detecting Adversarial Samples from Artifacts – Local Intrinsic Dimensionality (LID) of adversarial subspaces Ma (2018) - Characterizing Adversarial Subspaces Using Local Intrinsic Dimensionality – Adversary detection networks Metzen (2017) On detecting adversarial perturbations

- 61. 61 CS 502, Fall 2020 Gradient Masking • Gradient masking defense methods deliberately hide the gradient information of the model – Since most attacks are based on the model’s gradient information • Distillation defense – changes the scaling of the last hidden layer in NNs, hindering the calculation of gradients – Papernot (2016) Distillation as a defense to adversarial perturbations against deep neural networks • Input preprocessing by discretization of image’s pixel values, or resizing and cropping, or smoothing – Buckman (2018) Thermometer encoding: One hot way to resist adversarial examples • DefenseGAN – uses a GAN model to transform perturbed images into clean images – Samangouei (2017) Defense-GAN: Protecting classifiers against adversarial attacks using generative models

- 62. 62 CS 502, Fall 2020 Robust Optimization • Robust optimization aims to evaluate, and improve, the model robustness to adversarial attacks – Consequently, learn model parameters that minimize the misclassification of adversarial examples • Regularization methods – train the model by penalizing large values of the parameters, or large values of the gradients – Cisse (2017) Parseval networks: Improving robustness to adversarial examples • Certified defenses – for a given dataset and model, find the lower bound of the minimal perturbation: the model will be safe against any perturbations smaller than the lower bound – Raghunathan (2018) Certified defenses against adversarial examples

- 63. 63 CS 502, Fall 2020 Conclusion • ML algorithms and methods are vulnerable to many types of attacks • Adversarial examples transfer between ML models – I.e., across models with different architectures and across models trained on different training sets • Adversarial examples can be leveraged to improve the performance or the robustness of ML models

- 64. 64 CS 502, Fall 2020 References 1. Introduction to Adversarial Machine Learning – blog post by Arunava Chakraborty 2. Binghui Wang: Adversarial Machine Learning — An Introduction 3. Daniel Lowd, Adversarial Machine Learning 4. Yevgeniy Vorobeychik, Bo Li, Adversarial Machine Learning (Tutorial)

- 65. 65 CS 502, Fall 2020 Other AML Resources • Cleverhans - a repository from Google that implements latest research in AML – The library is being updated to support TensorFlow2, PyTorch, and Jax • Adversarial Robustness Toolbox - a toolbox from IBM that implements state-of-the-art attacks and defenses – The algorithms are framework-independent, and support TensorFlow, Keras, PyTorch, MXNet, XGBoost, LightGBM, CatBoost, etc. • ScratchAI – a smaller AML library developed in PyTorch, and explained in this blog post • Robust ML Defenses - list of adversarial defenses with code • AML Tutorial – by Bo Li, Dawn Song, and Yevgeniy Vorobeychik • Nicholas Carlini’s website
