MongoDB Revised Sharding Guidelines MongoDB 3.x_Kimberly_Wilkins

- 1. Percona Live 2016 • Updated Sharding Guidelines in MongoDB 3.x and Storage Engine Considerations • Kimberly Wilkins, Principal Engineer - Databases, Rackspace/ObjectRocket • www.linkedin.com/in/wilkinskimberly, @dba_denizen, kimberly.wilkins@rackspace.com

- 2. My Background • 18+ years working on various database platforms • Mainly Oracle (DBA, RAC, Enterprise Manager, GoldenGate Replication, Data Guard, Database Vault, Exadata) • MongoDB, NoSQL, and Big Data infrastructure and technologies at OR • Industries: early online auto auctions, gaming, social media • Specialties: re-architecting enterprise db environments, infrastructure, implementations, RAC, replication, system kernels, database storage • Re-engineered the database infrastructure for SWTOR (Star Wars: The Old Republic MMO game)

- 3. Overview - Sharding • What is Sharding? • Why Shard? • When to Shard? When not to Shard? • Sharding Process • Selecting <Good> Shard Keys • Specific Tips and Examples, Managing Shards and Scaling • Radical Ideas (?) and Storage Engine Considerations

- 4. Sharding - What is it? • Sharding = Horizontal Scaling, Partitioning • Scale Out: add physical or virtual hosts • Add supporting network and app layers • Redundancy • Flexible, Scalable Architectures • Add Resources on the fly • HA, DR • Fault Tolerant Clusters • Many Different Sources, Types

- 5. Commodity Hardware vs. Big Iron • Multiple smaller hosts or Virtuals/Containerization • Larger Single Servers with Massive CPU, RAM, SAN’s

- 7. Out (Horizontal) vs Up (Vertical) • Multiple smaller hosts or Virtuals/Containerization • Larger Single Servers with Massive CPU, RAM, SAN’s • Out NOT Up, more smaller not fewer BIGGER

- 8. Why Would You Need or Want to Shard? • Scalability, Performance, High Availability, Redundancy

- 9. High Availability Matters; Redundancy Matters. Without a way to post, creep and comment on things within the world's most popular social network, many turned to Twitter with their updates. PANIC!! “#facebookdown day 1, minute 3: we still have electricity, poppa has been hoarding antibiotics and microbrews. We're gonna ride this out.” “There are people on the streets hurling printed-out pics of their kids at strangers bellowing ‘Like them. Like them.’ #facebookdown”

- 10. Why Do it? Big Data Requirements

- 11. Now Internet of All Things …

- 12. IoT, IoE -Internet of Things, Internet of Everything $$

- 13. Why to Shard? … Why NoSQL? • Faster, more flexible development - 24% • Lower software, hardware, and deployment $$ - 21% • Performance - faster writes, faster reads • Developers - “schemaless”, cool toys • More devs than DBAs • Variety of NoSQL Technologies

- 14. RDBMS vs. NoSQL

  | RDBMS | NoSQL |
  | --- | --- |
  | records | documents |
  | tables | collections, buckets, tables |
  | rows | fields |
  | set data types | flexible data types |
  | rigid schemas, structured data | unstructured & structured data |
  | primary keys | document or ObjectIds |
  | normalized | de-normalized |
  | referential integrity | duplicated data is OK |
  | joins | index intersections, partials |

- 15. When To Shard? • Need Better Performance • Need Additional Write Scopes • App development today => Think ahead, expect growth • EARLY – Shard BEFORE You Run Out of Resources • Have Different Use cases • Best Tool for the Job - aka Polyglot Persistence

- 18. How to Shard? • Architectural Overview • General Process and Steps for Sharding • Shard Key Selection • Details, Examples, and Tips • Managing Shards and Replication • Radical ideas and Storage Engine Consideration

- 19. Basic MongoDB Architecture • Single Replica Set: Primary, Secondary, Secondary, connected by Heartbeat

- 20. 3.2 Sharded Cluster • Client Drivers • MongoS Tier (Router): four MongoS instances • Config Servers (Metadata): Config 1, Config 2, Config 3, run as a Replica Set • MongoD Tier (Replica Sets): Shard 1, Shard 2, Shard 3, each with one Primary and two Secondaries

- 21. Shard Key Considerations • Good key, good performance. Bad key, bad performance. • NO PERFECT shard key: trade-offs (users/social apps) • Shard key: present in all docs, immutable ** • Shard key: used in queries, so know your query patterns • Easily divisible for balanced chunks; increase cardinality • Consider compound keys to better limit the return set • Shard early, shard often: it is impactful, so don't wait

- 22. ASK <Additional> QUESTIONS!!! • What does your app do? How does it work? • More read heavy? More write heavy? Balanced 50/50? • Is one activity more important than others? E.g. we write a lot, but we make our $ from people querying • Expected growth patterns: per week? per month? per year? • Busy times of day? week? month? year? • Bulk loads/deletes? Ever? When? • Current pain or performance problem areas?

- 23. How to Shard - General Steps • Set the profiler to ALL operations for a REPRESENTATIVE time period • Types of queries, # per namespace • Patterns and predicates via aggregations • Check for nulls: NO nulls allowed in shard keys • Consider compound keys to limit the return set • Check cardinality on secondaries (less hurtful!!) • NO PERFECT shard key: trade-offs (users/social apps)

- 24. How to Shard – Specific Tasks • Perform Profiling and Query Pattern Analysis • Select the BEST option for the Shard Key • Create the Required Shard Key Index • Disable the Balancer • Enable Sharding at The DB level • Shard the Collection / Add Shards • Re-enable the Balancer

- 25. Sample Shard Key Evaluation Queries/Aggs • **Run Queries and Aggregations against unused Secondaries** • SECONDARY> db.events.new.aggregate([{$project:{"BoxId":1}},{ $group: { _id: "$BoxId"} },{ $group: { _id: 1, count: { $sum: 1 } } }],{allowDiskUse:true}) • { "_id" : 1, "count" : 3303464 } ** Note good cardinality here ** • SECONDARY> db.events.new.aggregate([{$project:{"BoxId":1}},{$group: { _id:"$BoxId",number : {$sum: 1}}},{$sort:{number:-1}},{$limit:20}],{allowDiskUse:true}) • { "_id" : "pnx-xxxxxxxx.003", "number" : 46889 } • { "_id" : "jhx-xxxxxxxx.002", "number" : 23644 } • { "_id" : "3tq9-xxxxxxxx.001", "number" : 17769 } • Look for NULLS and for DISTINCT values • Look at sample values of documents and fields near the FRONT and BACK of the collection
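The same three checks (distinct count, most frequent values, nulls) can be prototyped away from the cluster on a small exported sample. The following is a minimal sketch in plain JavaScript, not a mongo shell command; the helper name `evaluateShardKey` and the sample documents are hypothetical and only illustrate the idea.

```javascript
// Hypothetical helper: summarize a candidate shard key field over sample docs.
function evaluateShardKey(docs, field, topN) {
  const counts = new Map();
  let missingOrNull = 0;
  for (const doc of docs) {
    const value = doc[field];
    if (value === null || value === undefined) {
      missingOrNull++; // nulls or missing values rule the field out as a shard key
      continue;
    }
    counts.set(value, (counts.get(value) || 0) + 1);
  }
  // Most frequent values first, mirroring $sort: {number: -1} followed by $limit.
  const top = [...counts.entries()]
    .sort((a, b) => b[1] - a[1])
    .slice(0, topN)
    .map(([value, number]) => ({ _id: value, number: number }));
  return { cardinality: counts.size, missingOrNull: missingOrNull, top: top };
}

// Made-up sample data, for illustration only.
const sample = [
  { BoxId: "a.001" }, { BoxId: "a.001" }, { BoxId: "a.001" },
  { BoxId: "b.002" }, { BoxId: "b.002" },
  { BoxId: "c.003" }, { BoxId: null }, {},
];
const report = evaluateShardKey(sample, "BoxId", 2);
console.log(JSON.stringify(report));
```

A high `cardinality` relative to the document count and a flat `top` list suggest an evenly divisible key; any non-zero `missingOrNull` needs fixing before sharding.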

- 26. Another Shard Key Aggregation example • Run aggregation query to find the most common reference id (rid) values and sort to give you the top 5. • Run against the PROFILE collection and can run on SECONDARIES of busy systems to prevent impact to your application that works via primaries. SECONDARY> db.items.aggregate([{$project:{rid:1}}, {$group:{_id:"$rid",count:{$sum:1}}},{$sort: {count:-1}},{$limit:5}])

- 27. Another Shard Key Aggregation example - cont'd { "_id" : ObjectId("f58400d9a5e140d83af22035"), "count" : 1719248 }, { "_id" : ObjectId("f1430058d66d4d2861c1f435"), "count" : 1618900 }, { "_id" : ObjectId("eb80103780289205d2ed1645"), "count" : 1205436 }, { "_id" : ObjectId("ee220058d66d4d2853495435"), "count" : 1194683 }, { "_id" : ObjectId("cd0c103780289205fe7bb845"), "count" : 1158741 }

- 28. Actual Sample Sharding Commands • Shard Key Selection Analysis and Considerations • Create the required index: use users; db.users.ensureIndex( {"_id" : "hashed"},{background:true} ); • Enable sharding at the db level: use admin; db.runCommand( {enablesharding: "users"} ); • Shard the collection: db.adminCommand( { shardCollection : "users.users", key : {"_id":"hashed"} } );

- 29. Pre-Sharding for Very Active, Larger Collections • Connect to a 'non-real' mongo shell • Use JavaScript to create JavaScript for the desired goals • Start a screen or tmux session and name it • Connect via MongoS to your real desired instance, using the admin db • Use the generated scripts/commands to enable sharding at the db level, then create the collections with the desired # of pre-allocated initial chunks

- 30. Snippet - JavaScript to create JavaScript [host1] > mongo --nodb > count=16; while (count<=17){ print("db.runCommand( { enablesharding : 'hits-2016-"+count+"' } ) ;"); print("db.adminCommand({shardCollection:'hits-2016-"+count+".hits-2016-"+count+"', key:{'_id' : 'hashed'}, numInitialChunks : 2000});"); count++ }
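As a variation on the snippet above, the generator can be written as a function that returns the commands for a range of weeks, which makes it easy to review them before pasting into a mongos session. This is a sketch under assumptions: the function name `presplitCommands` and its parameters are hypothetical, and it emits both commands through `db.adminCommand` so they run against the admin database regardless of the current db.

```javascript
// Hypothetical generator: builds the pre-sharding commands as strings.
function presplitCommands(prefix, firstWeek, lastWeek, numInitialChunks) {
  const commands = [];
  for (let week = firstWeek; week <= lastWeek; week++) {
    const dbName = prefix + week;          // e.g. hits-2016-16
    const ns = dbName + "." + dbName;      // collection named after its database
    commands.push("db.adminCommand({ enableSharding: '" + dbName + "' });");
    commands.push(
      "db.adminCommand({ shardCollection: '" + ns +
      "', key: { _id: 'hashed' }, numInitialChunks: " + numInitialChunks + " });"
    );
  }
  return commands;
}

// Weeks 16 and 17, 2000 initial chunks each, as in the slides.
const commands = presplitCommands("hits-2016-", 16, 17, 2000);
commands.forEach(function (line) { console.log(line); });
```

Returning strings instead of executing anything keeps the generation step safe to run in a shell that is not connected to a real cluster.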

- 31. Running Snippet to actually pre-create chunks mongos> sh.getBalancerState() false mongos> sh.isBalancerRunning() false mongos> sh.stopBalancer() db.runCommand( { enablesharding : 'hits-2016-16' } ) ; db.adminCommand({shardCollection:'hits-2016-16.hits-2016-16', key:{'_id' : 'hashed'}, numInitialChunks : 2000}); …… . . . . . . db.runCommand( { enablesharding : 'hits-2016-17' } ) ; db.adminCommand({shardCollection:'hits-2016-17.hits-2016-17', key:{'_id' : 'hashed'}, numInitialChunks : 2000});

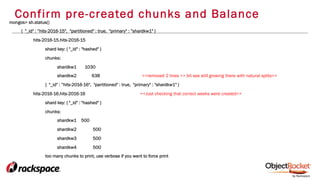

- 32. Confirm pre-created chunks and Balance mongos> sh.status() { "_id" : "hits-2016-15", "partitioned" : true, "primary" : "shardkw1" } hits-2016-15.hits-2016-15 shard key: { "_id" : "hashed" } chunks: shardkw1 1030 shardkw2 638 <<removed 2 lines, but see that it is still growing there with natural splits>> { "_id" : "hits-2016-16", "partitioned" : true, "primary" : "shardkw1" } hits-2016-16.hits-2016-16 <<Just checking that the correct weeks were created>> shard key: { "_id" : "hashed" } chunks: shardkw1 500 shardkw2 500 shardkw3 500 shardkw4 500 too many chunks to print, use verbose if you want to force print
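To compare chunk counts like these at a glance, the per-shard numbers from `sh.status()` can be reduced to a total and a spread. The sketch below is plain JavaScript with a hypothetical helper `chunkSpread`; the week 15 input uses only the two shards visible in the output above (two lines were removed there), so its figures are partial.

```javascript
// Hypothetical helper: total chunks, gap between fullest and emptiest shard,
// and the share of chunks held by the fullest shard.
function chunkSpread(chunksByShard) {
  const counts = Object.values(chunksByShard);
  const total = counts.reduce(function (sum, n) { return sum + n; }, 0);
  const max = Math.max.apply(null, counts);
  const min = Math.min.apply(null, counts);
  return { total: total, spread: max - min, maxShare: max / total };
}

// Counts copied from the sh.status() output on the slide.
const week15 = chunkSpread({ shardkw1: 1030, shardkw2: 638 });
const week16 = chunkSpread({ shardkw1: 500, shardkw2: 500, shardkw3: 500, shardkw4: 500 });
console.log(week15, week16);
```

A spread of zero for week 16 reflects the pre-created chunks; the week 15 numbers show a collection that was left to split and balance naturally.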

- 33. 3 Very Different Use Cases for Sharding • 1st case - Large # of small-sized shards • MANY smaller shards, as they need additional write scopes • 2nd case - Medium # of medium-sized shards • Larger; still need write scopes, but without users spread so far across all of the shards when reading • 3rd case - Smaller # of larger-sized shards • Need additional resources for a higher number of connections and a higher number of queries • IN ALL 3 cases, they are sharded on the write-friendly "_id" : "hashed"

- 34. Three use cases compared

  | | BY - Large # Small Shards | DR - Medium # Medium Shards | BS - Small # Large Shards |
  | --- | --- | --- | --- |
  | App | Mobile analytics and marketing app | Social media app holding connective user data | Mobile game marketing and monetization customer |
  | Shard key | "_id" : "hashed" | "_id" : "hashed" | "_id" : "hashed" |
  | Documents | 256 million smaller user docs of ~2143 bytes; smaller user updates and campaigns | ~82 million bigger user docs of ~26036 bytes | ~10 billion smaller device docs of ~252 bytes; lots and lots of devices (mobile phones) |
  | Shards | 45 shards @ 20G plan size | 22 shards @ 100G plan size | 7 shards @ 500G plan size |
  | Queries per second | 100 – 160 | 100 – 125 | 400 – 2000 (have seen up to 300,000 QPS) |
  | Updates per second | 20 – 40 | 85 – 110 | 20 – 40 (plus 10 – 20 inserts per second) |
  | Connections | ~1200 per shard * 45 shards, so ~54,000 | ~4000 per shard * 22 shards, so ~88,000 | ~5700 per shard * 7 shards, so ~40,000 |
  | Why this layout | Need more, smaller shards for many more write scopes | Need more write scopes but not the associated spread-out scatter gathers, so not as many shards | Need the additional resources of larger shards due to the higher number of queries and connections and the smaller size of objects |
  | Balance | Well balanced chunks and disks | Well balanced chunks and disks AFTER initially taking a bit to get balanced | Not balanced naturally; must manipulate via numInitialChunks at new db and sharded collection creation |
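The connection totals quoted for the three clusters follow from simple multiplication, and the same arithmetic gives a rough raw data volume per cluster. The sketch below uses only figures from the slide; the field names are hypothetical, and the raw-size estimate is an assumption-laden approximation (document count times average document size, ignoring indexes, compression, and storage overhead).

```javascript
// Figures copied from the slide; field names are illustrative only.
const clusters = [
  { name: "BY", shards: 45, connectionsPerShard: 1200, docs: 256e6, avgDocBytes: 2143 },
  { name: "DR", shards: 22, connectionsPerShard: 4000, docs: 82e6, avgDocBytes: 26036 },
  { name: "BS", shards: 7, connectionsPerShard: 5700, docs: 10e9, avgDocBytes: 252 },
];

const summary = clusters.map(function (c) {
  return {
    name: c.name,
    totalConnections: c.shards * c.connectionsPerShard,
    rawBytes: c.docs * c.avgDocBytes, // documents only, no indexes or overhead
  };
});

summary.forEach(function (s) {
  console.log(s.name, s.totalConnections, (s.rawBytes / 1e9).toFixed(1) + " GB raw");
});
```

The BS total comes out at 39,900, which the slide rounds to ~40,000.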

- 35. Bad Shard Keys…. $#@#$

- 36. Bad Shard Keys…. Bad Performance • Hot Spotting for Writes • Hot Spotting for Reads • Disk Imbalance • Jumbo Chunks • Slow Queries • Slow Performance • Slow Apps • Angry Customers
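Write hot spotting is easiest to see with a monotonically increasing key. The following plain-JavaScript simulation is a sketch, not MongoDB code: it routes 1000 new, ever-increasing keys either by fixed range boundaries or by a hash, and reports the share of writes landing on the busiest shard. The FNV-1a function is a stand-in chosen for brevity and is NOT the hash MongoDB uses for hashed shard keys; the boundaries and shard count are made up.

```javascript
// FNV-1a (32-bit): a simple stand-in hash for illustration only.
function fnv1a(text) {
  let h = 0x811c9dc5;
  for (let i = 0; i < text.length; i++) {
    h ^= text.charCodeAt(i);
    h = Math.imul(h, 0x01000193);
  }
  return h >>> 0; // force an unsigned 32-bit result
}

// Range routing: boundaries were fixed back when keys 0..999 existed.
function rangeShard(key, boundaries) {
  let shard = 0;
  while (shard < boundaries.length && key >= boundaries[shard]) shard++;
  return shard;
}

// Fraction of all writes that land on the busiest shard.
function maxShare(shardIds, shardCount) {
  const counts = new Array(shardCount).fill(0);
  for (const id of shardIds) counts[id]++;
  return Math.max.apply(null, counts) / shardIds.length;
}

const shardCount = 4;
const boundaries = [250, 500, 750]; // hypothetical split points
const newKeys = [];
for (let k = 1000; k < 2000; k++) newKeys.push(k); // ever-increasing inserts

const rangeHot = maxShare(newKeys.map(function (k) {
  return rangeShard(k, boundaries);
}), shardCount);
const hashedHot = maxShare(newKeys.map(function (k) {
  return fnv1a(String(k)) % shardCount;
}), shardCount);

console.log("range:", rangeHot, "hashed:", hashedHot);
```

With range routing every new key falls past the last boundary, so one shard takes all the writes; hashing spreads the same keys across all four shards.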

- 37. Bad Shard Key… What to Do? Fix It !!! - dump & restore - drains

- 38. Bad Shard Key… Fixing • Dump and Restore - Dump collection; drop collection; recreate collection - Re-shard collection; restore collection • Drain Shard - Estimate moveChunk time: db.getSiblingDB("config").changelog.find({"what" : "moveChunk.commit"},{time:1,_id:0}).sort({time:-1}) - Run JS script to generate moveChunk commands - Stop Balancer - Run moveChunk script - Run removeShard command twice - Restart Balancer
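The drain steps can be sketched as two small helpers: one that turns recent `moveChunk.commit` timestamps into a rough per-chunk time, and one that generates `moveChunk` commands for the chunks on the shard being drained. This is an illustrative sketch, not the script from the talk: the helper names, the sample chunk documents, and the round-robin choice of target shard are assumptions. The sample uses plain numeric bounds so `JSON.stringify` works; real `config.chunks` documents can contain MinKey/MaxKey or NumberLong bounds, which would need the shell's own serializer instead.

```javascript
// Average gap between commits, in seconds. Gaps include idle time between
// migrations, so treat the result as a rough upper estimate.
function averageGapSeconds(commitTimes) {
  const sorted = commitTimes
    .map(function (t) { return t.getTime(); })
    .sort(function (a, b) { return a - b; });
  const span = sorted[sorted.length - 1] - sorted[0];
  return span / (sorted.length - 1) / 1000;
}

// One moveChunk command per chunk on fromShard, spread round-robin over toShards.
function drainCommands(chunks, fromShard, toShards) {
  return chunks
    .filter(function (c) { return c.shard === fromShard; })
    .map(function (c, i) {
      const cmd = { moveChunk: c.ns, bounds: [c.min, c.max], to: toShards[i % toShards.length] };
      return "db.adminCommand(" + JSON.stringify(cmd) + ");";
    });
}

// Made-up inputs for illustration.
const commits = [
  new Date("2016-04-01T00:00:00Z"),
  new Date("2016-04-01T00:00:30Z"),
  new Date("2016-04-01T00:01:00Z"),
];
const chunks = [
  { ns: "app.items", min: { rid: 0 }, max: { rid: 100 }, shard: "shardA" },
  { ns: "app.items", min: { rid: 100 }, max: { rid: 200 }, shard: "shardA" },
  { ns: "app.items", min: { rid: 200 }, max: { rid: 300 }, shard: "shardB" },
];

const perChunk = averageGapSeconds(commits);
const moves = drainCommands(chunks, "shardA", ["shardB", "shardC"]);
console.log("estimated seconds:", perChunk * moves.length);
moves.forEach(function (line) { console.log(line); });
```

Generating the commands as text first leaves room to review them, and to stop the balancer, before anything is run against the cluster.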

- 39. Bad Shard Key… Fixing … script examples

- 40. Larger Replica Set vs. Sharding

  | Replica Set | Sharding |
  | --- | --- |
  | Want simplification | Expertise for sharding |
  | Lots of reads; don't want scatter gathers | Lots of writes/updates; want to go directly to exact shards |
  | Lots of data, lower activity | Lots of data, lots of activity |
  | Need more 'normal' resources: just disks, just memory, etc. | Need more of all resources: disks, RAM, CPU, write scopes |
  | Application knowledge | Application knowledge |
  | Religious War - Do Not Engage | Religious War - Do Not Engage |

- 41. Storage Engine Considerations • Workloads • Document Sizes – Now – Future • Collection Sizes – Now – Future • Hardware

- 42. WiredTiger vs. MMAPv1 - Generalizations ONLY

  | WiredTiger | MMAPv1 |
  | --- | --- |
  | Frequent writes, inserts, appends | Still better for heavy read loads |
  | Compression; defragmentation | No compression; fragmentation |
  | Intent level locking (document) | Collection level locking |
  | Mass bulk loads, small docs | Updates in place, esp. those that grow |
  | Complete write and replace | Updates existing, grow and move |
  | Cache eviction settings and issues; cache settings, threads | Will use all memory allocated (memory mapped files) |


