OVS-DPDK HW offload: the way to full offload

- 1. © 2018 Mellanox Technologies. OVS-DPDK HW-OFFLOAD: Towards VXLAN full hardware offload. March 2019, Roni Bar Yanai.

- 2. Agenda: Motivation; Hardware offload in OVS; VXLAN full hardware offload.

- 3. Motivation

- 4. Demand increases: cloud, NFV, HPC, storage. SDN, tunneling, isolation, and connection tracking require complex switching/routing. Switching becomes the current bottleneck of network virtualization, even with DPDK. Requirements: higher PPS, lower latency, less host CPU usage for moving packets, and lower jitter. We are looking for the next performance scale-up of x3 to x10.

- 5. Network hardware acceleration is already here.
  - Old HW accelerations (dumb NIC): CHKSUM/TSO/LRO/RSS, tunneling.
  - New HW accelerations (smart (SoC)/intelligent NIC): rule based, a variety of matches and actions, switching capabilities (e-switch).

- 6. OVS: a great opportunity for hardware acceleration.
  - Integration: support for different OSes/containers, support for orchestration.
  - Bring the best performance to the guest as infrastructure. The guest can get performance much closer to native SR-IOV.
  - Buy a better NIC and you get even better performance for the guest.
  - Challenges: full hardware acceleration bypasses OVS (SR-IOV and vDPA have the same issue); different hardware comes with different architectures and APIs.

  Diagram labels: VMs, SR-IOV, NIC eSwitch, OVS.

- 7. Representors. Representor ports are an ethdev model of eSwitch ports. The VF representor supports the following operations:
  - Send packets from the host CPU to the VF (OVS re-injection).
  - Receive eSwitch “miss” packets.
  - Flow configuration (add/remove).
  - Read flow statistics for the purposes of aging and statistics.

  Diagram labels: hypervisor, OVS, PF net device, vPort 1-3 representors, HW eSwitch (eSW ports, flows in the FDB), external port, PF, and VF 1-3 with one VM each.

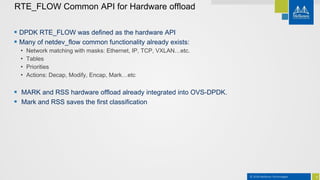

- 8. RTE_FLOW: a common API for hardware offload. DPDK RTE_FLOW was defined as the hardware API. Much of the common netdev_flow functionality already exists:
  - Network matching with masks: Ethernet, IP, TCP, VXLAN, etc.
  - Tables.
  - Priorities.
  - Actions: decap, modify, encap, mark, etc.

  MARK and RSS hardware offload is already integrated into OVS-DPDK. Mark and RSS save the first classification.
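
The masked matching and priorities listed on this slide can be modeled in a few lines. This is only a sketch of the idea, not the RTE_FLOW API: the `Rule` class, the field names, and the example rules are made up for illustration, and "lower priority value wins" is the convention assumed here.

```python
from dataclasses import dataclass, field


@dataclass
class Rule:
    priority: int   # lower value is tried first (assumption of this sketch)
    spec: dict      # field name -> expected value
    mask: dict      # field name -> bit mask applied before comparing
    actions: list = field(default_factory=list)

    def matches(self, pkt: dict) -> bool:
        # A field matches when packet and spec agree on all masked bits.
        return all(
            (pkt.get(name, 0) & self.mask[name]) == (value & self.mask[name])
            for name, value in self.spec.items()
        )


def classify(rules, pkt):
    """Return the actions of the best-priority matching rule, or None."""
    for rule in sorted(rules, key=lambda r: r.priority):
        if rule.matches(pkt):
            return rule.actions
    return None


# Hypothetical rules: exact match on the VXLAN UDP port, then 11.0.0.0/8.
RULES = [
    Rule(1, {"ipv4_dst": 0x0B000000}, {"ipv4_dst": 0xFF000000}, ["mark:2"]),
    Rule(0, {"udp_dst": 4789}, {"udp_dst": 0xFFFF}, ["decap", "mark:1"]),
]
```

A packet to 11.0.0.36 on UDP port 4789 hits the priority 0 rule, while the same destination on another port falls through to the /8 rule.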

- 9. Hardware offload in OVS

- 10. Hardware offload next steps. How the software datapath processes a packet today:
  - A DP flow is searched for by matching packet fields.
  - The DP flow actions are executed.
  - Execution may include changing the packet (VXLAN pop) and recirculating it. The packet then has to run through the same process again.
  - Some actions, such as connection tracking, might include another search, access, and modification.
  - All of this is done in software, and some actions require additional memory access.
  - VXLAN with connection tracking has at least three cycles of processing: rx, pop, and connection tracking.

  Diagram labels: match, action, recirculate, output, upcall. The cost: wasted CPU cycles, latency, and throughput.
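
The repeated classification described on this slide can be illustrated with a toy loop that counts lookups. It is a sketch under simplifying assumptions: the packet is a plain dictionary, and `toy_lookup` and the action names are invented here, not taken from OVS.

```python
def process(packet, lookup):
    """Run a packet through match/action until a terminal action is reached.

    Returns the final action and the number of classification passes.
    """
    passes = 0
    while True:
        passes += 1
        action = lookup(packet)          # software classification
        if action == "tnl_pop":
            # Strip the tunnel and recirculate from the vxlan vport.
            packet = {**packet, "tunnel": False, "in_port": "vxlan"}
        elif action == "ct":
            # Connection tracking updates state and recirculates.
            packet = {**packet, "ct_state": "tracked"}
        else:
            return action, passes


def toy_lookup(pkt):
    # Hypothetical flow table: pop tunnels first, then track, then output.
    if pkt.get("tunnel"):
        return "tnl_pop"
    if pkt.get("ct_state") is None:
        return "ct"
    return "output:2"
```

With this toy table, a tunneled packet needs three lookups before it is output, which is the kind of per-packet software cost the offload aims to remove.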

- 11. Software based vs. hardware based.
  - Traditional model, all software (OVS-vswitchd with the OVS DPDK DP): high latency, low bandwidth, CPU intensive.
  - ConnectX hardware offload (OVS-vswitchd and the OVS DPDK DP over smart NIC hardware): low latency, high bandwidth, efficient CPU.

  Diagram labels: first flow packet, fallback FRWD path, HW forwarded packets.

- 12. OVS DPDK with/without full HW offload.

  | Test | Full HW offload | Without offload | Benefit |
  | --- | --- | --- | --- |
  | 1 flow, VXLAN | 66M PPS | 7.6M PPS (VLAN) | 8.6X |
  | 60K flows, VXLAN | 19.8M PPS | 1.9M PPS | 10.4X |

  Chart: million packets per second, OVS over DPDK (7.6 MPPS) vs. OVS Offload (66 MPPS). Chart annotations: 4 cores; 1 core, 100% idle.
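
The "Benefit" column is the ratio of the two throughput figures. The short check below recomputes it from the slide's numbers; the function name is ours, and the slide appears to truncate rather than round the first ratio.

```python
def speedup(offload_mpps, baseline_mpps):
    """Throughput ratio of the offloaded run over the software-only run."""
    return offload_mpps / baseline_mpps


# Figures from the slide, in million packets per second.
FULL_OFFLOAD = {
    "1 flow, VXLAN": (66.0, 7.6),
    "60K flows, VXLAN": (19.8, 1.9),
}

for test, (offload, baseline) in FULL_OFFLOAD.items():
    print(f"{test}: {speedup(offload, baseline):.2f}x")
```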

- 13. OVS DPDK with/without partial HW offload.

  | Test | Partial HW offload | Without offload | Benefit |
  | --- | --- | --- | --- |
  | 1 flow, VXLAN | 10M PPS | 3M PPS | 3.3X |
  | 32K flows, VXLAN | 7.5M PPS | 2.2M PPS | 3.4X |

  Chart: MPPS, no offload vs. offload. Partial: the VXLAN pop is done in hardware, and then mark and RSS. The forwarding is done in software and is limited by the PMD thread. 2 cores.

- 14. VXLAN full offload

- 15. VXLAN full offload. Why start with VXLAN offload?
  - It is a common use case.
  - The same implementation, with minor changes, can be used for other tunneling protocols.
  - It is simple compared to CT and can be supported with the current RTE_FLOW implementation. CT requires non-raw networking fields such as ZONE, MARK, etc.
  - The RX benefit can be effective even where processing continues in software (CT is a common use case), because the VXLAN pop is done first.
  - The market is hungry for more PPS, and VXLAN hardware offload brings enough benefit to be deployed, fully or partially.

- 16. OVS-DPDK hardware offload guidelines.
  - Generic: any hardware that implements the required RTE_FLOW match/action should support it.
  - Minimal interface with OVS code.
  - Follow OVS netdev-flow as closely as possible.
  - Incremental.

- 17. VXLAN in OVS-DPDK.
  - There are two levels of switches, cascaded.
  - The HW classification accelerates only the lower switch (br-phy1).
  - br-phy1 is a kernel interface for VXLAN.
  - The OVS datapath executes pop_tnl and the inner packet classification (and output).
  - In the TX direction there is only classification of the inner packet.

  Diagram labels: uplink/PF, VF 1 to VF n, vPorts, br-phy1 (with the IP address), vxlan port, br-int, taps, VMs.

- 18. TNL_PUSH. Example datapath flow:

  recirc_id(0),in_port(2),packet_type(ns=0,id=0),eth(src=52:54:00:a6:9b:1f,dst=6e:7f:14:8b:f9:99),eth_type(0x0800),ipv4(tos=0/0x3,frag=no), packets:4, bytes:594, used:2.855s, flags:SFP., actions:clone(tnl_push(tnl_port(4),header(size=50,type=4,eth(dst=98:03:9b:64:0b:fa,src=98:03:9b:64:0b:32,dl_type=0x0800),ipv4(src=11.0.0.36,dst=11.0.0.35,proto=17,tos=0,ttl=64,frag=0x4000),udp(src=0,dst=4789,csum=0x0),vxlan(flags=0x8000000,vni=0x64)),out_port(6)),5)

  TNL_PUSH is straightforward:
  - The flow contains the entire header as a byte array.
  - It can be implemented with RTE_FLOW raw encap.
  - Only the UDP port and checksum are fixed up in code (this can be offloaded to hardware in any case).

  Packet layout: VXLAN outer header (eth, ip, udp, vxlan) followed by the inner packet.
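
To make the "entire header as a byte array" point concrete, the sketch below rebuilds the 50-byte outer header from the values in the flow dump above. It is an illustration only: the helper names are ours, and the per-packet fields (IP total length and checksum, UDP source port and length) are simply left at zero.

```python
import socket
import struct


def mac(text):
    """Convert 'aa:bb:cc:dd:ee:ff' to 6 raw bytes."""
    return bytes.fromhex(text.replace(":", ""))


def build_vxlan_header(eth_dst, eth_src, ip_src, ip_dst, vni,
                       udp_dst=4789, ttl=64):
    """Build eth + ipv4 + udp + vxlan as one byte array (14+20+8+8 bytes)."""
    eth = mac(eth_dst) + mac(eth_src) + struct.pack("!H", 0x0800)
    ipv4 = struct.pack(
        "!BBHHHBBH4s4s",
        0x45, 0, 0,        # version/IHL, TOS, total length (per packet)
        0, 0x4000,         # identification, flags/fragment offset (DF set)
        ttl, 17, 0,        # TTL, protocol = UDP, checksum (left zero here)
        socket.inet_aton(ip_src), socket.inet_aton(ip_dst),
    )
    udp = struct.pack("!HHHH", 0, udp_dst, 0, 0)      # src, dst, len, csum
    vxlan = struct.pack("!II", 0x08000000, vni << 8)  # flags word, VNI
    return eth + ipv4 + udp + vxlan


HEADER = build_vxlan_header("98:03:9b:64:0b:fa", "98:03:9b:64:0b:32",
                            "11.0.0.36", "11.0.0.35", vni=0x64)
```

The length of `HEADER` matches the `size=50` reported in the flow, which is why a raw encap action carrying this byte array is a natural fit.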

- 19. TNL_POP. Example datapath flows:

  recirc_id(0),in_port(5),packet_type(ns=0,id=0),eth(src=98:03:9b:64:0b:fa,dst=98:03:9b:64:0b:32),eth_type(0x0800),ipv4(dst=11.0.0.36,proto=17,frag=no),udp(dst=4789), packets:5, bytes:723, used:1.142s, actions:tnl_pop(4)

  tunnel(tun_id=0x64,src=11.0.0.35,dst=11.0.0.36,flags(-df-csum+key)),recirc_id(0),in_port(4),packet_type(ns=0,id=0),eth(src=6e:7f:14:8b:f9:99,dst=52:54:00:a6:9b:1f),eth_type(0x0800),ipv4(frag=no), packets:5, bytes:473, used:2.854s, flags:FP., actions:2

  TNL_POP is executed by two netdev_flow flows:
  - The first flow executes tnl_pop: it removes the tunnel and forwards to the vport (not a DPDK entity).
  - The second flow matches on metadata.

- 20. VPORT has no RTE offload and requires some special handling. Slide callout: vport_netdev.

      odp_port_t in_port = flow->flow.in_port.odp_port;
      ...
      port = dp_netdev_lookup_port(pmd->dp, in_port);
      ...
      ret = netdev_flow_put(port->netdev, &offload->match,
                            CONST_CAST(struct nlattr *, offload->actions),
                            offload->actions_len, &flow->mega_ufid, &info,
                            NULL);

- 21. VPORT can be represented as a table. The action of a rule can be to go to another table, which can be used to chain classification. Example:
  - Table 0: Match A: flow ID 1; Match B: drop; Match C: go to Table 1; Match D: go to Table 1; default: no flow ID.
  - Table 1: Match E: flow ID 2; Match F: flow ID 3; default: flow ID 4.
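
The chained tables on this slide can be walked with a small model. The sketch mirrors the slide's example tables; representing a packet as the set of match names it satisfies, and the tuple encoding of actions, are simplifications made up for this illustration.

```python
# Rules per table, in lookup order: (match name, action).
TABLES = {
    0: [("A", ("flow", 1)), ("B", ("drop",)),
        ("C", ("jump", 1)), ("D", ("jump", 1))],
    1: [("E", ("flow", 2)), ("F", ("flow", 3))],
}
# Default action when no rule in the table matches.
DEFAULTS = {0: ("flow", None), 1: ("flow", 4)}


def walk_tables(satisfied, table=0):
    """Follow jump actions until a terminal action is found.

    `satisfied` is the set of match names the packet satisfies.
    """
    while True:
        for name, action in TABLES[table]:
            if name in satisfied:
                break
        else:
            action = DEFAULTS[table]
        if action[0] == "jump":
            table = action[1]       # continue classification in next table
            continue
        return action
```

A packet that satisfies C and E jumps from Table 0 to Table 1 and ends with flow ID 2. One that satisfies only D lands on Table 1's default, flow ID 4.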

- 22. VXLAN HW offload concept.
  - If the action is to forward to a vport, add a HW rule that points to a table representing the vport interface.
  - If the in port of the rule is a vport (like vxlan), add the rule to the table representing that interface.
  - We don’t pop the tunnel yet, so we can’t have metadata.
  - The vport rule matches on both inner and outer headers (already in the flow). Metadata is matched against the outer header.
  - The match works exactly the same, because we didn’t strip the tunnel yet.
  - The vport executes the pop.
  - The vport marks/RSSes or outputs the packet.

  Diagram labels: uplink/PF, br-phy1 (with the IP address), vxlan port, br-int, taps, VMs. Table 0 holds Match A: flow ID 1; Match B: drop + count; Match C: Table 1 + count; Match D: Table 1 + count; default: no flow ID. Table 1 holds all the rules whose source port is the vxlan interface: Match E: flow ID 2; Match F: flow ID 3; default: flow ID 4.

- 23. VXLAN HW offload.
  - The vport table has a default rule that forwards to software if nothing else matches.
  - Flows are not atomic: it is possible that the vport table does not have a match yet while packets are already being forwarded to it.
  - The vport is represented by a HW table, but it is not an OVS table. We still forward only on table 0.
  - Exception: if a packet hits the default rule, part of the work has already been done, so we mark it with a special mark. The special mark is mapped to the VXLAN software implementation in this case.
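
One way to picture the special mark is as a reserved value that the software receive path checks before the normal mark-to-flow lookup. The sketch below is hypothetical: the reserved value, the function name, and the returned labels are assumptions made for illustration and do not come from OVS.

```python
VXLAN_MISS_MARK = 0xFFFFFF  # hypothetical reserved mark for the default rule


def rx_dispatch(mark, mark_to_flow):
    """Decide how software handles a received packet based on its HW mark."""
    if mark is None:
        # Hardware matched nothing: classify from scratch.
        return ("full_classification", None)
    if mark == VXLAN_MISS_MARK:
        # Packet hit the vport table's default rule: continue with the
        # VXLAN software implementation.
        return ("software_vxlan", None)
    flow = mark_to_flow.get(mark)
    if flow is None:
        # Stale mark (flow already removed): fall back to classification.
        return ("full_classification", None)
    return ("execute_flow", flow)
```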

- 24. VXLAN HW offload.
  - We offload only if all actions are supported.
  - If full offload is not yet possible, we fall back to mark/RSS.
  - If the offloaded flow includes a jump, we must add a counter, because the packet won’t hit software.
  - Software counters must read the hardware counters for such flows, or else the flow will age out.
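
The decision rules on this slide can be summarized as a small planning function. This is a sketch under assumptions: the set of supported actions and all names are invented for the example, and real hardware capabilities would be discovered rather than hard-coded.

```python
# Hypothetical set of actions the hardware can execute.
SUPPORTED_ACTIONS = {"tnl_pop", "jump", "count", "output", "mark", "rss"}


def plan_offload(actions, supported=SUPPORTED_ACTIONS):
    """Return ('full', actions) or the ('partial', mark/rss) fallback."""
    if not all(action in supported for action in actions):
        return "partial", ["mark", "rss"]
    plan = list(actions)
    if "jump" in plan and "count" not in plan:
        # Packets taking the jump never reach software, so count in HW.
        plan.append("count")
    return "full", plan


def is_idle(sw_packets, hw_packets, packets_at_last_check):
    """A flow is idle only if neither software nor hardware saw new packets."""
    return sw_packets + hw_packets == packets_at_last_check
```

Including the hardware counter in the idle check is what keeps a fully offloaded flow from aging out while traffic is still being forwarded by the NIC.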

- 25. VPORT offloads.
  - Since the netdev_flow API uses the odp_port and netdev to offload, we need to add an offload API for vports, which are part of the DPDK DP.
  - The actual hardware on which the rule is configured can be any uplink.
  - If the flow also has an output action, we must make sure the output rule is configured only on the uplink that is on the same e-switch as the output port (VF).
  - Theoretically, a packet can arrive from any uplink and go to any VF (or even PF). With hardware offload, packets can be forwarded only between ports on the same e-switch (each port belongs to an e-switch domain, which is a unique entity).
  - From a performance point of view, we expect users to forward packets only within the same switch (for partial offload we support both, because the packet goes to software anyway).
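
The same-e-switch constraint amounts to filtering uplinks by the domain of the output port. The port names and the domain table below are hypothetical, chosen only to show the check.

```python
# Hypothetical mapping of ports to e-switch domain IDs.
ESWITCH_DOMAIN = {
    "uplink0": 0, "vf1": 0, "vf2": 0,
    "uplink1": 1, "vf3": 1,
}


def uplinks_for_output(output_port, uplinks, domain=ESWITCH_DOMAIN):
    """Uplinks on which a rule with this output port may be configured."""
    return [u for u in uplinks if domain[u] == domain[output_port]]


def uplinks_for_vport_rule(actions_output, uplinks):
    """Without an output action, any uplink can hold the rule."""
    if actions_output is None:
        return list(uplinks)
    return uplinks_for_output(actions_output, uplinks)
```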

- 26. Where are we?
  - We implemented partial offload and ran performance tests.
  - We started pushing upstream (the first phase is a code split, which is currently in review). We are preparing the rest of the patches.
  - We started coding the full offload and expect to complete it within the next two months.
  - We started on the CT offload architecture.

- 27. Thank you