Process and CPU Scheduling (Operating Systems)

- 2. Introduction ■Basic Concepts ■Scheduling Criteria ■Scheduling Algorithms ■Multiple-Processor Scheduling ■Real-Time Scheduling ■Algorithm Evaluation

- 3. Basic Concepts ■Maximum CPU utilization obtained with multiprogramming. ■CPU–I/O Burst Cycle – Process execution consists of a cycle of CPU execution and I/O wait. ■CPU burst distribution.

- 4. Alternating Sequence of CPU And I/O Bursts

- 5. CPU Scheduler ■Selects from among the processes in memory that are ready to execute, and allocates the CPU to one of them. ■CPU scheduling decisions may take place when a process: 1. Switches from running to waiting state. 2. Switches from running to ready state. 3. Switches from waiting to ready. 4. Terminates. ■Scheduling under 1 and 4 is nonpreemptive. ■All other scheduling is preemptive.

- 6. Dispatcher ■Dispatcher module gives control of the CPU to the process selected by the short-term scheduler; this involves: ❑switching context ❑switching to user mode ❑jumping to the proper location in the user program to restart that program ■Dispatch latency – time it takes for the dispatcher to stop one process and start another running.

- 7. Scheduling Criteria ■CPU utilization – keep the CPU as busy as possible ■Throughput – # of processes that complete their execution per time unit ■Turnaround time – amount of time to execute a particular process ■Waiting time – amount of time a process has been waiting in the ready queue ■Response time – amount of time it takes from when a request was submitted until the first response is produced, not output (for time-sharing environment)

- 8. Various Times related to the Process

- 9. Optimization Criteria ■Max CPU utilization ■Max throughput ■Min turnaround time ■Min waiting time ■Min response time

- 10. First-Come, First-Served (FCFS) Scheduling ■ Example: burst times P1 = 24, P2 = 3, P3 = 3. ■ Suppose that the processes arrive in the order P1, P2, P3. The Gantt chart for the schedule is: P1 (0–24), P2 (24–27), P3 (27–30). ■ Waiting time for P1 = 0; P2 = 24; P3 = 27 ■ Average waiting time: (0 + 24 + 27)/3 = 17

- 11. FCFS Scheduling (Cont.) ■Suppose that the processes arrive in the order P2, P3, P1. ■The Gantt chart for the schedule is: P2 (0–3), P3 (3–6), P1 (6–30). ■Waiting time for P1 = 6; P2 = 0; P3 = 3 ■Average waiting time: (6 + 0 + 3)/3 = 3 ■Much better than previous case. ■Convoy effect: short processes wait behind a long process.

- 12. Shortest-Job-First (SJF) Scheduling ■Associate with each process the length of its next CPU burst. Use these lengths to schedule the process with the shortest time. ■Two schemes: ❑ Nonpreemptive – once the CPU is given to a process, it cannot be preempted until it completes its CPU burst. ❑ Preemptive – if a new process arrives with a CPU burst length less than the remaining time of the currently executing process, preempt. This scheme is known as Shortest-Remaining-Time-First (SRTF). ■SJF is optimal – gives minimum average waiting time for a given set of processes.

- 13. Example of Non-Preemptive SJF ■Processes (arrival time, burst time): P1 (0, 7), P2 (2, 4), P3 (4, 1), P4 (5, 4). ■SJF (non-preemptive) Gantt chart: P1 (0–7), P3 (7–8), P2 (8–12), P4 (12–16). ■Average waiting time = (0 + 6 + 3 + 7)/4 = 4

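- 14. Example of Preemptive SJF (SRTF) ■Processes (arrival time, burst time): P1 (0, 7), P2 (2, 4), P3 (4, 1), P4 (5, 4). ■SJF (preemptive) Gantt chart: P1 (0–2), P2 (2–4), P3 (4–5), P2 (5–7), P4 (7–11), P1 (11–16). ■Average waiting time = (9 + 1 + 0 + 2)/4 = 3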

- 15. Round Robin (RR) ■Each process gets a small unit of CPU time (time quantum), usually 10-100 milliseconds. After this time has elapsed, the process is preempted and added to the end of the ready queue. ■If there are n processes in the ready queue and the time quantum is q, then each process gets 1/n of the CPU time in chunks of at most q time units at once. No process waits more than (n-1)q time units. ■Performance ❑ q large ⇒ behaves like FIFO ❑ q small ⇒ more context switches; q must be large with respect to the context-switch time, otherwise overhead is too high.

- 16. Example: RR with Time Quantum = 20 ■Burst times: P1 = 53, P2 = 17, P3 = 68, P4 = 24. ■The Gantt chart is: P1 (0–20), P2 (20–37), P3 (37–57), P4 (57–77), P1 (77–97), P3 (97–117), P4 (117–121), P1 (121–134), P3 (134–154), P3 (154–162). ■Average waiting time = (81 + 20 + 94 + 97)/4 = 73 ■Typically, higher average turnaround than SJF, but better response.

- 17. How a Smaller Time Quantum Increases Context Switches

- 18. Turnaround Time Varies With The Time Quantum

- 19. Priority Scheduling ■A priority number (integer) is associated with each process ■The CPU is allocated to the process with the highest priority (smallest integer ≡ highest priority). ❑ Preemptive ❑ nonpreemptive ■SJF is a priority scheduling where priority is the predicted next CPU burst time. ■Problem ≡ Starvation – low priority processes may never execute. ■Solution ≡ Aging – as time progresses increase the priority of the process.

- 20. Multilevel Queue ■ Ready queue is partitioned into separate queues: foreground (interactive) and background (batch). ■ Each queue has its own scheduling algorithm: foreground – RR, background – FCFS. ■ Scheduling must be done between the queues. ❑ Fixed priority scheduling; i.e., serve all from foreground, then from background. Possibility of starvation. ❑ Time slice – each queue gets a certain amount of CPU time which it can schedule amongst its processes; e.g., 80% to foreground in RR and 20% to background in FCFS.

- 22. Multilevel Feedback Queue ■A process can move between the various queues; aging can be implemented this way. ■Multilevel-feedback-queue scheduler defined by the following parameters: ❑number of queues ❑scheduling algorithms for each queue ❑method used to determine when to upgrade a process ❑method used to determine when to demote a process ❑method used to determine which queue a process will enter when that process needs service

- 24. Example of Multilevel Feedback Queue ■ Three queues: ❑ Q0 – time quantum 8 milliseconds ❑ Q1 – time quantum 16 milliseconds ❑ Q2 – FCFS ■ Scheduling ❑ A new job enters queue Q0 which is served FCFS. When it gains CPU, job receives 8 milliseconds. If it does not finish in 8 milliseconds, job is moved to queue Q1. ❑ At Q1 job is again served FCFS and receives 16 additional milliseconds. If it still does not complete, it is preempted and moved to queue Q2.

- 25. Multiple-Processor Scheduling ■CPU scheduling more complex when multiple CPUs are available. ■Homogeneous processors within a multiprocessor. ■Load sharing ■Asymmetric multiprocessing – only one processor accesses the system data structures, alleviating the need for data sharing.

- 26. Real-Time Scheduling ■Hard real-time systems – required to complete a critical task within a guaranteed amount of time. ■Soft real-time computing – requires that critical processes receive priority over lower-priority ones.

- 27. Dispatch Latency

- 28. Algorithm Evaluation ■Deterministic modeling – takes a particular predetermined workload and defines the performance of each algorithm for that workload. ■Queuing models ■Implementation

- 29. Evaluation of CPU Schedulers by Simulation

- 30. Case Study Unix Process Management

- 33. Zombies ● A process that has finished execution but still has an entry in the process table, kept so that its exit status can be reported to its parent, is known as a zombie process. ● A child process always becomes a zombie first before being removed from the process table. ● The parent process reads the exit status of the child, which removes (reaps) the child's entry from the process table. ● A zombie is not really a process, since it has terminated; the system merely retains a process-table entry for the no-longer-existing child. ● A zombie is put to rest when the parent finally executes a wait().

- 34. A C program to demonstrate Zombie Process.

      // The child becomes a zombie because the parent is sleeping
      // when the child process exits.
      #include <stdlib.h>
      #include <sys/types.h>
      #include <unistd.h>

      int main(void)
      {
          // fork() returns the child's process ID in the parent
          // and 0 in the child
          pid_t child_pid = fork();

          if (child_pid > 0)
              sleep(50);   // parent: sleeps without calling wait()
          else
              exit(0);     // child: exits immediately

          return 0;
      }

- 35. A C program to demonstrate Zombie Process.

- 36. Orphans ∙ A process whose parent no longer exists, i.e. the parent either finished or terminated without waiting for its child to terminate, is called an orphan process. ∙ When a parent terminates, its orphans and zombies are adopted by the init process (process ID 1) of the system.

- 37. A C program to demonstrate Orphan Process.

      // The parent process finishes execution while the child
      // process is still running, so the child becomes an orphan.
      #include <stdio.h>
      #include <sys/types.h>
      #include <unistd.h>

      int main(void)
      {
          // Create a child process
          pid_t pid = fork();

          if (pid > 0) {
              printf("in parent process\n");
          } else if (pid == 0) {
              // pid is 0 in the child process
              // and negative if fork() fails
              sleep(30);
              printf("in child process\n");
          }

          return 0;
      }

- 39. Daemons ● Daemons are server processes that run continuously. ● Most of the time, they are initialized at system startup and then wait in the background until their service is required. ● Typical examples are the networking daemon xinetd and the logging daemon syslogd, which are started in almost every boot procedure. After the system is booted, the network daemon just sits and waits until a client program, such as an FTP client, needs to connect.

- 41. Process Creation ■ Parent process creates children processes, which, in turn create other processes, forming a tree of processes. ■ Resource sharing ❑ Parent and children share all resources. ❑ Children share subset of parent’s resources. ❑ Parent and child share no resources. ■ Execution ❑ Parent and children execute concurrently. ❑ Parent waits until children terminate. ■ Address space ❑ Child duplicate of parent. ❑ Child has a program loaded into it. ■ UNIX examples ❑ fork system call creates new process ❑ exec system call used after a fork to replace the process’ memory space with a new program.

- 43. A Tree of Processes On A Typical UNIX System

- 44. Process Termination ■Process executes its last statement and asks the operating system to delete it (exit). ❑ Output data from child to parent (via wait). ❑ Process’ resources are deallocated by the operating system. ■Parent may terminate execution of children processes (abort). ❑ Child has exceeded allocated resources. ❑ Task assigned to child is no longer required. ❑ Parent is exiting: some operating systems do not allow a child to continue if its parent terminates (cascading termination).

- 45. Threads ■A thread (or lightweight process) is a basic unit of CPU utilization; it consists of: ❑ program counter ❑ register set ❑ stack space ■A thread shares with its peer threads its: ❑ code section ❑ data section ❑ operating-system resources collectively known as a task. ■A traditional or heavyweight process is equal to a task with one thread

- 46. Threads ■Threads share: ● Global memory ● Process ID and parent process ID ● Controlling terminal ● Process credentials (user) ● Open file information ● Timers ● … ■Thread-specific attributes: ● Thread ID ● Thread-specific data ● CPU affinity ● Stack (local variables and function call linkage information) ● …

- 48. Threads in Memory ■Memory is allocated for a process in segments, listed here from virtual address 0000000 upward: text (program code), initialized data, uninitialized data, heap, the stacks for threads 1, 2 and 3, the stack for the main thread, and argv/environment. ■The main thread and threads 1, 2 and 3 all execute within the shared text segment.

- 49. Multiple Threads within a Task

- 52. States of a Thread • A Thread can be in one of four states: – Ready: all set to run – Running: actually doing something – Waiting, or blocked: needs something – Dead: will never do anything again • State names vary across textbooks • You have some control, but the Java scheduler has more

- 54. Benefits of Threads ■Takes less time to create a new thread than a process ■Less time to terminate a thread than a process ■Faster context switch - Less time to switch between two threads within the same process ■Resource sharing and Communication:- Since threads within the same process share memory and files, they can communicate with each other without invoking the kernel.

- 55. Benefits ● Responsiveness Interactive application can delegate background functions to a thread and keep running ● Resource Sharing Several different threads can access the same address space ● Economy Allocating memory and new processes is costly. Threads are much ‘cheaper’ to initiate. ● Scalability Use threads to take advantage of multiprocessor architecture

- 56. User Threads ● Thread management done by user-level threads library ● Three primary thread libraries: ● POSIX Pthreads ● Win32 threads ● Java threads

- 57. Kernel Threads ● Supported by the Kernel ● Examples ● Windows XP/2000 ● Solaris ● Linux ● Tru64 UNIX ● Mac OS X

- 58. Two ways of creating Threads • You can extend the Thread class: – class Animation extends Thread {…} – Limiting, since you can only extend one class • Or you can implement the Runnable interface: – class Animation implements Runnable {…} – requires public void run( ) • I recommend the second for most programs

- 59. Demo

- 60. Example I

      class MyThread extends Thread {
          private String name, msg;

          public MyThread(String name, String msg) {
              this.name = name;
              this.msg = msg;
          }

          @Override
          public void run() {
              System.out.println(name + " starts its execution");
              for (int i = 0; i < 5; i++) {
                  System.out.println(name + " says: " + msg);
                  try {
                      Thread.sleep(5000); // pause 5 seconds between messages
                  } catch (InterruptedException ie) {
                      // interruption is ignored in this demo
                  }
              }
              System.out.println(name + " finished execution");
          }
      }

- 61. Example I (Cont.)

      public class test {
          public static void main(String[] args) {
              MyThread mt1 = new MyThread("thread1", "ping");
              MyThread mt2 = new MyThread("thread2", "pong");
              mt1.start();
              mt2.start();
          }
      }

  These two threads will run in parallel.

- 62. Example II

      class MyThread implements Runnable {
          private String name, msg;

          public MyThread(String name, String msg) {
              this.name = name;
              this.msg = msg;
          }

          @Override
          public void run() {
              System.out.println(name + " starts its execution");
              for (int i = 0; i < 5; i++) {
                  System.out.println(name + " says: " + msg);
                  try {
                      Thread.sleep(5000); // pause 5 seconds between messages
                  } catch (InterruptedException ie) {
                      // interruption is ignored in this demo
                  }
              }
              System.out.println(name + " finished execution");
          }
      }

- 63. Example II (Cont.)

      public class test {
          public static void main(String[] args) {
              MyThread mt1 = new MyThread("thread1", "ping");
              MyThread mt2 = new MyThread("thread2", "pong");
              Thread T1 = new Thread(mt1);
              Thread T2 = new Thread(mt2);
              T1.start();
              T2.start();
          }
      }

  These two threads will run in parallel.

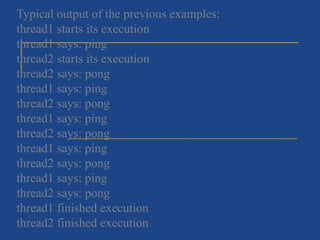

- 64. Typical output of the previous examples:

      thread1 starts its execution
      thread1 says: ping
      thread2 starts its execution
      thread2 says: pong
      thread1 says: ping
      thread2 says: pong
      thread1 says: ping
      thread2 says: pong
      thread1 says: ping
      thread2 says: pong
      thread1 says: ping
      thread2 says: pong
      thread1 finished execution
      thread2 finished execution

- 65. Difference between Process and Thread

| S.No. | Process | Thread |
| --- | --- | --- |
| 1 | A process is any program under execution. | A thread is a segment of a process. |
| 2 | A process is heavyweight. | A thread is lightweight, since each thread in a process shares code, data and resources. |
| 3 | A process has its own Process Control Block, stack and address space. | A thread has its parent's PCB, its own Thread Control Block and stack, and a common address space. |
| 4 | Processes are isolated. | Threads share memory. |
| 5 | A process takes more time to create and to terminate. | A thread takes less time to create and to terminate. |
| 6 | Context switching takes more time. | Context switching takes less time. |
| 7 | Processes are less efficient in terms of communication. | Threads are more efficient in terms of communication. |
| 8 | Multiprogramming holds the concept of multiple processes. | Multiple programs are not needed for multiple threads, because a single process can consist of multiple threads. |
| 9 | Process switching uses an interface in the operating system. | Thread switching does not require a call to the operating system or an interrupt to the kernel. |
| 10 | If one process is blocked, it does not affect the execution of other processes. | While one thread is blocked, a second thread in the same task cannot run (this applies to user-level threads). |
| 11 | Changes to the parent process do not affect child processes. | Since all threads of the same process share the address space and other resources, changes to the main thread may affect the behavior of the other threads of the process. |

Editor's Notes

- #31: Unix employs two RUNNING states to indicate whether the process is executing in user mode or kernel mode.

- #32: The "Preempted" and "Ready to run, in memory" states are nearly identical. A process may be preempted for a higher-priority process at the end of a system call. Preemption can only occur when a process is about to move from kernel mode to user mode; while a process is running in kernel mode, it may not be preempted. This makes UNIX unsuitable for real-time processing. Zombie: saves information to be passed to the parent of this process. Two processes are unique in UNIX: Process 0 (swapper), created at boot time, and Process 1 (init), which is spawned by process 0 and creates other processes.

- #39: xinetd is an open-source super-server daemon which runs on many Unix-like systems and manages Internet-based connectivity. It offers a more secure alternative to the older inetd ("the Internet daemon"), which most modern Linux distributions have deprecated.

- #48: Text: made read-only so you don’t accidentally modify your own instructions. Since many processes may be running the same program, it may be shared. Uninitialized data may be set to zeros.
