Search pitb

- 1. Tech Talk Full-text Search Nawab Iqbal February 2018 © 2018 Nawab Iqbal

- 3. Agenda ❖ What is Full-text Search ❖ Searching for exact substrings. ❖ Common search concerns. ❖ Solr Search platform.

- 4. Full-text Search “In a full-text search, a search engine examines all of the words in every stored document as it tries to match search criteria” - wikipedia

- 5. Full-text Search It is a bit like SQL LIKE: … WHERE LOWER(column_name) LIKE LOWER('%query_string%'); What is the big O of the above SQL query?

- 6. Big O for finding a word in text? Length of text = n; length of query = m. Compare corresponding characters of the two strings, and if a character comparison fails, restart the comparison from the next index of the text. (Figure: the text and the query drawn as rows of character boxes.)

- 7. Find String Algorithm (Figure: the text and the query aligned at index 0.) In our example, starting at index 0, the comparison fails at index 1.

- 8. Find String Algorithm (Figure: the text and the query as character boxes.) We increment the start index by 1 and start the comparison again, eventually finding a match. Comparing every start position of the text against all characters of the query takes O(n*m) in the worst case.
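A minimal Python sketch of this brute-force search (the function name `naive_find` is ours, for illustration only). The nested loop is where the O(n*m) worst case comes from:

```python
def naive_find(text: str, query: str) -> int:
    """Return the first index where query occurs in text, or -1.

    Worst case O(n*m): for each of the ~n start positions we may
    compare up to m characters before hitting a mismatch.
    """
    n, m = len(text), len(query)
    for start in range(n - m + 1):
        i = 0
        # Compare character by character until a mismatch or a full match.
        while i < m and text[start + i] == query[i]:
            i += 1
        if i == m:
            return start
    return -1


print(naive_find("QWEQWERT", "QWER"))  # 3
```

Note that after every mismatch the start index only moves forward by one, so characters of the text are re-examined many times.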

- 9. Find String Algorithm: Example 2 (Figure: the text "QWEQWERT" with indices 0-7 and the query "QWER" aligned below it.) Where should we start after this mismatch?

- 10. Find String Algorithm: Example 2 (Figure: the text "QWEQWERT" with indices 0-7 and the query "QWER".) The query word alone gives us enough knowledge to decide whether the next match should start at '1', '2', or '3'! Query preprocessing time: O(m). Execution time: O(n). Reference: Knuth-Morris-Pratt algorithm, O(n+m).
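A sketch of the Knuth-Morris-Pratt idea in Python, assuming we only need the first match. `failure_table` (our name) is the O(m) query preprocessing; `kmp_find` then scans the text once without ever moving backwards in it, giving O(n + m) overall:

```python
def failure_table(query: str) -> list[int]:
    """fail[i] = length of the longest proper prefix of query[:i+1]
    that is also a suffix of it. Built in O(m)."""
    fail = [0] * len(query)
    k = 0
    for i in range(1, len(query)):
        while k > 0 and query[i] != query[k]:
            k = fail[k - 1]
        if query[i] == query[k]:
            k += 1
        fail[i] = k
    return fail


def kmp_find(text: str, query: str) -> int:
    """Return the first index of query in text, or -1, in O(n + m)."""
    if not query:
        return 0
    fail = failure_table(query)
    k = 0  # number of query characters currently matched
    for i, ch in enumerate(text):
        # On a mismatch, fall back using the table instead of re-scanning the text.
        while k > 0 and ch != query[k]:
            k = fail[k - 1]
        if ch == query[k]:
            k += 1
        if k == len(query):
            return i - len(query) + 1
    return -1


print(failure_table("QWER"))         # [0, 0, 0, 0]
print(kmp_find("QWEQWERT", "QWER"))  # 3
```

For "QWER" the failure table is all zeros: no proper prefix of "QWE" is also a suffix of it, so after the mismatch at index 3 the comparison can resume at index 3 instead of 1.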

- 11. Preprocessing the text instead of query ❖ What if we pre-process the text instead of query? ❖ Pre-processing the text makes the query execution faster; decreasing the query execution time to O(m) ❖ E.g. Suffix trees

- 12. Pre-process the text ❖ Find all the suffixes of the original text "QWEQWERT" (indices 0-7): QWEQWERT, WEQWERT, EQWERT, QWERT, WERT, ERT, RT, T.

- 13. Pre-process the text ❖ Make a (compressed) trie* of the suffixes QWEQWERT, WEQWERT, EQWERT, QWERT, WERT, ERT, RT, T. ❖ A trie is a prefix tree, where common prefixes are factored out into the parent node.

- 14. Pre-process the text (Figure: the trie of the suffixes, with root branches Q, W, E, R, T and a terminal $ marker.) Each node also tracks the offset in the original string.

- 15. Search in O(m), where m = length of query (Figure: the same trie, with the path for 'QWER' followed from the root.) Each node also tracks the offset in the original string. Search for 'QWER'.

- 16. Suffix trie ❖ A compressed suffix trie (suffix tree) takes O(n) space and O(n*lgn) time to construct. ❖ Efficient for exact match: O(m) execution time per query! ❖ where m = length of query ❖ n = length of text ❖ Good for text that doesn't change often (e.g., search within a book).
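A simple Python sketch of searching by pre-processing the text. To keep it short, this builds an *uncompressed* suffix trie with nested dicts, which costs O(n^2) time and space rather than the O(n) space of a compressed suffix tree; the lookup is still one step per query character. The `$offsets` key and the function names are our own:

```python
def build_suffix_trie(text: str) -> dict:
    """Insert every suffix of text into a nested-dict trie.

    Each node stores, under the key "$offsets", the start offsets of all
    suffixes that pass through it. Uncompressed: O(n^2) space.
    """
    root: dict = {"$offsets": []}
    for start in range(len(text)):
        node = root
        node["$offsets"].append(start)
        for ch in text[start:]:
            node = node.setdefault(ch, {"$offsets": []})
            node["$offsets"].append(start)
    return root


def find_all(trie: dict, query: str) -> list[int]:
    """Follow one edge per query character (O(m)), then list the offsets."""
    node = trie
    for ch in query:
        if ch not in node:
            return []
        node = node[ch]
    return sorted(node["$offsets"])


trie = build_suffix_trie("QWEQWERT")
print(find_all(trie, "QWER"))  # [3]
print(find_all(trie, "QWE"))   # [0, 3]
```

Once the trie is built, every query reuses it, which is why this pays off for text that rarely changes.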

- 17. Precision and Recall ❖ Precision: ❖ Number of relevant instances that have been retrieved / total retrieved instances ❖ Recall: ❖ Number of relevant instances that have been retrieved / total relevant instances
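The two ratios in Python, treating the results as sets of document ids (the ids in the usage example are made up):

```python
def precision_recall(retrieved: set, relevant: set) -> tuple[float, float]:
    """Precision = relevant retrieved / retrieved; recall = relevant retrieved / relevant."""
    hits = len(retrieved & relevant)
    precision = hits / len(retrieved) if retrieved else 0.0
    recall = hits / len(relevant) if relevant else 0.0
    return precision, recall


print(precision_recall({1, 2, 3, 4}, {2, 4, 5}))  # (0.5, 0.6666666666666666)
```

Here two of the four retrieved documents are relevant (precision 2/4), and two of the three relevant documents were found (recall 2/3).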

- 19. Search concerns: 1 ❖ We commonly search on words (delimiters and spaces are ignored). ❖ Spell correction ❖ The query 'runnind' should show results for 'running'. ❖ Synonyms ❖ Should the query 'killer' show results for 'murderer' or 'assassin'? ❖ Removing elision: it's -> it is, don't -> do not, etc.
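One common way to handle 'runnind' -> 'running' is to pick the dictionary term with the smallest edit (Levenshtein) distance. A minimal Python sketch; the vocabulary and the `max_distance` cut-off of 2 are assumptions for illustration, not Solr defaults:

```python
def edit_distance(a: str, b: str) -> int:
    """Levenshtein distance: minimum insertions, deletions and substitutions."""
    prev = list(range(len(b) + 1))
    for i, ca in enumerate(a, start=1):
        cur = [i]
        for j, cb in enumerate(b, start=1):
            cur.append(min(prev[j] + 1,                 # delete ca
                           cur[j - 1] + 1,              # insert cb
                           prev[j - 1] + (ca != cb)))   # substitute (or match)
        prev = cur
    return prev[-1]


def correct(word: str, vocabulary: list[str], max_distance: int = 2) -> str:
    """Return the closest term from a non-empty vocabulary, or word if none is close."""
    best = min(vocabulary, key=lambda term: (edit_distance(word, term), term))
    return best if edit_distance(word, best) <= max_distance else word


print(correct("runnind", ["running", "ruined", "run"]))  # running
```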

- 20. Search concerns: 2 ❖ Match words with the same root even if the form is different. ❖ Searching for 'eating' should also show results for 'eaten', 'eat' and 'ate'. ❖ Stemming generalizes some language rules (e.g., trimming 'ing' or 'ed' from the end of a word to get its root). It fails in special cases, e.g., 'eaten'. However, it is faster. ❖ Lemmatization takes the language dictionary into account, so the root of 'eaten' is correctly found as 'eat'.
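To make the trade-off concrete, a toy Python comparison. Both the suffix rules and the lemma dictionary below are hypothetical and far smaller than real ones (Solr ships real stemmers such as `solr.PorterStemFilterFactory`):

```python
# Hypothetical, deliberately tiny rule set and dictionary.
SUFFIXES = ("ing", "ed", "s")
LEMMAS = {"eating": "eat", "eaten": "eat", "ate": "eat", "eats": "eat"}


def toy_stem(word: str) -> str:
    """Strip the first matching suffix, keeping at least 3 characters."""
    for suffix in SUFFIXES:
        if word.endswith(suffix) and len(word) - len(suffix) >= 3:
            return word[: -len(suffix)]
    return word


def toy_lemmatize(word: str) -> str:
    """Look the word up in a (tiny) dictionary of known forms."""
    return LEMMAS.get(word, word)


for w in ["eating", "eaten", "ate"]:
    print(w, toy_stem(w), toy_lemmatize(w))
# eating eat eat
# eaten eaten eat
# ate ate eat
```

The rules miss irregular forms, and crude rules can also over-trim: `toy_stem("running")` gives "runn".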

- 21. Search concerns: 3 ❖ Should we skip indexing of common words (aka stop words), e.g., is, are, I, the, not? ❖ Advantage: smaller index size. Ignoring these words mostly does not affect search results, since they are present in almost all documents, rendering each document equally valuable (or useless) for them. ❖ Disadvantage: we won't be able to search queries like 'to be or not to be'.
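A minimal sketch of stop-word filtering in Python. The stop list is a hypothetical sample (the slide's words plus 'to', 'be' and 'or'); it shows how the query 'to be or not to be' disappears entirely:

```python
# Hypothetical stop list: the slide's examples plus "to", "be", "or".
STOP_WORDS = {"is", "are", "i", "the", "not", "to", "be", "or"}


def remove_stop_words(tokens: list[str]) -> list[str]:
    """Drop tokens whose lowercase form is in the stop list."""
    return [t for t in tokens if t.lower() not in STOP_WORDS]


print(remove_stop_words("mine the bitcoin".split()))    # ['mine', 'bitcoin']
print(remove_stop_words("to be or not to be".split()))  # []
```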

- 22. Search concerns: 4 ❖ Ignore case and accents ❖ Even though the terms don't match exactly, most users would like to see results with résumé, resumé, resume, or RESUME when searching for resumé.
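A Python sketch of case and accent folding with only the standard library: Unicode NFKD decomposition splits 'é' into 'e' plus a combining accent, which is then dropped. In Solr this is typically handled by analysis filters such as `solr.LowerCaseFilterFactory` and `solr.ASCIIFoldingFilterFactory`; the `fold` function below is only an illustration:

```python
import unicodedata


def fold(term: str) -> str:
    """Lowercase a term and strip accents, e.g. 'RÉSUMÉ' -> 'resume'."""
    # NFKD splits "é" into "e" + U+0301 (combining acute accent).
    decomposed = unicodedata.normalize("NFKD", term)
    stripped = "".join(ch for ch in decomposed if not unicodedata.combining(ch))
    return stripped.casefold()


print([fold(w) for w in ["résumé", "resumé", "resume", "RESUME"]])
# ['resume', 'resume', 'resume', 'resume']
```

Applying the same folding at index time and at query time is what lets all four spellings meet on one indexed term.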

- 23. Search Design: 1 ❖ While indexing, break the text into terms. ❖ Terms are generally defined as substrings separated by whitespace (space, tabs, Unicode space,…) or delimiters. ❖ Create a term-to-locations mapping, ❖ aka an inverted index or postings list. ❖ Logically, you can think of it as: ❖ HashMap<Term, List<Occurrence>>
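A Python sketch of the `HashMap<Term, List<Occurrence>>` idea, where an occurrence is a (document id, token position) pair. The tokenizer (lowercased runs of word characters) is a simplifying assumption; the three documents are the tokenized sample texts used on the following slides:

```python
import re
from collections import defaultdict


def tokenize(text: str) -> list[str]:
    # Assumption: a term is a run of word characters; case is ignored.
    return re.findall(r"\w+", text.lower())


def build_index(docs: dict[int, str]) -> dict[str, list[tuple[int, int]]]:
    """Map each term to its occurrences as (doc_id, position) pairs."""
    index: dict[str, list[tuple[int, int]]] = defaultdict(list)
    for doc_id, text in docs.items():
        for position, term in enumerate(tokenize(text)):
            index[term].append((doc_id, position))
    return dict(index)


docs = {1: "It is your job not mine", 2: "It is a gold mine", 3: "mine the bitcoin"}
index = build_index(docs)
print(index["mine"])  # [(1, 5), (2, 4), (3, 0)]
```

Keeping positions (not just document ids) is what later makes phrase and proximity queries possible.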

- 24. Search Design: 2 ❖ For any query, split it into terms. ❖ Look up each term separately in the index created above to find the set of documents that match that term. ❖ The intersection of all these sets gives the documents that contain all the terms. ❖ This is a very basic way of executing a query; the real world is more complex.
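A sketch of this basic AND query in Python, using the same three sample documents. Each term maps to a set of document ids; intersecting starting from the smallest set keeps the intermediate result small (a common optimization, not something the slide prescribes):

```python
def and_query(docs: dict[int, str], query: str) -> set[int]:
    """Return ids of documents containing every query term."""
    # Build term -> set(doc ids); positions are not needed for a plain AND.
    index: dict[str, set[int]] = {}
    for doc_id, text in docs.items():
        for term in text.lower().split():
            index.setdefault(term, set()).add(doc_id)

    terms = query.lower().split()
    if not terms:
        return set()
    # Start from the rarest term so the running intersection stays small.
    postings = sorted((index.get(term, set()) for term in terms), key=len)
    result = set(postings[0])
    for docs_with_term in postings[1:]:
        result &= docs_with_term
    return result


DOCS = {1: "It is your job not mine", 2: "It is a gold mine", 3: "mine the bitcoin"}
print(and_query(DOCS, "it mine"))       # {1, 2}
print(and_query(DOCS, "mine bitcoin"))  # {3}
```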

- 25. Inverted Index: Sample texts

| Doc | Text | Tokens |
|---|---|---|
| 1 | It's your job, not mine | It is your job not mine |
| 2 | It is a gold mine | It is a gold mine |
| 3 | mine the bitcoin | mine the bitcoin |

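- 26. Inverted Index and term dictionary

| Words | Doc Frequency | Document Id: Number of occurrences |
|---|---|---|
| a | 1 | 2: 1 |
| It | 2 | 1: 1, 2: 1 |
| is | 2 | 1: 1, 2: 1 |
| your | 1 | 1: 1 |
| not | 1 | 1: 1 |
| job | 1 | 1: 1 |
| gold | 1 | 2: 1 |
| mine | 3 | 1: 1, 2: 1, 3: 1 |
| bitcoin | 1 | 3: 1 |
| the | 1 | 3: 1 |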

- 27. Inverted Index and term dictionary ❖ The Words and Doc Frequency columns of the table above form the term dictionary. It is usually small and kept in memory.

- 28. Inverted Index and term dictionary ❖ The per-document occurrence entries of the table above are the postings lists. These are usually kept in files, and only the relevant lists are read for each query execution. ❖ Postings can be configured to track the count of each term in each document, and can also keep the term's offsets within the document for each occurrence instead of just the count.

- 29. Inverted Index and term dictionary ❖ Doc Frequency is used to calculate the score of each matching document. If a term occurs in fewer documents across the whole index, a match on that term should contribute a higher score to the document. ❖ So, for the query 'bitcoin it', document 3 should rank higher than documents 1 and 2.

- 30. Calculating score for a document ❖ TF-IDF ❖ Term Frequency (TF): proportional to the number of occurrences of the term in the document. ❖ Inverse Document Frequency (IDF): inverse of the number of documents in your collection that contain the term. ❖ Different implementations may use slightly different formulas.

- 31. Calculating score for a document ❖ Query = 'bitcoin it', using the DF and TF values from the term dictionary above. ❖ Score formula = TF * Inverse-DF = TF / DF ❖ Term = it, DF = 2: Score(doc1, 'it') = 1/2, Score(doc2, 'it') = 1/2, Score(doc3, 'it') = 0 ❖ Term = bitcoin, DF = 1: Score(doc1, 'bitcoin') = 0, Score(doc2, 'bitcoin') = 0, Score(doc3, 'bitcoin') = 1 ❖ Total score(doc) = Score(doc, term1) + Score(doc, term2) + … ❖ Score(doc1) = 1/2, Score(doc2) = 1/2, Score(doc3) = 1
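The arithmetic above, reproduced in Python with exact fractions. It uses the slide's simplified formula (TF / DF summed over the query terms; real engines use a logarithmic IDF plus normalization) and lowercases terms so that 'It' and 'it' match. The function name is ours:

```python
from collections import Counter
from fractions import Fraction

DOCS = {1: "It is your job not mine", 2: "It is a gold mine", 3: "mine the bitcoin"}


def tf_over_df_scores(docs: dict[int, str], query: str) -> dict[int, Fraction]:
    """Score(doc) = sum over query terms of TF(term, doc) / DF(term)."""
    tf = {doc_id: Counter(text.lower().split()) for doc_id, text in docs.items()}
    scores = {}
    for doc_id in docs:
        total = Fraction(0)
        for term in query.lower().split():
            df = sum(1 for counts in tf.values() if counts[term] > 0)
            if df:  # a term found in no document contributes nothing
                total += Fraction(tf[doc_id][term], df)
        scores[doc_id] = total
    return scores


for doc_id, s in tf_over_df_scores(DOCS, "bitcoin it").items():
    print(f"doc{doc_id}: {s}")
# doc1: 1/2
# doc2: 1/2
# doc3: 1
```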

- 32. BM25 ❖ Relevance score calculation with a stronger theoretical foundation ❖ Many search platforms are changing their default to BM25. ❖ It also considers the average document length in the whole collection. ❖ http://opensourceconnections.com/blog/2015/10/16/bm25-the-next-generation-of-lucene-relevation/
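A textbook BM25 sketch in Python on the same three documents. It assumes the parameters k1 = 1.2 and b = 0.75 (Lucene's defaults) and the IDF form log(1 + (N - df + 0.5) / (df + 0.5)); Lucene's exact scoring differs in small details. The `b` term is where each document's length relative to the average length enters:

```python
import math
from collections import Counter


def bm25_scores(docs: dict[int, str], query: str,
                k1: float = 1.2, b: float = 0.75) -> dict[int, float]:
    tokenized = {d: text.lower().split() for d, text in docs.items()}
    n_docs = len(tokenized)
    avgdl = sum(len(toks) for toks in tokenized.values()) / n_docs
    counts = {d: Counter(toks) for d, toks in tokenized.items()}

    scores = {}
    for d, toks in tokenized.items():
        s = 0.0
        for term in query.lower().split():
            df = sum(1 for c in counts.values() if c[term] > 0)
            f = counts[d][term]
            if df == 0 or f == 0:
                continue
            idf = math.log(1 + (n_docs - df + 0.5) / (df + 0.5))
            # Length normalization: longer-than-average docs are penalized.
            norm = 1 - b + b * len(toks) / avgdl
            s += idf * f * (k1 + 1) / (f + k1 * norm)
        scores[d] = s
    return scores


DOCS = {1: "It is your job not mine", 2: "It is a gold mine", 3: "mine the bitcoin"}
scores = bm25_scores(DOCS, "bitcoin it")
print(sorted(scores, key=scores.get, reverse=True))  # [3, 2, 1]
```

Unlike the plain TF / DF example, doc2 now edges out doc1: both contain 'it' once, but doc2 is shorter.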

- 33. Updating the Inverted Index ❖ The inverted index is optimized for disk storage and reads, but not for updates. ❖ Let's say the term 'mine' in Doc3 is replaced with 'whine'. ❖ Deleting Doc3 from the postings list of 'mine' in place would require shifting data within the file and may trigger a full rewrite of the file.

- 34. Inverted Index and Segments ❖ We mark Doc3 as deleted in its original segment. ❖ Append the updated document to the segment that is currently open for writing. ❖ The collection of all the segments (and their delete files) is your complete index. ❖ Solr records the deleted document in a "deleted" file for the same segment.

Original segment: the term dictionary above, plus its deleted-documents file listing Doc3.

New segment:

| Words | DF | TF |
|---|---|---|
| whine | 1 | 3: 1 |
| the | 1 | 3: 1 |
| bitcoin | 1 | 3: 1 |
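A toy Python model of this scheme, to show why updates never rewrite existing postings. All class and method names are hypothetical; each added document gets a fresh internal number (as Lucene does), and a delete only adds that number to the segment's deleted set:

```python
class Segment:
    def __init__(self) -> None:
        self.postings: dict[str, set[int]] = {}  # term -> internal doc numbers
        self.doc_ids: dict[int, str] = {}        # internal number -> external id
        self.deleted: set[int] = set()           # the segment's "delete file"


class SegmentedIndex:
    def __init__(self) -> None:
        self.sealed: list[Segment] = []  # flushed segments; postings never change
        self.open = Segment()            # segment currently open for writing
        self.next_number = 0

    def _all_segments(self) -> list[Segment]:
        return self.sealed + [self.open]

    def flush(self) -> None:
        """Seal the open segment and start a new one."""
        if self.open.doc_ids:
            self.sealed.append(self.open)
            self.open = Segment()

    def add_or_update(self, doc_id: str, text: str) -> None:
        # Mark any older copy as deleted instead of editing its postings.
        for seg in self._all_segments():
            for number, existing in seg.doc_ids.items():
                if existing == doc_id:
                    seg.deleted.add(number)
        # Append the new version to the open segment.
        number = self.next_number
        self.next_number += 1
        self.open.doc_ids[number] = doc_id
        for term in text.lower().split():
            self.open.postings.setdefault(term, set()).add(number)

    def search(self, term: str) -> set[str]:
        """Union of live matches across all segments."""
        hits: set[str] = set()
        for seg in self._all_segments():
            live = seg.postings.get(term, set()) - seg.deleted
            hits.update(seg.doc_ids[n] for n in live)
        return hits


index = SegmentedIndex()
index.add_or_update("doc1", "It is your job not mine")
index.add_or_update("doc2", "It is a gold mine")
index.add_or_update("doc3", "mine the bitcoin")
index.flush()
index.add_or_update("doc3", "whine the bitcoin")
print(sorted(index.search("mine")))  # ['doc1', 'doc2']
print(index.search("whine"))         # {'doc3'}
```

Deleted entries still occupy space in the old segment until segments are merged, which is why merge policies matter.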

- 35. Inverted Index and Segments: 2 ❖ Important segue: Solr keeps writing to an in-memory inverted index until a "commit" is called or the allocated memory fills up. At that point, the index is flushed to disk as an immutable Lucene segment. On commit, the segment is also added to a segments file, which lists all committed segments. ❖ Every update for which Solr has acknowledged success is written to the transaction log (tlog) and flushed to disk, so even if no commit has run on the index, the indexed data is not lost. ❖ Solr issues a commit before shutting down (if the shutdown is graceful), and when it restarts it replays the tlog entries written since the last commit.
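A simplified Python sketch of the write-ahead-log idea behind the tlog: append and flush each update before acknowledging it, forget the entries on commit, and replay whatever is left after a restart. The class and file format are hypothetical; Solr's real tlog uses its own binary format and rotation rules:

```python
import json
import os
import tempfile


class TinyTlog:
    def __init__(self, path: str) -> None:
        self.path = path

    def append(self, update: dict) -> None:
        """Persist one update before it is acknowledged."""
        with open(self.path, "a", encoding="utf-8") as f:
            f.write(json.dumps(update) + "\n")
            f.flush()
            os.fsync(f.fileno())

    def commit(self) -> None:
        """Once the index itself is committed, older entries are not needed."""
        with open(self.path, "w", encoding="utf-8"):
            pass

    def replay(self) -> list[dict]:
        """Return the updates logged since the last commit, in order."""
        if not os.path.exists(self.path):
            return []
        with open(self.path, encoding="utf-8") as f:
            return [json.loads(line) for line in f if line.strip()]


if __name__ == "__main__":
    with tempfile.TemporaryDirectory() as tmp:
        log = TinyTlog(os.path.join(tmp, "tlog"))
        log.append({"id": "1"})
        log.commit()
        log.append({"id": "2"})
        log.append({"id": "3"})
        # Simulate a crash: a fresh process only needs to replay ids 2 and 3.
        print(TinyTlog(log.path).replay())  # [{'id': '2'}, {'id': '3'}]
```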

- 36. Lucene ❖ Lucene Core ❖ A set of Java JARs for indexing and search ❖ spellchecking, ❖ hit highlighting ❖ advanced analysis/tokenization capabilities.

- 37. Solr ❖ search platform built on Apache Lucene™. ❖ web admin interface ❖ XML/HTTP and JSON/Python/Ruby APIs ❖ hit highlighting ❖ faceted search ❖ caching ❖ replication

- 38. ZooKeeper ❖ Leader election for Solr replicas ❖ ZooKeeper is needed when the index has multiple replicas. ❖ Optionally also used for storing 'core' config. ❖ Ref: https://lucene.apache.org/solr/guide/6_6/setting-up-an-external-zookeeper-ensemble.html

- 39. Nutch ❖ Website crawler ❖ Can run on a single machine or a Hadoop cluster. ❖ Specify a list of URLs to start crawling ❖ Parse the text (customizable options) ❖ Send the parsed documents to Solr

- 40. Start your Solr server ❖ JDK 8 (get the latest update; Solr hits a bug in some earlier JDK 8 releases) ❖ Ant ❖ Ivy ❖ If you want to follow my text analysis examples: ❖ https://github.com/niqbal/solr_cores

- 41. Documents to bag of words! For indexing, different document formats should be converted to streams of text. (Ref: Apache Tika / Solr Cell) (Figure: a document ("A critical abstract provides …"), a movie ("Tom and Jerry Jump"), a chart ("Population 7Billion"), and a book ("text . text"), each reduced to text.)

- 42. Solr Glossary: 1 ❖ Solr is a JVM process. ❖ Core ❖ This is like a table in an RDBMS. ❖ schema.xml ❖ Schema of the "table". ❖ Document ❖ One row in the "table".

- 43. Solr Glossary: 2 ❖ Each Solr process has ❖ zoo.cfg ❖ solr.xml ❖ One Solr process can host multiple cores ("tables"), each with its own config files.

- 44. Solr Core configs ❖ A Solr core can be pre-created, or created through an API while the server is already running. ❖ The following configs are specific to each core: ❖ solrconfig.xml ❖ Query parser config, indexing memory and threads. ❖ core.properties ❖ This file identifies its containing folder as a Solr core, so that it gets discovered by the Solr process. ❖ Schema: schema definition of this core

- 45. schema.xml: Field classes ❖ Solr has Field classes, which can be used to define Field types. e.g., ❖ binary : base64 ❖ TextField: general text, usually multiple words/tokens ❖ DoublePointField: double ❖ DatePointField: Date type data ❖ …

- 46. schema.xml: Field types ❖ A field type contains the following specification: ❖ Name of the field type ❖ Parent field class (e.g., DoublePointField, TextField, etc.) ❖ DocValues: for efficient sorting and faceting on this field. Available for a subset of all field classes. ❖ Index-time analysis config ❖ Query-time analysis config

- 47. schema.xml: Field definition ❖ A Field in a Solr schema is like a column in a relational database. ❖ A field definition consists of: ❖ Field name ❖ Field type (also defined in your schema) ❖ Stored?: needed for hit highlighting, field retrieval, etc. ❖ When you retrieve field values from Solr, the stored values can be returned. ❖ Indexed?: needed for searching on this field.

- 48. schema.xml: Dynamic Fields ❖ Ability to create new fields in your “table” (core in Solr terms) on the fly. ❖ May not be needed for most use cases.

- 49. schema.xml: copyField ❖ copyField works like a trigger that populates another field from the value of one field. ❖ Possible use: you want to run multiple types of analysis on the same value but don't want to expose that detail to the indexing client. ❖ `<copyField source="title" dest="tokenized_title"/>`

- 50. solrconfig.xml: Index config ❖ ramBufferSizeMB and maxBufferedDocs ❖ When to flush a segment to the disk ❖ MergePolicy and MergeScheduler ❖ when to trigger merging of segments

- 51. solrconfig.xml: Query config

```xml
<requestHandler name="/query" class="solr.SearchHandler">
  <lst name="defaults">
    <str name="wt">json</str>
    <str name="defType">edismax</str>
    <str name="qf">text^0.5 features^1.0 name^1.2</str>
    <str name="hl">on</str>
    <str name="hl.fl">features name</str>
  </lst>
</requestHandler>
```

❖ Specify a request handler for the core. ❖ Configure the query parser here: Lucene, DisMax, eDisMax, etc. ❖ Fields listed in hl.fl should have stored="true".

- 52. solrconfig.xml: hard & soft commits ❖ Hard commit: flushes the in-memory indexes to new Lucene segments, and optionally makes them available to new incoming queries. ❖ Soft commit: makes the in-memory index available to new incoming queries without flushing to disk. ❖ You can tune both intervals independently.

- 53. Solr Glossary: 3 ❖ Shard: when the data grows beyond the capacity of one core, the core can be divided into "shards". Each shard holds a piece of the table. ❖ Collection: all the shards of one type of data. ❖ Replica: a copy of a shard; the replication factor is the number of identical copies. ❖ Leader: among all the replicas of a shard there is exactly one leader, i.e., one leader per shard/partition. ZooKeeper is used to elect the shard leader from the replicas.
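A minimal Python sketch of hash-based shard assignment: hash the document id and map the hash onto equal-sized ranges, one per shard. Solr's default `compositeId` router follows this hash-range idea but uses MurmurHash3; the MD5 stand-in and the function name here are assumptions for illustration:

```python
import hashlib


def shard_for(doc_id: str, num_shards: int) -> int:
    """Pick a shard by splitting the 32-bit hash space into equal ranges."""
    digest = hashlib.md5(doc_id.encode("utf-8")).digest()
    h = int.from_bytes(digest[:4], "big")  # 0 <= h < 2**32
    range_size = 2**32 // num_shards
    # min() folds the few leftover hashes at the very top into the last shard.
    return min(h // range_size, num_shards - 1)


for doc_id in ["1", "2", "3", "4"]:
    print(doc_id, "-> shard", shard_for(doc_id, 2))
```

Because the mapping depends only on the id, any node can compute the target shard for a document and forward it to that shard's leader.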

- 54. Solr Cloud ❖ All replicas of each shard participate in indexing and querying. ❖ Queries are equally distributed across all copies/ replicas of the shards. ❖ Indexing/querying continues as long as there is one healthy replica per shard.

- 55. Indexing flow ❖ An index request can be sent to any node. ❖ The node gets the shard distribution from ZooKeeper and finds the leader core of the target shard. ❖ The leader receives the indexing request and indexes it locally. ❖ Even if the indexed document is not yet flushed to disk, the request is logged in the tlog and persisted. ❖ The leader sends the indexing request to healthy replicas. ❖ It sends a SUCCESS code after sending the indexing request to the replicas (it doesn't wait for the replicas' responses; the tlog is there for consistency).

- 56. Indexing flow (on each core) ❖ A core receives the indexing request and analyzes each field using the analysis configured in schema.xml. ❖ If indexed=true, the analyzed text is saved in the index (the inverted list!). ❖ If stored=true, the exact text is stored. ❖ indexed and stored can be used independently of each other (all 4 combinations are valid).

- 57. Query flow (SolrCloud) ❖ A query can be sent to any node. ❖ This first node becomes the controller (aggregator) node and uses information in ZooKeeper to determine the replicas. ❖ The query is distributed to one replica of each shard. ❖ The aggregator merges the results from all shards and determines the overall top n. ❖ A second query goes to the subset of shards holding the top n documents, to fetch more fields for them.
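The aggregator's merge step can be sketched in Python: each shard returns its own top n as (score, doc id) pairs sorted by descending score, `heapq.merge` combines them lazily, and the winners are grouped by shard for the second, field-fetching request. The shard names and scores below are made up:

```python
import heapq
import itertools


def merge_top_n(shard_hits: dict[str, list[tuple[float, str]]], n: int):
    """Return the global top n hits and, per shard, which docs to fetch fields for."""
    tagged = [[(score, doc, shard) for score, doc in hits]
              for shard, hits in shard_hits.items()]
    # Each input list is already sorted by descending score.
    merged = heapq.merge(*tagged, key=lambda hit: hit[0], reverse=True)
    top = list(itertools.islice(merged, n))

    fetch_plan: dict[str, list[str]] = {}
    for _, doc, shard in top:
        fetch_plan.setdefault(shard, []).append(doc)
    return top, fetch_plan


shard_hits = {
    "shard1": [(0.9, "a1"), (0.4, "a2")],
    "shard2": [(0.7, "b1"), (0.6, "b2")],
    "shard3": [(0.1, "c1")],
}
top, plan = merge_top_n(shard_hits, 3)
print(plan)  # {'shard1': ['a1'], 'shard2': ['b1', 'b2']}
```

shard3 holds none of the top 3, so it is skipped in the second phase.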

- 58. Query flow (on each core) ❖ The query reaches a query handler (e.g., solr.SearchHandler). ❖ It is parsed by one of the parsers (Lucene, DisMax, eDisMax). ❖ Options like split-on-whitespace (sow=true/false) are important. ❖ Each term in the query is then analyzed by each field definition specified in the call parameters, and then searched for in that field in all* documents of the node. ❖ *You can also add a filter so that not all documents are searched. ❖ A score is generated for each field of a document, and then a cumulative score for the whole document. ❖ Documents are ranked and returned to the client, which can be a user or the aggregator node (in the case of SolrCloud).

- 59. System Design (Figure: Web Servers, Solr Client, Database, Solr nodes, and an odd number of ZooKeeper machines (in the case of SolrCloud).) ❖ The Solr Client can optionally be made ZK aware.

- 60. Solr standalone: 1 ❖ bin/solr start -s /path/to/solr_core_root ❖ many optional parameters to specify port, solrCloud (or no solrCloud), number of replicas, etc. ❖ default port: 8983 ❖ bin/solr stop ❖ stops the solr server ❖ Production install scripts are different. ‘bin/solr’ is good for sample code and quick-start.

- 61. Solr standalone: 2 ❖ Index a document in the itu core: ❖ http://localhost:8983/solr/#/itu/documents ❖ Sample JSON: ❖ {"id":"3", "author":"Javed", "impactfactor":5} ❖ You can also use other API clients. Ensure that the double quotes are ASCII, not Unicode.

- 62. Solr standalone: 3 ❖ query '*' ❖ http://localhost:8983/solr/itu/select?defType=edismax&q=*&qf=author&fl=* ❖ query jav* ❖ http://localhost:8983/solr/itu/select?defType=edismax&q=jav*&qf=author&fl=*

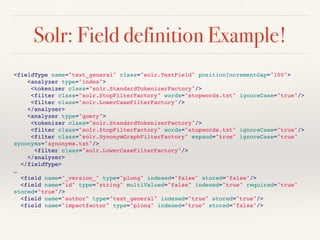

- 63. Solr: Field definition Example!

```xml
<fieldType name="text_general" class="solr.TextField" positionIncrementGap="100">
  <analyzer type="index">
    <tokenizer class="solr.StandardTokenizerFactory"/>
    <filter class="solr.StopFilterFactory" words="stopwords.txt" ignoreCase="true"/>
    <filter class="solr.LowerCaseFilterFactory"/>
  </analyzer>
  <analyzer type="query">
    <tokenizer class="solr.StandardTokenizerFactory"/>
    <filter class="solr.StopFilterFactory" words="stopwords.txt" ignoreCase="true"/>
    <filter class="solr.SynonymGraphFilterFactory" expand="true" ignoreCase="true" synonyms="synonyms.txt"/>
    <filter class="solr.LowerCaseFilterFactory"/>
  </analyzer>
</fieldType>
…
<field name="_version_" type="plong" indexed="false" stored="false"/>
<field name="id" type="string" multiValued="false" indexed="true" required="true" stored="true"/>
<field name="author" type="text_general" indexed="true" stored="true"/>
<field name="impactfactor" type="plong" indexed="true" stored="false"/>
```

- 64. Some text analysis! ❖ {"id":"4", "author_shingles":"pitb is in technology park"} ❖ /select?defType=edismax&q=technology%20park&qf=author_shingles ❖ Supports phrase queries somewhat efficiently. ❖ {"id":"5", "author_ngram":"kilogram is bigger"} ❖ /select?defType=edismax&q=gram&qf=author_ngram ❖ Similar to wildcard functionality (without the overhead of wildcards). ❖ {"id":"6", "author_edge_ngram":"milligram is smaller"} ❖ /select?defType=edismax&q=milli&qf=author_edge_ngram ❖ Similar to a wildcard at the end only.
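What these three analyses produce can be sketched in Python. The shingle size and n-gram lengths are assumptions (the real field definitions are in the solr_cores repository linked earlier), and unlike Solr's shingle filter, which by default also keeps single words, this sketch emits only two-word shingles:

```python
def shingles(tokens: list[str], size: int = 2) -> list[str]:
    """Word n-grams: adjacent tokens joined into one indexed term."""
    return [" ".join(tokens[i:i + size]) for i in range(len(tokens) - size + 1)]


def ngrams(word: str, min_n: int = 3, max_n: int = 4) -> list[str]:
    """Every substring of length min_n..max_n, so inner fragments match."""
    return [word[i:i + n]
            for n in range(min_n, max_n + 1)
            for i in range(len(word) - n + 1)]


def edge_ngrams(word: str, min_n: int = 1, max_n: int = 10) -> list[str]:
    """Only prefixes, so a query matches the start of a word."""
    return [word[:n] for n in range(min_n, min(max_n, len(word)) + 1)]


print(shingles("pitb is in technology park".split()))
# ['pitb is', 'is in', 'in technology', 'technology park']
print("gram" in ngrams("kilogram"))         # True
print("milli" in edge_ngrams("milligram"))  # True
print("gram" in edge_ngrams("milligram"))   # False
```

The cost moves to index time: each word produces many extra terms, but the query becomes a plain term lookup instead of a wildcard scan.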

- 65. Solr cloud config demo: 1 ❖ Use ifconfig (ipconfig on Windows) to find your local IP. ❖ SolrCloud requires an entry in the hosts file for the hostname (see the last entry in the slide's hosts-file screenshot). ❖ /etc/hosts on Mac

- 66. Solr cloud config demo: 2 ❖ ./bin/solr start -e cloud ❖ a utility to create multiple Solr processes on localhost ❖ Specify ❖ number of Solr processes ❖ number of shards ❖ number of replicas for each shard ❖ if you don’t specify ports as parameter, then Solr runs on default ports (printed in console output)

- 67. Solr cloud config demo: 3 ❖ This script relies on embedded ZooKeeper. In production, the recommended practice is to run ZooKeeper on separate hosts. ❖ To stop all processes at once: bin/solr stop -all ❖ Find more: https://lucene.apache.org/solr/guide/6_6/shards-and-indexing-data-in-solrcloud.html ❖ Some Solr documentation pages are obsolete. Make sure you read the latest documents.

- 68. Solr cloud config demo: 4 ❖ http://localhost:8983/solr/#/~cloud OR http://localhost:7574/solr/#/~cloud ❖ SolrCloud intelligently distributes shards among the available Solr processes. The replicas of a shard *don't* reside on the same process (see port).

- 69. Solr cloud config demo: 5 ❖ Use any host to insert the following or similar documents: ❖ {"id":"1", "_text_":"asad"} ❖ {"id":"2", "_text_":"iqbal"} ❖ These will be distributed among the two shards.

- 70. Solr cloud config demo: 6 ❖ Query from any node: ❖ [Host]/solr/ferozepur/select?defType=edismax&q=asad%20OR%20iqbal ❖ where host can be any of: ❖ http://192.168.8.101:7574 ❖ http://192.168.8.101:8983 ❖ Note the checkbox on 'edismax'!

- 71. Solr admin UI pages

- 72. Measuring search quality ❖ Click-Through Rate (CTR): what fraction of searches resulted in navigation to a search result. ❖ Mean Reciprocal Rank (MRR): if the user clicked, was the clicked document high in the results? ❖ Normalized Discounted Cumulative Gain (nDCG): ❖ Considers the ranking of the top few results instead of only the clicked document (as MRR does). ❖ Requires more involved user studies.
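The two ranking metrics in a short Python sketch. For MRR, a search with no click is assumed to contribute 0. For nDCG, the graded relevance labels would come from a user study; this uses the linear-gain variant rel / log2(rank + 1) (another common variant uses 2^rel - 1 as the gain):

```python
import math
from typing import Optional


def mean_reciprocal_rank(clicked_ranks: list[Optional[int]]) -> float:
    """clicked_ranks[i] is the 1-based rank clicked in search i, or None."""
    if not clicked_ranks:
        return 0.0
    return sum(1 / r for r in clicked_ranks if r is not None) / len(clicked_ranks)


def ndcg_at_k(relevances: list[float], k: int) -> float:
    """relevances are graded labels of the returned results, in ranked order."""
    def dcg(rels: list[float]) -> float:
        # Position i (0-based) is rank i + 1, discounted by log2(rank + 1).
        return sum(rel / math.log2(i + 2) for i, rel in enumerate(rels[:k]))

    ideal = dcg(sorted(relevances, reverse=True))
    return dcg(relevances) / ideal if ideal > 0 else 0.0


print(mean_reciprocal_rank([1, 2, None, 4]))  # 0.4375
print(ndcg_at_k([3, 2, 1], k=3))              # 1.0 (already ideally ordered)
```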

- 73. More… ❖ Streaming ❖ Deep paging (use cursor mark): ❖ Graph search ❖ Solr Joins (employ wisely!) ❖ 1:N relationship in Solr (expensive for updates) ❖ Query Completion / Autosuggest ❖ Phrase search/ Proximity search

- 74. More… More… ❖ Language Identification (see: LangDetect or Tika) ❖ Custom document routing ❖ Parallel SQL ❖ Tuning caches (documentCache, queryResultCache, filterCache, …) ❖ Tuning soft commit and hard commit; and near-realtime search.

- 75. ❖ This is the last slide. ❖ The devil is in the details, and they surface at scale.
