Synchronization in distributed computing

- 1. Synchronization in Distributed Systems Presented by S.Vijayalakshmi I-Msc(IT) Nadar Saraswathi College of arts and science, Theni Department of CS & IT

- 3. Synchronisation In a centralized system: all processes reside on the same system utilize the same clock. In a distributed system: like synchronize everyone’s watch in the classroom.

- 4. Global Time Global Time is utilized to provide timestamps for processes and data. Physical clock: concerned with “People” time Logical clock: concerned with relative time and maintain logical consistency

- 5. Physical Clocks There are two aspects: Obtaining an accurate value for physical time Synchronizing the concept of physical time throughout the distributed system These can be implemented using centralized algorithms or distributed algorithms

- 6. Obtaining an Accurate Physical Time A physical time server is needed to access the current time from a universal time coordinator (UTC). Two sources for UTC: WWV shortwave radio station in Ft. Collins, Colorado Geostationary Operational Environmental Satellites (GEOS)

- 7. Synchronizing Physical Time The difference in time between two clocks due to drifting is defined as clock skew. As long as any and every two clocks differ by a value less than the maximum skew value, the time service is considered to be maintaining synchronization.

- 8. How to synchronize two clocks in A and B? The information necessary to read the value must be communicated across the network to location B. B’s clock value must be read. B’s clock value is communicated back to location A. B’s clock value is adjusted to reflect the time necessary to travel across the network. B’s clock value is compared to A’s clock value.

- 9. Centralized Physical Time Services Broadcast Based Request Driven

- 10. Broadcast Based – first approach The centralized time server’s action: The physical time service broadcasts periodically the current time to members of the distributed systems. The participants’ action: If a given participant’s clock is ahead of the time server’s clock, the participant slows down its clock so that it will continually move closer to the accurate time. If a participant’s clock is behind the time server’s clock, the participant moves its clock forward. Alternatives do include gradually speeding up the clock.

- 11. For example Current time = 720 Location A Broadcast based Time server Current time=740 Delay of 10 Current time=720 Adjusted current time=750 New current time=750 Location A

- 12. Broadcast Based – second approach (Berkeley algorithm) Current time=720 Move forward=6 Location A Current time=740 Adjusted location A =730 Adjusted location B =738 Average and the new current time=736 Time Server Current time=732 Slow clock down to accommodate 2 Location B 1 1 2 2 4 5 1. Current time = 740 2. My current time = 720 3. My current time = 732 4. Adjust forward = 6 5. Adjust slowdown to accommodate 2 Delay=10 Delay=6

- 13. Request Driven Current time=730 Adjusted time=750 New current time=750 Current time=740 Location A Timer Server Request for current time Current time=740 Delay=10

- 14. Distributed Physical Time Service Each location broadcasts its current time at predefined set intervals. Once a location has broadcast its time, it starts a timer. It then collects time messages that it receives. Each time message that arrives is stamped with the local current time. This process continues until the timer expires. Upon the expiration of the timer, each message is adjusted to reflect the network delay time estimated for the message source. At this stage, the participant calculates the average time according to one of the following approaches:

- 15. Calculate the average of all messages 720 724 726 718 722 723 Adjusted received times

- 16. Delete the times that are above the threshold and then average the rest. 760 X 724 726 718 702 X 723 Adjusted received times The numbers besides X are deleted. The rest are averaged.

- 17. Discard the highest x and the lowest x values and then average 760 X 724 726 718 702 X 723 703 X 765 X Adjusted received times

- 18. Logical Clocks Why Logical Clocks? It is difficult to utilize physical clocks to order events uniquely in distributed systems. The essence of logical clocks is based on the happened-before relationship presented by Lamport.

- 19. Happen-Before Relationship If two events, a and b, occurred at the same process, they occurred in the order of which they were observed. That is, a > b. If a sends a message to b, then a > b. That is, you cannot receive something before it is sent. This relationship holds regardless of where events a and b occur. The happen-before relationship is transitive. If a happens before b and b happens before c, then a happens before c. That is, if a > b and b > c, then a > c.

- 20. Logical Ordering If T(a) is the timestamp for event a, the following relationships must hold in a distributed system utilizing logical ordering. If two events, a and b, occurred at the same process, they occurred in the order of which they were observed. That is T(a) > T(b). If a sends a message to b, then T(a) > T(b). If a happens before b and b happens before c, T(a) > T(b), T(b) > T(c), and T(a) > T(c).

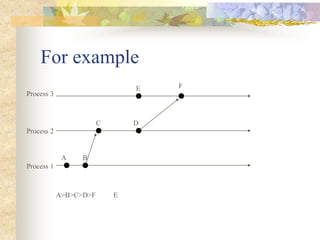

- 21. For example Process 1 Process 2 Process 3 A B C D E F A>B>C>D>F E

- 22. Lamport’s Algorithm Each process increments its clock counter between every two consecutive events. If a sends a message to b, then the message must include T(a). Upon receiving a and T(a), the receiving process must set its clock to the greater of [T(a)+d, Current Clock]. That is, if the recipient’s clock is behind, it must be advanced to preserve the happen-before relationship. Usually d=1.

- 23. For example Process 1 Process 2 Process 3 A(1) B(2) C(3) D(4) E(1) F(5)

- 24. Total Ordering with Logical Clocks Process 1 Process 2 Process 3 A(1.1) B(2.1) D(4.2) E(1.3) F(5.3) C(3.2) A>E>B>C>D>F

- 25. Mutual Exclusion In single-processor systems, critical regions are protected using semaphores, monitors, and similar constructs. In distributed systems, since there is no shared memory, these methods cannot be used.

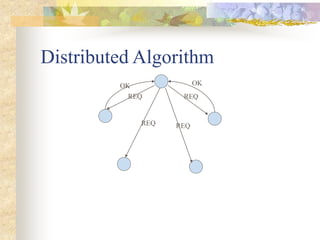

- 26. A Centralized Algorithm Advantages: It is fair, easy to implement, and requires only three messages per use of a critical region (request, grant, release). Disadvantages: single point of failure. coordinator process Request Grant Enter crical section Exit Release

- 29. A Comparison of the Three Algorithms Algorithm Messages per entry/exit Delay before entry Problems Centralized 3 2 Coordinator crash Distributed 2(n-1) 2(n-1) Crash of any process Token ring 1 to ∞ 0 to n-1 Lost token, process crash

- 30. Election Algorithm The bully algorithm When a process notices that the coordinator is no longer responding to requests, it initiates an election. A process, P, holds an election as follows: P sends an ELECTION message to all processes with higher numbers. If no one responds, P wins the election and becomes coordinator. If one of the higher-ups answers, it takes over. P’s job is done.

- 31. For example 7 1 2 4 5 3 6 Election 7 1 2 4 5 3 6 Ok 7 1 2 4 5 3 6 Election 7 1 2 4 5 3 6 Ok 7 1 2 4 5 3 6 Coordinator

- 32. A Ring Algorithm 1 2 4 5 3 7 6 2 2 3 2 3 4 2 3 4 5 2 3 4 5 6 2 3 4 5 6 1 6

- 33. Atomic Transactions All the synchronization techniques we have studied so far are essentially low level, like semaphores. What we would really like is a much higher-level abstraction such as atomic transaction.

- 34. For example Atomic bank transactions: 1. Withdraw(amount, account1) 2. Deposit(amount, account2)

- 35. Stable Storage Stable storage is designed to survive anything except major calamities such as floods and earthquakes. Stable storage can be implemented with a pair of ordinary disks. Stable storage is well suited to applications that require a high degree of fault tolerance, such as atomic transactions.

- 36. s a o h t f b w s a t f b w s a’ o h t f b w s a t f b w s a o h t f b w s a t f b w Stable storage Stable storage Stable storage Drive 1 Drive 2 (a) Stable storage (b) Crash after drive 1 is updated © Bad spot

- 37. Transaction Primitives 1 BEGIN_TRANSACTION: Mark the start of a transaction. 2 END_TRANSACTION: Terminate the transaction and try to commit. 3 ABORT_TRANSACTION: Kill the transaction; restore the old values. 4 READ: Read data from a file (or other object). 5 WRITE: Write data to a file (or other object). For example, BEGIN_TRANSACTION reserve Austin-Houston; reserve Houston-Los Angeles; reserve Los Angeles-Seatle; END_TRANSCATION

- 38. Properties of Transactions 1 Atomic: To the outside world, the transaction happens indivisibly. 2 Consistent: The transaction does not violate system invariants. 3 Isolated: Concurrent transactions do not interfere with each other. 4 Durable: Once a transaction commits, the changes are permanent.

- 39. Isolated or serializable Isolated or serializable means that if two or more transactions are running at the same time, to each of them and to other processes, the final result looks as though all transactions ran sequentially in some (system dependent) order.

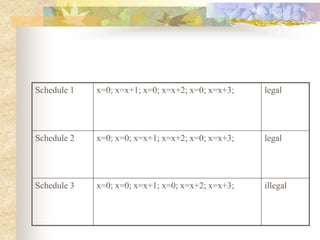

- 40. An example BEGIN_TRANACATION X = 0; X=X+1; END_TRANSACTION (a) BEGIN_TRANSACTION X=0; X= X+2; END_TRANSACTION (b) BEGIN_TRANSACTION X=0; X=X+3; END_TRANSACTION (c )

- 41. Schedule 1 x=0; x=x+1; x=0; x=x+2; x=0; x=x+3; legal Schedule 2 x=0; x=0; x=x+1; x=x+2; x=0; x=x+3; legal Schedule 3 x=0; x=0; x=x+1; x=0; x=x+2; x=x+3; illegal

- 42. Nest Transactions Transactions may contain subtransactions, often called nested transactions. If the subtransaction commits and the parent transaction aborts, the permanence applies only to top-level transactions.

- 43. Implementation Private Workspace 1 0 2 0 1 2 1 0 2 1 2 Index 0’ 3’ 0 3 Index Index 0 1 2 0’ 1 2 3’ 0 1 2 3 Private workspace

- 44. Writeahead log x=0; y=0; BEGIN_TRANSACTION x=x+1; log: x=0/; y=y+2; log: x=0/1; y=0/2; x=y * y; log: x=0/1; y=0/2; x=1/4; END_TRANSACTION

- 45. Achieving atomic commit in a distributed system Two-Phase Commit Protocol Coordinator Subordinates Write “Prepare” in the log Send “Prepare” message Write “Ready” in the log Send “Ready” message Collect all replies Phase 1 Phase 2 Write log record (if all are ready, commit; if not, abort) Send “Commit” message Write “Commit” in the log Commit Send “Finished” message

- 46. Concurrency Control When multiple transactions are executing simultaneously in different processes, some mechanism is needed to keep them out of each other’s way. That mechanism is called a concurrency control algorithm.

- 47. Concurrency control algorithms Locking In the simplest form, when a process needs to read or write a file (or other object) as part of a transaction, it first locks the file. Distinguishing read locks from write locks. The unit of locking can be an individual record or page, a file, or a larger item.

- 48. Two-phase locking The process first acquires all the locks it needs during the growing phase, then releases them during the shrinking phase. In many systems, the shrinking phase does not take place until the transaction has finished running and has either committed or aborted. This policy is called strict two-phase locking.

- 49. Two-phase locking Time Growing phase Shrinking phase Lock point Number of locks

- 50. Optimistic Concurrency Control A second approach to handling multiple transactions at the same time is optimistic concurrency control. The idea is simple: just go ahead and do whatever you want to, without paying attention to what anybody else is doing. If there is a problem, worry about it later.

- 51. Timestamps TRD TWR T () () () TWR TRD T () () () Write TRD T () () TWR T () () Do tentative write Abort Abort Do tenative write

![Lamport’s Algorithm

Each process increments its clock counter

between every two consecutive events.

If a sends a message to b, then the message must

include T(a). Upon receiving a and T(a), the

receiving process must set its clock to the greater

of [T(a)+d, Current Clock]. That is, if the

recipient’s clock is behind, it must be advanced to

preserve the happen-before relationship. Usually

d=1.](https://image.slidesharecdn.com/synchronizationindistributedcomputing-220517071919-52387f7d/85/Synchronization-in-distributed-computing-22-320.jpg)