Yuwu Chen

- 1. + Training Combined Cycle Power Plant Data Set on HPC Yuwu Chen 4/28/2015

- 2. + Data Description, Data Summary, Method Description, Method Comparison, Conclusion, Reference

- 3. + Data Description: In electric power generation, a combined cycle is an assembly of heat engines that work in series from the same source of heat. The principle is that, after completing its cycle in the first engine, the working fluid is still low enough in entropy that a second, subsequent heat engine can extract energy from its waste heat. The electrical energy output of a power plant is influenced by four main parameters. The goal is to find a model for testing this influence.

- 4. + Data Description: The initial dataset was donated by a confidential power plant. The full dataset is available on UCI’s website: http://archive.ics.uci.edu/ml/datasets/Combined+Cycle+Power+Plant. The dataset records 6 years (2006-2011) of electrical power output when the plant was set to work at full load: 9568 observations, 5 variables. Observations are independent of each other. The data was cleaned (e.g. no missing values) before being uploaded to UCI.

- 5. + Data Description
  - Independent variables:
    - Temperature (T, column AT in the code): 1.81 °C to 37.11 °C
    - Ambient Pressure (AP): 992.89 to 1033.30 millibar
    - Relative Humidity (RH): 25.56% to 100.16%
    - Exhaust Vacuum (V): 25.36 to 81.56 cm Hg
  - Dependent variable:
    - Net hourly electrical energy output (PE): 420.26 to 495.76 MW

- 7. + Data Summary: Visualize correlation matrix

- 8. + Method Description
  - Step 1: Assess the training data with several untrained models: multiple linear regression, backward selection, ridge regression, Elasticnet, Lasso, SVM with linear kernel, pruned tree, MARS, boosted tree, and bagging tree.
  - Step 2: Fit the test data set with the obtained models and evaluate each model in terms of its RMSE and MAD values.
  - Step 3: Train the models with resampling methods on the LSU HPC, and evaluate each model by RMSE and MAD.

  $$\mathrm{MAD} = \frac{1}{N}\sum_{i=1}^{N}\left|y_i - \hat{y}_i\right|, \qquad \mathrm{RMSE} = \sqrt{\frac{1}{N}\sum_{i=1}^{N}\left(y_i - \hat{y}_i\right)^2}$$
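The two evaluation metrics can be sketched in a few lines of base R. The function names `rmse` and `mad_err` and the four example values are assumptions made for illustration; they are not taken from the slides. Note that base R's own `mad()` computes the median absolute deviation, which is a different quantity, so a separate name is used here.

```r
# Root mean squared error between observed y and predicted yhat
rmse <- function(y, yhat) sqrt(mean((y - yhat)^2))

# Mean absolute deviation (named mad_err to avoid masking stats::mad,
# which is the *median* absolute deviation)
mad_err <- function(y, yhat) mean(abs(y - yhat))

# Hypothetical observed and predicted outputs in MW (illustration only)
y    <- c(450, 460, 470, 480)
yhat <- c(452, 457, 470, 484)

rmse(y, yhat)     # sqrt((4 + 9 + 0 + 16) / 4) = sqrt(7.25)
mad_err(y, yhat)  # (2 + 3 + 0 + 4) / 4 = 2.25
```

Because RMSE squares the errors, it penalizes large misses more heavily than MAD, which is why the two metrics can rank models slightly differently.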

- 10. + Backward selection: Backward selection didn’t remove any independent variables from the model, so it gives the same RMSE and MAD as MLR.

- 11. + Ridge regression and Lasso: λ = 1 is used for the RMSE and MAD calculation. ridge <- glmnet(x.train, y.train, alpha = 0); lasso <- glmnet(x.train, y.train, alpha = 1)

- 12. + Elasticnet: λ = 1 is used for the Elasticnet calculation. elastic <- glmnet(x.train, y.train, alpha = 0.5)
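The ridge, lasso, and elastic net calls differ only in `alpha`. As background that is not stated in the slides, the objective that glmnet documents for a Gaussian response is

$$\min_{\beta_0,\beta}\; \frac{1}{2N}\sum_{i=1}^{N}\left(y_i - \beta_0 - x_i^{\top}\beta\right)^2 + \lambda\left[\frac{1-\alpha}{2}\lVert\beta\rVert_2^2 + \alpha\lVert\beta\rVert_1\right]$$

so $\alpha = 0$ gives the ridge penalty, $\alpha = 1$ gives the lasso penalty, and $\alpha = 0.5$ weights the two equally. The parameter $\lambda$ scales the whole penalty, which is presumably why an arbitrary choice such as $\lambda = 1$ can perform worse than a value chosen by cross-validation.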

- 13. + SVM with linear kernel: svmfit <- ksvm(PE ~ AT + V + AP + RH, data = data.train, kernel = "vanilladot", C = 1)

- 14. + Single pruned tree: tree <- rpart(PE ~ AT + V + AP + RH, data = data.train, control = rpart.control(xval = 10, minbucket = 100, cp = 0.01))

- 15. + MARS: mars <- earth(PE ~ AT + V + AP + RH, data = data.train, degree = 1)

- 16. + Boosted tree: boost <- gbm(PE ~ AT + V + AP + RH, data = data.train, distribution = "gaussian", n.trees = 1000, interaction.depth = 4, shrinkage = 0.01)

- 17. + Bagging tree: bag <- randomForest(PE ~ AT + V + AP + RH, data = data.train, mtry = 4, importance = TRUE)

- 18. + The Predictive Results in terms of the MAD and RMSE values (untrained)

  | Model | Package | RMSE | MAD |
  |---|---|---|---|
  | MLR | | 4.583379 | 3.622121 |
  | Backward | leaps | 4.583379 | 3.622121 |
  | Ridge | glmnet | 4.92302 | 3.928416 |
  | Lasso | glmnet | 5.039077 | 4.015422 |
  | Elasticnet | glmnet | 4.848145 | 3.866809 |
  | SVM-linear kernel | kernlab | 4.588058 | 3.604371 |
  | Pruned tree | rpart | 5.422748 | 4.241274 |
  | MARS | earth | 4.282067 | 3.330725 |
  | Boost tree | gbm | 3.978378 | 3.026208 |
  | Bagging tree | randomForest | 3.604678 | 2.615768 |

  $$\mathrm{MAD} = \frac{1}{N}\sum_{i=1}^{N}\left|y_i - \hat{y}_i\right|, \qquad \mathrm{RMSE} = \sqrt{\frac{1}{N}\sum_{i=1}^{N}\left(y_i - \hat{y}_i\right)^2}$$

- 19. + Train models with resampling methods: Train method: the train function in the caret package. It can train all models used in this project with resampling methods, is easy to manipulate and well documented, and will automatically parallelize when multiple CPU cores are registered.

- 20. + Train models with resampling methods

  | Model | Resampling method | Tuning parameter |
  |---|---|---|
  | MLR | bootstrapping | N/A |
  | Backward selection | cross-validation | # randomly selected predictors |
  | Ridge | cross-validation | λ |
  | Lasso | cross-validation | λ |
  | Elasticnet | cross-validation | α and λ |
  | SVM-linear kernel | cross-validation | cost |
  | Pruned tree | bootstrapping | cp |
  | MARS | bootstrapping | # prune and degree |
  | Boost tree | repeated cross-validation | # trees, shrinkage, interaction.depth |
  | Bagging (RF) | cross-validation | # randomly selected predictors |

- 21. + Parallel computing in R: Motivation: save computation time. A for loop can be very slow when a large number of computations must be carried out. Almost all computers now have multicore processors. As long as these computations do not need to communicate (resampling methods are excellent examples), they can be spread across multiple cores and executed in parallel. Package: the parallel package.
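The idea that independent resamples can be spread across cores can be sketched with the parallel package alone. This is a minimal illustration under stated assumptions, not the code run on the cluster: the data frame `dat` is synthetic (it is not the plant data), the helper `boot_rmse` is hypothetical, and the worker count of 2 and the 200 replicates are arbitrary choices.

```r
library(parallel)

set.seed(1)
n <- 500
# Synthetic stand-in for the plant data (NOT the real CCPP values)
dat <- data.frame(AT = runif(n, 2, 37), V = runif(n, 25, 81))
dat$PE <- 500 - 1.7 * dat$AT - 0.3 * dat$V + rnorm(n, sd = 4)

# One bootstrap replicate: fit on a resample, score on the rows left out
boot_rmse <- function(i, dat) {
  idx <- sample(nrow(dat), replace = TRUE)
  fit <- lm(PE ~ AT + V, data = dat[idx, ])
  oob <- dat[-unique(idx), ]              # out-of-bag rows
  sqrt(mean((oob$PE - predict(fit, newdata = oob))^2))
}

cl <- makeCluster(2)                      # 2 worker processes (assumption)
clusterSetRNGStream(cl, 123)              # reproducible streams per worker
res <- parLapply(cl, 1:200, boot_rmse, dat = dat)
stopCluster(cl)

rmse_boot <- unlist(res)
mean(rmse_boot)                           # bootstrap estimate of test RMSE
```

Each replicate needs only the data and its own random draw, so no communication between workers is required. On a cluster node the worker count would typically be matched to the number of cores allocated to the job.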

- 22. + Running R on LONI and LSU HPC clusters: LONI QueenBee-2 ranked 46th in the world on the TOP500 list (Nov. 2014). Training model: MLR. Resampling: bootstrap (10000 reps).

- 23. + Training backward selection: Training with 10-fold CV still didn’t remove any independent variables from the model, so it gives the same RMSE and MAD as MLR.

- 24. + Training ridge, elasticnet and Lasso: The final λ for ridge is 1.417. The final λ for lasso is 0.0497. The final α is 0.5 and λ is 0.0497 for elasticnet.

- 25. + Training SVM with linear kernel: The final selected cost is 2.

- 26. + Training single tree: treetrain <- train(PE ~ ., data = data.train, method = "rpart", trControl = trainControl(method = "boot", number = 1000), tuneLength = 10)

- 27. + Training MARS: marsGrid <- expand.grid(degree = c(1, 2), nprune = (1:10) * 2); earthtrain <- train(PE ~ ., data = data.train, method = "earth", tuneGrid = marsGrid, maximize = FALSE, trControl = trainControl(method = "boot", number = 1000)). The selected nprune is 14 and the selected degree is 2.

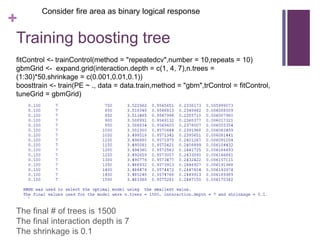

- 28. + Training boosting tree: fitControl <- trainControl(method = "repeatedcv", number = 10, repeats = 10); gbmGrid <- expand.grid(interaction.depth = c(1, 4, 7), n.trees = (1:30) * 50, shrinkage = c(0.001, 0.01, 0.1)); boosttrain <- train(PE ~ ., data = data.train, method = "gbm", trControl = fitControl, tuneGrid = gbmGrid). The final number of trees is 1500, the final interaction depth is 7, and the shrinkage is 0.1.
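To get a feel for why this tuning run benefits from an HPC cluster, the size of the search can be worked out from the grid definition on the slide. The sketch below uses only base R; the variable names `n_candidates` and `n_resamples` are introduced here for illustration.

```r
# Same grid as on the slide
gbmGrid <- expand.grid(interaction.depth = c(1, 4, 7),
                       n.trees = (1:30) * 50,
                       shrinkage = c(0.001, 0.01, 0.1))

n_candidates <- nrow(gbmGrid)   # 3 depths * 30 tree counts * 3 shrinkages
n_resamples  <- 10 * 10         # 10-fold CV repeated 10 times

n_candidates                    # 270 parameter combinations
n_candidates * n_resamples      # 27000 (combination, resample) evaluations
range(gbmGrid$n.trees)          # smallest and largest tree count tried
```

The number of models actually fitted is likely smaller than 27000, since for boosting the predictions at smaller tree counts can be obtained from the largest fit, but the resamples remain independent of one another and can run in parallel. It may also be worth noting that all three selected values (1500 trees, depth 7, shrinkage 0.1) sit on the upper edge of this grid, so a wider grid could plausibly find a better setting.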

- 29. + Training bagging trees: Convert bagging trees to RF. The optimized model retained two predictor variables.

- 30. + Training improvement: RMSE

- 31. + Training improvement: MAD

- 32. + Values

  | Model | RMSE (untrained) | RMSE (trained) | MAD (untrained) | MAD (trained) |
  |---|---|---|---|---|
  | MLR | 4.583379 | 4.583379 | 3.622121 | 3.622121 |
  | Backward | 4.583379 | 4.583379 | 3.622121 | 3.622121 |
  | Ridge | 4.92302 | 4.923921 | 3.928416 | 3.92914 |
  | Elasticnet | 4.848145 | 4.584562 | 3.866809 | 3.626797 |
  | Lasso | 5.039077 | 4.584209 | 4.015422 | 3.624946 |
  | SVM linear kernel | 4.588058 | 4.588086 | 3.604371 | 3.604358 |
  | Pruned tree | 5.422748 | 5.006409 | 4.241274 | 3.913017 |
  | MARS | 4.282067 | 4.233078 | 3.330725 | 3.279154 |
  | BoostingTree | 3.978378 | 3.468537 | 3.026208 | 2.506058 |
  | BaggingTree | 3.604678 | 3.498071 | 2.615768 | 2.536362 |

- 33. + t-test to evaluate the null hypothesis that there is no difference between models: The bagging (RF) model is significantly different from the other two models.
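A paired t-test on per-resample errors is one common way to test the "no difference between models" hypothesis; whether the slide's comparison was set up exactly this way is an assumption. The sketch below uses base R's `t.test` on synthetic per-fold RMSE values. The vectors `rf_rmse` and `mars_rmse` are simulated for illustration and are not results from this study.

```r
set.seed(42)

# Simulated RMSE on 10 shared resamples (illustration only, NOT study results)
rf_rmse   <- rnorm(10, mean = 3.5, sd = 0.05)
mars_rmse <- rf_rmse + rnorm(10, mean = 0.7, sd = 0.05)

# Paired test: both models are scored on the same resamples,
# so the per-resample differences are what gets tested
tt <- t.test(mars_rmse, rf_rmse, paired = TRUE)

tt$estimate   # mean of the per-resample RMSE differences
tt$p.value    # a small value rejects "no difference between models"
```

Pairing matters because resample-to-resample variation affects both models alike; testing the differences removes that shared variation and gives a sharper comparison than an unpaired test.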

- 34. + Summary: Ten models have been used for testing the influence of the independent variables. The training process in the caret package improves the performance of seven models as measured by MAD and six as measured by RMSE (the SVM changes only marginally, and ridge becomes slightly worse). Parallel computation on the HPC can speed up the resampling calculation significantly. The RMSE and MAD values indicate that, after training, the bagging (RF) and boosting trees tend to produce the best predictions.

- 35. + Future work: The mechanism of the training in the caret package should be explored. E.g., there is no tuning parameter available when training an MLR model, so which part has been bootstrapped?

- 36. + Reference
  - Pınar Tüfekci, Prediction of full load electrical power output of a base load operated combined cycle power plant using machine learning methods, International Journal of Electrical Power & Energy Systems, Volume 60, September 2014, Pages 126-140.
  - Heysem Kaya, Pınar Tüfekci, Sadık Fikret Gürgen, Local and Global Learning Methods for Predicting Power of a Combined Gas & Steam Turbine, Proceedings of the International Conference on Emerging Trends in Computer and Electronics Engineering ICETCEE 2012, pp. 13-18.