01 analysis-of-algorithms

- 1. Algorithms (CSC 206, Autumn ’06) • Text (Required): Introduction to Algorithms by Cormen, Leiserson, Rivest & Stein • Instructor: Dr. Ali Sabbir • Office: Rm 5009 D.

- 2. Grading • Attendance is required • At least 1 midterm. • Exactly 1 in class Final. • Several quizzes (Announced and Unannounced) • Lots of assignments.

- 3. Pre-Requisites • A solid math background. • Some exposure to coding in a high-level language, e.g., C or C++. • Preferred language for this course: C++.

- 4. Algorithms LECTURE 1 Analysis of Algorithms • Insertion sort • Asymptotic analysis • Merge sort • Recurrences

- 5. Analysis of algorithms The theoretical study of computer-program performance and resource usage. What’s more important than performance? • modularity • user-friendliness • correctness • programmer time • maintainability • simplicity • functionality • extensibility • robustness • reliability

- 6. Why study algorithms and performance? • Algorithms help us to understand scalability. • Performance often draws the line between what is feasible and what is impossible. • Algorithmic mathematics provides a language for talking about program behavior. • Performance is the currency of computing. • The lessons of program performance generalize to other computing resources. • Speed is fun!

- 7. The problem of sorting Input: sequence $\langle a_1, a_2, \ldots, a_n \rangle$ of numbers. Output: permutation $\langle a'_1, a'_2, \ldots, a'_n \rangle$ such that $a'_1 \le a'_2 \le \cdots \le a'_n$. Example: Input: 8 2 4 9 3 6 Output: 2 3 4 6 8 9

- 8. Insertion sort (pseudocode) INSERTION-SORT(A, n), which sorts A[1 . . n]: for j ← 2 to n do { key ← A[j]; i ← j – 1; while i > 0 and A[i] > key do { A[i+1] ← A[i]; i ← i – 1 }; A[i+1] ← key }

- 9. Insertion sort (figure) The array A[1 . . n] with indices i and j marked: at the start of iteration j, the prefix A[1 . . j–1] is sorted, and key = A[j] is inserted into that prefix at position i+1.
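
The pseudocode above can be sketched in C++, the course's preferred language. This is a minimal sketch, assuming a `std::vector<int>` as the container; the function name `insertion_sort` is an illustrative choice, and the indices are 0-based whereas the slide's are 1-based.

```cpp
#include <cstddef>
#include <vector>

// Sorts `a` in place in nondecreasing order.
// The slide's pseudocode is 1-indexed; this sketch is 0-indexed,
// so j starts at 1 and `i` names the slot where key will land.
void insertion_sort(std::vector<int>& a) {
    for (std::size_t j = 1; j < a.size(); ++j) {
        int key = a[j];
        std::size_t i = j;  // a[0 .. j-1] is already sorted
        // Shift every element larger than key one slot to the right.
        while (i > 0 && a[i - 1] > key) {
            a[i] = a[i - 1];
            --i;
        }
        a[i] = key;  // drop key into the gap
    }
}
```

Calling `insertion_sort` on a vector holding 8 2 4 9 3 6 leaves it holding 2 3 4 6 8 9, matching the slide's example.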

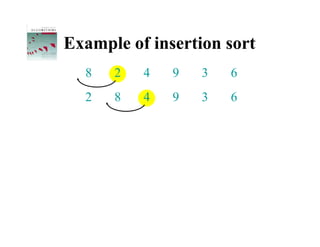

- 10-20. Example of insertion sort (the array after each pass of the outer loop): 8 2 4 9 3 6 → 2 8 4 9 3 6 → 2 4 8 9 3 6 → 2 4 8 9 3 6 → 2 3 4 8 9 6 → 2 3 4 6 8 9 (done)

- 21. Running time • The running time depends on the input: an already sorted sequence is easier to sort. • Parameterize the running time by the size of the input, since short sequences are easier to sort than long ones. • Generally, we seek upper bounds on the running time, because everybody likes a guarantee.

- 22. Kinds of analyses Worst-case: (usually) • T(n) = maximum time of algorithm on any input of size n. Average-case: (sometimes) • T(n) = expected time of algorithm over all inputs of size n. • Need assumption of statistical distribution of inputs. Best-case: (bogus) • Cheat with a slow algorithm that works fast on some input.

- 23. Machine-independent time What is insertion sort’s worst-case time? • It depends on the speed of our computer: • relative speed (on the same machine), • absolute speed (on different machines). BIG IDEA: • Ignore machine-dependent constants. • Look at growth of T(n) as n → ∞ . “Asymptotic Analysis”

- 24. Θ-notation Math: $\Theta(g(n)) = \{\, f(n) : \text{there exist positive constants } c_1, c_2, \text{ and } n_0 \text{ such that } 0 \le c_1 g(n) \le f(n) \le c_2 g(n) \text{ for all } n \ge n_0 \,\}$ Engineering: • Drop low-order terms; ignore leading constants. • Example: $3n^3 + 90n^2 - 5n + 6046 = \Theta(n^3)$
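
As a worked check of the example, one possible choice of constants (not the only one) is $c_1 = 3$, $c_2 = 6139$, $n_0 = 1$. For all $n \ge 1$ we have $90n^2 \ge 5n$, which gives the lower bound, and each low-order term is at most its coefficient times $n^3$, which gives the upper bound:

$$
3n^3 \;\le\; 3n^3 + 90n^2 - 5n + 6046 \;\le\; 3n^3 + 90n^3 + 6046n^3 \;=\; 6139\,n^3 \qquad \text{for all } n \ge 1 .
$$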

- 25. Asymptotic performance When n gets large enough, a $\Theta(n^2)$ algorithm always beats a $\Theta(n^3)$ algorithm. • We shouldn’t ignore asymptotically slower algorithms, however. • Real-world design situations often call for a careful balancing of engineering objectives. • Asymptotic analysis is a useful tool to help to structure our thinking. (Figure: plot of T(n) against n, with $n_0$ marked on the n axis.)

- 26. Insertion sort analysis Worst case: Input reverse sorted. $T(n) = \sum_{j=2}^{n} \Theta(j) = \Theta(n^2)$ [arithmetic series] Average case: All permutations equally likely. $T(n) = \sum_{j=2}^{n} \Theta(j/2) = \Theta(n^2)$ Is insertion sort a fast sorting algorithm? • Moderately so, for small n. • Not at all, for large n.
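
The worst-case sum can be checked empirically by counting how many times the inner loop shifts an element. The sketch below is an illustration under assumptions, not part of the lecture: `count_shifts` and `reverse_sorted` are hypothetical helper names. On reverse-sorted input of n distinct values, iteration j shifts all j–1 earlier elements, so the total is n(n–1)/2.

```cpp
#include <cstddef>
#include <vector>

// Runs insertion sort on a copy of `a` and returns how many
// element shifts the inner while-loop performed.
std::size_t count_shifts(std::vector<int> a) {
    std::size_t shifts = 0;
    for (std::size_t j = 1; j < a.size(); ++j) {
        int key = a[j];
        std::size_t i = j;
        while (i > 0 && a[i - 1] > key) {
            a[i] = a[i - 1];
            --i;
            ++shifts;
        }
        a[i] = key;
    }
    return shifts;
}

// Builds the worst-case input n, n-1, ..., 1.
std::vector<int> reverse_sorted(std::size_t n) {
    std::vector<int> v(n);
    for (std::size_t k = 0; k < n; ++k) {
        v[k] = static_cast<int>(n - k);
    }
    return v;
}
```

For n = 100 the reverse-sorted input costs 100 · 99 / 2 = 4950 shifts, while an already sorted input costs none, which is why the running time depends on the input and not only on its size.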

- 27. Merge sort MERGE-SORT A[1 . . n] 1. If n = 1, done. 2. Recursively sort A[1 . . ⌈n/2⌉] and A[⌈n/2⌉+1 . . n]. 3. “Merge” the 2 sorted lists. Key subroutine: MERGE
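
The three steps can be sketched in C++ as follows. This is a minimal sketch under assumptions: the name `merge_sort` and the iterator-based interface are illustrative, and the standard library's `std::inplace_merge` stands in for the slide's MERGE subroutine (it merges in linear time when it can allocate a temporary buffer). The split point here is ⌊n/2⌋ rather than the slide's ⌈n/2⌉, which does not affect correctness.

```cpp
#include <algorithm>
#include <vector>

using Iter = std::vector<int>::iterator;

// Sorts the half-open range [first, last) in nondecreasing order.
void merge_sort(Iter first, Iter last) {
    auto n = last - first;
    if (n <= 1) {
        return;  // step 1: zero or one element is already sorted
    }
    Iter mid = first + n / 2;
    merge_sort(first, mid);  // step 2: sort each half recursively
    merge_sort(mid, last);
    std::inplace_merge(first, mid, last);  // step 3: merge the sorted halves
}
```

For a vector `v`, the call is `merge_sort(v.begin(), v.end())`.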

- 28-40. Merging two sorted arrays Example: merge the sorted arrays 2 7 13 20 and 1 9 11 12. At each step, compare the smallest remaining element of each array and output the smaller one: 1, 2, 7, 9, 11, 12, after which the remaining 13 and 20 follow. Time = $\Theta(n)$ to merge a total of n elements (linear time).
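
The merge step illustrated above can be sketched as a two-pointer loop. This is a sketch under assumptions, not the lecture's own code: `merge_sorted` is a hypothetical name, and it returns a new vector rather than merging in place. Each element is copied exactly once, which is where the linear time comes from.

```cpp
#include <cstddef>
#include <vector>

// Merges two vectors that are each sorted in nondecreasing order
// into one sorted vector.
std::vector<int> merge_sorted(const std::vector<int>& left,
                              const std::vector<int>& right) {
    std::vector<int> out;
    out.reserve(left.size() + right.size());
    std::size_t i = 0;
    std::size_t j = 0;
    // Repeatedly take the smaller of the two front elements.
    while (i < left.size() && j < right.size()) {
        if (left[i] <= right[j]) {
            out.push_back(left[i++]);
        } else {
            out.push_back(right[j++]);
        }
    }
    // One input is exhausted; copy whatever remains of the other.
    while (i < left.size()) {
        out.push_back(left[i++]);
    }
    while (j < right.size()) {
        out.push_back(right[j++]);
    }
    return out;
}
```

Taking from `left` on ties (`<=`) keeps equal elements in their original relative order, so a merge sort built on this merge is stable.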

- 41. Analyzing merge sort MERGE-SORT A[1 . . n], with total cost $T(n)$: 1. If n = 1, done: $\Theta(1)$. 2. Recursively sort A[1 . . ⌈n/2⌉] and A[⌈n/2⌉+1 . . n]: $2T(n/2)$ (an abuse of notation). 3. “Merge” the 2 sorted lists: $\Theta(n)$. Sloppiness: Should be $T(\lceil n/2 \rceil) + T(\lfloor n/2 \rfloor)$, but it turns out not to matter asymptotically.

- 42. Recurrence for merge sort $T(n) = \Theta(1)$ if $n = 1$; $T(n) = 2T(n/2) + \Theta(n)$ if $n > 1$. • We shall usually omit stating the base case when $T(n) = \Theta(1)$ for sufficiently small n, but only when it has no effect on the asymptotic solution to the recurrence. • CLRS and Lecture 2 provide several ways to find a good upper bound on T(n).
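
To get a feel for the recurrence, it can be evaluated directly. The sketch below is an illustration under assumptions: the hidden constants are all set to 1 (so T(1) = 1 and the merge costs exactly n), the split uses the exact ceiling and floor halves, and `merge_sort_cost` is a hypothetical name. With these choices, T(n) = n lg n + n whenever n is a power of 2.

```cpp
#include <cstdint>

// Evaluates T(n) = T(ceil(n/2)) + T(floor(n/2)) + n with T(1) = 1.
// The unit constants are an assumption made for illustration only.
std::uint64_t merge_sort_cost(std::uint64_t n) {
    if (n <= 1) {
        return 1;  // base case: constant work
    }
    std::uint64_t half = n / 2;  // floor(n/2); n - half is ceil(n/2)
    return merge_sort_cost(n - half) + merge_sort_cost(half) + n;
}
```

For example, `merge_sort_cost(8)` is 8 · 3 + 8 = 32 and `merge_sort_cost(1024)` is 1024 · 10 + 1024 = 11264.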

- 43-53. Recursion tree Solve T(n) = 2T(n/2) + cn, where c > 0 is constant. Expand T(n) into a root of cost cn with two subtrees T(n/2); expand each T(n/2) into a node of cost cn/2 with two subtrees T(n/4); continue down to leaves of cost $\Theta(1)$. The tree has height h = lg n, the internal nodes on each level sum to cn, and the #leaves = n contribute $\Theta(n)$. Total = $\Theta(n \lg n)$
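
Written out as a sum, assuming for simplicity that n is a power of 2: level k of the tree (for k = 0, 1, …, lg n – 1) holds $2^k$ nodes of cost $cn/2^k$ each, and the n leaves sit at depth lg n.

$$
T(n) \;=\; \sum_{k=0}^{\lg n - 1} 2^k \cdot \frac{cn}{2^k} \;+\; n \cdot \Theta(1) \;=\; cn \lg n + \Theta(n) \;=\; \Theta(n \lg n).
$$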

- 54. Conclusions • $\Theta(n \lg n)$ grows more slowly than $\Theta(n^2)$. • Therefore, merge sort asymptotically beats insertion sort in the worst case. • In practice, merge sort beats insertion sort for n > 30 or so. • Go test it out for yourself!
