CVPR 2007 tutorial: bag of words

- 1. Part 1: Bag-of-words models by Li Fei-Fei (Princeton)

- 2. Related works • Early “bag of words” models: mostly texture recognition – Cula & Dana, 2001; Leung & Malik 2001; Mori, Belongie & Malik, 2001; Schmid 2001; Varma & Zisserman, 2002, 2003; Lazebnik, Schmid & Ponce, 2003 • Hierarchical Bayesian models for documents (pLSA, LDA, etc.) – Hofmann 1999; Blei, Ng & Jordan, 2004; Teh, Jordan, Beal & Blei, 2004 • Object categorization – Csurka, Bray, Dance & Fan, 2004; Sivic, Russell, Efros, Freeman & Zisserman, 2005; Sudderth, Torralba, Freeman & Willsky, 2005 • Natural scene categorization – Vogel & Schiele, 2004; Fei-Fei & Perona, 2005; Bosch, Zisserman & Munoz, 2006

- 3. Object Bag of ‘words’

- 4. Analogy to documents

  Document 1: “Of all the sensory impressions proceeding to the brain, the visual experiences are the dominant ones. Our perception of the world around us is based essentially on the messages that reach the brain from our eyes. For a long time it was thought that the retinal image was transmitted point by point to visual centers in the brain; the cerebral cortex was a movie screen, so to speak, upon which the image in the eye was projected. Through the discoveries of Hubel and Wiesel we now know that behind the origin of the visual perception in the brain there is a considerably more complicated course of events. By following the visual impulses along their path to the various cell layers of the optical cortex, Hubel and Wiesel have been able to demonstrate that the message about the image falling on the retina undergoes a step-wise analysis in a system of nerve cells stored in columns. In this system each cell has its specific function and is responsible for a specific detail in the pattern of the retinal image.”

  Bag of words for document 1: sensory, brain, visual, perception, retinal, cerebral cortex, eye, cell, optical nerve, image, Hubel, Wiesel

  Document 2: “China is forecasting a trade surplus of $90bn (£51bn) to $100bn this year, a threefold increase on 2004's $32bn. The Commerce Ministry said the surplus would be created by a predicted 30% jump in exports to $750bn, compared with a 18% rise in imports to $660bn. The figures are likely to further annoy the US, which has long argued that China's exports are unfairly helped by a deliberately undervalued yuan. Beijing agrees the surplus is too high, but says the yuan is only one factor. Bank of China governor Zhou Xiaochuan said the country also needed to do more to boost domestic demand so more goods stayed within the country. China increased the value of the yuan against the dollar by 2.1% in July and permitted it to trade within a narrow band, but the US wants the yuan to be allowed to trade freely. However, Beijing has made it clear that it will take its time and tread carefully before allowing the yuan to rise further in value.”

  Bag of words for document 2: China, trade, surplus, commerce, exports, imports, US, yuan, bank, domestic, foreign, increase, trade, value

- 5. A clarification: definition of “BoW” • Looser definition – Independent features

- 6. A clarification: definition of “BoW” • Looser definition – Independent features • Stricter definition – Independent features – histogram representation

- 7. Learning: feature detection & representation → codewords dictionary → image representation → category models (and/or) classifiers • Recognition: feature detection & representation → image representation → category decision

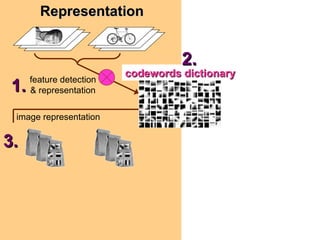

- 8. Representation: 1. feature detection & representation 2. codewords dictionary 3. image representation

- 9. 1. Feature detection and representation

- 10. 1. Feature detection and representation • Regular grid – Vogel & Schiele, 2003 – Fei-Fei & Perona, 2005

- 11. 1. Feature detection and representation • Regular grid – Vogel & Schiele, 2003 – Fei-Fei & Perona, 2005 • Interest point detector – Csurka, et al. 2004 – Fei-Fei & Perona, 2005 – Sivic, et al. 2005

- 12. 1. Feature detection and representation • Regular grid – Vogel & Schiele, 2003 – Fei-Fei & Perona, 2005 • Interest point detector – Csurka, Bray, Dance & Fan, 2004 – Fei-Fei & Perona, 2005 – Sivic, Russell, Efros, Freeman & Zisserman, 2005 • Other methods – Random sampling (Vidal-Naquet & Ullman, 2002) – Segmentation based patches (Barnard, Duygulu, Forsyth, de Freitas, Blei, Jordan, 2003)

- 13. 1. Feature detection and representation • Detect patches [Mikolajczyk and Schmid ’02] [Matas, Chum, Urban & Pajdla, ’02] [Sivic & Zisserman, ’03] • Normalize patch • Compute SIFT descriptor [Lowe ’99] Slide credit: Josef Sivic

- 14. 1. Feature detection and representation …

- 15. 2. Codewords dictionary formation …

- 16. 2. Codewords dictionary formation … Vector quantization Slide credit: Josef Sivic
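
The vector quantization step on this slide can be sketched in a few lines. This is a minimal illustration, not the tutorial's own code: it assumes plain Lloyd's k-means as the quantizer, and the function name `build_codebook`, its parameters, and the 2-D toy descriptors (standing in for real SIFT vectors) are all hypothetical.

```python
import numpy as np

def build_codebook(descriptors, num_codewords, num_iters=20, seed=0):
    """Cluster an (n, d) array of descriptors into codewords with Lloyd's k-means."""
    rng = np.random.default_rng(seed)
    # Initialise the centers with distinct, randomly chosen descriptors.
    idx = rng.choice(len(descriptors), size=num_codewords, replace=False)
    centers = descriptors[idx].astype(float)
    for _ in range(num_iters):
        # Assignment step: index of the nearest center for every descriptor.
        dists = np.linalg.norm(descriptors[:, None, :] - centers[None, :, :], axis=2)
        labels = dists.argmin(axis=1)
        # Update step: move each center to the mean of its assigned descriptors.
        for k in range(num_codewords):
            members = descriptors[labels == k]
            if len(members) > 0:
                centers[k] = members.mean(axis=0)
    return centers

rng = np.random.default_rng(1)
# Toy data (an assumption): two well-separated 2-D blobs instead of SIFT descriptors.
descriptors = np.vstack([rng.normal(0.0, 0.1, (50, 2)),
                         rng.normal(5.0, 0.1, (50, 2))])
codebook = build_codebook(descriptors, num_codewords=2)
```

Each row of `codebook` plays the role of one codeword; on real data the number of codewords would typically be in the hundreds or thousands.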

- 17. 2. Codewords dictionary formation Fei-Fei et al. 2005

- 18. Image patch examples of codewords Sivic et al. 2005

- 19. 3. Image representation [Figure: histogram of codeword frequency; x-axis: codewords, y-axis: frequency]
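
The image representation on this slide is a histogram of codeword frequencies. A minimal sketch, assuming nearest-neighbour assignment of each descriptor to a codeword and a histogram normalized to sum to one; the name `bow_histogram` and the toy codebook are hypothetical.

```python
import numpy as np

def bow_histogram(descriptors, codebook):
    """Map each descriptor to its nearest codeword and return normalized counts."""
    dists = np.linalg.norm(descriptors[:, None, :] - codebook[None, :, :], axis=2)
    words = dists.argmin(axis=1)                      # codeword index per patch
    counts = np.bincount(words, minlength=len(codebook))
    return counts / counts.sum()                      # frequency of each codeword

# Toy codebook with three codewords and four patch descriptors (illustrative only).
codebook = np.array([[0.0, 0.0], [1.0, 0.0], [0.0, 1.0]])
descriptors = np.array([[0.1, 0.0], [0.9, 0.1], [1.1, -0.1], [0.0, 0.8]])
hist = bow_histogram(descriptors, codebook)
```

Here one patch falls on codeword 0, two on codeword 1, and one on codeword 2, so the histogram discards where the patches were and keeps only how often each codeword occurred.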

- 20. Representation: 1. feature detection & representation 2. codewords dictionary 3. image representation

- 21. Learning and Recognition: codewords dictionary → category models (and/or) classifiers → category decision

- 22. Learning and Recognition 1. Generative method: - graphical models 2. Discriminative method: - SVM category models (and/or) classifiers

- 23. 2 generative models 1. Naïve Bayes classifier – Csurka, Bray, Dance & Fan, 2004 2. Hierarchical Bayesian text models (pLSA and LDA) – Background: Hofmann 2001, Blei, Ng & Jordan, 2004 – Object categorization: Sivic et al. 2005, Sudderth et al. 2005 – Natural scene categorization: Fei-Fei et al. 2005

- 24. First, some notations • $w_n$: each patch in an image – $w_n = [0, 0, \ldots, 1, \ldots, 0, 0]^T$ • $\mathbf{w}$: a collection of all $N$ patches in an image – $\mathbf{w} = [w_1, w_2, \ldots, w_N]$ • $d_j$: the $j$th image in an image collection • $c$: category of the image • $z$: theme or topic of the patch

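- 25. Case #1: the Naïve Bayes model (graphical model: c → w, with a plate over the N patches) $$c^{*} = \arg\max_{c}\, p(c \mid \mathbf{w}) \propto p(c)\, p(\mathbf{w} \mid c) = p(c) \prod_{n=1}^{N} p(w_n \mid c)$$ where $c^{*}$ is the object class decision, $p(c)$ is the prior probability of the object classes, and $p(\mathbf{w} \mid c)$ is the image likelihood given the class. Csurka et al. 2004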
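
The decision rule on this slide can be sketched as follows. This is a hedged illustration, not the method of Csurka et al. as implemented: images are given as lists of codeword indices, the computation is done in log space, and the Laplace smoothing constant `alpha` is an added assumption to avoid zero probabilities. The function names and the toy "face"/"car" data are hypothetical.

```python
import math

def train_naive_bayes(images, labels, vocab_size, alpha=1.0):
    """Estimate log p(c) and log p(w | c) from images given as codeword-index lists."""
    log_prior, log_like = {}, {}
    for c in sorted(set(labels)):
        docs = [img for img, y in zip(images, labels) if y == c]
        log_prior[c] = math.log(len(docs) / len(images))
        counts = [alpha] * vocab_size          # Laplace smoothing (assumption)
        for img in docs:
            for w in img:
                counts[w] += 1
        total = sum(counts)
        log_like[c] = [math.log(n / total) for n in counts]
    return log_prior, log_like

def classify(image, log_prior, log_like):
    # c* = argmax_c [ log p(c) + sum_n log p(w_n | c) ]
    scores = {c: log_prior[c] + sum(log_like[c][w] for w in image)
              for c in log_prior}
    return max(scores, key=scores.get)

# Toy training set: codewords 0-1 dominate "face", codewords 2-3 dominate "car".
train_images = [[0, 0, 1], [0, 1, 0], [2, 3, 3], [3, 2, 2]]
train_labels = ["face", "face", "car", "car"]
log_prior, log_like = train_naive_bayes(train_images, train_labels, vocab_size=4)
prediction = classify([0, 1, 0, 0], log_prior, log_like)
```

Summing log-probabilities instead of multiplying probabilities gives the same argmax and avoids numerical underflow when N is large.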

- 26. Csurka et al. 2004

- 27. Csurka et al. 2004

- 28. Case #2: Hierarchical Bayesian text models • Probabilistic Latent Semantic Analysis (pLSA): graphical model d → z → w, with plates N and D (Hofmann, 2001) • Latent Dirichlet Allocation (LDA): graphical model c → π → z → w, with plates N and D (Blei et al., 2001)

- 29. Case #2: Hierarchical Bayesian text models • Probabilistic Latent Semantic Analysis (pLSA): graphical model d → z → w, with plates N and D • Example theme: “face” Sivic et al. ICCV 2005

- 30. Case #2: Hierarchical Bayesian text models • Latent Dirichlet Allocation (LDA): graphical model c → π → z → w, with plates N and D • Example theme: “beach” Fei-Fei et al. ICCV 2005

- 31. Case #2: the pLSA model (graphical model: d → z → w, with plates N and D)

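- 32. Case #2: the pLSA model (graphical model: d → z → w, with plates N and D) $$p(w_i \mid d_j) = \sum_{k=1}^{K} p(w_i \mid z_k)\, p(z_k \mid d_j)$$ where $p(w_i \mid d_j)$ are the observed codeword distributions, $p(w_i \mid z_k)$ are the codeword distributions per theme (topic), and $p(z_k \mid d_j)$ are the theme distributions per image. Slide credit: Josef Sivic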

- 33. Case #2: Recognition using pLSA $$z^{*} = \arg\max_{z}\, p(z \mid d)$$ Slide credit: Josef Sivic

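- 34. Case #2: Learning the pLSA parameters • Maximize likelihood of data using EM: $$L = \prod_{i=1}^{M} \prod_{j=1}^{N} p(w_i \mid d_j)^{n(w_i, d_j)}$$ • $n(w_i, d_j)$: observed counts of word $i$ in document $j$ • $M$: number of codewords • $N$: number of images Slide credit: Josef Sivic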
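
The EM fit described on this slide can be sketched as below. This is a minimal illustration under stated assumptions, not the demo's `do_plsa` code: it uses dense NumPy arrays, random initialization, a fixed iteration count, and a small constant added to denominators for numerical safety. The function name `plsa` and the toy count matrix are hypothetical.

```python
import numpy as np

def plsa(counts, num_topics, num_iters=200, seed=0):
    """Fit pLSA by EM. counts[i, j] = n(w_i, d_j), shape (M, N).
    Returns p(w|z) with shape (M, K) and p(z|d) with shape (K, N)."""
    rng = np.random.default_rng(seed)
    M, N = counts.shape
    p_w_z = rng.random((M, num_topics))
    p_w_z /= p_w_z.sum(axis=0, keepdims=True)
    p_z_d = rng.random((num_topics, N))
    p_z_d /= p_z_d.sum(axis=0, keepdims=True)
    for _ in range(num_iters):
        # E-step: posterior p(z_k | w_i, d_j), stored with shape (M, K, N).
        joint = p_w_z[:, :, None] * p_z_d[None, :, :]
        post = joint / (joint.sum(axis=1, keepdims=True) + 1e-12)
        # M-step: re-estimate both distributions from the expected counts.
        expected = counts[:, None, :] * post
        p_w_z = expected.sum(axis=2)
        p_w_z /= p_w_z.sum(axis=0, keepdims=True) + 1e-12
        p_z_d = expected.sum(axis=0)
        p_z_d /= p_z_d.sum(axis=0, keepdims=True) + 1e-12
    return p_w_z, p_z_d

# Toy term-document matrix (rows: codewords, columns: images). Images 0 and 1
# use only codewords 0-1; images 2 and 3 use only codewords 2-3.
counts = np.array([[6, 3, 0, 0],
                   [2, 1, 0, 0],
                   [0, 0, 1, 4],
                   [0, 0, 2, 8]], dtype=float)
p_w_z, p_z_d = plsa(counts, num_topics=2)
topics = p_z_d.argmax(axis=0)   # recognition: z* = argmax_z p(z | d) per image
```

The final line applies the recognition rule from the previous slide: each image is assigned to its most probable theme.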

- 36. task: face detection – no labeling

- 37. Demo: feature detection • Output of crude feature detector – Find edges – Draw points randomly from edge set – Draw from uniform distribution to get scale
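
The three steps of the crude detector can be sketched as follows. The demo's actual edge detector is not specified in these slides, so this sketch assumes a simple gradient-magnitude threshold; the function name `crude_features`, the `edge_fraction` parameter, and the scale range are hypothetical.

```python
import numpy as np

def crude_features(image, num_points, scale_range=(10.0, 30.0),
                   edge_fraction=0.5, seed=0):
    """Return (x, y, scale) rows sampled at random from a crude edge set."""
    rng = np.random.default_rng(seed)
    # 1. Find edges: threshold the gradient magnitude (assumed edge detector).
    gy, gx = np.gradient(image.astype(float))
    magnitude = np.hypot(gx, gy)
    ys, xs = np.nonzero(magnitude > edge_fraction * magnitude.max())
    # 2. Draw points randomly from the edge set.
    picks = rng.choice(len(ys), size=num_points, replace=True)
    # 3. Draw each scale from a uniform distribution.
    scales = rng.uniform(scale_range[0], scale_range[1], size=num_points)
    return np.column_stack([xs[picks], ys[picks], scales])

# Toy image: a bright square on a dark background, so edges lie on its border.
image = np.zeros((32, 32))
image[8:24, 8:24] = 1.0
feats = crude_features(image, num_points=20)
```

Each row of `feats` gives a patch center and a scale; a descriptor would then be computed on the patch around that point.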

- 38. Demo: learnt parameters • Learning the model: do_plsa('config_file_1') • Evaluate and visualize the model: do_plsa_evaluation('config_file_1') • Codeword distributions per theme (topic): p(w | z) • Theme distributions per image: p(z | d)

- 40. Demo: categorization results • Performance of each theme

- 41. Learning and Recognition 1. Generative method: - graphical models 2. Discriminative method: - SVM category models (and/or) classifiers

- 42. Discriminative methods based on ‘bag of words’ representation [Figure: decision boundary separating zebra from non-zebra]

- 43. Discriminative methods based on ‘bag of words’ representation • Grauman & Darrell, 2005, 2006: – SVM w/ Pyramid Match kernels • Others – Csurka, Bray, Dance & Fan, 2004 – Serre & Poggio, 2005

- 44. Summary: Pyramid match kernel optimal partial matching between sets of features Grauman & Darrell, 2005, Slide credit: Kristen Grauman

- 45. Pyramid Match (Grauman & Darrell 2005) Histogram intersection Slide credit: Kristen Grauman

- 46. Pyramid Match (Grauman & Darrell 2005) • Histogram intersection: $I\big(H(X), H(Y)\big) = \sum_{j} \min\big(H(X)_j, H(Y)_j\big)$ • $N_i = I_i - I_{i-1}$: matches at this level minus matches at previous level • Difference in histogram intersections across levels counts number of new pairs matched Slide credit: Kristen Grauman

- 47. Pyramid match kernel over histogram pyramids: $K(X, Y) = \sum_{i} w_i N_i$ • $N_i$: number of newly matched pairs at level $i$ • $w_i$: measure of difficulty of a match at level $i$ • Weights inversely proportional to bin size • Normalize kernel values to avoid favoring large sets Slide credit: Kristen Grauman
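
The pyramid match computation can be sketched for the simplest case. This is an illustrative reduction, not Grauman & Darrell's implementation: features are assumed to be non-negative integers on a line (real features are multi-dimensional), bin sizes double at each level, the weight is 1 / bin size, and normalization divides by the geometric mean of the self-similarities. All function names are hypothetical.

```python
import math
from collections import Counter

def histogram(points, bin_size):
    """Count how many 1-D integer features fall into each bin of the given size."""
    return Counter(p // bin_size for p in points)

def intersection(h1, h2):
    """Histogram intersection: sum over bins of the smaller count."""
    return sum(min(h1[b], h2[b]) for b in h1)

def pyramid_match(x, y, num_levels):
    score, previous = 0.0, 0
    for level in range(num_levels):
        bin_size = 2 ** level                     # bins double in size per level
        matches = intersection(histogram(x, bin_size), histogram(y, bin_size))
        new_pairs = matches - previous            # pairs first matched at this level
        score += new_pairs / bin_size             # weight inversely prop. to bin size
        previous = matches
    return score

def normalized_pyramid_match(x, y, num_levels):
    # Dividing by the self-similarities avoids favoring large sets.
    return pyramid_match(x, y, num_levels) / math.sqrt(
        pyramid_match(x, x, num_levels) * pyramid_match(y, y, num_levels))

x = [1, 4, 9]
y = [1, 5, 12]
k_xy = pyramid_match(x, y, num_levels=4)
k_norm = normalized_pyramid_match(x, y, num_levels=4)
```

In this toy case one pair matches at bin size 1, one more at bin size 2, none at bin size 4, and the last at bin size 8, so closer pairs contribute more to the kernel value.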

- 48. Example pyramid match Level 0 Slide credit: Kristen Grauman

- 49. Example pyramid match Level 1 Slide credit: Kristen Grauman

- 50. Example pyramid match Level 2 Slide credit: Kristen Grauman

- 51. Example pyramid match pyramid match optimal match Slide credit: Kristen Grauman

- 52. Summary: Pyramid match kernel • optimal partial matching between sets of features • kernel value: sum over levels $i$ of (difficulty of a match at level $i$) × (number of new matches at level $i$) Slide credit: Kristen Grauman

- 53. Object recognition results • ETH-80 database, 8 object classes (Eichhorn and Chapelle 2004) • Features: – Harris detector – PCA-SIFT descriptor, d=10

  | Kernel | Complexity | Recognition rate |
  | --- | --- | --- |
  | Match [Wallraven et al.] | | 84% |
  | Bhattacharyya affinity [Kondor & Jebara] | | 85% |
  | Pyramid match | | 84% |

  Slide credit: Kristen Grauman

- 54. Object recognition results • Caltech objects database 101 object classes • Features: – SIFT detector – PCA-SIFT descriptor, d=10 • 30 training images / class • 43% recognition rate (1% chance performance) • 0.002 seconds per match Slide credit: Kristen Grauman

- 55. Learning: feature detection & representation → codewords dictionary → image representation → category models (and/or) classifiers • Recognition: feature detection & representation → image representation → category decision

- 56. What about spatial info? ?

- 57. What about spatial info? • Feature level – Spatial influence through correlogram features: Savarese, Winn and Criminisi, CVPR 2006

- 58. What about spatial info? • Feature level • Generative models – Sudderth, Torralba, Freeman & Willsky, 2005, 2006 – Niebles & Fei-Fei, CVPR 2007

- 59. What about spatial info? • Feature level • Generative models – Sudderth, Torralba, Freeman & Willsky, 2005, 2006 – Niebles & Fei-Fei, CVPR 2007 [Figure labels: parts P1, P2, P3, P4; w; Image; Bg]

- 60. What about spatial info? • Feature level • Generative models • Discriminative methods – Lazebnik, Schmid & Ponce, 2006

- 61. Invariance issues • Scale and rotation – Implicit – Detectors and descriptors Kadir and Brady. 2003

- 62. Invariance issues • Scale and rotation • Occlusion – Implicit in the models – Codeword distribution: small variations – (In theory) Theme (z) distribution: different occlusion patterns

- 63. Invariance issues • Scale and rotation • Occlusion • Translation – Encode (relative) location information • Sudderth, Torralba, Freeman & Willsky, 2005, 2006 • Niebles & Fei-Fei, 2007

- 64. Invariance issues • Scale and rotation • Occlusion • Translation • View point (in theory) – Codewords: detector and descriptor – Theme distributions: different view points Fergus, Fei-Fei, Perona & Zisserman, 2005

- 65. Model properties • Intuitive – Analogy to documents

  “Of all the sensory impressions proceeding to the brain, the visual experiences are the dominant ones. Our perception of the world around us is based essentially on the messages that reach the brain from our eyes. For a long time it was thought that the retinal image was transmitted point by point to visual centers in the brain; the cerebral cortex was a movie screen, so to speak, upon which the image in the eye was projected. Through the discoveries of Hubel and Wiesel we now know that behind the origin of the visual perception in the brain there is a considerably more complicated course of events. By following the visual impulses along their path to the various cell layers of the optical cortex, Hubel and Wiesel have been able to demonstrate that the message about the image falling on the retina undergoes a step-wise analysis in a system of nerve cells stored in columns. In this system each cell has its specific function and is responsible for a specific detail in the pattern of the retinal image.”

  Bag of words: sensory, brain, visual, perception, retinal, cerebral cortex, eye, cell, optical nerve, image, Hubel, Wiesel

- 66. Model properties • Intuitive • Generative models – Convenient for weakly- or un-supervised, incremental training – Prior information – Flexibility (e.g. HDP) [Figure labels: Dataset; Incremental learning; model; Classification] Sivic, Russell, Efros, Freeman, Zisserman, 2005; Li, Wang & Fei-Fei, CVPR 2007

- 67. Model properties • Intuitive • Generative models • Discriminative method – Computationally efficient Grauman et al. CVPR 2005

- 68. Model properties • Intuitive • Generative models • Discriminative method • Learning and recognition relatively fast – Compared to other methods

- 69. Weakness of the model • No rigorous geometric information of the object components • It’s intuitive to most of us that objects are made of parts – no such information • Not extensively tested yet for – View point invariance – Scale invariance • Segmentation and localization unclear

Editor's Notes

- #33: For that we will use a method called probabilistic latent semantic analysis. pLSA can be thought of as a matrix decomposition. Here is our term-document matrix (documents, words), and we want to find topic vectors common to all documents and mixture coefficients P(z|d) specific to each document. Note that these are all probabilities which sum to one, so a column here is expressed as a convex combination of the topic vectors. What we would like to see is that the topics correspond to objects, so that an image is expressed as a mixture of different objects and backgrounds.

- #34: An image is a mixture of learned topic vectors and mixing weights. Fit the model and use the learned mixing coefficients to classify an image. In particular, assign the image to the topic with the highest P(z|d).

- #35: We use a maximum likelihood approach to fit the parameters of the model. We write down the likelihood of the whole collection and maximize it using EM. M and N range over all visual words and all documents. P(w|d) factorizes as on the previous slide; the entries in those matrices are our parameters. n(w,d) are the observed counts in our data.
