deep-learning-ppt-full-notes.pdf notesss

- 1. Deep Learning PPT - full notes b.tech (GL Bajaj Institute of Technology and Management). Studocu is not sponsored or endorsed by any college or university. Downloaded by Ramakanth Chhaparwal (ca.ramakanth@gmail.com)

- 2. Topics to be Covered
  - Introduction: Deep Learning
  - Deep and Shallow Neural Network
  - Machine Learning vs Deep Learning
  - Deep Learning Models
  - Logistic Regression
  - Gradient Descent and Types
  - Regularization

- 3. What is Deep Learning? Deep learning is a subset of machine learning. It works on the same principles as machine learning, but with different capabilities and approaches. Deep learning models can learn the relevant features themselves, requiring only a little guidance from the programmer. Deep learning is implemented with neural networks, whose design is inspired by biological neurons, that is, brain cells.

- 4. Example of Deep Learning

- 5. Architectures
  - Shallow neural network: A shallow neural network has only one hidden layer between the input and output.
  - Deep neural network: A deep neural network has several hidden layers between the input and output layers. Such networks are highly proficient at modeling and processing non-linear relationships.

- 6. Machine Learning vs Deep Learning: Machine learning and deep learning are two central concepts of data science, and both fall under artificial intelligence: deep learning is a subset of machine learning, which is itself a subset of AI. Many people treat machine learning, deep learning and artificial intelligence as interchangeable buzzwords, but the terms are different, though related.

- 7. How Does Machine Learning Work? The working of machine learning models can be understood through the example of identifying whether an image shows a cat or a dog. To do this, the ML model takes images of both cats and dogs as input, extracts different features of the images such as shape, height, nose and eyes, applies a classification algorithm, and predicts the output.

- 8. How Does Deep Learning Work? We can understand the working of deep learning with the same example of identifying cat vs. dog. The deep learning model takes the images as input and feeds them directly to the algorithm without requiring any manual feature extraction step. The images pass through the different layers of the artificial neural network, which predicts the final output.

- 9. Which One to Select: ML or DL?

- 10. Deep Learning Models: Some popular deep learning models are:
  - Convolutional Neural Network (CNN)
  - Recurrent Neural Network (RNN)
  - Autoencoders
  - Classic Neural Networks, etc.

- 11. Deep Learning Applications
  - Self-Driving Cars
  - Voice Controlled Assistance
  - Automatic Image Caption Generation
  - Automatic Machine Translation

- 12. Logistic Regression: Logistic regression is one of the most popular machine learning algorithms and belongs to the supervised learning family. It predicts a categorical dependent variable from a given set of independent variables, so the outcome must be a categorical or discrete value such as Yes or No, 0 or 1, True or False. Instead of returning exactly 0 or 1, however, it returns a probability between 0 and 1. Logistic regression is similar to linear regression except in how it is used: linear regression solves regression problems, whereas logistic regression solves classification problems.

- 13. Logistic Regression: In logistic regression, instead of fitting a regression line, we fit an "S"-shaped logistic function whose output is bounded between 0 and 1. Logistic regression borrows the predictive-modeling machinery of regression, hence its name, but it is used to classify samples and therefore counts as a classification algorithm.

- 14. Logistic Function (Sigmoid Function): The sigmoid function is a mathematical function used to map predicted values to probabilities. It maps any real value to a value between 0 and 1. Because the output of logistic regression cannot go beyond these limits, it forms an "S"-shaped curve, which is called the sigmoid function or the logistic function.
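
A minimal sketch of the sigmoid mapping and how a logistic regression prediction uses it. The function names, the 0.5 threshold and the example weights are illustrative assumptions, not part of the slides.

```python
import math

def sigmoid(z):
    """Logistic function: squashes any real z into the range 0..1."""
    # Two branches keep exp() from overflowing for large |z|.
    if z >= 0:
        return 1.0 / (1.0 + math.exp(-z))
    ez = math.exp(z)
    return ez / (1.0 + ez)

def predict_proba(weights, bias, x):
    """Probability of the positive class for one feature vector x."""
    z = sum(w * xi for w, xi in zip(weights, x)) + bias
    return sigmoid(z)

def predict_label(weights, bias, x, threshold=0.5):
    """Turn the probability into a 0/1 class (threshold is an assumed default)."""
    return 1 if predict_proba(weights, bias, x) >= threshold else 0

# Hypothetical weights for a two-feature model.
weights, bias = [1.0, -1.0], 0.0
p = predict_proba(weights, bias, [2.0, 1.0])      # z = 1, so p is above 0.5
label = predict_label(weights, bias, [1.0, 2.0])  # z = -1, so the label is 0
```

The output of `predict_proba` is the "probabilistic value between 0 and 1" the notes describe; the hard 0/1 label only appears once a threshold is applied.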

- 15. Types of Logistic Regression: On the basis of the categories, logistic regression can be classified into three types:
  - Binomial: In binomial logistic regression, the dependent variable has only two possible values, such as 0 or 1, Pass or Fail, etc.
  - Multinomial: In multinomial logistic regression, the dependent variable has 3 or more possible unordered values, such as "cat", "dog", or "sheep".
  - Ordinal: In ordinal logistic regression, the dependent variable has 3 or more possible ordered values, such as "low", "medium", or "high".

- 16. Gradient Descent: Gradient descent is an optimization algorithm for finding a local minimum of a differentiable function. It is used to find the values of a function's parameters (coefficients) that minimize a cost function as far as possible. Most machine learning and deep learning algorithms involve some sort of optimization, that is, minimizing or maximizing some function by altering its parameters. With gradient descent, you start with a cost function (also known as a loss or error function) defined over a set of parameters and repeatedly adjust the parameters in the direction that lowers the cost. The goal is to find the parameter values that minimize the cost function.
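
The update rule behind this description is "new parameter = old parameter minus learning rate times gradient". The sketch below applies it to a toy one-parameter cost function; the cost `(w - 3)^2`, the learning rate and the step count are assumptions chosen only to make the behaviour easy to check.

```python
def gradient_descent(grad, w0, learning_rate=0.1, steps=100):
    """Repeatedly step against the gradient, starting from w0."""
    w = w0
    for _ in range(steps):
        w = w - learning_rate * grad(w)
    return w

def cost(w):
    """Toy cost function with its minimum at w = 3."""
    return (w - 3.0) ** 2

def cost_gradient(w):
    """Derivative of the toy cost with respect to w."""
    return 2.0 * (w - 3.0)

w_min = gradient_descent(cost_gradient, w0=0.0)
```

With these settings each step shrinks the distance to the minimum by a constant factor, so `w_min` ends up very close to 3 and `cost(w_min)` very close to 0.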

- 17. Gradient Descent

- 18. Gradient Descent: How can we avoid local minima and try to obtain weights optimized for the global minimum? The different types of gradient descent are:
  - Batch gradient descent
  - Stochastic gradient descent
  - Mini-batch gradient descent

- 19. Batch Gradient Descent: In batch gradient descent we use the entire dataset to compute the gradient of the cost function at each iteration and then update the weights. Because every single update requires a pass over the whole dataset, convergence is slow. If the dataset is huge and contains millions or billions of data points, the method is both memory-intensive and computationally intensive.

- 20. Stochastic Gradient Descent: In stochastic gradient descent we use a single data point (example) to calculate the gradient and update the weights at every iteration. We first shuffle the dataset so that the examples arrive in random order. Because the weights are updated after each single example, the weight updates and the cost function are noisy and jump around rather than decreasing smoothly.

- 21. Mini-Batch Gradient Descent: Mini-batch gradient descent is a variation of stochastic gradient descent in which a mini-batch of samples is used instead of a single training example. It is widely used because it converges faster and is more stable. The batch size can vary depending on the dataset. Averaging the gradient over a batch of different samples reduces the noise, that is, the variance of the weight updates, which gives more stable and faster convergence.
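
The three variants differ only in how many examples feed each weight update, so one routine with a `batch_size` parameter can sketch all of them. The one-weight model `y = w * x`, the toy data, the learning rate and the epoch count below are illustrative assumptions.

```python
import random

def minibatch_gd(xs, ys, batch_size, learning_rate=0.05, epochs=200, seed=0):
    """Fit y = w * x by gradient descent on the mean squared error."""
    rng = random.Random(seed)
    w = 0.0
    indices = list(range(len(xs)))
    for _ in range(epochs):
        rng.shuffle(indices)  # visit the examples in a new random order
        for start in range(0, len(indices), batch_size):
            batch = indices[start:start + batch_size]
            # Gradient of mean((w*x - y)^2) over the current batch.
            grad = sum(2.0 * (w * xs[i] - ys[i]) * xs[i] for i in batch) / len(batch)
            w -= learning_rate * grad
    return w

xs = [0.5, 1.0, 1.5, 2.0]
ys = [2.0 * x for x in xs]  # noise-free data generated with w = 2

w_batch = minibatch_gd(xs, ys, batch_size=len(xs))  # batch: one update per epoch
w_sgd = minibatch_gd(xs, ys, batch_size=1)          # stochastic: one update per example
w_mini = minibatch_gd(xs, ys, batch_size=2)         # mini-batch: in between
```

On this tiny noise-free dataset all three settings recover a weight close to 2; the trade-offs described in the notes (cost per update versus noise in the updates) only become visible on larger, noisier data.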

- 22. Regularization: Regularization is one of the most important concepts in machine learning. It is a technique that prevents the model from overfitting by adding extra information to it. Sometimes a machine learning model performs well on the training data but poorly on the test data. This means the model has fitted noise in the training data and cannot predict the output well for unseen data; such a model is called overfitted. This problem can be dealt with using a regularization technique.

- 23. Regularization: This technique lets us keep all variables or features in the model while reducing the magnitude of their coefficients. Hence, it maintains accuracy as well as the generalization of the model. It mainly regularizes, or shrinks, the coefficients of the features toward zero. In simple words, regularization reduces the magnitude of the feature coefficients while keeping the same number of features.

- 24. Types of Regularization
  - Ridge regression: Ridge regression is a type of linear regression in which a small amount of bias is introduced so that we get better long-term predictions. It is a regularization technique used to reduce the complexity of the model, and it is also called L2 regularization.
  - Lasso regression: Lasso regression is another regularization technique for reducing the complexity of the model. Lasso stands for Least Absolute Shrinkage and Selection Operator. It is similar to ridge regression except that the penalty term contains the absolute values of the weights instead of their squares. It is also called L1 regularization.
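
The difference between the two penalties is easiest to see side by side. The sketch below assumes a mean-squared-error base cost and a penalty strength named `lam`; both choices, and the function names, are illustrative rather than taken from the slides.

```python
def mse(weights, bias, X, y):
    """Mean squared error of a linear model on rows X with targets y."""
    errors = [
        sum(w * xi for w, xi in zip(weights, row)) + bias - target
        for row, target in zip(X, y)
    ]
    return sum(e * e for e in errors) / len(errors)

def ridge_cost(weights, bias, X, y, lam):
    """L2 regularization: add lam times the sum of squared weights."""
    return mse(weights, bias, X, y) + lam * sum(w * w for w in weights)

def lasso_cost(weights, bias, X, y, lam):
    """L1 regularization: add lam times the sum of absolute weights."""
    return mse(weights, bias, X, y) + lam * sum(abs(w) for w in weights)

# One training row that the weights fit exactly, so only the penalty remains.
X, y = [[1.0, 2.0]], [-5.0]
weights, bias = [3.0, -4.0], 0.0
ridge = ridge_cost(weights, bias, X, y, lam=0.1)  # 0.1 * (9 + 16)
lasso = lasso_cost(weights, bias, X, y, lam=0.1)  # 0.1 * (3 + 4)
```

In both cases large weights raise the cost, which is what pushes the coefficients toward zero during training.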

- 27. Topics to be Covered
  - Introduction: CNN
  - The LeNet Architecture
  - Operations of CNN
  - Convolution
  - Introducing Non Linearity
  - Pooling
  - Fully Connected Layer

- 28. What is CNN? Convolutional Neural Networks (ConvNets or CNNs) are a category of neural networks that have proven very effective in areas such as image recognition and classification. ConvNets have been successful in identifying faces, objects and traffic signs apart from powering vision in robots and self driving cars. ConvNets, therefore, are an important tool for most machine learning practitioners today.

- 29. What is CNN?

- 30. The LeNet Architecture: LeNet was one of the very first convolutional neural networks and helped propel the field of deep learning. Several newer architectures proposed in recent years improve on LeNet, but they all use its main concepts and are relatively easy to understand once you understand LeNet.

- 31. Operations of CNN: There are four main operations in the ConvNet:
  - Convolution
  - Non Linearity (ReLU)
  - Pooling or Sub Sampling
  - Classification (Fully Connected Layer)

  These operations are the basic building blocks of every Convolutional Neural Network, so understanding how they work is an important step toward a sound understanding of ConvNets.

- 32. Image is a Matrix: An image from a standard digital camera has three channels (red, green and blue). You can imagine them as three 2D matrices stacked over each other (one for each color), each having pixel values in the range 0 to 255. A grayscale image, on the other hand, has just one channel. For the purpose of these notes, we will only consider grayscale images, so a single 2D matrix represents an image. The value of each pixel in the matrix ranges from 0 to 255, with 0 indicating black and 255 indicating white.

- 33. The Convolution Step: ConvNets derive their name from the "convolution" operator. The primary purpose of convolution in a ConvNet is to extract features from the input image. Convolution preserves the spatial relationship between pixels by learning image features using small squares of input data. We will not go into the mathematical details of convolution here, but will try to understand how it works on images.

- 34. The Convolution Step

- 35. The Convolution Step: In CNN terminology, the small matrix that slides over the image (3×3 in the usual illustration) is called a 'filter', 'kernel' or 'feature detector', and the matrix formed by sliding the filter over the image and computing the dot product at each position is called the 'Convolved Feature', 'Activation Map' or 'Feature Map'. Filters act as feature detectors on the original input image: different values of the filter matrix produce different feature maps for the same input image.
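
The sliding dot product can be sketched in a few lines of plain Python. The 5×5 binary image and the 3×3 filter below are made-up illustrative values, and, as in most deep learning libraries, the filter is applied without flipping (strictly speaking a cross-correlation).

```python
def conv2d(image, kernel, stride=1):
    """Slide `kernel` over `image` (no padding) and return the feature map."""
    k_rows, k_cols = len(kernel), len(kernel[0])
    out_rows = (len(image) - k_rows) // stride + 1
    out_cols = (len(image[0]) - k_cols) // stride + 1
    feature_map = []
    for r in range(out_rows):
        row = []
        for c in range(out_cols):
            # Dot product between the filter and the image patch under it.
            total = 0
            for i in range(k_rows):
                for j in range(k_cols):
                    total += image[r * stride + i][c * stride + j] * kernel[i][j]
            row.append(total)
        feature_map.append(row)
    return feature_map

image = [
    [1, 1, 1, 0, 0],
    [0, 1, 1, 1, 0],
    [0, 0, 1, 1, 1],
    [0, 0, 1, 1, 0],
    [0, 1, 1, 0, 0],
]
kernel = [
    [1, 0, 1],
    [0, 1, 0],
    [1, 0, 1],
]
feature_map = conv2d(image, kernel)
# feature_map == [[4, 3, 4], [2, 4, 3], [2, 3, 4]]
```

Swapping in a different `kernel` changes every entry of `feature_map`, which is the sense in which each filter detects a different feature of the same image.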

- 36. The Convolution Step

- 37. The Convolution Step: In practice, a CNN learns the values of these filters on its own during training (although we still need to specify hyperparameters such as the number of filters, the filter size and the architecture of the network beforehand). In general, the more filters we have, the more image features get extracted and the better the network becomes at recognizing patterns in unseen images.

- 38. The Convolution Step: The size of the feature map (convolved feature) is controlled by three parameters that we need to decide before the convolution step is performed:
  - Depth: Depth corresponds to the number of filters we use for the convolution operation.
  - Stride: Stride is the number of pixels by which we slide the filter matrix over the input matrix. When the stride is 1, we move the filters one pixel at a time. When the stride is 2, the filters jump 2 pixels at a time as we slide them around. A larger stride produces smaller feature maps.
  - Zero-padding: Sometimes it is convenient to pad the input matrix with zeros around the border, so that we can apply the filter to the bordering elements of the input image matrix. A nice feature of zero-padding is that it allows us to control the size of the feature maps. Adding zero-padding is also called wide convolution, and not using zero-padding is called narrow convolution.
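
How these three parameters determine the output size can be written as a small helper. The formula is the standard one for square inputs and filters; the function and argument names are assumptions made for this sketch.

```python
def feature_map_size(input_size, filter_size, stride=1, padding=0):
    """Side length of the feature map for a square input and square filter."""
    return (input_size + 2 * padding - filter_size) // stride + 1

def feature_map_shape(input_size, filter_size, num_filters, stride=1, padding=0):
    """(depth, height, width) of the output volume; depth is the number of filters."""
    side = feature_map_size(input_size, filter_size, stride, padding)
    return (num_filters, side, side)

narrow = feature_map_size(5, 3)           # no padding, stride 1
strided = feature_map_size(5, 3, stride=2)  # larger stride, smaller map
wide = feature_map_size(5, 3, padding=1)  # zero-padding keeps the size at 5
shape = feature_map_shape(32, 5, num_filters=6)
```

Here `narrow` is 3, `strided` is 2 and `wide` is 5, which matches the statements that a larger stride shrinks the feature map and that zero-padding lets us control its size.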

- 39. Introducing Non Linearity (ReLU): ReLU stands for Rectified Linear Unit and is a non-linear operation.

- 40. ReLU: ReLU is an element-wise operation (applied per pixel) that replaces all negative pixel values in the feature map with zero. The purpose of ReLU is to introduce non-linearity into our ConvNet, since most of the real-world data we want the ConvNet to learn is non-linear. Convolution is a linear operation (element-wise multiplication and addition), so we account for non-linearity by introducing a non-linear function such as ReLU.
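
As a minimal sketch, ReLU on a feature map stored as a list of rows looks like this; the sample values are made up for illustration.

```python
def relu(feature_map):
    """Replace every negative value in a 2D feature map with zero."""
    return [[value if value > 0 else 0 for value in row] for row in feature_map]

feature_map = [
    [-3, 2, 0],
    [5, -1, 4],
]
rectified = relu(feature_map)
# rectified == [[0, 2, 0], [5, 0, 4]]
```

Positive entries pass through unchanged, so the operation is cheap and keeps the shape of the feature map.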

- 41. The Pooling Step: Spatial pooling (also called subsampling or downsampling) reduces the dimensionality of each feature map but retains the most important information. Spatial pooling can be of different types: max, average, sum, etc. In max pooling, we define a spatial neighborhood (for example, a 2×2 window) and take the largest element from the rectified feature map within that window. Instead of the largest element we could also take the average (average pooling) or the sum of all elements in that window. In practice, max pooling has often been found to work better.
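
The pooling variants differ only in the function applied to each window, which the sketch below makes explicit. The 2×2 window with stride 2 and the sample feature map are illustrative assumptions.

```python
def pool2d(feature_map, size=2, stride=2, op=max):
    """Apply `op` to each size x size window of a 2D feature map."""
    out_rows = (len(feature_map) - size) // stride + 1
    out_cols = (len(feature_map[0]) - size) // stride + 1
    pooled = []
    for r in range(out_rows):
        row = []
        for c in range(out_cols):
            window = [
                feature_map[r * stride + i][c * stride + j]
                for i in range(size)
                for j in range(size)
            ]
            row.append(op(window))
        pooled.append(row)
    return pooled

rectified = [
    [1, 1, 2, 4],
    [5, 6, 7, 8],
    [3, 2, 1, 0],
    [1, 2, 3, 4],
]
max_pooled = pool2d(rectified)          # [[6, 8], [3, 4]]
sum_pooled = pool2d(rectified, op=sum)  # [[13, 21], [8, 8]]
avg_pooled = pool2d(rectified, op=lambda w: sum(w) / len(w))
```

Each 4×4 input becomes a 2×2 output, which is the dimensionality reduction the notes refer to.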

- 42. The Pooling Step

- 43. Fully Connected Layer: The fully connected layer is a traditional multi-layer perceptron that uses a softmax activation function in the output layer (other classifiers such as SVM can also be used, but these notes stick to softmax). The term "fully connected" implies that every neuron in the previous layer is connected to every neuron in the next layer. The output of the convolutional and pooling layers represents high-level features of the input image. The purpose of the fully connected layer is to use these features to classify the input image into various classes based on the training dataset.
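
A rough sketch of one fully connected layer followed by softmax is given below. The feature values, weights and three-class setup are invented for illustration; in a real network the weights would be learned during training.

```python
import math

def dense(inputs, weights, biases):
    """Fully connected layer: every output neuron sees every input value."""
    return [
        sum(w * x for w, x in zip(neuron_weights, inputs)) + b
        for neuron_weights, b in zip(weights, biases)
    ]

def softmax(scores):
    """Turn raw class scores into probabilities that sum to 1."""
    largest = max(scores)  # subtracting the max avoids overflow in exp()
    exps = [math.exp(s - largest) for s in scores]
    total = sum(exps)
    return [e / total for e in exps]

features = [1.0, 2.0]  # hypothetical flattened output of the pooling layers
weights = [[1.0, 0.0], [0.0, 1.0], [1.0, 1.0]]  # one row per class
biases = [0.0, 0.0, 0.0]

scores = dense(features, weights, biases)  # [1.0, 2.0, 3.0]
probabilities = softmax(scores)
predicted_class = probabilities.index(max(probabilities))
```

The class with the highest score also gets the highest probability, so `predicted_class` is 2 for these made-up numbers.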

- 44. Fully Connected Layer: Apart from classification, adding a fully connected layer is also a (usually) cheap way of learning non-linear combinations of these features. Most of the features from the convolutional and pooling layers may be good for the classification task on their own, but combinations of those features might be even better.

- 45. Training using Backpropagation

- 46. Training using Backpropagation

- 49. Topics to be Covered
  - Generative Adversarial Networks
  - Working of GANs
  - Semi Supervised Learning
  - Dimensionality Reduction
  - PCA and LDA
  - Auto Encoders
  - CNN Architectures
  - AlexNet, VGGNet, Inception, ResNet

- 50. What is GAN? Generative Adversarial Networks, or GANs for short, are an approach to generative modeling using deep learning methods, such as convolutional neural networks. Generative modeling is an unsupervised learning task in machine learning that involves automatically discovering and learning the regularities or patterns in input data, so that the model can be used to generate new examples that plausibly could have been drawn from the original dataset. GANs were proposed in 2014 by Ian Goodfellow and several other researchers, including Yoshua Bengio.

- 51. What is GAN?

- 52. What is GAN? In a GAN, a Generator is pitted against an adversarial network called the Discriminator, hence the name Generative Adversarial Network. In the original formulation, both the Generator and the Discriminator are multilayer perceptrons (MLPs). The Generator's objective is to generate data that is very similar to the training data, ideally indistinguishable from the real data, so that the Discriminator is tricked into identifying it as real.

- 53. What is GAN?
  - The Discriminator's objective is to identify whether the data is real or fake. It gets two sets of input: one comes from the training dataset and the other is the modelled data generated by the Generator.
  - The Generator can be thought of as a team of counterfeiters making fake currency that looks exactly like real currency. The Discriminator can be thought of as a team of cops trying to detect the counterfeit currency. Counterfeiters and cops are both trying to beat each other at their game.
  - GANs do not need any approximate inference or Markov chains.

- 54. How does GAN work?
  - Generator: The input to the Generator is random noise, typically sampled from a simple distribution such as a Gaussian rather than taken from the training data. The training data can be, for example, images. The Generator tries to turn the noise into an output that is as close as possible to a real image from the training data. The Generator's goal is to fool the Discriminator.
  - Discriminator: The Discriminator gets two inputs. One is the real data from the training dataset and the other is the fake data from the Generator. The goal of the Discriminator is to identify which input is real and which is fake.
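
The game between the two networks is usually summarised as a single minimax objective. The formula below is the value function from the original GAN formulation (Goodfellow et al., 2014), given here as a reference sketch. The symbols are not from the slides: $G$ is the generator, $D$ the discriminator, $p_{\text{data}}$ the distribution of the real data, and $p_z$ the noise distribution from which the generator's input $z$ is drawn.

```latex
\min_G \max_D V(D, G) =
  \mathbb{E}_{x \sim p_{\text{data}}(x)}\big[\log D(x)\big]
  + \mathbb{E}_{z \sim p_z(z)}\big[\log\big(1 - D(G(z))\big)\big]
```

The Discriminator tries to make both terms large (real data scored near 1, generated data near 0), while the Generator tries to make the second term small by producing samples that the Discriminator scores as real.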

- 55. How does GAN work?

- 56. Usage of GAN
  - Generating a high resolution image from a low resolution image
  - Generating a fine image from a coarse image
  - Generating descriptions based on images

- 57. Semi Supervised Learning: The most basic disadvantage of any supervised learning algorithm is that the dataset has to be hand-labeled, either by a machine learning engineer or a data scientist. This is a very costly process, especially when dealing with large volumes of data. The most basic disadvantage of unsupervised learning is that its range of applications is limited. To counter these disadvantages, the concept of semi-supervised learning was introduced. In this type of learning, the algorithm is trained on a combination of labeled and unlabeled data.

- 58. Semi Supervised Learning: Typically, this combination contains a very small amount of labeled data and a very large amount of unlabeled data. The basic procedure is that the programmer first clusters similar data using an unsupervised learning algorithm and then uses the existing labeled data to label the rest of the unlabeled data.

- 59. Semi Supervised Learning: A semi-supervised algorithm assumes the following about the data:
  - Continuity assumption: Points that are closer to each other are more likely to have the same output label.
  - Cluster assumption: The data can be divided into discrete clusters, and points in the same cluster are more likely to share an output label.
  - Manifold assumption: The data lie approximately on a manifold of much lower dimension than the input space.

- 60. Applications of Semi Supervised Learning
  - Speech analysis: Since labeling audio files is a very labor-intensive task, semi-supervised learning is a natural approach to this problem.
  - Internet content classification: Labeling every webpage is impractical, so this task relies on semi-supervised learning algorithms. Even the Google search algorithm is said to use a variant of semi-supervised learning to rank the relevance of a webpage for a given query.
  - Protein sequence classification: Since DNA strands are typically very large, semi-supervised learning has seen growing use in this field.

- 61. Dimensionality Reduction: Dimensionality reduction is the process of reducing the number of random variables under consideration by obtaining a set of principal variables. It can be divided into feature selection and feature extraction.

- 62. Components of Dimensionality Reduction: There are two components of dimensionality reduction:
  - Feature selection: Here we try to find a subset of the original set of variables, or features, that can be used to model the problem. It usually involves three approaches: filter, wrapper and embedded.
  - Feature extraction: This reduces data in a high-dimensional space to a lower-dimensional space, i.e. a space with fewer dimensions.

- 63. Methods of Dimensionality Reduction: The various methods used for dimensionality reduction include:
  - Principal Component Analysis (PCA)
  - Linear Discriminant Analysis (LDA)
  - Generalized Discriminant Analysis (GDA)

- 64. Principal Component Analysis: This method was introduced by Karl Pearson. It works on the condition that when data in a higher-dimensional space is mapped to a lower-dimensional space, the variance of the data in the lower-dimensional space should be maximal. It involves the following steps, after which the data is projected onto the eigenvectors with the largest eigenvalues:
  - Calculate the mean of each attribute
  - Find the covariance matrix
  - Find the eigenvalues
  - Find the eigenvectors
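
The steps can be followed by hand for two-dimensional data, where the eigenvalues of the 2×2 covariance matrix have a closed form. The sketch below is restricted to that 2D case and uses the sample covariance (division by n - 1); the function name and the toy points are assumptions made for illustration.

```python
import math

def pca_2d(points):
    """PCA for 2D points: returns (eigenvalues, first component, projections)."""
    n = len(points)
    # Step 1: mean of each attribute, then centre the data.
    mean_x = sum(p[0] for p in points) / n
    mean_y = sum(p[1] for p in points) / n
    centered = [(x - mean_x, y - mean_y) for x, y in points]
    # Step 2: sample covariance matrix [[sxx, sxy], [sxy, syy]].
    sxx = sum(x * x for x, _ in centered) / (n - 1)
    syy = sum(y * y for _, y in centered) / (n - 1)
    sxy = sum(x * y for x, y in centered) / (n - 1)
    # Step 3: eigenvalues are the roots of l^2 - trace * l + det = 0.
    trace = sxx + syy
    det = sxx * syy - sxy * sxy
    root = math.sqrt(max(trace * trace / 4.0 - det, 0.0))
    lam1, lam2 = trace / 2.0 + root, trace / 2.0 - root
    # Step 4: eigenvector for the largest eigenvalue.
    if abs(sxy) > 1e-12:
        vec = (lam1 - syy, sxy)
    elif sxx >= syy:
        vec = (1.0, 0.0)
    else:
        vec = (0.0, 1.0)
    norm = math.hypot(vec[0], vec[1])
    vec = (vec[0] / norm, vec[1] / norm)
    # Project the centred data onto the first principal component.
    projected = [x * vec[0] + y * vec[1] for x, y in centered]
    return (lam1, lam2), vec, projected

points = [(0.0, 0.0), (1.0, 1.0), (2.0, 2.0), (3.0, 3.0)]
eigenvalues, component, projected = pca_2d(points)
```

Because the toy points lie on the line y = x, all of the variance falls on the first component, which points along that line, and the second eigenvalue is essentially zero. The 2D data can therefore be replaced by the one-dimensional `projected` values with no loss.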

- 65. Principal Component Analysis

- 66. Principal Component Analysis

- 67. Linear Discriminant Analysis: Linear Discriminant Analysis, also called Normal Discriminant Analysis or Discriminant Function Analysis, is a dimensionality reduction technique commonly used for supervised classification problems. It is used for modeling differences between groups, i.e. separating two or more classes, by projecting features from a higher-dimensional space into a lower-dimensional space. For example, suppose we have two classes that we need to separate efficiently. Classes can have multiple features, and using only a single feature to classify them may result in some overlap.

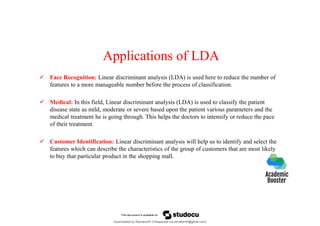

- 68. Applications of LDA
  - Face recognition: Linear discriminant analysis (LDA) is used to reduce the number of features to a more manageable number before classification.
  - Medical: LDA is used to classify a patient's disease state as mild, moderate or severe based on the patient's various parameters and the medical treatment they are undergoing. This helps doctors intensify or reduce the pace of treatment.
  - Customer identification: LDA helps identify and select the features that describe the group of customers most likely to buy a particular product in a shopping mall.

- 69. Advantages of Dimensionality Reduction
  - It helps in data compression and hence reduces storage space.
  - It reduces computation time.
  - It also helps remove redundant features, if any.

- 70. Disadvantages of Dimensionality Reduction
  - It may lead to some amount of data loss.
  - PCA tends to find linear correlations between variables, which is sometimes undesirable.
  - PCA fails in cases where mean and covariance are not enough to define datasets.
  - We may not know how many principal components to keep; in practice, some rules of thumb are applied.

- 71. Auto-Encoder: An autoencoder is an unsupervised artificial neural network that learns how to efficiently compress and encode data, and then learns how to reconstruct the data from the reduced encoded representation into a representation that is as close to the original input as possible.

- 72. Auto-Encoder Components: An autoencoder consists of 4 main parts:
  - Encoder: The part in which the model learns how to reduce the input dimensions and compress the input data into an encoded representation.
  - Bottleneck: The layer that contains the compressed representation of the input data. This is the lowest-dimensional representation of the input in the network.
  - Decoder: The part in which the model learns how to reconstruct the data from the encoded representation so that it is as close to the original input as possible.
  - Reconstruction loss: The measure of how well the decoder is performing, that is, how close the output is to the original input.

- 73. CNN Architectures: LeNet-5 (1998). LeNet-5, a pioneering 7-level convolutional network by LeCun et al. in 1998 that classifies digits, was applied by several banks to recognise hand-written numbers on checks (cheques) digitized as 32x32 pixel greyscale input images. Processing higher-resolution images requires larger and more numerous convolutional layers, so this technique is constrained by the availability of computing resources.

- 74. CNN Architectures: AlexNet (2012). In 2012, AlexNet significantly outperformed all prior competitors and won the ImageNet challenge (ILSVRC), reducing the top-5 error from 26% to 15.3%. The second-place entry, which was not a CNN variant, had a top-5 error rate of around 26.2%.

- 75. CNN Architectures: GoogLeNet/Inception (2014). GoogLeNet achieved a top-5 error rate of 6.67%. This was very close to human-level performance, which the organisers of the challenge were now forced to evaluate. As it turns out, this was rather hard to do and required some human training in order to beat GoogLeNet's accuracy.

- 76. CNN Architectures: VGGNet (2014). VGGNet (in its VGG-16 form) consists of 16 weight layers, 13 convolutional and 3 fully connected, and is appealing because of its very uniform architecture. Like AlexNet it stacks convolutional layers, but it uses only 3x3 convolutions with many filters. It was trained on 4 GPUs for 2–3 weeks. It has been a widely preferred choice in the community for extracting features from images.

- 77. CNN Architectures: ResNet (2015). At ILSVRC 2015, the Residual Neural Network (ResNet) by Kaiming He et al. introduced a novel architecture with “skip connections” and heavy use of batch normalization. Such skip connections resemble the gated units (such as gated recurrent units) that have been applied successfully in RNNs.
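
The idea of a skip connection is that a block outputs its input plus a learned correction, so the input can pass through unchanged when the correction is zero. The sketch below is a simplified illustration only: it uses small dense layers instead of convolutions and leaves out batch normalization, and the names `residual_block`, `W1` and `W2` are hypothetical.

```python
import numpy as np

def relu(z):
    return np.maximum(z, 0.0)

def residual_block(x, W1, W2):
    """Compute relu(F(x) + x), where F is two dense layers with a ReLU between."""
    out = relu(W1 @ x)     # first transformation
    out = W2 @ out         # second transformation (no activation yet)
    return relu(out + x)   # skip connection: add the unchanged input back

x = np.array([1.0, 2.0, 3.0])
rng = np.random.default_rng(0)
W1 = rng.normal(scale=0.1, size=(3, 3))
W2 = rng.normal(scale=0.1, size=(3, 3))
print(residual_block(x, W1, W2))
```

With all-zero weights the block simply returns its (non-negative) input, which is one intuition for why very deep stacks of such blocks remain trainable.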

- 80. Topics to be Covered
  - Introduction: RNN
  - How RNN Works
  - Problems in RNN
  - What is LSTM
  - Advantages of RNN
  - Disadvantages of RNN
  - Use Case and Application of Deep Learning

- 81. Recurrent Neural Network Recurrent Neural Networks (RNNs) are a type of neural network in which the output from the previous step is fed as input to the current step. In traditional neural networks, all inputs and outputs are independent of each other, but in tasks such as predicting the next word of a sentence, the previous words are needed, so the network must remember them. RNNs address this with a hidden state that carries information from one step to the next.

- 82. Recurrent Neural Network The most important feature of an RNN is its hidden state, which remembers some information about a sequence. This acts as a “memory” that summarizes what has been calculated so far. The RNN uses the same parameters for each input, because it performs the same task on every input or hidden state to produce the output. This keeps the number of parameters low, unlike other neural networks.

- 83. Recurrent Neural Network

- 84. How RNN works? Suppose there is a deeper network with one input layer, three hidden layers and one output layer. As in other neural networks, each hidden layer has its own set of weights and biases: say (w1, b1) for the first hidden layer, (w2, b2) for the second and (w3, b3) for the third. This means that these layers are independent of each other, i.e. they do not memorize the previous outputs.

- 85. How RNN works?

- 86. How RNN works? Now the RNN does the following: it converts the independent activations into dependent activations by giving the same weights and biases to all the layers, which keeps the number of parameters from growing, and it memorizes each previous output by feeding it as input to the next hidden layer. Hence the three layers can be joined into a single recurrent layer in which the weights and biases of all the hidden layers are the same.
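
The saving from sharing weights can be checked with simple arithmetic. The sketch below counts parameters for three independent hidden layers and for one layer reused three times; the hidden width of 16 and the helper name `dense_params` are illustrative assumptions.

```python
def dense_params(n_in, n_out):
    """Number of parameters in one fully connected layer: weights plus biases."""
    return n_in * n_out + n_out

hidden = 16  # hypothetical hidden-layer width

# Three independent hidden layers: (w1, b1), (w2, b2), (w3, b3).
separate = 3 * dense_params(hidden, hidden)

# One recurrent layer reused at every step: a single shared (w, b).
shared = dense_params(hidden, hidden)

print("separate layers:", separate, "shared recurrent layer:", shared)
```

The shared count stays the same no matter how many steps the layer is unrolled for, whereas the separate count grows with every added layer.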

- 87. How RNN works?

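- 88. Recurrent Neural Network
  - Formula for calculating the current state: $h_t = f(h_{t-1}, x_t)$, where $h_t$ is the current state, $h_{t-1}$ is the previous state and $x_t$ is the current input.
  - Formula for applying the activation function: $h_t = \tanh(W_{hh} h_{t-1} + W_{xh} x_t)$, where $W_{hh}$ is the weight at the recurrent neuron and $W_{xh}$ is the weight at the input neuron.
  - Formula for calculating the output: $y_t = W_{hy} h_t$, where $y_t$ is the output and $W_{hy}$ is the weight at the output layer.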

- 89. Training through RNN
  1. A single time step of the input is provided to the network.
  2. The current state is calculated from the current input and the previous state.
  3. The current state $h_t$ becomes $h_{t-1}$ for the next time step.
  4. One can go through as many time steps as the problem requires, combining the information from all the previous states.
  5. Once all the time steps are completed, the final current state is used to calculate the output.
  6. The output is then compared to the actual output, i.e. the target output, and the error is generated.
  7. The error is then back-propagated through the network to update the weights, and hence the network (RNN) is trained.
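
Steps 1 to 6 above can be sketched as a forward pass in NumPy. This is an illustrative sketch, not a full training loop: it assumes a tanh activation, a squared-error loss and bias terms `b_h` and `b_y`, and it leaves out the back-propagation step. All names and sizes are hypothetical.

```python
import numpy as np

def rnn_forward(xs, h0, W_xh, W_hh, W_hy, b_h, b_y):
    """Run the same recurrent layer over every time step and return
    the output computed from the final state, plus that final state."""
    h = h0
    for x_t in xs:                                   # one time step at a time
        # Current state from current input and previous state;
        # this h is the "previous state" of the next iteration.
        h = np.tanh(W_hh @ h + W_xh @ x_t + b_h)
    y = W_hy @ h + b_y                               # output from the final state
    return y, h

rng = np.random.default_rng(0)
n_in, n_hidden, n_out, n_steps = 3, 4, 2, 5          # illustrative sizes
xs = rng.normal(size=(n_steps, n_in))
W_xh = rng.normal(scale=0.5, size=(n_hidden, n_in))
W_hh = rng.normal(scale=0.5, size=(n_hidden, n_hidden))
W_hy = rng.normal(scale=0.5, size=(n_out, n_hidden))
b_h, b_y = np.zeros(n_hidden), np.zeros(n_out)

y, h_final = rnn_forward(xs, np.zeros(n_hidden), W_xh, W_hh, W_hy, b_h, b_y)
target = np.array([1.0, 0.0])                        # hypothetical target output
error = float(np.sum((y - target) ** 2))             # error to be back-propagated
print("output:", y, "error:", error)
```

In practice the gradient of this error with respect to the shared weights is obtained by back-propagation through time, usually via an automatic differentiation library rather than by hand.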

- 90. Problems in RNN Although the basic recurrent neural network is fairly effective, it can suffer from a significant problem. For deep networks, the back-propagation process can lead to the following issues:
  - Vanishing gradients: the gradients become very small and tend towards zero.
  - Exploding gradients: the gradients become too large during back-propagation.
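
Both effects come from multiplying the gradient by the recurrent weights once per time step. The sketch below makes a strong simplifying assumption, a linear recurrence whose weight matrix is a scaled identity, so that the effect is easy to see; the function name `backprop_norms` and the chosen scales are illustrative.

```python
import numpy as np

def backprop_norms(recurrent_scale, steps=50, size=4):
    """Track the gradient norm while propagating back through `steps` time steps.
    Simplifying assumption: W_hh = recurrent_scale * I and no activation function."""
    W_hh = recurrent_scale * np.eye(size)
    grad = np.ones(size)
    norms = []
    for _ in range(steps):
        grad = W_hh.T @ grad              # one step further back in time
        norms.append(float(np.linalg.norm(grad)))
    return norms

vanishing = backprop_norms(0.5)   # scale below 1: the norm shrinks towards zero
exploding = backprop_norms(1.5)   # scale above 1: the norm grows without bound
print("after 50 steps:", vanishing[-1], exploding[-1])
```

With a real weight matrix and a tanh activation the behaviour is governed by the singular values of the weights and the activation derivatives, but the geometric shrinkage or growth is the same phenomenon.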

- 91. Solutions to the Problem The problem of exploding gradients can be mitigated with a simple workaround: putting a threshold on the gradients being passed back in time (gradient clipping). However, this is not regarded as a real solution to the problem and may also reduce the efficiency of the network. To deal with such problems, two main variants of recurrent neural networks were developed: Long Short Term Memory networks (LSTM) and Gated Recurrent Unit networks (GRU).
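
Thresholding the gradient is often done by rescaling it whenever its norm exceeds a limit. The following is a minimal sketch of that variant (clipping by norm); the function name `clip_by_norm` and the threshold value are assumptions for illustration.

```python
import numpy as np

def clip_by_norm(grad, threshold):
    """Rescale grad so that its L2 norm does not exceed threshold."""
    norm = np.linalg.norm(grad)
    if norm > threshold:
        return grad * (threshold / norm)   # same direction, smaller length
    return grad

large = np.array([3.0, 4.0])      # norm 5, above the threshold
small = np.array([0.3, 0.4])      # norm 0.5, below the threshold
print(clip_by_norm(large, 1.0), clip_by_norm(small, 1.0))
```

Clipping keeps the direction of the update but limits its size, which is why it helps with exploding gradients and does nothing for vanishing ones.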

- 92. Long Short Term Memory To solve the problem of vanishing and exploding gradients in a deep recurrent neural network, many variations were developed. One of the best known is the Long Short Term Memory network (LSTM). In concept, an LSTM recurrent unit tries to “remember” the relevant past knowledge that the network has seen so far and to “forget” irrelevant data. This is done by introducing different activation function layers called “gates” for different purposes.

- 93. Long Short Term Memory Each LSTM recurrent unit also maintains a vector called the Internal Cell State, which conceptually describes the information that was chosen to be retained by the previous LSTM recurrent unit. A Long Short Term Memory network consists of four different gates for different purposes:
  - Forget Gate (f)
  - Input Gate (i)
  - Input Modulation Gate (g)
  - Output Gate (o)

- 94. Long Short Term Memory
  - Forget Gate (f): determines to what extent the previous data is forgotten.
  - Input Gate (i): determines the extent of information to be written onto the Internal Cell State.
  - Input Modulation Gate (g): often considered a sub-part of the input gate; much of the literature on LSTMs does not even mention it and assumes it is inside the input gate. It modulates the information that the input gate will write onto the Internal Cell State by adding non-linearity to the information and making it zero-mean. This is done to reduce the learning time, as zero-mean input converges faster. Although this gate's actions are less important than the others and it is often treated as a finesse-providing concept, it is good practice to include it in the structure of the LSTM unit.
  - Output Gate (o): determines what output (the next Hidden State) to generate from the current Internal Cell State.

- 95. Long Short Term Memory The basic workflow of a Long Short Term Memory network is similar to that of a recurrent neural network, the only difference being that the Internal Cell State is also passed forward along with the Hidden State.
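
A single LSTM time step with the four gates (f, i, g, o) can be sketched as follows. This follows the commonly used formulation with sigmoid gates and a tanh input modulation; the packing of all four gates into one weight matrix `W`, and the names `lstm_step`, `W` and `b`, are choices made for this illustration.

```python
import numpy as np

def sigmoid(z):
    return 1.0 / (1.0 + np.exp(-z))

def lstm_step(x_t, h_prev, c_prev, W, b):
    """One LSTM time step.
    W has shape (4 * H, H + D) and b has shape (4 * H,), where H is the
    hidden size and D the input size; rows are ordered f, i, g, o."""
    H = h_prev.shape[0]
    z = W @ np.concatenate([h_prev, x_t]) + b
    f = sigmoid(z[0:H])            # forget gate: how much of c_prev to keep
    i = sigmoid(z[H:2 * H])        # input gate: how much new information to write
    g = np.tanh(z[2 * H:3 * H])    # input modulation gate: zero-centered candidate
    o = sigmoid(z[3 * H:4 * H])    # output gate: how much of the cell to expose
    c_t = f * c_prev + i * g       # new Internal Cell State
    h_t = o * np.tanh(c_t)         # new Hidden State
    return h_t, c_t

rng = np.random.default_rng(0)
D, H = 3, 4                                        # illustrative sizes
W = rng.normal(scale=0.5, size=(4 * H, H + D))
b = np.zeros(4 * H)
h, c = np.zeros(H), np.zeros(H)
for x_t in rng.normal(size=(5, D)):                # both h and c are passed forward
    h, c = lstm_step(x_t, h, c, W, b)
print("hidden state:", h)
```

Note that the function returns two vectors, which is the difference from the plain RNN step: the cell state travels alongside the hidden state from one time step to the next.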

- 96. Advantages of RNN
  - An RNN retains information through time. It is useful in time series prediction precisely because of this ability to remember previous inputs; the LSTM variant is designed to strengthen it.
  - Recurrent neural networks are also used together with convolutional layers to extend the effective pixel neighborhood.

- 97. Disadvantages of RNN
  - Vanishing and exploding gradient problems.
  - Training an RNN is a very difficult task.
  - It cannot process very long sequences when tanh or ReLU is used as the activation function.

- 98. Applications of Deep Learning: Self-driving cars. Companies building driver-assistance services, as well as full-blown self-driving cars like Google’s, need to teach a computer how to take over key parts (or all) of driving using digital sensor systems instead of a human’s senses. To do that, companies generally start by training algorithms on a large amount of data. You can think of it as similar to how a child learns through constant experience and replication. These new services could provide unexpected business models for companies.

- 99. Applications of Deep Learning: Voice Search & Voice-Activated Assistants. One of the most popular usage areas of deep learning is voice search and voice-activated intelligent assistants. With the big tech giants having already made significant investments in this area, voice-activated assistants can be found on nearly every smartphone. Apple’s Siri has been on the market since October 2011. Google Now, the voice-activated assistant for Android, was launched less than a year after Siri. The newest of the voice-activated intelligent assistants is Microsoft Cortana.

- 100. Applications of Deep Learning: Deep Learning in Healthcare. AI is reshaping life sciences, medicine, and healthcare as an industry. Innovations in AI are advancing precision medicine and population health management. Computer-aided detection, quantitative imaging, decision support tools and computer-aided diagnosis will play a big role in the years to come.

- 101. Applications of Deep Learning: Automatic Machine Translation. This is the task of automatically translating given words, phrases or sentences in one language into another language. Automatic machine translation has been around for a long time, but deep learning is achieving top results in two specific areas:
  - Automatic translation of text
  - Automatic translation of images

- 102. Applications of Deep Learning: Automatic Text Generation. This is an interesting task in which a model learns from a corpus of text and then generates new text, word by word or character by character. The model is capable of learning how to spell, punctuate, form sentences and even capture the style of the text in the corpus. Large recurrent neural networks are used to learn the relationship between items in the sequences of input strings and then generate text.

- 103. Applications of Deep Learning: Image Recognition. Another popular application area of deep learning is image recognition. It aims to recognize and identify people and objects in images, as well as to understand their content and context. Image recognition is already being used in several sectors such as gaming, social media, retail and tourism.
