Latest Autonomous Driving Updates Announced at GTC 2018

- 1. Toru Baji (馬路 徹), Technical Advisor and GPU Evangelist, NVIDIA. Latest Autonomous Driving Updates Announced at GTC 2018

- 2. Contents: NVIDIA autonomous driving technical sessions and related announcements at GTC 2018 (with supplementary slides) 1. S8666: Deploying Autonomous Vehicles with NVIDIA DRIVE 2. S8531: Deep Learning Infrastructure for Autonomous Vehicles 3. S8294: NVIDIA DRIVE Safety: NVIDIA's Strategies for Enabling Safety in Automotive Platforms 4. S8324: Synthetic Data Generation for an All-in-One Driver Monitoring System 5. ANNOUNCEMENT: NVIDIA and ARM Partner to Bring Deep Learning to Billions of IoT Devices

- 3. Shri Sundaram DEPLOYING AUTONOMOUS VEHICLES WITH NVIDIA DRIVE S8666

- 4. ANNOUNCING “PEGASUS” ROBOTAXI DRIVE PX Level 5 fully autonomous driving ▪ Xavier (Volta GPU integrated) x 2 ▪ Next generation discrete-GPU x 2 ▪ 320 TOPS CUDA TensorCore ▪ ASIL D Certification ▪ Combined Memory Bandwidth: >1 TByte/sec ▪ Automotive I/Os ▪ 16x GMSL High-speed Camera Inputs ▪ Multiple 10Gbit Ethernet ▪ CAN, Flexray ▪ Late Q1 Early Access Partners ▪ Supercomputing Data Center in your Trunk (supplementary slide)

- 5. DRIVE DEVELOPMENT PLATFORM Development Platform for DRIVE Xavier & DRIVE Pegasus – building on the capabilities of DRIVE PX 2. Auto-grade ASIL-D Safety MCU: up to 16x CAN, 2x Flexray. Ethernet: 4x 10Gbps, 7x 1Gbps or 100Mbps, 5x 100Mbps. 2x Xavier SOC: CV & DL Accelerator, CUDA Processing, 137GB/s LPDDR4x. 2x discrete GPU: Next Gen CUDA GPU, Tensor Core, Support for IST, 384GB/s GDDR6. Raw Sensor Input: 16x GMSL, 91Gbps. 2x Xavier Developer I/O: HDMI, 4 x USB, UART (via USB), JTAG. Block diagram labels: XAVIER x 2, Next Generation GPU x 2, DeSer x 4, MCU, PCIE Switch, NVLINK x 2, ENET, "Only with Pegasus". DRIVE PX2 | DRIVE Xavier | DRIVE Pegasus | One Architecture

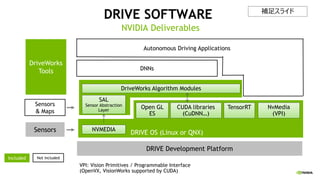

- 6. DRIVE SOFTWARE NVIDIA Deliverables DRIVE Development Platform Sensors Sensors & Maps NVMEDIA DRIVE OS, CUDA CUDA accelerated libraries (notably TensorRT) DriveWorks Algorithm Modules Autonomous Driving Applications DriveWorks Tools DNNs Included Not included DRIVE OS (Linux or QNX) NVMEDIA SAL Sensor Abstraction Layer CUDA libraries (CuDNN…) DriveWorks Algorithm Modules TensorRT NvMedia (VPI) Open GL ES VPI: Vision Primitives / Programmable Interface (OpenVX, VisionWorks supported by CUDA)

- 7. XAVIER SOC ENGINES & DRIVE OS. Inputs: 16 cameras, Lidar, Radar, ... Xavier HW Engines: ISP 1.5 Gpix/s; VIC 2 Gpix/s; Video Encoder 0.9 - 1.4 Gpix/s; Video Decoder – Gpix/s; PVA 0.5 - 1.3 TOPS; Stereo & OF (SOFE) 6 TOPS; Deep Learning Accelerator (DLA) 5 FP16 / 10 INT8 TOPS DL; GPU Compute 1.3 FP32 TFLOPS CUDA, 20 INT8 TOPS DL; GPU Graphics 1.3 TFLOPS FP32; Xavier CPU. DRIVE OS SW APIs: NvMedia (Camera, ISP, Encoder), NvMedia (VPI), TensorRT, CUDA, OpenGL. Data Destination: Raw Data Storage, Computer Vision, DNN Inference, Math Computation, Compressed Storage, Human Interface. Purpose: • Raw data for DNN training • Detection, classification, segmentation • Computer vision, autonomous vehicle algorithms • Archiving, computer data for DNN, automotive simulation • Visualization, in-vehicle display • Autonomous vehicle algorithms. Glossary: ISP: Image Signal Processor; VIC: Video Imaging Controller; PVA: Programmable Vision Accelerator; OF: Optical Flow; SOFE: Stereo Optical Flow Engine

- 8. DRIVEWORKS Modules (with C APIs): Sensor, Vehicle I/O abstraction Automotive image processing modules • Stereo/Rectification, Color Correction… Automotive Computer Vision modules • Point Cloud Processing, SFM(Structure from Motion), 2D Tracker… Tools Recording, Replaying, Visualization. Abstracting the vehicle

- 9. DRIVE DEVZONE SW Installer & Dev. Tools Latest SW Documentation Links to Support Updates on Ecosystem https://developer.nvidia.com/DRIVE

- 10. REVISITING THE FLOW… to put an Autonomous Vehicle on the road? Curated Annotated Training Data Data Acquired From Sensors Trained Deep Neural Network Autonomous Vehicle Applications Autonomous Vehicle Application Development Test/Drive Simulation & Re-Simulation HD Map Neural Network Training 1 2 3 Data Acquisition to train DNN and create HD Map Autonomous Vehicle Application Development Testing In-Vehicle or With Simulation 1 2 3 DRIVE PX DRIVE PX (HIL) DRIVE PX DGX (SIL)

- 11. DRIVE PX 2: 2 PARKER SoC + 2 PASCAL GPU | 20 TOPS DL | 120 SPECINT | 80W. DRIVE PX XAVIER: 20 TOPS DL | 160 SPECINT | 20W (the 20 TOPS DL at 20 W figure applies to high power-efficiency operation). Development: DRIVE PX 2. Production: DRIVE PX XAVIER. (supplementary slide)

- 12. NVIDIA DRIVE ROADMAP ONE ARCHITECTURE Auto-Grade Super Energy-Efficient ASIL-D Functional Safety DRIVE PX Parker DRIVE PX 2 DRIVE Xavier Orin DRIVE Pegasus Performance Timeline 2016 2018

- 13. 2018 2019 DRIVE AI COMPUTER Development to Production | One Architecture DRIVE™ Xavier 30 TOPS | 1x Xavier DRIVE™ Pegasus 320 TOPS | 2x Xavier + 2x Next Gen GPU DRIVE PX 2 24 TOPS | 2x Parker + 2x Pascal dGPU DRIVE™ Development Platform - Xavier 60 TOPS | 2x Xavier DRIVE™ Development Platform - Pegasus 320 TOPS | 2x Xavier + 2x Next Gen GPU DEVELOPMENT PRODUCTION

- 14. SIMULATION — THE PATH TO BILLIONS OF MILES World drives trillions of miles each year. U.S. has 770 accidents per billion miles. A fleet of 20 test cars cover 1 million miles per year.

- 15. ANNOUNCING NVIDIA DRIVE SIM AND CONSTELLATION AV VALIDATION SYSTEM ▪ Virtual Reality AV Simulator ▪ Same Architecture as DRIVE Computer ▪ Simulate Rare and Difficult Conditions ▪ Recreate Scenarios ▪ Run Regression Tests ▪ Drive Billions of Virtual Miles 10,000 Constellations Drive 3B Miles per Year DRIVE SIM Generating Virtual Reality 3D Image Stimulus Constellation Running Autonomous Driving Application on real HW Interfaces HW Platform 3D Virtual Images Car Responses Example: 4 Camera based Autonomous Driving Simulation HIL (Hardware In the Loop) Simulation
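The mileage figures quoted on slides 14 and 15 can be checked with a few lines of arithmetic. The sketch below uses only numbers that appear on those slides; the variable and function names are illustrative, and it assumes mileage accumulates at a constant yearly rate.

```python
# Back-of-the-envelope arithmetic using only the figures quoted on the slides.
ACCIDENTS_PER_BILLION_MILES = 770        # U.S. figure (slide 14)
FLEET_MILES_PER_YEAR = 1_000_000         # fleet of 20 test cars (slide 14)
CONSTELLATIONS = 10_000                  # slide 15
SIM_MILES_PER_YEAR = 3_000_000_000       # slide 15
BILLION = 1_000_000_000


def years_to_drive(miles, miles_per_year):
    """Years needed to cover `miles` at a constant yearly rate (assumption)."""
    return miles / miles_per_year


def expected_accidents(miles):
    """Accidents expected over `miles` at the quoted U.S. average rate."""
    return ACCIDENTS_PER_BILLION_MILES * miles / BILLION


fleet_years_per_billion = years_to_drive(BILLION, FLEET_MILES_PER_YEAR)
accidents_per_fleet_year = expected_accidents(FLEET_MILES_PER_YEAR)
miles_per_constellation = SIM_MILES_PER_YEAR / CONSTELLATIONS

print(f"Real fleet: {fleet_years_per_billion:.0f} years per billion miles")
print(f"Expected accidents per fleet-year: {accidents_per_fleet_year:.2f}")
print(f"Each Constellation: {miles_per_constellation:,.0f} virtual miles per year")
```

Under these figures the 20-car fleet would on average see fewer than one accident-rate event per year, which is the motivation the slides give for simulation.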

- 16. S8531: Deep Learning Infrastructure for Autonomous Vehicles

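- 17. CHALLENGES OF DEEP LEARNING FOR AUTONOMOUS DRIVING: enormous data, very long training times ▪ Functional safety is the top requirement; reproducibility, inference performance, and other properties also matter. ▪ Training needs enormous amounts of data: raw data, 10 or more sensor inputs, regional dependence ▪ Many kinds of deep neural networks are needed to perceive and judge the entire surrounding environment ▪ Training takes a very long time ▪ The whole end-to-end workflow must be shortened as much as possible [data collection, labeling, training, retraining, validation, deployment to all vehicles]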

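- 18. CHALLENGES OF DEEP LEARNING FOR AUTONOMOUS DRIVING: assumed scale ▪ Data collection vehicles: 100 ▪ Collected data: 2,000 hours of data per vehicle per year ▪ Each vehicle carries five 2-megapixel cameras plus radar and other sensors: 1 TB per vehicle per hour ▪ Total collected per year: 200 PB (peta: 10^15) ▪ Only 1/1000 of the collected data is used for deep learning (curated, labeled data) ▪ Training a ResNet-50-class image recognition network on that data: ▪ One Pascal GPU: 12.1 years ▪ One DGX-1 AI supercomputer (8 Pascal GPUs): 1.5 years ▪ Today, with 1/10 of that data, eight DGX-1 systems (8 x 8 Pascal GPUs) finish training in one week (a DGX-1 with the latest Volta GPUs delivers 12x the performance)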
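The scale assumptions on slide 18 are internally consistent, which a short calculation makes visible. This is a minimal sketch using the slide's figures; the linear scaling of training time with data volume and with the number of DGX-1 systems is an assumption made here, not a claim from the slide.

```python
# Data volume implied by the slide-18 assumptions.
VEHICLES = 100
HOURS_PER_VEHICLE_PER_YEAR = 2000
TB_PER_VEHICLE_HOUR = 1

raw_tb_per_year = VEHICLES * HOURS_PER_VEHICLE_PER_YEAR * TB_PER_VEHICLE_HOUR
raw_pb_per_year = raw_tb_per_year / 1000      # 1 PB = 1000 TB
curated_tb_per_year = raw_tb_per_year / 1000  # only 1/1000 is curated and labeled

# Training times quoted on the slide for a ResNet-50-class network.
SINGLE_PASCAL_YEARS = 12.1
ONE_DGX1_YEARS = 1.5
dgx1_speedup = SINGLE_PASCAL_YEARS / ONE_DGX1_YEARS  # roughly the 8 GPUs in a DGX-1


def training_days(one_dgx1_years, data_fraction, num_dgx1):
    """Estimated days, assuming time scales linearly with data and 1/systems."""
    return one_dgx1_years * data_fraction / num_dgx1 * 365


today_days = training_days(ONE_DGX1_YEARS, data_fraction=0.1, num_dgx1=8)

print(f"Raw data: {raw_pb_per_year:.0f} PB/year, curated: {curated_tb_per_year:.0f} TB/year")
print(f"DGX-1 vs single GPU: {dgx1_speedup:.1f}x")
print(f"1/10 of the data on 8 DGX-1: {today_days:.1f} days")
```

The last figure comes out at just under seven days, matching the "one week" on the slide.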

- 19. NVIDIA DRIVE SAFETY GTC 2018 S8294

- 20. NVIDIA DRIVE FUNCTIONAL SAFETY ARCHITECTURE HD Map DRIVE XAVIER DDPX DGX (DDPX Emulation) AutoSIM (Photo Realistic 3D-CG by DGX for Corner Cases etc) DRIVE OS ▪ Diverse Engines ▪ Dual Execution ▪ ECC/Parity ▪ Diagnosis, BIST Hypervisor CUDA, TensorRT ISO 26262 DRIVE AV System Operates Safely Even when Faults Detected Holistic System: Process & Methods, Processor Design, Software, Algorithms, System Design, Validation ISO 26262 ASIL-D Safety Level | Partnership with BlackBerry QNX and TTTech | New AutoSIM Virtual Reality 3D Simulator Same GPU Architecture Re-SIM (Captured Real Data) Traffic Environment Input (Stimulus) and Testing/Verification HIL SIL HIL: Hardware (DDPX) In the Loop SIL: Software (DGX) In the Loop ISO 26262 ASIL-D

- 21. DRIVE SOFTWARE NVIDIA Deliverables DRIVE Development Platform Sensors Sensors & Maps NVMEDIA DRIVE OS, CUDA CUDA accelerated libraries (notably TensorRT) DriveWorks Algorithm Modules Autonomous Driving Applications DriveWorks Tools DNNs Included Not included DRIVE OS (Linux or QNX) NVMEDIA SAL Sensor Abstraction Layer CUDA libraries (CuDNN…) DriveWorks Algorithm Modules TensorRT NvMedia (VPI) Open GL ES VPI: Vision Primitives / Programmable Interface (OpenVX, VisionWorks supported by CUDA) (supplementary slide)

- 22. Critical Components are ASIL-D QNX RTOS, Classic AUTOSAR, & Hypervisor To be Safe, must be Secure Secure boot, Security Services, Firewall, & OTA To be Safe, must be Real-time QNX RTOS for mission application Hypervisor for Quality of Service (QoS) DRIVE OS Safe, Secure, & Real-time

- 23. NVIDIA CONFIDENTIAL. DO NOT DISTRIBUTE. WHY QNX FOR SAFETY? Safety OS Key Selection Criteria: ISO 26262 ASIL D Certified RTOS ISO 26262 qualified tool chain (up to TCL 3) POSIX PSE52 standards certification - Requirement for CUDA, cuDNN support Common Unix heritage with Linux - Rich dependent library support TCL3: Tool Confidence Level POSIX PSE52: support application portability at source code level

- 25. SOFTWARE SAFETY FRAMEWORK Three Level Safety Supervision (3LSS) architecture Coherent with Xavier SOC safety architecture and DRIVE platform • Support for Xavier SOC HW Safety Manager • Integrated into DRIVE OS to be available for DRIVE platform • Anchored by ASIL D capable Safety-MCU on DRIVE platform Provides standard mechanism for handling of potentially safety critical errors and performing diagnostics Supporting freedom from interference in execution, memory and information exchange domains Overview

- 26. NVIDIA SAFETY FRAMEWORK THREE LEVEL SAFETY SUPERVISION (3LSS) L1SS One Partition on Hypervisor CCPLEX (CPU Complex) = Carmel CPU x 8 L2SS Safety OS & AutoSAR SCE (Safety Control Engine) = R5 CPU x 2 in Lock-step L3SS Safety OS & AutoSAR External ASIL-D MCU SHM (Safety Hardware Manager) Safety Supervision Serial Channel on SPI I/F Error Signaling Pin Heartbeat Monitoring on SPI I/F Safety PMIC (Power Management IC) XAVIER ▪ Diverse Engines ▪ Dual Execution ▪ ECC/Parity ▪ Diagnosis ▪ BIST (Built In Self Test)
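Slide 26 names "Heartbeat Monitoring on SPI I/F" and an "Error Signaling Pin" between the supervision levels without describing how they work. The sketch below shows the general heartbeat-watchdog idea only; the class `HeartbeatMonitor`, its timeout, and the 8-bit sequence counter are hypothetical illustrations, not the DRIVE OS implementation.

```python
class HeartbeatMonitor:
    """A supervisor that expects a periodic heartbeat from the level below.

    Hypothetical illustration: a fault is latched if a heartbeat arrives
    out of sequence or if none arrives within `timeout_ms`.
    """

    def __init__(self, timeout_ms):
        self.timeout_ms = timeout_ms
        self.last_beat_ms = 0
        self.last_counter = None
        self.fault = False  # stands in for driving an error signaling pin

    def on_heartbeat(self, now_ms, counter):
        # A skipped or repeated counter suggests lost or stuck messages.
        if self.last_counter is not None:
            if counter != (self.last_counter + 1) % 256:
                self.fault = True
        self.last_counter = counter
        self.last_beat_ms = now_ms

    def poll(self, now_ms):
        # Called periodically by the supervisor; the fault stays latched.
        if now_ms - self.last_beat_ms > self.timeout_ms:
            self.fault = True
        return self.fault


monitor = HeartbeatMonitor(timeout_ms=20)
monitor.on_heartbeat(now_ms=10, counter=0)
ok_after_15ms = monitor.poll(now_ms=25)      # 15 ms since last beat: still healthy
monitor.on_heartbeat(now_ms=30, counter=1)
fault_after_30ms = monitor.poll(now_ms=60)   # 30 ms of silence: fault latched
```

Timestamps are passed in explicitly so the logic can be exercised without a real clock or bus.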

- 27. SOFTWARE SAFETY FRAMEWORK Flexible and extendable to handle the increasing safety requirements Configurable and portable to new DRIVE versions Off the shelf safety solution, optimizing/reducing effort on application side. Enables easy deployment of safety applications on DRIVE platform • Provides safety services such as flow monitoring Supports the fault tolerant foundation of our Drive platform Outcome

- 28. Sagar Bhokre SYNTHETIC DATA GENERATION FOR AN ALL-IN-ONE DMS S8324

- 29. NVIDIA DRIVE IX SDK IX (Intelligent eXperience) Toolkit Sense Inside & Outside the Vehicle | Deep Learning Powered | Early Access Q4 Your Car is an AI Customer Application DRIVE OS DRIVE AV Object, Path, Wait Perception DRIVE IX Gaze, Head Pose, Gestures, Recognize Face, Voice Recognition & Lip Reading Exterior Driver Recognition Automatic Personalization Inattentive Driver Alert Cyclist Alert Distracted Driver Alert Driver/Passenger Recognition Multiple In-Car Sensors (supplementary slide)

- 30. NEED FOR SYNTHETIC DATA Example synthetic image

- 31. NEED FOR SYNTHETIC DATA No devices available – e.g. face landmarks at extreme angles Manual labelling – limited by human precision and error Manpower and time limits – recording in different environments Sensor interference with scene – glasses for eye tracking, optical markers Needs high resolution devices – head poses, gaze Lacks associativity and completeness – multiple recordings for multiple parameters Where does real world data fall short?

- 32. NEED FOR SYNTHETIC DATA More flexible - environment parameters, camera distance, background Error free - Free from manual labelling errors / Human errors Accurate - Synthetic data readings are highly accurate. No sensor noise High resolution as well as low resolution images can be generated Allows labelling of occluded areas 3D as well as 2D labels can be accurately generated Fast and economical Where does synthetic data offer advantages?

- 33. AGENDA Data Generation Pipeline Parameter Settings Post Processing Deployment

- 34. DATA GENERATION PIPELINE Steps involved in synthetic data generation: 3D Head Scan with High Resolution Retopology Defining Mesh Deformation Annotation

- 35. DATA GENERATION PIPELINE High Resolution 3D Head Scan • Capture accurate 3D face details using depth sensor or multiple synchronized cameras using triangulation • High density mesh (~0.1 mm resolution) • Why can’t we use such high resolution scan? • Needs lots of computation power to transform each mesh vertex • More manual efforts for defining deformation and key shapes http://ten24.info/10-x-high-resolution-head-scans-avaliable-to-download/ [2]

- 36. DATA GENERATION PIPELINE Retopology Reduce mesh vertices count to save on computation cost • Reduced vertex count (~1000 x reduction) • Add displacement map to preserve details • Easier to define shape keys manually on reduced mesh

- 37. DATA GENERATION PIPELINE Mesh Deformation • Define shape keys and set vertices manually to define a face feature (e.g. eyes looking down, smile, eyebrow raised) • Intermediate values are interpolated automatically • Allows programmatic control of parameters [1]
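Slide 37 says that intermediate values between shape keys are interpolated automatically and can be controlled programmatically. A minimal sketch of that idea follows, assuming plain linear blending of vertex positions; the slide does not state the interpolation scheme, and the function `blend` and the tiny two-vertex mesh are hypothetical.

```python
def blend(base, shape_keys, weights):
    """Linear blend: v = base + sum_k w_k * (key_k - base), per vertex.

    base: list of (x, y, z) vertices for the neutral face.
    shape_keys: dict name -> list of (x, y, z), same vertex order as base.
    weights: dict name -> weight, 0.0 (neutral) to 1.0 (full expression).
    """
    result = []
    for i, b in enumerate(base):
        v = list(b)
        for name, w in weights.items():
            key_vertex = shape_keys[name][i]
            for axis in range(3):
                v[axis] += w * (key_vertex[axis] - b[axis])
        result.append(tuple(v))
    return result


# Toy mesh with two vertices; real retopologized meshes have far more.
base = [(0.0, 0.0, 0.0), (1.0, 0.0, 0.0)]
shape_keys = {
    "smile": [(0.0, 0.2, 0.0), (1.0, 0.4, 0.0)],
    "eyes_down": [(0.0, 0.0, -0.1), (1.0, 0.0, -0.1)],
}

half_smile = blend(base, shape_keys, {"smile": 0.5})
combined = blend(base, shape_keys, {"smile": 1.0, "eyes_down": 1.0})
```

Sweeping the weights over a grid is one way to generate many expressions from a handful of manually defined keys.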

- 38. DATA GENERATION PIPELINE Landmark annotation • Manually mark points of interest • Allows tracking and automatic labelling of face landmarks • 2D and 3D point coordinates are available as the environment is synthetic • Occluded points could be accepted/rejected programmatically [1]

- 39. DATA GENERATION PIPELINE Example annotated image Following features are saved along with image in a file • Face bounding box • Face landmarks • Head pose • Gaze • Eye lid, pupil(eyeball), iris markers • Face ID
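The label fields listed on slide 39, together with the per-point occlusion handling mentioned on slide 38, could be stored as one record per rendered image. The sketch below is a hypothetical layout: the slides do not specify a file format, so the field names, units, and the JSON encoding are all assumptions.

```python
import json
from dataclasses import dataclass, asdict


@dataclass
class FaceAnnotation:
    """One label record per rendered image (hypothetical schema)."""
    image_file: str
    face_id: int
    bbox_xywh: tuple            # face bounding box in pixels
    landmarks_2d: list          # [(x, y), ...] in pixels
    landmarks_3d: list          # [(x, y, z), ...] in scene units
    landmark_occluded: list     # one bool per landmark
    head_pose_ypr_deg: tuple    # yaw, pitch, roll
    gaze_yaw_pitch_deg: tuple
    eye_markers: dict           # eyelid, pupil, iris marker points


def visible_landmarks(ann):
    """Reject occluded 2D landmarks programmatically."""
    return [p for p, occ in zip(ann.landmarks_2d, ann.landmark_occluded) if not occ]


ann = FaceAnnotation(
    image_file="frame_000001.png",
    face_id=7,
    bbox_xywh=(120, 80, 200, 240),
    landmarks_2d=[(150, 160), (250, 158)],
    landmarks_3d=[(-0.03, 0.02, 0.55), (0.03, 0.02, 0.55)],
    landmark_occluded=[False, True],
    head_pose_ypr_deg=(10.0, -5.0, 0.0),
    gaze_yaw_pitch_deg=(12.0, -8.0),
    eye_markers={"left_pupil": [(150, 160)]},
)

record = json.dumps(asdict(ann))   # what would be written next to the image
restored = json.loads(record)
```

Keeping occluded points in the record with a flag, rather than dropping them, lets each training task decide whether to use them.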

- 41. PARAMETER SETTING Head Pose control • Position camera to cover head • Camera oriented towards center of both eyes • Head orientations can be set to captured values to mimic human head motions

- 42. PARAMETER SETTING Gaze control • Position subject with respect to camera • Set target gaze location • Rotate eyes to look at target location • Render cases which honor anatomic constraints. Constraints: • Unobstructed (pupil is more than 35% exposed) • Within anatomically allowed pitch/yaw range • Visible to the camera
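The gaze-control steps on slide 42 amount to computing the eye rotation that points at a target and then filtering out renders that break the constraints. The sketch below assumes a coordinate frame with x to the right, y up, and z forward from the eye; the angle limits `MAX_YAW_DEG` and `MAX_PITCH_DEG` are placeholder values, and only the 35% pupil-exposure threshold comes from the slide.

```python
import math

MIN_PUPIL_EXPOSED = 0.35   # from the slide: pupil exposed > 35%
MAX_YAW_DEG = 35.0         # placeholder limit, not from the slide
MAX_PITCH_DEG = 25.0       # placeholder limit, not from the slide


def gaze_angles(eye, target):
    """Yaw and pitch (degrees) of the ray from the eye to the gaze target."""
    dx, dy, dz = (t - e for t, e in zip(target, eye))
    yaw = math.degrees(math.atan2(dx, dz))
    pitch = math.degrees(math.atan2(dy, math.hypot(dx, dz)))
    return yaw, pitch


def should_render(eye, target, pupil_exposed_fraction, visible_to_camera):
    """Keep only cases that honor the three constraints listed on the slide."""
    yaw, pitch = gaze_angles(eye, target)
    return (
        pupil_exposed_fraction > MIN_PUPIL_EXPOSED
        and abs(yaw) <= MAX_YAW_DEG
        and abs(pitch) <= MAX_PITCH_DEG
        and visible_to_camera
    )


eye = (0.0, 0.0, 0.0)
straight_ahead = gaze_angles(eye, (0.0, 0.0, 1.0))
far_right = gaze_angles(eye, (1.0, 0.0, 1.0))
```

In a real pipeline the pupil exposure and camera visibility would come from the renderer; here they are passed in as plain inputs.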

- 44. POST PROCESSING

- 45. POST PROCESSING Domain adaptation is applied to synthetic data so that it closely resembles real data. The following techniques can be used to adapt to the real-world domain: Gaussian filtering/Blurring – This effect imitates focal blur of the camera Noise addition – Imitates sensor noise, environment noise/dust Brightness/contrast correction – To simulate different lighting conditions Scaling – To make up for face distance, bounding box tightness Mirroring – Most of the use cases are agnostic to mirroring; others can use transformed parameters
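Four of the post-processing steps on slide 45 can be sketched in plain Python on a small grayscale image stored as a list of rows. This is an illustration under simplifying assumptions: a fixed 3x3 kernel stands in for Gaussian filtering, all function names are hypothetical, scaling is omitted, and a production pipeline would normally use an image library instead.

```python
import random


def clamp(v):
    return max(0.0, min(255.0, v))


def blur3(img):
    """3x3 Gaussian-like blur (1-2-1 kernel), replicating edge pixels."""
    h, w = len(img), len(img[0])
    k = (1, 2, 1)
    out = [[0.0] * w for _ in range(h)]
    for y in range(h):
        for x in range(w):
            acc = 0.0
            for dy in (-1, 0, 1):
                for dx in (-1, 0, 1):
                    yy = min(max(y + dy, 0), h - 1)
                    xx = min(max(x + dx, 0), w - 1)
                    acc += k[dy + 1] * k[dx + 1] * img[yy][xx]
            out[y][x] = acc / 16.0
    return out


def add_noise(img, sigma, rng):
    """Additive Gaussian noise imitating sensor noise."""
    return [[clamp(p + rng.gauss(0.0, sigma)) for p in row] for row in img]


def brightness_contrast(img, gain, bias):
    """Linear gain/bias to simulate different lighting conditions."""
    return [[clamp(gain * p + bias) for p in row] for row in img]


def mirror(img, landmarks, yaw_deg):
    """Horizontal flip that also transforms the labels, as the slide notes."""
    w = len(img[0])
    flipped = [row[::-1] for row in img]
    new_landmarks = [(w - 1 - x, y) for x, y in landmarks]
    return flipped, new_landmarks, -yaw_deg


image = [[10.0, 50.0, 200.0], [20.0, 60.0, 210.0], [30.0, 70.0, 220.0]]
rng = random.Random(0)  # seeded so runs are repeatable
augmented = add_noise(brightness_contrast(blur3(image), 1.2, 5.0), 2.0, rng)
flipped, flipped_landmarks, flipped_yaw = mirror(image, [(0, 1)], 15.0)
```

Mirroring also swaps the meaning of left and right labels (for example left eye and right eye), which this sketch does not handle.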

- 46. DEPLOYMENT

- 47. DEPLOYMENT Comparing performance on different devices. Chart "Time per frame" (Time (sec) by Device Type): CPU 40, GPU 8, GCF 0.8. Chart "Performance gain" (Gain factor by Device Type): CPU 1, GPU 5, GCF 50. GCF: GPU Compute Farm (e.g. DGX)

- 48. DEPLOYMENT Training a DNN requires images in the order of ~150K and higher.

| Execution | Sec/frame | 150K frames | Comments |
| --- | --- | --- | --- |
| CPU | 40 | 70 days | Consumes entire CPU |
| GPU | 8 | 14 days | 30-60% GPU (~5 sec/frame) |
| GCF (10 GPUs) | 0.8 | 1.4 days | |

Speedup of 5x with a GPU over the CPU, and a further 10x with the GCF (GPU Compute Farm), i.e. 50x over the CPU.
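The day counts and gain factors in the deployment slides follow directly from the per-frame times. A short check, using only the figures from slides 47 and 48 (the names are illustrative):

```python
FRAMES = 150_000
SECONDS_PER_DAY = 86_400
SEC_PER_FRAME = {"CPU": 40.0, "GPU": 8.0, "GCF (10 GPUs)": 0.8}


def days_for(sec_per_frame, frames=FRAMES):
    """Wall-clock days to render `frames` images back to back."""
    return sec_per_frame * frames / SECONDS_PER_DAY


days = {device: days_for(s) for device, s in SEC_PER_FRAME.items()}
gain_vs_cpu = {device: SEC_PER_FRAME["CPU"] / s for device, s in SEC_PER_FRAME.items()}

for device in SEC_PER_FRAME:
    print(f"{device:14s} {days[device]:6.1f} days  {gain_vs_cpu[device]:5.1f}x vs CPU")
```

The results round to the slide's 70, 14, and 1.4 days, and the gain factors are 1x, 5x, and 50x relative to the CPU.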

- 49. Contents: NVIDIA autonomous driving technical sessions and related announcements at GTC 2018 (with supplementary slides) 1. S8666: Deploying Autonomous Vehicles with NVIDIA DRIVE 2. S8531: Deep Learning Infrastructure for Autonomous Vehicles 3. S8294: NVIDIA DRIVE Safety: NVIDIA's Strategies for Enabling Safety in Automotive Platforms 4. S8324: Synthetic Data Generation for an All-in-One Driver Monitoring System 5. ANNOUNCEMENT: NVIDIA and ARM Partner to Bring Deep Learning to Billions of IoT Devices

- 50. NVIDIA AND ARM PARTNER TO BRING DEEP LEARNING TO BILLIONS OF IOT DEVICES NVIDIA Deep Learning Accelerator IP to be Integrated into ARM Project Trillium Platform, Easing Building of Deep Learning IoT Chips GPU Technology Conference — NVIDIA and Arm today announced that they are partnering to bring deep learning inferencing to the billions of mobile, consumer electronics and Internet of Things devices that will enter the global marketplace. Under this partnership, NVIDIA and Arm will integrate the open-source NVIDIA Deep Learning Accelerator (NVDLA) architecture into Arm’s Project Trillium platform for machine learning. The collaboration will make it simple for IoT chip companies to integrate AI into their designs and help put intelligent, affordable products into the hands of billions of consumers worldwide. Tuesday, March 27, 2018

- 51. ANNOUNCING DRIVE XAVIER SAMPLING IN Q1 Most Complex SOC Ever Made | 9 Billion Transistors, 350 mm², 12nFFN | ~8,000 Engineering Years Diversity of Engines Accelerate Entire AV Pipeline | Designed for ASIL-D AV Volta GPU FP32 / FP16 / INT8 Multi Precision 512 CUDA Cores 1.3 CUDA TFLOPS 20 Tensor Core TOPS ISP 1.5 GPIX/s Native Full-range HDR Tile-based Processing PVA 1.6 TOPS Stereo Disparity Optical Flow Image Processing Video Processor 1.2 GPIX/s Encode 1.8 GPIX/s Decode 16 CSI 109 Gbps 1Gbps E & 10Gbps Ethernet 256-Bit LPDDR4 137 GB/s DLA 5 TFLOPS FP16 10 TOPS INT8 Carmel ARM64 CPU 8 Cores 10-wide Superscalar 2700 SpecInt2000 Functional Safety Features Dual Execution Mode Parity & ECC World’s First Autonomous Machine Processor

- 52. NVDLA (NVIDIA DEEP LEARNING ACCELERATOR) Also built into the Xavier SoC; further improves power efficiency. Blocks: Command Interface, Tensor Execution Micro-controller, Memory Interface, Input DMA (Activations and Weights), Unified 512KB Input Buffer (Activations and Weights), Sparse Weight Decompression, Native Winograd Input Transform, MAC Array (2048 Int8 or 1024 Int16 or 1024 FP16), Output Accumulators, Output Post processor (Activation Function, Pooling etc.), Output DMA. Examples of the many advanced techniques expected: weight sparsity is exploited to reduce memory bandwidth; a modern algorithm that minimizes multiplications reduces chip size and power consumption; the other functions are sensible, rational acceleration. Reference NVDLA: http://nvdla.org

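- 53. Why must AI inference reduce arithmetic precision and the number of multiplications? Artem Vasilyev, “CNN Optimization for Embedded Systems and FFT”, CS231n: CNN for Visual Recognition Course, Stanford, 2017. In INT16, a multiplication consumes 13x the power of an addition. In FP16, a multiplication consumes 2.25x the power of an addition. In FP32, a multiplication consumes 4.7x the power of an addition. Needless to say, arithmetic precision should be lowered as far as possible. Reducing the number of multiplications is handled by the cuDNN library and the DLA (Deep Learning Accelerator) architecture.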

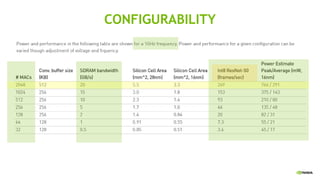
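Slide 52 lists a "Native Winograd Input Transform" among the NVDLA blocks, and slide 53 explains why fewer multiplications matter. As an illustration of how a Winograd transform trades multiplications for additions, the sketch below implements the standard textbook case F(2,3): two outputs of a 3-tap 1D filter with 4 multiplications instead of 6. Whether NVDLA uses this particular tile size is not stated in the slides, so treat the choice as an assumption.

```python
def direct_f23(d, g):
    """Two outputs of a 3-tap filter, computed directly: 6 multiplications."""
    return (
        d[0] * g[0] + d[1] * g[1] + d[2] * g[2],
        d[1] * g[0] + d[2] * g[1] + d[3] * g[2],
    )


def winograd_f23(d, g):
    """Same two outputs via Winograd F(2,3): 4 data multiplications."""
    # Filter transform: depends only on the weights, so it can be
    # computed once per filter and reused for every input tile.
    u0 = g[0]
    u1 = (g[0] + g[1] + g[2]) / 2
    u2 = (g[0] - g[1] + g[2]) / 2
    u3 = g[2]
    # Input transform (additions only), then 4 element-wise multiplications.
    m1 = (d[0] - d[2]) * u0
    m2 = (d[1] + d[2]) * u1
    m3 = (d[2] - d[1]) * u2
    m4 = (d[1] - d[3]) * u3
    # Output transform (additions only).
    return (m1 + m2 + m3, m2 - m3 - m4)


tile = (1.0, 2.0, 3.0, 4.0)       # four consecutive input samples
weights = (1.0, 0.0, -1.0)        # 3-tap filter
reference = direct_f23(tile, weights)
fast = winograd_f23(tile, weights)
```

The 2D variant used for 3x3 convolutions nests this construction in both dimensions, which is where the larger savings come from.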

- 54. CONFIGURABILITY

- 55. XAVIER Sampling: Q4 2017 ▪ 7 Billion Transistors TSMC Custom 12nm FFN Process ▪ 8 Core Custom ARM64 CPU ▪ 512 Core Volta GPU 20TOPS DL ▪ Computer Vision Accelerator CVA • including NVDLA (Deep Learning Accelerator) • 2,048 8-bit MAC x 2, 5TOPS x 2 = 10 TOPS DL • 1,024 FP16 or INT16 MAC x 2 too • Automatically Optimized, Compiled by TensorRT3 Max 30TOPS DL @ 30Watt Reference: Microprocessor Report “Xavier Simplifies Self-Driving Cars” June 19, 2017
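The TOPS figures on slide 55 add up as follows. The sketch assumes the common convention that one multiply-accumulate counts as two operations; under that assumption the quoted 5 TOPS per DLA implies a clock rate, which is derived here and is not a figure stated on the slide.

```python
MACS_PER_DLA = 2048        # 8-bit MACs per DLA (slide 55)
NUM_DLA = 2
DLA_TOPS_EACH = 5.0        # slide 55
GPU_TOPS = 20.0            # 512-core Volta GPU (slide 55)
POWER_WATT = 30.0          # "Max 30TOPS DL @ 30Watt"
OPS_PER_MAC = 2            # assumption: multiply + add counted separately

dla_tops_total = NUM_DLA * DLA_TOPS_EACH
total_tops = GPU_TOPS + dla_tops_total
tops_per_watt = total_tops / POWER_WATT

# Clock rate implied by the MAC count and the per-DLA TOPS (derived, not quoted).
implied_clock_ghz = DLA_TOPS_EACH * 1e12 / (MACS_PER_DLA * OPS_PER_MAC) / 1e9

print(f"DLA total: {dla_tops_total:.0f} TOPS, SoC total: {total_tops:.0f} TOPS")
print(f"Efficiency: {tops_per_watt:.1f} TOPS/W")
print(f"Implied DLA clock: {implied_clock_ghz:.2f} GHz")
```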

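- 56. NEW NVIDIA TENSORRT 3 A programmable optimization environment for inference. TensorRT optimizes and compiles neural networks: trained neural network in, optimized executable code out. Supports all frameworks and optimizes for each target platform. Target platforms shown: DRIVE PX 2, JETSON TX2, NVIDIA DLA (DLA: Deep Learning Accelerator), TESLA P4, TESLA V100. Target categories shown: data center, autonomous driving, supercomputer, embedded IoT, SoC-integrated IoT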

- 58. Toru Murokawa (室河 徹), Solutions Architect (Automotive), NVIDIA. Latest Autonomous Driving Updates Announced at GTC 2018

- 59. SAN JOSE CONFERENCE CENTER

- 64. SJCC ENTRANCE

- 70. EXHIBITION HALL

- 122. SIMULATION

- 138. AUTOMOTIVE SENSORS

- 154. General autonomous driving and algorithms • S81014 - Advancing State-of-the-Art of Autonomous Vehicles and Robotics Research using AWS GPU Instances (Presented by Amazon Web Services) • TRI's use of AWS and an introduction to its development environment • S8140 - Deep Learning for Automated Systems: From the Warehouse to the Road • Clemson Univ.: autonomous driving software development flow • S8862 - Autonomous Algorithms • Panel session: NNAISENSE's AUDI automated parking experiment, fka's use of DNNs for path generation, and more

- 155. Maps and localization • S8834 - In-Vehicle Change Detection, Closing the Loop in the Car • HERE: self-healing maps and an introduction to sensors for fleets • S8861 - Crowd-sourcing, Map updates, and Predictions as Complementary Solutions for Mapping • Panel (explorer.ai, VoxelMaps, DeepMap): crowd-sourced maps, voxel-based maps, open projects, etc. • S8618 - GPU Accelerated LIDAR Based Localization for Automated Driving Applications • FORD: accelerating localization with GPUs

- 156. In-cabin • S8758 - The Future of the In-Car Experience • Affectiva's emotion detection AI, with examples of training datasets and transfer learning results • S8970 - Creating AI-Based Digital Companion for Mercedes-Benz Vehicles • In-vehicle AI implementation at Mercedes-Benz

- 157. www.nvidia.com/dli

![Slide 35: DATA GENERATION PIPELINE, High Resolution 3D Head Scan](https://image.slidesharecdn.com/nvdls1805bajimurokawa-180425032428/85/GTC-2018-35-320.jpg)

![Slide 37: DATA GENERATION PIPELINE, Mesh Deformation](https://image.slidesharecdn.com/nvdls1805bajimurokawa-180425032428/85/GTC-2018-37-320.jpg)

![Slide 38: DATA GENERATION PIPELINE, Landmark annotation](https://image.slidesharecdn.com/nvdls1805bajimurokawa-180425032428/85/GTC-2018-38-320.jpg)

![Slide 43: PARAMETER SETTING, Eye Openness](https://image.slidesharecdn.com/nvdls1805bajimurokawa-180425032428/85/GTC-2018-43-320.jpg)