Leveraging Azure Databricks to minimize time to insight by combining Batch and Stream processing pipelines.

- 6. A fast, easy, and collaborative Apache® Spark™-based analytics platform optimized for Azure, combining the best of Databricks and the best of Microsoft:
  - Designed in collaboration with the founders of Apache Spark.
  - One-click setup and streamlined workflows.
  - Interactive workspace that enables collaboration between data scientists, data engineers, and business analysts.
  - Native integration with Azure services (Power BI, SQL DW, Cosmos DB, Blob Storage, ADF, SQL DB, AAD).
  - Enterprise-grade Azure security (Active Directory integration, compliance, enterprise-grade SLAs of 99.95%).

- 7. A unified, open source, parallel data processing framework for Big Data analytics. The Spark Core Engine runs on YARN, Mesos, or the Standalone Scheduler, and underpins Spark SQL (interactive queries), Spark Structured Streaming (stream processing), Spark MLlib (machine learning), and GraphX (graph computation).

- 8. Spark cluster architecture (diagram): Data Sources (Azure Storage, Cosmos DB, SQL), a Cluster Manager, three Worker Nodes, and a Driver Program holding the SparkContext.

- 12. Three sources of complexity:
  - COMPLEX DATA: diverse data formats (json, avro, binary, …); data can be dirty, late, out-of-order.
  - COMPLEX SYSTEMS: diverse storage systems (Kafka, Azure Storage, Event Hubs, SQL DW, …); system failures.
  - COMPLEX WORKLOADS: combining streaming with interactive queries; machine learning.

- 13. You should not have to reason about streaming.

- 14. You should write simple queries, and Azure Databricks should continuously update the answer.

- 18. Key capabilities:
  - High-level APIs: DataFrames, Datasets, and SQL. Same in streaming and in batch.
  - Event-time processing: native support for working with out-of-order and late data.
  - End-to-end exactly once: transactional both in processing and output.

- 21. Source: `eventsDF = spark.readStream.format("kafka").option("subscribe", "myTopic").option("startingOffsets", "earliest").load()`
  - Specify `readStream`.
  - Specify the format.
  - Specify one or more locations to read data from.
  - Provide a starting offset.
  - Multiple sources can be combined using `union()`.

- 24. The same query expressed with DataFrames and with Spark SQL:
  - DataFrames: `eventsDF.where("event_type = 'view'").join(spark.table("campaigns"), "ad_id").groupBy('campaign_id).count()`
  - Spark SQL: `SELECT campaign_id, count(*) FROM events JOIN campaigns ON (campaigns.ad_id = events.ad_id) WHERE event_type = 'view' GROUP BY campaign_id`

- 26. Generate email alert, e.g. trigger an alert when clicks > 9999. Latency: ~100 ms. `eventsDF.filter("clicks > 9999").writeStream.foreach(new EmailAlert()).start()`

- 29. Forward filtered and augmented events back to Kafka. Latency: ~100 ms average. Filter with `filteredEvents.where("eventType = 'view'")`, use `to_json()` to convert the columns back into a JSON string (named `filteredLogs` on the slide), and then save to a different Kafka topic (`"topic", "views"`).

- 30. ETL: store the augmented stream as efficient columnar data for later processing. Latency: ~1 minute. `.repartition(1)` and `.trigger(Trigger.ProcessingTime("1 minute"))` buffer data and write one large file every minute for efficient reads.

- 33. Ad-hoc analysis: troubleshoot problems as they occur with the latest information. Latency: ~1 minute. A query on ``parquet.`/data/events` `` reads the latest data when it is executed.

- 34. Delta is a new table format that solves problems with Parquet. Delta brings data reliability and performance optimizations to the cloud data lake.
  - Improves performance by organizing data and creating metadata:
    - Manages optimal file sizes.
    - Stores statistics that enable data skipping.
    - Creates and maintains indexes.
  - Improves data reliability by adding data management capabilities:
    - Transactional guarantees simplify data pipelines.
    - Schema enforcement ensures data is not corrupted.
    - Upserts make fixing bad data simple.

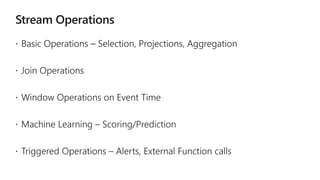

- 41. A unified system for end-to-end fault-tolerant, exactly-once stateful stream processing:
  - Unifies streaming, interactive, and batch queries: a single API for both static bounded data and streaming unbounded data.
  - Runs on Spark SQL. Uses the Spark SQL Dataset/DataFrame API used for batch processing of static data.
  - Runs incrementally and continuously, and updates the results as data streams in.
  - Supports app development in Scala, Java, Python, and R.
  - Supports streaming aggregations, event-time windows, windowed grouped aggregation, and stream-to-batch joins.
  - Features streaming deduplication, multiple output modes, and APIs for managing and monitoring streaming queries.
  - Built-in sources: Kafka, File source (json, csv, text, parquet).

- 42. Source. `spark.readStream.format("kafka").option("subscribe", "input").load().groupBy('value.cast("string") as 'key).agg(count("*") as 'value).writeStream.format("kafka").option("topic", "output").trigger(Trigger.ProcessingTime("1 minute")).outputMode(OutputMode.Complete()).option("checkpointLocation", "…").start()`
  - Specify one or more locations to read data from.
  - Built-in support for Files/Kafka/Socket; pluggable.
  - Can include multiple sources of different types using `union()`.

- 43. Transformation. `spark.readStream.format("kafka").option("subscribe", "input").load().groupBy('value.cast("string") as 'key).agg(count("*") as 'value).writeStream.format("kafka").option("topic", "output").trigger(Trigger.ProcessingTime("1 minute")).outputMode(OutputMode.Complete()).option("checkpointLocation", "…").start()`
  - Uses DataFrames, Datasets, and/or SQL.
  - Catalyst figures out how to execute the transformation incrementally.
  - Internal processing is always exactly-once.

- 44. From DataFrames, Datasets, and SQL to incremental execution:
  - Query: `input = spark.readStream.format("kafka").option("subscribe", "topic").load()`, then `result = input.select("device", "signal").where("signal > 15")`, then `result.writeStream.format("parquet").start("dest-path")`
  - Logical plan: read from Kafka, project device and signal, filter signal > 15, write to Kafka.
  - Optimized physical plan: Kafka source, optimized operator (codegen, off-heap, etc.), Kafka sink.
  - Series of incremental execution plans: process new data at t = 1, t = 2, t = 3.

- 45. Sink. `spark.readStream.format("kafka").option("subscribe", "input").load().groupBy('value.cast("string") as 'key).agg(count("*") as 'value).writeStream.format("kafka").option("topic", "output").trigger(Trigger.ProcessingTime("1 minute")).outputMode(OutputMode.Complete()).option("checkpointLocation", "…").start()`
  - Accepts the output of each batch.
  - Where supported, sinks are transactional and exactly-once (e.g. Files).
  - Use `foreach` to execute arbitrary code.

- 46. Output mode and trigger. `spark.readStream.format("kafka").option("subscribe", "input").load().groupBy('value.cast("string") as 'key).agg(count("*") as 'value).writeStream.format("kafka").option("topic", "output").trigger(Trigger.ProcessingTime("1 minute")).outputMode("update").option("checkpointLocation", "…").start()`
  - Output mode (what is output): Complete outputs the whole answer every time; Update outputs changed rows; Append outputs new rows only.
  - Trigger (when to output): specified as a time, eventually supports data size; no trigger means as fast as possible.
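The difference between the Complete and Update output modes can be illustrated without Spark. The following is a minimal plain-Python sketch, not Spark code: `emit_outputs` is a hypothetical helper that keeps a running count per key and returns what each trigger would emit. Append mode is left out of the sketch because it behaves differently for aggregations.

```python
from collections import Counter


def emit_outputs(batches, mode):
    """Keep a running count per key and return what each trigger would emit."""
    if mode not in ("complete", "update"):
        raise ValueError("this sketch only models 'complete' and 'update'")
    totals = Counter()
    outputs = []
    for batch in batches:
        totals.update(batch)  # fold the new records into the running counts
        if mode == "complete":
            # Complete: the whole answer, every time.
            outputs.append(dict(totals))
        else:
            # Update: only the rows whose value changed in this trigger.
            outputs.append({key: totals[key] for key in set(batch)})
    return outputs


if __name__ == "__main__":
    batches = [["a", "b", "a"], ["b", "c"]]
    print(emit_outputs(batches, "complete"))
    print(emit_outputs(batches, "update"))
```

In the second trigger, Complete re-emits the count for `a` even though it did not change, while Update emits only `b` and `c`.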

- 47. Checkpoint. `spark.readStream.format("kafka").option("subscribe", "input").load().groupBy('value.cast("string") as 'key).agg(count("*") as 'value).writeStream.format("kafka").option("topic", "output").trigger(Trigger.ProcessingTime("1 minute")).outputMode("update").option("checkpointLocation", "…").start()`
  - Tracks the progress of a query in persistent storage.
  - Can be used to restart the query if there is a failure.

- 48. Checkpointing tracks the progress (offsets) of consuming data from the source, plus intermediate state.
  - Offsets and metadata are saved as JSON in a write-ahead log as each batch of new data is processed (t = 1, t = 2, t = 3).
  - The query can resume after you change your streaming transformations.
  - Provides end-to-end exactly-once guarantees.
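The idea of tracking offsets in persistent storage and resuming after a stop can be sketched in plain Python. This is a deliberately simplified illustration, not Spark's checkpoint layout: `run_query`, the `offsets.json` file name, and the `max_batches` parameter (used to simulate an early stop) are all hypothetical.

```python
import json
import os
import tempfile


def run_query(records, checkpoint_path, batch_size=2, max_batches=None):
    """Process records in micro-batches, persisting the next offset as JSON."""
    offset = 0
    if os.path.exists(checkpoint_path):
        with open(checkpoint_path) as f:
            offset = json.load(f)["offset"]  # resume where the last run stopped
    processed = []
    batches_done = 0
    while offset < len(records):
        if max_batches is not None and batches_done >= max_batches:
            break  # simulate the query stopping (or failing) early
        batch = records[offset:offset + batch_size]
        processed.extend(batch)
        offset += len(batch)
        with open(checkpoint_path, "w") as f:
            json.dump({"offset": offset}, f)  # record progress after each batch
        batches_done += 1
    return processed


if __name__ == "__main__":
    path = os.path.join(tempfile.mkdtemp(), "offsets.json")
    data = ["a", "b", "c", "d", "e"]
    print(run_query(data, path, max_batches=1))  # first run stops after one batch
    print(run_query(data, path))                 # restart picks up the rest
```

The second call reads the saved offset and continues from the third record instead of reprocessing the first batch.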

- 49. Specify options to configure the Kafka source. `val rawData = spark.readStream.format("kafka").option("kafka.bootstrap.servers", ...).option("subscribe", "topic").load()`
  - How? `kafka.bootstrap.servers` => `broker1,broker2`
  - What? `subscribe` => `topic1,topic2,topic3` (fixed list of topics); `subscribePattern` => `topic*` (dynamic list of topics); `assign` => `{"topicA":[0,1]}` (specific partitions)
  - Where? `startingOffsets` => `latest` (default) / `earliest` / `{"topicA":{"0":23,"1":345}}`

- 50. The `rawData` DataFrame has the following columns. `val rawData = spark.readStream.format("kafka").option("kafka.bootstrap.servers", ...).option("subscribe", "topic").load()`

  | key | value | topic | partition | offset | timestamp |
  | --- | --- | --- | --- | --- | --- |
  | [binary] | [binary] | "topicA" | 0 | 345 | 1486087873 |
  | [binary] | [binary] | "topicB" | 3 | 2890 | 1486086721 |

- 51. Cast the binary value to a string and name the column `json`. `val parsedData = rawData.selectExpr("cast (value as string) as json").select(from_json(col("json"), schema).as("data")).select("data.*")`

- 52. Cast the binary value to a string and name the column `json`; then parse the JSON string and expand it into nested columns, named `data`. `val parsedData = rawData.selectExpr("cast (value as string) as json").select(from_json(col("json"), schema).as("data")).select("data.*")`
  - Input column `json`: `{ "timestamp": 1486087873, "device": "devA", …}`, `{ "timestamp": 1486082418, "device": "devX", …}`
  - Output column `data` (nested), produced by `from_json` on `json`: fields `timestamp`, `device`, …, with rows (1486087873, devA, …) and (1486086721, devX, …).

- 53. Transforming Data: cast the binary value to a string and name the column `json`; parse the JSON string and expand it into nested columns, named `data`; then flatten the nested columns. `val parsedData = rawData.selectExpr("cast (value as string) as json").select(from_json(col("json"), schema).as("data")).select("data.*")`
  - Before: `data` (nested) with fields `timestamp`, `device`, …, and rows (1486087873, devA, …) and (1486086721, devX, …).
  - After `select("data.*")` (not nested): top-level columns `timestamp`, `device`, …, with the same rows.

- 54. Transforming Data: cast the binary value to a string and name the column `json`; parse the JSON string and expand it into nested columns, named `data`; then flatten the nested columns. `val parsedData = rawData.selectExpr("cast (value as string) as json").select(from_json(col("json"), schema).as("data")).select("data.*")` Powerful built-in APIs perform complex data transformations: `from_json`, `to_json`, `explode`, ... 100s of functions (see our blog post).
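The three steps above (cast the binary value to a string, parse the JSON, flatten to top-level columns) can be mimicked on a single record in plain Python. This is only an analogy for what the Spark query does per row: `parse_record` and the `fields` list standing in for `schema` are hypothetical, and the `signal` field in the sample record is made up for illustration.

```python
import json


def parse_record(value, fields):
    """Decode a binary Kafka-style value, parse it as JSON, and flatten it."""
    text = value.decode("utf-8")  # cast the binary value to a string
    data = json.loads(text)       # parse the JSON string into a nested structure
    # Keep one top-level column per requested field; absent fields become None.
    return {name: data.get(name) for name in fields}


if __name__ == "__main__":
    raw = b'{"timestamp": 1486087873, "device": "devA", "signal": 20}'
    print(parse_record(raw, ["timestamp", "device"]))
```

Fields that are not listed are dropped, and listed fields that are missing from the record come back as `None`.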

- 55. Writing to Parquet: save the parsed data as a Parquet table in the given path. Partition files by date so that future queries on time slices of the data are fast, e.g. a query on the last 48 hours of data. `val query = parsedData.writeStream.option("checkpointLocation", ...).partitionBy("date").format("parquet").start("/parquetTable")`

- 56. Checkpointing: enable checkpointing by setting the checkpoint location to save offset logs. `start` actually starts a continuously running StreamingQuery in the Spark cluster. `val query = parsedData.writeStream.option("checkpointLocation", ...).format("parquet").partitionBy("date").start("/parquetTable/")`

- 57. Streaming Query: `query` is a handle to the continuously running StreamingQuery, which processes new data at t = 1, t = 2, t = 3. It is used to monitor and manage the execution. `val query = parsedData.writeStream.option("checkpointLocation", ...).format("parquet").partitionBy("date").start("/parquetTable/")`

- 58. Data Consistency on Ad-hoc Queries: data is available for complex, ad-hoc analytics on the latest data within seconds.
  - The Parquet table is updated atomically, which ensures prefix integrity.
  - Even when distributed, ad-hoc queries will see either all updates from the streaming query or none. Read more in our blog: https://databricks.com/blog/2016/07/28/structured-streaming-in-apache-spark.html

- 59. More Kafka Support [Spark 2.2]
  - Write out to Kafka: the DataFrame must have binary fields named `key` and `value`. `result.writeStream.format("kafka").option("topic", "output").start()`
  - Direct, interactive, and batch queries on Kafka make Kafka even more powerful as a storage platform! `val df = spark.read.format("kafka").option("subscribe", "topic").load()` (note `read`, not `readStream`), then `df.createOrReplaceTempView("topicData")` and `spark.sql("select value from topicData")`

- 61. Event Time
  - Many use cases require aggregate statistics by event time, e.g. what is the number of errors in each system in 1-hour windows?
  - Many challenges: extracting event time from data, and handling late, out-of-order data.
  - DStream APIs were insufficient for event-time processing.

- 62. Event-time Aggregations: windowing is just another type of grouping in Structured Streaming. Supports UDAFs!
  - Number of records every hour: `parsedData.groupBy(window(col("timestamp"), "1 hour")).count()`
  - Average signal strength of each device every 10 minutes: `parsedData.groupBy(col("device"), window(col("timestamp"), "10 minutes")).avg("signal")`
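Grouping by a tumbling window amounts to mapping each event timestamp to the start of the window that contains it and then grouping on that value. The plain-Python sketch below shows the idea for epoch-second timestamps; `window_start` and `count_by_window` are hypothetical helpers, and windows here are aligned to multiples of the width, which is an assumption of the sketch.

```python
from collections import Counter


def window_start(timestamp, width):
    """Return the start of the tumbling window that contains `timestamp`."""
    return timestamp - timestamp % width


def count_by_window(timestamps, width):
    """Count records per tumbling window, keyed by window start."""
    return dict(Counter(window_start(ts, width) for ts in timestamps))


if __name__ == "__main__":
    one_hour = 3600
    events = [0, 10, 3599, 3600, 7300]  # event times in seconds
    print(count_by_window(events, one_hour))
```

Grouping by device as well would simply use a `(device, window_start)` pair as the key.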

- 63. Stateful Processing for Aggregations
  - Aggregates have to be saved as distributed state between triggers.
  - Each trigger reads the previous state and writes the updated state.
  - State is stored in memory, backed by a write-ahead log in HDFS/S3; state updates are written to the log for checkpointing.
  - Fault-tolerant, exactly-once guarantee!

- 64. Automatically handles Late Data. Keeping state allows late data to update the counts of old windows (red on the slide marks state updated with late data), but the size of the state increases indefinitely if old windows are not dropped. Window counts at the five successive triggers (13:00 through 17:00), in the order extracted from the slide:
  - 12:00 - 13:00: 1
  - 12:00 - 13:00: 3; 13:00 - 14:00: 1
  - 12:00 - 13:00: 3; 13:00 - 14:00: 2; 14:00 - 15:00: 5
  - 12:00 - 13:00: 5; 13:00 - 14:00: 2; 14:00 - 15:00: 5; 15:00 - 16:00: 4
  - 12:00 - 13:00: 3; 13:00 - 14:00: 2; 14:00 - 15:00: 6; 15:00 - 16:00: 4; 16:00 - 17:00: 3

- 65. Watermarking
  - The watermark is a moving threshold of how late data is expected to be, and of when to drop old state.
  - It trails behind the max seen event time; data older than the watermark is not expected.
  - The trailing gap is configurable, e.g. with a max event time of 12:30 PM and a trailing gap of 10 mins, the watermark is 12:20 PM.

- 66. Watermarking
  - Data newer than the watermark may be late, but is allowed to aggregate.
  - Data older than the watermark is "too late" and dropped.
  - Windows older than the watermark are automatically deleted to limit the amount of intermediate state.

- 67. Watermarking with an allowed lateness of 10 mins: `parsedData.withWatermark("timestamp", "10 minutes").groupBy(window(col("timestamp"), "5 minutes")).count()`
  - Late data newer than the watermark is allowed to aggregate; data that is too late is dropped.
  - Useful only in stateful operations (streaming aggs, `dropDuplicates`, `mapGroupsWithState`, ...).
  - Ignored in non-stateful streaming queries and batch queries.

- 68. Watermarking example (plot of event time against processing time, with triggers at 12:00, 12:05, 12:10, 12:15). `parsedData.withWatermark("timestamp", "10 minutes").groupBy(window(col("timestamp"), "5 minutes")).count()`
  - The system tracks the max observed event time (12:14 in the example).
  - The watermark is updated to 12:14 - 10m = 12:04 for the next trigger, and state < 12:04 is deleted.
  - Data that is late but newer than the watermark is still considered in the counts.
  - Data that is too late is ignored in the counts, and its state is dropped.
  - More details in my blog post.
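The watermark rule described above (track the max event time, subtract the allowed lateness, apply the result from the next trigger) can be simulated in plain Python. This is a simplified sketch and not Spark's implementation: `run_windowed_count` is a hypothetical helper, event times are given as minutes since midnight, and the sketch drops every event older than the watermark, whereas Spark only guarantees that data within the threshold is kept.

```python
def run_windowed_count(triggers, delay, width):
    """Count events per tumbling window while applying a trailing watermark."""
    counts = {}       # open windows: window start -> count
    dropped = []      # events rejected as too late
    max_event = None  # max event time observed so far
    watermark = None  # no watermark until the first trigger completes
    for batch in triggers:
        for ts in batch:
            if watermark is not None and ts < watermark:
                dropped.append(ts)  # older than the watermark: too late
                continue
            start = ts - ts % width
            counts[start] = counts.get(start, 0) + 1
            max_event = ts if max_event is None else max(max_event, ts)
        if max_event is not None:
            watermark = max_event - delay  # applies from the next trigger
            for start in [s for s in counts if s + width <= watermark]:
                del counts[start]  # window can no longer change; free its state
    return counts, dropped, watermark


if __name__ == "__main__":
    # 12:07, 12:08, 12:14 arrive first; then 12:09 (late), 12:03 (too late), 12:18.
    triggers = [[727, 728, 734], [729, 723, 738]]
    print(run_windowed_count(triggers, delay=10, width=5))
```

After the first trigger the max event time is 12:14 (734), so the watermark for the second trigger is 12:04 (724): the 12:09 event still updates its window, while the 12:03 event is dropped.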

- 69. Clean separation of concerns: query semantics are separated from processing details. `parsedData.withWatermark("timestamp", "10 minutes").groupBy(window(col("timestamp"), "5 minutes")).count().writeStream.trigger(Trigger.ProcessingTime("10 seconds")).start()`

- 70. Clean separation of concerns. `parsedData.withWatermark("timestamp", "10 minutes").groupBy(window(col("timestamp"), "5 minutes")).count().writeStream.trigger(Trigger.ProcessingTime("10 seconds")).start()`
  - Query semantics: how to group data by time? (same for batch and streaming)
  - Processing details.

- 71. Clean separation of concerns. `parsedData.withWatermark("timestamp", "10 minutes").groupBy(window(col("timestamp"), "5 minutes")).count().writeStream.trigger(Trigger.ProcessingTime("10 seconds")).start()`
  - Query semantics: how to group data by time? (same for batch and streaming)
  - Processing details: how late can data be?

- 72. Clean separation of concerns. `parsedData.withWatermark("timestamp", "10 minutes").groupBy(window(col("timestamp"), "5 minutes")).count().writeStream.trigger(Trigger.ProcessingTime("10 seconds")).start()`
  - Query semantics: how to group data by time? (same for batch and streaming)
  - Processing details: how late can data be? How often to emit updates?

- 73. Arbitrary Stateful Operations [Spark 2.2]
  - `mapGroupsWithState` applies any user-defined stateful function to a user-defined state.
  - Direct support for per-key timeouts in event time or processing time.
  - Supports Scala and Java.
  - Usage: `ds.groupByKey(_.id).mapGroupsWithState(timeoutConf)(mappingWithStateFunc)`
  - Function shape: `def mappingWithStateFunc(key: K, values: Iterator[V], state: GroupState[S]): U = { /* update or remove state; set timeouts; return mapped value */ }`
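The contract of a per-key stateful function (receive the key, the new values, and the previous state; return a mapped value and update or remove the state) can be sketched in plain Python. This is only an analogy for the Scala API above: `map_groups_with_state` and `running_count` are hypothetical names, the state is passed and returned as a plain value rather than a `GroupState` handle, and timeouts are not modeled.

```python
def map_groups_with_state(batches, key_fn, func):
    """Apply `func` once per key per batch, carrying per-key state across batches."""
    state = {}
    results = []
    for batch in batches:
        groups = {}
        for row in batch:
            groups.setdefault(key_fn(row), []).append(row)  # group rows by key
        for key, values in groups.items():
            new_state, mapped = func(key, values, state.get(key))
            if new_state is None:
                state.pop(key, None)  # returning None removes the state
            else:
                state[key] = new_state
            results.append(mapped)
    return results, state


def running_count(key, values, old_count):
    """Example stateful function: total number of rows seen per key."""
    new_count = (old_count or 0) + len(values)
    return new_count, (key, new_count)


if __name__ == "__main__":
    batches = [[{"id": "a"}, {"id": "b"}, {"id": "a"}], [{"id": "a"}]]
    print(map_groups_with_state(batches, lambda row: row["id"], running_count))
```

The second batch sees the count for `a` carried over from the first, so it reports 3 rather than 1.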

- 74. Other interesting operations
  - Streaming deduplication, with watermarks to limit state: `parsedData.dropDuplicates("eventId")`
  - Stream-batch joins: `val batchData = spark.read.format("parquet").load("/additional-data")`, then `parsedData.join(batchData, "device")`
  - Stream-stream joins: can use `mapGroupsWithState`; direct support coming soon!



