Measuring massive multitask language understanding

- 1. Measuring Massive Multitask Language Understanding San Kim 2021.04.14 ChDan Hendrycks(1), Collin Burns(2), Steven Basart(3), Andy Zou(1), Mantas Mazeika(4), Dawn Song(1), Jacob Steinhardt(1) 1. UC Berkeley 2. Columbia University 3. Uchicago 4. UIUC

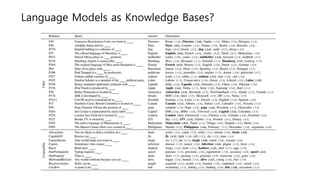

- 2. Language Models as Knowledge Bases? FAIR, UCL 1. Without fine-tuning, BERT contains relational knowledge competitive with traditional NLP methods that have some access to oracle knowledge. 2. BERT also does remarkably well on open-domain question answering against a supervised baseline 3. Factual knowledge can be recovered surprisingly well from pretrained language models, however, for some relations (particularly N-to-M relations) performance is very poor 4. BERT-large consistently outperforms other language models in recovering factual and commonsense knowledge while at the same time being more robust to the phrasing of a query

- 3. Language Models as Knowledge Bases?

- 4. Language Models are Unsupervised Multitask Learners OpenAI

- 5. Language Models are Unsupervised Multitask Learners

- 6. GPT3 there is still a need for task-specific datasets and task-specific fine-tuning: to achieve strong performance on a desired task typically requires fine-tuning on a dataset of thousands to hundreds of thousands of examples specific to that task. • the need for a large dataset of labeled examples for every new task limits the applicability of language models. • the potential to exploit spurious correlations in training data fundamentally grows with the expressiveness of the model and the narrowness of the training distribution. • humans do not require large supervised datasets to learn most language tasks - a brief directive in natural language or at most a tiny number of demonstrations is often sufficient to enable a human to perform a new task Pre-trained transformer language models Motivation OpenAI

- 7. GPT3

- 8. GPT3

- 9. GPT3

- 10. T5 Google

- 12. UnifiedQA

- 13. UnifiedQA

- 14. Measuring Massive Multitask Language Understanding Linguistics Commonsense

- 15. Measuring Massive Multitask Language Understanding

- 16. Measuring Massive Multitask Language Understanding Transformer models have driven this recent progress by pretraining on massive text corpora, including all of Wikipedia, thousands of books, and numerous websites. These models consequently see extensive information about specialized topics, most of which is not assessed by existing NLP benchmarks. a new benchmark for assessing models across a diverse set of subjects that humans learn. • zero-shot and few-shot settings • 57 subjects across STEM, the humanities, the social sciences, and more • difficulty from an elementary level to an advanced professional level • it tests both world knowledge and problem solving ability • Mathmatics, history, law, ethics … • The granularity and breadth of the subjects makes the benchmark ideal for identifying a model’s blind spots.

- 17. Measuring Massive Multitask Language Understanding Recent models learn enough information from pretraining that they can serve as knowledge bases. However, no prior work has comprehensively measured the knowledge models have across many real-world domains. Humanities • Law, philosophy, history, … • Legal understanding: how to apply rules and standard to complex scenarios. Understanding following rules and regulations (a necessary capability to constrain open-world machine) • Philosophy: logical fallacies, formal logic, famous philosophical arguments • Ethics: test a model’s understanding of normative statements through predicting widespread moral intuitions • History: covers a wide range of time periods and geographical location, including prehistory and other advanced subjects

- 18. Measuring Massive Multitask Language Understanding Social Science • Economics, sociology, politics, geography, psychology, … • Economics: microeconomics, macroeconomics, econometrics, cover different types of problems – require a mixture of world knowledge, qualitative reasoning, or quantitative reasoning STEM • Physics, computer science, mathematics, … • Conceptual physics (harder version of the physical commonsense benchmark Physical IQA) • College mathematics questions (like those found on the GRE mathematics) (LaTeX) • STEM subjects require knowledge of empirical methods, fluid intelligence, and procedural knowledge Others • Professional Medicine task, finance, accounting, marketing, knowledge of global facts – (e.g. statistics about poverty in different countries over time)

- 19. Measuring Massive Multitask Language Understanding

- 20. Measuring Massive Multitask Language Understanding

- 21. Measuring Massive Multitask Language Understanding

- 22. Measuring Massive Multitask Language Understanding GPT-3 (few-shot, zero-shot setting) UnifiedQA (without any further tuning to assess its transfer accuracy) RoBERTa-base, ALBERT-xxlarge, GPT-2 (fine-tuned on UnifiedQA training data and dev+val set.)

- 23. Measuring Massive Multitask Language Understanding

- 24. Measuring Massive Multitask Language Understanding Since language models train on vast text corpora, there is some chance that they have seen the exact question and answer during pretraining. If they memorized the exact question and answer, then they would attain higher accuracy than their true ability. Likewise, a question’s entropy would be especially low if it were memorized. We also note that most of our questions came from PDFs or websites where questions and answers are on separate pages. This suggests that our exact questions were not memorized. However, during pretraining models encountered text related to our questions through processing Wikipedia. We also note that most of our questions came from PDFs or websites where questions and answers are on separate pages.

- 25. Measuring Massive Multitask Language Understanding

- 26. Measuring Massive Multitask Language Understanding • Poorly on highly procedural problems. • Calculation-heavy STEM subjects tend to have low accuracy compared to verbal subjects • For GPT-3, 9 out of the 10 lowest-accuracy tasks are STEM subjects. (poor performance on Elementary Mathematics and many other STEM subjects with “plug and chug” problems. The tasks with near-random accuracy include calculation-heavy subjects such as physics and mathematics and subjects related to human values such as law and morality. Worryingly, we also find that GPT-3 does not have an accurate sense of what it does or does not know since its average confidence can be up to 24% off from its actual accuracy. • Multimodal Understanding: While text is capable of conveying an enormous number of concepts about the world, many important concepts are conveyed mainly through other modalities, such as images, audio, and physical interaction. … One such benchmark could be a “Turk Test,” consisting of Amazon Mechanical Turk Human Intelligence Tasks. • The Internet as a Training Set: A major distinction between our benchmark and previous multitask NLP benchmarks is that we do not require large training sets. Instead, we assume that models have acquired the requisite knowledge from reading vast quantities of diverse text from the internet.

- 27. Measuring Massive Multitask Language Understanding • Model Limitation: Models do not match expert-level performance (90%) on any subject, so for all subjects it is subhuman. On average, models are only now starting to move beyond random-chance accuracy levels. Addressing these shortcomings may be challenging. To illustrate this, we attempted to create a better Professional Law model by pretraining on specialized data but achieved only limited success. We collected approximately 2,000 additional Professional Law training examples. After fine-tuning a RoBERTa-base model (Liu et al., 2019) using this custom training set, our model attained 32.8% test accuracy. To test the impact of additional specialized training data, we also had RoBERTa continue pretraining on approximately 1.6 million legal case summaries using Harvard’s Law Library case law corpus case.law, but after fine-tuning it only attained 36.1% accuracy. This suggests that while additional pretraining on relevant high quality text can help, it may not be enough to substantially increase the performance of current models. It is unclear whether simply scaling up existing language models will solve the test. Current understanding indicates that a 10× increase in model size must be accompanied by an approximate 5× increase in data (Kaplan et al., 2020). Aside from the tremendous expense in creating multi-trillion parameter language models, data may also become a bottleneck, as there is far less written about esoteric branches of knowledge than about everyday situations.

- 28. Measuring Massive Multitask Language Understanding

- 29. Measuring Massive Multitask Language Understanding