OOP 2014 - Lifecycle By Design

- 1. LIFECYCLE BY DESIGN: Enabling Collaboration across Development, Test and Production Teams • Wolfgang Gottesheim • COMPANY CONFIDENTIAL – DO NOT DISTRIBUTE

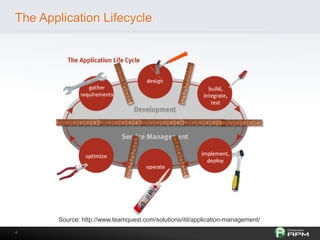

- 3. Application Lifecycle Management: Application lifecycle management (ALM) is the product lifecycle management (governance, development, and maintenance) of application software. It encompasses requirements management, software architecture, computer programming, software testing, software maintenance, change management, project management, and release management. Source: http://en.wikipedia.org/wiki/Application_lifecycle_management, accessed Dec 16, 2013

- 4. The Application Lifecycle. Source: http://www.teamquest.com/solutions/itil/application-management/

- 5. Project deliverables: what you set out to build… …and what you get

- 6. What we tried to do… • Update our Customer Self-Service Portal

- 7. Our (Business) Requirements • “Provide a modern user experience” – aka “Get the Community from Web 1.0 to Web 2.0” – New features: AJAXified editors, popups when someone else is editing the same page, notifications about new comments, auto refresh in the background, …

- 8. What went wrong? Our mistakes: – We left PERFORMANCE out – We thought about new features instead of thinking about the user experience

- 10. Design • We wanted to continue using Confluence – 3rd-party software, so major design decisions are made elsewhere – Extended by custom plugins, so we have to ensure compatibility • Our mistake: assuming that resource usage would remain stable in the new version

- 11. Build/Integrate/Test • Testing was focused on FUNCTIONALITY – “Does the site work as expected?” – Tests ran in a small-scale staging environment, so some findings were disregarded because of environmental differences • Our mistake: we didn’t test from the end-user perspective in a realistic environment

- 12. Deploy • Actual rollout was documented and well-tested in the staging environment • Also part of the deployment phase: user training – We thought the new features were so obvious that people would find them • Our mistake: we didn’t make sure that features were accessible

- 13. Operate • Mistakes we made earlier added up… – Slow response times – High resource usage (CPU, memory) – Timeouts – Crashes – User complaints • Blame it on IT?

- 14. What problems did we see?

- 16. Compatibility issues with custom plugins

- 17. Requesting too much data from the DB

- 18. Oversized Pages • 17 JS files – 1.7 MB in size! • Useless information! Might even be a security risk!

- 19. Caches • 62 resources not cached! • 49 resources with short expiration!

- 22. Optimize • Monitor the impact of new features on server-side performance and end-user experience • Monitor actual usage and consider the tradeoff between performance and features • Our success: after rollout, we were able to identify the bottlenecks and make the necessary changes

- 24. Very “expensive” to work on these issues. YES, we know this, BUT ~80% of problems are caused by ~20% of patterns

- 25. Lots of problems that could have been avoided • BUT WHY are we still dragging them along across the lifecycle, up into production?

- 26. Requirements EXCLUDED from Stories / Tasks: Performance • Scalability • Testability • Deployability

- 27. Test and Ops EXCLUDED from the Agile Process: Stand-Ups • Sharing • Tools • Feedback

- 29. Measurement: Define KPIs accepted by all teams • Perf Test • Code Coverage • # of SQL Executions • Response Times • MBs / User • # of Log Lines • Time for Deployment • Time for Rollback

- 30. DevOps Automation in Action: Let's look behind the scenes • Most problems can be identified in CI

Test framework results (Build #, Test Case, Status) alongside architectural data (# SQL, # Excep, CPU):

| Build # | Test Case | Status | # SQL | # Excep | CPU |
|---|---|---|---|---|---|
| Build 17 | testPurchase | OK | 12 | 0 | 120ms |
| Build 17 | testSearch | OK | 3 | 1 | 68ms |
| Build 18 | testPurchase | FAILED | 12 | 5 | 60ms |
| Build 18 | testSearch | OK | 3 | 1 | 68ms |
| Build 19 | testPurchase | OK | 75 | 0 | 230ms |
| Build 19 | testSearch | OK | 3 | 1 | 68ms |
| Build 20 | testPurchase | OK | 12 | 0 | 120ms |
| Build 20 | testSearch | OK | 3 | 1 | 68ms |

• Build 18: We identified a regression; exceptions are probably the reason for the failed tests • Build 19: Problem fixed, but now we have an architectural regression • Build 20: Problem solved; now we have both functional and architectural confidence
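The build-over-build comparison on this slide can be automated. The following is a minimal Python sketch, not the dynaTrace implementation: it uses the numbers from the slide's table, while the `BuildResult` record, the `find_regressions` helper, and the 50% tolerance are hypothetical choices for illustration.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class BuildResult:
    build: int
    test: str
    passed: bool      # functional result from the testing framework
    sql_count: int    # architectural data captured behind the scenes
    exceptions: int
    cpu_ms: int


# Numbers transcribed from the table on slide 30.
RUNS = [
    BuildResult(17, "testPurchase", True, 12, 0, 120),
    BuildResult(17, "testSearch", True, 3, 1, 68),
    BuildResult(18, "testPurchase", False, 12, 5, 60),
    BuildResult(18, "testSearch", True, 3, 1, 68),
    BuildResult(19, "testPurchase", True, 75, 0, 230),
    BuildResult(19, "testSearch", True, 3, 1, 68),
    BuildResult(20, "testPurchase", True, 12, 0, 120),
    BuildResult(20, "testSearch", True, 3, 1, 68),
]

METRICS = ("sql_count", "exceptions", "cpu_ms")


def find_regressions(runs, tolerance=0.5):
    """Flag functional failures and metrics that grew by more than
    `tolerance` (50% by default) compared with the previous build."""
    by_build = {}
    for run in runs:
        by_build.setdefault(run.build, {})[run.test] = run
    builds = sorted(by_build)

    findings = []
    for prev, curr in zip(builds, builds[1:]):
        for test, run in by_build[curr].items():
            if not run.passed:
                findings.append((curr, test, "functional"))
            before = by_build[prev].get(test)
            if before is None:
                continue  # new test case, nothing to compare against
            for metric in METRICS:
                old, new = getattr(before, metric), getattr(run, metric)
                if new > old * (1 + tolerance):
                    findings.append((curr, test, metric))
    return findings


for finding in find_regressions(RUNS):
    print(finding)
```

On the slide's data this flags Build 18 (functional failure plus new exceptions) and Build 19 (the jump in SQL count and CPU), while Build 20 comes out clean. Comparing each build only with its predecessor mirrors the slide; a team might instead prefer a fixed, approved baseline so that an accepted regression does not silently become the new normal.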

- 31. Performance Focus in Test Automation: Analyzing all unit / performance tests • Analyzing metrics such as DB exec count • Jump in DB calls from one build to the next

- 32. Performance Focus in Test Automation: Cross impact of KPIs

- 33. Performance Focus in Test Automation: Embed your architectural results in Jenkins

- 34. Performance Focus in Test Automation: Compare the build that shows BAD behavior with the build that shows GOOD behavior! Here is the difference!

- 35. Performance Focus in Test Automation: CalculateUserStats is the new plugin that causes problems

- 36. Thank You • Participate in Compuware APM Discussion Forums: apmcommunity.compuware.com • Follow us on Twitter: twitter.com/CompuwareAPM • Like us on Facebook: facebook.com/CompuwareAPM • Read our Blog: http://apmblog.compuware.com • Join our LinkedIn group: Compuware APM User Group • Watch our Videos & product Demos: youtube.com/Compuware • www.compuware.com/APM • © 2011 Compuware Corporation – All Rights Reserved

Editor's Notes

- #2: Abstract: How do companies developing business-critical Java enterprise web applications increase releases from 40 to 300 per year and still remain confident about a spike of 1,800 percent in traffic during key events such as Super Bowl Sunday or Cyber Monday? It takes a fundamental change in culture. Although DevOps is often seen as a mechanism for taming the chaos, adopting an agile methodology across all teams is only the first step. This session explores best practices for continuous delivery with higher quality, for improving collaboration between teams by consolidating tools, and for reducing the overhead of fixing issues. It shows how to build a performance-focused culture with tools such as Hudson, Jenkins, Chef, Puppet, Selenium, and Compuware APM/dynaTrace.

- #3: We’re all familiar with this or a similar process model – but there are two things wrong with it: while the steps should be integrated and have feedback loops, most of the time we see walls being built between them.

- #5: We’re all familiar with this or a similar process model – but there are two things wrong with it: while the steps should be integrated and have feedback loops, most of the time we see walls being built between them. Different teams work on different aspects, but often the big picture is lost. Different silos -> different tools: this reduces automation and increases management effort and learning curves. Service management aspects are not taken into account by developers (and vice versa).

- #7: New features: new rich text editor, notifications for forum replies, new layout

- #8: No consideration of response time, web page size, etc.

- #11: E.g. the notification mechanism for new forum posts: users want to know when someone replied to their post, but does it really have to be within a 10-second timeframe?
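To make this tradeoff concrete, the background load created by client-side polling can be estimated as the number of open pages divided by the polling interval. A minimal Python sketch, assuming every open page polls once per fixed interval; the 2,000 open pages are a hypothetical figure, not a number from the portal.

```python
def polling_requests_per_second(open_pages: int, interval_s: float) -> float:
    """Steady-state request rate if every open page polls once per interval."""
    return open_pages / interval_s


# Hypothetical load: 2,000 browser tabs showing the portal at the same time.
OPEN_PAGES = 2_000

for interval in (10, 60, 300):
    rate = polling_requests_per_second(OPEN_PAGES, interval)
    print(f"poll every {interval:>3}s -> {rate:7.1f} req/s, {rate * 3600:>9,.0f} req/hour")
```

Under these assumptions, relaxing the interval from 10 to 60 seconds cuts the polling load by a factor of six, which is the kind of number worth weighing against how “real-time” a forum notification needs to be.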

- #12: Didn’t use a “full” browser, so Web 2.0 features were left out. Didn’t measure the KPIs that were actually interesting, like the number of DB calls, the number of web requests, the page size, and the effect of heavy client-side scripting on our end users’ browsers.

- #13: Performance results from staging are not comparable to our production environment – different hardware, different test data, different indexes in db,…

- #14: IT were the ones with the least involvement across the process

- #16: Resource pool exhaustion. Causes: misconfiguration or failed deployment, e.g. the default config from dev; an actual resource leak -> can be identified with unit/integration tests.
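A resource leak like this can be caught by a plain unit test that checks the pool is back to zero borrowed connections after the code under test has run. A minimal Python sketch; `ConnectionPool`, `leaky_lookup`, and `safe_lookup` are hypothetical stand-ins for a real DB or HTTP connection pool and the application code that uses it.

```python
import contextlib
import unittest


class ConnectionPool:
    """Hypothetical fixed-size pool standing in for a real DB/HTTP pool."""

    def __init__(self, size):
        self.size = size
        self.in_use = 0

    def acquire(self):
        if self.in_use >= self.size:
            raise RuntimeError("pool exhausted")
        self.in_use += 1
        return object()  # placeholder for a real connection

    def release(self, conn):
        self.in_use -= 1

    @contextlib.contextmanager
    def connection(self):
        conn = self.acquire()
        try:
            yield conn
        finally:
            self.release(conn)  # returned even if the caller raises


def leaky_lookup(pool):
    pool.acquire()  # bug: the connection is never released
    return "result"


def safe_lookup(pool):
    with pool.connection():
        return "result"


class PoolLeakTest(unittest.TestCase):
    def assert_no_leak(self, func, calls=3):
        pool = ConnectionPool(size=5)
        for _ in range(calls):
            func(pool)
        self.assertEqual(pool.in_use, 0, "connections were not returned to the pool")

    def test_safe_lookup_releases_connections(self):
        self.assert_no_leak(safe_lookup)

    def test_leak_is_detected(self):
        with self.assertRaises(AssertionError):
            self.assert_no_leak(leaky_lookup)
```

Run it with any unittest-compatible runner. Because the check only counts borrowed connections, it stays cheap enough for every CI build, unlike waiting for pool exhaustion under load in production.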

- #17: Deployment issues leading to heavy logging, resulting in high I/O and CPU usage. Using the same deployment tools in Test and Ops can prevent this. Analyzing log output per component in Dev prevents this problem.
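Analyzing log output per component can start as simply as counting records per logger name while a test scenario runs. A minimal sketch using Python's standard `logging` module; the `portal.*` logger names, the scenario, and the budget of 10 lines are hypothetical.

```python
import logging
from collections import Counter


class LineCountingHandler(logging.Handler):
    """Counts emitted log records per logger (i.e. per component)."""

    def __init__(self):
        super().__init__()
        self.counts = Counter()

    def emit(self, record):
        self.counts[record.name] += 1


def run_scenario():
    """Hypothetical test scenario: save one page while notifications poll."""
    handler = LineCountingHandler()
    app_logger = logging.getLogger("portal")
    app_logger.addHandler(handler)
    app_logger.setLevel(logging.DEBUG)  # count what a debug-level deployment would write
    try:
        editor = logging.getLogger("portal.editor")
        notifications = logging.getLogger("portal.notifications")
        editor.info("page saved")
        for i in range(50):
            notifications.debug("polling for new comments (%d)", i)
    finally:
        app_logger.removeHandler(handler)
    return handler.counts


def log_spam(counts, budget=10):
    """Components that wrote more lines than the per-scenario budget."""
    return sorted(name for name, lines in counts.items() if lines > budget)


counts = run_scenario()
print(dict(counts))
print("over budget:", log_spam(counts))
```

Child loggers propagate their records to the `portal` handler, so one handler sees every component. Running the scenario with the production log configuration instead of `DEBUG` would show whether a misdeployed config, rather than the code, is the source of the log spam.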

- #18: Too much data requested from the database. Dev and Test need a “production-like” database; otherwise these problem patterns can only be found in production. Educate developers on “the power of SQL” instead of loading everything into memory and performing filters/aggregations/… in the app.
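The difference between filtering in the application and letting the database do the work shows up directly in how many rows cross the wire. A minimal sketch using Python's built-in `sqlite3` with an in-memory database; the `comments` table and its contents are hypothetical.

```python
import sqlite3


def make_db():
    db = sqlite3.connect(":memory:")
    db.execute("CREATE TABLE comments (id INTEGER PRIMARY KEY, page_id INTEGER, author TEXT)")
    db.executemany(
        "INSERT INTO comments (page_id, author) VALUES (?, ?)",
        [(i % 100, f"user{i % 7}") for i in range(10_000)],
    )
    db.commit()
    return db


def count_in_app(db, page_id):
    """Anti-pattern: load every row, then filter in application memory."""
    rows = db.execute("SELECT page_id, author FROM comments").fetchall()
    matches = sum(1 for row_page, _ in rows if row_page == page_id)
    return matches, len(rows)  # (result, rows transferred)


def count_in_db(db, page_id):
    """Let the database filter and aggregate; a single row comes back."""
    (matches,) = db.execute(
        "SELECT COUNT(*) FROM comments WHERE page_id = ?", (page_id,)
    ).fetchone()
    return matches, 1  # (result, rows transferred)


db = make_db()
print(count_in_app(db, 42))
print(count_in_db(db, 42))
```

Both functions find the same 100 comments, but the app-side version pulls all 10,000 rows into memory first. With a tiny dev database the difference is invisible, which is why the note asks for production-like data in Dev and Test.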

- #19: Not following WPO (Web Performance Optimization) rules. Non-optimized content, e.g. missing compression, merging, … Educate developers and automate WPO checks.

- #20: Not leveraging browser-side caching. Misconfigured CDNs or missing cache settings -> automate cache configuration deployment. Educate developers; educate testers to do “real life” testing (CDN, …).
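Part of such a check can be automated by classifying each resource's `Cache-Control` response header into the categories used on the Caches slide. A simplified Python sketch: it only looks at `no-store`, `no-cache`, and `max-age`, ignores `Expires`, `s-maxage`, and heuristic freshness, and the one-day threshold for “short expiration” is an assumption.

```python
import re
from collections import Counter

SHORT_EXPIRY_S = 24 * 3600  # assumption: under one day counts as "short"


def classify_cache_header(cache_control):
    """Classify a Cache-Control header value (None if the header is missing)."""
    if not cache_control:
        return "not cached"
    directives = [d.strip().lower() for d in cache_control.split(",")]
    # no-cache forces revalidation on every use, so it is treated like no-store here.
    if "no-store" in directives or "no-cache" in directives:
        return "not cached"
    for directive in directives:
        match = re.fullmatch(r"max-age=(\d+)", directive)
        if match:
            max_age = int(match.group(1))
            if max_age == 0:
                return "not cached"
            return "short expiration" if max_age < SHORT_EXPIRY_S else "ok"
    # Simplification: without an explicit max-age the resource is reported as not cached.
    return "not cached"


def audit(headers_by_url):
    """Summarize how many resources fall into each category."""
    return Counter(classify_cache_header(value) for value in headers_by_url.values())


# Hypothetical response headers captured from a test run.
example = {
    "/s/app.js": None,
    "/s/editor.js": "no-cache",
    "/s/theme.css": "public, max-age=300",
    "/images/logo.png": "public, max-age=31536000",
}
print(audit(example))
```

Running the same audit against Test and against the production CDN is one way to spot the misconfigured cache settings the note mentions before users do.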

- #21: Slow or failing 3rd-party content. Impacts page load time; Ops is required to monitor 3rd-party services. Educate devs to optimize loading; educate testers to include 3rd-party testing.

- #25: We all know this statistic in one form or another, so it is clear that the problems handled in war rooms are VERY EXPENSIVE. BUT what is interesting is that these problems are typically not detected earlier, because engineering focuses on building new features instead of on performance and a scalable architecture. Many of these problems could easily be found earlier on. Let's have a look at the common problems that we constantly run into…

- #27: Agile development (stories & tasks) excludes: performance and scalability requirements from Test; testability requirements from Test; deployment and stability requirements from Ops. Requirements are currently brought in mainly by the business side, which demands more features. What is missing are the requirements from Test and Ops.

- #28: The agile process excludes Test and Ops: they are not part of stand-ups, reviews, or planning; there is no active sharing of data, requirements, or feedback; and there is no common toolset/platform/metrics that makes sharing easy.

  Collaboration: Test & Ops are not part of the agile process. There is no active involvement in the stand-ups, reviews, or planning meetings. The lack of common tools and a different understanding of quality, metrics, and requirements also make it hard to share data.

  Sharing tools: the different teams currently use their own sets of tools that help them in their day-to-day work in their “local” environment. Developers focus on development tools that help them write code, debug, and analyze basic problems. Testers use their load-testing tools and combine them with some system monitoring tools to capture, e.g., CPU, memory, and network utilization. Ops uses its own tools to analyze network traffic and host health, plus log analyzers, … When these teams need to collaborate to identify the root cause of a problem, they typically speak different languages. Developers are used to debuggers, thread dumps, and memory dumps, but what they get is something like “the system is slow with that many virtual users, where host CPU starts showing a problem”.

  When there is a production problem, both developers and testers are typically not satisfied with network statistics or operating system event logs that don’t tell them what really went on in the application. Test won’t be able to reproduce the problem with that information, nor will devs be able to debug through their code based on it. To make troubleshooting easier, developers would like to install their tools in Test and Ops, but these tools are typically not fit for high-load and production environments. Debuggers have too much overhead and require restarts and changes to the system -> Ops doesn’t like change!!

- #29: Automation: CI currently only executes tests that cover functionality, e.g. unit tests and maybe integration or some functional tests (Selenium, …). What is missing is the concept of already executing small-scale performance and scalability tests that would allow us to automatically detect the problem patterns discussed earlier. With that we could already eliminate the need for MOST war-room situations.
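One way to turn “# of SQL executions” into a CI check is to count the statements a test triggers and fail the build when the count exceeds a budget. A minimal sketch using `sqlite3.Connection.set_trace_callback` from Python's standard library; the schema, the two data-access functions, and `SQL_BUDGET` are hypothetical, and in a Java stack the count would come from an APM tool or a JDBC wrapper instead.

```python
import sqlite3

SQL_BUDGET = 2  # hypothetical per-test budget agreed with architects/Ops


class SqlCounter:
    """Counts SELECT statements executed on a sqlite3 connection."""

    def __init__(self, db):
        self.db = db
        self.count = 0

    def _on_statement(self, sql):
        if sql.lstrip().upper().startswith("SELECT"):
            self.count += 1

    def __enter__(self):
        self.db.set_trace_callback(self._on_statement)
        return self

    def __exit__(self, *exc_info):
        self.db.set_trace_callback(None)  # stop tracing
        return False


def make_db():
    db = sqlite3.connect(":memory:")
    db.executescript(
        """
        CREATE TABLE pages (id INTEGER PRIMARY KEY, title TEXT);
        CREATE TABLE comments (id INTEGER PRIMARY KEY, page_id INTEGER, body TEXT);
        """
    )
    db.executemany("INSERT INTO pages VALUES (?, ?)", [(i, f"page {i}") for i in range(20)])
    db.executemany(
        "INSERT INTO comments (page_id, body) VALUES (?, ?)",
        [(i % 20, "hi") for i in range(200)],
    )
    db.commit()
    return db


def comment_counts_n_plus_one(db):
    """One query for the pages, then one more query per page."""
    pages = db.execute("SELECT id FROM pages").fetchall()
    return {
        page_id: db.execute(
            "SELECT COUNT(*) FROM comments WHERE page_id = ?", (page_id,)
        ).fetchone()[0]
        for (page_id,) in pages
    }


def comment_counts_single_query(db):
    """Same result from a single aggregating query."""
    rows = db.execute(
        "SELECT p.id, COUNT(c.id) FROM pages p "
        "LEFT JOIN comments c ON c.page_id = p.id GROUP BY p.id"
    ).fetchall()
    return dict(rows)


def sql_statements_for(db, func):
    with SqlCounter(db) as counter:
        result = func(db)
    return result, counter.count


db = make_db()
for func in (comment_counts_n_plus_one, comment_counts_single_query):
    _, statements = sql_statements_for(db, func)
    status = "OK" if statements <= SQL_BUDGET else "OVER BUDGET"
    print(f"{func.__name__}: {statements} SELECT statement(s), {status}")
```

Both functions return identical results and would pass a purely functional test; only the statement count (21 versus 1 here) reveals the N+1 pattern, which is exactly the kind of architectural regression the note wants CI to catch.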

- #30: Some examples of KPIs:
  - Number of SQL statements executed -> tells Ops what to expect in production; tells architects whether to optimize this with a cache or a different DB access strategy
  - Number of log lines -> tells Ops how to optimize storage for logging; tells architects whether there is LOG SPAM happening
  - Memory consumption per user session -> tells Ops how to scale their production environment; tells architects whether you are “wasteful” with heap space
  - Time for a single deployment
  - Time for rollbacks
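The memory-per-session KPI translates directly into a capacity estimate for Ops. A back-of-the-envelope Python sketch, assuming session state dominates heap usage and a fixed fraction of each node's heap is reserved as headroom; `nodes_needed` and all numbers are hypothetical.

```python
import math


def nodes_needed(concurrent_sessions, mb_per_session, heap_mb_per_node, headroom=0.3):
    """Estimate application nodes needed to hold all session state.

    `headroom` is the fraction of each node's heap reserved for everything
    that is not session state (caches, request processing, GC slack).
    """
    session_mb = concurrent_sessions * mb_per_session
    usable_mb_per_node = heap_mb_per_node * (1 - headroom)
    return math.ceil(session_mb / usable_mb_per_node)


# Hypothetical numbers: 5,000 concurrent sessions on nodes with a 4 GB heap.
print(nodes_needed(5_000, 2, 4_096))  # 2 MB per session
print(nodes_needed(5_000, 4, 4_096))  # a release that doubles session size
```

With these assumptions, a release that doubles the session size moves the estimate from 4 to 7 nodes; tracking MBs per user build over build surfaces that before it shows up as memory pressure in production.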

- #31: When we look at the results of our testing framework build over build, we can easily spot functional regressions. In our example we see that testPurchase fails in Build 18. We notify the developer, the problem gets fixed, and with Build 19 we are back to functional correctness.

  Looking behind the scenes: the problem is that functional testing only verifies the functionality as seen by the caller of the tested function. Using dynaTrace we are able to analyze the internals of the tested code. We analyze metrics such as number of executed SQL statements, number of exceptions thrown, time spent on CPU, memory consumption, number of remoting calls, transferred bytes, …

  In Build 18 we can see a nice correlation between the exceptions and the failed functional test. We can assume that one of these exceptions caused the problem. For a developer it would be very helpful to get this exception information, as it helps to quickly identify the root cause of the problem and solve it faster. In Build 19 the testing framework indicates ALL GREEN. When we look behind the scenes, we see a big jump in SQL statements as well as CPU usage. What just happened? The developer fixed the functional problem but introduced an architectural regression. This needs to be looked into; otherwise this change will have a negative impact on the application once tested under load. In Build 20 all these problems are fixed. We are still meeting our functional goals and are back to acceptable numbers of SQL statements, exceptions, CPU usage, …

- #33: Web architectural metrics: # of JS files, # of CSS files, # of redirects, size of images
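These web metrics lend themselves to an automated budget check that runs with every build. A minimal Python sketch; the `Resource` record, the budget values, and the example page (17 JS files totalling 1.7 MB, echoing the Oversized Pages slide) are assumptions for illustration. In practice the resource list would come from a browser-based test run or a HAR export.

```python
from dataclasses import dataclass


@dataclass
class Resource:
    url: str
    kind: str  # "js", "css", "image", "html", ...
    size_bytes: int
    redirected: bool = False


# Hypothetical per-page budget; real limits are a team decision.
BUDGET = {
    "js_files": 5,
    "css_files": 3,
    "redirects": 0,
    "js_bytes": 500_000,
    "image_bytes": 1_000_000,
}


def page_metrics(resources):
    """Web architectural metrics for one page load."""
    return {
        "js_files": sum(r.kind == "js" for r in resources),
        "css_files": sum(r.kind == "css" for r in resources),
        "redirects": sum(r.redirected for r in resources),
        "js_bytes": sum(r.size_bytes for r in resources if r.kind == "js"),
        "image_bytes": sum(r.size_bytes for r in resources if r.kind == "image"),
    }


def budget_violations(metrics, budget=BUDGET):
    """Names of all metrics that exceed their budget, sorted alphabetically."""
    return sorted(name for name, limit in budget.items() if metrics[name] > limit)


# Example page echoing the Oversized Pages slide: 17 JS files, 1.7 MB of JS.
page = [Resource(f"/s/plugin{i}.js", "js", 100_000) for i in range(17)]
page += [Resource("/s/main.css", "css", 40_000), Resource("/img/logo.png", "image", 20_000)]

metrics = page_metrics(page)
print(metrics)
print("violations:", budget_violations(metrics))
```

Failing the build on a non-empty violation list turns the WPO rules into the same kind of shared, automated KPI as the SQL and exception counts from the test automation slides.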