Preference and Desirability Testing, Measuring Emotional Response to Guide Design - UPA 2011 - Michael Hawley & Paul Doncaster

- 1. Michael Hawley, Chief Design Officer, Mad*Pow (@hawleymichael) • Paul Doncaster, Senior User Experience Designer, Thomson Reuters

- 2. • Why we should care • Why it’s not always as simple as asking: “Which option do you prefer?” • Methods to consider • Case Study: Greenwich Hospital • Case Study: WestlawNext • Summary/Comparison

- 6. Core motivators include: • Pleasure/pain • Hope/fear • Acceptance/rejection (http://www.behaviormodel.org/)

- 10. “There’s just something about it . . .” “It reminds me of…” “I ordinarily don’t like red, but for some reason it works here . . .” “It’s nice and clean.” “It’s better than the other ones.”

- 11. “We should go with design C over A and B, because I feel it evokes the right kind of emotional response in our audience that is closer to our most important brand attributes.”

- 13. Present three different concepts or ideas to participants, and ask them to identify how two of them are different from the third and why.

- 14. Broad, experience-based questionnaires that also include questions relating to visual appeal and aesthetics • SUS (System Usability Scale) • QUIS (Questionnaire for User Interface Satisfaction) • WAMMI (Website Analysis and Measurement Inventory)

- 15. Show participants a user interface for a very brief moment, then take it away. Participants recall their first impression, then the moderator probes for meaning. • Helpful for layout decisions, prominence of content, labels • www.fivesecondtest.com • “Attention Web Designers: You Have 50 Milliseconds to Make a Good First Impression!” (Lindgaard et al., 2006)

- 16. • Electroencephalography (EEG): Brain activity • Electromyography (EMG): Muscles and Excitement • Electrodermal Activity (EDA): Sweat, Excitement • Blood Volume Pulse (BVP): Arousal • Pupil Dilation: Arousal and Mental Workload • Respiration: Negative Valence or Arousal

- 17. Dr. Pieter Desmet, Technical University of Delft, http://www.premo-online.com

- 20. Determine intended brand attributes (and their opposites) 1. Leverage existing marketing/brand materials 2. Alternatively, hold a stakeholder brainstorm to identify key brand attributes/descriptors, using the full list of product reaction cards as a start 3. Tip: “If the brand was a person, how would it speak to your customers?”

- 21. Methodology: 1. Include a 60/40 split of positive and negative words 2. Target 60 words, optimized to test the brand 3. Ask a simple question: “Which of the following words do you feel best describe the site/design/product (please select 5):” 4. Show one comp per participant, or multiple comps per participant (no more than 3) Participants: 1. Qualitative: Paired with usability testing 2. Quantitative: Target a minimum of 30 participants per option if possible

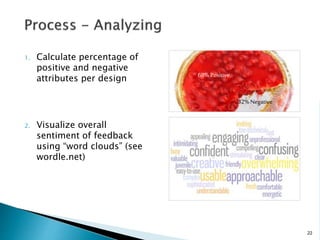

- 22. 1. Calculate the percentage of positive and negative attributes per design 2. Visualize overall sentiment of feedback using “word clouds” (see wordle.net) (e.g., 68% Positive, 32% Negative)
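
The percentage calculation in step 1 can be sketched in a few lines of Python. This is a minimal illustration under stated assumptions, not the presenters' tooling: the word list, its valence labels, and the sample responses are hypothetical placeholders, and `sentiment_share` and `word_frequencies` are names invented for this example.

```python
from collections import Counter

# Hypothetical word list; in practice the research team labels each word's valence.
WORD_VALENCE = {
    "Trustworthy": "positive",
    "Clean": "positive",
    "Friendly": "positive",
    "Cluttered": "negative",
    "Dated": "negative",
}

def sentiment_share(selections, valence=WORD_VALENCE):
    """Return (positive %, negative %) over all words selected for one design."""
    counts = Counter(valence[word] for picks in selections for word in picks)
    total = sum(counts.values())
    if total == 0:
        return 0.0, 0.0
    return 100 * counts["positive"] / total, 100 * counts["negative"] / total

def word_frequencies(selections):
    """Count how often each word was picked, e.g. as input for a word cloud."""
    return Counter(word for picks in selections for word in picks)

# Hypothetical responses: one list of selected words per participant.
responses_design_a = [
    ["Trustworthy", "Clean", "Dated"],
    ["Clean", "Friendly", "Cluttered"],
    ["Trustworthy", "Clean", "Friendly"],
]

positive, negative = sentiment_share(responses_design_a)
print(f"{positive:.0f}% positive, {negative:.0f}% negative")
```

Running the same function once per comp gives directly comparable percentages, and the counts from `word_frequencies` could be passed to whatever word-cloud tool is in use.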

- 23. • Align the website with the character of the Hospital • Update the site after nearly 10 years • Counter impressions that Greenwich is just maternity and elder care • Communicate that they are long-standing members of the community

- 24. • 3 visually designed comps • 50 people reacted to each comp (quantitative) via survey • Additional feedback obtained via participant interviews (qualitative) Survey Questions: “Hello, I am requesting feedback on a website I am working on. Your answers let me know if the site is conveying the right feel.” 1. What are your initial reactions to the web site? 2. Which of the following words do you feel best describe the site (select 5):

- 29. • Mix of qualitative and quantitative is key o Qualitative helps provide color to the results o Quantitative resonates with stakeholders and executives • Position results as one form of input to the decision-making process, not as declaring a “winner” • Simple, cost-efficient way to assess an audience’s emotional response to a design

- 30. UX Research Team: Paul Doncaster, Drew Drentlaw, Shannon O’Brien, Bill Quie, November Samnee

- 32. Goals for Phase 1 • Use large sample sizes to establish a design “baseline,” from which to advance the design direction in subsequent iterations • Isolate preference trends for specific page design aspects • Determine tolerance for manipulation of the site “brand” • Maintain tight security

- 33. • Sessions were held in 4 cities over 5 days ◦ Seattle ◦ Denver ◦ Memphis ◦ Minneapolis-St. Paul • 4 sessions were held per day, with a maximum of 25 participants per session • 1.5 hours were allotted per study; most participants finished in less than 1 hour • 319 participants successfully completed their sessions

- 34. • Participants completed the study at individual workstations at their own pace • All workstations included a 20” monitor at 1024x768 resolution • Memphis, TN, May 2009

- 35. 1. Brief review of Westlaw critical screens 2. Positive/negative word selection to describe Westlaw

- 36. 1. Each set of Element variations was viewed in full screen 2. Participant selects “top choice” by dragging a thumbnail image to a drop area

- 38. 1. All options viewed in full screen 2. Participant selects “top choice” by dragging a thumbnail image to a drop area

- 41. • Visual Weight (6 options) • Use of Imagery (8 options) • Components (4 options) • Search Area (4 options) • Palette (10 options)

- 42. 1. 19 home page (HP) designs are viewed in full screen (randomized) 2. All 19 options are presented again; the participant assigns each a rating using a 10-point slider 3. Top 5 and Bottom 2 choices are positioned in order of rating values on one long, scrollable page. Next to each design displayed, the participant rates key aspects of that design on a 5-point scale
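
Step 3 amounts to sorting one participant's slider ratings and slicing off both ends. The sketch below assumes ratings are stored as a simple mapping from design ID to slider value; the design IDs, the ratings, and the function name `top_and_bottom` are made up for illustration, and how the actual study tool broke ties is not stated in the slides.

```python
def top_and_bottom(ratings, n_top=5, n_bottom=2):
    """Split one participant's ratings into top and bottom picks.

    ratings maps a design ID to a slider value (1-10). Designs are ordered
    from highest to lowest rating; ties keep their original order because
    Python's sort is stable.
    """
    ordered = sorted(ratings, key=ratings.get, reverse=True)
    return ordered[:n_top], ordered[-n_bottom:]

# Hypothetical ratings from a single participant (8 designs for brevity).
ratings = {
    "D1": 4, "D2": 7, "D3": 8, "D4": 9,
    "D5": 9, "D6": 10, "D7": 2, "D8": 3,
}

top5, bottom2 = top_and_bottom(ratings)
print("Top 5:", top5)
print("Bottom 2:", bottom2)
```

The two lists are what would be laid out on the scrollable page for the follow-up 5-point ratings of key aspects.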

- 48. Repeat the process for Results List design: • Design Elements ◦ Column Collapsing (4 options) ◦ Column Separation (2 options) ◦ Theme/Color (8 options) • Design Gallery ◦ 14 Results List designs (randomized) • Key Aspects Rated ◦ Color scheme ◦ Global Header ◦ Summary and Excerpt (list contents) ◦ Filters design (left column) ◦ Overall look and feel

- 49. Repeat the process for Document Display design: • Design Elements ◦ Tabs vs. Links (4 options) ◦ Background Separation (4 options) ◦ Margin Width (3 options) ◦ Font Size (12 options) ◦ Locate (2 options) • Design Gallery ◦ 9 Document Display designs (randomized) • Key Aspects Rated ◦ Color scheme ◦ Layout of content ◦ Text formatting ◦ Overall look and feel

- 50. “Based on the designs I’ve liked most today . . .”

- 51. Results were analyzed across 8 different sample filters • Job Title • Age • Testing Location • Years of Experience • Hours per Week Researching • Organization Size • Role (decision-maker status) The top picks were surprisingly consistent across all of the ‘Top 5’ lists analyzed
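
Slicing results by a sample filter can be sketched as a group-by followed by a ranking. The slides do not say how the 'Top 5' lists were scored, so this example assumes designs are ranked by mean slider rating within each group; the record layout, field names, and `top_n_by_group` are hypothetical.

```python
from collections import defaultdict
from statistics import mean

def top_n_by_group(records, group_key, n=5):
    """Rank designs by mean rating within each value of one sample filter.

    records is an iterable of dicts with the filter field (group_key),
    a 'design' ID, and a numeric 'rating'. Ties are broken by design ID.
    """
    ratings = defaultdict(lambda: defaultdict(list))
    for record in records:
        ratings[record[group_key]][record["design"]].append(record["rating"])

    result = {}
    for group, designs in ratings.items():
        means = {design: mean(values) for design, values in designs.items()}
        result[group] = sorted(means, key=lambda d: (-means[d], d))[:n]
    return result

# Hypothetical records; a real data set would have one row per rating given.
records = [
    {"job_title": "Associate", "design": "A", "rating": 9},
    {"job_title": "Associate", "design": "B", "rating": 6},
    {"job_title": "Associate", "design": "A", "rating": 7},
    {"job_title": "Partner", "design": "A", "rating": 5},
    {"job_title": "Partner", "design": "B", "rating": 8},
]

top_by_title = top_n_by_group(records, "job_title", n=1)
print(top_by_title)
```

Calling the function once per filter field (job title, age, location, and so on) yields the per-filter lists that can then be compared for consistency.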

- 52. Job Title: Top 5 out of 19 possible. Groups: Overall (319), Associate (189), Librarian (37), Partner (81), Solo Practitioner (5). [Figure: home page designs ranked 1 to 5 per group]

- 53. Home Page (19) ◦ HP16 & HP15 designs consistently placed in the Top 5 across all filters Results List (14) ◦ RL4 consistently placed in the Top 3 across all sample filters, and was the #1 choice for 80% of all participants Document Display (9) ◦ DD3 placed in the Top 5 across all sample filters and was the #1 choice for 77% of all participants

- 54. Note: participants were asked to describe the current Westlaw before being shown the new designs. • Current Westlaw: 1. Cluttered 2. Helpful 3. Comfortable 4. Efficient 5. Credible • New Designs: 1. Attractive 2. Modern 3. Efficient 4. Helpful 5. Comfortable

- 55. 5 design themes were derived from post-session discussions • “New design(s) are better than current Westlaw” • “Clean and Fresh” • “Contrast is Important” • “Prefer Westlaw Blue” • “No Big Fonts Please” The study narrowed the list of potential designs, and we better understood which design elements Westlaw users liked and disliked.

- 56. Kansas City, MO, Sept 2009

- 57. Goals • Refine preferences for selected design directions • Understand users’ personal reasons for liking their preferred choices • Get closure on other design options for online and printed content • Sustain tight security Tool • Same as in Round 1, with some minor revisions to accommodate specialized input

- 58. Method ◦ View, Rate, and Pick Top Choice for Homepage (3 options), Result List (2 options), and Document Display (2 options), each followed by “Why?” ◦ Simple preference selection for two unresolved UI design issues: Citing References (Grid display or List display?) and Out of Plan Indication design (6 options) ◦ Type formatting preferences for 3 different content types: Font Face, Font Size, Margin Width

- 59. Logistics ◦ 3 cities (Philadelphia, Kansas City, Los Angeles) ◦ 1 Day ◦ 226 participants Analysis ◦ Filters (8 categories) were used to score the designs for each visual preference Results ◦ Clear choices for top designs in all categories ◦ “Why” feedback shed new light on designs under consideration and helped focus “homestretch” design activities

- 60. Home Page (3) ◦ HP3 ranked #1 in 94% of filter groups (54% of total participants) Results List (2) ◦ RL5 ranked #1 in 97% of filter groups (58% of total participants) Document Display (2) ◦ DD7 ranked #1 in 94% of filter groups (61% of total participants)
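
Each result above reports two different percentages: the share of filter groups in which a design came first, and the share of all participants who chose it. The sketch below shows why the two can diverge. All data and function names here are invented for illustration and are not the study's figures.

```python
def share_of_groups_won(winner_by_group, design):
    """Percentage of filter groups whose #1 design is `design`."""
    winners = list(winner_by_group.values())
    return 100 * winners.count(design) / len(winners)

def share_of_participants(top_choices, design):
    """Percentage of individual participants whose top choice is `design`."""
    return 100 * top_choices.count(design) / len(top_choices)

# Hypothetical data: design X wins most groups, often by a narrow margin.
winner_by_group = {
    "Associate": "X",
    "Partner": "X",
    "Librarian": "X",
    "Solo Practitioner": "Y",
}
top_choices = ["X"] * 11 + ["Y"] * 9

groups_pct = share_of_groups_won(winner_by_group, "X")
participants_pct = share_of_participants(top_choices, "X")
print(f"#1 in {groups_pct:.0f}% of groups, chosen by {participants_pct:.0f}% of participants")
```

A design can lead in nearly every subgroup while holding only a modest majority of individual votes, so reporting both figures gives a fuller picture than either alone.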

- 61. The main concerns regarding Homepage Design HP3 ◦ Search Box: Too small; “How do I do a Terms-and-Connectors search?” ◦ Browse Section: “How do I specify multiple or specific search content?”; Poor organization; Poor label ◦ Need access to “often-used” content ◦ Need better access to help

- 62. Goals ◦ Get feedback on branding options from decision makers and those who influence purchase of the product ◦ Get closure on final outstanding design issues Tool ◦ Same as in Rounds 1 & 2, with some minor revisions to accommodate specialized input

- 63. Method ◦ Wordmark/Branding: View wordmark color combinations and design elements against different backgrounds, pick top choice and provide comments; make a final “Top Choice” from all selections ◦ Simple preference selection for outstanding UI design issues: Header Space (Tile or No Tile?); Notes Design (Location: Inline or Column? State: Open or Closed?); Headnote Icon design (4 variations)

- 64. What color combination do you prefer? Please rank the 4 combinations below according to your preferences. To rank, click and drag an item from the left to a box on the right. (Boxes 1 to 4, from “Your Most Liked” to “Your Least Liked”)

- 65. Logistics ◦ 3 cities (Seattle, Denver, Boston) ◦ 1 Day ◦ 214 participants Analysis ◦ Simple preference, no advanced filters Results ◦ Decision-makers confirmed that critical brand elements should be retained

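- 66. Decision-maker (DM) votes by image:

| Image | Overall DM Votes | Sole Decision Makers | Decision Making Committee Member | Influence Decision Makers |
| --- | --- | --- | --- | --- |
| 1 | 38% (46/121) | 9 | 14 | 23 |
| 2 | 36% (43/121) | 11 | 15 | 17 |
| 3 | 21% (25/121) | 9 | 6 | 10 |
| 4 | 6% (7/121) | 2 | 1 | 4 |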
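
The overall percentages on this slide follow from the per-role counts, which can be re-tallied with a short script. The counts are transcribed from the slide; the data layout and the function name `vote_shares` are assumptions made for this sketch.

```python
# Vote counts from the slide: image -> (sole decision makers,
# decision-making committee members, those who influence decision makers).
votes = {
    1: (9, 14, 23),
    2: (11, 15, 17),
    3: (9, 6, 10),
    4: (2, 1, 4),
}

def vote_shares(votes):
    """Return per-image totals, the grand total, and rounded percentage shares."""
    totals = {image: sum(counts) for image, counts in votes.items()}
    grand_total = sum(totals.values())
    shares = {image: round(100 * total / grand_total) for image, total in totals.items()}
    return totals, grand_total, shares

totals, grand_total, shares = vote_shares(votes)
for image in votes:
    print(f"Image {image}: {shares[image]}% ({totals[image]}/{grand_total})")
```

Because each share is rounded independently, the four percentages sum to 101 rather than 100.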

- 68. Measuring Emotional Response to Guide Design • Quantitative & qualitative data to identify preference trends • “Slicing” across identifiable filters • Emphasis on “gut-level” reactions • Intolerance for manipulation of product brand • Rapid turnaround of data to all stakeholders o Executive o Design o Development

- 69. May 2009 • Sept 2009 • Feb 2010

- 70. At what cost(s)? • We held off asking “why” until the second round • If we had asked why in the first round, we might have o avoided some of the internal design battles o gotten more granular ammunition for communicating the design vision to stakeholders • “Need for speed” attained at the cost of detailed analysis

- 71. Recommendations for anyone thinking of undertaking something like this • Procure a “Matt” to create and administer your tool • Get a good technical vendor for on-site • Report results in as close to real-time as possible on a wiki or other web-page

- 73. • Both groups valued support in design decision making • Align methodology with needs of the project • Research-inspired, not research-decided

- 75. • Benedek, Joey and Trish Miner. “Measuring Desirability: New Methods for Evaluating Desirability in a Usability Lab Setting.” Proceedings of UPA 2002 Conference, Orlando, FL, July 8–12, 2002. http://www.microsoft.com/usability/uepostings/desirabilitytoolkit.doc • Lindgaard, Gitte, Gary Fernandes, Cathy Dudek, and J. Brown. “Attention Web Designers: You Have 50 Milliseconds to Make a Good First Impression!” Behaviour and Information Technology, 2006. http://www.imagescape.com/library/whitepapers/first-impression.pdf • Rohrer, Christian. “Desirability Studies: Measuring Aesthetic Response to Visual Designs.” xdStrategy.com, October 28, 2008. Retrieved February 10, 2010. http://www.xdstrategy.com/2008/10/28/desirability_studies

- 76. • User Focus. “Measuring Satisfaction: Beyond the Usability Questionnaire.” Retrieved February 10, 2010. http://www.userfocus.co.uk/articles/satisfaction.html • UserEffect. “Guide to Low-Cost Usability Tools.” Retrieved May 12, 2010. http://www.usereffect.com/topic/guide-to-low-cost-usability-tools • Tullis, Thomas and Jacqueline Stetson. “A Comparison of Questionnaires for Assessing Website Usability.” Usability Professionals’ Association Conference, 2004. home.comcast.net/~tomtullis/publications/UPA2004TullisStetson.pdf • Westerman, S. J., E. Sutherland, L. Robinson, H. Powell, and G. Tuck. “A Multi-method Approach to the Assessment of Web Page Designs.” Proceedings of the 2nd International Conference on Affective Computing and Intelligent Interaction, 2007. http://portal.acm.org/citation.cfm?id=1422200

- 77. • Five Second Test: http://fivesecondtest.com/ • Feedback Army: http://www.feedbackarmy.com • Wordle: http://www.wordle.net • PrEmo: http://www.premo-online.com